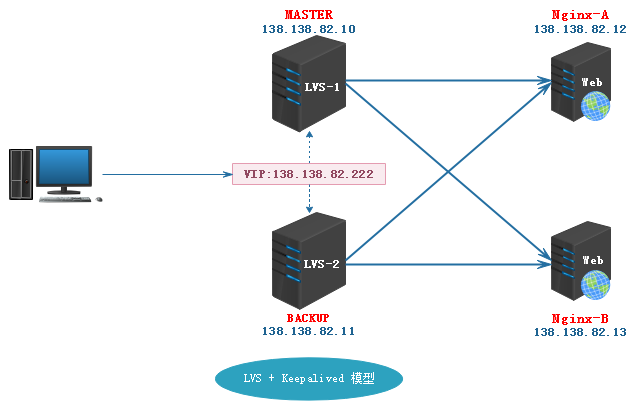

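Application scenario:

LVS load-balances (LB) several Web servers, and Keepalived provides high availability (HA) for LVS. This article covers the master/backup (active/standby) model.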

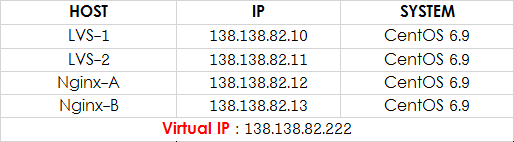

Test environment:

Configuration steps:

1. Install the software

Install ipvsadm and keepalived on both LVS-1 and LVS-2:

~]# yum install ipvsadm keepalived -y

Install Nginx on both Web hosts:

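~]# yum install nginx -y // then edit the home page on each host so the two servers can be told apart in the final test; here they were changed to "Nginx Web 1 [IP:12]" and "Nginx Web 2 [IP:13]"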
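The page edit above can be scripted. The following is a minimal sketch. The helper name `write_test_page` is hypothetical, and it assumes the default CentOS docroot `/usr/share/nginx/html`, so check the `root` directive in your nginx.conf. It simply overwrites `index.html` with a one-line label.

```shell
#!/bin/bash
# Hypothetical helper: replace index.html in a docroot with a one-line label
# so each Web node returns a distinguishable body during the LVS test.
# Usage: write_test_page <docroot> <label>
write_test_page() {
    local docroot=$1 label=$2
    mkdir -p "$docroot"
    printf '%s\n' "$label" > "$docroot/index.html"
}

# On Web 1 (as root), assuming the default CentOS docroot:
#   write_test_page /usr/share/nginx/html "Nginx Web 1 [IP:12]"
# On Web 2:
#   write_test_page /usr/share/nginx/html "Nginx Web 2 [IP:13]"
```

A single-line body keeps the later `curl` output easy to count.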

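2. Configure Keepalived

Note: keepalived has built-in support for IPVS, so the LVS virtual server can be defined directly in its configuration file. There is no need to set it up separately with the ipvsadm command.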
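For comparison, the `virtual_server` block used below corresponds roughly to the manual ipvsadm commands shown here. This is a sketch only. When keepalived manages IPVS you should not run these commands, and plain ipvsadm performs no health checks. The helper `print_ipvsadm_cmds` is hypothetical: it only prints the commands and never executes them. The flags are `-A`/`-a` to add a virtual/real server, `-s rr` for round robin, `-g` for direct routing (DR) and `-w` for weight.

```shell
# Hypothetical helper: print (not execute) the ipvsadm commands that
# roughly mirror the keepalived virtual_server block in this article.
# Usage: print_ipvsadm_cmds <vip:port> <rs:port> [rs:port ...]
print_ipvsadm_cmds() {
    local vs=$1
    shift
    # -A: add a TCP virtual service; -s rr: round-robin scheduler
    echo "ipvsadm -A -t $vs -s rr"
    for rs in "$@"; do
        # -a: add a real server; -g: direct routing (DR); -w 1: weight 1
        echo "ipvsadm -a -t $vs -r $rs -g -w 1"
    done
}

print_ipvsadm_cmds 138.138.82.222:80 138.138.82.12:80 138.138.82.13:80
```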

[root@lvs-1 ~]# vim /etc/keepalived/keepalived.conf // the finished MASTER configuration is as follows

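    ! Configuration File for keepalived
    global_defs {
        router_id LVS            ## need not match the hostname, nor the BACKUP's router_id
    }
    vrrp_instance VI_1 {
        state MASTER             ## LVS-1 is the MASTER; LVS-2 is configured as BACKUP
        interface eth0           ## must match the actual NIC name
        virtual_router_id 51     ## virtual router ID (1-255); must be identical on MASTER and BACKUP in one VRRP instance
        priority 150             ## the MASTER's priority must be higher than the BACKUP's
        advert_int 3             ## advertisement interval in seconds; must be the same on MASTER and BACKUP
        authentication {         ## authentication settings
            auth_type PASS       ## default PASS; either PASS or AH
            auth_pass 1111       ## default 1111; may be longer, but only the first 8 characters are used
        }
        virtual_ipaddress {
            138.138.82.222       ## virtual IP; several are allowed, one per line
        }
    }
    virtual_server 138.138.82.222 80 {
        delay_loop 3             ## health-check interval in seconds
        lb_algo rr               ## scheduling algorithm: rr (round robin), which makes the test easy to observe
        lb_kind DR               ## forwarding mode: Direct Routing (DR) in this test
        # persistence_timeout 1  ## persistent-connection timeout; commented out, otherwise a single test client is always sent to the same Real Server
        protocol TCP
        real_server 138.138.82.12 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 10     ## connection timeout
                nb_get_retry 3         ## number of retries
                delay_before_retry 3   ## delay between retries
                connect_port 80
            }
        }
        real_server 138.138.82.13 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }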

Save and exit.

[root@lvs-2 ~]# vim /etc/keepalived/keepalived.conf // likewise, edit the configuration file on the BACKUP as follows

    ! Configuration File for keepalived
    global_defs {
        router_id LVS
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 120
        advert_int 3
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            138.138.82.222
        }
    }
    virtual_server 138.138.82.222 80 {
        delay_loop 3
        lb_algo rr
        lb_kind DR
        # persistence_timeout 1
        protocol TCP
        real_server 138.138.82.12 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 138.138.82.13 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

Save and exit.
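The MASTER and BACKUP files differ only in `state` and `priority`. One way to keep them in sync is to generate both from a single template. The sketch below makes several assumptions. The helper name `gen_keepalived_conf` is hypothetical. It hard-codes the values used in this article, and you should review its output before copying it to /etc/keepalived/keepalived.conf.

```shell
# Hypothetical generator: print a keepalived.conf whose MASTER and BACKUP
# variants differ only in the state and priority lines.
# Usage: gen_keepalived_conf <MASTER|BACKUP> <priority>
gen_keepalived_conf() {
    state=$1
    prio=$2
    vip=138.138.82.222
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS
}
vrrp_instance VI_1 {
    state $state
    interface eth0
    virtual_router_id 51
    priority $prio
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
}
virtual_server $vip 80 {
    delay_loop 3
    lb_algo rr
    lb_kind DR
    protocol TCP
EOF
    # One real_server block per Web node, each with the same TCP health check
    for rs in 138.138.82.12 138.138.82.13; do
        cat <<EOF
    real_server $rs 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
EOF
    done
    echo "}"
}
```

For example, `gen_keepalived_conf MASTER 150 > lvs-1.conf` and `gen_keepalived_conf BACKUP 120 > lvs-2.conf`. After that, `diff lvs-1.conf lvs-2.conf` should report only the `state` and `priority` lines.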

Start keepalived and enable it at boot:

[root@lvs-1 ~]# service keepalived start

[root@lvs-1 ~]# chkconfig keepalived on

Likewise, start keepalived on lvs-2 and enable it at boot:

[root@lvs-2 ~]# service keepalived start

[root@lvs-2 ~]# chkconfig keepalived on

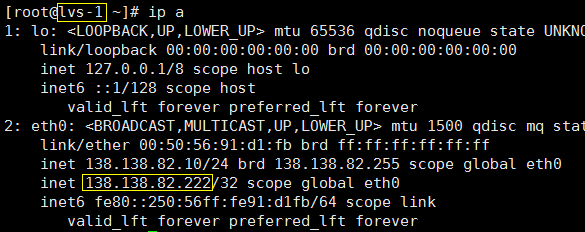

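Check:

Notes:

At this point the Virtual IP is held by the MASTER. If the MASTER goes down (keepalived on it is stopped), the Virtual IP floats to the BACKUP.

When the MASTER comes back (keepalived is started again), the Virtual IP returns to the MASTER, because it has the higher priority and keepalived preempts by default. The state changes can be followed in /var/log/messages.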
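The checks above can be run as commands on each director. The sketch below rests on a few assumptions. The helper names `vip_present` and `vrrp_transitions` are hypothetical, and the log parsing relies on keepalived's "Entering MASTER STATE" / "Entering BACKUP STATE" wording. keepalived adds the VIP as an extra address without an alias label, so `ifconfig` may not show it; use `ip addr` instead.

```shell
# Hypothetical helper: read `ip -4 addr show` output on stdin and succeed
# only if the given address is configured (exact match, prefix ignored).
vip_present() {
    awk -v vip="$1" '$1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }'
}

# Hypothetical helper: read syslog lines on stdin and keep only the VRRP
# state changes, assuming keepalived's "Entering <STATE> STATE" wording.
vrrp_transitions() {
    grep -E 'Entering (MASTER|BACKUP|FAULT) STATE'
}

# Usage on each director:
#   ip -4 addr show dev eth0 | vip_present 138.138.82.222 && echo "VIP is here"
#   grep Keepalived_vrrp /var/log/messages | vrrp_transitions
```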

3. Configure the Web servers (both Nginx nodes)

To make this easier, create a configuration script:

~]# vim lvs-web.sh // create a new script; here it is called lvs-web.sh

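    #!/bin/bash
    # Prepare a Real Server for LVS-DR: bind the VIP to lo:0 and suppress ARP replies for it
    VIP=138.138.82.222
    case "$1" in
    start)
        echo "start LVS of RealServer DR"
        # Bind the VIP to the loopback alias with a /32 mask so this node accepts packets addressed to it
        /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        # arp_ignore=1: answer ARP only for addresses configured on the receiving interface
        # arp_announce=2: always use the best local address as the ARP source
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        echo "close LVS of RealServer DR"
        # Restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0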

Save and exit.

Run the script on both Web servers:

~]# sh lvs-web.sh start // start: enable; stop: disable
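To confirm that the ARP settings written by the script are in effect, a small read-only check can help. This is a sketch with assumptions. The helper name `check_rs_arp` is hypothetical, and it takes an optional proc root (default `/proc`) only so that it can be exercised outside a real server.

```shell
# Hypothetical check: verify the ARP sysctls that lvs-web.sh sets on start.
# Expects arp_ignore=1 and arp_announce=2 for both "lo" and "all".
# Usage: check_rs_arp [proc_root]   (defaults to /proc)
check_rs_arp() {
    root=${1:-/proc}
    rc=0
    for ifc in lo all; do
        dir=$root/sys/net/ipv4/conf/$ifc
        ign=$(cat "$dir/arp_ignore" 2>/dev/null)
        ann=$(cat "$dir/arp_announce" 2>/dev/null)
        if [ "$ign" = 1 ] && [ "$ann" = 2 ]; then
            echo "$ifc: ok (arp_ignore=$ign, arp_announce=$ann)"
        else
            echo "$ifc: unexpected (arp_ignore=$ign, arp_announce=$ann)"
            rc=1
        fi
    done
    return $rc
}
```

On each Web node, run `check_rs_arp` after `sh lvs-web.sh start`. `ifconfig lo:0` should then show the VIP.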

4. Testing

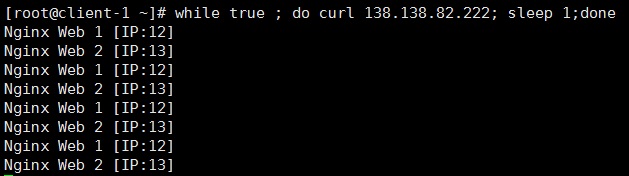

Test 1

If everything went well, a normal test looks like this:

~]# while true ; do curl 138.138.82.222; sleep 1;done // run curl 138.138.82.222 once per second

At this point, requests to the VIP 138.138.82.222 go through LVS-1 (the MASTER).
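Instead of watching the loop by eye, the responses can be counted. The sketch below has its own assumptions. The helper name `tally_rr` is hypothetical, it assumes each test page is a single line, and it assumes no other clients are hitting the VIP at the same time. It prints a count per distinct body and fails if the counts differ by more than one, which is what plain round robin should produce.

```shell
# Hypothetical helper: count identical response bodies read from stdin and
# fail if the split does not look like round robin (counts differ by > 1).
tally_rr() {
    sort | uniq -c | awk '
        { print }
        NR == 1 || $1 > max { max = $1 }
        NR == 1 || $1 < min { min = $1 }
        END { if (NR == 0 || max - min > 1) exit 1 }'
}

# Usage from the client:
#   for i in $(seq 1 10); do curl -s http://138.138.82.222/; done | tally_rr
```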

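Test 2

Take the MASTER down (stop keepalived on it): the VIP floats to the BACKUP, the VIP stays reachable, and the client still sees round-robin responses.

Bring the MASTER back: the VIP floats back to the MASTER, the VIP stays reachable, and round robin continues. // This confirms both LVS high availability and Nginx load balancing.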
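To see whether any requests are lost while the VIP moves, the test loop can log a timestamp and HTTP status for every request. This is a sketch, and the helper names `probe_vip` and `count_failures` are hypothetical. It relies on curl's `-w '%{http_code}'`, which prints `000` when no HTTP response arrives. `-m 1` caps each request at one second.

```shell
# Hypothetical probe: one request per second, logged as "<time> <status>".
# Usage: probe_vip http://138.138.82.222/ | tee probe.log
probe_vip() {
    while true; do
        code=$(curl -s -o /dev/null -m 1 -w '%{http_code}' "$1")
        echo "$(date +%T) $code"
        sleep 1
    done
}

# Hypothetical helper: read probe lines on stdin and print how many were not 200.
count_failures() {
    awk '$2 != "200" { n++ } END { print n + 0 }'
}

# After stopping and restarting keepalived on the MASTER:
#   count_failures < probe.log
```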

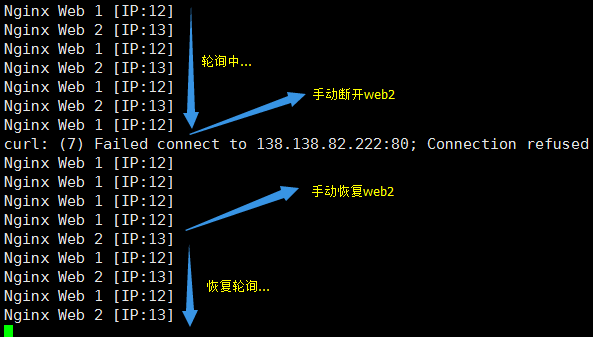

Test 3

Stop Nginx manually, then start it again:
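What Test 3 should show is this. Once TCP_CHECK fails for the stopped node, keepalived removes that real server from the IPVS pool and all requests go to the other node. After Nginx is started again and the check passes, the server is added back. The sketch below lets you watch this on the active director. The helper name `list_real_servers` is hypothetical, and it assumes the usual `ipvsadm -Ln` layout, with `->` lines listed under each `TCP`/`UDP` virtual service.

```shell
# Hypothetical helper: print the real servers IPVS currently holds for one
# virtual service, parsing `ipvsadm -Ln` output read from stdin.
# Usage (on the active director): ipvsadm -Ln | list_real_servers 138.138.82.222:80
list_real_servers() {
    awk -v vs="$1" '
        $1 == "TCP" || $1 == "UDP" { cur = $2 }
        $1 == "->" && cur == vs && $2 ~ /^[0-9]/ { print $2 }'
}
```

Run it before and after `service nginx stop` on one Web node. The stopped node's address should disappear once the health check has given up, which can take several seconds with the `connect_timeout` and retry values above.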

The end.