When reposting, please credit the original address: http://www.cnblogs.com/dongxiao-yang/p/6234673.html

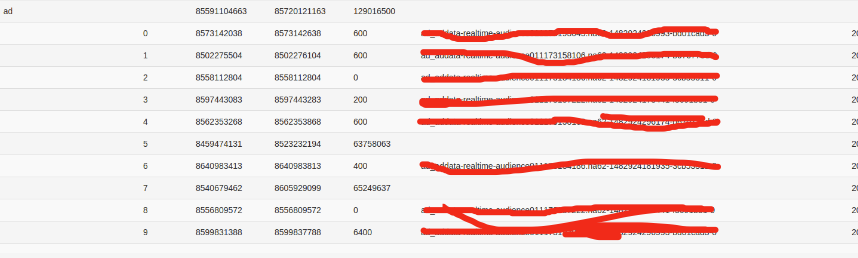

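A business team recently reported that, after their Kafka consumer went live, some partitions of one topic never got an owner registered. The monitoring page looked like the figure above: partitions 5 and 7 could not be claimed by any consumer, and restarting the client to force a rebalance left the same two partitions unconsumed.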

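Because the business side had just gone through a large data-center migration, my first guess was network connectivity, but testing the network from each consumer host turned up nothing. Moreover, no matter how the number of consumers was increased or decreased, even down to a single client, some partitions still had no owner after the rebalance finished, and the ownerless partition numbers changed as the consumer count changed. The clients logged no errors at any point during the rebalance.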

In a case like this the only option is to go through the client logs. With just two clients running, this section showed up:

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], Consumer xxx rebalancing the following partitions: ArrayBuffer(5, 7, 3, 8, 1, 4, 6, 2, 0, 9) for topic onlineAdDemographicPredict with consumers: List(aaa-0, yyy-0, xxx-0)

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], xxx-0 attempting to claim partition 2

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], xxx-0 attempting to claim partition 0

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], xxx-0 attempting to claim partition 9

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], xxx-0 successfully owned partition 0 for topic onlineAdDemographicPredict

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], xxx-0 successfully owned partition 9 for topic onlineAdDemographicPredict

16/12/29 15:52:56 INFO consumer.ZookeeperConsumerConnector: [xxx], xxx-0 successfully owned partition 2 for topic onlineAdDemographicPredict

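The ArrayBuffer contains all 10 partitions, so the client reads the topic's partition list correctly. The problem is in the `with consumers: List(...)` part: at this point the business side had started only two clients, xxx and yyy, yet the consumer saw three client ids. Based on that list it computed that it should claim exactly three partitions (2, 0 and 9), and the partitions that fell to the third, non-running consumer were claimed by nobody.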

The Kafka source code that produces these log lines is as follows:

    class RangeAssignor() extends PartitionAssignor with Logging {

      def assign(ctx: AssignmentContext) = {
        val partitionOwnershipDecision = collection.mutable.Map[TopicAndPartition, ConsumerThreadId]()

        for ((topic, consumerThreadIdSet) <- ctx.myTopicThreadIds) {
          val curConsumers = ctx.consumersForTopic(topic)
          val curPartitions: Seq[Int] = ctx.partitionsForTopic(topic)

          val nPartsPerConsumer = curPartitions.size / curConsumers.size
          val nConsumersWithExtraPart = curPartitions.size % curConsumers.size

          info("Consumer " + ctx.consumerId + " rebalancing the following partitions: " + curPartitions +
            " for topic " + topic + " with consumers: " + curConsumers)

          for (consumerThreadId <- consumerThreadIdSet) {
            val myConsumerPosition = curConsumers.indexOf(consumerThreadId)
            assert(myConsumerPosition >= 0)
            val startPart = nPartsPerConsumer * myConsumerPosition + myConsumerPosition.min(nConsumersWithExtraPart)
            val nParts = nPartsPerConsumer + (if (myConsumerPosition + 1 > nConsumersWithExtraPart) 0 else 1)

            /**
             * Range-partition the sorted partitions to consumers for better locality.
             * The first few consumers pick up an extra partition, if any.
             */
            if (nParts <= 0)
              warn("No broker partitions consumed by consumer thread " + consumerThreadId + " for topic " + topic)
            else {
              for (i <- startPart until startPart + nParts) {
                val partition = curPartitions(i)
                info(consumerThreadId + " attempting to claim partition " + partition)
                // record the partition ownership decision
                partitionOwnershipDecision += (TopicAndPartition(topic, partition) -> consumerThreadId)
              }
            }
          }
        }

        partitionOwnershipDecision
      }
    }
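To make the effect of the extra consumer concrete, the index arithmetic of `RangeAssignor.assign` can be replayed on the values from the log line. This is only a sketch: the object and method names (`RangeAssignmentReplay`, `claimedBy`) are made up for illustration, and the consumer list is used in the order the (anonymized) log printed it rather than re-sorted.

```scala
object RangeAssignmentReplay {
  // Same start/count arithmetic as RangeAssignor.assign, applied to plain values.
  def claimedBy(partitions: Seq[Int], consumers: Seq[String], me: String): Seq[Int] = {
    val position = consumers.indexOf(me)
    require(position >= 0, s"$me is not in the consumer list")
    val perConsumer = partitions.size / consumers.size
    val withExtra = partitions.size % consumers.size
    val start = perConsumer * position + math.min(position, withExtra)
    val count = perConsumer + (if (position + 1 > withExtra) 0 else 1)
    partitions.slice(start, start + count)
  }

  // Values copied from the log line, in the order the log printed them.
  val partitions: Seq[Int] = Seq(5, 7, 3, 8, 1, 4, 6, 2, 0, 9)
  val consumers: Seq[String] = Seq("aaa-0", "yyy-0", "xxx-0")

  def main(args: Array[String]): Unit = {
    for (c <- consumers)
      println(s"$c -> ${claimedBy(partitions, consumers, c).mkString(", ")}")
    // prints:
    // aaa-0 -> 5, 7, 3, 8
    // yyy-0 -> 1, 4, 6
    // xxx-0 -> 2, 0, 9
  }
}
```

The slice for xxx-0 matches the three partitions it claimed in the log, while the slice computed for aaa-0 has no running process behind it, so nobody ever claims those partitions.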

    object PartitionAssignor {
      def createInstance(assignmentStrategy: String) = assignmentStrategy match {
        case "roundrobin" => new RoundRobinAssignor()
        case _ => new RangeAssignor()
      }
    }

    class AssignmentContext(group: String, val consumerId: String, excludeInternalTopics: Boolean, zkClient: ZkClient) {
      val myTopicThreadIds: collection.Map[String, collection.Set[ConsumerThreadId]] = {
        val myTopicCount = TopicCount.constructTopicCount(group, consumerId, zkClient, excludeInternalTopics)
        myTopicCount.getConsumerThreadIdsPerTopic
      }

      val partitionsForTopic: collection.Map[String, Seq[Int]] =
        ZkUtils.getPartitionsForTopics(zkClient, myTopicThreadIds.keySet.toSeq)

      val consumersForTopic: collection.Map[String, List[ConsumerThreadId]] =
        ZkUtils.getConsumersPerTopic(zkClient, group, excludeInternalTopics)

      val consumers: Seq[String] = ZkUtils.getConsumersInGroup(zkClient, group).sorted
    }

    class ZKGroupDirs(val group: String) {
      def consumerDir = ConsumersPath
      def consumerGroupDir = consumerDir + "/" + group
      def consumerRegistryDir = consumerGroupDir + "/ids"
      def consumerGroupOffsetsDir = consumerGroupDir + "/offsets"
      def consumerGroupOwnersDir = consumerGroupDir + "/owners"
    }

    def getConsumersPerTopic(group: String, excludeInternalTopics: Boolean): mutable.Map[String, List[ConsumerThreadId]] = {
      val dirs = new ZKGroupDirs(group)
      val consumers = getChildrenParentMayNotExist(dirs.consumerRegistryDir)
      val consumersPerTopicMap = new mutable.HashMap[String, List[ConsumerThreadId]]
      for (consumer <- consumers) {
        val topicCount = TopicCount.constructTopicCount(group, consumer, this, excludeInternalTopics)
        for ((topic, consumerThreadIdSet) <- topicCount.getConsumerThreadIdsPerTopic) {
          for (consumerThreadId <- consumerThreadIdSet)
            consumersPerTopicMap.get(topic) match {
              case Some(curConsumers) => consumersPerTopicMap.put(topic, consumerThreadId :: curConsumers)
              case _ => consumersPerTopicMap.put(topic, List(consumerThreadId))
            }
        }
      }
      for ((topic, consumerList) <- consumersPerTopicMap)
        consumersPerTopicMap.put(topic, consumerList.sortWith((s, t) => s < t))
      consumersPerTopicMap
    }

    def constructTopicCount(group: String, consumerId: String, zkUtils: ZkUtils, excludeInternalTopics: Boolean): TopicCount = {
      val dirs = new ZKGroupDirs(group)
      val topicCountString = zkUtils.readData(dirs.consumerRegistryDir + "/" + consumerId)._1
      var subscriptionPattern: String = null
      var topMap: Map[String, Int] = null
      try {
        Json.parseFull(topicCountString) match {
          case Some(m) =>
            val consumerRegistrationMap = m.asInstanceOf[Map[String, Any]]
            consumerRegistrationMap.get("pattern") match {
              case Some(pattern) => subscriptionPattern = pattern.asInstanceOf[String]
              case None => throw new KafkaException("error constructing TopicCount : " + topicCountString)
            }
            consumerRegistrationMap.get("subscription") match {
              case Some(sub) => topMap = sub.asInstanceOf[Map[String, Int]]
              case None => throw new KafkaException("error constructing TopicCount : " + topicCountString)
            }
          case None => throw new KafkaException("error constructing TopicCount : " + topicCountString)
        }
      } catch {
        case e: Throwable =>

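Following the call chain in the code above (`ctx.consumersForTopic`, then `getConsumersPerTopic`, then `constructTopicCount`), the way a consumer determines the clients in its group is to read every consumer id that exists under the ZooKeeper path /kafkachroot/consumers/{groupid}/ids, read the topic subscription stored for each of those ids, and finally return a map from topic to List[ConsumerThreadId].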
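The grouping step can be sketched without ZooKeeper. In the snippet below, the `registry` map is a hypothetical stand-in for the children of the `ids` path (consumer id to its topic-to-stream-count subscription), and the `consumerId-index` thread-id format is an assumption inferred from log entries such as `xxx-0`; none of these names come from Kafka itself.

```scala
object ConsumersPerTopicSketch {
  // Hypothetical stand-in for the ids path: consumer id -> (topic -> number of streams).
  type Registry = Map[String, Map[String, Int]]

  // Every registered id contributes thread ids to each topic it subscribes to,
  // whether or not a healthy process is still behind that registration.
  def consumersPerTopic(registry: Registry): Map[String, List[String]] = {
    val threadIds = for {
      (consumerId, subscription) <- registry.toList
      (topic, streams) <- subscription.toList
      i <- 0 until streams
    } yield topic -> s"$consumerId-$i"
    threadIds.groupBy(_._1).map { case (topic, pairs) => topic -> pairs.map(_._2).sorted }
  }

  val topic = "onlineAdDemographicPredict"
  val live: Registry = Map("xxx" -> Map(topic -> 1), "yyy" -> Map(topic -> 1))
  // Same group plus one leftover registration.
  val withStale: Registry = live + ("aaa" -> Map(topic -> 1))

  def main(args: Array[String]): Unit = {
    println(consumersPerTopic(live)(topic))      // List(xxx-0, yyy-0)
    println(consumersPerTopic(withStale)(topic)) // List(aaa-0, xxx-0, yyy-0)
  }
}
```

Nothing in this step checks whether a registered id is actually consuming, which is why a single leftover node is enough to change the divisor used by the assignor.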

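So I went to ZooKeeper to look at the node structure and, sure enough, found an ephemeral node for aaa under the ids path of the affected group. After contacting the application team, it turned out that a very old program had once consumed this topic with the same group id. Nobody had looked after that program for a long time and it was stuck in a half-dead state, so its ephemeral node never expired. As a result, every consumer that later used the same group to consume the same topic saw one extra consumer in the group, and on every rebalance some partitions ended up unconsumed.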

Notes:

2. This post is based on Kafka 0.8.2-beta. I have not studied newer releases, so the behavior described here may not apply to them.