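Gluster processes and their ports

glusterd 24007

glusterfsd 49153 Serves a volume's brick; the process exits when the volume is stopped.

glusterfs Self-heal daemon (glustershd in the listing below).

glusterfs Client mount process.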

root 3665 0.0 0.2 577312 9908 ? Ssl 18:47 0:02 /usr/sbin/glusterd --pid-file=/var/run/glusterd.pid
root 19417 0.0 0.0 103300 2020 pts/0 R+ 19:36 0:00 grep gluster
root 122826 0.0 0.3 1273460 13516 ? Ssl 19:24 0:00 /usr/sbin/glusterfsd -s 10.17.55.253 --volfile-id GlusterFs.10.17.55.253.mnt-b02abd665d4e1092d93f950ae499a0fa-glusterfs_storage -p /var/lib/glusterd/vols/GlusterFs/run/10.17.55.253-mnt-b02abd665d4e1092d93f950ae499a0fa-glusterfs_storage.pid -S /var/run/gluster/73372af1394228c2758045d0446e5297.socket --brick-name /mnt/b02abd665d4e1092d93f950ae499a0fa/glusterfs_storage -l /var/log/glusterfs/bricks/mnt-b02abd665d4e1092d93f950ae499a0fa-glusterfs_storage.log --xlator-option *-posix.glusterd-uuid=17a62946-b3f5-432e-9389-13fd6bb95e58 --brick-port 49153 --xlator-option GlusterFs-server.listen-port=49153
root 122878 0.0 0.2 582476 8496 ? Ssl 19:24 0:00 /usr/sbin/glusterfs -s localhost --volfile-id gluster/glustershd -p /var/lib/glusterd/glustershd/run/glustershd.pid -l /var/log/glusterfs/glustershd.log -S /var/run/gluster/e1fd6db52b653d9cfb69e179e4a5ab93.socket --xlator-option *replicate*.node-uuid=17a62946-b3f5-432e-9389-13fd6bb95e58
root 123248 97.4 0.3 542136 15236 ? Ssl 19:25 11:00 /usr/sbin/glusterfs --volfile-server=127.0.0.1 --volfile-id=/GlusterFs /mnt/d1f561a32ac1bf17cf183f36baac34d4
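The brick port appears in the glusterfsd command line as the --brick-port option. As a minimal sketch, assuming process listings in the format shown above, the port can be pulled out with sed. The function name brick_ports is made up for this example and is not part of GlusterFS.

```shell
# Hypothetical helper: read process command lines on stdin and print the
# value of every --brick-port option found.
# Lines without that option (glusterd, glusterfs) produce no output.
brick_ports() {
    sed -n 's/.*--brick-port \([0-9][0-9]*\).*/\1/p'
}
```

One possible use is: ps aux | grep '[g]lusterfsd' | brick_ports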

gluster command: version 3.4.2

[root@vClass-v0jTX etc]# gluster help
volume info [all|<VOLNAME>] - list information of all volumes
volume create <NEW-VOLNAME> [stripe <COUNT>] [replica <COUNT>] [device vg] [transport <tcp|rdma|tcp,rdma>] <NEW-BRICK> ... [force] - create a new volume of specified type with mentioned bricks
volume delete <VOLNAME> - delete volume specified by <VOLNAME>
volume start <VOLNAME> [force] - start volume specified by <VOLNAME>
volume stop <VOLNAME> [force] - stop volume specified by <VOLNAME>
volume add-brick <VOLNAME> [<stripe|replica> <COUNT>] <NEW-BRICK> ... [force] - add brick to volume <VOLNAME>
volume remove-brick <VOLNAME> [replica <COUNT>] <BRICK> ... {start|stop|status|commit|force} - remove brick from volume <VOLNAME>
volume rebalance <VOLNAME> [fix-layout] {start|stop|status} [force] - rebalance operations
volume replace-brick <VOLNAME> <BRICK> <NEW-BRICK> {start [force]|pause|abort|status|commit [force]} - replace-brick operations
volume set <VOLNAME> <KEY> <VALUE> - set options for volume <VOLNAME>
volume help - display help for the volume command
volume log rotate <VOLNAME> [BRICK] - rotate the log file for corresponding volume/brick
volume sync <HOSTNAME> [all|<VOLNAME>] - sync the volume information from a peer
volume reset <VOLNAME> [option] [force] - reset all the reconfigured options
volume geo-replication [<VOLNAME>] [<SLAVE-URL>] {start|stop|config|status|log-rotate} [options...] - Geo-sync operations
volume profile <VOLNAME> {start|info|stop} [nfs] - volume profile operations
volume quota <VOLNAME> <enable|disable|limit-usage|list|remove> [path] [value] - quota translator specific operations
volume top <VOLNAME> {[open|read|write|opendir|readdir [nfs]] |[read-perf|write-perf [nfs|{bs <size> count <count>}]]|[clear [nfs]]} [brick <brick>] [list-cnt <count>] - volume top operations
volume status [all | <VOLNAME> [nfs|shd|<BRICK>]] [detail|clients|mem|inode|fd|callpool] - display status of all or specified volume(s)/brick
volume heal <VOLNAME> [{full | info {healed | heal-failed | split-brain}}] - self-heal commands on volume specified by <VOLNAME>
volume statedump <VOLNAME> [nfs] [all|mem|iobuf|callpool|priv|fd|inode|history]... - perform statedump on bricks
volume list - list all volumes in cluster
volume clear-locks <VOLNAME> <path> kind {blocked|granted|all}{inode [range]|entry [basename]|posix [range]} - Clear locks held on path
peer probe <HOSTNAME> - probe peer specified by <HOSTNAME>
peer detach <HOSTNAME> [force] - detach peer specified by <HOSTNAME>
peer status - list status of peers
peer help - Help command for peer
quit - quit
help - display command options
exit - exit
bd help - display help for bd command
bd create <volname>:<bd> <size> - create a block device where size can be suffixed with KB, MB etc. Default size is in MB
bd delete <volname>:<bd> - Delete a block device
bd clone <volname>:<bd> <newbd> - clone device
bd snapshot <volname>:<bd> <newbd> <size> - snapshot device where size can be suffixed with KB, MB etc. Default size is in MB

gluster: version 3.10.2 adds the tier and bitrot commands.

volume info [all|<VOLNAME>] - list information of all volumes
volume create <NEW-VOLNAME> [stripe <COUNT>] [replica <COUNT> [arbiter <COUNT>]] [disperse [<COUNT>]] [disperse-data <COUNT>] [redundancy <COUNT>] [transport <tcp|rdma|tcp,rdma>] <NEW-BRICK>?<vg_name>... [force] - create a new volume of specified type with mentioned bricks
volume delete <VOLNAME> - delete volume specified by <VOLNAME>
volume start <VOLNAME> [force] - start volume specified by <VOLNAME>
volume stop <VOLNAME> [force] - stop volume specified by <VOLNAME>

Volume tiering (cache tier)

volume tier <VOLNAME> status
volume tier <VOLNAME> start [force]    // start the cache tier
volume tier <VOLNAME> stop    // stop the cache tier
volume tier <VOLNAME> attach [<replica COUNT>] <NEW-BRICK>... [force]    // create the cache tier
volume tier <VOLNAME> detach <start|stop|status|commit|[force]> - Tier translator specific operations.
volume attach-tier <VOLNAME> [<replica COUNT>] <NEW-BRICK>... - NOTE: this is old syntax, will be depreciated in next release. Please use gluster volume tier <vol> attach [<replica COUNT>] <NEW-BRICK>...
volume detach-tier <VOLNAME> <start|stop|status|commit|force> - NOTE: this is old syntax, will be depreciated in next release. Please use gluster volume tier <vol> detach {start|stop|commit} [force]
volume add-brick <VOLNAME> [<stripe|replica> <COUNT> [arbiter <COUNT>]] <NEW-BRICK> ... [force] - add brick to volume <VOLNAME>
volume remove-brick <VOLNAME> [replica <COUNT>] <BRICK> ... <start|stop|status|commit|force> - remove brick from volume <VOLNAME>
volume rebalance <VOLNAME> {{fix-layout start} | {start [force]|stop|status}} - rebalance operations
volume replace-brick <VOLNAME> <SOURCE-BRICK> <NEW-BRICK> {commit force} - replace-brick operations
volume set <VOLNAME> <KEY> <VALUE> - set options for volume <VOLNAME>
volume help - display help for the volume command
volume log <VOLNAME> rotate [BRICK] - rotate the log file for corresponding volume/brick
volume log rotate <VOLNAME> [BRICK] - rotate the log file for corresponding volume/brick NOTE: This is an old syntax, will be deprecated from next release.
volume sync <HOSTNAME> [all|<VOLNAME>] - sync the volume information from a peer
volume reset <VOLNAME> [option] [force] - reset all the reconfigured options
volume profile <VOLNAME> {start|info [peek|incremental [peek]|cumulative|clear]|stop} [nfs] - volume profile operations
volume quota <VOLNAME> {enable|disable|list [<path> ...]| list-objects [<path> ...] | remove <path>| remove-objects <path> | default-soft-limit <percent>} |
volume quota <VOLNAME> {limit-usage <path> <size> [<percent>]} |
volume quota <VOLNAME> {limit-objects <path> <number> [<percent>]} |
volume quota <VOLNAME> {alert-time|soft-timeout|hard-timeout} {<time>} - quota translator specific operations
volume inode-quota <VOLNAME> enable - quota translator specific operations
volume top <VOLNAME> {open|read|write|opendir|readdir|clear} [nfs|brick <brick>] [list-cnt <value>] |
volume top <VOLNAME> {read-perf|write-perf} [bs <size> count <count>] [brick <brick>] [list-cnt <value>] - volume top operations
volume status [all | <VOLNAME> [nfs|shd|<BRICK>|quotad|tierd]] [detail|clients|mem|inode|fd|callpool|tasks] - display status of all or specified volume(s)/brick
volume heal <VOLNAME> [enable | disable | full |statistics [heal-count [replica <HOSTNAME:BRICKNAME>]] |info [healed | heal-failed | split-brain] |split-brain {bigger-file <FILE> | latest-mtime <FILE> |source-brick <HOSTNAME:BRICKNAME> [<FILE>]} |granular-entry-heal {enable | disable}] - self-heal commands on volume specified by <VOLNAME>
volume statedump <VOLNAME> [[nfs|quotad] [all|mem|iobuf|callpool|priv|fd|inode|history]... | [client <hostname:process-id>]] - perform statedump on bricks
volume list - list all volumes in cluster
volume clear-locks <VOLNAME> <path> kind {blocked|granted|all}{inode [range]|entry [basename]|posix [range]} - Clear locks held on path
volume barrier <VOLNAME> {enable|disable} - Barrier/unbarrier file operations on a volume
volume get <VOLNAME> <key|all> - Get the value of the all options or given option for volume <VOLNAME>
volume bitrot <VOLNAME> {enable|disable} |    // bitrot (error) detection
volume bitrot <volname> scrub-throttle {lazy|normal|aggressive} |
volume bitrot <volname> scrub-frequency {hourly|daily|weekly|biweekly|monthly} |
volume bitrot <volname> scrub {pause|resume|status|ondemand} - Bitrot translator specific operation. For more information about bitrot command type 'man gluster'
volume reset-brick <VOLNAME> <SOURCE-BRICK> {{start} | {<NEW-BRICK> commit}} - reset-brick operations
peer probe { <HOSTNAME> | <IP-address> } - probe peer specified by <HOSTNAME>
peer detach { <HOSTNAME> | <IP-address> } [force] - detach peer specified by <HOSTNAME>
peer status - list status of peers
peer help - Help command for peer
pool list - list all the nodes in the pool (including localhost)
quit - quit
help - display command options
exit - exit
snapshot help - display help for snapshot commands
snapshot create <snapname> <volname> [no-timestamp] [description <description>] [force] - Snapshot Create.
snapshot clone <clonename> <snapname> - Snapshot Clone.
snapshot restore <snapname> - Snapshot Restore.
snapshot status [(snapname | volume <volname>)] - Snapshot Status.
snapshot info [(snapname | volume <volname>)] - Snapshot Info.
snapshot list [volname] - Snapshot List.
snapshot config [volname] ([snap-max-hard-limit <count>] [snap-max-soft-limit <percent>]) | ([auto-delete <enable|disable>])| ([activate-on-create <enable|disable>]) - Snapshot Config.
snapshot delete (all | snapname | volume <volname>) - Snapshot Delete.
snapshot activate <snapname> [force] - Activate snapshot volume.
snapshot deactivate <snapname> - Deactivate snapshot volume.
global help - list global commands
nfs-ganesha {enable| disable} - Enable/disable NFS-Ganesha support
get-state [<daemon>] [odir </path/to/output/dir/>] [file <filename>] - Get local state representation of mentioned daemon

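1. Server nodes

To add server nodes to the storage pool or remove them from it, run the command on any one node:

# gluster peer status            // show all peers; the local node is not listed
# gluster peer probe <SERVER>    // add a node
# gluster peer detach <SERVER>   // remove a node; its bricks must be removed first

Note: before detaching a node, remove the bricks hosted on that node.

View the basic status of all nodes (the local node is not listed):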

# gluster peer status

[root@vClass-DgqxC init.d]# gluster peer probe 10.17.5.247

peer probe: success

[root@vClass-DgqxC init.d]# gluster peer status

Number of Peers: 1

Hostname: 10.17.5.247

Port: 24007

Uuid: 8fd5d483-c883-4e22-a372-1ac058ce10d2

State: Peer in Cluster (Connected)

[root@vClass-DgqxC init.d]#
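As a minimal sketch, assuming the peer status layout shown above (a Hostname line followed later by a State line for each peer), peers that are not connected can be filtered out with awk. The function name disconnected_peers is made up for this example.

```shell
# Hypothetical helper: read `gluster peer status` output on stdin and print
# the hostname of every peer whose State line does not end in "(Connected)".
disconnected_peers() {
    awk '
        /^Hostname:/ { host = $2 }
        /^State:/ && $0 !~ /\(Connected\)[ \t]*$/ { print host }
    '
}
```

One possible use is: gluster peer status | disconnected_peers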

2. Volume management

2.1. Create/start/stop/delete a volume

# gluster volume create <NEW-VOLNAME> [stripe <COUNT> | replica <COUNT>] [transport [tcp | rdma | tcp,rdma]] <NEW-BRICK1> <NEW-BRICK2> <NEW-BRICK3> <NEW-BRICK4>...
# gluster volume start <VOLNAME>
# gluster volume stop <VOLNAME>
# gluster volume delete <VOLNAME>

Note: a volume must be stopped before it can be deleted.

<1> Replicated volume

Syntax: gluster volume create NEW-VOLNAME [replica COUNT] [transport tcp | rdma | tcp,rdma] NEW-BRICK...

Example: gluster volume create test-volume replica 2 transport tcp server1:/exp1/brick server2:/exp2/brick

<2> Striped volume

Syntax: gluster volume create NEW-VOLNAME [stripe COUNT] [transport tcp | rdma | tcp,rdma] NEW-BRICK...

Example: gluster volume create test-volume stripe 2 transport tcp server1:/exp1/brick server2:/exp2/brick

<3> Distributed volume

Syntax: gluster volume create NEW-VOLNAME [transport tcp | rdma | tcp,rdma] NEW-BRICK...

Example 1: gluster volume create test-volume server1:/exp1/brick server2:/exp2/brick

Example 2: gluster volume create test-volume transport rdma server1:/exp1/brick server2:/exp2/brick server3:/exp3/brick server4:/exp4/brick

<4> Distributed replicated volume

Syntax: gluster volume create NEW-VOLNAME [replica COUNT] [transport tcp | rdma | tcp,rdma] NEW-BRICK...

Example: gluster volume create test-volume replica 2 transport tcp server1:/exp1/brick server2:/exp2/brick server3:/exp3/brick server4:/exp4/brick

<5> Distributed striped volume

Syntax: gluster volume create NEW-VOLNAME [stripe COUNT] [transport tcp | rdma | tcp,rdma] NEW-BRICK...

Example: gluster volume create test-volume stripe 2 transport tcp server1:/exp1/brick server2:/exp2/brick server3:/exp3/brick server4:/exp4/brick

<6> Striped replicated volume

Syntax: gluster volume create NEW-VOLNAME [stripe COUNT] [replica COUNT] [transport tcp | rdma | tcp,rdma] NEW-BRICK...

Example: gluster volume create test-volume stripe 2 replica 2 transport tcp server1:/exp1/brick server2:/exp2/brick server3:/exp3/brick server4:/exp4/brick

2.2. View volume information

List all volumes in the cluster:

# gluster volume list

View information about the volumes in the cluster:

# gluster volume info [all]

View the status of the volumes in the cluster:

# gluster volume status [all]

# gluster volume status <VOLNAME> [detail | clients | mem | inode | fd]

View file system usage on the local node:

# df -h [<MOUNT-PATH>]

View disk information on the local node:

# fdisk -l

gluster> volume list

test-volume

gluster> volume info

Volume Name: test-volume
Type: Replicate
Volume ID: bea9fbb5-6cbb-4fa6-905c-5b4791e53ae2
Status: Started
Number of Bricks: 1 x 2 = 2
Transport-type: tcp
Bricks:
Brick1: 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
Brick2: 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage

gluster> volume status
Status of volume: test-volume
Gluster process                                          Port    Online  Pid
------------------------------------------------------------------------------
Brick 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b
/glusterfs_storage                                       49152   Y       24996
Brick 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a3817
0/glusterfs_storage                                      49152   Y       20567
NFS Server on localhost                                  2049    Y       20579
Self-heal Daemon on localhost                            N/A     Y       20584
NFS Server on 10.17.5.247                                2049    Y       25008
Self-heal Daemon on 10.17.5.247                          N/A     Y       25012

There are no active volume tasks

gluster> volume status test-volume detail
Status of volume: test-volume
------------------------------------------------------------------------------
Brick                : Brick 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
Port                 : 49152
Online               : Y
Pid                  : 24996
File System          : xfs
Device               : /dev/mapper/defaultlocal-defaultlocal
Mount Options        : rw,noatime,nodiratime,osyncisdsync,usrquota,grpquota
Inode Size           : 256
Disk Space Free      : 14.8GB
Total Disk Space     : 24.4GB
Inode Count          : 25698304
Free Inodes          : 25698188
------------------------------------------------------------------------------
Brick                : Brick 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
Port                 : 49152
Online               : Y
Pid                  : 20567
File System          : xfs
Device               : /dev/mapper/defaultlocal-defaultlocal
Mount Options        : rw,noatime,nodiratime,usrquota,grpquota
Inode Size           : 256
Disk Space Free      : 4.4GB
Total Disk Space     : 4.4GB
Inode Count          : 4726784
Free Inodes          : 4726699

gluster> volume status test-volume mem
Memory status for volume : test-volume
----------------------------------------------
Brick : 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
Mallinfo
--------
Arena    : 2760704
Ordblks  : 8
Smblks   : 1
Hblks    : 12
Hblkhd   : 17743872
Usmblks  : 0
Fsmblks  : 80
Uordblks : 2623888
Fordblks : 136816
Keepcost : 134384

Mempool Stats
-------------
Name                                 HotCount ColdCount PaddedSizeof AllocCount MaxAlloc Misses Max-StdAlloc
----                                 -------- --------- ------------ ---------- -------- ------ ------------
test-volume-server:fd_t                     0      1024          108          0        0      0            0
test-volume-server:dentry_t                 0     16384           84          0        0      0            0
test-volume-server:inode_t                  1     16383          156          4        2      0            0
test-volume-locks:pl_local_t                0        32          140          3        1      0            0
test-volume-marker:marker_local_t           0       128          316          0        0      0            0
test-volume-server:rpcsvc_request_t         0       512         6116         38        2      0            0
glusterfs:struct saved_frame                0         8          124          2        2      0            0
glusterfs:struct rpc_req                    0         8         2236          2        2      0            0
glusterfs:rpcsvc_request_t                  1         7         6116         10        1      0            0
glusterfs:data_t                          119     16264           52        790      167      0            0
glusterfs:data_pair_t                     117     16266           68        752      157      0            0
glusterfs:dict_t                           13      4083          140         65       15      0            0
glusterfs:call_stub_t                       0      1024         3756         22        2      0            0
glusterfs:call_stack_t                      1      1023         1836         33        2      0            0
glusterfs:call_frame_t                      0      4096          172        104       10      0            0
----------------------------------------------
Brick : 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
Mallinfo
--------
Arena    : 2760704
Ordblks  : 10
Smblks   : 1
Hblks    : 12
Hblkhd   : 17743872
Usmblks  : 0
Fsmblks  : 80
Uordblks : 2624400
Fordblks : 136304
Keepcost : 133952

Mempool Stats
-------------
Name                                 HotCount ColdCount PaddedSizeof AllocCount MaxAlloc Misses Max-StdAlloc
----                                 -------- --------- ------------ ---------- -------- ------ ------------
test-volume-server:fd_t                     0      1024          108          4        1      0            0
test-volume-server:dentry_t                 0     16384           84          0        0      0            0
test-volume-server:inode_t                  2     16382          156          4        2      0            0
test-volume-locks:pl_local_t                0        32          140          5        1      0            0
test-volume-marker:marker_local_t           0       128          316          0        0      0            0
test-volume-server:rpcsvc_request_t         0       512         6116         58        2      0            0
glusterfs:struct saved_frame                0         8          124          3        2      0            0
glusterfs:struct rpc_req                    0         8         2236          3        2      0            0
glusterfs:rpcsvc_request_t                  1         7         6116         10        1      0            0
glusterfs:data_t                          119     16264           52        859      167      0            0
glusterfs:data_pair_t                     117     16266           68        796      157      0            0
glusterfs:dict_t                           13      4083          140         80       15      0            0
glusterfs:call_stub_t                       0      1024         3756         47        2      0            0
glusterfs:call_stack_t                      1      1023         1836         54        2      0            0
glusterfs:call_frame_t                      0      4096          172        183       13      0            0
----------------------------------------------

gluster> volume status test-volume clients
Client connections for volume test-volume
----------------------------------------------
Brick : 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
Clients connected : 4
Hostname             BytesRead    BytesWritten
--------             ---------    ------------
10.17.5.247:1021          2340            2264
10.17.5.247:1015          3348            2464
10.17.66.112:1019          848             436
10.17.66.112:1012         1104             684
----------------------------------------------
Brick : 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
Clients connected : 4
Hostname             BytesRead    BytesWritten
--------             ---------    ------------
10.17.66.112:1023         3256            3100
10.17.66.112:1022         4192            2656
10.17.5.247:1020          1228             808
10.17.5.247:1014          2924            2476
----------------------------------------------

gluster> volume status test-volume inode
Inode tables for volume test-volume
----------------------------------------------
Brick : 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
Active inodes:
GFID                                   Lookups   Ref   IA type
----                                   -------   ---   -------
00000000-0000-0000-0000-000000000001         0    65         D
----------------------------------------------
Brick : 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
Active inodes:
GFID                                   Lookups   Ref   IA type
----                                   -------   ---   -------
00000000-0000-0000-0000-000000000001         0   127         D

LRU inodes:
GFID                                   Lookups   Ref   IA type
----                                   -------   ---   -------
d271a384-01c1-4e29-9171-0b3b58033936         4     0         D
----------------------------------------------

gluster> volume status test-volume fd
FD tables for volume test-volume
----------------------------------------------
Brick : 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
Connection 1:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

Connection 2:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

Connection 3:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

Connection 4:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

----------------------------------------------
Brick : 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
Connection 1:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

Connection 2:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

Connection 3:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

Connection 4:
RefCount = 0  MaxFDs = 128  FirstFree = 0
No open fds

----------------------------------------------

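2.3. Configure a volume

# gluster volume set <VOLNAME> <OPTION> <PARAMETER>

How can the available options be viewed? (The 3.10.2 help output lists volume get <VOLNAME> <key|all> for reading option values.)

2.4. Expand a volume

# gluster volume add-brick <VOLNAME> <NEW-BRICK>

Note: for a replicated or striped volume, the number of bricks added each time must be a multiple of the replica or stripe count.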
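The multiple-of-count rule can be checked before add-brick or remove-brick is run. The following is only a sketch. The function name bricks_match_count is made up for this example, and the check covers the count only, not how the bricks are placed across servers.

```shell
# Hypothetical helper: succeed only when the number of bricks given is a
# positive multiple of the replica (or stripe) count in the first argument.
bricks_match_count() {
    count=$1
    shift
    [ "$count" -ge 1 ] && [ "$#" -ge 1 ] && [ $(( $# % count )) -eq 0 ]
}
```

One possible use is: bricks_match_count 2 server3:/exp3/brick server4:/exp4/brick && gluster volume add-brick test-volume server3:/exp3/brick server4:/exp4/brick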

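2.5. Shrink a volume

First migrate the data to the other available bricks; the brick is removed only after the migration finishes:

# gluster volume remove-brick <VOLNAME> <BRICK> start

After running start, use the status command to check the progress of the removal:

# gluster volume remove-brick <VOLNAME> <BRICK> status

To remove the brick directly, without migrating data (if start was run first, commit finalizes the removal once the migration has completed):

# gluster volume remove-brick <VOLNAME> <BRICK> commit

Note: for a replicated or striped volume, the number of bricks removed each time must be a multiple of the replica or stripe count.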

gluster> volume remove-brick test-volume 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage commit
Removing brick(s) can result in data loss. Do you want to Continue? (y/n) y
volume remove-brick commit: failed: Removing bricks from replicate configuration is not allowed without reducing replica count explicitly.
gluster> volume remove-brick test-volume replica 1 10.17.66.112:/mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage commit
Removing brick(s) can result in data loss. Do you want to Continue? (y/n) y
volume remove-brick commit: success
gluster> volume info

Volume Name: test-volume
Type: Distribute
Volume ID: bea9fbb5-6cbb-4fa6-905c-5b4791e53ae2
Status: Started
Number of Bricks: 1
Transport-type: tcp
Bricks:
Brick1: 10.17.5.247:/mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
gluster>

2.6. Migrate a volume

Use the start command to begin the migration:

# gluster volume replace-brick <VOLNAME> <BRICK> <NEW-BRICK> start

While data is being migrated, the pause command suspends the migration:

# gluster volume replace-brick <VOLNAME> <BRICK> <NEW-BRICK> pause

While data is being migrated, the abort command cancels the migration:

# gluster volume replace-brick <VOLNAME> <BRICK> <NEW-BRICK> abort

While data is being migrated, the status command shows the migration progress:

# gluster volume replace-brick <VOLNAME> <BRICK> <NEW-BRICK> status

After the migration finishes, run the commit command to replace the brick:

# gluster volume replace-brick <VOLNAME> <BRICK> <NEW-BRICK> commit

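2.7. Balance a volume:

Fixing the layout is necessary because the layout is static: when new bricks are added to an existing volume, newly created files are still placed on the old bricks, so the layout has to be rebalanced before the new bricks take effect. Fixing the layout only makes the new layout effective; it does not move existing data. To redistribute the existing data once the new layout is in effect, the data in the volume must be rebalanced as well.

After expanding or shrinking a volume, the data has to be rebalanced across the servers. The rebalance operation consists of two steps:

1. Fix Layout

Update the layout after the expansion or shrink so that files can be stored on the newly added nodes.

2. Migrate Data

Redistribute the existing data after the new bricks have been added.

* Fix Layout and Migrate Data

First fix the layout, then move the existing data (rebalance). Note that the 3.4.2 help output lists only [fix-layout] {start|stop|status} [force] for rebalance, with no migrate-data keyword.

#gluster volume rebalance VOLNAME fix-layout start

#gluster volume rebalance VOLNAME migrate-data start

Both steps can also be done in a single operation:

#gluster volume rebalance VOLNAME start

#gluster volume rebalance VOLNAME status // check progress while the rebalance is running

#gluster volume rebalance VOLNAME stop // stop the rebalance; when it is started again, it resumes from where it stopped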

2.8. Rebalance a volume

Without migrating data:

# gluster volume rebalance <VOLNAME> fix-layout start

# gluster volume rebalance <VOLNAME> start

# gluster volume rebalance <VOLNAME> start force

# gluster volume rebalance <VOLNAME> status

# gluster volume rebalance <VOLNAME> stop

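2.9. Viewing I/O information:

The profile command provides an interface for viewing the I/O information of every brick in a volume.

#gluster volume profile VOLNAME start // start profiling; I/O information can be viewed afterwards
#gluster volume profile VOLNAME info // view I/O information for each brick
#gluster volume profile VOLNAME stop // turn profiling off when finished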

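2.10. Quotas:

1. Enable/disable quotas

#gluster volume quota VOLNAME enable/disable

2. Set (or reset) a directory quota

#gluster volume quota VOLNAME limit-usage /img limit-value

#gluster volume quota img limit-usage /quota 10GB

This sets a 10GB limit on the quota subdirectory of the img volume. Paths are relative to the volume's mount point, which is treated as the root "/", so /quota is the quota subdirectory under the client mount directory.

3. View quotas

#gluster volume quota VOLNAME list

#gluster volume quota VOLNAME list <PATH>

Both commands show quota settings for a volume. The first lists all quota settings on the volume; the second shows the quota for the specified directory. The output includes the quota size and the current usage. If no usage is shown (the minimum is 0KB), the directory is probably wrong (it does not exist).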

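2.11. Geo-replication:

gluster volume geo-replication MASTER SLAVE start/status/stop

// Geo-replication is the system's disaster-recovery feature: it backs up all of the system's data to other disks asynchronously and incrementally.

#gluster volume geo-replication img 192.168.10.8:/data1/brick1 start

The command above starts a task that backs up the entire contents of the img volume to /data1/brick1 on 192.168.10.8. Note that the backup target must not be a brick that belongs to the system.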

2.12. Top monitoring:

The top command shows brick performance metrics such as read, write, file open calls, file read calls, file write calls, directory open calls, and directory read calls.

Every query accepts a top count (list-cnt); the default is 100.

#gluster volume top VOLNAME open [brick BRICK-NAME] [list-cnt cnt] // view open fds
#gluster volume top VOLNAME read [brick BRICK-NAME] [list-cnt cnt] // view the most frequent read calls
#gluster volume top VOLNAME write [brick BRICK-NAME] [list-cnt cnt] // view the most frequent write calls
#gluster volume top VOLNAME opendir [brick BRICK-NAME] [list-cnt cnt] // view the most frequent directory open calls
#gluster volume top VOLNAME readdir [brick BRICK-NAME] [list-cnt cnt] // view the most frequent directory read calls
#gluster volume top VOLNAME read-perf [bs blk-size count count] [brick BRICK-NAME] [list-cnt cnt] // view the read performance of each brick
#gluster volume top VOLNAME write-perf [bs blk-size count count] [brick BRICK-NAME] [list-cnt cnt] // view the write performance of each brick

2.13. Performance tuning options:

#gluster volume set arch-img cluster.min-free-disk // default 10%; low free disk space warning
#gluster volume set arch-img cluster.min-free-inodes // default 5%; low free inodes warning
#gluster volume set img performance.read-ahead-page-count 8 // default 4; number of pages to read ahead
#gluster volume set img performance.io-thread-count 16 // default 16; maximum number of I/O threads
#gluster volume set arch-img network.ping-timeout 10 // default 42s
#gluster volume set arch-img performance.cache-size 2GB // default 128MB or 32MB
#gluster volume set arch-img cluster.self-heal-daemon on // enable the self-heal daemon for directory indexes
#gluster volume set arch-img cluster.heal-timeout 300 // self-heal check interval, default 600s; only available as of version 3.4.2
#gluster volume set arch-img performance.write-behind-window-size 256MB // default 1MB; size of the per-file write-behind buffer; can improve write performance

# gluster volume set dis-rep features.shard on

# gluster volume set dis-rep features.shard-block-size 16MB
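When several options are applied to one volume, the commands can be generated from key=value pairs and reviewed before anything is executed. The following is only a sketch. The function name volume_set_cmds is made up for this example, and it prints the commands without running gluster.

```shell
# Hypothetical helper: print one "gluster volume set" command for each
# key=value argument. Nothing is executed; review the output first.
volume_set_cmds() {
    volume=$1
    shift
    for pair in "$@"; do
        key=${pair%%=*}      # text before the first "="
        value=${pair#*=}     # text after the first "="
        echo "gluster volume set $volume $key $value"
    done
}
```

One possible use is: volume_set_cmds img performance.io-thread-count=16 network.ping-timeout=10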

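The read-ahead translator (performance/read-ahead) is one of the performance translators; it improves read performance by prefetching.

Data is fetched before the read is issued. This helps applications that read files frequently and sequentially: by the time the application finishes reading the current block, the next block is already available.

The read-ahead translator can also act as a read aggregator: many small reads are bundled together and sent to the server as one large read request.

Read-ahead is controlled by page-size and page-count: page-size sets the size of each prefetched block, and page-count sets the number of blocks to prefetch.

The official site notes, however, that this translator is unnecessary on ordinary Ethernet, where the bandwidth can usually be saturated without it. It is mainly useful on IB-verbs or 10G Ethernet.

volume readahead-example
type performance/read-ahead
option page-size 256 # size of each prefetched block
option page-count 4 # number of blocks prefetched each time
option force-atime-update off # whether to force an access-time update on every read; if unset, access times are slightly inaccurate, because they reflect when the read-ahead translator fetched the data rather than when the application actually read it
subvolumes
end-volume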

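The write-behind translator (performance/write-behind) is one of the performance translators; it writes data to a cache first and to disk afterwards, to improve write performance.

The write-behind translator reduces write latency. It passes the write on to the backend storage and at the same time reports completion to the application, although the write is in fact still in progress. With write-behind, write requests can be issued continuously, as in a pipeline. This mode is best suited to the client side, where it reduces the application's write latency.

The write-behind translator can also aggregate write requests. If the aggregate-size option is set, consecutive writes are accumulated until they reach that size and are then written to storage in a single operation. This mode suits the server side, because it reduces disk head movement when many files are written in parallel.

volume write-behind-example
type performance/write-behind
option cache-size 3MB # cache size; the actual write happens once this much data has accumulated
option flush-behind on # handles workloads with many close()/flush() calls; suited to large numbers of small files
subvolumes
end-volume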

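The io-threads translator (performance/io-threads) is one of the performance translators; it increases the number of concurrent I/O threads to improve I/O performance.

The io-threads translator tries to increase the server process's capacity for handling file metadata and read/write I/O. Because the GlusterFS server is single-threaded, this translator can improve performance considerably. It is best used on the server side, loaded after the server protocol translator.

The io-threads translator dispatches read and write operations to separate threads. The total number of threads at any moment is fixed and configurable.

volume iothreads
type performance/io-threads
option thread-count 32 # number of threads to use
subvolumes
end-volume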

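The io-cache translator (performance/io-cache) is one of the performance translators; it caches data that has already been read, to improve I/O performance.

The io-cache translator keeps data that has already been read in a cache. This is useful when several applications access the same data repeatedly and reads far outnumber writes. For example, io-cache suits web-serving environments, where large numbers of clients only read files and very few write them.

When the io-cache translator detects a write, it removes the corresponding file from the cache.

The io-cache translator periodically checks cached files for consistency using their modification times. The validation timeout is configurable.

volume iothreads
type performance/io-cache
option cache-size 32MB # maximum amount of data that can be cached
option cache-timeout 1 # validation timeout, in seconds
option priority *:0 # file-matching patterns and their priorities
subvolumes
end-volume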

3. Brick management

3.1 Add a brick

# gluster volume add-brick test-volume 192.168.1.{151,152}:/mnt/brick2

3.2 Remove a brick

For a replicated volume, the number of bricks removed must be a multiple of the replica count.

#gluster volume remove-brick test-volume 192.168.1.{151,152}:/mnt/brick2 start

Once the removal has started, use the status command to check its state.

#gluster volume remove-brick test-volume 192.168.1.{151,152}:/mnt/brick2 status

Running commit removes the brick directly without migrating data, which suits users who do not need the data migrated.

#gluster volume remove-brick test-volume 192.168.1.{151,152}:/mnt/brick2 commit

3.3 Replace a brick

Task: replace 192.168.1.151:/mnt/brick0 with 192.168.1.152:/mnt/brick2

<1> Start the replacement

#gluster volume replace-brick test-volume 192.168.1.151:/mnt/brick0 192.168.1.152:/mnt/brick2 start

Error message: volume replace-brick: failed: /data/share2 or a prefix of it is already part of a volume

This means /mnt/brick2 was previously used as a brick. To resolve it:

# rm -rf /mnt/brick2/.glusterfs
# setfattr -x trusted.glusterfs.volume-id /mnt/brick2
# setfattr -x trusted.gfid /mnt/brick2

// As above, running replace-brick with start begins migrating data from the original brick to the brick that will replace it.

<2> Check whether the replacement has finished

#gluster volume replace-brick test-volume 192.168.1.151:/mnt/brick0 192.168.1.152:/mnt/brick2 status

<3> While data is being migrated, the abort command cancels the brick replacement.

#gluster volume replace-brick test-volume 192.168.1.151:/mnt/brick0 192.168.1.152:/mnt/brick2 abort

<4> After the migration finishes, run commit to complete the task and replace the brick. The volume info command then shows that the brick has been replaced.

#gluster volume replace-brick test-volume 192.168.1.151:/mnt/brick0 192.168.1.152:/mnt/brick2 commit

# If data is now written to /sf/data/vs/gfs/rep2, it is synced to 192.168.1.152:/mnt/brick0 and 192.168.1.152:/mnt/brick2, and no longer to 192.168.1.151:/mnt/brick0.

glusterfs command

[root@vClass-DgqxC glusterfs]# glusterfs --help
Usage: glusterfs [OPTION...] --volfile-server=SERVER [MOUNT-POINT]
  or:  glusterfs [OPTION...] --volfile=VOLFILE [MOUNT-POINT]

Basic options:
  -f, --volfile=VOLFILE                        File to use as VOLUME_FILE
  -l, --log-file=LOGFILE                       File to use for logging [default: /var/log/glusterfs/glusterfs.log]
  -L, --log-level=LOGLEVEL                     Logging severity. Valid options are DEBUG, INFO, WARNING, ERROR, CRITICAL and NONE [default: INFO]
  -s, --volfile-server=SERVER                  Server to get the volume file from. This option overrides --volfile option
      --volfile-max-fetch-attempts=MAX-ATTEMPTS  Maximum number of connect attempts to server. This option should be provided with --volfile-server option[default: 1]

Advanced Options:
      --acl                                    Mount the filesystem with POSIX ACL support
      --debug                                  Run in debug mode. This option sets --no-daemon, --log-level to DEBUG and --log-file to console
      --enable-ino32[=BOOL]                    Use 32-bit inodes when mounting to workaround broken applications that don't support 64-bit inodes
      --fopen-keep-cache                       Do not purge the cache on file open
      --mac-compat[=BOOL]                      Provide stubs for attributes needed for seamless operation on Macs [default: "off"]
  -N, --no-daemon                              Run in foreground
  -p, --pid-file=PIDFILE                       File to use as pid file
      --read-only                              Mount the filesystem in 'read-only' mode
      --selinux                                Enable SELinux label (extened attributes) support on inodes
  -S, --socket-file=SOCKFILE                   File to use as unix-socket
      --volfile-id=KEY                         'key' of the volfile to be fetched from server
      --volfile-server-port=PORT               Listening port number of volfile server
      --volfile-server-transport=TRANSPORT     Transport type to get volfile from server [default: socket]
      --volume-name=XLATOR-NAME                Translator name to be used for MOUNT-POINT [default: top most volume definition in VOLFILE]
      --worm                                   Mount the filesystem in 'worm' mode
      --xlator-option=XLATOR-NAME.OPTION=VALUE Add/override an option for a translator in volume file with specified value

Fuse options:
      --attribute-timeout=SECONDS              Set attribute timeout to SECONDS for inodes in fuse kernel module [default: 1]
      --background-qlen=N                      Set fuse module's background queue length to N [default: 64]
      --congestion-threshold=N                 Set fuse module's congestion threshold to N [default: 48]
      --direct-io-mode[=BOOL]                  Use direct I/O mode in fuse kernel module [default: "off" if big writes are supported, else "on" for fds not opened with O_RDONLY]
      --dump-fuse=PATH                         Dump fuse traffic to PATH
      --entry-timeout=SECONDS                  Set entry timeout to SECONDS in fuse kernel module [default: 1]
      --gid-timeout=SECONDS                    Set auxilary group list timeout to SECONDS for fuse translator [default: 0]
      --negative-timeout=SECONDS               Set negative timeout to SECONDS in fuse kernel module [default: 0]
      --use-readdirp[=BOOL]                    Use readdirp mode in fuse kernel module [default: "off"]
      --volfile-check                          Enable strict volume file checking

Miscellaneous Options:
  -?, --help                                   Give this help list
      --usage                                  Give a short usage message
  -V, --version                                Print program version

Mandatory or optional arguments to long options are also mandatory or optional for any corresponding short options.

Report bugs to <gluster-users@gluster.org>.

glusterfs --version

glusterfs 3.4.2 built on Mar 19 2014 02:14:52
Repository revision: git://git.gluster.com/glusterfs.git
Copyright (c) 2006-2013 Red Hat, Inc. <http://www.redhat.com/>
GlusterFS comes with ABSOLUTELY NO WARRANTY.
It is licensed to you under your choice of the GNU Lesser General Public License, version 3 or any later version (LGPLv3 or later), or the GNU General Public License, version 2 (GPLv2), in all cases as published by the Free Software Foundation.

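GlusterFS configuration

Default configuration directory: /var/lib/glusterd/

/var/lib/glusterd/glustershd/glustershd-server.vol

Volume configuration files: /var/lib/glusterd/vols/[volname....]

These are the configuration files used by the glusterfsd processes.

Peer configuration files: /var/lib/glusterd/peers/[peer id] (one per remote host; the local host is not listed)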

Server-side configuration

# vi /etc/glusterfs/glusterfsd.vol
volume posix
    type storage/posix
    option directory /sda4
end-volume

volume locks
    type features/locks
    subvolumes posix
end-volume

volume brick
    type performance/io-threads
    option thread-count 8
    subvolumes locks
end-volume

volume server
    type protocol/server
    option transport-type tcp
    subvolumes brick
    option auth.addr.brick.allow *
end-volume

Save the file, then start GlusterFS:

# service glusterfsd start

Client-side configuration

# vi /etc/glusterfs/glusterfs.vol
volume brick1
    type protocol/client
    option transport-type tcp
end-volume

volume brick2
    type protocol/client
    option transport-type tcp
    option remote-host .12
    option remote-subvolume brick
end-volume

volume brick3
    type protocol/client
    option transport-type tcp
    option remote-host .13
    option remote-subvolume brick
end-volume

volume brick4
    type protocol/client
    option transport-type tcp
    option remote-host .14
    option remote-subvolume brick
end-volume

volume brick5
    type protocol/client
    option transport-type tcp
    option remote-host .15
    option remote-subvolume brick
end-volume

volume brick6
    type protocol/client
    option transport-type tcp
    option remote-host .16
    option remote-subvolume brick
end-volume

volume afr1
    type cluster/replicate
    subvolumes brick1 brick2
end-volume

volume afr2
    type cluster/replicate
    subvolumes brick3 brick4
end-volume

volume afr3
    type cluster/replicate
    subvolumes brick5 brick6
end-volume

volume unify
    type cluster/distribute
    subvolumes afr1 afr2 afr3
end-volume

Most of the GlusterFS configuration lives on the client. The volfile above pairs the six servers into three replicate volumes and then combines those three replicate volumes into a single distribute volume that applications use.
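Writing six near-identical client blocks by hand is error-prone, so the layout described above (pairs of servers replicated, then distributed) can be generated. The following is a minimal sketch, not the author's tooling: the function name gen_client_volfile and the host arguments are hypothetical, and it assumes every server exports a subvolume named brick as in the server-side volfile.

```shell
#!/bin/sh
# Print a legacy client volfile on stdout.
# Each host becomes one protocol/client volume, consecutive hosts are
# paired into cluster/replicate volumes, and all pairs are combined
# under one cluster/distribute volume named "unify".
# Host names are placeholders supplied by the caller (assumption).
gen_client_volfile() {
    if [ $# -eq 0 ] || [ $(( $# % 2 )) -ne 0 ]; then
        echo "usage: gen_client_volfile HOST1 HOST2 [HOST3 HOST4 ...]" >&2
        return 1
    fi
    i=0
    for host in "$@"; do
        i=$((i + 1))
        printf 'volume brick%d\n' "$i"
        printf '  type protocol/client\n'
        printf '  option transport-type tcp\n'
        printf '  option remote-host %s\n' "$host"
        printf '  option remote-subvolume brick\n'
        printf 'end-volume\n\n'
    done
    afrs=""
    n=1
    while [ "$n" -lt "$i" ]; do
        a=$(( (n + 1) / 2 ))
        printf 'volume afr%d\n' "$a"
        printf '  type cluster/replicate\n'
        printf '  subvolumes brick%d brick%d\n' "$n" "$((n + 1))"
        printf 'end-volume\n\n'
        afrs="$afrs afr$a"
        n=$((n + 2))
    done
    printf 'volume unify\n'
    printf '  type cluster/distribute\n'
    printf '  subvolumes%s\n' "$afrs"
    printf 'end-volume\n'
}
```

For example, gen_client_volfile server1 server2 server3 server4 server5 server6 > /etc/glusterfs/glusterfs.vol would reproduce the three-pair layout. On clusters managed by glusterd, the volume create <NEW-VOLNAME> replica <COUNT> ... command shown in the help output produces the volfiles instead, so this hand-written form is mainly useful for understanding the translator graph.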

Configuration file:

/etc/glusterfs/glusterd.vol

volume management
    type mgmt/glusterd
    option working-directory /var/lib/glusterd
    option transport-type socket,rdma
    option transport.socket.keepalive-time 10
    option transport.socket.keepalive-interval 2
    option transport.socket.read-fail-log off
#   option base-port 49152
end-volume

/usr/sbin/glusterfs --volfile-id=test-volume --volfile-server=10.17.5.247 glusterfs-mnt

Given volfile:
+------------------------------------------------------------------------------+
volume test-volume-client-0
    type protocol/client
    option password ed44ac73-28d1-41a9-be3a-2888738a50b6
    option username 8b67c943-8c92-4fbd-9cd5-8750b2f513a4
    option transport-type tcp
    option remote-subvolume /mnt/ab796251092bf7c1d7657e728940927b/glusterfs_storage
    option remote-host 10.17.5.247
end-volume

volume test-volume-client-1
    type protocol/client
    option password ed44ac73-28d1-41a9-be3a-2888738a50b6
    option username 8b67c943-8c92-4fbd-9cd5-8750b2f513a4
    option transport-type tcp
    option remote-subvolume /mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
    option remote-host 10.17.66.112
end-volume

volume test-volume-dht
    type cluster/distribute
    subvolumes test-volume-client-0 test-volume-client-1
end-volume

volume test-volume-write-behind
    type performance/write-behind
    subvolumes test-volume-dht
end-volume

volume test-volume-read-ahead
    type performance/read-ahead
    subvolumes test-volume-write-behind
end-volume

volume test-volume-io-cache
    type performance/io-cache
    subvolumes test-volume-read-ahead
end-volume

volume test-volume-quick-read
    type performance/quick-read
    subvolumes test-volume-io-cache
end-volume

volume test-volume-open-behind
    type performance/open-behind
    subvolumes test-volume-quick-read
end-volume

volume test-volume-md-cache
    type performance/md-cache
    subvolumes test-volume-open-behind
end-volume

volume test-volume
    type debug/io-stats
    option count-fop-hits off
    option latency-measurement off
    subvolumes test-volume-md-cache
end-volume

Starting a volume spawns two processes: glusterfs and glusterfsd.

root 55579 0.0 0.5 472788 21640 ? Ssl Jun27 0:03 /usr/sbin/glusterfsd -s 10.17.66.112
    --volfile-id test-volume.10.17.66.112.mnt-02615f07eb1ecf582411bc5554a38170-glusterfs_storage
    -p /var/lib/glusterd/vols/test-volume/run/10.17.66.112-mnt-02615f07eb1ecf582411bc5554a38170-glusterfs_storage.pid
    -S /var/run/50a30acb134418925f349597a9e42150.socket
    --brick-name /mnt/02615f07eb1ecf582411bc5554a38170/glusterfs_storage
    -l /var/log/glusterfs/bricks/mnt-02615f07eb1ecf582411bc5554a38170-glusterfs_storage.log
    --xlator-option *-posix.glusterd-uuid=7c8a3979-923a-454e-ab34-087e8937c99e
    --brick-port 49153
    --xlator-option test-volume-server.listen-port=49153

root 105463 0.5 1.0 291320 43436 ? Ssl 14:42 0:00 /usr/sbin/glusterfs -s localhost
    --volfile-id gluster/nfs
    -p /var/lib/glusterd/nfs/run/nfs.pid
    -l /var/log/glusterfs/nfs.log
    -S /var/run/62d848065f5f7d608d3e2cddf8080ca1.socket

Here localhost is 10.17.66.112.

root 8970 0.1 0.5 471760 21288 ? Ssl 14:56 0:00 /usr/sbin/glusterfsd -s 10.17.66.112
    --volfile-id test-vol2.10.17.66.112.mnt-dir1
    -p /var/lib/glusterd/vols/test-vol2/run/10.17.66.112-mnt-dir1.pid
    -S /var/run/b8a5734482b6ce3857f9005c434914d0.socket
    --brick-name /mnt/dir1
    -l /var/log/glusterfs/bricks/mnt-dir1.log
    --xlator-option *-posix.glusterd-uuid=7c8a3979-923a-454e-ab34-087e8937c99e
    --brick-port 49154
    --xlator-option test-vol2-server.listen-port=49154

root 8983 0.4 0.5 405196 21020 ? Ssl 14:56 0:00 /usr/sbin/glusterfsd -s 10.17.66.112
    --volfile-id test-vol2.10.17.66.112.mnt-dir2
    -p /var/lib/glusterd/vols/test-vol2/run/10.17.66.112-mnt-dir2.pid
    -S /var/run/a8ed48f14e7a0330e27285433cb9d53c.socket
    --brick-name /mnt/dir2
    -l /var/log/glusterfs/bricks/mnt-dir2.log
    --xlator-option *-posix.glusterd-uuid=7c8a3979-923a-454e-ab34-087e8937c99e
    --brick-port 49155
    --xlator-option test-vol2-server.listen-port=49155

root 8992 0.1 0.5 471760 21000 ? Ssl 14:56 0:00 /usr/sbin/glusterfsd -s 10.17.66.112
    --volfile-id test-vol2.10.17.66.112.mnt-dir3
    -p /var/lib/glusterd/vols/test-vol2/run/10.17.66.112-mnt-dir3.pid
    -S /var/run/951f675995a3c3e8fdd912d4eb113d45.socket
    --brick-name /mnt/dir3
    -l /var/log/glusterfs/bricks/mnt-dir3.log
    --xlator-option *-posix.glusterd-uuid=7c8a3979-923a-454e-ab34-087e8937c99e
    --brick-port 49156
    --xlator-option test-vol2-server.listen-port=49156

root 9001 0.1 0.5 405196 20988 ? Ssl 14:56 0:00 /usr/sbin/glusterfsd -s 10.17.66.112
    --volfile-id test-vol2.10.17.66.112.mnt-dir4
    -p /var/lib/glusterd/vols/test-vol2/run/10.17.66.112-mnt-dir4.pid
    -S /var/run/0d19719155089fe9e262af568636017a.socket
    --brick-name /mnt/dir4
    -l /var/log/glusterfs/bricks/mnt-dir4.log
    --xlator-option *-posix.glusterd-uuid=7c8a3979-923a-454e-ab34-087e8937c99e
    --brick-port 49157
    --xlator-option test-vol2-server.listen-port=49157

root 9012 1.0 1.2 305420 50120 ? Ssl 14:56 0:00 /usr/sbin/glusterfs -s localhost
    --volfile-id gluster/nfs
    -p /var/lib/glusterd/nfs/run/nfs.pid
    -l /var/log/glusterfs/nfs.log
    -S /var/run/62d848065f5f7d608d3e2cddf8080ca1.socket

root 9018 0.6 0.6 543608 26844 ? Ssl 14:56 0:00 /usr/sbin/glusterfs -s localhost
    --volfile-id gluster/glustershd
    -p /var/lib/glusterd/glustershd/run/glustershd.pid
    -l /var/log/glusterfs/glustershd.log
    -S /var/run/0c9486f066c00daabdaf7b0ec89bdd57.socket
    --xlator-option *replicate*.node-uuid=7c8a3979-923a-454e-ab34-087e8937c99e
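Each glusterfsd process in the listing serves one brick on its own port, and the pairing is visible in the --brick-name and --brick-port arguments. A small sketch for pulling that mapping out of ps output follows; the function name brick_ports is hypothetical, and it assumes both flags appear with their values as separate whitespace-delimited fields, as they do in the listing.

```shell
#!/bin/sh
# Read ps output on stdin and print "<brick-name> <brick-port>" for every
# glusterfsd brick found. awk scans field by field, so it works whether a
# process is printed on one line or wrapped across several lines.
brick_ports() {
    awk '
        {
            for (i = 1; i < NF; i++) {
                if ($i == "--brick-name") name = $(i + 1)
                if ($i == "--brick-port") { print name, $(i + 1); name = "" }
            }
        }
    '
}
```

A typical invocation would be ps -e -ww -o args | brick_ports on a server node; the ports it reports are the ones clients must be able to reach in addition to the glusterd management port.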

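Mounting GlusterFS

To mount GlusterFS, run the following on the client:

# glusterfs -f /etc/glusterfs/glusterfs.vol /gmnt/ -l /var/log/glusterfs/glusterfs.log

-f /etc/glusterfs/glusterfs.vol specifies the GlusterFS configuration file (volfile)
/gmnt is the mount point
-l /var/log/glusterfs/glusterfs.log is the log file

GlusterFS can also be mounted at boot through fstab or autofs. Once mounted, files under /gmnt can be read and written exactly as on a local disk.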
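For the fstab route, a sketch of the entry is shown below. It assumes the volume is served by glusterd and that the mount.glusterfs helper is installed on the client; the function name gluster_fstab_line is hypothetical, and the server, volume and mount point are whatever the caller passes in.

```shell
#!/bin/sh
# Print an /etc/fstab entry for a GlusterFS volume.
# Arguments: SERVER VOLUME MOUNTPOINT (all supplied by the caller).
# Nothing is written to disk, so the line can be reviewed before it is
# appended to /etc/fstab. _netdev defers the mount until networking is up.
gluster_fstab_line() {
    printf '%s:/%s %s glusterfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}
```

For instance, gluster_fstab_line 10.17.5.247 test-volume /gmnt >> /etc/fstab followed by mount /gmnt would mount the volume now and on later boots. The one-off equivalent is mount -t glusterfs 10.17.5.247:/test-volume /gmnt.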

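At its core, Gluster is the GlusterFS distributed file system. To suit different application workloads it exposes many tunable parameters; the main ones relevant to performance tuning are:

(1) Global Cache-Size, default 32MB

(2) Per-file Write-Cache-Size, default 1MB

(3) Number of concurrent I/O threads, default 16

(4) Read-ahead switch, default On

(5) Stripe size, default 128KB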
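These parameters are changed per volume with gluster volume set. The sketch below prints the commands rather than running them; the mapping from the five parameters to option names is my assumption, not something stated in these notes, so confirm the names with gluster volume set help on your version. The function name tuning_cmds is hypothetical.

```shell
#!/bin/sh
# Print `gluster volume set` commands that restate the five listed
# defaults for one volume. The option names are an assumed mapping:
#   (1) cache size          -> performance.cache-size
#   (2) write cache size    -> performance.write-behind-window-size
#   (3) I/O thread count    -> performance.io-thread-count
#   (4) read-ahead switch   -> performance.read-ahead
#   (5) stripe size         -> cluster.stripe-block-size
tuning_cmds() {
    vol=$1
    cat <<EOF
gluster volume set $vol performance.cache-size 32MB
gluster volume set $vol performance.write-behind-window-size 1MB
gluster volume set $vol performance.io-thread-count 16
gluster volume set $vol performance.read-ahead on
gluster volume set $vol cluster.stripe-block-size 128KB
EOF
}
```

Running tuning_cmds test-volume shows the commands for review; edit the values as needed and pipe the result to sh on a server node to apply them. The stripe option only matters for striped volumes.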

FAQ:

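1. Re-adding a brick that was previously part of a volume fails with an error:

Error message: volume replace-brick: failed: /data/share2 or a prefix of it is already part of a volume

This means the directory (/mnt/brick2 in the commands below) was once a brick. To fix it, remove the leftover metadata directory and the directory's extended attributes:

# rm -rf /mnt/brick2/.glusterfs
# setfattr -x trusted.glusterfs.volume-id /mnt/brick2
# setfattr -x trusted.gfid /mnt/brick2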
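The same cleanup can be wrapped so that it only touches what is actually present. This is a sketch under assumptions: the function names was_brick and reset_brick_dir are hypothetical, getfattr and setfattr come from the attr package, and the commands must run as root on the server that holds the directory.

```shell
#!/bin/sh
# Succeed if DIR still contains the .glusterfs metadata directory that a
# brick leaves behind. This checks the directory only, not the xattrs.
was_brick() {
    [ -d "$1/.glusterfs" ]
}

# Remove the leftover brick markers from DIR.
# Destructive: use only on a directory that no volume references any more.
reset_brick_dir() {
    dir=$1
    rm -rf "$dir/.glusterfs"
    for attr in trusted.glusterfs.volume-id trusted.gfid; do
        # Remove an attribute only if it is actually set, so a second run
        # does not fail on a directory that is already clean.
        if getfattr -n "$attr" --absolute-names "$dir" >/dev/null 2>&1; then
            setfattr -x "$attr" "$dir"
        fi
    done
}
```

A cautious sequence is was_brick /mnt/brick2 && reset_brick_dir /mnt/brick2, after confirming with gluster volume info that the path is not listed under any volume.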

2. Operations on the glusterfs mount point fail with: Transport endpoint is not connected

volume info shows the volume as normal.

[root@vClass-ftYDC temp]# ls
ls: cannot open directory .: Transport endpoint is not connected
[root@vClass-ftYDC temp]# df
Filesystem                            1K-blocks     Used Available Use% Mounted on
/dev/mapper/vg_vclassftydc-lv_root     13286512  3914004   8674552  32% /
tmpfs                                   2013148        4   2013144   1% /dev/shm
/dev/sda1                                487652    31759    426197   7% /boot
/dev/mapper/vg_vclassftydc-lv_var      20511356   496752  18949644   3% /var
/dev/mapper/ssd-ssd                    20836352  9829584  11006768  48% /mnt/787fe74d9bef93c17a7aa195a05245b3
/dev/mapper/defaultlocal-defaultlocal  25567232 10059680  15507552  40% /mnt/ab796251092bf7c1d7657e728940927b
df: "/mnt/glusterfs-mnt": Transport endpoint is not connected
[root@vClass-ftYDC temp]# cd ..
-bash: cd: ..: Transport endpoint is not connected
[root@vClass-ftYDC temp]#

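Cause: the situation described in item 3 below, that is, the glusterfs mount point had been unmounted.

3. Some time after mounting glusterfs, the mount is found to have been unmounted. The mount log shows: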

[2017-06-28 05:45:51.861186] I [dht-layout.c:726:dht_layout_dir_mismatch] 0-test-vol2-dht: / - disk layout missing
[2017-06-28 05:45:51.861332] I [dht-common.c:623:dht_revalidate_cbk] 0-test-vol2-dht: mismatching layouts for /
[2017-06-28 05:46:02.499449] I [dht-layout.c:726:dht_layout_dir_mismatch] 0-test-vol2-dht: / - disk layout missing
[2017-06-28 05:46:02.499520] I [dht-common.c:623:dht_revalidate_cbk] 0-test-vol2-dht: mismatching layouts for /
[2017-06-28 05:46:05.701259] I [fuse-bridge.c:4628:fuse_thread_proc] 0-fuse: unmounting /mnt/glusterfs-mnt/
[2017-06-28 05:46:05.701898] W [glusterfsd.c:1002:cleanup_and_exit] (-->/lib64/libc.so.6(clone+0x6d) [0x7f09e546090d] (-->/lib64/libpthread.so.0(+0x7851) [0x7f09e5aff851] (-->/usr/sbin/glusterfs(glusterfs_sigwaiter+0xcd) [0x40533d]))) 0-: received signum (15), shutting down
[2017-06-28 05:46:05.701911] I [fuse-bridge.c:5260:fini] 0-fuse: Unmounting '/mnt/glusterfs-mnt/'.
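The log shows the client receiving signal 15 (SIGTERM) and shutting down, which leaves the mount point dead until it is mounted again. A possible watchdog is sketched below; it is an assumption on my part rather than the fix used here, the function names are hypothetical, and it presumes the volume is mounted through glusterd with mount -t glusterfs.

```shell
#!/bin/sh
# Succeed if MOUNTPOINT is listed as a FUSE GlusterFS mount.
# The optional second argument (default /proc/mounts) lets the check run
# against a saved copy of the mounts table.
is_gluster_mount() {
    _mp=${1%/}
    awk -v mp="$_mp" '$2 == mp && $3 == "fuse.glusterfs" { found = 1 }
                      END { exit !found }' "${2:-/proc/mounts}"
}

# Succeed only if the mount is listed AND still answers stat(); a dead
# FUSE mount fails stat() with "Transport endpoint is not connected".
mount_healthy() {
    is_gluster_mount "$1" && stat "$1" >/dev/null 2>&1
}

# Remount SERVER:/VOLUME on MOUNTPOINT when the mount is missing or dead.
remount_gluster() {
    server=$1; vol=$2; target=$3
    mount_healthy "$target" && return 0
    umount -l "$target" 2>/dev/null   # detach a stale entry, if any
    mount -t glusterfs "$server:/$vol" "$target"
}
```

Calling remount_gluster 10.17.66.112 test-vol2 /mnt/glusterfs-mnt from cron would restore the mount, but it does not explain who sent the signal; that still needs to be traced separately.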

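4. Restoring a node's configuration

Symptom: the configuration on one of the nodes is incorrect.

Fault simulation:

Delete part of the configuration on server2.

Configuration location: /var/lib/glusterd/

Fix:

Trigger a repair by syncing the configuration with the gluster tool:

# gluster volume sync server1 all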

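5. Inconsistent data in a replicated volume

Symptom: the data on a two-replica volume becomes inconsistent.

Fault simulation: delete the data on one of the bricks.

Fix:

Remount the volume.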

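6. A replicated volume's brick directory was deleted

How to resolve it

Fix: first replace the brick.

# gluster volume replace-brick bbs_img 10.20.0.201:/brick1/share2 10.20.0.201:/brick1/share start

# gluster volume replace-brick bbs_img 10.20.0.201:/brick1/share2 10.20.0.201:/brick1/share commit

Note that switching back to the original brick reports an error.

Solution: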

rm -rf /data/share2/.glusterfs

setfattr -x trusted.glusterfs.volume-id /data/share2

setfattr -x trusted.gfid /data/share2

7. The following error occurs

[root@vClass-v0jTX init.d]# gluster

gluster> volume set test_vol01 features.shard on

volume set: failed: One of the client 192.168.10.170:1023 is running with op-version 2 and doesn't support the required op-version 30700. This client needs to be upgraded or disconnected before running this command again

gluster>

8. Removing a brick fails as follows

gluster> volume remove-brick test_vol01 192.168.10.186:/mnt/63ef41a63399e6640a3c4abefa725497 192.168.10.186:/mnt/ad242fbe177ba330a0ea75a9d23fc936 force

Removing brick(s) can result in data loss. Do you want to Continue? (y/n) y

volume remove-brick commit force: failed: One or more nodes do not support the required op-version. Cluster op-version must atleast be 30600.

gluster>

Analysis: one node had been upgraded from 3.4.2 to 3.10.2.

Solution:
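No fix is recorded for this item. One plausible approach, given as an assumption rather than a verified solution: bring every node to the same release, then raise the cluster op-version to at least the 30600 the error message asks for. The sketch below helps compare nodes by reading the operating-version that glusterd stores locally; the function name node_op_version is hypothetical.

```shell
#!/bin/sh
# Print the operating-version recorded by glusterd on this node.
# Defaults to glusterd's working directory; pass another path as $1 to
# inspect a copy of the file.
node_op_version() {
    sed -n 's/^operating-version=//p' "${1:-/var/lib/glusterd/glusterd.info}"
}

# Suggested sequence (assumption, run manually):
#   1. Run node_op_version on every peer; a node still on 3.4.2 reports 2.
#   2. Upgrade the lagging nodes so all peers run the same release.
#   3. On one node: gluster volume set all cluster.op-version 30600
#      (30600 is the minimum named in the error above).
```

The same procedure should also address the features.shard failure in item 7, where a client running with op-version 2 blocked an option that requires 30700; in that case the old client has to be upgraded or disconnected first.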

9. The gluster mount point is unusable and cannot be unmounted

[root@vClass-1iUBT etc]# umount /mnt/d1f561a32ac1bf17cf183f36baac34d4
umount: /mnt/d1f561a32ac1bf17cf183f36baac34d4: device is busy.
        (In some cases useful info about processes that use
         the device is found by lsof(8) or fuser(1))
[root@vClass-1iUBT etc]#

Cause: a glusterfs process is still using the mount point. Use ps to find the glusterfs process whose arguments include the mount point and kill it; umount then succeeds.
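Finding that process by eye is easy to get wrong when several glusterfs daemons are running (NFS, self-heal, other mounts). The sketch below filters ps output for the client whose last argument is the mount point, which matches how the FUSE client appears in the process listings in these notes; the function name gluster_client_pids is hypothetical.

```shell
#!/bin/sh
# Read "PID ARGS..." lines on stdin (e.g. from `ps -e -ww -o pid,args`)
# and print the PID of each glusterfs client whose final argument is the
# given mount point. glusterfsd brick processes are not matched.
gluster_client_pids() {
    awk -v mp="${1%/}" '
        {
            last = $NF
            sub(/\/$/, "", last)          # tolerate a trailing slash
            if ($2 ~ /(^|\/)glusterfs$/ && last == mp) print $1
        }
    '
}
```

Usage would look like ps -e -ww -o pid,args | gluster_client_pids /mnt/d1f561a32ac1bf17cf183f36baac34d4, then kill the reported PID and run umount again. If other processes still hold files open under the mount point, fuser -vm on the path lists them, and umount -l detaches the mount lazily as a last resort.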

10. The mount point becomes invalid

The mount log under /var/log/glusterfs/ contains the following:

pending frames:
frame : type(1) op(TRUNCATE)
frame : type(1) op(OPEN)
frame : type(0) op(0)
frame : type(1) op(OPEN)
frame : type(0) op(0)
patchset: git://git.gluster.org/glusterfs.git
signal received: 11
time of crash: 2017-08-23 08:40:38
signal received: 11
time of crash: 2017-08-23 08:40:38
configuration details:
argp 1
backtrace 1
dlfcn 1
libpthread 1
argp 1
backtrace 1
dlfcn 1
libpthread 1
llistxattr 1
setfsid 1
spinlock 1
epoll.h 1
xattr.h 1
st_atim.tv_nsec 1
llistxattr 1
setfsid 1
spinlock 1
epoll.h 1
xattr.h 1
st_atim.tv_nsec 1
package-string: glusterfs 3.10.2
package-string: glusterfs 3.10.2
/usr/lib64/libglusterfs.so.0(_gf_msg_backtrace_nomem+0x9c)[0x7fd00cb58f4c]
/usr/lib64/libglusterfs.so.0(gf_print_trace+0x338)[0x7fd00cb64568]
/lib64/libc.so.6(+0x32920)[0x7fd00b4d1920]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x16053)[0x7fcfff4fd053]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x1641d)[0x7fcfff4fd41d]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x16546)[0x7fcfff4fd546]
/lib64/libc.so.6(+0x32920)[0x7fd00b4d1920]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x16053)[0x7fcfff4fd053]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x1641d)[0x7fcfff4fd41d]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x16546)[0x7fcfff4fd546]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x18f51)[0x7fcfff4fff51]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x19759)[0x7fcfff500759]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0x5f61)[0x7fcfff4ecf61]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0xf0ac)[0x7fcfff4f60ac]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0x4e51)[0x7fcfff2d3e51]
/usr/lib64/libglusterfs.so.0(call_resume_keep_stub+0x78)[0x7fd00cb75378]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0x713a)[0x7fcfff2d613a]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0xa828)[0x7fcfff2d9828]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0xb238)[0x7fcfff2da238]
/usr/lib64/glusterfs/3.10.2/xlator/performance/read-ahead.so(+0x5043)[0x7fcfff0c4043]
/usr/lib64/libglusterfs.so.0(default_truncate+0xdf)[0x7fd00cbd81ef]
/usr/lib64/glusterfs/3.10.2/xlator/performance/io-cache.so(+0x9032)[0x7fcffeca8032]
/usr/lib64/glusterfs/3.10.2/xlator/performance/quick-read.so(+0x3dad)[0x7fcffea9adad]
/usr/lib64/glusterfs/3.10.2/xlator/features/shard.so(+0xf0ac)[0x7fcfff4f60ac]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0x4e51)[0x7fcfff2d3e51]
/usr/lib64/libglusterfs.so.0(call_resume_keep_stub+0x78)[0x7fd00cb75378]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0x713a)[0x7fcfff2d613a]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0xa828)[0x7fcfff2d9828]
/usr/lib64/glusterfs/3.10.2/xlator/performance/write-behind.so(+0xb238)[0x7fcfff2da238]
/usr/lib64/glusterfs/3.10.2/xlator/performance/read-ahead.so(+0x5043)[0x7fcfff0c4043]
/usr/lib64/libglusterfs.so.0(default_truncate+0xdf)[0x7fd00cbd81ef]
/usr/lib64/glusterfs/3.10.2/xlator/performance/io-cache.so(+0x9032)[0x7fcffeca8032]
/usr/lib64/glusterfs/3.10.2/xlator/performance/quick-read.so(+0x3dad)[0x7fcffea9adad]
/usr/lib64/libglusterfs.so.0(default_truncate+0xdf)[0x7fd00cbd81ef]
/usr/lib64/glusterfs/3.10.2/xlator/performance/md-cache.so(+0x623e)[0x7fcffe67523e]
/usr/lib64/glusterfs/3.10.2/xlator/debug/io-stats.so(+0x831e)[0x7fcffe44f31e]
/usr/lib64/libglusterfs.so.0(default_truncate+0xdf)[0x7fd00cbd81ef]
/usr/lib64/glusterfs/3.10.2/xlator/performance/md-cache.so(+0x623e)[0x7fcffe67523e]
/usr/lib64/glusterfs/3.10.2/xlator/debug/io-stats.so(+0x831e)[0x7fcffe44f31e]
/usr/lib64/libglusterfs.so.0(default_truncate+0xdf)[0x7fd00cbd81ef]
/usr/lib64/glusterfs/3.10.2/xlator/meta.so(+0x3776)[0x7fcffe232776]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x10196)[0x7fd0046e7196]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x7245)[0x7fd0046de245]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x6f6e)[0x7fd0046ddf6e]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x728e)[0x7fd0046de28e]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x72f3)[0x7fd0046de2f3]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x7328)[0x7fd0046de328]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x71b8)[0x7fd0046de1b8]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x726e)[0x7fd0046de26e]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x72b8)[0x7fd0046de2b8]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x1ef20)[0x7fd0046f5f20]
/lib64/libpthread.so.0(+0x7851)[0x7fd00bc26851]
/lib64/libc.so.6(clone+0x6d)[0x7fd00b58790d]
---------
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x71b8)[0x7fd0046de1b8]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x726e)[0x7fd0046de26e]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x72b8)[0x7fd0046de2b8]
/usr/lib64/glusterfs/3.10.2/xlator/mount/fuse.so(+0x1ef20)[0x7fd0046f5f20]
/lib64/libpthread.so.0(+0x7851)[0x7fd00bc26851]
/lib64/libc.so.6(clone+0x6d)[0x7fd00b58790d]
---------

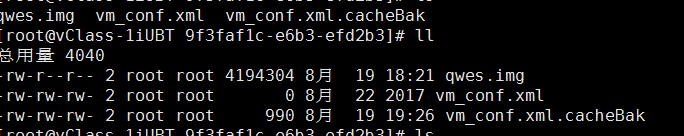

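11. An abnormally large file appears in the glusterfs storage directory

As a result, operations on the file fail.

The following is from the brick's actual storage directory. Running scp vm_conf.xml.cacheBak vm_conf.xml on the mount point caused vm_conf.xml, as seen under the glusterfs mount point, to report a file size of 16E.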

Links:

Official site: https://www.gluster.org/

http://blog.gluster.org/

Source code: https://github.com/gluster/glusterfs

00086_GlusterFS performance monitoring & quotas https://github.com/jiobxn/one/wiki/00086_GlusterFS性能监控&配额

GlusterFS technology in detail http://www.tuicool.com/articles/AbE7Vr

Building a highly available storage cluster with GlusterFS http://blog.chinaunix.net/uid-144593-id-2804190.html

GlusterFS notes https://my.oschina.net/kisops/blog/153466

Using GlusterFS as a KVM storage backend https://my.oschina.net/kisops/blog/153466

GlusterFS architecture and maintenance http://www.2cto.com/net/201607/526218.html

Basic approach to GlusterFS performance tuning http://blog.csdn.net/liuaigui/article/details/18015427

GlusterFS distributed cluster file system: installation, configuration, and performance testing http://www.3566t.com/news/show-748324.html

Storage | Caching and tiering https://sanwen8.cn/p/3728WOT.html

GlusterFS enterprise features: EC erasure coding http://www.taocloudx.com/index.php?a=shows&catid=4&id=68

A first look at glusterfs: handling split-brain http://nosmoking.blog.51cto.com/3263888/1718157/