1. Original article

https://blog.csdn.net/leixiaohua1020/article/details/46889389

A tribute to Lei Xiaohua (雷霄骅)!!!

2. How the video player is put together

1) A video player can be roughly divided into these modules: file I/O, demuxing, audio/video decoding, video rendering, and audio playback.

2) FFmpeg can implement all of the above, but since the goal is to build a player by hand, the project above uses FFmpeg only to read and decode the video file.

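3) SDL is a cross-platform audio/video rendering library used mainly for games; the project uses SDL to render the decoded YUV images and to play the decoded audio.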

3. Project code analysis

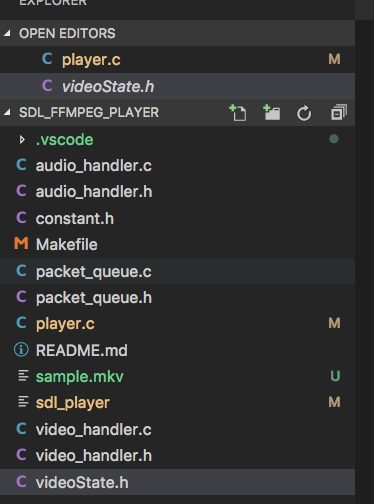

File layout:

Core code:

```c
typedef struct VideoState {
    AVFormatContext *pFormatCtx;
    int videoStream, audioStream;

    AVStream *audio_st;
    PacketQueue audioq;
    uint8_t audio_buf[(MAX_AUDIO_FRAME_SIZE * 3) / 2];
    unsigned int audio_buf_size;
    unsigned int audio_buf_index;
    AVFrame audio_frame;
    AVPacket audio_pkt;
    uint8_t *audio_pkt_data;
    int audio_pkt_size;

    AVStream *video_st;
    PacketQueue videoq;
    VideoPicture pictq[VIDEO_PICTURE_QUEUE_SIZE];
    int pictq_size, pictq_rindex, pictq_windex;
    // guards the picture queue (pictq) across threads
    SDL_mutex *pictq_mutex;
    SDL_cond *pictq_cond;

    SDL_Thread *parse_tid;
    SDL_Thread *video_tid;
    char filename[1024];
    int quit;
    AVIOContext *io_context;
    struct SwsContext *sws_ctx;

    double video_clock;
    double audio_clock;
    double frame_last_delay;
    double frame_last_pts;
    double frame_timer;
} VideoState;
```

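The struct above holds the core objects shared across the player. It is used throughout the program and serves as the player's global context.

It contains two packet queues, one for video (`videoq`) and one for audio (`audioq`). Here is how the queue is defined: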

```c
typedef struct PacketQueue {
    AVPacketList *first_pkt, *last_pkt;
    int nb_packets;
    int size;
    // mutex: makes every queue operation atomic
    SDL_mutex *mutex;
    // condition variable: lets put wake up a get that is waiting
    SDL_cond *cond;
} PacketQueue;
```

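The queue contains a mutex and a condition variable (`SDL_cond`, not a semaphore), which together make concurrent put and get operations thread-safe. The implementation: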

```c
int packet_queue_put(PacketQueue *q, AVPacket *pkt) {
    AVPacketList *pkt1;

    if(av_dup_packet(pkt) < 0) {
        return -1;
    }
    pkt1 = (AVPacketList*)av_malloc(sizeof(AVPacketList));
    if (!pkt1)
        return -1;
    pkt1->pkt = *pkt;
    pkt1->next = NULL;

    SDL_LockMutex(q->mutex);
    if (!q->last_pkt)
        q->first_pkt = pkt1;
    else
        q->last_pkt->next = pkt1;
    q->last_pkt = pkt1;
    q->nb_packets++;
    q->size += pkt1->pkt.size;
    SDL_CondSignal(q->cond);
    SDL_UnlockMutex(q->mutex);
    return 0;
}

int packet_queue_get(PacketQueue *q, AVPacket *pkt, int block)
{
    AVPacketList *pkt1;
    int ret;

    SDL_LockMutex(q->mutex);
    while(1) {
        if(global_video_state->quit) {
            ret = -1;
            break;
        }
        pkt1 = q->first_pkt;
        if (pkt1) {
            q->first_pkt = pkt1->next;
            if (!q->first_pkt)
                q->last_pkt = NULL;
            q->nb_packets--;
            q->size -= pkt1->pkt.size;
            *pkt = pkt1->pkt;
            av_free(pkt1);
            ret = 1;
            break;
        } else if (!block) {
            ret = 0;
            break;
        } else {
            SDL_CondWait(q->cond, q->mutex);
        }
    }
    SDL_UnlockMutex(q->mutex);
    return ret;
}
```

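With this design, several threads can share the queue safely: `packet_queue_put` signals the condition variable after appending a packet, and a consumer that calls `packet_queue_get` with `block` set sleeps in `SDL_CondWait` until a packet arrives or `quit` is set.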
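To see the pattern in isolation, here is a minimal sketch of the same mutex + condition-variable queue outside FFmpeg and SDL. It rests on assumptions: POSIX threads (`pthread_mutex_t`, `pthread_cond_t`) stand in for `SDL_mutex`/`SDL_cond`, an `int` payload stands in for `AVPacket`, and the names `IntQueue`, `int_queue_put`, `int_queue_get` and `int_queue_quit` are hypothetical. The return values of `int_queue_get` follow `packet_queue_get`: 1 for an item, 0 when empty in non-blocking mode, -1 after quit.

```c
#include <pthread.h>
#include <stdlib.h>

typedef struct Node {
    int value;
    struct Node *next;
} Node;

typedef struct {
    Node *first, *last;
    int count;
    int quit;               /* plays the role of global_video_state->quit */
    pthread_mutex_t mutex;  /* guards every field above */
    pthread_cond_t cond;    /* signalled on put and on quit */
} IntQueue;

void int_queue_init(IntQueue *q) {
    q->first = q->last = NULL;
    q->count = 0;
    q->quit = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

int int_queue_put(IntQueue *q, int value) {
    Node *n = malloc(sizeof(*n));
    if (!n)
        return -1;
    n->value = value;
    n->next = NULL;
    pthread_mutex_lock(&q->mutex);
    if (!q->last)
        q->first = n;
    else
        q->last->next = n;
    q->last = n;
    q->count++;
    pthread_cond_signal(&q->cond);      /* wake one waiting consumer */
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* 1: got a value, 0: empty and block == 0, -1: quit requested */
int int_queue_get(IntQueue *q, int *value, int block) {
    int ret;
    pthread_mutex_lock(&q->mutex);
    for (;;) {
        if (q->quit) {
            ret = -1;
            break;
        }
        Node *n = q->first;
        if (n) {
            q->first = n->next;
            if (!q->first)
                q->last = NULL;
            q->count--;
            *value = n->value;
            free(n);
            ret = 1;
            break;
        } else if (!block) {
            ret = 0;
            break;
        }
        /* atomically releases the mutex while sleeping, re-locks on wake-up */
        pthread_cond_wait(&q->cond, &q->mutex);
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}

void int_queue_quit(IntQueue *q) {
    pthread_mutex_lock(&q->mutex);
    q->quit = 1;                        /* set under the lock ... */
    pthread_cond_broadcast(&q->cond);   /* ... and wake every waiter */
    pthread_mutex_unlock(&q->mutex);
}
```

One design difference: `int_queue_quit` sets the flag while holding the mutex and broadcasts. In `main` below, `quit` is set and `SDL_CondSignal` is called without taking the queue mutex, so a consumer that has just checked `quit` but has not yet entered `SDL_CondWait` can miss the wake-up; that is harmless there only because `main` returns right afterwards.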

The `main` entry point:

```c
int main(int argc, char *argv[])
{
    SDL_Event event;
    VideoState *is;

    is = (VideoState*)av_mallocz(sizeof(VideoState));
    global_video_state = is;

    if(argc < 2) {
        fprintf(stderr, "Usage: test <file>\n");
        exit(1);
    }

    // Register all formats and codecs
    av_register_all();
    avformat_network_init();

    if(SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {
        fprintf(stderr, "Could not initialize SDL - %s\n", SDL_GetError());
        exit(1);
    }

    // Make a screen to put our video
    screen = SDL_SetVideoMode(640, 480, 0, 0);
    if(!screen) {
        fprintf(stderr, "SDL: could not set video mode - exiting\n");
        exit(1);
    }

    av_strlcpy(is->filename, argv[1], 1024);

    is->pictq_mutex = SDL_CreateMutex();
    is->pictq_cond = SDL_CreateCond();

    schedule_refresh(is, 40);

    is->parse_tid = SDL_CreateThread(decode_thread, is);
    if(!is->parse_tid) {
        av_free(is);
        return -1;
    }

    while(1) {
        SDL_WaitEvent(&event);
        switch(event.type) {
        case FF_QUIT_EVENT:
        case SDL_QUIT:
            is->quit = 1;
            /*
             * If the video has finished playing, then both the picture and
             * audio queues are waiting for more data. Make them stop
             * waiting and terminate normally.
             */
            SDL_CondSignal(is->audioq.cond);
            SDL_CondSignal(is->videoq.cond);
            SDL_Quit();
            return 0;
            break;
        case FF_ALLOC_EVENT:
            alloc_picture(event.user.data1);
            break;
        case FF_REFRESH_EVENT:
            video_refresh_timer(event.user.data1);
            break;
        default:
            break;
        }
    }
    return 0;
}
```

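Two lines in the code above are the key ones.

The first, `SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)`, initializes SDL's video, audio and timer subsystems; it and the window setup around it are all SDL initialization.

The second, `is->parse_tid = SDL_CreateThread(decode_thread, is)`, is the real entry point: it creates the decode thread, and the decode thread in turn creates the video thread (`video_thread`) when it opens the video stream in `stream_component_open`. The pictures themselves are displayed on the main thread, which handles `FF_REFRESH_EVENT` by calling `video_refresh_timer`.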

```c
int stream_component_open(VideoState *is, int stream_index)
{
    AVFormatContext *pFormatCtx = is->pFormatCtx;
    AVCodecContext *codecCtx = NULL;
    AVCodec *codec = NULL;
    AVDictionary *optionsDict = NULL;
    SDL_AudioSpec wanted_spec, spec;

    if(stream_index < 0 || (unsigned int)stream_index >= pFormatCtx->nb_streams) {
        return -1;
    }

    // Get a pointer to the codec context for this stream
    codecCtx = pFormatCtx->streams[stream_index]->codec;

    if(codecCtx->codec_type == AVMEDIA_TYPE_AUDIO) {
        // Set audio settings from codec info; SDL pulls samples through audio_callback
        wanted_spec.freq = codecCtx->sample_rate;
        wanted_spec.format = AUDIO_S16SYS;
        wanted_spec.channels = codecCtx->channels;
        wanted_spec.silence = 0;
        wanted_spec.samples = SDL_AUDIO_BUFFER_SIZE;
        wanted_spec.callback = audio_callback;
        wanted_spec.userdata = is;
        if(SDL_OpenAudio(&wanted_spec, &spec) < 0) {
            fprintf(stderr, "SDL_OpenAudio: %s\n", SDL_GetError());
            return -1;
        }
    }

    codec = avcodec_find_decoder(codecCtx->codec_id);
    if(!codec || (avcodec_open2(codecCtx, codec, &optionsDict) < 0)) {
        fprintf(stderr, "Unsupported codec!\n");
        return -1;
    }

    switch(codecCtx->codec_type) {
    case AVMEDIA_TYPE_AUDIO:
        is->audioStream = stream_index;
        is->audio_st = pFormatCtx->streams[stream_index];
        is->audio_buf_size = 0;
        is->audio_buf_index = 0;
        memset(&is->audio_pkt, 0, sizeof(is->audio_pkt));
        packet_queue_init(&is->audioq);
        SDL_PauseAudio(0);   // start audio playback (SDL begins calling audio_callback)
        break;
    case AVMEDIA_TYPE_VIDEO:
        is->videoStream = stream_index;
        is->frame_timer = (double)av_gettime() / 1000000.0;
        is->video_st = pFormatCtx->streams[stream_index];
        packet_queue_init(&is->videoq);
        is->video_tid = SDL_CreateThread(video_thread, is);
        // converter from the decoder's pixel format to YUV420P for display
        is->sws_ctx = sws_getContext(
            is->video_st->codec->width,
            is->video_st->codec->height,
            is->video_st->codec->pix_fmt,
            is->video_st->codec->width,
            is->video_st->codec->height,
            AV_PIX_FMT_YUV420P,
            SWS_BILINEAR,
            NULL, NULL, NULL
        );
        /* codecCtx->get_buffer2 = our_get_buffer; */
        break;
    default:
        break;
    }
    return 0;
}
```
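`SDL_OpenAudio` works in pull mode: SDL calls `audio_callback(userdata, stream, len)` from its own audio thread whenever the device needs exactly `len` more bytes, and `SDL_PauseAudio(0)` starts those calls. The body of `audio_callback` is not shown above, so the following is only a sketch of how fields like `audio_buf`, `audio_buf_size` and `audio_buf_index` are typically used: hand out what is left in the buffer, refill it by decoding when it runs dry, and output silence when nothing can be decoded. The decode hook `decode_fn`, the `demo_decode` function, `AudioState`, and the buffer and silence sizes are all hypothetical.

```c
#include <stdint.h>
#include <string.h>

#define SILENCE_BYTES 1024   /* assumed amount of silence emitted per failed refill */

/* Hypothetical decode hook: writes decoded PCM into buf, returns bytes or <= 0. */
typedef int (*decode_fn)(void *opaque, uint8_t *buf, int capacity);

typedef struct {
    uint8_t buf[4096];        /* like audio_buf (size assumed) */
    unsigned int buf_size;    /* valid bytes in buf, like audio_buf_size */
    unsigned int buf_index;   /* bytes already handed out, like audio_buf_index */
    decode_fn decode;
    void *opaque;
} AudioState;

/* Same contract as an SDL audio callback: fill exactly len bytes of stream. */
void audio_fill(AudioState *as, uint8_t *stream, int len) {
    while (len > 0) {
        if (as->buf_index >= as->buf_size) {
            /* buffer drained: decode more data */
            int n = as->decode(as->opaque, as->buf, (int)sizeof(as->buf));
            if (n <= 0) {
                /* nothing decoded: zeros are silence for signed 16-bit samples */
                as->buf_size = SILENCE_BYTES;
                memset(as->buf, 0, as->buf_size);
            } else {
                as->buf_size = (unsigned int)n;
            }
            as->buf_index = 0;
        }
        unsigned int chunk = as->buf_size - as->buf_index;
        if (chunk > (unsigned int)len)
            chunk = (unsigned int)len;
        memcpy(stream, as->buf + as->buf_index, chunk);
        stream += chunk;
        len -= (int)chunk;
        as->buf_index += chunk;
    }
}

/* Demo decoder: produces *opaque frames of {1, 2, 3}, then fails. */
int demo_decode(void *opaque, uint8_t *buf, int capacity) {
    int *frames_left = (int *)opaque;
    if (*frames_left == 0 || capacity < 3)
        return -1;
    (*frames_left)--;
    buf[0] = 1;
    buf[1] = 2;
    buf[2] = 3;
    return 3;
}
```

With two demo frames and an 8-byte request, the callback copies `1 2 3 1 2 3` and then pads the last two bytes with silence, leaving the rest of the silence buffer for the next call.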

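Now we have both the decode thread and the video thread. How is work (packets) passed between them?

Through the audio and video queues in the global context shown earlier.

The design is elegant. A consumer blocks when its queue is empty: `packet_queue_get` with `block` set waits on the condition variable. The producer is throttled when the queues are full: the decode thread stops reading while a queue is over its size limit (see the loop below). As a result, when the network is poor and no data arrives, the queues drain and everything downstream automatically "pauses" until more data comes in.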

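```c
/* Excerpt: the packet-reading loop of decode_thread */
while(1) {
    if(is->quit) {
        break;
    }
    // seek stuff goes here
    if(is->audioq.size > MAX_AUDIOQ_SIZE ||
       is->videoq.size > MAX_VIDEOQ_SIZE) {
        SDL_Delay(10);
        continue;
    }
    /* ... read the next packet and put it into audioq or videoq ... */
}
```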

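When either queue exceeds its size limit, the decode thread stops putting data into the queues and checks again every 10 ms.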
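The `SDL_Delay(10)` loop is polling. If you would rather have the producer sleep until space is actually freed, one option (an alternative, not what the project does) is a byte budget guarded by a condition variable: the decode thread waits on `not_full` while the queued size is over the limit, and the consumer signals after removing data. This sketch uses POSIX threads instead of SDL; `ByteBudget` and the `budget_*` functions are hypothetical names.

```c
#include <pthread.h>
#include <stddef.h>

typedef struct {
    long size;                 /* bytes currently queued, like audioq.size */
    long max_size;             /* like MAX_AUDIOQ_SIZE / MAX_VIDEOQ_SIZE */
    int quit;
    pthread_mutex_t mutex;
    pthread_cond_t not_full;   /* signalled when size drops back under the limit */
} ByteBudget;

void budget_init(ByteBudget *b, long max_size) {
    b->size = 0;
    b->max_size = max_size;
    b->quit = 0;
    pthread_mutex_init(&b->mutex, NULL);
    pthread_cond_init(&b->not_full, NULL);
}

/* Producer: block while over budget, then account for n bytes.
 * Returns 0 on success, -1 if quit was requested. */
int budget_acquire(ByteBudget *b, long n) {
    int ret;
    pthread_mutex_lock(&b->mutex);
    while (b->size > b->max_size && !b->quit)
        pthread_cond_wait(&b->not_full, &b->mutex);
    ret = b->quit ? -1 : 0;
    if (ret == 0)
        b->size += n;
    pthread_mutex_unlock(&b->mutex);
    return ret;
}

/* Consumer: give back n bytes after taking a packet out. */
void budget_release(ByteBudget *b, long n) {
    pthread_mutex_lock(&b->mutex);
    b->size -= n;
    if (b->size <= b->max_size)
        pthread_cond_signal(&b->not_full);
    pthread_mutex_unlock(&b->mutex);
}

/* Shutdown: wake a producer blocked in budget_acquire. */
void budget_quit(ByteBudget *b) {
    pthread_mutex_lock(&b->mutex);
    b->quit = 1;
    pthread_cond_broadcast(&b->not_full);
    pthread_mutex_unlock(&b->mutex);
}
```

Like the original `size > MAX_...` check, the limit is tested before a packet is added, so the total can overshoot by up to one packet.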

```c
int queue_picture(VideoState *is, AVFrame *pFrame, double pts)
{
    VideoPicture *vp;
    AVPicture pict;

    /* wait until we have space for a new pic */
    SDL_LockMutex(is->pictq_mutex);
    // the display queue is full: wait for a signal that a slot was freed
    while(is->pictq_size >= VIDEO_PICTURE_QUEUE_SIZE &&
          !is->quit) {
        SDL_CondWait(is->pictq_cond, is->pictq_mutex);
    }
    SDL_UnlockMutex(is->pictq_mutex);

    if(is->quit)
        return -1;

    /* ... */
```

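The picture queue is bounded the same way: `queue_picture` compares `pictq_size` with its own limit, `VIDEO_PICTURE_QUEUE_SIZE`, and if the queue is full the video thread blocks on `pictq_cond` until `video_refresh_timer` displays a picture and frees a slot. This keeps memory use under precise control.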
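`pictq` is a fixed-size ring buffer: `queue_picture` writes at `pictq_windex`, `video_refresh_timer` reads at `pictq_rindex`, both indices wrap at `VIDEO_PICTURE_QUEUE_SIZE`, and `pictq_size` counts filled slots. The single-threaded sketch below shows only that index arithmetic and leaves out locking (in the code shown, `pictq_size` changes under `pictq_mutex`, while `pictq_rindex` is advanced without the lock because only the main thread touches it). `PictRing`, `PICTQ_SIZE` (2 here, an arbitrary value) and the `pts_ms` payload are hypothetical.

```c
#define PICTQ_SIZE 2   /* stand-in for VIDEO_PICTURE_QUEUE_SIZE; value assumed */

typedef struct {
    int pts_ms[PICTQ_SIZE];   /* stand-in for VideoPicture pictq[...] */
    int size, rindex, windex; /* like pictq_size, pictq_rindex, pictq_windex */
} PictRing;

/* Writer side (queue_picture). Returns 0 when full, where the real code
 * would wait on pictq_cond instead. */
int ring_push(PictRing *r, int pts_ms) {
    if (r->size >= PICTQ_SIZE)
        return 0;
    r->pts_ms[r->windex] = pts_ms;
    if (++r->windex == PICTQ_SIZE)
        r->windex = 0;
    r->size++;
    return 1;
}

/* Reader side (video_refresh_timer). Returns 0 when empty, where the real
 * code would just schedule another refresh. */
int ring_pop(PictRing *r, int *pts_ms) {
    if (r->size == 0)
        return 0;
    *pts_ms = r->pts_ms[r->rindex];
    if (++r->rindex == PICTQ_SIZE)
        r->rindex = 0;
    r->size--;
    return 1;
}
```

Because the buffer never grows, the memory for decoded pictures is fixed at `PICTQ_SIZE` slots no matter how fast the decoder runs.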

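Even more neatly, rendering of a decoded frame is coordinated with a mutex, a condition variable and the SDL event queue. If the picture buffer `vp->bmp` does not exist yet or has the wrong size, the video thread does not allocate it itself: it pushes an `FF_ALLOC_EVENT` so that the main thread allocates it (`alloc_picture`), then waits on `pictq_cond` until `vp->allocated` is set. Because the `bmp` being rendered is shared between threads, this handshake is needed for thread safety.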

```c
/* Excerpt from queue_picture: vp is the picture slot about to be written */
if(!vp->bmp ||
   vp->width != is->video_st->codec->width ||
   vp->height != is->video_st->codec->height) {
    SDL_Event event;

    vp->allocated = 0;
    /* we have to do it in the main thread */
    event.type = FF_ALLOC_EVENT;
    event.user.data1 = is;
    SDL_PushEvent(&event);

    /* wait until we have a picture allocated */
    SDL_LockMutex(is->pictq_mutex);
    while(!vp->allocated && !is->quit) {
        SDL_CondWait(is->pictq_cond, is->pictq_mutex);
    }
    SDL_UnlockMutex(is->pictq_mutex);
    if(is->quit) {
        return -1;
    }
}
```
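The allocation handshake can be modelled without SDL. In this sketch a worker thread (playing the video thread) marks a request and waits on a condition variable, while the "main thread" services the request, sets the equivalent of `vp->allocated`, and wakes the worker. A `requested` flag replaces the pushed `FF_ALLOC_EVENT` and `malloc` replaces creating the SDL picture; all names (`AllocSlot`, `worker`, `service_one_request`, `run_alloc_demo`) are hypothetical.

```c
#include <pthread.h>
#include <stdlib.h>
#include <string.h>

typedef struct {
    pthread_mutex_t mutex;   /* like pictq_mutex */
    pthread_cond_t cond;     /* like pictq_cond, used in both directions */
    int requested;           /* stands in for the FF_ALLOC_EVENT in SDL's queue */
    int allocated;           /* like vp->allocated: main thread has handled it */
    void *bmp;               /* like vp->bmp; plain heap memory here */
    size_t bytes;
} AllocSlot;

/* Video-thread side: post the request, then wait until it has been handled. */
static void *worker(void *arg) {
    AllocSlot *s = arg;
    pthread_mutex_lock(&s->mutex);
    s->allocated = 0;
    s->requested = 1;                            /* "push the event" */
    pthread_cond_broadcast(&s->cond);
    while (!s->allocated)
        pthread_cond_wait(&s->cond, &s->mutex);
    pthread_mutex_unlock(&s->mutex);
    return NULL;
}

/* Main-thread side: wait for one request and service it (like alloc_picture). */
static void service_one_request(AllocSlot *s) {
    pthread_mutex_lock(&s->mutex);
    while (!s->requested)
        pthread_cond_wait(&s->cond, &s->mutex);
    s->requested = 0;
    s->bmp = malloc(s->bytes);
    s->allocated = 1;                            /* set even on failure: never hang */
    pthread_cond_broadcast(&s->cond);
    pthread_mutex_unlock(&s->mutex);
}

/* One full round trip; returns 1 if the buffer was allocated, -1 on thread error. */
int run_alloc_demo(size_t bytes) {
    AllocSlot s;
    pthread_t tid;
    int ok;
    memset(&s, 0, sizeof s);
    s.bytes = bytes;
    pthread_mutex_init(&s.mutex, NULL);
    pthread_cond_init(&s.cond, NULL);
    if (pthread_create(&tid, NULL, worker, &s) != 0)
        return -1;
    service_one_request(&s);
    pthread_join(tid, NULL);
    ok = s.bmp != NULL;
    free(s.bmp);
    pthread_cond_destroy(&s.cond);
    pthread_mutex_destroy(&s.mutex);
    return ok;
}
```

Either thread may reach the mutex first: if the worker is first, the main thread sees `requested` already set and skips its wait; if the main thread is first, it sleeps until the worker's broadcast.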

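With this approach, memory use gets a hard upper bound simply by limiting the queue sizes, which makes memory usage precisely controllable on embedded devices.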
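To put numbers on that bound: a decoded YUV420P picture takes width × height bytes of luma plus two chroma planes of a quarter of that size each, i.e. 1.5 bytes per pixel, so one 640×480 picture is 460,800 bytes and one 1920×1080 picture is 3,110,400 bytes. The picture queue needs about `VIDEO_PICTURE_QUEUE_SIZE` times that, on top of the packet queues' `MAX_AUDIOQ_SIZE` + `MAX_VIDEOQ_SIZE` (each may overshoot by one packet). The helper below only does this arithmetic; it ignores row padding and per-object overhead, and the function names are hypothetical.

```c
#include <stddef.h>

/* Bytes for one YUV420P picture: full-size Y plane plus U and V planes
 * subsampled 2x horizontally and vertically (odd sizes rounded up). */
size_t yuv420p_frame_bytes(size_t width, size_t height) {
    size_t chroma_w = (width + 1) / 2;
    size_t chroma_h = (height + 1) / 2;
    return width * height + 2 * chroma_w * chroma_h;
}

/* Approximate upper bound for a picture queue with `slots` entries. */
size_t pictq_bytes(size_t slots, size_t width, size_t height) {
    return slots * yuv420p_frame_bytes(width, height);
}
```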

4. Audio/video synchronization

```c
void video_refresh_timer(void *userdata) {
    VideoState *is = (VideoState *)userdata;
    VideoPicture *vp;
    double actual_delay, delay, sync_threshold, ref_clock, diff;

    if(is->video_st) {
        if(is->pictq_size == 0) {
            schedule_refresh(is, 1);
        } else {
            /* printf("video clock %f audio clock %f\n", is->video_clock, is->audio_clock); */
            /* printf("audio clock %f", is->audio_clock); */
            vp = &is->pictq[is->pictq_rindex];

            delay = vp->pts - is->frame_last_pts; /* the pts from last time */
            /* printf("delay %f ", delay); */
            if(delay <= 0 || delay >= 1.0) {
                /* if incorrect delay, use previous one */
                delay = is->frame_last_delay;
            }
            /* save for next time */
            is->frame_last_delay = delay;
            is->frame_last_pts = vp->pts;

            /* update delay to sync to audio */
            ref_clock = get_audio_clock(is);
            diff = vp->pts - ref_clock;
            /* printf("diff %f\n", diff); */

            /* Skip or repeat the frame. Take delay into account
               FFPlay still doesn't "know if this is the best guess." */
            sync_threshold = (delay > AV_SYNC_THRESHOLD) ? delay : AV_SYNC_THRESHOLD;
            if(fabs(diff) < AV_NOSYNC_THRESHOLD) {
                if(diff <= -sync_threshold) {
                    delay = 0;
                } else if(diff >= sync_threshold) {
                    delay = 2 * delay;
                }
            }
            is->frame_timer += delay;

            /* compute the REAL delay */
            actual_delay = is->frame_timer - (av_gettime() / 1000000.0);
            /* printf("diff %f actual delay %f\n", diff, actual_delay); */
            if(actual_delay < 0.010) {
                /* Really it should skip the picture instead */
                actual_delay = 0.010;
            }
            schedule_refresh(is, (int)(actual_delay * 1000 + 0.5));

            /* show the picture! */
            video_display(is);

            /* update queue for next picture! */
            if(++is->pictq_rindex == VIDEO_PICTURE_QUEUE_SIZE) {
                is->pictq_rindex = 0;
            }
            SDL_LockMutex(is->pictq_mutex);
            is->pictq_size--;
            SDL_CondSignal(is->pictq_cond);
            SDL_UnlockMutex(is->pictq_mutex);
        }
    } else {
        schedule_refresh(is, 100);
    }
}
```

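The refresh is driven by SDL's timer module. Each time `video_refresh_timer` shows a picture, it first registers a new timer through `schedule_refresh`. The timer's delay starts from the gap between this frame's pts and the previous one, then is corrected against the audio clock: unless the two clocks are too far apart (`AV_NOSYNC_THRESHOLD`), the delay becomes 0 when video lags audio by more than `sync_threshold` and is doubled when video is ahead. The result is accumulated in `frame_timer`, and the real timer delay is `frame_timer` minus the current time, but never less than 10 ms.

Chaining one timer after another in this way keeps the video refreshing continuously.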
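The delay calculation in `video_refresh_timer` is easier to study as a pure function. This sketch copies its steps (reuse the previous delay when the pts gap is implausible, drop the delay to 0 when video lags audio beyond the threshold, double it when video is ahead, accumulate into `frame_timer`, clamp the real delay to at least 10 ms), but takes the current time and the audio clock as parameters instead of calling `av_gettime()` and `get_audio_clock()`. `SyncState` and `next_refresh_ms` are hypothetical names, and the two threshold values are assumptions standing in for `AV_SYNC_THRESHOLD` and `AV_NOSYNC_THRESHOLD`, whose definitions are not shown here.

```c
/* Assumed threshold values in seconds (not taken from the article). */
#define SYNC_THRESHOLD   0.01
#define NOSYNC_THRESHOLD 10.0

typedef struct {
    double frame_timer;       /* wall-clock time (s) at which the next frame is due */
    double frame_last_pts;    /* pts of the previously shown frame */
    double frame_last_delay;  /* last plausible inter-frame delay */
} SyncState;

/* Returns the timer delay in ms for the next refresh; `now` is in seconds. */
int next_refresh_ms(SyncState *s, double pts, double audio_clock, double now) {
    double delay, diff, abs_diff, sync_threshold, actual_delay;

    delay = pts - s->frame_last_pts;
    if (delay <= 0 || delay >= 1.0)
        delay = s->frame_last_delay;           /* implausible gap: reuse last */
    s->frame_last_delay = delay;
    s->frame_last_pts = pts;

    diff = pts - audio_clock;                  /* > 0: video ahead of audio */
    abs_diff = diff < 0 ? -diff : diff;
    sync_threshold = delay > SYNC_THRESHOLD ? delay : SYNC_THRESHOLD;
    if (abs_diff < NOSYNC_THRESHOLD) {
        if (diff <= -sync_threshold)
            delay = 0;                         /* video late: show next ASAP */
        else if (diff >= sync_threshold)
            delay = 2 * delay;                 /* video early: wait longer */
    }

    s->frame_timer += delay;
    actual_delay = s->frame_timer - now;
    if (actual_delay < 0.010)
        actual_delay = 0.010;                  /* same 10 ms floor as the original */
    return (int)(actual_delay * 1000 + 0.5);
}
```

For example, starting from `frame_timer = 100.0`, `frame_last_pts = 0.0` and `frame_last_delay = 0.04`, a frame with pts 0.04 while the audio clock is also 0.04 yields a 40 ms timer. The next frame (pts 0.08) with the audio clock at 0.0 is ahead of audio, so its delay is doubled and, at `now = 100.0`, the timer is 120 ms.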