https://www.codenong.com/cs106676560/

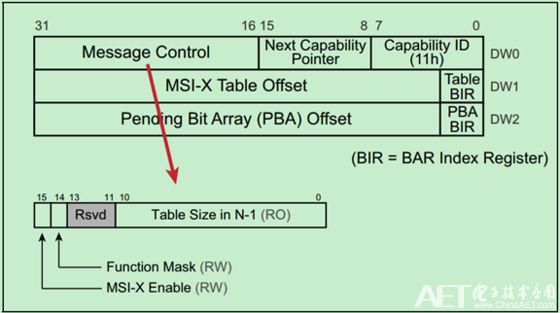

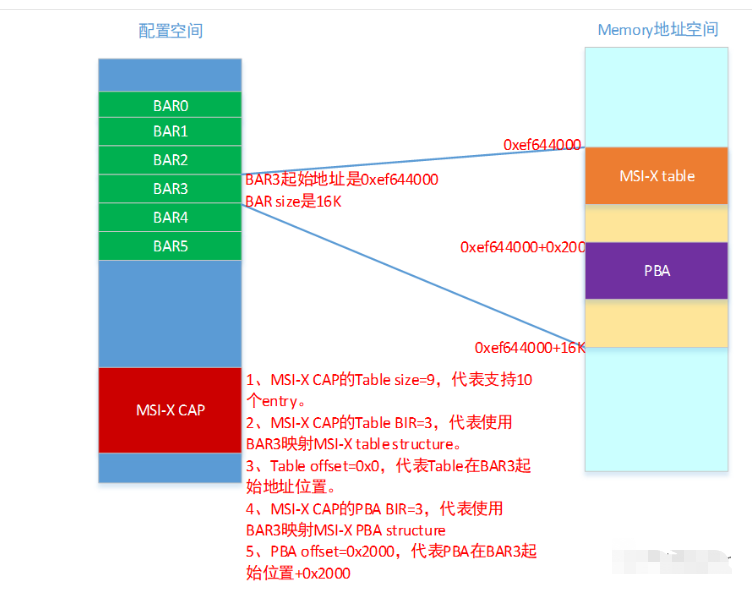

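MSI supports at most 32 interrupt vectors, whereas MSI-X supports up to 2048, yet the MSI-X registers occupy less room in configuration space. The reason is that the vector information is not stored in configuration space itself but in a dedicated region of memory-mapped I/O (MMIO). The BIR (BAR Indicator Register, also called BAR Index Register) field selects the BAR that maps this region, and an accompanying offset gives the position inside it. Both MSI and MSI-X are, in essence, Memory Write transactions, so they can also be hit by transaction errors such as PCIe ECRC errors.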

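As shown in the figure below:

Pending Table

The structure of the Pending Table is shown in Figure 6-4.

As the figure above shows, each Pending Table entry is 64 bits wide, and each bit corresponds to one entry of the MSI-X Table, so one Pending Table entry covers 64 MSI-X Table entries. As with MSI, the Pending bit is used together with the Per Vector Mask bit.

When the Per Vector Mask bit is 1, the PCIe device must not send the MSI-X interrupt request immediately; it sets the corresponding Pending bit instead. When system software clears the Per Vector Mask bit, the PCIe device must send the MSI-X interrupt request and clear the Pending bit.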
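The interplay of the Per Vector Mask bit and the Pending bit can be sketched as a small user-space model. This is only an illustration of the rule described above, not device or kernel code; `struct msix_model` and all function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MSIX_MAX_VECTORS 2048u

/* Hypothetical software model of one MSI-X function (not real hardware). */
struct msix_model {
    bool     masked[MSIX_MAX_VECTORS];    /* Per Vector Mask bits */
    uint64_t pba[MSIX_MAX_VECTORS / 64];  /* Pending Table: 64 vectors per entry */
    unsigned sent[MSIX_MAX_VECTORS];      /* messages "sent" per vector */
};

/* Which 64-bit Pending Table entry, and which bit in it, belongs to vec. */
static unsigned pba_entry(unsigned vec) { return vec / 64; }
static unsigned pba_bit(unsigned vec)   { return vec % 64; }

static bool msix_is_pending(const struct msix_model *m, unsigned vec)
{
    assert(vec < MSIX_MAX_VECTORS);
    return (m->pba[pba_entry(vec)] >> pba_bit(vec)) & 1u;
}

/* The device wants to signal vec: send now, or latch Pending if masked. */
static void msix_raise(struct msix_model *m, unsigned vec)
{
    assert(vec < MSIX_MAX_VECTORS);
    if (m->masked[vec])
        m->pba[pba_entry(vec)] |= UINT64_C(1) << pba_bit(vec);
    else
        m->sent[vec]++;
}

/* Software changes the mask; unmasking delivers a latched request. */
static void msix_set_mask(struct msix_model *m, unsigned vec, bool mask)
{
    assert(vec < MSIX_MAX_VECTORS);
    m->masked[vec] = mask;
    if (!mask && msix_is_pending(m, vec)) {
        m->pba[pba_entry(vec)] &= ~(UINT64_C(1) << pba_bit(vec));
        m->sent[vec]++;
    }
}
```

For vector 70, the model uses bit 6 of Pending Table entry 1. Raising the vector while it is masked only sets that bit; the message count goes up once the mask is cleared.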

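[1] At this point the "Interrupt Disable" bit in the Command register of the PCI device's configuration space is 1.

[2] MSI submits interrupt requests in a way that resembles edge triggering, and with edge triggering the processor may miss some interrupt requests, so a device driver may need to use these two fields.

Checking a device's MSI/MSI-X capability

lspci -v lists the capabilities a device supports. If the output mentions MSI, MSI-X, or message signalled interrupts, each of those entries is followed by an Enable flag: "+" means enabled and "-" means disabled.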

```
[root@localhost ixgbe]# lspci -xxx -vv -s 05:00.0
05:00.0 Ethernet controller: Huawei Technologies Co., Ltd. Hi1822 Family (2*25GE) (rev 45)
    Subsystem: Huawei Technologies Co., Ltd. Device d139
    Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
    Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
    Latency: 0, Cache Line Size: 32 bytes
    NUMA node: 0
    Region 0: Memory at 80007b00000 (64-bit, prefetchable) [size=128K]
    Region 2: Memory at 80008a20000 (64-bit, prefetchable) [size=32K]
    Region 4: Memory at 80000200000 (64-bit, prefetchable) [size=1M]
    Expansion ROM at e9200000 [disabled] [size=1M]
    Capabilities: [40] Express (v2) Endpoint, MSI 00
        DevCap: MaxPayload 512 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited
            ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0.000W
        DevCtl: Report errors: Correctable+ Non-Fatal+ Fatal+ Unsupported-
            RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-
            MaxPayload 256 bytes, MaxReadReq 512 bytes
        DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr+ TransPend-
        LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM not supported, Exit Latency L0s unlimited, L1 unlimited
            ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+
        LnkCtl: ASPM Disabled; RCB 128 bytes Disabled- CommClk-
            ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
        LnkSta: Speed 8GT/s, Width x16, TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-
        DevCap2: Completion Timeout: Range B, TimeoutDis+, LTR-, OBFF Not Supported
        DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-, LTR-, OBFF Disabled
        LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-
            Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
            Compliance De-emphasis: -6dB
        LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+, EqualizationPhase1+
            EqualizationPhase2+, EqualizationPhase3+, LinkEqualizationRequest-
    Capabilities: [80] MSI: Enable- Count=1/32 Maskable+ 64bit+
        Address: 0000000000000000  Data: 0000
        Masking: 00000000  Pending: 00000000
    Capabilities: [a0] MSI-X: Enable+ Count=32 Masked-
        Vector table: BAR=2 offset=00000000
        PBA: BAR=2 offset=00004000
    Capabilities: [b0] Power Management version 3
        Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0+,D1+,D2+,D3hot+,D3cold+)
        Status: D0 NoSoftRst- PME-Enable- DSel=0 DScale=0 PME-
    Capabilities: [c0] Vital Product Data
        Product Name: Huawei IN200 2*100GE Adapter
        Read-only fields:
            [PN] Part number: SP572
        End
    Capabilities: [100 v1] Advanced Error Reporting
        UESta:  DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UEMsk:  DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-
        CESta:  RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-
        CEMsk:  RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-
        AERCap: First Error Pointer: 00, GenCap+ CGenEn- ChkCap+ ChkEn-
    Capabilities: [150 v1] Alternative Routing-ID Interpretation (ARI)
        ARICap: MFVC- ACS-, Next Function: 0
        ARICtl: MFVC- ACS-, Function Group: 0
    Capabilities: [200 v1] Single Root I/O Virtualization (SR-IOV)
        IOVCap: Migration-, Interrupt Message Number: 000
        IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy+
        IOVSta: Migration-
        Initial VFs: 120, Total VFs: 120, Number of VFs: 0, Function Dependency Link: 00
        VF offset: 1, stride: 1, Device ID: 375e
        Supported Page Size: 00000553, System Page Size: 00000010
        Region 0: Memory at 0000080007b20000 (64-bit, prefetchable)
        Region 2: Memory at 00000800082a0000 (64-bit, prefetchable)
        Region 4: Memory at 0000080000300000 (64-bit, prefetchable)
        VF Migration: offset: 00000000, BIR: 0
    Capabilities: [310 v1] #19
    Capabilities: [4e0 v1] Device Serial Number 44-a1-91-ff-ff-a4-9b-eb
    Capabilities: [4f0 v1] Transaction Processing Hints
        Device specific mode supported
        No steering table available
    Capabilities: [600 v1] Vendor Specific Information: ID=0000 Rev=0 Len=028 <?>
    Capabilities: [630 v1] Access Control Services
        ACSCap: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-
        ACSCtl: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-
    Kernel driver in use: vfio-pci
    Kernel modules: hinic
00: e5 19 00 02 06 04 10 00 45 00 00 02 08 00 00 00
10: 0c 00 b0 07 00 08 00 00 0c 00 a2 08 00 08 00 00
20: 0c 00 20 00 00 08 00 00 00 00 00 00 e5 19 39 d1
30: 00 00 40 e6 40 00 00 00 00 00 00 00 ff 00 00 00
40: 10 80 02 00 e2 8f 00 10 37 29 10 00 03 f1 43 00
50: 08 00 03 01 00 00 00 00 00 00 00 00 00 00 00 00
60: 00 00 00 00 92 03 00 00 00 00 00 00 0e 00 00 00
70: 03 00 1f 00 00 00 00 00 00 00 00 00 00 00 00 00
80: 05 a0 8a 01 00 00 00 00 00 00 00 00 00 00 00 00
90: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
a0: 11 b0 1f 80 02 00 00 00 02 40 00 00 00 00 00 00
b0: 01 c0 03 f8 00 00 00 00 00 00 00 00 00 00 00 00
c0: 03 00 28 80 37 32 78 ff 00 00 00 00 00 00 00 00
d0: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
e0: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
f0: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
[root@localhost ixgbe]#
```
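The `a0:` row of the hex dump is the raw MSI-X capability that lspci summarises as `MSI-X: Enable+ Count=32 ... BAR=2`. As a cross-check, the following sketch decodes those bytes by hand. The field positions follow the MSI-X capability layout of the PCI specification (Message Control at offset 2, Table Offset/BIR at offset 4, PBA Offset/BIR at offset 8); `struct msix_cap` and `msix_cap_decode` are names invented for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MSIX_CAP_ID 0x11u

/* Decoded view of an MSI-X capability (illustrative type, not a kernel struct). */
struct msix_cap {
    uint8_t  next;           /* pointer to the next capability */
    unsigned table_size;     /* number of vectors (encoded value + 1) */
    bool     function_mask;  /* Message Control bit 14 */
    bool     enable;         /* Message Control bit 15 */
    unsigned table_bir;      /* BAR that holds the MSI-X Table */
    uint32_t table_offset;   /* offset of the table inside that BAR */
    unsigned pba_bir;        /* BAR that holds the Pending Bit Array */
    uint32_t pba_offset;     /* offset of the PBA inside that BAR */
};

/* Configuration space is little-endian. */
static uint16_t le16(const uint8_t *p)
{
    return (uint16_t)(p[0] | (p[1] << 8));
}

static uint32_t le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Returns false if the bytes do not start with the MSI-X capability ID. */
static bool msix_cap_decode(const uint8_t *cap, size_t len, struct msix_cap *out)
{
    assert(cap != NULL && out != NULL);
    if (len < 12 || cap[0] != MSIX_CAP_ID)
        return false;

    uint16_t ctrl  = le16(cap + 2);
    uint32_t table = le32(cap + 4);
    uint32_t pba   = le32(cap + 8);

    out->next          = cap[1];
    out->table_size    = (ctrl & 0x07ffu) + 1u;   /* bits 10:0 hold N-1 */
    out->function_mask = (ctrl >> 14) & 1u;
    out->enable        = (ctrl >> 15) & 1u;
    out->table_bir     = table & 0x7u;            /* bits 2:0 are the BIR */
    out->table_offset  = table & ~UINT32_C(0x7);  /* bits 31:3 are the offset */
    out->pba_bir       = pba & 0x7u;
    out->pba_offset    = pba & ~UINT32_C(0x7);
    return true;
}
```

Fed the twelve bytes `11 b0 1f 80 02 00 00 00 02 40 00 00`, the decoder reports 32 vectors, Enable set, Function Mask clear, table in BAR 2 at offset 0 and PBA in BAR 2 at offset 0x4000, which agrees with the lspci text.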

```
[root@localhost ixgbe]# lspci -n -v -s 06:00.0
06:00.0 0200: 19e5:0200 (rev 45)
    Subsystem: 19e5:d139
    Flags: fast devsel, NUMA node 0
    [virtual] Memory at 80010400000 (64-bit, prefetchable) [size=128K]
    [virtual] Memory at 80011320000 (64-bit, prefetchable) [size=32K]
    [virtual] Memory at 80008b00000 (64-bit, prefetchable) [size=1M]
    Expansion ROM at e9300000 [disabled] [size=1M]
    Capabilities: [40] Express Endpoint, MSI 00
    Capabilities: [80] MSI: Enable- Count=1/32 Maskable+ 64bit+
    Capabilities: [a0] MSI-X: Enable- Count=32 Masked-
    Capabilities: [b0] Power Management version 3
    Capabilities: [c0] Vital Product Data
    Capabilities: [100] Advanced Error Reporting
    Capabilities: [150] Alternative Routing-ID Interpretation (ARI)
    Capabilities: [200] Single Root I/O Virtualization (SR-IOV)
    Capabilities: [310] #19
    Capabilities: [4e0] Device Serial Number 44-a1-91-ff-ff-a4-9b-ec
    Capabilities: [4f0] Transaction Processing Hints
    Capabilities: [600] Vendor Specific Information: ID=0000 Rev=0 Len=028 <?>
    Capabilities: [630] Access Control Services
    Kernel driver in use: vfio-pci
    Kernel modules: hinic
```

```
root@zj-x86:~# lspci -n -v -s 1a:00.1
1a:00.1 0200: 8086:37d0 (rev 09)
    Subsystem: 19e5:d123
    Flags: bus master, fast devsel, latency 0, IRQ 31, NUMA node 0
    Memory at a0000000 (64-bit, prefetchable) [size=16M]
    Memory at a3010000 (64-bit, prefetchable) [size=32K]
    Expansion ROM at a3700000 [disabled] [size=512K]
    Capabilities: [40] Power Management version 3
    Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+
    Capabilities: [70] MSI-X: Enable+ Count=129 Masked-
    Capabilities: [a0] Express Endpoint, MSI 00
    Capabilities: [e0] Vital Product Data
    Capabilities: [100] Advanced Error Reporting
    Capabilities: [140] Device Serial Number 5c-ac-f7-ff-ff-6b-1d-f4
    Capabilities: [150] Alternative Routing-ID Interpretation (ARI)
    Capabilities: [160] Single Root I/O Virtualization (SR-IOV)
    Capabilities: [1a0] Transaction Processing Hints
    Capabilities: [1b0] Access Control Services
    Kernel driver in use: i40e
    Kernel modules: i40e
root@zj-x86:~#
```

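The I350 NIC sits at bus 3, device 0, function 0. Its configuration space shows that the NIC requests a BAR3, and this is exactly the BAR that MSI-X uses: the MSI-X table structure is stored at the BAR3 base address + 0, and the PBA structure at the BAR3 base address + 0x2000.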
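Given a BAR base and the two offsets, the location of any vector's table entry and Pending bit follows by simple arithmetic. The sketch below assumes the standard 16-byte MSI-X table entry (address low, address high, data, vector control); the macro and function names are made up for the example, and the BAR base used in the usage note is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Layout of one MSI-X Table entry (16 bytes). */
#define MSIX_ENTRY_SIZE         16u
#define MSIX_ENTRY_LOWER_ADDR    0u  /* Message Address, low 32 bits */
#define MSIX_ENTRY_UPPER_ADDR    4u  /* Message Address, high 32 bits */
#define MSIX_ENTRY_DATA          8u  /* Message Data */
#define MSIX_ENTRY_VECTOR_CTRL  12u  /* bit 0 is the Per Vector Mask bit */

#define MSIX_MAX_VECTORS      2048u

/* MMIO address of the table entry that describes vector vec. */
static uint64_t msix_entry_addr(uint64_t bar_base, uint32_t table_offset,
                                unsigned vec)
{
    assert(vec < MSIX_MAX_VECTORS);
    return bar_base + table_offset + (uint64_t)vec * MSIX_ENTRY_SIZE;
}

/* MMIO address of the 64-bit PBA entry that holds the Pending bit of vec. */
static uint64_t msix_pba_qword_addr(uint64_t bar_base, uint32_t pba_offset,
                                    unsigned vec)
{
    assert(vec < MSIX_MAX_VECTORS);
    return bar_base + pba_offset + (uint64_t)(vec / 64) * 8u;
}
```

With a hypothetical BAR base of 0xf0000000, a table offset of 0 and a PBA offset of 0x2000, vector 3 has its entry at 0xf0000030 (its Vector Control word at 0xf000003c), and the Pending bit of vector 70 lives in the quadword at 0xf0002008.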

```
root@zj-x86:~# lspci | grep -i ether
1a:00.0 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GbE SFP+ (rev 09)
1a:00.1 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GbE SFP+ (rev 09)
1a:00.2 Ethernet controller: Intel Corporation Ethernet Connection X722 for 1GbE (rev 09)
1a:00.3 Ethernet controller: Intel Corporation Ethernet Connection X722 for 1GbE (rev 09)
root@zj-x86:~# lspci -s 1a:00.1 -v
1a:00.1 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GbE SFP+ (rev 09)
    Subsystem: Huawei Technologies Co., Ltd. Ethernet Connection X722 for 10GbE SFP+
    Flags: bus master, fast devsel, latency 0, IRQ 31, NUMA node 0
    Memory at a0000000 (64-bit, prefetchable) [size=16M]
    Memory at a3010000 (64-bit, prefetchable) [size=32K]
    Expansion ROM at a3700000 [disabled] [size=512K]
    Capabilities: [40] Power Management version 3
    Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+
    Capabilities: [70] MSI-X: Enable+ Count=129 Masked-
    Capabilities: [a0] Express Endpoint, MSI 00
    Capabilities: [e0] Vital Product Data
    Capabilities: [100] Advanced Error Reporting
    Capabilities: [140] Device Serial Number 5c-ac-f7-ff-ff-6b-1d-f4
    Capabilities: [150] Alternative Routing-ID Interpretation (ARI)
    Capabilities: [160] Single Root I/O Virtualization (SR-IOV)
    Capabilities: [1a0] Transaction Processing Hints
    Capabilities: [1b0] Access Control Services
    Kernel driver in use: i40e
    Kernel modules: i40e
root@zj-x86:~#
```

```
[root@localhost ~]# lspci -s 05:00.0 -v
05:00.0 Ethernet controller: Huawei Technologies Co., Ltd. Hi1822 Family (2*25GE) (rev 45)
    Subsystem: Huawei Technologies Co., Ltd. Device d139
    Flags: fast devsel, NUMA node 0
    [virtual] Memory at 80007b00000 (64-bit, prefetchable) [size=128K]
    [virtual] Memory at 80008a20000 (64-bit, prefetchable) [size=32K]
    [virtual] Memory at 80000200000 (64-bit, prefetchable) [size=1M]
    Expansion ROM at e9200000 [disabled] [size=1M]
    Capabilities: [40] Express Endpoint, MSI 00
    Capabilities: [80] MSI: Enable- Count=1/32 Maskable+ 64bit+
    Capabilities: [a0] MSI-X: Enable- Count=32 Masked-
    Capabilities: [b0] Power Management version 3
    Capabilities: [c0] Vital Product Data
    Capabilities: [100] Advanced Error Reporting
    Capabilities: [150] Alternative Routing-ID Interpretation (ARI)
    Capabilities: [200] Single Root I/O Virtualization (SR-IOV)
    Capabilities: [310] #19
    Capabilities: [4e0] Device Serial Number 44-a1-91-ff-ff-a4-9b-eb
    Capabilities: [4f0] Transaction Processing Hints
    Capabilities: [600] Vendor Specific Information: ID=0000 Rev=0 Len=028 <?>
    Capabilities: [630] Access Control Services
    Kernel driver in use: vfio-pci
    Kernel modules: hinic
[root@localhost ~]#
```

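4. How does a device use MSI/MSI-X interrupts?

Legacy interrupts get an interrupt number assigned automatically when the PCI bus tree is scanned during system initialization. A device that wants to use MSI, however, needs some extra configuration from its driver.

Current Linux kernels provide pci_alloc_irq_vectors() to initialize the MSI/MSI-X capability and allocate interrupt numbers.

```c
int pci_alloc_irq_vectors(struct pci_dev *dev, unsigned int min_vecs,
                          unsigned int max_vecs, unsigned int flags);
```

The return value is the number of interrupt vectors allocated to the PCI device.

min_vecs is the minimum number of interrupt vectors the device requires; if fewer than that can be allocated, an error is returned.

max_vecs is the maximum number of interrupt vectors the caller would like to allocate.

flags selects the interrupt types that the device and driver are able to use. Four values are commonly seen: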

```c
#define PCI_IRQ_LEGACY      (1 << 0) /* Allow legacy interrupts */
#define PCI_IRQ_MSI         (1 << 1) /* Allow MSI interrupts */
#define PCI_IRQ_MSIX        (1 << 2) /* Allow MSI-X interrupts */
#define PCI_IRQ_ALL_TYPES   (PCI_IRQ_LEGACY | PCI_IRQ_MSI | PCI_IRQ_MSIX)
```

PCI_IRQ_ALL_TYPES requests an interrupt of any available type.

PCI_IRQ_AFFINITY can be set in addition, to spread the interrupts across the available CPUs.

Usage example:

```c
i = pci_alloc_irq_vectors(dev->pdev, min_msix, msi_count, PCI_IRQ_MSIX | PCI_IRQ_AFFINITY);
```

Its counterpart, pci_free_irq_vectors(), releases the interrupt resources and must be called when the device is removed:

```c
void pci_free_irq_vectors(struct pci_dev *dev);
```

Linux also provides pci_irq_vector() to obtain the Linux IRQ number that belongs to a given vector:

```c
int pci_irq_vector(struct pci_dev *dev, unsigned int nr);
```

5. How are a device's MSI/MSI-X interrupts handled?

5.1 MSI interrupt allocation: pci_alloc_irq_vectors()

A closer look at pci_alloc_irq_vectors():

pci_alloc_irq_vectors() --> pci_alloc_irq_vectors_affinity()

```c
int pci_alloc_irq_vectors_affinity(struct pci_dev *dev, unsigned int min_vecs,
                                   unsigned int max_vecs, unsigned int flags,
                                   struct irq_affinity *affd)
{
    struct irq_affinity msi_default_affd = {0};
    int msix_vecs = -ENOSPC;
    int msi_vecs = -ENOSPC;

    if (flags & PCI_IRQ_AFFINITY) {
        if (!affd)
            affd = &msi_default_affd;
    } else {
        if (WARN_ON(affd))
            affd = NULL;
    }

    if (flags & PCI_IRQ_MSIX) {
        msix_vecs = __pci_enable_msix_range(dev, NULL, min_vecs,
                                            max_vecs, affd, flags); /* (1) */
        if (msix_vecs > 0)
            return msix_vecs;
    }

    if (flags & PCI_IRQ_MSI) {
        msi_vecs = __pci_enable_msi_range(dev, min_vecs, max_vecs,
                                          affd);                    /* (2) */
        if (msi_vecs > 0)
            return msi_vecs;
    }

    /* use legacy IRQ if allowed */
    if (flags & PCI_IRQ_LEGACY) {
        if (min_vecs == 1 && dev->irq) {
            /*
             * Invoke the affinity spreading logic to ensure that
             * the device driver can adjust queue configuration
             * for the single interrupt case.
             */
            if (affd)
                irq_create_affinity_masks(1, affd);
            pci_intx(dev, 1);                                       /* (3) */
            return 1;
        }
    }

    if (msix_vecs == -ENOSPC)
        return -ENOSPC;
    return msi_vecs;
}
```

(1) First check whether MSI-X interrupts were requested

```
__pci_enable_msix_range()
    +-> __pci_enable_msix()
        +-> msix_capability_init()
            +-> pci_msi_setup_msi_irqs()
```

msix_capability_init() performs the configuration of the MSI-X capability.

The key function, pci_msi_setup_msi_irqs(), creates the MSI IRQ numbers:

```c
static int pci_msi_setup_msi_irqs(struct pci_dev *dev, int nvec, int type)
{
    struct irq_domain *domain;

    domain = dev_get_msi_domain(&dev->dev);
    if (domain && irq_domain_is_hierarchy(domain))
        return msi_domain_alloc_irqs(domain, &dev->dev, nvec);

    return arch_setup_msi_irqs(dev, nvec, type);
}
```

The irq_domain fetched here is dev->msi_domain, a field of the PCIe device's device structure.

Where does this msi_domain come from?

In drivers/irqchip/irq-gic-v3-its-pci-msi.c, the kernel does the following at boot:

```
its_pci_msi_init()
    +-> its_pci_of_msi_init()
        +-> its_pci_msi_init_one()
            +-> pci_msi_create_irq_domain(handle, &its_pci_msi_domain_info, parent)
```

pci_msi_create_irq_domain() creates the pci_msi irq_domain. The arguments passed in are its_pci_msi_domain_info and, as parent, the its irq_domain.

So the picture is now fairly clear:

When the GIC interrupt controller is initialized it adds the gic irq_domain. The gic irq_domain is the parent of the its irq_domain, and the host_data of the its irq_domain corresponds to the pci_msi irq_domain.

```
gic irq_domain --> irq_domain_ops(gic_irq_domain_ops)
      ^                --> .alloc(gic_irq_domain_alloc)
      |
its irq_domain --> irq_domain_ops(its_domain_ops)
      ^                --> .alloc(its_irq_domain_alloc)
      |                --> ...
      |        --> host_data(struct msi_domain_info)
      |                --> msi_domain_ops(its_msi_domain_ops)
      |                    --> .msi_prepare(its_msi_prepare)
      |                --> irq_chip, chip_data, handler...
      |                --> void *data(struct its_node)
```

The ops of the pci_msi irq_domain are:

```c
static const struct irq_domain_ops msi_domain_ops = {
    .alloc      = msi_domain_alloc,
    .free       = msi_domain_free,
    .activate   = msi_domain_activate,
    .deactivate = msi_domain_deactivate,
};
```

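Back in pci_msi_setup_msi_irqs(): once the pci_msi irq_domain has been obtained, msi_domain_alloc_irqs() is called to allocate the IRQ numbers.

```
msi_domain_alloc_irqs()
    // corresponds to its_pci_msi_prepare in its_pci_msi_ops
    +-> msi_domain_prepare_irqs()
    // allocate the IRQ numbers
    +-> __irq_domain_alloc_irqs()
```

msi_domain_prepare_irqs() ends up in its_msi_prepare() (reached through its_pci_msi_prepare), which creates an its_device.

__irq_domain_alloc_irqs() allocates the virtual interrupt numbers: it takes the first free bit in the allocated_irqs bitmap and uses its index as the virtual IRQ number.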
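The "first free bit" idea can be illustrated with a small user-space bitmap allocator. This is a simplified stand-in, not the kernel's implementation (which allocates ranges of descriptors under a lock); the size `NR_IRQS_MODEL` and the function names are assumptions of the example.

```c
#include <assert.h>
#include <limits.h>

#define NR_IRQS_MODEL  128u  /* hypothetical number of virtual IRQs */
#define BITS_PER_WORD  (sizeof(unsigned long) * CHAR_BIT)
#define BITMAP_WORDS   ((NR_IRQS_MODEL + BITS_PER_WORD - 1) / BITS_PER_WORD)

/* One bit per virtual IRQ number; a set bit means "in use". */
static unsigned long allocated[BITMAP_WORDS];

/* Claim the first free IRQ number at or above 'from'; -1 if none is left. */
static int irq_alloc_first_free(unsigned from)
{
    assert(from <= NR_IRQS_MODEL);
    for (unsigned irq = from; irq < NR_IRQS_MODEL; irq++) {
        unsigned long bit = 1UL << (irq % BITS_PER_WORD);

        if (!(allocated[irq / BITS_PER_WORD] & bit)) {
            allocated[irq / BITS_PER_WORD] |= bit;
            return (int)irq;
        }
    }
    return -1;
}

/* Give an IRQ number back so that a later allocation can reuse it. */
static void irq_free(unsigned irq)
{
    assert(irq < NR_IRQS_MODEL);
    allocated[irq / BITS_PER_WORD] &= ~(1UL << (irq % BITS_PER_WORD));
}
```

Allocating three times from an empty bitmap returns 0, 1 and 2. After freeing 1, the next allocation returns 1 again, because it is once more the lowest free bit.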

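At this point the MSI-X interrupts have been allocated and the MSI-X configuration is complete.

(2) If MSI-X was not requested or could not be enabled, check whether MSI was requested; the flow is similar to the MSI-X one.

(3) If neither MSI-X nor MSI could be used, check whether a legacy INTx interrupt was requested.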
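The order of the three attempts can be captured in a user-space model. It is a sketch under simplifying assumptions: the flags and types are local stand-ins for the kernel ones, `struct dev_caps` is a hypothetical description of what the device can provide, and every failure is reported as -ENOSPC, whereas the real helpers can return other error codes.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Local stand-ins for PCI_IRQ_LEGACY / PCI_IRQ_MSI / PCI_IRQ_MSIX. */
#define IRQ_LEGACY (1u << 0)
#define IRQ_MSI    (1u << 1)
#define IRQ_MSIX   (1u << 2)

/* Hypothetical description of what the device and platform can provide. */
struct dev_caps {
    int msix_avail;  /* MSI-X vectors obtainable, 0 if none */
    int msi_avail;   /* MSI vectors obtainable, 0 if none */
    int has_intx;    /* non-zero if a legacy INTx line is routed */
};

enum irq_kind { KIND_NONE, KIND_MSIX, KIND_MSI, KIND_INTX };

/* Grant between min_vecs and max_vecs vectors, or fail with -ENOSPC. */
static int try_range(int avail, int min_vecs, int max_vecs)
{
    if (avail <= 0 || avail < min_vecs)
        return -ENOSPC;
    return avail < max_vecs ? avail : max_vecs;
}

/* Try MSI-X first, then MSI, then legacy INTx, as in steps (1) to (3). */
static int alloc_vectors(const struct dev_caps *c, int min_vecs, int max_vecs,
                         unsigned flags, enum irq_kind *kind)
{
    int n;

    assert(c != NULL && kind != NULL);
    assert(min_vecs >= 1 && max_vecs >= min_vecs);
    *kind = KIND_NONE;

    if (flags & IRQ_MSIX) {                              /* (1) */
        n = try_range(c->msix_avail, min_vecs, max_vecs);
        if (n > 0) {
            *kind = KIND_MSIX;
            return n;
        }
    }
    if (flags & IRQ_MSI) {                               /* (2) */
        n = try_range(c->msi_avail, min_vecs, max_vecs);
        if (n > 0) {
            *kind = KIND_MSI;
            return n;
        }
    }
    /* (3) a legacy interrupt can only ever satisfy a request for one vector */
    if ((flags & IRQ_LEGACY) && min_vecs == 1 && c->has_intx) {
        *kind = KIND_INTX;
        return 1;
    }
    return -ENOSPC;
}
```

A device offering 32 MSI-X vectors gets 8 of them when asked for between 1 and 8 with all types allowed. A device with only an INTx line gets exactly one legacy interrupt, and fails with -ENOSPC as soon as the driver insists on at least two vectors.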

5.2 MSI interrupt registration

kernel/irq/manage.c

```
request_irq()
    +-> __setup_irq()
        +-> irq_activate()
            +-> msi_domain_activate()
                // irq_chip_write_msi_msg, defined in msi_domain_info
                +-> irq_chip_write_msi_msg()
                    // the irq_chip is its_msi_irq_chip, associated in pci_msi_create_irq_domain
                    +-> data->chip->irq_write_msi_msg(data, msg);
                        +-> pci_msi_domain_write_msg()
```

As this flow shows, an MSI is activated through irq_write_msi_msg, which programs the message (address and data) into the device. The device later raises the interrupt by writing that data to that address.

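Interrupt generation

1. Generating an MSI interrupt request

For a detailed description of MSI and MSI-X, see Wang Qi's 《PCI Express体系结构导读》 (a guide to the PCI Express architecture) and the Intel® 64 and IA-32 Architectures Software Developer's Manual.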

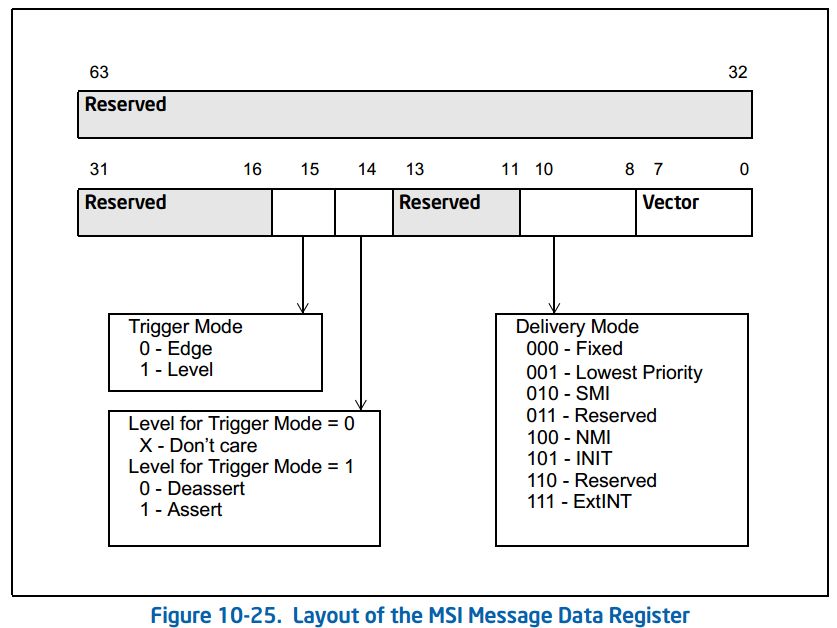

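A PCIe device submits an MSI/MSI-X interrupt request by writing the Message Data value to the Message Address configured in its MSI capability (for MSI-X, in its MSI-X Table entry). The write travels to the processor as a memory-write TLP. Different processors handle MSI requests with different mechanisms; x86 uses FSB Interrupt Messages. The figure below shows the Message Data format from the Intel manual. Bits 0 to 7 hold the Vector, so every MSI request carries the value of its interrupt vector.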

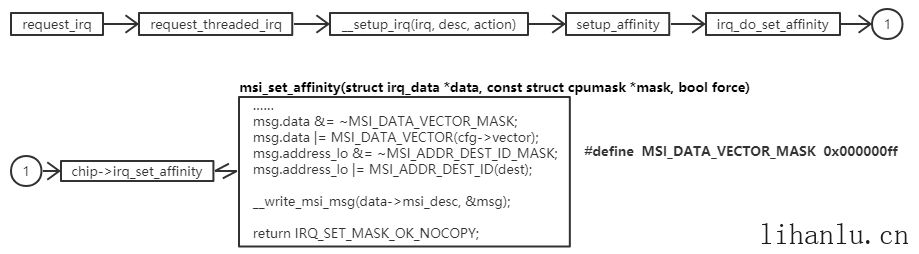
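To make the bit layout concrete, here is a sketch that composes an x86 MSI address/data pair by hand. The field positions are taken from the Intel SDM description of the message address and data registers (0xFEE in address bits 31:20, destination ID in bits 19:12, vector in data bits 7:0, delivery mode in data bits 10:8). The helper names are invented, and only edge-triggered messages are modelled.

```c
#include <assert.h>
#include <stdint.h>

#define MSI_ADDR_BASE 0xFEE00000u  /* address bits 31:20 are fixed at 0xFEE */

/* Message Address: destination APIC ID, redirection hint, destination mode. */
static uint32_t msi_addr(uint8_t dest_apic_id, int redirection_hint,
                         int logical_mode)
{
    return MSI_ADDR_BASE |
           ((uint32_t)dest_apic_id << 12) |
           (redirection_hint ? 1u << 3 : 0u) |
           (logical_mode ? 1u << 2 : 0u);
}

/* Message Data for an edge-triggered message (bits 14 and 15 stay zero). */
static uint16_t msi_data(uint8_t vector, unsigned delivery_mode)
{
    assert(vector >= 16);        /* vectors 0 to 15 are not valid here */
    assert(delivery_mode <= 7);  /* the delivery mode field is 3 bits wide */
    return (uint16_t)(vector | (delivery_mode << 8));
}

/* What the receiving side extracts: the vector is simply the low byte. */
static uint8_t msi_data_vector(uint16_t data)
{
    return (uint8_t)(data & 0xffu);
}
```

For vector 0x31 with fixed delivery mode (0) aimed at APIC ID 3 in physical mode, the device would be programmed with address 0xFEE03000 and data 0x0031.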

Message Data中Vector是如何设置的?如下图设备驱动在调用request_irq来申请中断的时候会调用到msi_set_affinity,该函数将Vector的值写入msg.data的低8bit,然后调用__write_msi_msg将Message Data写入PCIe配置空间。

2.中断整体流程

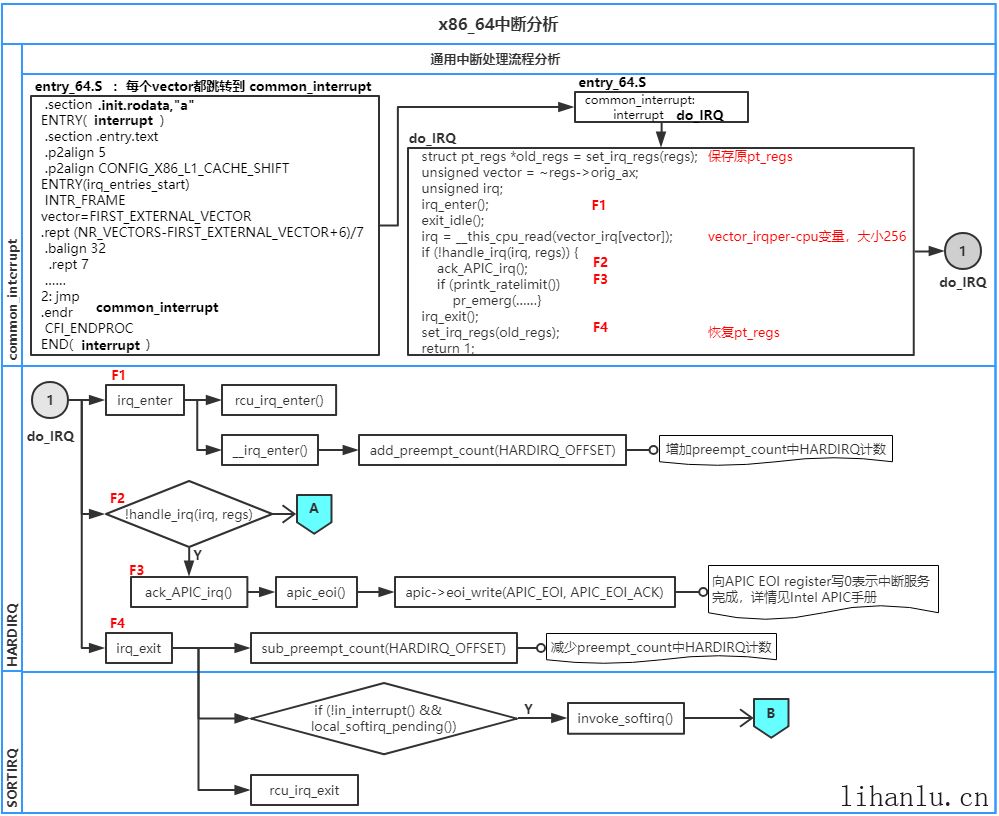

MSI报文中带有Vector信息,根据Vector查找IDT table中的处理函数,x86架构中的IDT无论Vector是多少处理函数都会跳转到common_interrupt,然后执行do_IRQ,函数do_IRQ主要包括以下几个部分:

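- irq_enter increments the HARDIRQ count in preempt_count, which marks the start of a HARDIRQ. While preempt_count is non-zero, preemption is not allowed.

- irq = __this_cpu_read(vector_irq[vector]) obtains the irq value from the vector.

- handle_irq does the HARDIRQ processing.

- If handle_irq returns false, ack_APIC_irq() writes 0 to the APIC's EOI register to tell the APIC that the interrupt has been serviced.

- irq_exit calls sub_preempt_count(HARDIRQ_OFFSET) to subtract the count added by irq_enter, which marks the end of the HARDIRQ. It then checks with in_interrupt() that no interrupt context remains (the hardirq and softirq counts in preempt_count are zero) and, if a softirq is pending, calls invoke_softirq.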

2.1. preempt_count

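preempt_count is a member of the thread_info structure in the kernel analysed here. The kernel's own description of it lists separate fields for the softirq count, the hardirq count and so on, so preempt_count serves both as the preemption counter and as a way to tell which context the code is currently running in.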
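The way one counter encodes several contexts can be shown with a user-space model. The field widths below (8 preempt bits, 8 softirq bits, 4 hardirq bits) are an assumption matching recent kernels and may differ in the version discussed here; NMI accounting is left out, and all `model_` names are invented for the sketch.

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed field widths; the layout differs between kernel versions. */
#define PREEMPT_BITS   8
#define SOFTIRQ_BITS   8
#define HARDIRQ_BITS   4

#define PREEMPT_SHIFT  0
#define SOFTIRQ_SHIFT  (PREEMPT_SHIFT + PREEMPT_BITS)
#define HARDIRQ_SHIFT  (SOFTIRQ_SHIFT + SOFTIRQ_BITS)

#define SOFTIRQ_MASK   (((1u << SOFTIRQ_BITS) - 1) << SOFTIRQ_SHIFT)
#define HARDIRQ_MASK   (((1u << HARDIRQ_BITS) - 1) << HARDIRQ_SHIFT)
#define HARDIRQ_OFFSET (1u << HARDIRQ_SHIFT)

static unsigned model_count;      /* stand-in for preempt_count */
static bool     softirq_pending;  /* stand-in for local_softirq_pending() */
static unsigned softirqs_run;     /* how often "invoke_softirq" ran */

/* In interrupt context if any hardirq or softirq count bit is set. */
static bool model_in_interrupt(void)
{
    return (model_count & (HARDIRQ_MASK | SOFTIRQ_MASK)) != 0;
}

/* Preemption is only possible while the whole counter is zero. */
static bool model_preemptible(void)
{
    return model_count == 0;
}

static void model_irq_enter(void)
{
    model_count += HARDIRQ_OFFSET;
}

static void model_irq_exit(void)
{
    assert(model_count & HARDIRQ_MASK);  /* must pair with model_irq_enter */
    model_count -= HARDIRQ_OFFSET;
    if (!model_in_interrupt() && softirq_pending) {
        softirq_pending = false;
        softirqs_run++;                  /* where invoke_softirq would run */
    }
}
```

With nested hardirqs, the pending softirq is not run when the inner handler exits, because the hardirq field is still non-zero. It runs only when the outermost `model_irq_exit()` brings the count back to zero.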

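2.2. irq and vector

vector_irq is a per-CPU array that records, for each CPU, the mapping between vectors and irqs: the index is the vector value, and the value stored at that index is the irq.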

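A minimal user-space picture of this mapping is a two-dimensional array indexed by CPU and vector. The CPU count, the "unused" marker and the function names are assumptions of the sketch; the kernel uses a real per-CPU variable rather than an array indexed by CPU number.

```c
#include <assert.h>

#define MODEL_NR_CPUS      4    /* hypothetical CPU count */
#define MODEL_NR_VECTORS 256    /* x86 has 256 interrupt vectors */
#define VECTOR_UNUSED    (-1)   /* marker for "no irq behind this vector" */

/* One vector table per CPU: index is the vector, value is the irq. */
static int model_vector_irq[MODEL_NR_CPUS][MODEL_NR_VECTORS];

static void model_vectors_init(void)
{
    for (int cpu = 0; cpu < MODEL_NR_CPUS; cpu++)
        for (int vec = 0; vec < MODEL_NR_VECTORS; vec++)
            model_vector_irq[cpu][vec] = VECTOR_UNUSED;
}

/* Record that 'vector' on 'cpu' delivers 'irq'. */
static void model_assign(unsigned cpu, unsigned vector, int irq)
{
    assert(cpu < MODEL_NR_CPUS && vector < MODEL_NR_VECTORS);
    model_vector_irq[cpu][vector] = irq;
}

/* What do_IRQ does with the vector it received on the current CPU. */
static int model_lookup(unsigned cpu, unsigned vector)
{
    assert(cpu < MODEL_NR_CPUS && vector < MODEL_NR_VECTORS);
    return model_vector_irq[cpu][vector];
}
```

Because the table is per CPU, the same vector number can map to different irqs on different CPUs, or to nothing at all on CPUs where it has not been assigned.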

2.3. handle_irq

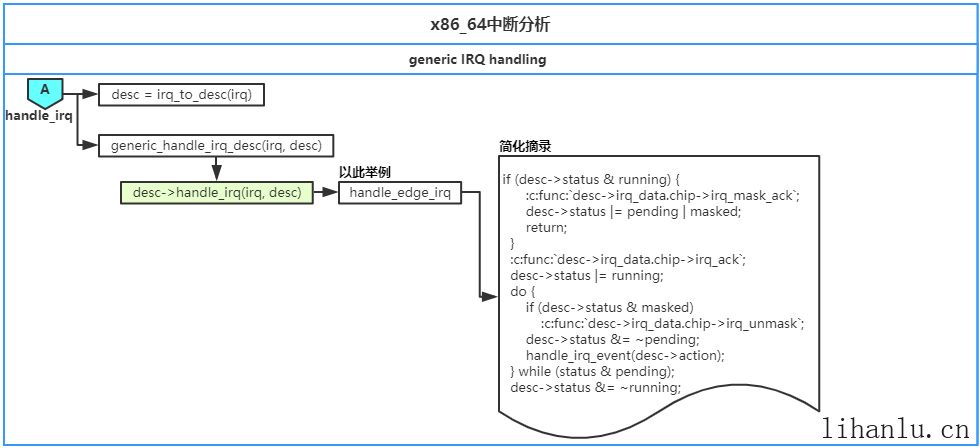

handle_irq looks up the interrupt descriptor structure irq_desc for the irq and then calls generic_handle_irq_desc.

```c
bool handle_irq(unsigned irq, struct pt_regs *regs)
```

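Before going deeper, note that the interrupt code has three main levels of abstraction:

- High-level driver API

- High-level IRQ flow handlers

- Chip-level hardware encapsulation

The analysis so far (common_interrupt and the like) involved a lot of low-level architecture code. When an interrupt fires, this low-level architecture code calls into the generic interrupt code through desc->handle_irq. The function that handle_irq points to belongs to the level of the high-level IRQ flow handlers. The kernel provides a set of predefined irq-flow methods, such as handle_level_irq and handle_edge_irq, which the architecture assigns to specific interrupts during boot or during device initialization by setting desc->handle_irq.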

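The high-level IRQ flow handlers call the desc->irq_data.chip primitives (the irq_chip callbacks, for example irq_ack), which form the chip-level hardware encapsulation. If the interrupt has a specific handler, they also call the device's specific handler.

Since I happened to hit a GDB breakpoint in handle_edge_irq (the generic implementation for edge-triggered interrupts), I use it as the example here.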

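handle_irq_event(desc) calls handle_irq_event_percpu, which walks the action list and runs the specific handlers. handle_irq_event_percpu itself is not expanded here.

Pay attention to the lock operations around handle_irq_event_percpu. The lock at the start and the unlock at the end of handle_edge_irq do not pair with each other. The raw_spin_unlock before handle_irq_event_percpu pairs with the raw_spin_lock at the start of handle_edge_irq, and the raw_spin_lock after handle_irq_event_percpu pairs with the raw_spin_unlock at the end of handle_edge_irq.

```c
irqreturn_t handle_irq_event(struct irq_desc *desc)
```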

The other high-level IRQ flow handlers are not analysed in detail. Below are simplified excerpts from the kernel documentation for some of them:

- handle_level_irq

```c
desc->irq_data.chip->irq_mask_ack();
handle_irq_event(desc->action);
desc->irq_data.chip->irq_unmask();
```

- handle_fasteoi_irq

```c
handle_irq_event(desc->action);
desc->irq_data.chip->irq_eoi();
```

- handle_edge_irq

```c
if (desc->status & running) {
    desc->irq_data.chip->irq_mask_ack();
    desc->status |= pending | masked;
    return;
}
desc->irq_data.chip->irq_ack();
desc->status |= running;
do {
    if (desc->status & masked)
        desc->irq_data.chip->irq_unmask();
    desc->status &= ~pending;
    handle_irq_event(desc->action);
} while (desc->status & pending);
desc->status &= ~running;
```

- handle_simple_irq

```c
handle_irq_event(desc->action);
```

- handle_percpu_irq

```c
if (desc->irq_data.chip->irq_ack)
    desc->irq_data.chip->irq_ack();
handle_irq_event(desc->action);
if (desc->irq_data.chip->irq_eoi)
    desc->irq_data.chip->irq_eoi();
```
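The running/pending/masked logic of the handle_edge_irq excerpt is easier to follow when simulated. The sketch below is a single-threaded user-space model: a second edge "arriving on another CPU" is imitated by re-entering the flow function from inside the device handler. `struct edge_desc`, its counters and the `inject` hook are all invented for the illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Model of the descriptor state used by the edge flow (illustrative only). */
struct edge_desc {
    bool     running, pending, masked;
    unsigned handled;    /* how often the device handler ran */
    unsigned acks;       /* chip->irq_ack calls */
    unsigned mask_acks;  /* chip->irq_mask_ack calls */
    unsigned unmasks;    /* chip->irq_unmask calls */
    unsigned inject;     /* edges to inject while the handler runs */
};

static void edge_flow(struct edge_desc *d);

/* Stand-in for handle_irq_event(): runs the driver's handler. */
static void device_handler(struct edge_desc *d)
{
    d->handled++;
    if (d->inject > 0) {
        d->inject--;
        edge_flow(d);  /* a new edge arrives while we are still running */
    }
}

/* Follows the simplified handle_edge_irq excerpt step by step. */
static void edge_flow(struct edge_desc *d)
{
    assert(d != NULL);
    if (d->running) {
        /* Already being handled: mask, ack, and remember the edge. */
        d->mask_acks++;
        d->pending = true;
        d->masked = true;
        return;
    }
    d->acks++;
    d->running = true;
    do {
        if (d->masked) {
            d->unmasks++;
            d->masked = false;
        }
        d->pending = false;
        device_handler(d);
    } while (d->pending);  /* replay edges that arrived meanwhile */
    d->running = false;
}
```

With one edge injected during handling, the device handler runs twice: the second edge is not lost, it is latched as pending and replayed by the loop after the line has been unmasked again.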

2.4. ack_APIC_irq

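Back in do_IRQ: if handle_irq returns false, ack_APIC_irq is called to write 0 to the APIC's EOI register and tell the APIC that the interrupt has been serviced. Does that mean ack_APIC_irq is not needed when handle_irq returns true? No. In that case the acknowledgement happens when the high-level IRQ flow handler calls the desc->irq_data.chip primitives. For example, when handle_edge_irq, analysed above, calls irq_ack, that call is in fact ack_APIC_irq.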

2.5. invoke_softirq

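irq_exit subtracts the count that irq_enter added, which marks the end of the HARDIRQ. It then uses in_interrupt() to check that no interrupt context remains (the hardirq and softirq counts in preempt_count are zero) and, if a softirq is pending, calls invoke_softirq.

```c
static inline void invoke_softirq(void)
```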