    [root@localhost ixgbe]# grep tx_pkt_burst -rn *
    ixgbe_ethdev.c:1102:    eth_dev->tx_pkt_burst = &ixgbe_xmit_pkts;
    ixgbe_ethdev.c:1582:    eth_dev->tx_pkt_burst = &ixgbe_xmit_pkts;
    ixgbe_ethdev.c:2989:    dev->tx_pkt_burst = NULL;
    ixgbe_ethdev.c:5360:    dev->tx_pkt_burst = NULL;
    ixgbe_rxtx.c:2400:      dev->tx_pkt_burst = ixgbe_xmit_pkts_vec;
    ixgbe_rxtx.c:2403:      dev->tx_pkt_burst = ixgbe_xmit_pkts_simple;
    ixgbe_rxtx.c:2413:      dev->tx_pkt_burst = ixgbe_xmit_pkts;
    ixgbe_vf_representor.c:206:     ethdev->tx_pkt_burst = ixgbe_vf_representor_tx_burst

    (gdb) bt
    #0  hinic_xmit_pkts (tx_queue=0x13e7e7000, tx_pkts=0xffffbd40ce00, nb_pkts=1) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:1066
    #1  0x0000000000465b18 in rte_eth_tx_burst (port_id=0, queue_id=0, tx_pkts=0xffffbd40ce00, nb_pkts=1) at /data1/dpdk-19.11/arm64-armv8a-linuxapp-gcc/include/rte_ethdev.h:4666
    #2  0x00000000004666bc in reply_to_icmp_echo_rqsts () at /data1/dpdk-19.11/demo/dpdk-pingpong/main.c:695
    #3  0x00000000004667e8 in server_loop () at /data1/dpdk-19.11/demo/dpdk-pingpong/main.c:735
    #4  0x0000000000466820 in pong_launch_one_lcore (dummy=0x0) at /data1/dpdk-19.11/demo/dpdk-pingpong/main.c:742
    #5  0x0000000000593538 in eal_thread_loop (arg=0x0) at /data1/dpdk-19.11/lib/librte_eal/linux/eal/eal_thread.c:153
    #6  0x0000ffffbe617d38 in start_thread (arg=0xffffbd40d910) at pthread_create.c:309
    #7  0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
    (gdb) s
    1071            struct hinic_txq *txq = tx_queue;
    (gdb) n
    1077            if (HINIC_GET_SQ_FREE_WQEBBS(txq) < txq->tx_free_thresh)
    (gdb) n
    1081            for (nb_tx = 0; nb_tx < nb_pkts; nb_tx++) {
    (gdb) n
    1082                    mbuf_pkt = *tx_pkts++;
    (gdb) n
    1083                    queue_info = 0;
    (gdb) n
    1086                    if (unlikely(!hinic_get_sge_txoff_info(mbuf_pkt,
    (gdb) n
    1093                    wqe_wqebb_cnt = HINIC_SQ_WQEBB_CNT(sqe_info.sge_cnt);
    (gdb) n
    1094                    free_wqebb_cnt = HINIC_GET_SQ_FREE_WQEBBS(txq);
    (gdb) n
    1095                    if (unlikely(wqe_wqebb_cnt > free_wqebb_cnt)) {
    (gdb) n
    1108                    sq_wqe = hinic_get_sq_wqe(txq, wqe_wqebb_cnt, &sqe_info);
    (gdb) n
    1111                    if (unlikely(!hinic_mbuf_dma_map_sge(txq, mbuf_pkt,
    (gdb) n
    1121                    task = &sq_wqe->task;
    (gdb) n
    1124                    hinic_fill_tx_offload_info(mbuf_pkt, task, &queue_info,
    (gdb) n
    1128                    tx_info = &txq->tx_info[sqe_info.pi];
    (gdb) n
    1129                    tx_info->mbuf = mbuf_pkt;
    (gdb) n
    1130                    tx_info->wqebb_cnt = wqe_wqebb_cnt;
    (gdb) n
    1133                    hinic_fill_sq_wqe_header(&sq_wqe->ctrl, queue_info,
    (gdb) n
    1134                                    sqe_info.sge_cnt, sqe_info.owner);
    (gdb) n
    1133                    hinic_fill_sq_wqe_header(&sq_wqe->ctrl, queue_info,
    (gdb) n
    1137                    hinic_sq_wqe_cpu_to_be32(sq_wqe, sqe_info.seq_wqebbs);
    (gdb) n
    1139                    tx_bytes += mbuf_pkt->pkt_len;
    (gdb) n
    1081            for (nb_tx = 0; nb_tx < nb_pkts; nb_tx++) {
    (gdb) n
    1143            if (nb_tx) {
    (gdb) n
    1144                    hinic_sq_write_db(txq->sq, txq->cos);
    (gdb) n
    1146                    txq->txq_stats.packets += nb_tx;
    (gdb) n
    1147                    txq->txq_stats.bytes += tx_bytes;
    (gdb) n
    1149            txq->txq_stats.burst_pkts = nb_tx;
    (gdb) n
    1151            return nb_tx;
    (gdb) n
    1152    }
    (gdb) n

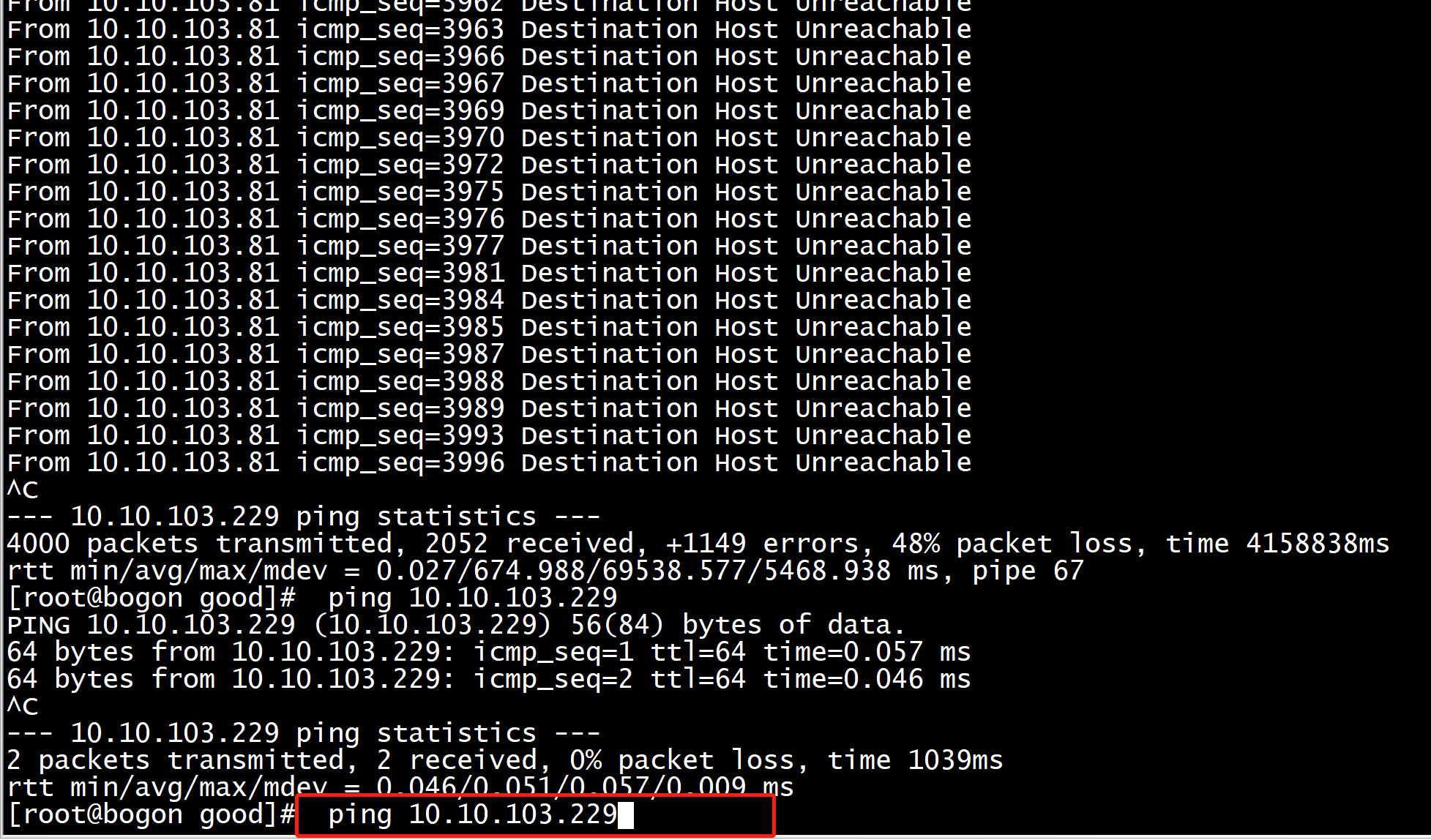

    #!/bin/bash
    for i in {1..254}; do
        ip=10.10.103.229
        ping -c 1000 $ip &> /dev/null && echo $ip is up &
    done
    wait

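Only after pinging 10.10.103.229 continuously for more than 10 minutes did execution reach the breakpoint: Breakpoint 1, hinic_xmit_mbuf_cleanup (txq=0x13e7e7000) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:584

hinic_xmit_mbuf_cleanup is the function that frees the transmitted mbufs.

Reference: http://chinaunix.net/uid-28541347-id-5791061.html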

    net_hinic: Disable promiscuous, nic_dev: hinic-0000:05:00.0, port_id: 0, promisc: 0
    net_hinic: Disable allmulticast succeed, nic_dev: hinic-0000:05:00.0, port_id: 0
    Initilize port 0 done.
    tip=10.10.103.229 ARP: hrd=1 proto=0x0800 hln=6 pln=4 op=1 (ARP Request)
    tip=10.10.103.229 ARP: hrd=1 proto=0x0800 hln=6 pln=4 op=1 (ARP Request)
    ... (same line repeated) ...
    tip=10.10.103.229 ARP: hrd=1 proto=0x0800 hln=6 pln=4 op=1 (ARP Request)
    [Switching to Thread 0xffffbd40d910 (LWP 44270)]

    Breakpoint 1, hinic_xmit_mbuf_cleanup (txq=0x13e7e7000) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:584
    584             int i, nb_free = 0;
    (gdb) bt
    #0  hinic_xmit_mbuf_cleanup (txq=0x13e7e7000) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:584
    #1  0x000000000078ac00 in hinic_xmit_pkts (tx_queue=0x13e7e7000, tx_pkts=0xffffbd40ce80, nb_pkts=1) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:1078
    #2  0x00000000004663c8 in reply_to_icmp_echo_rqsts ()
    #3  0x00000000004674fc in pong_launch_one_lcore ()
    #4  0x0000000000593ae8 in eal_thread_loop (arg=0x0) at /data1/dpdk-19.11/lib/librte_eal/linux/eal/eal_thread.c:153
    #5  0x0000ffffbe617d38 in start_thread (arg=0xffffbd40d910) at pthread_create.c:309
    #6  0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
    (gdb) delete
    Delete all breakpoints? (y or n) y
    (gdb) c

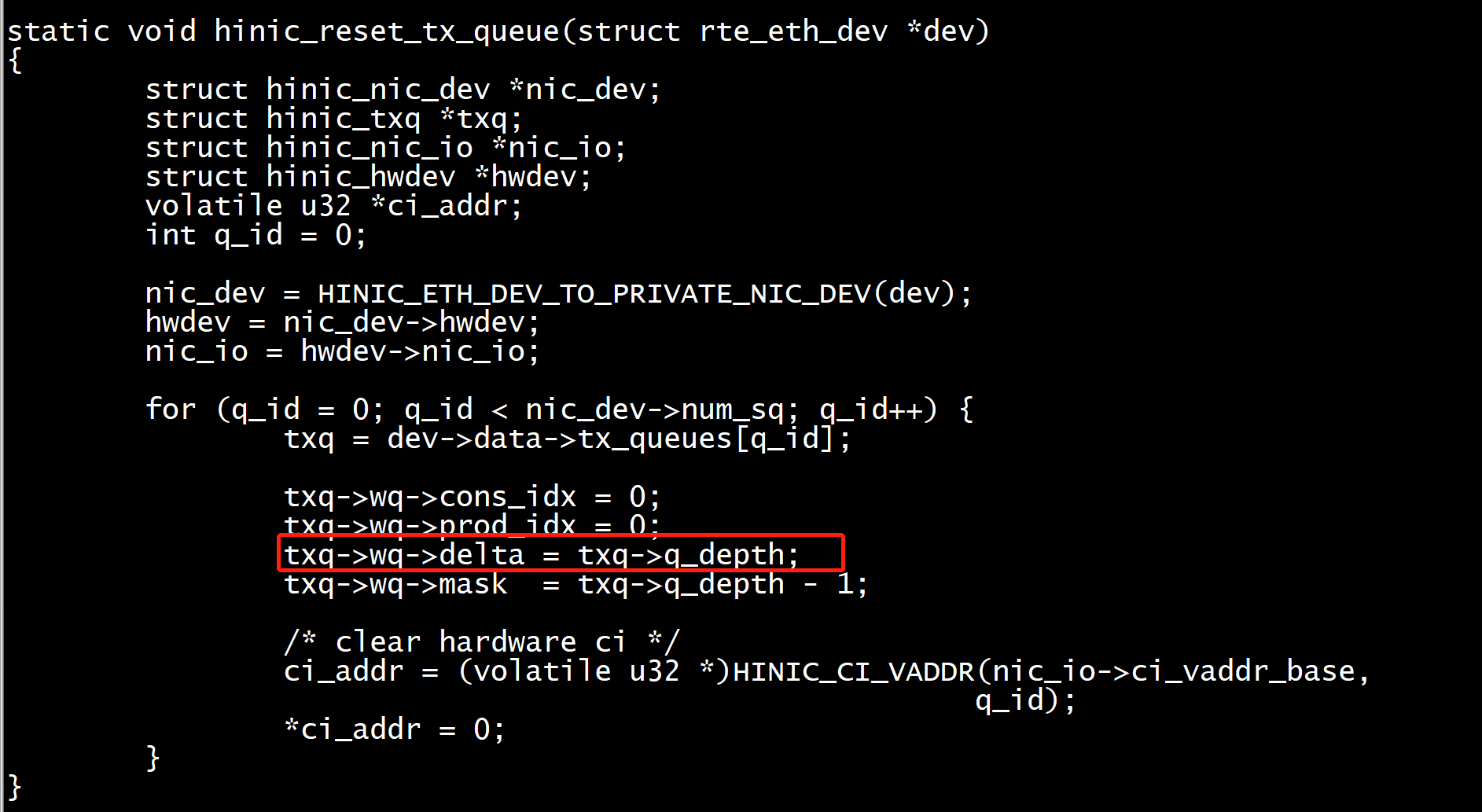

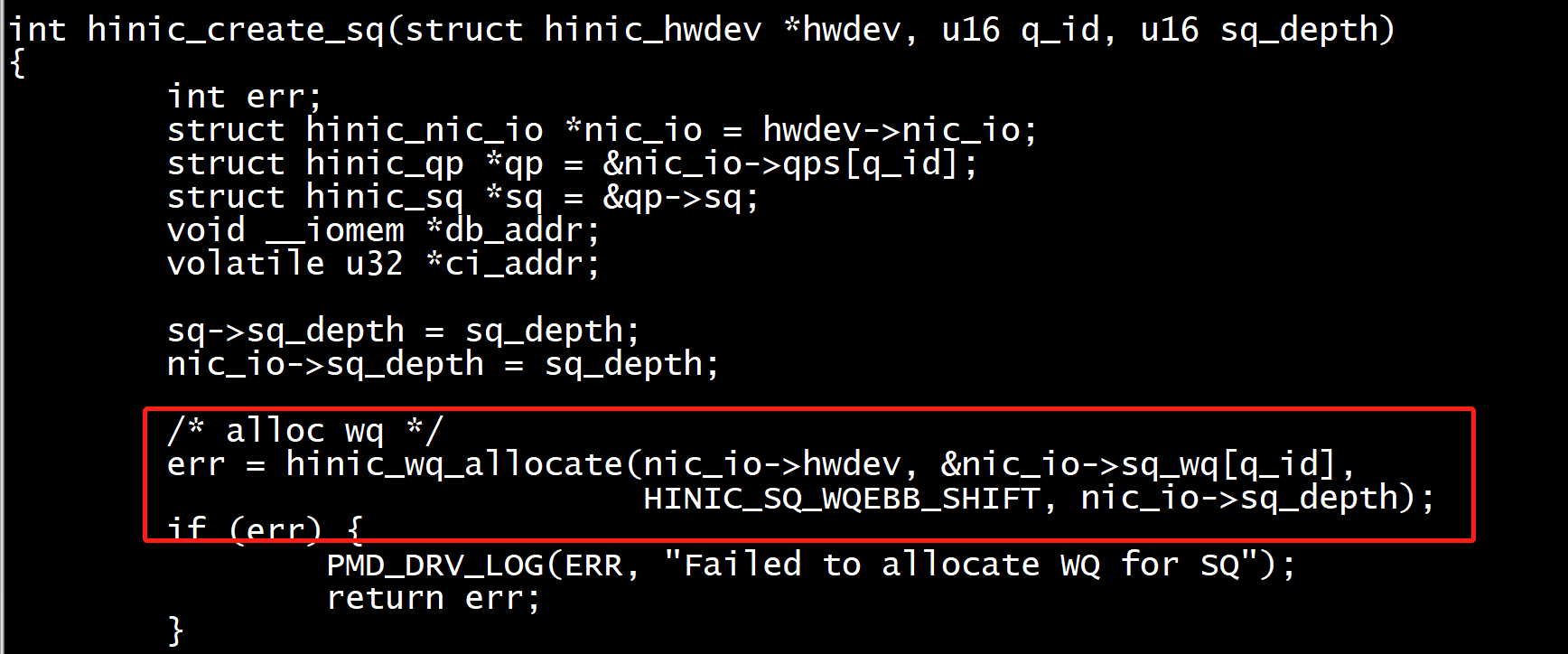

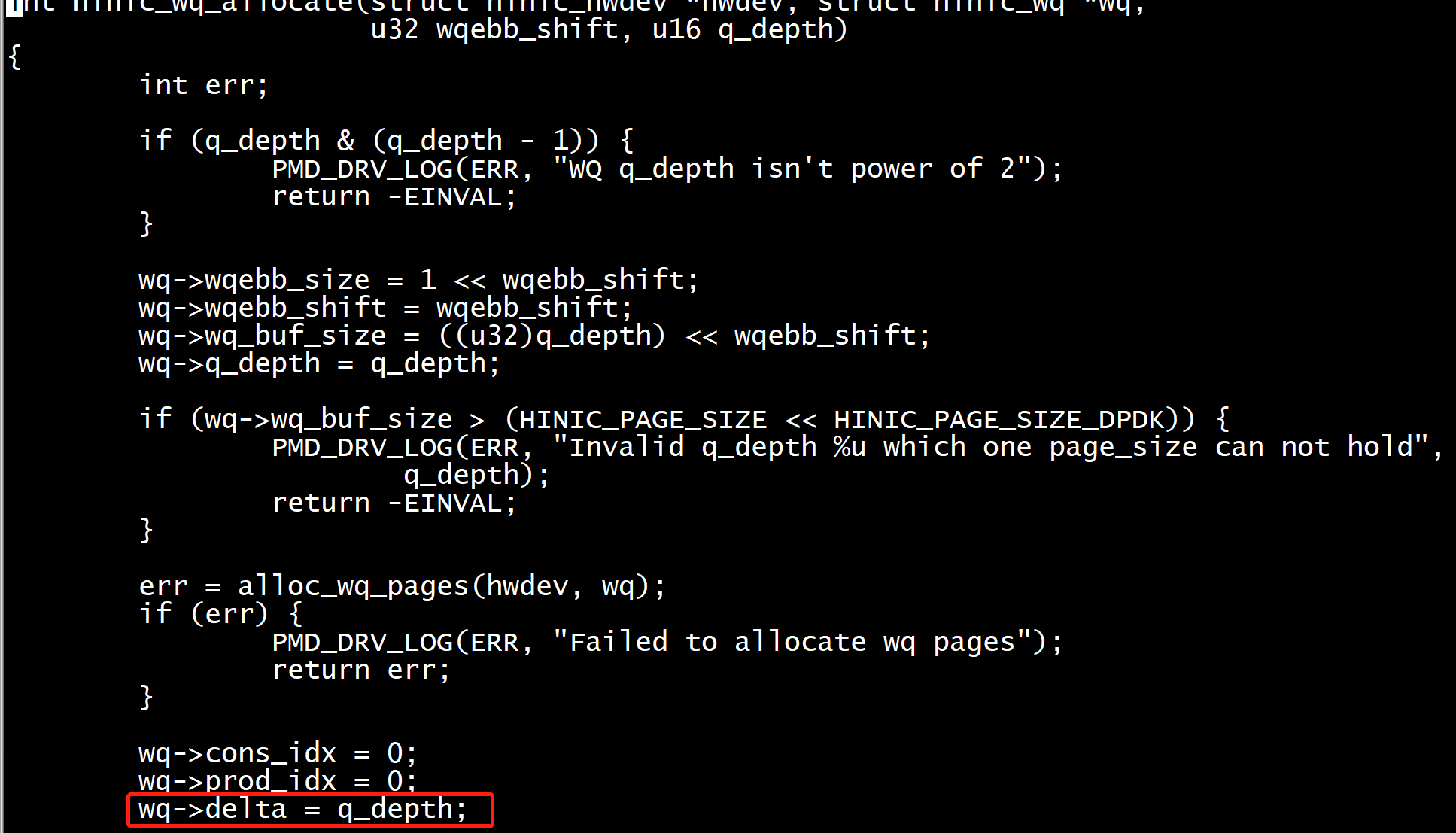

Initialization of txq->wq->delta and txq->q_depth

    static int hinic_tx_queue_setup(struct rte_eth_dev *dev, uint16_t queue_idx,
                                    uint16_t nb_desc, unsigned int socket_id,
                                    __rte_unused const struct rte_eth_txconf *tx_conf)
    {
        int rc;
        struct hinic_nic_dev *nic_dev;
        struct hinic_hwdev *hwdev;
        struct hinic_txq *txq;
        u16 sq_depth, tx_free_thresh;

        nic_dev = HINIC_ETH_DEV_TO_PRIVATE_NIC_DEV(dev);
        hwdev = nic_dev->hwdev;

        /* queue depth must be power of 2, otherwise will be aligned up */
        sq_depth = (nb_desc & (nb_desc - 1)) ?
                ((u16)(1U << (ilog2(nb_desc) + 1))) : nb_desc;

        /* alloc tx sq hw wqepage */
        rc = hinic_create_sq(hwdev, queue_idx, sq_depth);

        /* ... rest of the function omitted in this excerpt ... */
    }
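The depth calculation in the excerpt rounds nb_desc up to the next power of two and leaves exact powers of two unchanged. The following is a minimal standalone sketch of that rule, not the driver code: `toy_ilog2` and `toy_sq_depth` are hypothetical names, `toy_ilog2` assumes the driver's `ilog2()` returns floor(log2(n)), and the result is returned as a 32-bit value (unlike the driver's `u16` cast) so that large inputs do not wrap.

```c
#include <stdint.h>

/* floor(log2(v)) for v > 0; assumed stand-in for the driver's ilog2(). */
unsigned int toy_ilog2(uint32_t v)
{
    unsigned int n = 0;

    while (v >>= 1)
        n++;
    return n;
}

/* Round nb_desc up to the next power of two; exact powers are unchanged. */
uint32_t toy_sq_depth(uint16_t nb_desc)
{
    /* x & (x - 1) is non-zero exactly when x has more than one bit set */
    if (nb_desc & (nb_desc - 1))
        return 1U << (toy_ilog2(nb_desc) + 1);
    return nb_desc;
}
```

With this sketch, a request for 1000 descriptors yields a depth of 1024, while a request for 1024 stays at 1024.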

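Reduce nb_txd (presumably so that the queue runs low on free entries sooner and the cleanup breakpoint is hit without a long wait):

    rte_eth_tx_queue_setup(portid, 0, nb_txd,
                           rte_eth_dev_socket_id(portid),
                           &txq_conf);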

    Breakpoint 1, hinic_xmit_mbuf_cleanup (txq=0x13e7e7000) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:584
    584             int i, nb_free = 0;
    (gdb) s
    586             int wqebb_cnt = 0;
    (gdb) list
    581     {
    582             struct hinic_tx_info *tx_info;
    583             struct rte_mbuf *mbuf, *m, *mbuf_free[HINIC_MAX_TX_FREE_BULK];
    584             int i, nb_free = 0;
    585             u16 hw_ci, sw_ci, sq_mask;
    586             int wqebb_cnt = 0;
    587
    588             hw_ci = HINIC_GET_SQ_HW_CI(txq);
    589             sw_ci = HINIC_GET_SQ_LOCAL_CI(txq);
    590             sq_mask = HINIC_GET_SQ_WQE_MASK(txq);
    (gdb) n
    588             hw_ci = HINIC_GET_SQ_HW_CI(txq);
    (gdb) n
    589             sw_ci = HINIC_GET_SQ_LOCAL_CI(txq);
    (gdb) n
    590             sq_mask = HINIC_GET_SQ_WQE_MASK(txq);
    (gdb) n
    592             for (i = 0; i < txq->tx_free_thresh; ++i) {
    (gdb) n
    593                     tx_info = &txq->tx_info[sw_ci];
    (gdb) n
    594                     if (hw_ci == sw_ci ||
    (gdb) p *tx_info
    $1 = {mbuf = 0x13e9a9400, wqebb_cnt = 1, cpy_mbuf = 0x0}
    (gdb) n
    595                             (((hw_ci - sw_ci) & sq_mask) < tx_info->wqebb_cnt))
    (gdb) n
    594                     if (hw_ci == sw_ci ||
    (gdb) n
    598                     sw_ci = (sw_ci + tx_info->wqebb_cnt) & sq_mask;
    (gdb) n
    600                     if (unlikely(tx_info->cpy_mbuf != NULL)) {
    (gdb) n
    605                     wqebb_cnt += tx_info->wqebb_cnt;
    (gdb) n
    606                     mbuf = tx_info->mbuf;
    (gdb) n
    608                     if (likely(mbuf->nb_segs == 1)) {
    (gdb) n
    609                             m = rte_pktmbuf_prefree_seg(mbuf);
    (gdb) n
    610                             tx_info->mbuf = NULL;
    (gdb) n
    612                             if (unlikely(m == NULL))
    (gdb) n
    615                             mbuf_free[nb_free++] = m;
    (gdb) n
    616                             if (unlikely(m->pool != mbuf_free[0]->pool ||
    (gdb) n
    592             for (i = 0; i < txq->tx_free_thresh; ++i) {
    (gdb) n
    593                     tx_info = &txq->tx_info[sw_ci];
    (gdb) n
    594                     if (hw_ci == sw_ci ||
    (gdb) n
    595                             (((hw_ci - sw_ci) & sq_mask) < tx_info->wqebb_cnt))
    (gdb) n
    594                     if (hw_ci == sw_ci ||
    (gdb) n
    598                     sw_ci = (sw_ci + tx_info->wqebb_cnt) & sq_mask;
    (gdb) n
    600                     if (unlikely(tx_info->cpy_mbuf != NULL)) {
    (gdb) n
    605                     wqebb_cnt += tx_info->wqebb_cnt;
    (gdb) n
    606                     mbuf = tx_info->mbuf;
    (gdb) n
    608                     if (likely(mbuf->nb_segs == 1)) {
    (gdb) n
    609                             m = rte_pktmbuf_prefree_seg(mbuf);
    (gdb) n
    610                             tx_info->mbuf = NULL;
    (gdb) n
    612                             if (unlikely(m == NULL))
    (gdb) n
    615                             mbuf_free[nb_free++] = m;
    (gdb) n
    616                             if (unlikely(m->pool != mbuf_free[0]->pool ||
    (gdb) n
    592             for (i = 0; i < txq->tx_free_thresh; ++i) {
    (gdb) c
    Continuing.

    Breakpoint 1, hinic_xmit_mbuf_cleanup (txq=0x13e7e7000) at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:584
    584             int i, nb_free = 0;
    (gdb) delete
    Delete all breakpoints? (y or n) y
    (gdb) c
    Continuing.
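The check at source lines 594-595 of the trace decides whether the entry at sw_ci can be reclaimed: the hardware consumer index must be ahead of the software one by at least that entry's wqebb_cnt, with the difference taken modulo the ring size. Below is a minimal sketch of that index arithmetic under the assumption of a power-of-two ring whose mask is depth minus one; `toy_done_wqebbs` and `toy_can_reclaim` are hypothetical helper names, not driver functions.

```c
#include <stdbool.h>
#include <stdint.h>

/* WQEBBs the hardware has consumed that software has not yet reclaimed. */
uint16_t toy_done_wqebbs(uint16_t hw_ci, uint16_t sw_ci, uint16_t sq_mask)
{
    /* Unsigned subtraction plus masking handles hw_ci wrapping past sw_ci. */
    return (uint16_t)((uint16_t)(hw_ci - sw_ci) & sq_mask);
}

/* True when the entry at sw_ci, spanning wqebb_cnt WQEBBs, is fully done. */
bool toy_can_reclaim(uint16_t hw_ci, uint16_t sw_ci, uint16_t sq_mask,
                     uint16_t wqebb_cnt)
{
    if (hw_ci == sw_ci)
        return false; /* nothing outstanding */
    return toy_done_wqebbs(hw_ci, sw_ci, sq_mask) >= wqebb_cnt;
}
```

For example, with a 1024-entry ring, hw_ci = 2 and sw_ci = 1022 means four WQEBBs have completed across the wrap, so an entry of up to four WQEBBs can be reclaimed.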

bnxt_xmit_pkts

    uint16_t bnxt_xmit_pkts(void *tx_queue, struct rte_mbuf **tx_pkts,
                            uint16_t nb_pkts)
    {
        struct bnxt_tx_queue *txq = tx_queue;
        uint16_t nb_tx_pkts = 0;
        uint16_t db_mask = txq->tx_ring->tx_ring_struct->ring_size >> 2;
        uint16_t last_db_mask = 0;

        /* Handle TX completions */
        bnxt_handle_tx_cp(txq);

        /* Handle TX burst request */
        for (nb_tx_pkts = 0; nb_tx_pkts < nb_pkts; nb_tx_pkts++) {
            if (bnxt_start_xmit(tx_pkts[nb_tx_pkts], txq)) {
                break;
            } else if ((nb_tx_pkts & db_mask) != last_db_mask) {
                B_TX_DB(txq->tx_ring->tx_doorbell, txq->tx_ring->tx_prod);
                last_db_mask = nb_tx_pkts & db_mask;
            }
        }
        if (nb_tx_pkts)
            B_TX_DB(txq->tx_ring->tx_doorbell, txq->tx_ring->tx_prod);

        return nb_tx_pkts;
    }

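The logic of this function can be divided into three parts:

First comes bnxt_handle_tx_cp, where cp stands for completion. It handles mbufs that the NIC has already finished sending: once the NIC has copied an mbuf's data out via DMA, software is free to release that mbuf.

Next is bnxt_start_xmit, the actual transmit logic. Even here, "sending" does not copy any data to the NIC. Instead, the data address of each mbuf is written into the bd ring, which tells the NIC the source address for its DMA copy.

Finally, B_TX_DB writes to tx_doorbell. This signals that the bd address information has been filled in and that the NIC can start DMA.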
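The three parts above can be sketched as a toy model. This is not the bnxt driver: `struct toy_txq` and every `toy_*` function are hypothetical, the completion ring is reduced to a single `hw_done` counter, and the doorbell is an ordinary variable standing in for an MMIO register.

```c
#include <stddef.h>
#include <stdint.h>

#define TOY_RING_SIZE 8u /* must be a power of two */
#define TOY_RING_MASK (TOY_RING_SIZE - 1u)

/* Hypothetical, simplified TX queue; not a DPDK structure. */
struct toy_txq {
    const void *bufs[TOY_RING_SIZE]; /* software ring: buffer held by each slot */
    uint16_t prod;      /* free-running producer index, advanced when queuing */
    uint16_t cons;      /* free-running consumer index, advanced when reclaiming */
    uint16_t hw_done;   /* stand-in for the completion ring */
    uint16_t doorbell;  /* stand-in for the doorbell register */
    unsigned reclaimed; /* buffers released so far */
};

uint16_t toy_avail(const struct toy_txq *q)
{
    return (uint16_t)(TOY_RING_SIZE - (uint16_t)(q->prod - q->cons));
}

/* Part 1: release buffers whose DMA has completed. */
void toy_handle_completions(struct toy_txq *q)
{
    while (q->cons != q->hw_done) {
        q->bufs[q->cons & TOY_RING_MASK] = NULL; /* a real driver frees the mbuf */
        q->cons++;
        q->reclaimed++;
    }
}

/* Part 2: describe one buffer to the NIC; no packet data is copied. */
int toy_start_xmit(struct toy_txq *q, const void *buf)
{
    if (toy_avail(q) == 0)
        return -1; /* ring full */
    q->bufs[q->prod & TOY_RING_MASK] = buf;
    q->prod++;
    return 0;
}

/* Parts 1 to 3 together: reclaim, queue, then ring the doorbell once. */
uint16_t toy_xmit_burst(struct toy_txq *q, const void **pkts, uint16_t n)
{
    uint16_t sent;

    toy_handle_completions(q);
    for (sent = 0; sent < n; sent++)
        if (toy_start_xmit(q, pkts[sent]) != 0)
            break;
    if (sent)
        q->doorbell = q->prod; /* Part 3: publish the new producer index */
    return sent;
}
```

The sketch rings the doorbell once per burst; the real function also rings it part-way through large bursts (the db_mask logic), which is left out here for brevity.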

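Before analyzing the individual functions, it helps to know the data structures involved. Two rings are associated with a tx_queue: tx_ring (the transmit ring) and cp_ring (the completion ring). The relationship between these data structures is as follows:

The implementation of each of the three phases is described below.

Releasing mbufs whose DMA has completed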

bnxt_handle_tx_cp

    static int bnxt_handle_tx_cp(struct bnxt_tx_queue *txq)
    {
        struct bnxt_cp_ring_info *cpr = txq->cp_ring;
        uint32_t raw_cons = cpr->cp_raw_cons; /* consumer index where the previous pass over the completion ring stopped */
        uint32_t cons;
        int nb_tx_pkts = 0;
        struct tx_cmpl *txcmp;

        if ((txq->tx_ring->tx_ring_struct->ring_size -
                (bnxt_tx_avail(txq->tx_ring))) > txq->tx_free_thresh) {
            /* only if the number of used descriptors in the TX ring exceeds tx_free_thresh */
            while (1) {
                /* NOTE: as quoted, this loop has no exit; the check that stops
                 * at the first completion entry not yet written by hardware
                 * appears to have been omitted from the excerpt. */
                cons = RING_CMP(cpr->cp_ring_struct, raw_cons);
                txcmp = (struct tx_cmpl *)&cpr->cp_desc_ring[cons];

                /* the type field of struct tx_cmpl is set by hardware;
                 * TX_CMPL_TYPE_TX_L2 means the NIC has finished its DMA and
                 * software may release the mbuf */
                if (CMP_TYPE(txcmp) == TX_CMPL_TYPE_TX_L2)
                    nb_tx_pkts++;
                else
                    RTE_LOG_DP(DEBUG, PMD,
                               "Unhandled CMP type %02x ",
                               CMP_TYPE(txcmp));
                raw_cons = NEXT_RAW_CMP(raw_cons); /* raw_cons = raw_cons + 1 */
            }
            if (nb_tx_pkts) /* nb_tx_pkts is the number of mbufs that can be released this time */
                bnxt_tx_cmp(txq, nb_tx_pkts); /* release the mbufs */
            cpr->cp_raw_cons = raw_cons; /* update cpr->cp_raw_cons */
            B_CP_DIS_DB(cpr, cpr->cp_raw_cons); /* tell hardware via cp_doorbell that these cp ring bds can be reused */
        }
        return nb_tx_pkts;
    }

The mbufs are actually released in the bnxt_tx_cmp function.

bnxt_tx_cmp

    static void bnxt_tx_cmp(struct bnxt_tx_queue *txq, int nr_pkts)
    {
        struct bnxt_tx_ring_info *txr = txq->tx_ring;
        uint16_t cons = txr->tx_cons;
        int i, j;

        for (i = 0; i < nr_pkts; i++) {
            struct bnxt_sw_tx_bd *tx_buf;
            struct rte_mbuf *mbuf;

            tx_buf = &txr->tx_buf_ring[cons]; /* tx_buf_ring holds the mbufs queued on the TX ring */
            cons = RING_NEXT(txr->tx_ring_struct, cons);
            mbuf = tx_buf->mbuf;
            tx_buf->mbuf = NULL;

            /* EW - no need to unmap DMA memory? */

            /* tx_buf->nr_bds is the number of bds used by this mbuf; one mbuf may span several bds */
            for (j = 1; j < tx_buf->nr_bds; j++)
                cons = RING_NEXT(txr->tx_ring_struct, cons); /* cons = cons + 1 */
            rte_pktmbuf_free(mbuf);
        }

        txr->tx_cons = cons; /* some mbufs were released, so update the consumer index */
    }

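Note that the consumer index of tx_ring is updated at the end of the function. On the transmit side the software driver is the producer (it generates the data) and the NIC is the consumer (it consumes the data), but the data is ultimately released by the software driver, so the consumer index also has to be updated in software.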
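How the consumer index moves when packets occupy different numbers of bds can be sketched in isolation. This is an illustration only: `struct toy_sw_bd`, `TOY_BD_RING_SIZE` and `toy_advance_cons` are hypothetical, the ring is assumed to be a power of two in size, and every queued entry is assumed to have nr_bds of at least 1.

```c
#include <stdint.h>

#define TOY_BD_RING_SIZE 8u /* power of two, so wrapping is a simple mask */

/* Hypothetical software-ring entry: only the bd count matters here. */
struct toy_sw_bd {
    uint16_t nr_bds; /* descriptors used by the packet that starts at this slot */
};

/* Advance cons past nr_pkts completed packets and return the new index. */
uint16_t toy_advance_cons(const struct toy_sw_bd *ring, uint16_t cons,
                          int nr_pkts)
{
    int i;

    for (i = 0; i < nr_pkts; i++) {
        /* the first slot of a packet records how many slots it spans */
        uint16_t nr_bds = ring[cons].nr_bds;

        cons = (uint16_t)((cons + nr_bds) & (TOY_BD_RING_SIZE - 1u));
    }
    return cons;
}
```

For instance, a three-bd packet starting at slot 6 of an eight-slot ring occupies slots 6, 7 and 0, so the next packet starts at slot 1.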

Sending packets

Packets are sent in bnxt_start_xmit.

bnxt_start_xmit

    static uint16_t bnxt_start_xmit(struct rte_mbuf *tx_pkt,
                                    struct bnxt_tx_queue *txq)
    {
        struct bnxt_tx_ring_info *txr = txq->tx_ring;
        struct tx_bd_long *txbd;
        struct tx_bd_long_hi *txbd1;
        uint32_t vlan_tag_flags, cfa_action;
        bool long_bd = false;
        uint16_t last_prod = 0;
        struct rte_mbuf *m_seg;
        struct bnxt_sw_tx_bd *tx_buf;
        static const uint32_t lhint_arr[4] = {
            TX_BD_LONG_FLAGS_LHINT_LT512,
            TX_BD_LONG_FLAGS_LHINT_LT1K,
            TX_BD_LONG_FLAGS_LHINT_LT2K,
            TX_BD_LONG_FLAGS_LHINT_LT2K
        };

        if (tx_pkt->ol_flags & (PKT_TX_TCP_SEG | PKT_TX_TCP_CKSUM |
                    PKT_TX_UDP_CKSUM | PKT_TX_IP_CKSUM |
                    PKT_TX_VLAN_PKT | PKT_TX_OUTER_IP_CKSUM))
            long_bd = true;

        /* 1. Store the mbuf to be sent in the tx_ring's bnxt_sw_tx_bd */
        tx_buf = &txr->tx_buf_ring[txr->tx_prod];
        tx_buf->mbuf = tx_pkt;
        tx_buf->nr_bds = long_bd + tx_pkt->nb_segs;
        /* one mbuf may use several bds; last_prod is the index of the last bd of this mbuf */
        last_prod = (txr->tx_prod + tx_buf->nr_bds - 1) &
                    txr->tx_ring_struct->ring_mask;

        if (unlikely(bnxt_tx_avail(txr) < tx_buf->nr_bds))
            return -ENOMEM;

        /* 2. Fill in the matching bd in tx_desc_ring from the mbuf; the key field is txbd->addr */
        txbd = &txr->tx_desc_ring[txr->tx_prod];
        txbd->opaque = txr->tx_prod;
        txbd->flags_type = tx_buf->nr_bds << TX_BD_LONG_FLAGS_BD_CNT_SFT;
        txbd->len = tx_pkt->data_len;
        if (txbd->len >= 2014)
            txbd->flags_type |= TX_BD_LONG_FLAGS_LHINT_GTE2K;
        else
            txbd->flags_type |= lhint_arr[txbd->len >> 9];
        /* txbd->addr is the DMA address of the mbuf data, i.e. its IOVA */
        txbd->addr = rte_cpu_to_le_32(RTE_MBUF_DATA_DMA_ADDR(tx_buf->mbuf));

        if (long_bd) {
            txbd->flags_type |= TX_BD_LONG_TYPE_TX_BD_LONG;
            vlan_tag_flags = 0;
            cfa_action = 0;
            if (tx_buf->mbuf->ol_flags & PKT_TX_VLAN_PKT) {
                /* shurd: Should this mask at
                 * TX_BD_LONG_CFA_META_VLAN_VID_MASK?
                 */
                vlan_tag_flags = TX_BD_LONG_CFA_META_KEY_VLAN_TAG |
                    tx_buf->mbuf->vlan_tci;
                /* Currently supports 8021Q, 8021AD vlan offloads
                 * QINQ1, QINQ2, QINQ3 vlan headers are deprecated
                 */
                /* DPDK only supports 802.1Q VLAN packets */
                vlan_tag_flags |=
                    TX_BD_LONG_CFA_META_VLAN_TPID_TPID8100;
            }

            /* advance the tx_ring producer index: tx_prod = tx_prod + 1 */
            txr->tx_prod = RING_NEXT(txr->tx_ring_struct, txr->tx_prod);

            txbd1 = (struct tx_bd_long_hi *)
                        &txr->tx_desc_ring[txr->tx_prod];
            txbd1->lflags = 0;
            txbd1->cfa_meta = vlan_tag_flags;
            txbd1->cfa_action = cfa_action;

            /* set the bd flags that correspond to the mbuf's ol_flags */
            if (tx_pkt->ol_flags & PKT_TX_TCP_SEG) {
                /* TSO */
                txbd1->lflags |= TX_BD_LONG_LFLAGS_LSO;
                txbd1->hdr_size = tx_pkt->l2_len + tx_pkt->l3_len +
                    tx_pkt->l4_len + tx_pkt->outer_l2_len +
                    tx_pkt->outer_l3_len;
                txbd1->mss = tx_pkt->tso_segsz;
            } else if ((tx_pkt->ol_flags & PKT_TX_OIP_IIP_TCP_UDP_CKSUM) ==
                     PKT_TX_OIP_IIP_TCP_UDP_CKSUM) {
                /* Outer IP, Inner IP, Inner TCP/UDP CSO */
                txbd1->lflags |= TX_BD_FLG_TIP_IP_TCP_UDP_CHKSUM;
                txbd1->mss = 0;
            } else if ((tx_pkt->ol_flags & PKT_TX_IIP_TCP_UDP_CKSUM) ==
                     PKT_TX_IIP_TCP_UDP_CKSUM) {
                /* (Inner) IP, (Inner) TCP/UDP CSO */
                txbd1->lflags |= TX_BD_FLG_IP_TCP_UDP_CHKSUM;
                txbd1->mss = 0;
            } else if ((tx_pkt->ol_flags & PKT_TX_OIP_TCP_UDP_CKSUM) ==
                     PKT_TX_OIP_TCP_UDP_CKSUM) {
                /* Outer IP, (Inner) TCP/UDP CSO */
                txbd1->lflags |= TX_BD_FLG_TIP_TCP_UDP_CHKSUM;
                txbd1->mss = 0;
            } else if ((tx_pkt->ol_flags & PKT_TX_OIP_IIP_CKSUM) ==
                     PKT_TX_OIP_IIP_CKSUM) {
                /* Outer IP, Inner IP CSO */
                txbd1->lflags |= TX_BD_FLG_TIP_IP_CHKSUM;
                txbd1->mss = 0;
            } else if ((tx_pkt->ol_flags & PKT_TX_TCP_UDP_CKSUM) ==
                     PKT_TX_TCP_UDP_CKSUM) {
                /* TCP/UDP CSO */
                txbd1->lflags |= TX_BD_LONG_LFLAGS_TCP_UDP_CHKSUM;
                txbd1->mss = 0;
            } else if (tx_pkt->ol_flags & PKT_TX_IP_CKSUM) {
                /* IP CSO */
                txbd1->lflags |= TX_BD_LONG_LFLAGS_IP_CHKSUM;
                txbd1->mss = 0;
            } else if (tx_pkt->ol_flags & PKT_TX_OUTER_IP_CKSUM) {
                /* IP CSO */
                txbd1->lflags |= TX_BD_LONG_LFLAGS_T_IP_CHKSUM;
                txbd1->mss = 0;
            }
        } else {
            txbd->flags_type |= TX_BD_SHORT_TYPE_TX_BD_SHORT;
        }

        m_seg = tx_pkt->next;
        /* i is set at the end of the if(long_bd) block */
        while (txr->tx_prod != last_prod) {
            /* advance the tx_ring producer index: tx_prod = tx_prod + 1 */
            txr->tx_prod = RING_NEXT(txr->tx_ring_struct, txr->tx_prod);
            tx_buf = &txr->tx_buf_ring[txr->tx_prod];

            txbd = &txr->tx_desc_ring[txr->tx_prod];
            txbd->addr = rte_cpu_to_le_32(RTE_MBUF_DATA_DMA_ADDR(m_seg));
            txbd->flags_type = TX_BD_SHORT_TYPE_TX_BD_SHORT;
            txbd->len = m_seg->data_len;

            m_seg = m_seg->next;
        }

        txbd->flags_type |= TX_BD_LONG_FLAGS_PACKET_END;

        /* advance the tx_ring producer index: tx_prod = tx_prod + 1 */
        txr->tx_prod = RING_NEXT(txr->tx_ring_struct, txr->tx_prod);

        return 0;
    }
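One detail in the listing that is easy to miss is how the length hint is chosen: lengths at or above the threshold get the "2K or more" hint, and everything else indexes a four-entry table by len >> 9, that is, by 512-byte bucket. The sketch below mirrors that selection with hypothetical enum values in place of the TX_BD_LONG_FLAGS_LHINT_* constants; the 2014 threshold is copied from the excerpt as quoted.

```c
#include <stdint.h>

/* Hypothetical stand-ins for the TX_BD_LONG_FLAGS_LHINT_* flag values. */
enum toy_lhint {
    TOY_LHINT_LT512,
    TOY_LHINT_LT1K,
    TOY_LHINT_LT2K,
    TOY_LHINT_GTE2K
};

enum toy_lhint toy_length_hint(uint16_t len)
{
    /* indexed by 512-byte bucket: 0-511, 512-1023, 1024-1535, 1536-2047 */
    static const enum toy_lhint lhint_arr[4] = {
        TOY_LHINT_LT512,
        TOY_LHINT_LT1K,
        TOY_LHINT_LT2K,
        TOY_LHINT_LT2K
    };

    if (len >= 2014) /* threshold as it appears in the excerpt */
        return TOY_LHINT_GTE2K;
    return lhint_arr[len >> 9]; /* len < 2014, so the index is at most 3 */
}
```

Because the early return handles every length of 2014 or more, the table index can never exceed 3.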

Two points are worth noting. The first is the conversion from mbuf to bd (tx_bd_long): the bd's address is set to the mbuf's IOVA, that is, its DMA address.

    txbd->addr = rte_cpu_to_le_32(RTE_MBUF_DATA_DMA_ADDR(tx_buf->mbuf));

    #define RTE_MBUF_DATA_DMA_ADDR(mb) \
        ((uint64_t)((mb)->buf_iova + (mb)->data_off))
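The macro simply adds the offset of the packet data within the buffer to the buffer's bus address. A small sketch with a hypothetical two-field `struct toy_mbuf` (a real rte_mbuf has many more fields) shows the arithmetic:

```c
#include <stdint.h>

/* Hypothetical cut-down mbuf: only the two fields the macro reads. */
struct toy_mbuf {
    uint64_t buf_iova; /* bus (IOVA) address of the start of the buffer */
    uint16_t data_off; /* offset of the packet data inside the buffer */
};

/* Same arithmetic as RTE_MBUF_DATA_DMA_ADDR: where the NIC should read from. */
uint64_t toy_data_dma_addr(const struct toy_mbuf *mb)
{
    return mb->buf_iova + mb->data_off;
}
```

For a buffer at IOVA 0x100000000 with the data starting 128 bytes in, the NIC is told to read from 0x100000080.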

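The second is that the producer index of tx_ring is updated during transmission.

Starting DMA

The hardware DMA copy is started by the following statement:

    B_TX_DB(txq->tx_ring->tx_doorbell, txq->tx_ring->tx_prod)

This writes the producer index of tx_ring (tx_ring->tx_prod) to the ring's tx_doorbell. Both the cp_doorbell of cp_ring and the tx_doorbell of tx_ring are initialized in the bnxt_alloc_hwrm_rings function to addresses in the device's BAR space.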

    cpr->cp_doorbell = (char *)pci_dev->mem_resource[2].addr + idx * 0x80;
    txr->tx_doorbell = (char *)pci_dev->mem_resource[2].addr + idx * 0x80;

ixgbe_xmit_pkts()

https://blog.csdn.net/hz5034/article/details/88381486

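There are three cases in which descriptors are written back during transmit (the first is the default, where write-back depends on RS):

1. TXDCTL[n].WTHRESH = 0 and a descriptor that has RS set is ready to be written back.

2. TXDCTL[n].WTHRESH > 0 and TXDCTL[n].WTHRESH descriptors have accumulated.

3. TXDCTL[n].WTHRESH > 0 and the corresponding EITR counter has reached zero. The timer expiration flushes any accumulated descriptors and sets an interrupt event (TXDW).

When RS is set during transmit:

1. When the last segment of a packet is queued, RS is set if the number of descriptors used since the last RS has reached the threshold (tx_rs_thresh, 32 by default).

2. RS is set on the last segment of the last packet of a burst.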
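The two rules for setting RS can be sketched as a function that, given the descriptor count of each packet in a burst, reports which packets get RS on their last descriptor. `toy_mark_rs` is a hypothetical helper, not driver code, and it assumes that the used-descriptor counter restarts from zero each time RS is set and starts at zero at the beginning of the burst.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/*
 * nb_used[i] is the number of descriptors packet i needs.
 * rs_out[i] is set to true when the last descriptor of packet i carries RS.
 */
void toy_mark_rs(const uint16_t *nb_used, size_t nb_pkts,
                 uint16_t tx_rs_thresh, bool *rs_out)
{
    uint16_t used_since_rs = 0;
    size_t i;

    for (i = 0; i < nb_pkts; i++) {
        used_since_rs = (uint16_t)(used_since_rs + nb_used[i]);
        if (used_since_rs >= tx_rs_thresh) {
            rs_out[i] = true;  /* rule 1: threshold reached */
            used_since_rs = 0; /* assumption: counter restarts after RS */
        } else {
            rs_out[i] = false;
        }
    }
    if (nb_pkts > 0)
        rs_out[nb_pkts - 1] = true; /* rule 2: end of burst always gets RS */
}
```

With a threshold of 32 and packets needing 20, 20 and 5 descriptors, the second packet gets RS by rule 1 and the third by rule 2.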

    uint16_t ixgbe_xmit_pkts(void *tx_queue, struct rte_mbuf **tx_pkts,
                             uint16_t nb_pkts)
    {
        ...
        txq = tx_queue;
        sw_ring = txq->sw_ring;
        txr = txq->tx_ring;
        tx_id = txq->tx_tail;   /* equivalent to next_to_use in the kernel ixgbe driver */
        txe = &sw_ring[tx_id];  /* the entry that tx_tail points to */
        txp = NULL;
        ...
        /* if the number of free descriptors is below tx_free_thresh (default 32), reclaim completed ones */
        if (txq->nb_tx_free < txq->tx_free_thresh)
            ixgbe_xmit_cleanup(txq);
        ...
        /* TX loop */
        for (nb_tx = 0; nb_tx < nb_pkts; nb_tx++) {
            ...
            tx_pkt = *tx_pkts++;         /* mbuf to send */
            pkt_len = tx_pkt->pkt_len;   /* length of the mbuf to send */
            ...
            nb_used = (uint16_t)(tx_pkt->nb_segs + new_ctx); /* number of descriptors used */
            ...
            tx_last = (uint16_t) (tx_id + nb_used - 1); /* tx_last points to the last descriptor */
            ...
            if (tx_last >= txq->nb_tx_desc) /* the ring is circular, so wrap */
                tx_last = (uint16_t) (tx_last - txq->nb_tx_desc);
            ...
            if (nb_used > txq->nb_tx_free) {
                ...
                if (ixgbe_xmit_cleanup(txq) != 0) {
                    /* Could not clean any descriptors */
                    if (nb_tx == 0) /* first packet (nothing queued yet): return 0 */
                        return 0;
                    goto end_of_tx; /* otherwise stop queuing and update the queue state */
                }
                ...
            }
            ...
            /* a packet may consist of several segments; m_seg starts at the first one */
            m_seg = tx_pkt;
            do {
                txd = &txr[tx_id];              /* descriptor */
                txn = &sw_ring[txe->next_id];   /* next entry */
                ...
                txe->mbuf = m_seg;              /* attach m_seg to txe */
                ...
                slen = m_seg->data_len;         /* length of m_seg */
                buf_dma_addr = rte_mbuf_data_dma_addr(m_seg); /* bus address of m_seg */
                txd->read.buffer_addr =
                    rte_cpu_to_le_64(buf_dma_addr);           /* store the bus address in the descriptor */
                txd->read.cmd_type_len =
                    rte_cpu_to_le_32(cmd_type_len | slen);    /* store the length in the descriptor */
                ...
                txe->last_id = tx_last;   /* last_id points to the packet's last descriptor */
                tx_id = txe->next_id;     /* tx_id moves to the next descriptor */
                txe = txn;                /* txe moves to the next entry */
                m_seg = m_seg->next;      /* m_seg moves to the next segment */
            } while (m_seg != NULL);
            ...
            /* last segment */
            cmd_type_len |= IXGBE_TXD_CMD_EOP;
            txq->nb_tx_used = (uint16_t)(txq->nb_tx_used + nb_used); /* update nb_tx_used */
            txq->nb_tx_free = (uint16_t)(txq->nb_tx_free - nb_used); /* update nb_tx_free */
            ...
            if (txq->nb_tx_used >= txq->tx_rs_thresh) { /* descriptors used have reached tx_rs_thresh (default 32): set RS */
                ...
                cmd_type_len |= IXGBE_TXD_CMD_RS;
                ...
                txp = NULL; /* txp == NULL means RS has been set */
            } else
                txp = txd;  /* txp != NULL means RS has not been set */
            ...
            txd->read.cmd_type_len |= rte_cpu_to_le_32(cmd_type_len);
        }
        ...
    end_of_tx:
        /* last segment of the last packet of the burst */
        ...
        if (txp != NULL) /* RS not set yet: set it */
            txp->read.cmd_type_len |= rte_cpu_to_le_32(IXGBE_TXD_CMD_RS);
        ...
        IXGBE_PCI_REG_WRITE_RELAXED(txq->tdt_reg_addr, tx_id); /* write tx_id to TDT */
        txq->tx_tail = tx_id; /* tx_tail points to the next descriptor */
        ...
        return nb_tx;
    }

ixgbe_xmit_cleanup()

    static inline int ixgbe_xmit_cleanup(struct ixgbe_tx_queue *txq)
    {
        ...
        uint16_t last_desc_cleaned = txq->last_desc_cleaned;
        ...
        /* entry to clean up to */
        desc_to_clean_to = (uint16_t)(last_desc_cleaned + txq->tx_rs_thresh);
        if (desc_to_clean_to >= nb_tx_desc) /* the ring is circular, so wrap */
            desc_to_clean_to = (uint16_t)(desc_to_clean_to - nb_tx_desc);
        ...
        /* last descriptor of the packet that entry belongs to */
        desc_to_clean_to = sw_ring[desc_to_clean_to].last_id;
        status = txr[desc_to_clean_to].wb.status;
        /* if DD is not set on that descriptor, return -1 */
        if (!(status & rte_cpu_to_le_32(IXGBE_TXD_STAT_DD))) {
            ...
            return -(1);
        }
        ...
        /* number of descriptors to clean */
        if (last_desc_cleaned > desc_to_clean_to) /* the ring is circular, so account for the wrap */
            nb_tx_to_clean = (uint16_t)((nb_tx_desc - last_desc_cleaned) +
                                        desc_to_clean_to);
        else
            nb_tx_to_clean = (uint16_t)(desc_to_clean_to -
                                        last_desc_cleaned);
        ...
        txr[desc_to_clean_to].wb.status = 0; /* clear DD */
        ...
        txq->last_desc_cleaned = desc_to_clean_to; /* update last_desc_cleaned */
        txq->nb_tx_free = (uint16_t)(txq->nb_tx_free + nb_tx_to_clean); /* update nb_tx_free */
        ...
        return 0;
    }
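The two wrap-around computations in the cleanup routine can be checked in isolation. The sketch below uses hypothetical helper names and skips the sw_ring[...].last_id lookup, which amounts to assuming single-segment packets so that the target entry is also the packet's last descriptor.

```c
#include <stdint.h>

/* Index tx_rs_thresh descriptors past the last cleaned one, wrapped to the ring. */
uint16_t toy_clean_target(uint16_t last_desc_cleaned, uint16_t tx_rs_thresh,
                          uint16_t nb_tx_desc)
{
    uint16_t target = (uint16_t)(last_desc_cleaned + tx_rs_thresh);

    if (target >= nb_tx_desc)
        target = (uint16_t)(target - nb_tx_desc);
    return target;
}

/* Number of descriptors between last_desc_cleaned (exclusive) and the target. */
uint16_t toy_nb_to_clean(uint16_t last_desc_cleaned, uint16_t desc_to_clean_to,
                         uint16_t nb_tx_desc)
{
    if (last_desc_cleaned > desc_to_clean_to) /* target is past the wrap point */
        return (uint16_t)((nb_tx_desc - last_desc_cleaned) + desc_to_clean_to);
    return (uint16_t)(desc_to_clean_to - last_desc_cleaned);
}
```

With 512 descriptors, a threshold of 32 and last_desc_cleaned = 500, the target wraps to index 20 and 32 descriptors are reclaimed, the same count as in the non-wrapping case.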