https://dpdk-docs.readthedocs.io/en/latest/prog_guide/vhost_lib.html

1. How are vhost_set_vring_kick and vhost_set_vring_call in the VhostOps of vhost_dev implemented? In vhost-net kernel mode, vhost_set_vring_kick and vhost_set_vring_call rely on ioctls on /dev/vhost-net.

There are two deployment modes: (1) the guest (QEMU) side is the server and DPDK vhost-user is the client; (2) the guest (QEMU) side is the client and DPDK vhost-user is the server.

VHOST_SET_VRING_CALL and VHOST_SET_VRING_KICK are implemented in two places:

qemu vhost user: VhostOps user_ops

dpdk: vhost_message_handler_t vhost_message_handlers[VHOST_USER_MAX]

2. How does vhost-user implement the const VhostOps *vhost_ops of vhost_dev?

hw/virtio/vhost-backend.c:294:static const VhostOps kernel_ops = {
hw/virtio/vhost-user.c:2357:const VhostOps user_ops = {
include/hw/virtio/vhost-backend.h:175:extern const VhostOps user_ops;

3. How does vhost-user implement the struct vhost_virtqueue of vhost_dev?

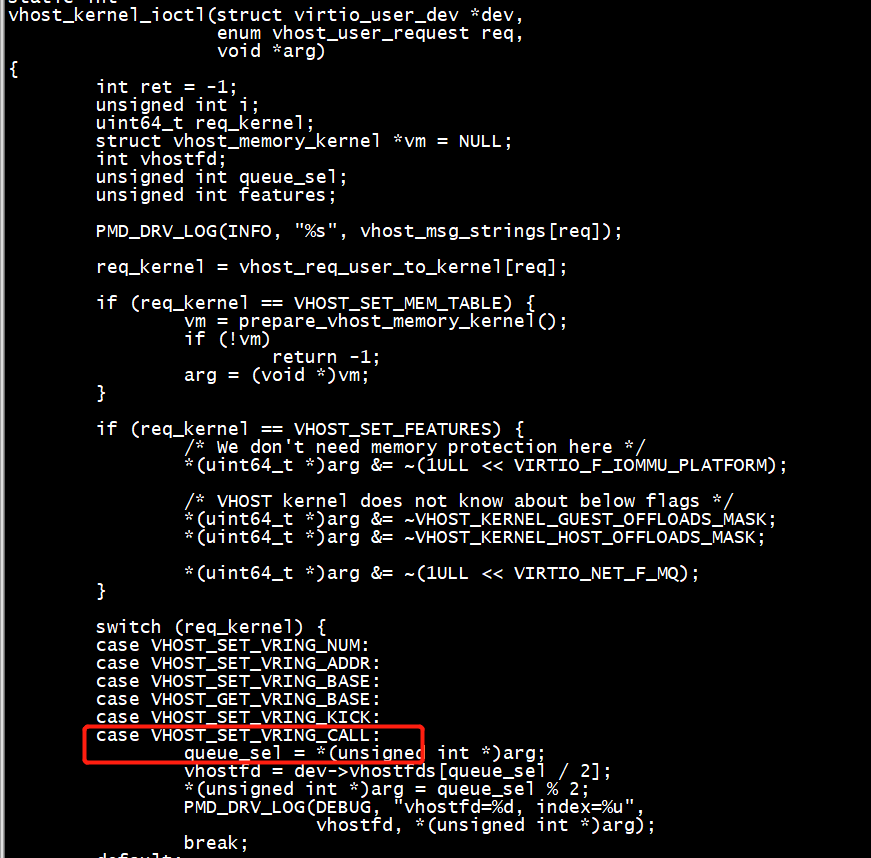

4. vhost_kernel_ioctl handles VHOST_SET_VRING_CALL and VHOST_SET_VRING_KICK

5. Sending and receiving

/* Forward count packets from the host to the guest */
uint16_t rte_vhost_enqueue_burst(int vid, uint16_t queue_id,
        struct rte_mbuf **pkts, uint16_t count)

/* Receive up to count packets from the guest and store them in pkts */
uint16_t rte_vhost_dequeue_burst(int vid, uint16_t queue_id,
        struct rte_mempool *mbuf_pool, struct rte_mbuf **pkts, uint16_t count)

struct vhost_dev {
    MemoryListener memory_listener; /* MemoryListener is the set of callbacks for physical memory operations */
    struct vhost_memory *mem;
    int n_mem_sections;
    MemoryRegionSection *mem_sections;
    struct vhost_virtqueue *vqs; /* array of vhost_virtqueue and its length */
    int nvqs;
    /* the first virtqueue which would be used by this vhost dev */
    int vq_index;
    unsigned long long features; /* features supported by the vhost device */
    unsigned long long acked_features; /* features acked by the guest */
    unsigned long long backend_features; /* features supported by the backend, e.g. a tap device */
    bool started;
    bool log_enabled;
    vhost_log_chunk_t *log;
    unsigned long long log_size;
    Error *migration_blocker;
    bool force;
    bool memory_changed;
    hwaddr mem_changed_start_addr;
    hwaddr mem_changed_end_addr;
    const VhostOps *vhost_ops; /* VhostOps is implemented differently for kernel and user vhost; the kernel implementation ends in ioctl calls */
    void *opaque;
};

static vhost_message_handler_t vhost_message_handlers[VHOST_USER_MAX] = {
    [VHOST_USER_NONE] = NULL,
    [VHOST_USER_GET_FEATURES] = vhost_user_get_features,
    [VHOST_USER_SET_FEATURES] = vhost_user_set_features,
    [VHOST_USER_SET_OWNER] = vhost_user_set_owner,
    [VHOST_USER_RESET_OWNER] = vhost_user_reset_owner,
    [VHOST_USER_SET_MEM_TABLE] = vhost_user_set_mem_table,
    [VHOST_USER_SET_LOG_BASE] = vhost_user_set_log_base,
    [VHOST_USER_SET_LOG_FD] = vhost_user_set_log_fd,
    [VHOST_USER_SET_VRING_NUM] = vhost_user_set_vring_num,
    [VHOST_USER_SET_VRING_ADDR] = vhost_user_set_vring_addr,
    [VHOST_USER_SET_VRING_BASE] = vhost_user_set_vring_base,
    [VHOST_USER_GET_VRING_BASE] = vhost_user_get_vring_base,
    [VHOST_USER_SET_VRING_KICK] = vhost_user_set_vring_kick,
    [VHOST_USER_SET_VRING_CALL] = vhost_user_set_vring_call,
    [VHOST_USER_SET_VRING_ERR] = vhost_user_set_vring_err,
    [VHOST_USER_GET_PROTOCOL_FEATURES] = vhost_user_get_protocol_features,
    [VHOST_USER_SET_PROTOCOL_FEATURES] = vhost_user_set_protocol_features,
    [VHOST_USER_GET_QUEUE_NUM] = vhost_user_get_queue_num,
    [VHOST_USER_SET_VRING_ENABLE] = vhost_user_set_vring_enable,
    [VHOST_USER_SEND_RARP] = vhost_user_send_rarp,
    [VHOST_USER_NET_SET_MTU] = vhost_user_net_set_mtu,
    [VHOST_USER_SET_SLAVE_REQ_FD] = vhost_user_set_req_fd,
    [VHOST_USER_IOTLB_MSG] = vhost_user_iotlb_msg,
    [VHOST_USER_POSTCOPY_ADVISE] = vhost_user_set_postcopy_advise,
    [VHOST_USER_POSTCOPY_LISTEN] = vhost_user_set_postcopy_listen,
    [VHOST_USER_POSTCOPY_END] = vhost_user_postcopy_end,
    [VHOST_USER_GET_INFLIGHT_FD] = vhost_user_get_inflight_fd,
    [VHOST_USER_SET_INFLIGHT_FD] = vhost_user_set_inflight_fd,
};
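The DPDK table above is an array of function pointers indexed by request number. The following is a minimal, self-contained sketch of that dispatch pattern, not DPDK code: the request numbers 12 and 13 match the VhostUserRequest enum quoted in this article, while `demo_handler_t`, `demo_dispatch` and the handler bodies are hypothetical names made up for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* A few request numbers, same values as in the VhostUserRequest enum. */
enum demo_request {
    DEMO_NONE = 0,
    DEMO_SET_VRING_KICK = 12,
    DEMO_SET_VRING_CALL = 13,
    DEMO_MAX = 33
};

/* Hypothetical handler signature: 0 on success, negative on error. */
typedef int (*demo_handler_t)(int *state);

static int demo_set_kick(int *state) { *state |= 1; return 0; }
static int demo_set_call(int *state) { *state |= 2; return 0; }

/* Designated initializers leave every slot that is not listed as NULL. */
static const demo_handler_t demo_handlers[DEMO_MAX] = {
    [DEMO_SET_VRING_KICK] = demo_set_kick,
    [DEMO_SET_VRING_CALL] = demo_set_call,
};

/* Look up the handler for a request; reject unknown or unhandled requests. */
static int demo_dispatch(unsigned int request, int *state)
{
    if (request >= DEMO_MAX || demo_handlers[request] == NULL)
        return -1;
    return demo_handlers[request](state);
}
```

The bounds check before indexing matters because the request number arrives from the peer over the socket and cannot be trusted.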

const VhostOps user_ops = {
    .backend_type = VHOST_BACKEND_TYPE_USER,
    .vhost_backend_init = vhost_user_backend_init,
    .vhost_backend_cleanup = vhost_user_backend_cleanup,
    .vhost_backend_memslots_limit = vhost_user_memslots_limit,
    .vhost_set_log_base = vhost_user_set_log_base,
    .vhost_set_mem_table = vhost_user_set_mem_table,
    .vhost_set_vring_addr = vhost_user_set_vring_addr,
    .vhost_set_vring_endian = vhost_user_set_vring_endian,
    .vhost_set_vring_num = vhost_user_set_vring_num,
    .vhost_set_vring_base = vhost_user_set_vring_base,
    .vhost_get_vring_base = vhost_user_get_vring_base,
    .vhost_set_vring_kick = vhost_user_set_vring_kick,
    .vhost_set_vring_call = vhost_user_set_vring_call,
    .vhost_set_features = vhost_user_set_features,
    .vhost_get_features = vhost_user_get_features,
    .vhost_set_owner = vhost_user_set_owner,
    .vhost_reset_device = vhost_user_reset_device,
    .vhost_get_vq_index = vhost_user_get_vq_index,
    .vhost_set_vring_enable = vhost_user_set_vring_enable,
    .vhost_requires_shm_log = vhost_user_requires_shm_log,
    .vhost_migration_done = vhost_user_migration_done,
    .vhost_backend_can_merge = vhost_user_can_merge,
    .vhost_net_set_mtu = vhost_user_net_set_mtu,
    .vhost_set_iotlb_callback = vhost_user_set_iotlb_callback,
    .vhost_send_device_iotlb_msg = vhost_user_send_device_iotlb_msg,
    .vhost_get_config = vhost_user_get_config,
    .vhost_set_config = vhost_user_set_config,
    .vhost_crypto_create_session = vhost_user_crypto_create_session,
    .vhost_crypto_close_session = vhost_user_crypto_close_session,
    .vhost_backend_mem_section_filter = vhost_user_mem_section_filter,
    .vhost_get_inflight_fd = vhost_user_get_inflight_fd,
    .vhost_set_inflight_fd = vhost_user_set_inflight_fd,
};

static int vhost_set_vring_file(struct vhost_dev *dev,
                                VhostUserRequest request,
                                struct vhost_vring_file *file)
{
    int fds[VHOST_USER_MAX_RAM_SLOTS];
    size_t fd_num = 0;
    VhostUserMsg msg = {
        .hdr.request = request,
        .hdr.flags = VHOST_USER_VERSION,
        .payload.u64 = file->index & VHOST_USER_VRING_IDX_MASK,
        .hdr.size = sizeof(msg.payload.u64),
    };

    if (ioeventfd_enabled() && file->fd > 0) {
        fds[fd_num++] = file->fd;
    } else {
        msg.payload.u64 |= VHOST_USER_VRING_NOFD_MASK;
    }

    if (vhost_user_write(dev, &msg, fds, fd_num) < 0) {
        return -1;
    }

    return 0;
}

static int vhost_user_set_vring_kick(struct vhost_dev *dev,
                                     struct vhost_vring_file *file)
{
    return vhost_set_vring_file(dev, VHOST_USER_SET_VRING_KICK, file);
}

static int vhost_user_set_vring_call(struct vhost_dev *dev,
                                     struct vhost_vring_file *file)
{
    return vhost_set_vring_file(dev, VHOST_USER_SET_VRING_CALL, file);
}
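The QEMU code above packs two things into the u64 payload: the vring index in the low 8 bits and a "no fd attached" flag in bit 8. A minimal sketch of that encoding and its decoding on the receiving side follows. The two masks are the ones quoted in the VhostUserMsg definition in this article; the helper names `vring_file_payload`, `payload_index` and `payload_has_fd` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define VHOST_USER_VRING_IDX_MASK  0xff
#define VHOST_USER_VRING_NOFD_MASK (0x1 << 8)

/* Hypothetical helper: build the payload sent with SET_VRING_KICK/CALL. */
static uint64_t vring_file_payload(unsigned int index, int fd_valid)
{
    uint64_t u64 = index & VHOST_USER_VRING_IDX_MASK;

    if (!fd_valid)
        u64 |= VHOST_USER_VRING_NOFD_MASK; /* no eventfd travels with the message */
    return u64;
}

/* Hypothetical helper: extract the vring index on the receiving side. */
static unsigned int payload_index(uint64_t u64)
{
    return (unsigned int)(u64 & VHOST_USER_VRING_IDX_MASK);
}

/* Hypothetical helper: non-zero if an fd is expected in the ancillary data. */
static int payload_has_fd(uint64_t u64)
{
    return (u64 & VHOST_USER_VRING_NOFD_MASK) == 0;
}
```

For example, index 3 without an fd encodes to 0x103, and the receiver recovers index 3 and "no fd" from that value.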

Vhost-user Overview

The goal of vhost-user is to implement such a Virtio transport, staying as close as possible to the vhost paradigm of using shared memory, ioeventfds and irqfds. A UNIX domain socket based mechanism allows to set up the resources used by a number of Vrings shared between two userspace processes, which will be placed in shared memory. The mechanism also configures the necessary eventfds to signal when a Vring gets a kick event from either side.

Vhost-user has been implemented in QEMU via a set of patches, giving the option to pass any virtio_net Vrings directly to another userspace process, implementing a virtio_net backend outside QEMU. This way, direct Snabbswitch to a QEMU guest virtio_net communication can be realized.

QEMU already implements the vhost interface for a fast zero-copy guest to host kernel data path. Configuration of this interface relies on a series of ioctls that define the control plane. In this scenario, the QEMU network backend invoked is the “tap” netdev. A usual way to run it is:

$ qemu -netdev type=tap,script=/etc/kvm/kvm-ifup,id=net0,vhost=on \
    -device virtio-net-pci,netdev=net0

The purpose of the vhost-user patches for QEMU is to provide the infrastructure and implementation of a user space vhost interface. The fundamental additions of this implementation are:

Added an option to -mem-path to allocate guest RAM as memory that can be shared with another process.


Use a Unix domain socket to communicate between QEMU and the user space vhost implementation.


The user space application will receive file descriptors for the pre-allocated shared guest RAM. It will directly access the related vrings in the guest's memory space.


Overall architecture of vhost-user

In the target implementation the vhost client is in QEMU. The target backend is Snabbswitch.

Compilation and Usage

QEMU Compilation

A version of QEMU patched with the latest vhost-user patches can be retrieved from the Virtual Open Systems repository at https://github.com/virtualopensystems/qemu.git, branch vhost-user-v5.

To clone it:

$ git clone -b vhost-user-v5 https://github.com/virtualopensystems/qemu.git

Compilation is straightforward:

$ mkdir qemu/obj

$ cd qemu/obj/

$ ../configure --target-list=x86_64-softmmu

$ make -j

This will build QEMU as qemu/obj/x86_64-softmmu/qemu-system-x86_64.

Using QEMU with Vhost-user

To run QEMU with the vhost-user backend, one has to provide the named UNIX domain socket, which must already be opened by the backend:

$ qemu -m 1024 -mem-path /hugetlbfs,prealloc=on,share=on \
    -netdev type=vhost-user,id=net0,file=/path/to/socket \
    -device virtio-net-pci,netdev=net0

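Vhost Library

The vhost library implements a user space virtio net server that lets the user manipulate the virtio ring directly. In other words, it lets the user fetch packets from and put packets to the VM virtio net device. To achieve this, a vhost library has to be able to:

- Access the guest memory:

  For QEMU, this is done with the -object memory-backend-file,share=on,... option, which means QEMU creates a file to serve as the guest RAM. The share=on option allows another process to map that file, which means that process can access the guest RAM.

- Know all the necessary information about the vring:

  For example, where the available ring is stored. Vhost defines some messages (passed through a Unix domain socket) that tell the backend everything it needs to know to manipulate the vring.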
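The first requirement, guest RAM backed by a file that a second process can map, comes down to MAP_SHARED mappings of the same file. The sketch below shows the effect inside one process with two independent mappings standing in for the QEMU side and the backend side. The temporary file under /tmp and the function name `shared_mapping_demo` are assumptions for illustration; a real setup would map the hugepage-backed file whose descriptor QEMU hands over.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Returns 0 if a write through one MAP_SHARED mapping is visible through
 * a second mapping of the same file, -1 otherwise. */
static int shared_mapping_demo(void)
{
    char path[] = "/tmp/guest-ram-XXXXXX"; /* stand-in for the guest RAM file */
    const size_t len = 4096;
    int ok = -1;
    int fd = mkstemp(path);

    if (fd < 0)
        return -1;
    unlink(path); /* the open fd keeps the file alive */

    if (ftruncate(fd, (off_t)len) == 0) {
        /* Two mappings of one file: "QEMU view" and "backend view". */
        char *qemu_view = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        char *backend_view = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);

        if (qemu_view != MAP_FAILED && backend_view != MAP_FAILED) {
            strcpy(qemu_view, "avail ring");
            if (strcmp(backend_view, "avail ring") == 0)
                ok = 0;
        }
        if (qemu_view != MAP_FAILED)
            munmap(qemu_view, len);
        if (backend_view != MAP_FAILED)
            munmap(backend_view, len);
    }
    close(fd);
    return ok;
}
```

With MAP_PRIVATE instead of MAP_SHARED the second view would not see the write, which is why share=on is required on the QEMU command line.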

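27.1. Vhost API Overview

The following is an overview of some key Vhost API functions:

- rte_vhost_driver_register(path, flags)

  This function registers a vhost driver into the system. path specifies the Unix domain socket file path. Currently supported flags are:

  - RTE_VHOST_USER_CLIENT

    DPDK vhost-user acts as the client when this flag is given. See the explanation below.

  - RTE_VHOST_USER_NO_RECONNECT

    When DPDK vhost-user acts as the client, it keeps trying to reconnect to the server (QEMU) until it succeeds. This is useful in two cases:

    - When QEMU has not started yet.
    - When QEMU restarts (for example, because the guest OS reboots).

    This reconnect option is enabled by default; setting this flag turns it off.

  - RTE_VHOST_USER_DEQUEUE_ZERO_COPY

    Dequeue zero copy is enabled when this flag is set. It is disabled by default.

    Keep the following in mind when setting this flag:

    - Zero copy is not good for small packets (smaller than 512 bytes).
    - Zero copy works well for the VM2VM case. For iperf between two VMs, the boost can be as high as 70% (when TSO is enabled).
    - For the VM2NIC case, nb_tx_desc has to be small enough: <= 64 if the virtio indirect feature is not enabled, and <= 128 if it is. This is because, with dequeue zero copy enabled, the guest Tx used vring is updated only when the corresponding mbuf is freed. nb_tx_desc therefore has to be small enough that the PMD driver runs out of available Tx descriptors and frees mbufs in time. Otherwise the guest Tx vring is starved.
    - Guest memory should be backed by huge pages for better performance. A 1G page size is best.

      When dequeue zero copy is enabled, the mapping between guest physical addresses and host physical addresses has to be established. Using non-huge pages means far more page segments. To keep things simple, DPDK vhost does a linear search over those segments, so the fewer the segments, the faster the mapping is found. Note: a tree search may be used in the future to speed this up.

- rte_vhost_driver_set_features(path, features)

  This function sets the feature bits the vhost-user driver supports. The vhost-user driver can be vhost-user net, but it can also be something else, for example vhost-user SCSI.

- rte_vhost_driver_callback_register(path, vhost_device_ops)

  This function registers a set of callbacks so that the DPDK application can take the appropriate action when certain events happen. The following events are currently supported:

  - new_device(int vid)

    This callback is invoked when a virtio device becomes ready. vid is the virtual device ID.

  - destroy_device(int vid)

    This callback is invoked when a virtio device shuts down (or when the vhost connection is broken).

  - vring_state_changed(int vid, uint16_t queue_id, int enable)

    This callback is invoked when the state of a specific queue changes, for example to enabled or disabled.

  - features_changed(int vid, uint64_t features)

    This callback is invoked when the features change. For example, VHOST_F_LOG_ALL is set at the start of live migration and cleared at the end.

- rte_vhost_driver_disable/enable_features(path, features)

  This function disables or enables some features. For example, it can be used to disable the mergeable buffers and TSO features, both of which are enabled by default.

- rte_vhost_driver_start(path)

  This function triggers the vhost-user negotiation. It should be called at the end of initializing a vhost-user driver.

- rte_vhost_enqueue_burst(vid, queue_id, pkts, count)

  Transmits (enqueues) count packets from host to guest.

- rte_vhost_dequeue_burst(vid, queue_id, mbuf_pool, pkts, count)

  Receives (dequeues) count packets from guest and stores them in pkts.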
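The note on dequeue zero copy says address translation is a linear search over memory segments, which is why fewer (larger) pages are faster. A minimal sketch of such a lookup is shown here; `struct demo_region` and `demo_translate` are hypothetical and do not reproduce DPDK's internal structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical segment descriptor; field names are illustrative only. */
struct demo_region {
    uint64_t guest_addr; /* start of the segment in guest address space */
    uint64_t size;       /* length of the segment in bytes */
    uint64_t host_addr;  /* where the segment starts on the host side */
};

/* Linear search: returns the translated address, or 0 if no segment
 * contains addr. The cost grows with the number of segments n. */
static uint64_t demo_translate(const struct demo_region *regs, size_t n,
                               uint64_t addr)
{
    for (size_t i = 0; i < n; i++) {
        if (addr >= regs[i].guest_addr &&
            addr - regs[i].guest_addr < regs[i].size)
            return regs[i].host_addr + (addr - regs[i].guest_addr);
    }
    return 0;
}
```

With 1G huge pages a 4G guest needs only a handful of segments, whereas 4K pages could produce many thousands of entries to scan for every descriptor.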

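27.2. Vhost-user Implementation

Vhost-user uses Unix domain sockets to pass messages. This means the DPDK vhost-user implementation can take one of two roles:

- DPDK vhost-user acts as the server:

  DPDK creates a Unix domain socket server file and listens for connections from the frontend.

  Note that this is the default mode, and the only mode before DPDK v16.07.

- DPDK vhost-user acts as the client:

  Unlike the server mode, this mode does not create the socket file; it only tries to connect to the server, which is responsible for creating the file instead.

  When the DPDK vhost-user application restarts, DPDK vhost-user tries to connect to the server again. This is how the "reconnect" feature works.

Note

- The "reconnect" feature requires QEMU v2.7 or above.

- The features vhost supports must be exactly the same before and after the restart. For example, if TSO is disabled before the restart and enabled after it, undefined errors will result.

Whichever mode is used, once a connection is established, DPDK vhost-user starts receiving and processing vhost messages from QEMU.

For messages that carry a file descriptor, the descriptor can be used directly in the vhost process, because the Unix domain socket has already installed it there.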
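A descriptor is "installed" in the receiving process because Unix domain sockets can carry open file descriptors as SCM_RIGHTS ancillary data. The sketch below sends one end of a pipe across a socketpair and then uses the received descriptor. It stays within a single process for brevity, and the function names `send_fd`, `recv_fd` and `fd_passing_demo` are hypothetical; this is not the DPDK or QEMU code.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

/* Send one byte of data plus one file descriptor as ancillary data. */
static int send_fd(int sock, int fd)
{
    char byte = 0;
    struct iovec iov = { .iov_base = &byte, .iov_len = 1 };
    union { struct cmsghdr align; char buf[CMSG_SPACE(sizeof(int))]; } ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;

    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

/* Receive the byte and return the descriptor the kernel installed, or -1. */
static int recv_fd(int sock)
{
    char byte;
    struct iovec iov = { .iov_base = &byte, .iov_len = 1 };
    union { struct cmsghdr align; char buf[CMSG_SPACE(sizeof(int))]; } ctrl;
    struct msghdr msg;
    int fd = -1;

    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    if (recvmsg(sock, &msg, 0) != 1)
        return -1;
    for (struct cmsghdr *c = CMSG_FIRSTHDR(&msg); c != NULL; c = CMSG_NXTHDR(&msg, c)) {
        if (c->cmsg_level == SOL_SOCKET && c->cmsg_type == SCM_RIGHTS) {
            memcpy(&fd, CMSG_DATA(c), sizeof(fd));
            break;
        }
    }
    return fd;
}

/* Round trip: pass a pipe's write end over a socketpair, then write through it. */
static int fd_passing_demo(void)
{
    int sv[2], p[2], received = -1, ok = -1;
    char c = 0;

    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) < 0)
        return -1;
    if (pipe(p) < 0) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }
    if (send_fd(sv[0], p[1]) == 0 && (received = recv_fd(sv[1])) >= 0) {
        if (write(received, "k", 1) == 1 && read(p[0], &c, 1) == 1 && c == 'k')
            ok = 0;
        close(received);
    }
    close(p[0]);
    close(p[1]);
    close(sv[0]);
    close(sv[1]);
    return ok;
}
```

The received descriptor usually has a different number than the one that was sent, but it refers to the same open file, which is what lets the backend mmap guest memory or wait on a kick eventfd.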

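The vhost messages currently supported include:

- VHOST_SET_MEM_TABLE
- VHOST_SET_VRING_KICK
- VHOST_SET_VRING_CALL
- VHOST_SET_LOG_FD
- VHOST_SET_VRING_ERR

For the VHOST_SET_MEM_TABLE message, QEMU sends information about each memory region, together with its file descriptor, in the ancillary data of the message. The file descriptor is used to map that region.

VHOST_SET_VRING_KICK is used as the signal to put the vhost device into the data plane, and VHOST_GET_VRING_BASE is used as the signal to remove the vhost device from the data plane.

When the socket connection is closed, vhost destroys the device.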

27.3. vSwitch with Vhost Support

For more vhost details and how to support vhost in a vSwitch, see the DPDK Sample Applications Guide.

DPDK vhost does not need vhost-net

[root@localhost ~]# lsof /dev/vhost-net

[root@localhost ~]# ps -elf | grep qemu

3 S root 49916 1 24 80 0 - 94022 poll_s 02:29 ? 00:00:19 qemu-system-aarch64 -name vm2 -daemonize -enable-kvm -M virt -cpu host -smp 16 -m 4096 -object memory-backend-file,id=mem,size=4096M,mem-path=/mnt/huge,share=on -numa node,memdev=mem -mem-prealloc -drive file=vhuser-test1.qcow2 -global virtio-blk-device.scsi=off -device virtio-scsi-device,id=scsi -kernel vmlinuz-4.18 --append console=ttyAMA0 root=UUID=6a09973e-e8fd-4a6d-a8c0-1deb9556f477 iommu=pt intel_iommu=on iommu.passthrough=1 -initrd initramfs-4.18 -serial telnet:localhost:4322,server,nowait -monitor telnet:localhost:4321,server,nowait -chardev socket,id=char0,path=/tmp/vhost1,server -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce -device virtio-net-pci,netdev=netdev0,mac=52:54:00:00:00:01,mrg_rxbuf=on,rx_queue_size=256,tx_queue_size=256 -vnc :10

0 S root 49991 49249 0 80 0 - 1729 pipe_w 02:30 pts/3 00:00:00 grep --color=auto qemu

[root@localhost ~]#

Every handle open on /tmp/vhost1 belongs to QEMU:

[root@localhost test-pmd]# lsof /tmp/vhost1
COMMAND     PID USER   FD   TYPE             DEVICE SIZE/OFF   NODE NAME
qemu-syst 49916 root   14u  unix 0xffffa05fdf7e7980      0t0 371313 /tmp/vhost1
qemu-syst 49916 root   16u  unix 0xffff805fca51ea00      0t0 371331 /tmp/vhost1
[root@localhost test-pmd]#

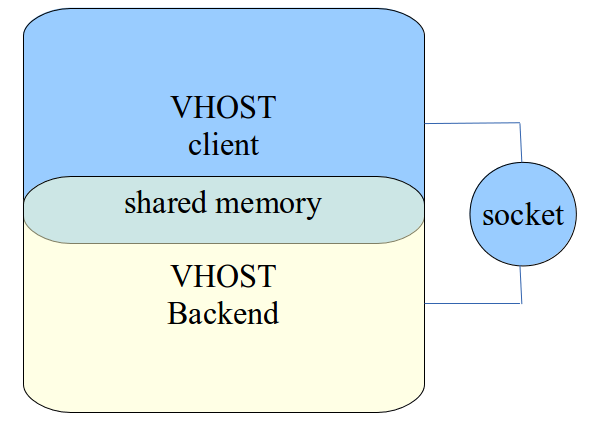

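vhost-user follows a client/server model and uses a UNIX domain socket for event notification and data exchange between processes. Compared with the ioctl interface used by vhost, the socket interface of vhost-user greatly simplifies the operations.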

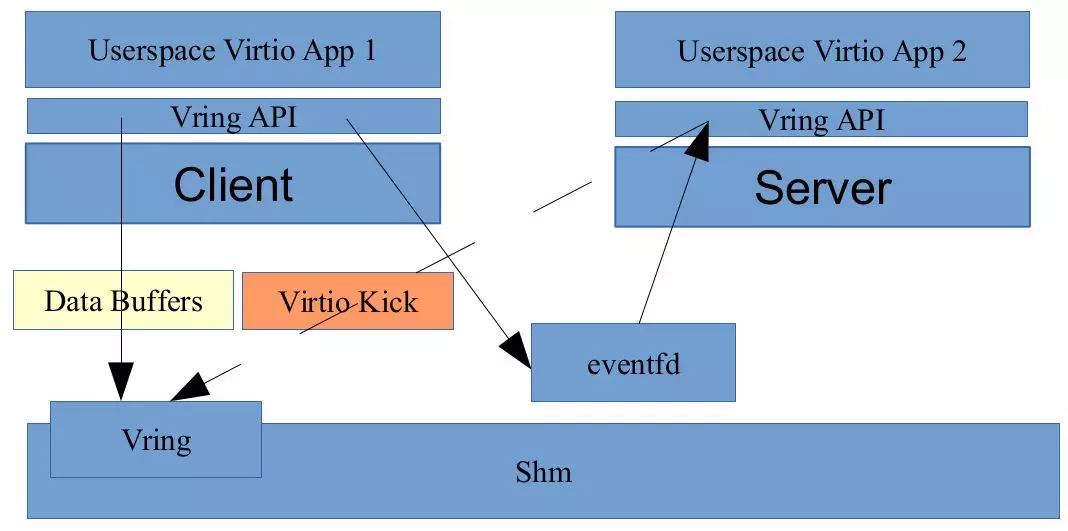

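vhost-user is built on vring, a general-purpose shared-memory communication scheme: the client and the server only need to implement the required functions against the interface vring provides. In the usual arrangement the client lives in the guest OS, typically as part of the virtio driver, while the server lives in qemu, or in a data plane such as OVS or Snabbswitch.

When qemu is used as the vhost-user server implementation, the -mem-path and -netdev options must be given when starting qemu, for example: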

$ qemu -m 1024 -mem-path /hugetlbfs,prealloc=on,share=on \
    -netdev type=vhost-user,id=net0,file=/path/to/socket \
    -device virtio-net-pci,netdev=net0

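Specifying -mem-path makes qemu back the guest OS memory with a file created under that path. The share=on option lets other processes map that file, which means they can access guest OS memory; this is how the memory is shared.

-netdev type=vhost-user selects the communication scheme, and file=/path/to/socket names the socket file.

Once qemu has started, it first initializes the vrings and uses the socket to set up the shared memory regions and the event mechanism between client and server. The client then notifies the server of virtio kick events through an eventfd, and the server responds through an eventfd as well, which completes the data exchange.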
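The kick and call notifications described above are plain eventfd operations: the notifier writes an 8-byte counter increment and the waiter reads the counter back, which also resets it. A minimal sketch on Linux follows; `demo_kick` and `demo_drain` are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <sys/types.h>
#include <unistd.h>

/* Signal the peer by adding 1 to the eventfd counter. */
static int demo_kick(int efd)
{
    uint64_t one = 1;

    return write(efd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Consume pending notifications. Returns how many kicks were coalesced
 * into the counter, or 0 if nothing was pending (non-blocking fd). */
static uint64_t demo_drain(int efd)
{
    uint64_t count = 0;

    if (read(efd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return 0;
    return count;
}
```

Several kicks issued before the peer wakes up are coalesced into one readable event, so the consumer must process everything available in the ring rather than one entry per notification.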

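/* drivers/net/virtio/virtio_user/vhost_kernel.c */

/* vhost kernel ioctls */
#define VHOST_VIRTIO 0xAF
/* Return the subset of virtio-net features that vhost supports */
#define VHOST_GET_FEATURES _IOR(VHOST_VIRTIO, 0x00, __u64)
/* Check the feature mask and set the features common to vhost and the virtio frontend; a feature takes effect only if both sides support it */
#define VHOST_SET_FEATURES _IOW(VHOST_VIRTIO, 0x00, __u64)
/* Make the current process the owner of the device */
#define VHOST_SET_OWNER _IO(VHOST_VIRTIO, 0x01)
/* Release the current process's ownership of the device */
#define VHOST_RESET_OWNER _IO(VHOST_VIRTIO, 0x02)
/* Set the memory layout, used for address translation when sending and receiving packets */
#define VHOST_SET_MEM_TABLE _IOW(VHOST_VIRTIO, 0x03, struct vhost_memory_kernel)
/* The next two macros are used for guest live migration */
#define VHOST_SET_LOG_BASE _IOW(VHOST_VIRTIO, 0x04, __u64)
#define VHOST_SET_LOG_FD _IOW(VHOST_VIRTIO, 0x07, int)
/* vhost records the size of each virtqueue */
#define VHOST_SET_VRING_NUM _IOW(VHOST_VIRTIO, 0x10, struct vhost_vring_state)
/* qemu sends the virtual addresses of the virtqueue structures; vhost translates them into its own virtual addresses */
#define VHOST_SET_VRING_ADDR _IOW(VHOST_VIRTIO, 0x11, struct vhost_vring_addr)
/* Pass the initial index; vhost uses it to find the first descriptor */
#define VHOST_SET_VRING_BASE _IOW(VHOST_VIRTIO, 0x12, struct vhost_vring_state)
/* Send the virtqueue's current available index to qemu */
#define VHOST_GET_VRING_BASE _IOWR(VHOST_VIRTIO, 0x12, struct vhost_vring_state)
/* Pass an eventfd file descriptor. When the guest has new data to send, it uses this
 * descriptor to tell vhost to pick up the data and send it to its destination; vhost uses
 * the eventfd proxy module to move the descriptor from the qemu context into its own
 * process context */
#define VHOST_SET_VRING_KICK _IOW(VHOST_VIRTIO, 0x20, struct vhost_vring_file)
/* Also passes an eventfd file descriptor. It lets vhost notify the guest by interrupt,
 * once vhost has finished receiving new packets, that packets are ready to be received.
 * The eventfd proxy module moves the descriptor from the qemu context into vhost's own
 * process context */
#define VHOST_SET_VRING_CALL _IOW(VHOST_VIRTIO, 0x21, struct vhost_vring_file)
/* Defined in the code but not used */
#define VHOST_SET_VRING_ERR _IOW(VHOST_VIRTIO, 0x22, struct vhost_vring_file)
/* Used to support virtio-user */
#define VHOST_NET_SET_BACKEND _IOW(VHOST_VIRTIO, 0x30, struct vhost_vring_file)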
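In the list above, VHOST_GET_FEATURES and VHOST_SET_FEATURES share command number 0x00, and VHOST_SET_VRING_BASE and VHOST_GET_VRING_BASE share 0x12. They remain distinct requests because the _IOR/_IOW/_IOWR macros also encode the transfer direction and argument size. The sketch below decodes those fields on Linux. The DEMO_ macros mirror two definitions from the list (with uint64_t in place of __u64), and the wrapper functions are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <linux/ioctl.h>

#define DEMO_VHOST_VIRTIO 0xAF
#define DEMO_GET_FEATURES _IOR(DEMO_VHOST_VIRTIO, 0x00, uint64_t)
#define DEMO_SET_FEATURES _IOW(DEMO_VHOST_VIRTIO, 0x00, uint64_t)

/* Command number within the 0xAF group. */
static unsigned int demo_nr(unsigned long req)   { return _IOC_NR(req); }
/* Group ("magic") byte, 0xAF for vhost. */
static unsigned int demo_type(unsigned long req) { return _IOC_TYPE(req); }
/* Direction bits: _IOC_READ, _IOC_WRITE or both. */
static unsigned int demo_dir(unsigned long req)  { return _IOC_DIR(req); }
/* Size in bytes of the argument the ioctl transfers. */
static unsigned int demo_size(unsigned long req) { return _IOC_SIZE(req); }
```

Decoding a request this way is a quick sanity check when mapping a vhost-user message to the kernel ioctl it corresponds to.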

RTE_PMD_REGISTER_VDEV(net_virtio_user, virtio_user_driver);

static struct rte_vdev_driver virtio_user_driver = {
    .probe = virtio_user_pmd_probe,
    .remove = virtio_user_pmd_remove,
};

virtio_user_pmd_probe-->virtio_user_dev_init-->virtio_user_dev_setup

virtio_user_dev_init(struct virtio_user_dev *dev, char *path, int queues,
                     int cq, int queue_size, const char *mac, char **ifname,
                     int server, int mrg_rxbuf, int in_order, int packed_vq)
{
    pthread_mutex_init(&dev->mutex, NULL);
    strlcpy(dev->path, path, PATH_MAX);
    dev->started = 0;
    dev->max_queue_pairs = queues;
    dev->queue_pairs = 1; /* mq disabled by default */
    dev->queue_size = queue_size;
    dev->is_server = server;
    dev->mac_specified = 0;
    dev->frontend_features = 0;
    dev->unsupported_features = ~VIRTIO_USER_SUPPORTED_FEATURES;
    parse_mac(dev, mac);

    if (*ifname) {
        dev->ifname = *ifname;
        *ifname = NULL;
    }

    if (virtio_user_dev_setup(dev) < 0) {
        PMD_INIT_LOG(ERR, "backend set up fails");
        return -1;
    }

    if (!dev->is_server) {
        if (dev->ops->send_request(dev, VHOST_USER_SET_OWNER, NULL) < 0) {
            PMD_INIT_LOG(ERR, "set_owner fails: %s", strerror(errno));
            return -1;
        }

        if (dev->ops->send_request(dev, VHOST_USER_GET_FEATURES,
                                   &dev->device_features) < 0) {
            PMD_INIT_LOG(ERR, "get_features failed: %s", strerror(errno));
            return -1;
        }
    } else {
        /* We just pretend vhost-user can support all these features.
         * Note that this could be problematic that if some feature is
         * negotiated but not supported by the vhost-user which comes
         * later.
         */
        dev->device_features = VIRTIO_USER_SUPPORTED_FEATURES;
    }
}

virtio_user_dev_setup(struct virtio_user_dev *dev)
{
    uint32_t q;

    dev->vhostfd = -1;
    dev->vhostfds = NULL;
    dev->tapfds = NULL;

    if (dev->is_server) {
        if (access(dev->path, F_OK) == 0 &&
            !is_vhost_user_by_type(dev->path)) {
            PMD_DRV_LOG(ERR, "Server mode doesn't support vhost-kernel!");
            return -1;
        }
        dev->ops = &virtio_ops_user;
    } else {
        if (is_vhost_user_by_type(dev->path)) {
            dev->ops = &virtio_ops_user;
        } else {
            dev->ops = &virtio_ops_kernel;

            dev->vhostfds = malloc(dev->max_queue_pairs * sizeof(int));
            dev->tapfds = malloc(dev->max_queue_pairs * sizeof(int));
            if (!dev->vhostfds || !dev->tapfds) {
                PMD_INIT_LOG(ERR, "Failed to malloc");
                return -1;
            }

            for (q = 0; q < dev->max_queue_pairs; ++q) {
                dev->vhostfds[q] = -1;
                dev->tapfds[q] = -1;
            }
        }
    }

    if (dev->ops->setup(dev) < 0)
        return -1;

    if (virtio_user_dev_init_notify(dev) < 0)
        return -1;

    if (virtio_user_fill_intr_handle(dev) < 0)
        return -1;

    return 0;
}

virtio_ops_kernel

struct virtio_user_backend_ops virtio_ops_kernel = {
    .setup = vhost_kernel_setup,
    .send_request = vhost_kernel_ioctl,
    .enable_qp = vhost_kernel_enable_queue_pair
};

vhost_kernel_ioctl(struct virtio_user_dev *dev,
                   enum vhost_user_request req,
                   void *arg)
{
    int ret = -1;
    unsigned int i;
    uint64_t req_kernel;
    struct vhost_memory_kernel *vm = NULL;
    int vhostfd;
    unsigned int queue_sel;
    unsigned int features;

    PMD_DRV_LOG(INFO, "%s", vhost_msg_strings[req]);

    req_kernel = vhost_req_user_to_kernel[req];

    if (req_kernel == VHOST_SET_MEM_TABLE) {
        vm = prepare_vhost_memory_kernel();
        if (!vm)
            return -1;
        arg = (void *)vm;
    }

    if (req_kernel == VHOST_SET_FEATURES) {
        /* We don't need memory protection here */
        *(uint64_t *)arg &= ~(1ULL << VIRTIO_F_IOMMU_PLATFORM);

        /* VHOST kernel does not know about below flags */
        *(uint64_t *)arg &= ~VHOST_KERNEL_GUEST_OFFLOADS_MASK;
        *(uint64_t *)arg &= ~VHOST_KERNEL_HOST_OFFLOADS_MASK;

        *(uint64_t *)arg &= ~(1ULL << VIRTIO_NET_F_MQ);
    }

    switch (req_kernel) {
    case VHOST_SET_VRING_NUM:
    case VHOST_SET_VRING_ADDR:
    case VHOST_SET_VRING_BASE:
    case VHOST_GET_VRING_BASE:
    case VHOST_SET_VRING_KICK:
    case VHOST_SET_VRING_CALL:
        queue_sel = *(unsigned int *)arg;
        vhostfd = dev->vhostfds[queue_sel / 2];
        *(unsigned int *)arg = queue_sel % 2;
        PMD_DRV_LOG(DEBUG, "vhostfd=%d, index=%u",
                    vhostfd, *(unsigned int *)arg);
        break;
    default:
        vhostfd = -1;
    }

    if (vhostfd == -1) {
        for (i = 0; i < dev->max_queue_pairs; ++i) {
            if (dev->vhostfds[i] < 0)
                continue;

            ret = ioctl(dev->vhostfds[i], req_kernel, arg);
            if (ret < 0)
                break;
        }
    } else {
        ret = ioctl(vhostfd, req_kernel, arg);
    }

    if (!ret && req_kernel == VHOST_GET_FEATURES) {
        features = tap_support_features();
        /* with tap as the backend, all these features are supported
         * but not claimed by vhost-net, so we add them back when
         * reporting to upper layer.
         */
        if (features & IFF_VNET_HDR) {
            *((uint64_t *)arg) |= VHOST_KERNEL_GUEST_OFFLOADS_MASK;
            *((uint64_t *)arg) |= VHOST_KERNEL_HOST_OFFLOADS_MASK;
        }

        /* vhost_kernel will not declare this feature, but it does
         * support multi-queue.
         */
        if (features & IFF_MULTI_QUEUE)
            *(uint64_t *)arg |= (1ull << VIRTIO_NET_F_MQ);
    }

    if (vm)
        free(vm);

    if (ret < 0)
        PMD_DRV_LOG(ERR, "%s failed: %s",
                    vhost_msg_strings[req], strerror(errno));

    return ret;
}
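In vhost_kernel_ioctl above, each queue pair has its own vhost fd, so a global virtqueue number is split into "which fd" (queue_sel / 2) and "which ring on that fd" (queue_sel % 2). A minimal sketch of that mapping follows; `struct demo_vring_target` and `demo_map_queue` are hypothetical names.

```c
#include <assert.h>

/* Hypothetical result type: which queue pair (and thus which vhost fd)
 * a virtqueue belongs to, and its index within that pair. */
struct demo_vring_target {
    unsigned int pair;  /* index into the per-pair fd array */
    unsigned int index; /* 0 or 1: ring number within the pair */
};

/* Same arithmetic as the switch statement in vhost_kernel_ioctl. */
static struct demo_vring_target demo_map_queue(unsigned int queue_sel)
{
    struct demo_vring_target t;

    t.pair = queue_sel / 2;
    t.index = queue_sel % 2;
    return t;
}
```

For example, virtqueue 5 maps to queue pair 2, ring 1. Under the virtio-net numbering, where even virtqueues are receive queues and odd ones are transmit queues, that is the transmit ring of the third pair.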

virtio_ops_user

struct virtio_user_backend_ops virtio_ops_user = {
    .setup = vhost_user_setup,
    .send_request = vhost_user_sock,
    .enable_qp = vhost_user_enable_queue_pair
};

(gdb) set args -l 2,4,6,8,10,12,14,16,18 --socket-mem 1024,1024 -n 4 --vdev 'net_vhost0,iface=/tmp/vhost1,queues=4,client=1,iommu-support=1' -- --portmask=0x1 -i --rxd=512 --txd=512 --rxq=1 --txq=1 --nb-cores=8 --forward-mode=txonly
(gdb) b rte_vhost_dequeue_burst
Breakpoint 1 at 0x5b0014: file /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c, line 2179.
(gdb) b rte_vhost_enqueue_burst
Breakpoint 2 at 0x5a19d0: file /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c, line 1239.
(gdb) r
Starting program: /data1/dpdk-19.11/arm64-armv8a-linuxapp-gcc/build/app/test-pmd/testpmd -l 2,4,6,8,10,12,14,16,18 --socket-mem 1024,1024 -n 4 --vdev 'net_vhost0,iface=/tmp/vhost1,queues=4,client=1,iommu-support=1' -- --portmask=0x1 -i --rxd=512 --txd=512 --rxq=1 --txq=1 --nb-cores=8 --forward-mode=txonly

Run start at the testpmd prompt:

(gdb) bt
#0  rte_vhost_dequeue_burst (vid=0, queue_id=1, mbuf_pool=0x15f83b580, pkts=0xffffffff7ef8, count=32) at /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c:2179
#1  0x0000000000a7688c in eth_vhost_rx (q=0x140aa8f00, bufs=0xffffffff7ef8, nb_bufs=512) at /data1/dpdk-19.11/drivers/net/vhost/rte_eth_vhost.c:395
#2  0x000000000048746c in rte_eth_rx_burst (port_id=0, queue_id=0, rx_pkts=0xffffffff7ef8, nb_pkts=512) at /data1/dpdk-19.11/arm64-armv8a-linuxapp-gcc/include/rte_ethdev.h:4387
#3  0x000000000048a21c in flush_fwd_rx_queues () at /data1/dpdk-19.11/app/test-pmd/testpmd.c:1768
#4  0x000000000048a908 in start_packet_forwarding (with_tx_first=0) at /data1/dpdk-19.11/app/test-pmd/testpmd.c:1940
#5  0x000000000049913c in cmd_start_parsed (parsed_result=0xffffffffafd0, cl=0x1496780, data=0x0) at /data1/dpdk-19.11/app/test-pmd/cmdline.c:7196
#6  0x0000000000639928 in cmdline_parse (cl=0x1496780, buf=0x14967c8 "start ") at /data1/dpdk-19.11/lib/librte_cmdline/cmdline_parse.c:295
#7  0x00000000006377e0 in cmdline_valid_buffer (rdl=0x1496790, buf=0x14967c8 "start ", size=8) at /data1/dpdk-19.11/lib/librte_cmdline/cmdline.c:31
#8  0x000000000063cb18 in rdline_char_in (rdl=0x1496790, c=10 '\n') at /data1/dpdk-19.11/lib/librte_cmdline/cmdline_rdline.c:421
#9  0x0000000000637c18 in cmdline_in (cl=0x1496780, buf=0xfffffffff13f "\nP\361\377\377\377\377", size=1) at /data1/dpdk-19.11/lib/librte_cmdline/cmdline.c:148
#10 0x0000000000637ed4 in cmdline_interact (cl=0x1496780) at /data1/dpdk-19.11/lib/librte_cmdline/cmdline.c:227
#11 0x00000000004a5258 in prompt () at /data1/dpdk-19.11/app/test-pmd/cmdline.c:19504
#12 0x000000000048eadc in main (argc=9, argv=0xfffffffff350) at /data1/dpdk-19.11/app/test-pmd/testpmd.c:3532

Breakpoint 2, rte_vhost_enqueue_burst (vid=0, queue_id=0, pkts=0xffffbd40bdd0, count=32) at /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c:1239
1239        struct virtio_net *dev = get_device(vid);
(gdb) bt
#0  rte_vhost_enqueue_burst (vid=0, queue_id=0, pkts=0xffffbd40bdd0, count=32) at /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c:1239
#1  0x0000000000a76b5c in eth_vhost_tx (q=0x140aa9080, bufs=0xffffbd40bdd0, nb_bufs=32) at /data1/dpdk-19.11/drivers/net/vhost/rte_eth_vhost.c:462
#2  0x00000000004c1160 in rte_eth_tx_burst (port_id=0, queue_id=0, tx_pkts=0xffffbd40bdd0, nb_pkts=32) at /data1/dpdk-19.11/arm64-armv8a-linuxapp-gcc/include/rte_ethdev.h:4666
#3  0x00000000004c23a0 in pkt_burst_transmit (fs=0x15ff9b180) at /data1/dpdk-19.11/app/test-pmd/txonly.c:302
#4  0x000000000048a3b0 in run_pkt_fwd_on_lcore (fc=0x15fb43e80, pkt_fwd=0x4c1b9c <pkt_burst_transmit>) at /data1/dpdk-19.11/app/test-pmd/testpmd.c:1805
#5  0x000000000048a4e4 in start_pkt_forward_on_core (fwd_arg=0x15fb43e80) at /data1/dpdk-19.11/app/test-pmd/testpmd.c:1831
#6  0x000000000060c408 in eal_thread_loop (arg=0x0) at /data1/dpdk-19.11/lib/librte_eal/linux/eal/eal_thread.c:153
#7  0x0000ffffbe617d38 in start_thread (arg=0xffffbd40d910) at pthread_create.c:309
#8  0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
(gdb)

(gdb) b vhost_user_set_vring_kick
Breakpoint 5 at 0x59664c: file /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c, line 1795.
(gdb) b vhost_user_set_vring_call
Breakpoint 6 at 0x595dfc: file /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c, line 1563.
(gdb) r

Breakpoint 6, vhost_user_set_vring_call (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563
1563        struct virtio_net *dev = *pdev;
(gdb) bt
#0  vhost_user_set_vring_call (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563
#1  0x0000000000597fdc in vhost_user_msg_handler (vid=0, fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:2689
#2  0x000000000058b678 in vhost_user_read_cb (connfd=68, dat=0x1492ba0, remove=0xffffb8b7d064) at /data1/dpdk-19.11/lib/librte_vhost/socket.c:306
#3  0x0000000000588790 in fdset_event_dispatch (arg=0xec4c08 <vhost_user+8192>) at /data1/dpdk-19.11/lib/librte_vhost/fd_man.c:286
#4  0x000000000062639c in rte_thread_init (arg=0x1495f50) at /data1/dpdk-19.11/lib/librte_eal/common/eal_common_thread.c:165
#5  0x0000ffffbe617d38 in start_thread (arg=0xffffb8b7d910) at pthread_create.c:309
#6  0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
(gdb)

Breakpoint 5, vhost_user_set_vring_kick (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1795
1795        struct virtio_net *dev = *pdev;
(gdb) bt
#0  vhost_user_set_vring_kick (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1795
#1  0x0000000000597fdc in vhost_user_msg_handler (vid=0, fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:2689
#2  0x000000000058b678 in vhost_user_read_cb (connfd=68, dat=0x1492ba0, remove=0xffffb8b7d064) at /data1/dpdk-19.11/lib/librte_vhost/socket.c:306
#3  0x0000000000588790 in fdset_event_dispatch (arg=0xec4c08 <vhost_user+8192>) at /data1/dpdk-19.11/lib/librte_vhost/fd_man.c:286
#4  0x000000000062639c in rte_thread_init (arg=0x1495f50) at /data1/dpdk-19.11/lib/librte_eal/common/eal_common_thread.c:165
#5  0x0000ffffbe617d38 in start_thread (arg=0xffffb8b7d910) at pthread_create.c:309
#6  0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
(gdb) c
Continuing.
VHOST_CONFIG: vring kick idx:0 file:75
VHOST_CONFIG: reallocate vq from 0 to 2 node
VHOST_CONFIG: reallocate dev from 0 to 2 node
VHOST_CONFIG: read message VHOST_USER_SET_VRING_CALL

Breakpoint 6, vhost_user_set_vring_call (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563
1563        struct virtio_net *dev = *pdev;
(gdb) c
Continuing.
VHOST_CONFIG: vring call idx:0 file:76
VHOST_CONFIG: read message VHOST_USER_SET_VRING_CALL

Breakpoint 6, vhost_user_set_vring_call (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563
1563        struct virtio_net *dev = *pdev;
(gdb) bt
#0  vhost_user_set_vring_call (pdev=0xffffb8b7cfa8, msg=0xffffb8b7cd00, main_fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563
#1  0x0000000000597fdc in vhost_user_msg_handler (vid=0, fd=68) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:2689
#2  0x000000000058b678 in vhost_user_read_cb (connfd=68, dat=0x1492ba0, remove=0xffffb8b7d064) at /data1/dpdk-19.11/lib/librte_vhost/socket.c:306
#3  0x0000000000588790 in fdset_event_dispatch (arg=0xec4c08 <vhost_user+8192>) at /data1/dpdk-19.11/lib/librte_vhost/fd_man.c:286
#4  0x000000000062639c in rte_thread_init (arg=0x1495f50) at /data1/dpdk-19.11/lib/librte_eal/common/eal_common_thread.c:165
#5  0x0000ffffbe617d38 in start_thread (arg=0xffffb8b7d910) at pthread_create.c:309
#6  0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
(gdb) info break
Num     Type           Disp Enb Address            What
3       breakpoint     keep y   0x0000000000a70b14 in vhost_kernel_ioctl at /data1/dpdk-19.11/drivers/net/virtio/virtio_user/vhost_kernel.c:182
4       breakpoint     keep y   0x0000000000a70b14 in vhost_kernel_ioctl at /data1/dpdk-19.11/drivers/net/virtio/virtio_user/vhost_kernel.c:182
5       breakpoint     keep y   0x000000000059664c in vhost_user_set_vring_kick at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1795
        breakpoint already hit 1 time
6       breakpoint     keep y   0x0000000000595dfc in vhost_user_set_vring_call at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563
        breakpoint already hit 4 times
(gdb) delete all
warning: bad breakpoint number at or near 'all'
(gdb) delete 3-6
(gdb) info break
No breakpoints or watchpoints.

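vhost-user is vhost-kernel moved back into user space. The basic idea is much the same as vhost-kernel, except that the part that used to run in the kernel is now another user process, such as Snabbswitch or DPDK. Under vhost-user, a UNIX domain socket replaces the device file of kernel mode for communication between the processes (qemu and the vhost-user app), and guest RAM is mapped into the address space of the vhost-user app with mmap to share memory. The rest works essentially as in vhost-kernel.

This article analyzes the three key mechanisms involved: message passing between qemu and the vhost-user app, sharing of guest memory with the vhost-user app, and notification between the guest and the vhost-user app.

I. Message passing between qemu and the vhost-user app

Messages between qemu and the vhost-user app travel over a UNIX domain socket. Each message corresponds to one of the ioctls used by vhost-kernel, so the vhost-user app must provide its own handler for every one of them. In DPDK this is done by the vhost_user_msg_handler function in vhost_user.c. The core data structure is VhostUserMsg, which carries each message; the structure itself is not complicated.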

typedef struct VhostUserMsg {
    union {
        uint32_t master; /* a VhostUserRequest value, sent by qemu */
        uint32_t slave;  /* a VhostUserSlaveRequest value, sent by e.g. dpdk */
    } request;

#define VHOST_USER_VERSION_MASK 0x3
#define VHOST_USER_REPLY_MASK (0x1 << 2)
#define VHOST_USER_NEED_REPLY (0x1 << 3)
    uint32_t flags;
    uint32_t size; /* the following payload size */
    union {
#define VHOST_USER_VRING_IDX_MASK 0xff
#define VHOST_USER_VRING_NOFD_MASK (0x1<<8)
        uint64_t u64;
        struct vhost_vring_state state;
        struct vhost_vring_addr addr;
        VhostUserMemory memory;
        VhostUserLog log;
        struct vhost_iotlb_msg iotlb;
        VhostUserCryptoSessionParam crypto_session;
        VhostUserVringArea area;
        VhostUserInflight inflight;
    } payload;
    int fds[VHOST_MEMORY_MAX_NREGIONS];
    int fd_num;
} __attribute((packed)) VhostUserMsg;

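Since this is message passing, the structure has to carry the message type, the message content and the size of that content, and these make up most of the structure. The first union identifies the message type. The flags field that follows describes properties of the message itself, such as whether a reply is required. size is the size of the payload, and the next union holds the message content. Finally, fds is the array of file descriptors, one for each guest RAM memory region. The message types are: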

typedef enum VhostUserRequest {
    VHOST_USER_NONE = 0,
    VHOST_USER_GET_FEATURES = 1,
    VHOST_USER_SET_FEATURES = 2,
    VHOST_USER_SET_OWNER = 3,
    VHOST_USER_RESET_OWNER = 4,
    VHOST_USER_SET_MEM_TABLE = 5,
    VHOST_USER_SET_LOG_BASE = 6,
    VHOST_USER_SET_LOG_FD = 7,
    VHOST_USER_SET_VRING_NUM = 8,
    VHOST_USER_SET_VRING_ADDR = 9,
    VHOST_USER_SET_VRING_BASE = 10,
    VHOST_USER_GET_VRING_BASE = 11,
    VHOST_USER_SET_VRING_KICK = 12,
    VHOST_USER_SET_VRING_CALL = 13,
    VHOST_USER_SET_VRING_ERR = 14,
    VHOST_USER_GET_PROTOCOL_FEATURES = 15,
    VHOST_USER_SET_PROTOCOL_FEATURES = 16,
    VHOST_USER_GET_QUEUE_NUM = 17,
    VHOST_USER_SET_VRING_ENABLE = 18,
    VHOST_USER_SEND_RARP = 19,
    VHOST_USER_NET_SET_MTU = 20,
    VHOST_USER_SET_SLAVE_REQ_FD = 21,
    VHOST_USER_IOTLB_MSG = 22,
    VHOST_USER_CRYPTO_CREATE_SESS = 26,
    VHOST_USER_CRYPTO_CLOSE_SESS = 27,
    VHOST_USER_POSTCOPY_ADVISE = 28,
    VHOST_USER_POSTCOPY_LISTEN = 29,
    VHOST_USER_POSTCOPY_END = 30,
    VHOST_USER_GET_INFLIGHT_FD = 31,
    VHOST_USER_SET_INFLIGHT_FD = 32,
    VHOST_USER_MAX = 33
} VhostUserRequest;

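So far nothing is complicated. Next, look at how the messaging is initialized. The socket-file path is passed in as a command-line argument. In the main function of examples/vhost/, us_vhost_parse_socket_path parses the socket-file argument and stores it in the static array socket_files. main then loops over the socket files and calls rte_vhost_driver_register for each one to register the vhost driver. The core job of that function is to create a local socket for each socket-file, which it does through create_unix_socket. Inside the vhost library each socket is described by a vhost_user_socket structure. After the driver is registered, features are set according to the configuration. Finally, rte_vhost_driver_start starts the vhost driver; that function is worth a closer look.

The following function, vhost_register_unix_socket, is from SPDK.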

    int
    vhost_register_unix_socket(const char *path, const char *ctrl_name,
                               uint64_t virtio_features, uint64_t disabled_features,
                               uint64_t protocol_features)
    {
        struct stat file_stat;
    #ifndef SPDK_CONFIG_VHOST_INTERNAL_LIB
        uint64_t features = 0;
    #endif

        /* Register vhost driver to handle vhost messages. */
        if (stat(path, &file_stat) != -1) {
            if (!S_ISSOCK(file_stat.st_mode)) {
                SPDK_ERRLOG("Cannot create a domain socket at path \"%s\": "
                            "The file already exists and is not a socket.\n",
                            path);
                return -EIO;
            } else if (unlink(path) != 0) {
                SPDK_ERRLOG("Cannot create a domain socket at path \"%s\": "
                            "The socket already exists and failed to unlink.\n",
                            path);
                return -EIO;
            }
        }

        /* Creates the listening socket. */
        if (rte_vhost_driver_register(path, 0) != 0) {
            SPDK_ERRLOG("Could not register controller %s with vhost library\n", ctrl_name);
            SPDK_ERRLOG("Check if domain socket %s already exists\n", path);
            return -EIO;
        }
        if (rte_vhost_driver_set_features(path, virtio_features) ||
            rte_vhost_driver_disable_features(path, disabled_features)) {
            SPDK_ERRLOG("Couldn't set vhost features for controller %s\n", ctrl_name);

            rte_vhost_driver_unregister(path);
            return -EIO;
        }

        /* Register the notify_ops callbacks used once a socket connection is
         * established. new_device in g_spdk_vhost_ops runs
         * rc = vdev->backend->start_session(vsession); it is invoked from the
         * second half of vhost_user_msg_handler, which calls ops->new_device.
         */
        if (rte_vhost_driver_callback_register(path, &g_spdk_vhost_ops) != 0) {
            rte_vhost_driver_unregister(path);
            SPDK_ERRLOG("Couldn't register callbacks for controller %s\n", ctrl_name);
            return -EIO;
        }

    #ifndef SPDK_CONFIG_VHOST_INTERNAL_LIB
        rte_vhost_driver_get_protocol_features(path, &features);
        features |= protocol_features;
        rte_vhost_driver_set_protocol_features(path, features);
    #endif

        /* Start a listening thread and wait for client connection requests. */
        if (rte_vhost_driver_start(path) != 0) {
            SPDK_ERRLOG("Failed to start vhost driver for controller %s (%d): %s\n",
                        ctrl_name, errno, spdk_strerror(errno));
            rte_vhost_driver_unregister(path);
            return -EIO;
        }

        return 0;
    }

    int
    rte_vhost_driver_start(const char *path)
    {
        struct vhost_user_socket *vsocket;
        static pthread_t fdset_tid;

        pthread_mutex_lock(&vhost_user.mutex);
        /* Look up the vhost_user_socket structure for this path. */
        vsocket = find_vhost_user_socket(path);
        pthread_mutex_unlock(&vhost_user.mutex);

        if (!vsocket)
            return -1;

        /* Create one thread that monitors the fdset. */
        if (fdset_tid == 0) {
            int ret = pthread_create(&fdset_tid, NULL, fdset_event_dispatch,
                                     &vhost_user.fdset);
            if (ret < 0)
                RTE_LOG(ERR, VHOST_CONFIG,
                        "failed to create fdset handling thread");
        }

        if (vsocket->is_server)
            return vhost_user_start_server(vsocket);
        else
            return vhost_user_start_client(vsocket);
    }

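The argument is the path of the socket-file. The function first calls find_vhost_user_socket to look up the vhost_user_socket structure for that path; all vhost_user_socket structures are kept in an array inside the vhost_user data structure. If the socket exists, the function creates a thread (only on the first call) to handle the vhost-user fds, which is covered below; the thread runs fdset_event_dispatch. With that done, the socket is started. QEMU and vhost can each act as either server or client, but vhost is usually the server, so vhost_user_start_server is called here. This is ordinary socket programming: bind, then listen, nothing special. Afterwards it calls fdset_add, which belongs to a separate mechanism vhost uses to handle message fds: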

    static int
    vhost_user_start_server(struct vhost_user_socket *vsocket)
    {
        int fd = vsocket->socket_fd;
        const char *path = vsocket->path;

        ...
        ret = bind(fd, (struct sockaddr *)&vsocket->un, sizeof(vsocket->un));
        ret = listen(fd, MAX_VIRTIO_BACKLOG);
        ...
        /* Registers a handler for fd; it is called when fd becomes ready. */
        ret = fdset_add(&vhost_user.fdset, fd, vhost_user_server_new_connection,
                        NULL, vsocket);
        ...
    }
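The bind and listen steps elided above are plain UNIX domain socket setup. The following is a minimal, self-contained sketch of that setup, not the DPDK implementation; demo_listen_unix is a hypothetical helper name, and the socket path and backlog are whatever the caller supplies.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <unistd.h>

/* Hypothetical helper: create a listening UNIX domain socket at `path`.
 * Returns the listening fd on success, -1 on any error. */
static int demo_listen_unix(const char *path, int backlog)
{
    struct sockaddr_un un;
    int fd;

    assert(path != NULL);
    if (strlen(path) >= sizeof(un.sun_path))
        return -1; /* path does not fit into sun_path */

    fd = socket(AF_UNIX, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;

    memset(&un, 0, sizeof(un));
    un.sun_family = AF_UNIX;
    strcpy(un.sun_path, path); /* length was checked above */

    /* bind() fails with EADDRINUSE if the socket file already exists. */
    if (bind(fd, (struct sockaddr *)&un, sizeof(un)) < 0 ||
        listen(fd, backlog) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Because bind fails when the path already exists, callers usually remove a stale socket file first, which is what the SPDK function shown earlier does with stat and unlink.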

    int
    fdset_add(struct fdset *pfdset, int fd, fd_cb rcb, fd_cb wcb, void *dat)
    {
        int i;

        if (pfdset == NULL || fd == -1)
            return -1;

        pthread_mutex_lock(&pfdset->fd_mutex);
        i = pfdset->num < MAX_FDS ? pfdset->num++ : -1;
        if (i == -1) {
            fdset_shrink_nolock(pfdset);
            i = pfdset->num < MAX_FDS ? pfdset->num++ : -1;
            if (i == -1) {
                pthread_mutex_unlock(&pfdset->fd_mutex);
                return -2;
            }
        }

        fdset_add_fd(pfdset, i, fd, rcb, wcb, dat);
        pthread_mutex_unlock(&pfdset->fd_mutex);

        return 0;
    }

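In short, fdset_add registers a handler for the fd; when the fd becomes ready, the handler is called. Here the handler is vhost_user_server_new_connection. How is this implemented? Look at fdset_add_fd: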

    static void
    fdset_add_fd(struct fdset *pfdset, int idx, int fd,
                 fd_cb rcb, fd_cb wcb, void *dat)
    {
        struct fdentry *pfdentry = &pfdset->fd[idx];
        struct pollfd *pfd = &pfdset->rwfds[idx];

        pfdentry->fd = fd;
        pfdentry->rcb = rcb;
        pfdentry->wcb = wcb;
        pfdentry->dat = dat;

        pfd->fd = fd;
        pfd->events = rcb ? POLLIN : 0;
        pfd->events |= wcb ? POLLOUT : 0;
        pfd->revents = 0;
    }

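There are two parts here: an fdentry and a pollfd. The former stores the callback information; the latter is used for poll so that the thread can monitor the fd. The callback was passed as the third argument (rcb), so pfd->events is POLLIN. Back in the thread function fdset_event_dispatch: it polls the rwfds in vhost_user.fdset, and when an fd becomes ready it enters the handling path: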

    if (rcb && pfd->revents & (POLLIN | FDPOLLERR))
        rcb(fd, dat, &remove1);
    if (wcb && pfd->revents & (POLLOUT | FDPOLLERR))
        wcb(fd, dat, &remove2);

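The rcb here is the callback registered for the fd earlier. Returning to vhost_user_server_new_connection: when the fd for the socket-file becomes readable, this function is called, accepts the connection, and then calls vhost_user_add_connection. With the connection established, vhost has to set it up: create a virtio_net device and attach it to the connection, set the device name, and so on. The key step is adding a callback for the new connection fd. The previous callback only established the connection; once connected, a function is needed to process the messages on the socket, and that is vhost_user_read_cb. This is where message handling proper begins. vhost_user_read_cb calls vhost_user_msg_handler, the core function for processing socket messages. That completes the message-handling part.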
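The register-then-dispatch pattern described in this part can be sketched with poll directly. This is a simplified illustration under stated assumptions, not DPDK's fdset: every demo_* name is hypothetical, only read callbacks are supported, the callback takes two arguments instead of three, and there is no locking or removal.

```c
#include <assert.h>
#include <poll.h>
#include <stddef.h>
#include <unistd.h>

#define DEMO_MAX_FDS 16

typedef void (*demo_fd_cb)(int fd, void *dat);

/* Parallel arrays: pollfd for poll(), callback and context for dispatch. */
struct demo_fdset {
    struct pollfd pfds[DEMO_MAX_FDS];
    demo_fd_cb rcb[DEMO_MAX_FDS];
    void *dat[DEMO_MAX_FDS];
    int num;
};

/* Register a read callback for fd. Returns 0 on success, -1 otherwise. */
static int demo_fdset_add(struct demo_fdset *set, int fd, demo_fd_cb rcb, void *dat)
{
    assert(set != NULL);
    if (fd < 0 || rcb == NULL || set->num >= DEMO_MAX_FDS)
        return -1;
    set->pfds[set->num].fd = fd;
    set->pfds[set->num].events = POLLIN;
    set->pfds[set->num].revents = 0;
    set->rcb[set->num] = rcb;
    set->dat[set->num] = dat;
    set->num++;
    return 0;
}

/* One dispatch pass: poll all fds, call the callback of each ready one.
 * Returns the number of callbacks invoked, or -1 if poll() failed. */
static int demo_fdset_dispatch_once(struct demo_fdset *set, int timeout_ms)
{
    int i, called = 0;

    assert(set != NULL);
    if (poll(set->pfds, (nfds_t)set->num, timeout_ms) < 0)
        return -1;
    for (i = 0; i < set->num; i++) {
        if (set->pfds[i].revents & (POLLIN | POLLERR | POLLHUP)) {
            set->rcb[i](set->pfds[i].fd, set->dat[i]);
            called++;
        }
    }
    return called;
}

/* Example callback: consume one byte and count the event in *dat. */
static void demo_count_cb(int fd, void *dat)
{
    char c;

    if (read(fd, &c, 1) == 1)
        (*(int *)dat)++;
}
```

A dispatch thread would call demo_fdset_dispatch_once in a loop. Replacing the callback stored for an fd after accept is the analogue of switching from the new-connection handler to the message-reading handler.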

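II. Sharing guest memory with the vhost-user application

QEMU and vhost are now connected through a socket, but a socket carries only a limited amount of information, and what matters is the data itself. Is the data also sent over the socket? Of course not: the mode switches and data copies would bring the system to its knees. Shared memory is used instead. The core mechanism is similar to vhost-kernel: QEMU has to pass the guest memory layout to vhost-user in a message. The analysis starts there, in vhost_user_msg_handler: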

    case VHOST_USER_SET_MEM_TABLE:
        ret = vhost_user_set_mem_table(dev, &msg);
        break;

Before analyzing the function, look at a few data structures.

    /* Region structure as sent by the QEMU side. */
    typedef struct VhostUserMemoryRegion {
        uint64_t guest_phys_addr; /* GPA of the region */
        uint64_t memory_size;     /* size of the region */
        uint64_t userspace_addr;  /* HVA in the QEMU process */
        uint64_t mmap_offset;     /* offset into the mmap'd file */
    } VhostUserMemoryRegion;

    typedef struct VhostUserMemory {
        uint32_t nregions; /* number of regions */
        uint32_t padding;
        VhostUserMemoryRegion regions[VHOST_MEMORY_MAX_NREGIONS]; /* all regions */
    } VhostUserMemory;

On the vhost side, the corresponding data structure is:

    struct rte_vhost_mem_region {
        uint64_t guest_phys_addr; /* GPA of the region */
        uint64_t guest_user_addr; /* HVA in the QEMU process */
        uint64_t host_user_addr;  /* HVA in the vhost-user process */
        uint64_t size;            /* size of the region */
        void     *mmap_addr;      /* mmap base address */
        uint64_t mmap_size;
        int fd;                   /* fd backing the region */
    };

The fields are self-explanatory. The virtio_net structure holds a pointer to the rte_vhost_memory structure for the current connection:

    struct rte_vhost_memory {
        uint32_t nregions;
        struct rte_vhost_mem_region regions[];
    };

Now for the code. There is a fair amount of it, but it is easy to follow, so only the core part is shown:

    dev->mem = rte_zmalloc("vhost-mem-table", sizeof(struct rte_vhost_memory) +
        sizeof(struct rte_vhost_mem_region) * memory.nregions, 0);
    if (dev->mem == NULL) {
        RTE_LOG(ERR, VHOST_CONFIG,
            "(%d) failed to allocate memory for dev->mem\n",
            dev->vid);
        return -1;
    }
    /* number of regions */
    dev->mem->nregions = memory.nregions;

    for (i = 0; i < memory.nregions; i++) {
        /* fd received with the message (passed over the socket by QEMU) */
        fd = msg->fds[i];
        reg = &dev->mem->regions[i];
        /* GPA of this region */
        reg->guest_phys_addr = memory.regions[i].guest_phys_addr;
        /* virtual address of this region in the QEMU process */
        reg->guest_user_addr = memory.regions[i].userspace_addr;
        reg->size = memory.regions[i].memory_size;
        reg->fd = fd;
        /* offset of the region within the mapped file */
        mmap_offset = memory.regions[i].mmap_offset;
        mmap_size = reg->size + mmap_offset;

        /* mmap() without flag of MAP_ANONYMOUS, should be called
         * with length argument aligned with hugepagesz at older
         * longterm version Linux, like 2.6.32 and 3.2.72, or
         * mmap() will fail with EINVAL.
         *
         * to avoid failure, make sure in caller to keep length
         * aligned.
         */
        alignment = get_blk_size(fd);
        if (alignment == (uint64_t)-1) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "couldn't get hugepage size through fstat\n");
            goto err_mmap;
        }
        /* align the mapping size */
        mmap_size = RTE_ALIGN_CEIL(mmap_size, alignment);
        /* Perform the mapping; the result is a virtual address in this
         * process. The fd is valid here because QEMU passed it over the
         * socket.
         */
        mmap_addr = mmap(NULL, mmap_size, PROT_READ | PROT_WRITE,
                 MAP_SHARED | MAP_POPULATE, fd, 0);

        if (mmap_addr == MAP_FAILED) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "mmap region %u failed.\n", i);
            goto err_mmap;
        }

        reg->mmap_addr = mmap_addr;
        reg->mmap_size = mmap_size;
        /* virtual address of this region in the vhost process */
        reg->host_user_addr = (uint64_t)(uintptr_t)mmap_addr +
                      mmap_offset;

        if (dev->dequeue_zero_copy)
            add_guest_pages(dev, reg, alignment);
    }

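The first step allocates the mem structure for dev, which also shows the layout of the structure: a header followed by nregions region entries.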
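The allocation relies on a C flexible array member: one block holds the header and all region entries. Here is a minimal sketch of the same layout using calloc in place of rte_zmalloc; the demo_* types and functions are hypothetical, cut-down stand-ins for rte_vhost_memory and rte_vhost_mem_region.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical, reduced region entry. */
struct demo_region {
    uint64_t guest_phys_addr;
    uint64_t size;
    uint64_t host_user_addr;
};

/* Header followed directly by `nregions` entries in the same allocation. */
struct demo_memory {
    uint32_t nregions;
    struct demo_region regions[]; /* flexible array member */
};

/* Allocate a zeroed table with room for `nregions` entries. */
static struct demo_memory *demo_memory_alloc(uint32_t nregions)
{
    struct demo_memory *mem;

    mem = calloc(1, sizeof(*mem) + sizeof(struct demo_region) * nregions);
    if (mem == NULL)
        return NULL;
    mem->nregions = nregions;
    return mem;
}

/* Bounds-checked access to entry i. */
static struct demo_region *demo_memory_region(struct demo_memory *mem, uint32_t i)
{
    assert(mem != NULL && i < mem->nregions);
    return &mem->regions[i];
}
```

A single free(mem) releases the header and every entry together, which is one reason this layout is convenient for a table whose length is only known at run time.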

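The for loop then copies the relevant information for each region, aligns the size of the mapping, and maps the fd associated with the region with mmap. This yields the region's mapping in the vhost process, but the virtual address that corresponds to the region's GPA is the mmap address plus the offset, which is also passed in the message. At this point the memory table is essentially set up. Next, the address translation: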

    /* Converts QEMU virtual address to Vhost virtual address. */
    static uint64_t
    qva_to_vva(struct virtio_net *dev, uint64_t qva)
    {
        struct rte_vhost_mem_region *reg;
        uint32_t i;

        /* Find the region where the address lives. */
        for (i = 0; i < dev->mem->nregions; i++) {
            reg = &dev->mem->regions[i];

            if (qva >= reg->guest_user_addr &&
                qva <  reg->guest_user_addr + reg->size) {
                /* qva - reg->guest_user_addr is the offset of qva
                 * within its region.
                 */
                return qva - reg->guest_user_addr +
                       reg->host_user_addr;
            }
        }

        return 0;
    }
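The same lookup works for guest physical addresses if the comparison uses guest_phys_addr. As a worked example with made-up numbers, the sketch below translates a GPA; demo_gpa_region and demo_gpa_to_vva are hypothetical names for this illustration and are not the DPDK API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, reduced region entry for the example. */
struct demo_gpa_region {
    uint64_t guest_phys_addr; /* start of the region in guest physical space */
    uint64_t size;            /* length of the region in bytes */
    uint64_t host_user_addr;  /* where the region starts in this process */
};

/* Translate a guest physical address; returns 0 if no region contains it. */
static uint64_t demo_gpa_to_vva(const struct demo_gpa_region *regs, size_t n,
                                uint64_t gpa)
{
    size_t i;

    assert(regs != NULL || n == 0);
    for (i = 0; i < n; i++) {
        const struct demo_gpa_region *r = &regs[i];

        /* Compare offsets instead of end addresses to avoid overflow. */
        if (gpa >= r->guest_phys_addr && gpa - r->guest_phys_addr < r->size)
            return gpa - r->guest_phys_addr + r->host_user_addr;
    }
    return 0;
}
```

With a region that starts at GPA 0x100000, is 0x2000 bytes long and is mapped at 0x7f0000100000, GPA 0x100800 has offset 0x800 and translates to 0x7f0000100800, while GPA 0x102000 lies just past the end and yields 0.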

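Quite simple. The core idea is to use the QVA to determine which region the address belongs to, take its offset within that region, and add the region's effective mapped address in vhost-user, the reg->host_user_addr field. Another key point in this part is how the fd is used. vhost_user_set_mem_table takes the fd straight from the message and mmaps it. This puzzled me at first: isn't an fd only valid within its own process? How can it be shared? After asking the open source community I realized how narrow my knowledge was: this is a general Unix mechanism for passing descriptors. Process A can pass a descriptor to process B through a specific call (sendmsg with SCM_RIGHTS ancillary data on a UNIX domain socket), and process B gets a new slot in its own descriptor table for it. So process B is not using A's fd number but an fd in its own descriptor table; the two fds refer to the same open file, much like taking an extra reference. The mechanism will be covered in detail separately; for this article it is enough to know what it does.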
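Descriptor passing can be demonstrated in a few lines with sendmsg and recvmsg. This is a minimal sketch of the SCM_RIGHTS mechanism only; demo_send_fd and demo_recv_fd are hypothetical helper names, and a real vhost-user message carries a full header and payload where this sketch sends a single byte.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Send one data byte plus `fd` as SCM_RIGHTS ancillary data. */
static int demo_send_fd(int sock, int fd)
{
    char data = 'F';
    struct iovec iov = { .iov_base = &data, .iov_len = 1 };
    union {
        char buf[CMSG_SPACE(sizeof(int))];
        struct cmsghdr align; /* forces correct alignment of buf */
    } ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;

    assert(sock >= 0 && fd >= 0);
    memset(&msg, 0, sizeof(msg));
    memset(&ctrl, 0, sizeof(ctrl));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

/* Receive one data byte and return the fd that came with it, or -1. */
static int demo_recv_fd(int sock)
{
    char data;
    struct iovec iov = { .iov_base = &data, .iov_len = 1 };
    union {
        char buf[CMSG_SPACE(sizeof(int))];
        struct cmsghdr align;
    } ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;
    int fd = -1;

    assert(sock >= 0);
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    if (recvmsg(sock, &msg, 0) <= 0)
        return -1;
    for (cmsg = CMSG_FIRSTHDR(&msg); cmsg != NULL; cmsg = CMSG_NXTHDR(&msg, cmsg)) {
        if (cmsg->cmsg_level == SOL_SOCKET && cmsg->cmsg_type == SCM_RIGHTS) {
            /* The kernel has already installed a new fd in this process. */
            memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
            break;
        }
    }
    return fd;
}
```

The number returned by demo_recv_fd generally differs from the number the sender used, yet both refer to the same open file, which is why the receiver can mmap it directly.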

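III. The vhost-user application's notification mechanism

The notification mechanism is essentially the same as in vhost-kernel: both use eventfd. So this part is fairly simple.

The QEMU side: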
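For readers who have not used eventfd, the following minimal sketch shows the counter semantics that a kick relies on. It assumes Linux, and demo_kick and demo_drain are hypothetical helper names, not functions from QEMU or DPDK.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <sys/types.h>
#include <unistd.h>

/* Notifier side: add 1 to the eventfd counter, waking any poller. */
static int demo_kick(int efd)
{
    uint64_t one = 1;

    assert(efd >= 0);
    return write(efd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Waiter side: read and reset the counter.
 * Returns the number of pending kicks, or 0 if none are pending
 * (non-blocking fd) or the read failed. */
static uint64_t demo_drain(int efd)
{
    uint64_t n = 0;

    assert(efd >= 0);
    if (read(efd, &n, sizeof(n)) != (ssize_t)sizeof(n))
        return 0;
    return n;
}
```

An fd created with eventfd(0, EFD_NONBLOCK) can be added to a poll set; several kicks that arrive before the waiter runs are coalesced into one wakeup whose read returns their count.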

    file.fd = event_notifier_get_fd(virtio_queue_get_host_notifier(vvq));
    r = dev->vhost_ops->vhost_set_vring_kick(dev, &file);

    static int vhost_user_set_vring_kick(struct vhost_dev *dev,
                                         struct vhost_vring_file *file)
    {
        return vhost_set_vring_file(dev, VHOST_USER_SET_VRING_KICK, file);
    }

    static int vhost_set_vring_file(struct vhost_dev *dev,
                                    VhostUserRequest request,
                                    struct vhost_vring_file *file)
    {
        int fds[VHOST_MEMORY_MAX_NREGIONS];
        size_t fd_num = 0;
        VhostUserMsg msg = {
            .request = request,
            .flags = VHOST_USER_VERSION,
            .payload.u64 = file->index & VHOST_USER_VRING_IDX_MASK,
            .size = sizeof(msg.payload.u64),
        };

        if (ioeventfd_enabled() && file->fd > 0) {
            fds[fd_num++] = file->fd;
        } else {
            msg.payload.u64 |= VHOST_USER_VRING_NOFD_MASK;
        }

        if (vhost_user_write(dev, &msg, fds, fd_num) < 0) {
            return -1;
        }

        return 0;
    }

As you can see, what this really does is pass the eventfd descriptor to vhost-user. On the vhost-user side, the key line in vhost_user_set_vring_kick is:

    vq->kickfd = file.fd;
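Before the fd is stored, the receiver has to decode the u64 payload that the QEMU code above built: the low 8 bits carry the vring index, and bit 8 says that no fd accompanies the message. The sketch below shows that encoding and decoding; the demo_* helpers are hypothetical, and only the two masks are taken from the VhostUserMsg definition.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Masks copied from the VhostUserMsg definition. */
#define VHOST_USER_VRING_IDX_MASK  0xff
#define VHOST_USER_VRING_NOFD_MASK (0x1 << 8)

/* Sender side: pack the vring index and the "no fd attached" marker. */
static uint64_t demo_encode_vring_file(uint32_t index, bool has_fd)
{
    uint64_t u64;

    assert(index <= VHOST_USER_VRING_IDX_MASK);
    u64 = index & VHOST_USER_VRING_IDX_MASK;
    if (!has_fd)
        u64 |= VHOST_USER_VRING_NOFD_MASK;
    return u64;
}

/* Receiver side: which vring does the message refer to? */
static uint32_t demo_vring_index(uint64_t u64)
{
    return (uint32_t)(u64 & VHOST_USER_VRING_IDX_MASK);
}

/* Receiver side: did an fd come with the message? */
static bool demo_vring_has_fd(uint64_t u64)
{
    return (u64 & VHOST_USER_VRING_NOFD_MASK) == 0;
}
```

A receiver would only take an fd from the message's fds array when demo_vring_has_fd returns true, and would otherwise treat the vring as having no eventfd.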

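The notification mechanism really is no different from the kernel case, except that the eventfd is handled in user space. I will leave it here for now and add more when time permits.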