http://blog.chinaunix.net/uid-28541347-id-5786547.html

https://zhaozhanxu.com/2017/02/16/QEMU/2017-02-16-qemu-reconnect/

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: net_client_init_fun call 10

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_net_init call vhost_dev_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_dev_init call hdev->vhost_ops->vhost_backend_init 、host_set_owne、 vhost_get_features

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: vhost_virtqueue_init vhost_set_vring_call : File descriptor in bad state (77)

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: vhost_virtqueue_init vhost_set_vring_call : File descriptor in bad state (77)

qemu-system-aarch64: -object memory-backend-file,id=mem0,mem-path=/tmp/kata: can't create backend with size 0

[root@localhost binary]# socat "stdin,raw,echo=0,escape=0x11" "unix-connect:console.sock"

2020/11/10 04:04:26 socat[16280] E connect(5, AF=1 "console.sock", 14): Connection refused

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: net_client_init_fun call 10

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_net_init call vhost_dev_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_dev_init call hdev->vhost_ops->vhost_backend_init 、host_set_owne、 vhost_get_features

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: vhost_virtqueue_init vhost_set_vring_call : File descriptor in bad state (77)

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=no,downscript=no: vhost_virtqueue_init vhost_set_vring_call : File descriptor in bad state (77)

qemu-system-aarch64: info: vhost_net_start vhost_net_start_one

qemu-system-aarch64: info: vhost.c vhost_dev_start

qemu-system-aarch64: vhost_dev_start call vhost_set_mem_table: No buffer space available (105)

qemu-system-aarch64: vhost_dev_start call vhost_virtqueue_start: No buffer space available (105)

qemu-system-aarch64: vhost_virtqueue_start: No buffer space available (105)

qemu-system-aarch64: vhost_dev_start call vhost_virtqueue_start: Resource temporarily unavailable (11)

qemu-system-aarch64: vhost_virtqueue_start: Resource temporarily unavailable (11)

^Cqemu-system-aarch64: terminating on signal 2

vhost_set_mem_table: No buffer space available

qemu-system-aarch64: info: vhost_net_start vhost_net_start_one

qemu-system-aarch64: info: vhost.c vhost_dev_start

qemu-system-aarch64: vhost_dev_start call vhost_set_mem_table: No buffer space available (105)

qemu-system-aarch64: vhost_dev_start call vhost_virtqueue_start: No buffer space available (105)

qemu-system-aarch64: vhost_virtqueue_start: No buffer space available (105)

qemu-system-aarch64: vhost_dev_start call vhost_virtqueue_start: Resource temporarily unavailable (11)

qemu-system-aarch64: vhost_virtqueue_start: Resource temporarily unavailable (11)

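The vhost NIC was created without adding -object memory-backend-file,id=mem,size=4096M,mem-path=/mnt/huge1,share=on. A vhost-user backend can only map guest memory that is file-backed and shared (share=on).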

tcp_chr_connect

    static void tcp_chr_connect(void *opaque)
    {
        Chardev *chr = CHARDEV(opaque);
        SocketChardev *s = SOCKET_CHARDEV(opaque);

        g_free(chr->filename);
        chr->filename = qemu_chr_compute_filename(s);

        tcp_chr_change_state(s, TCP_CHARDEV_STATE_CONNECTED);
        update_ioc_handlers(s);
        info_report("tcp_chr_connect call qemu_chr_be_event CHR_EVENT_OPENED");
        qemu_chr_be_event(chr, CHR_EVENT_OPENED);
    }

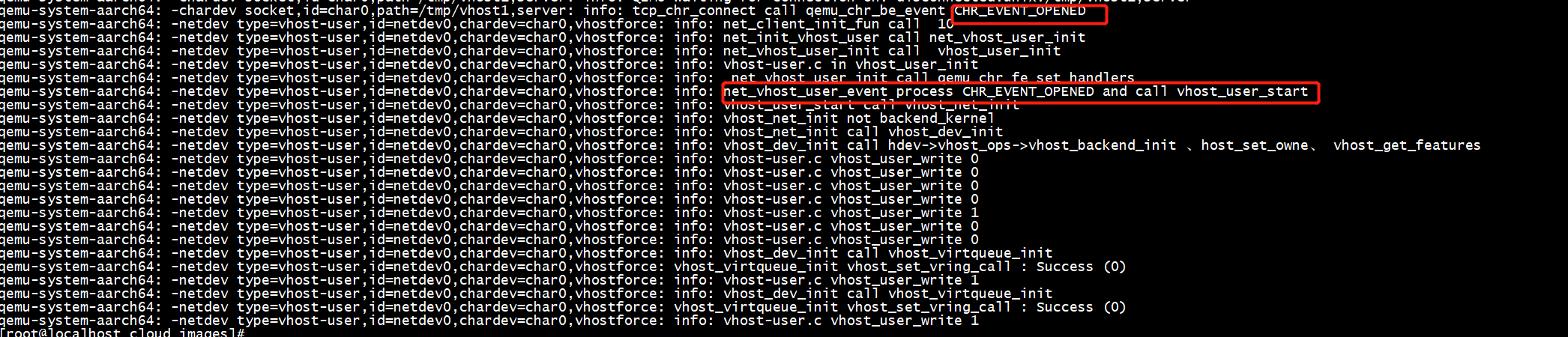

qemu-system-aarch64: -chardev socket,id=char0,path=/tmp/vhost1,server: info: QEMU waiting for connection on: disconnected:unix:/tmp/vhost1,server

qemu-system-aarch64: -chardev socket,id=char0,path=/tmp/vhost1,server: info: tcp_chr_connect call qemu_chr_be_event CHR_EVENT_OPENED

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: net_client_init_fun call 10

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: net_init_vhost_user call net_vhost_user_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: net_vhost_user_init call vhost_user_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c in vhost_user_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: net_vhost_user_init call qemu_chr_fe_set_handlers

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: net_vhost_user_event process CHR_EVENT_OPENED and call vhost_user_start

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost_user_start call vhost_net_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost_net_init not backend_kernel

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost_net_init call vhost_dev_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost_dev_init call hdev->vhost_ops->vhost_backend_init 、host_set_owne、 vhost_get_features

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 0

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 0

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 0

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 0

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 1

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 0

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 0

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: vhost_virtqueue_init vhost_set_vring_call : Success (0)

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 1

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: vhost_virtqueue_init vhost_set_vring_call : Success (0)

qemu-system-aarch64: -netdev type=vhost-user,id=netdev0,chardev=char0,vhostforce: info: vhost-user.c vhost_user_write 1

net_client_init1

    static int net_client_init1(const Netdev *netdev, bool is_netdev, Error **errp)
    {
        NetClientState *peer = NULL;

        if (is_netdev) {
            if (netdev->type == NET_CLIENT_DRIVER_NIC ||
                !net_client_init_fun[netdev->type]) {
                error_setg(errp, QERR_INVALID_PARAMETER_VALUE, "type",
                           "a netdev backend type");
                return -1;
            }
        } else {
            if (netdev->type == NET_CLIENT_DRIVER_NONE) {
                return 0; /* nothing to do */
            }
            if (netdev->type == NET_CLIENT_DRIVER_HUBPORT ||
                !net_client_init_fun[netdev->type]) {
                error_setg(errp, QERR_INVALID_PARAMETER_VALUE, "type",
                           "a net backend type (maybe it is not compiled "
                           "into this binary)");
                return -1;
            }

            /* Do not add to a hub if it's a nic with a netdev= parameter. */
            if (netdev->type != NET_CLIENT_DRIVER_NIC ||
                !netdev->u.nic.has_netdev) {
                peer = net_hub_add_port(0, NULL, NULL);
            }
        }

        /*
         * Debug print added for tracing. It logs the constant
         * NET_CLIENT_DRIVER_VHOST_USER rather than netdev->type, which is
         * why the tap run above also prints "call 10".
         */
        info_report("net_client_init_fun call %d", NET_CLIENT_DRIVER_VHOST_USER);
        if (net_client_init_fun[netdev->type](netdev, netdev->id, peer, errp) < 0) {
            /* FIXME drop when all init functions store an Error */
            if (errp && !*errp) {
                error_setg(errp, QERR_DEVICE_INIT_FAILED,
                           NetClientDriver_str(netdev->type));
            }
            return -1;
        }

        if (is_netdev) {
            NetClientState *nc;

            nc = qemu_find_netdev(netdev->id);
            assert(nc);
            nc->is_netdev = true;
        }

        return 0;
    }

[NET_CLIENT_DRIVER_VHOST_USER] = net_init_vhost_user,
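The table entry above is what routes a `-netdev type=vhost-user` option to `net_init_vhost_user`. As a minimal sketch of that dispatch pattern (every `demo_` name below is hypothetical, not part of QEMU), an enum-indexed array of function pointers can be filled with designated initializers, which leaves unlisted slots NULL so they can be rejected the way `net_client_init1` rejects missing backends:

```c
/* Hypothetical, trimmed-down stand-in for QEMU's NetClientDriver enum. */
enum demo_driver {
    DEMO_DRIVER_NONE,
    DEMO_DRIVER_TAP,
    DEMO_DRIVER_VHOST_USER,
    DEMO_DRIVER__MAX
};

typedef int (*demo_init_fn)(const char *name);

/* Placeholder init functions; each returns a distinct value for the demo. */
static int demo_init_tap(const char *name) { (void)name; return 1; }
static int demo_init_vhost_user(const char *name) { (void)name; return 2; }

/* Designated initializers: slots that are not listed stay NULL. */
static const demo_init_fn demo_init_fun[DEMO_DRIVER__MAX] = {
    [DEMO_DRIVER_TAP]        = demo_init_tap,
    [DEMO_DRIVER_VHOST_USER] = demo_init_vhost_user,
};

/* Returns -1 for an unsupported type, otherwise the init function's result. */
int demo_client_init(enum demo_driver type, const char *name)
{
    if ((unsigned)type >= DEMO_DRIVER__MAX || !demo_init_fun[type]) {
        return -1;
    }
    return demo_init_fun[type](name);
}
```

Calling `demo_client_init(DEMO_DRIVER_VHOST_USER, "netdev0")` reaches the vhost-user placeholder, while `DEMO_DRIVER_NONE` has no table entry and is rejected.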

net_init_vhost_user

    int net_init_vhost_user(const Netdev *netdev, const char *name,
                            NetClientState *peer, Error **errp)
    {
        int queues;
        const NetdevVhostUserOptions *vhost_user_opts;
        Chardev *chr;

        assert(netdev->type == NET_CLIENT_DRIVER_VHOST_USER);
        vhost_user_opts = &netdev->u.vhost_user;

        chr = net_vhost_claim_chardev(vhost_user_opts, errp);
        if (!chr) {
            return -1;
        }

        /* verify net frontend */
        if (qemu_opts_foreach(qemu_find_opts("device"), net_vhost_check_net,
                              (char *)name, errp)) {
            return -1;
        }

        queues = vhost_user_opts->has_queues ? vhost_user_opts->queues : 1;
        if (queues < 1 || queues > MAX_QUEUE_NUM) {
            error_setg(errp,
                       "vhost-user number of queues must be in range [1, %d]",
                       MAX_QUEUE_NUM);
            return -1;
        }

        info_report("net_init_vhost_user call net_vhost_user_init");
        return net_vhost_user_init(peer, "vhost_user", name, chr, queues);
    }

Installing the net_vhost_user_event hook function

    static int net_vhost_user_init(NetClientState *peer, const char *device,
                                   const char *name, Chardev *chr,
                                   int queues)
    {
        Error *err = NULL;
        NetClientState *nc, *nc0 = NULL;
        NetVhostUserState *s = NULL;
        VhostUserState *user;
        int i;

        assert(name);
        assert(queues > 0);

        user = g_new0(struct VhostUserState, 1);
        for (i = 0; i < queues; i++) {
            nc = qemu_new_net_client(&net_vhost_user_info, peer, device, name);
            snprintf(nc->info_str, sizeof(nc->info_str), "vhost-user%d to %s",
                     i, chr->label);
            nc->queue_index = i;
            if (!nc0) {
                nc0 = nc;
                s = DO_UPCAST(NetVhostUserState, nc, nc);
                info_report("net_vhost_user_init call vhost_user_init");
                if (!qemu_chr_fe_init(&s->chr, chr, &err) ||
                    !vhost_user_init(user, &s->chr, &err)) {
                    error_report_err(err);
                    goto err;
                }
            }
            s = DO_UPCAST(NetVhostUserState, nc, nc);
            s->vhost_user = user;
        }

        s = DO_UPCAST(NetVhostUserState, nc, nc0);
        do {
            if (qemu_chr_fe_wait_connected(&s->chr, &err) < 0) {
                error_report_err(err);
                goto err;
            }
            info_report(" net_vhost_user_init call qemu_chr_fe_set_handlers");
            qemu_chr_fe_set_handlers(&s->chr, NULL, NULL,
                                     net_vhost_user_event, NULL, nc0->name,
                                     NULL, true);
        } while (!s->started);

        assert(s->vhost_net);

        return 0;
        /* ... (excerpt ends here) */

net/vhost-user.c:net_vhost_user_event

    static void net_vhost_user_event(void *opaque, QEMUChrEvent event)
    {
        const char *name = opaque;
        NetClientState *ncs[MAX_QUEUE_NUM];
        NetVhostUserState *s;
        Chardev *chr;
        Error *err = NULL;
        int queues;

        queues = qemu_find_net_clients_except(name, ncs,
                                              NET_CLIENT_DRIVER_NIC,
                                              MAX_QUEUE_NUM);
        assert(queues < MAX_QUEUE_NUM);

        s = DO_UPCAST(NetVhostUserState, nc, ncs[0]);
        chr = qemu_chr_fe_get_driver(&s->chr);
        trace_vhost_user_event(chr->label, event);
        switch (event) {
        case CHR_EVENT_OPENED:
            info_report("net_vhost_user_event process CHR_EVENT_OPENED and call vhost_user_start");
            if (vhost_user_start(queues, ncs, s->vhost_user) < 0) {
                qemu_chr_fe_disconnect(&s->chr);
                return;
            }
            s->watch = qemu_chr_fe_add_watch(&s->chr, G_IO_HUP,
                                             net_vhost_user_watch, s);
            qmp_set_link(name, true, &err);
            s->started = true;
            break;
        /* ... (excerpt ends here) */

net/vhost-user.c:vhost_user_start

    static int vhost_user_start(int queues, NetClientState *ncs[],
                                VhostUserState *be)
    {
        VhostNetOptions options;
        struct vhost_net *net = NULL;
        NetVhostUserState *s;
        int max_queues;
        int i;

        options.backend_type = VHOST_BACKEND_TYPE_USER;

        for (i = 0; i < queues; i++) {
            assert(ncs[i]->info->type == NET_CLIENT_DRIVER_VHOST_USER);

            s = DO_UPCAST(NetVhostUserState, nc, ncs[i]);

            options.net_backend = ncs[i];
            options.opaque = be;
            options.busyloop_timeout = 0;
            info_report("vhost_user_start call vhost_net_init");
            net = vhost_net_init(&options);
            if (!net) {
                error_report("failed to init vhost_net for queue %d", i);
                goto err;
            }

            if (i == 0) {
                max_queues = vhost_net_get_max_queues(net);
                if (queues > max_queues) {
                    error_report("you are asking more queues than supported: %d",
                                 max_queues);
                    goto err;
                }
            }

            if (s->vhost_net) {
                vhost_net_cleanup(s->vhost_net);
                g_free(s->vhost_net);
            }
            s->vhost_net = net;
        }

        return 0;

    err:
        if (net) {
            vhost_net_cleanup(net);
            g_free(net);
        }
        vhost_user_stop(i, ncs);
        return -1;
    }

hw/net/vhost_net.c: vhost_net_init

    struct vhost_net *vhost_net_init(VhostNetOptions *options)
    {
        int r;
        bool backend_kernel = options->backend_type == VHOST_BACKEND_TYPE_KERNEL;
        struct vhost_net *net = g_new0(struct vhost_net, 1);
        uint64_t features = 0;

        if (!options->net_backend) {
            fprintf(stderr, "vhost-net requires net backend to be setup\n");
            goto fail;
        }
        net->nc = options->net_backend;

        net->dev.max_queues = 1;
        net->dev.nvqs = 2;
        net->dev.vqs = net->vqs;

        if (backend_kernel) {
            r = vhost_net_get_fd(options->net_backend);
            if (r < 0) {
                goto fail;
            }
            net->dev.backend_features = qemu_has_vnet_hdr(options->net_backend)
                ? 0 : (1ULL << VHOST_NET_F_VIRTIO_NET_HDR);
            net->backend = r;
            net->dev.protocol_features = 0;
        } else {
            info_report("vhost_net_init not backend_kernel");
            net->dev.backend_features = 0;
            net->dev.protocol_features = 0;
            net->backend = -1;

            /* vhost-user needs vq_index to initiate a specific queue pair */
            net->dev.vq_index = net->nc->queue_index * net->dev.nvqs;
        }

        info_report("vhost_net_init call vhost_dev_init");
        r = vhost_dev_init(&net->dev, options->opaque,
                           options->backend_type, options->busyloop_timeout);
        if (r < 0) {
            goto fail;
        }
        if (backend_kernel) {
            if (!qemu_has_vnet_hdr_len(options->net_backend,
                                       sizeof(struct virtio_net_hdr_mrg_rxbuf))) {
                net->dev.features &= ~(1ULL << VIRTIO_NET_F_MRG_RXBUF);
            }
            if (~net->dev.features & net->dev.backend_features) {
                fprintf(stderr, "vhost lacks feature mask %" PRIu64
                        " for backend\n",
                        (uint64_t)(~net->dev.features & net->dev.backend_features));
                goto fail;
            }
        }
        /* ... (excerpt ends here) */
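The `vq_index` assignment in the vhost-user branch is easy to overlook. The sketch below restates that arithmetic with hypothetical `demo_` helpers, assuming (as `net->dev.nvqs = 2` suggests) that each queue pair owns two consecutive virtqueues, receive first and transmit second:

```c
/* Assumption: every queue pair owns nvqs consecutive virtqueues. */
int demo_first_vq_index(int queue_index, int nvqs)
{
    return queue_index * nvqs;
}

/* With nvqs == 2, the receive queue comes first in each pair. */
int demo_rx_vq(int queue_index)
{
    return demo_first_vq_index(queue_index, 2);
}

/* The transmit queue follows the receive queue of the same pair. */
int demo_tx_vq(int queue_index)
{
    return demo_first_vq_index(queue_index, 2) + 1;
}
```

Under these assumptions queue pair 0 covers virtqueues 0 and 1, and queue pair 3 covers virtqueues 6 and 7.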

hw/virtio/vhost.c:vhost_dev_init

    int vhost_dev_init(struct vhost_dev *hdev, void *opaque,
                       VhostBackendType backend_type, uint32_t busyloop_timeout)
    {
        uint64_t features;
        int i, r, n_initialized_vqs = 0;
        Error *local_err = NULL;

        hdev->vdev = NULL;
        hdev->migration_blocker = NULL;
        info_report("vhost_dev_init call hdev->vhost_ops->vhost_backend_init 、host_set_owne、 vhost_get_features");
        r = vhost_set_backend_type(hdev, backend_type);
        assert(r >= 0);

        r = hdev->vhost_ops->vhost_backend_init(hdev, opaque);
        if (r < 0) {
            goto fail;
        }

        r = hdev->vhost_ops->vhost_set_owner(hdev);
        if (r < 0) {
            VHOST_OPS_DEBUG("vhost_set_owner failed");
            goto fail;
        }

        r = hdev->vhost_ops->vhost_get_features(hdev, &features);
        if (r < 0) {
            VHOST_OPS_DEBUG("vhost_get_features failed");
            goto fail;
        }

        for (i = 0; i < hdev->nvqs; ++i, ++n_initialized_vqs) {
            info_report("vhost_dev_init call vhost_virtqueue_init");
            r = vhost_virtqueue_init(hdev, hdev->vqs + i, hdev->vq_index + i);
            if (r < 0) {
                goto fail;
            }
        }

        if (busyloop_timeout) {
            for (i = 0; i < hdev->nvqs; ++i) {
                r = vhost_virtqueue_set_busyloop_timeout(hdev, hdev->vq_index + i,
                                                         busyloop_timeout);
                if (r < 0) {
                    goto fail_busyloop;
                }
            }
        }
        /* ... (excerpt ends here) */

    const VhostOps user_ops = {
        .backend_type = VHOST_BACKEND_TYPE_USER,
        .vhost_backend_init = vhost_user_backend_init,
        .vhost_backend_cleanup = vhost_user_backend_cleanup,
        .vhost_backend_memslots_limit = vhost_user_memslots_limit,
        .vhost_set_log_base = vhost_user_set_log_base,
        .vhost_set_mem_table = vhost_user_set_mem_table,
        .vhost_set_vring_addr = vhost_user_set_vring_addr,
        .vhost_set_vring_endian = vhost_user_set_vring_endian,
        .vhost_set_vring_num = vhost_user_set_vring_num,
        .vhost_set_vring_base = vhost_user_set_vring_base,
        .vhost_get_vring_base = vhost_user_get_vring_base,
        .vhost_set_vring_kick = vhost_user_set_vring_kick,
        .vhost_set_vring_call = vhost_user_set_vring_call,
        .vhost_set_features = vhost_user_set_features,
        .vhost_get_features = vhost_user_get_features,
        .vhost_set_owner = vhost_user_set_owner,
        .vhost_reset_device = vhost_user_reset_device,
        .vhost_get_vq_index = vhost_user_get_vq_index,
        .vhost_set_vring_enable = vhost_user_set_vring_enable,
        .vhost_requires_shm_log = vhost_user_requires_shm_log,
        .vhost_migration_done = vhost_user_migration_done,
        .vhost_backend_can_merge = vhost_user_can_merge,
        .vhost_net_set_mtu = vhost_user_net_set_mtu,
        .vhost_set_iotlb_callback = vhost_user_set_iotlb_callback,
        .vhost_send_device_iotlb_msg = vhost_user_send_device_iotlb_msg,
        .vhost_get_config = vhost_user_get_config,
        .vhost_set_config = vhost_user_set_config,
        .vhost_crypto_create_session = vhost_user_crypto_create_session,
        .vhost_crypto_close_session = vhost_user_crypto_close_session,
        .vhost_backend_mem_section_filter = vhost_user_mem_section_filter,
        .vhost_get_inflight_fd = vhost_user_get_inflight_fd,
        .vhost_set_inflight_fd = vhost_user_set_inflight_fd,
    };

hw/virtio/vhost-user.c:vhost_user_write

    /* most non-init callers ignore the error */
    static int vhost_user_write(struct vhost_dev *dev, VhostUserMsg *msg,
                                int *fds, int fd_num)
    {
        info_report("vhost-user.c vhost_user_write %d", fd_num);
        struct vhost_user *u = dev->opaque;
        CharBackend *chr = u->user->chr;
        int ret, size = VHOST_USER_HDR_SIZE + msg->hdr.size;

        /*
         * For non-vring specific requests, like VHOST_USER_SET_MEM_TABLE,
         * we just need send it once in the first time. For later such
         * request, we just ignore it.
         */
        if (vhost_user_one_time_request(msg->hdr.request) && dev->vq_index != 0) {
            msg->hdr.flags &= ~VHOST_USER_NEED_REPLY_MASK;
            return 0;
        }

        if (qemu_chr_fe_set_msgfds(chr, fds, fd_num) < 0) {
            error_report("Failed to set msg fds.");
            return -1;
        }

        ret = qemu_chr_fe_write_all(chr, (const uint8_t *) msg, size);
        if (ret != size) {
            error_report("Failed to write msg."
                         " Wrote %d instead of %d.", ret, size);
            return -1;
        }

        return 0;
    }
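`vhost_user_write` hands a message and its file descriptors to the chardev layer. On a UNIX socket, descriptors travel as `SCM_RIGHTS` ancillary data next to the message bytes. The following is a minimal sketch of that mechanism using plain POSIX calls; the `demo_` functions are hypothetical and are not QEMU's implementation, which goes through its own channel abstraction:

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Control buffer sized and aligned for exactly one descriptor. */
union demo_ctrl {
    char buf[CMSG_SPACE(sizeof(int))];
    struct cmsghdr align;
};

/* Send len bytes of data plus one descriptor; returns 0 on success. */
int demo_send_with_fd(int sock, const void *data, size_t len, int fd)
{
    struct iovec iov = { .iov_base = (void *)data, .iov_len = len };
    union demo_ctrl ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;

    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    /* Attach the descriptor as SCM_RIGHTS ancillary data. */
    cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == (ssize_t)len ? 0 : -1;
}

/* Receive up to len bytes; *fd is set to -1 when no descriptor arrived. */
ssize_t demo_recv_with_fd(int sock, void *data, size_t len, int *fd)
{
    struct iovec iov = { .iov_base = data, .iov_len = len };
    union demo_ctrl ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;
    ssize_t n;

    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    *fd = -1;
    n = recvmsg(sock, &msg, 0);
    if (n < 0) {
        return -1;
    }
    for (cmsg = CMSG_FIRSTHDR(&msg); cmsg; cmsg = CMSG_NXTHDR(&msg, cmsg)) {
        if (cmsg->cmsg_level == SOL_SOCKET && cmsg->cmsg_type == SCM_RIGHTS) {
            memcpy(fd, CMSG_DATA(cmsg), sizeof(int));
            break;
        }
    }
    return n;
}
```

The receiver gets a new descriptor number that refers to the same open file, which is how a vhost-user backend can end up holding the eventfds and memory file descriptors that QEMU created.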

vhost_virtqueue_init

    static int vhost_virtqueue_init(struct vhost_dev *dev,
                                    struct vhost_virtqueue *vq, int n)
    {
        int vhost_vq_index = dev->vhost_ops->vhost_get_vq_index(dev, n);
        struct vhost_vring_file file = {
            .index = vhost_vq_index,
        };
        int r = event_notifier_init(&vq->masked_notifier, 0);
        if (r < 0) {
            return r;
        }

        file.fd = event_notifier_get_fd(&vq->masked_notifier);
        /*
         * Debug print added for tracing. VHOST_OPS_DEBUG appends
         * strerror(errno), so this message reports whatever errno held
         * before the call below, not the result of vhost_set_vring_call.
         */
        VHOST_OPS_DEBUG("vhost_virtqueue_init vhost_set_vring_call ");
        r = dev->vhost_ops->vhost_set_vring_call(dev, &file);
        if (r) {
            VHOST_OPS_DEBUG("vhost_set_vring_call failed");
            r = -errno;
            goto fail_call;
        }

        vq->dev = dev;

        return 0;
    fail_call:
        event_notifier_cleanup(&vq->masked_notifier);
        return r;
    }

    static int vhost_user_set_vring_call(struct vhost_dev *dev,
                                         struct vhost_vring_file *file)
    {
        return vhost_set_vring_file(dev, VHOST_USER_SET_VRING_CALL, file);
    }

    static int vhost_set_vring_file(struct vhost_dev *dev,
                                    VhostUserRequest request,
                                    struct vhost_vring_file *file)
    {
        int fds[VHOST_USER_MAX_RAM_SLOTS];
        size_t fd_num = 0;
        VhostUserMsg msg = {
            .hdr.request = request,
            .hdr.flags = VHOST_USER_VERSION,
            .payload.u64 = file->index & VHOST_USER_VRING_IDX_MASK,
            .hdr.size = sizeof(msg.payload.u64),
        };

        if (ioeventfd_enabled() && file->fd > 0) {
            fds[fd_num++] = file->fd;
        } else {
            msg.payload.u64 |= VHOST_USER_VRING_NOFD_MASK;
        }

        if (vhost_user_write(dev, &msg, fds, fd_num) < 0) {
            return -1;
        }

        return 0;
    }
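The `u64` payload built by `vhost_set_vring_file` packs the vring index into the low bits and sets one flag bit when no descriptor accompanies the message. Here is a small sketch of that encoding. The mask values mirror what QEMU's hw/virtio/vhost-user.c is believed to define (0xff and bit 8), but treat them as assumptions and check them against your tree. The `DEMO_`/`demo_` names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed values; verify against VHOST_USER_VRING_*_MASK in your QEMU tree. */
#define DEMO_VRING_IDX_MASK  0xffu
#define DEMO_VRING_NOFD_MASK (0x1u << 8)

/* Build the payload for a SET_VRING_CALL / SET_VRING_KICK style message. */
uint64_t demo_vring_file_payload(unsigned index, bool have_fd)
{
    uint64_t payload = index & DEMO_VRING_IDX_MASK;

    if (!have_fd) {
        /* No descriptor is attached: tell the backend explicitly. */
        payload |= DEMO_VRING_NOFD_MASK;
    }
    return payload;
}
```

In the vhost-user log earlier in these notes, each `vhost_virtqueue_init` is followed by `vhost_user_write 1`, which corresponds to the `have_fd` case: one descriptor is attached and the NOFD bit stays clear.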

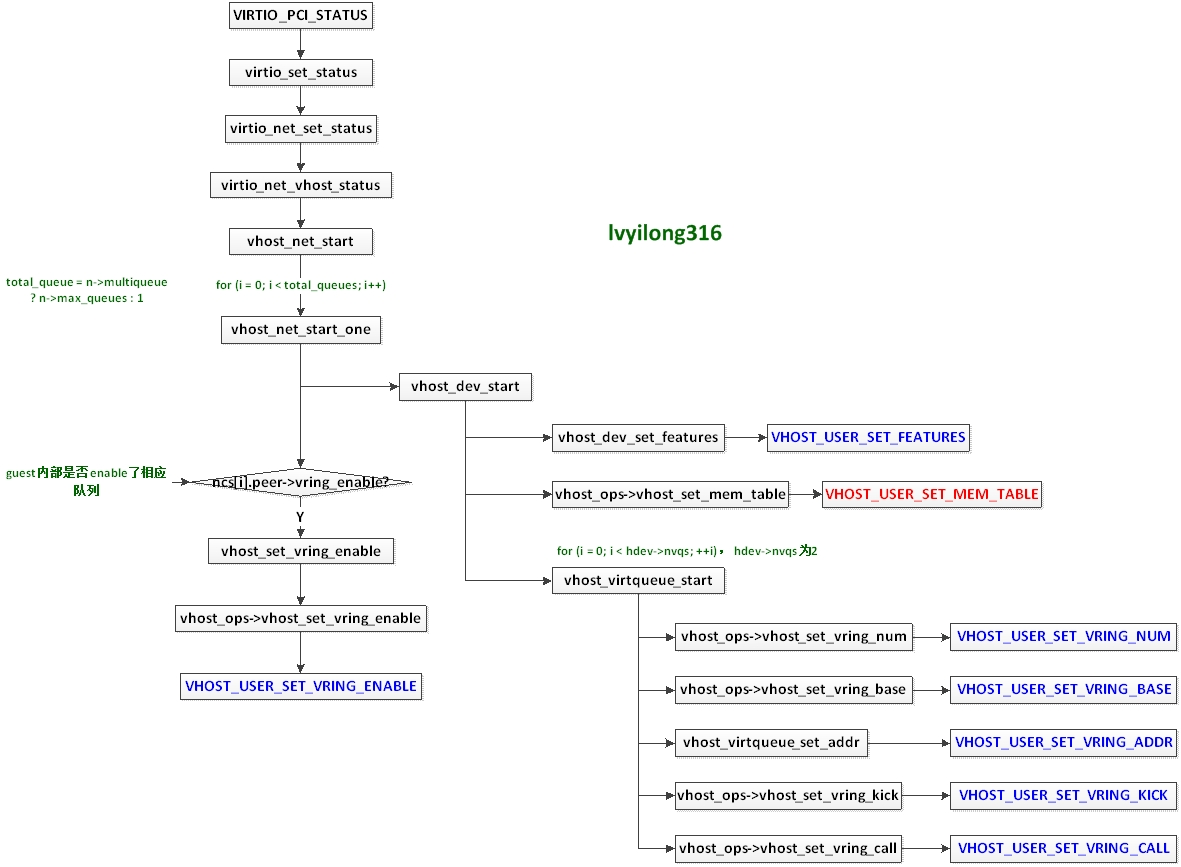

Guest driver finishes loading

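Once the virtio-net driver in the guest has finished loading, it writes the VIRTIO_PCI_STATUS register. KVM traps this write as well and passes it on to QEMU, which handles it as follows.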

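For a vhost network device, virtio_set_status ends up calling memory_region_add_eventfd, which registers an eventfd with KVM through kvm_io_ioeventfd_add. From then on, any PIO access to that address is signalled through the eventfd; the iothread is not involved.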

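An ordinary (non-vhost) virtio network device also goes from virtio_set_status into virtio_net_vhost_status, but it returns immediately once it finds that the device is not vhost-backed, so memory_region_add_eventfd is never called and no eventfd is added.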
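To make the eventfd part concrete, here is a minimal Linux-only sketch of the counter semantics an ioeventfd relies on. It deliberately leaves out the KVM registration: in the real flow KVM signals the descriptor when the guest writes the matching port, whereas here the signal is written from the same process. The `demo_` names are hypothetical.

```c
#include <stdint.h>
#include <sys/eventfd.h>
#include <sys/types.h>
#include <unistd.h>

/* Create a non-blocking eventfd with an initial counter of 0. */
int demo_notifier_init(void)
{
    return eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
}

/* Add 1 to the counter; this is what a "kick" amounts to. */
int demo_notifier_signal(int efd)
{
    uint64_t one = 1;

    return write(efd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Read and reset the counter; returns 0 when nothing is pending. */
uint64_t demo_notifier_drain(int efd)
{
    uint64_t count = 0;

    if (read(efd, &count, sizeof(count)) != (ssize_t)sizeof(count)) {
        return 0;
    }
    return count;
}
```

Several signals that arrive before the consumer reads are coalesced into one wake-up whose value is the number of signals, which is why a waiter on the descriptor does not need to be woken once per guest write.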

The vhost call stack is as follows:

    #0  memory_region_add_eventfd (mr=0x55fe2ae56320, addr=16, size=2, match_data=true, data=0, e=0x55fe2cf729f0) at /home/liufeng/workspace/src/open/qemu/memory.c:1792
    #1  0x000055fe2976a299 in virtio_pci_set_host_notifier_internal (proxy=0x55fe2ae55aa0, n=0, assign=true, set_handler=false) at hw/virtio/virtio-pci.c:307
    #2  0x000055fe2976c15f in virtio_pci_set_host_notifier (d=0x55fe2ae55aa0, n=0, assign=true) at hw/virtio/virtio-pci.c:1130
    #3  0x000055fe2952fe97 in vhost_dev_enable_notifiers (hdev=0x55fe2adcfbc0, vdev=0x55fe2ae5ddc8) at /home/liufeng/workspace/src/open/qemu/hw/virtio/vhost.c:1124
    #4  0x000055fe2950b8c7 in vhost_net_start_one (net=0x55fe2adcfbc0, dev=0x55fe2ae5ddc8) at /home/liufeng/workspace/src/open/qemu/hw/net/vhost_net.c:208
    #5  0x000055fe2950bdef in vhost_net_start (dev=0x55fe2ae5ddc8, ncs=0x55fe2c1ab040, total_queues=1) at /home/liufeng/workspace/src/open/qemu/hw/net/vhost_net.c:308
    #6  0x000055fe2950647c in virtio_net_vhost_status (n=0x55fe2ae5ddc8, status=7 '\a') at /home/liufeng/workspace/src/open/qemu/hw/net/virtio-net.c:151
    #7  0x000055fe29506711 in virtio_net_set_status (vdev=0x55fe2ae5ddc8, status=7 '\a') at /home/liufeng/workspace/src/open/qemu/hw/net/virtio-net.c:224
    #8  0x000055fe29527b89 in virtio_set_status (vdev=0x55fe2ae5ddc8, val=7 '\a') at /home/liufeng/workspace/src/open/qemu/hw/virtio/virtio.c:748
    #9  0x000055fe2976a6eb in virtio_ioport_write (opaque=0x55fe2ae55aa0, addr=18, val=7) at hw/virtio/virtio-pci.c:428
    #10 0x000055fe2976ab46 in virtio_pci_config_write (opaque=0x55fe2ae55aa0, addr=18, val=7, size=1) at hw/virtio/virtio-pci.c:553
    #11 0x000055fe294c67dd in memory_region_write_accessor (mr=0x55fe2ae56320, addr=18, value=0x7f6d3fd9a848, size=1, shift=0, mask=255, attrs=...) at /home/liufeng/workspace/src/open/qemu/memory.c:525
    #12 0x000055fe294c69e8 in access_with_adjusted_size (addr=18, value=0x7f6d3fd9a848, size=1, access_size_min=1, access_size_max=4, access=0x55fe294c66fc <memory_region_write_accessor>, mr=0x55fe2ae56320, attrs=...) at /home/liufeng/workspace/src/open/qemu/memory.c:591
    #13 0x000055fe294c962f in memory_region_dispatch_write (mr=0x55fe2ae56320, addr=18, data=7, size=1, attrs=...) at /home/liufeng/workspace/src/open/qemu/memory.c:1273
    #14 0x000055fe2947b724 in address_space_write_continue (as=0x55fe29e205c0 <address_space_io>, addr=49170, attrs=..., buf=0x7f6d4ba38000 "aE�03", len=1, addr1=18, l=1, mr=0x55fe2ae56320) at /home/liufeng/workspace/src/open/qemu/exec.c:2619
    #15 0x000055fe2947b89a in address_space_write (as=0x55fe29e205c0 <address_space_io>, addr=49170, attrs=..., buf=0x7f6d4ba38000 "aE�03", len=1) at /home/liufeng/workspace/src/open/qemu/exec.c:2665
    #16 0x000055fe2947bc51 in address_space_rw (as=0x55fe29e205c0 <address_space_io>, addr=49170, attrs=..., buf=0x7f6d4ba38000 "aE�03", len=1, is_write=true) at /home/liufeng/workspace/src/open/qemu/exec.c:2768
    #17 0x000055fe294c2d64 in kvm_handle_io (port=49170, attrs=..., data=0x7f6d4ba38000, direction=1, size=1, count=1) at /home/liufeng/workspace/src/open/qemu/kvm-all.c:1699
    #18 0x000055fe294c3264 in kvm_cpu_exec (cpu=0x55fe2ae8ef50) at /home/liufeng/workspace/src/open/qemu/kvm-all.c:1863
    #19 0x000055fe294aa8b8 in qemu_kvm_cpu_thread_fn (arg=0x55fe2ae8ef50) at /home/liufeng/workspace/src/open/qemu/cpus.c:1064
    #20 0x00007f6d47187dc5 in start_thread () from /lib64/libpthread.so.0
    #21 0x00007f6d46eb4ced in clone () from /lib64/libc.so.6
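The backtrace shows the status register being written with the value 7. Per the virtio specification's device status bits, 7 is ACKNOWLEDGE (1) | DRIVER (2) | DRIVER_OK (4), meaning the guest driver is fully set up. The sketch below decodes that value. The `demo_` helper is hypothetical and much simpler than QEMU's real check, which also looks at conditions such as whether the VM is running.

```c
#include <stdbool.h>
#include <stdint.h>

/* Device status bits as defined by the virtio specification. */
#define DEMO_STATUS_ACKNOWLEDGE 0x01
#define DEMO_STATUS_DRIVER      0x02
#define DEMO_STATUS_DRIVER_OK   0x04
#define DEMO_STATUS_FEATURES_OK 0x08
#define DEMO_STATUS_FAILED      0x80

/* Simplified readiness check: DRIVER_OK set and FAILED clear. */
bool demo_driver_ready(uint8_t status)
{
    return (status & DEMO_STATUS_DRIVER_OK) != 0 &&
           (status & DEMO_STATUS_FAILED) == 0;
}
```

A write of 3 (ACKNOWLEDGE | DRIVER) would not pass this check; only once DRIVER_OK is set does it make sense to start the vhost backend, which matches the point where `vhost_net_start` appears in the stack.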

    static int vhost_net_start_one(struct vhost_net *net,
                                   VirtIODevice *dev)
    {
        struct vhost_vring_file file = { };
        int r;

        net->dev.nvqs = 2;
        net->dev.vqs = net->vqs;

        r = vhost_dev_enable_notifiers(&net->dev, dev);
        if (r < 0) {
            goto fail_notifiers;
        }

        r = vhost_dev_start(&net->dev, dev);
        if (r < 0) {
            goto fail_start;
        }
        /* ... (excerpt ends here) */