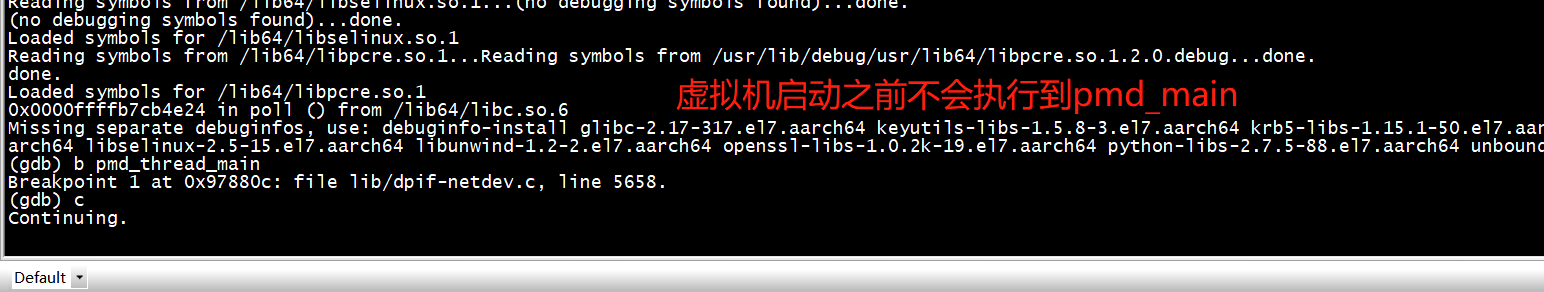

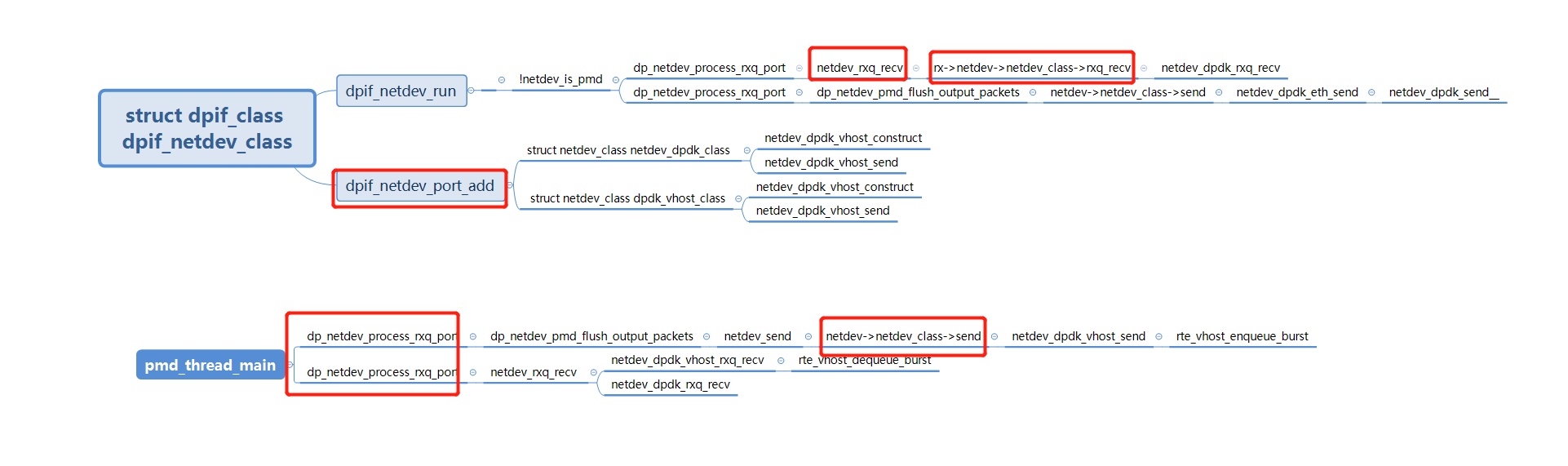

dpif_netdev_run and pmd_thread_main (if no virtual machine has been started to connect with -chardev socket,id=char1,path=$VHOST_SOCK_DIR/vhost-user1, pmd_thread_main does not run)

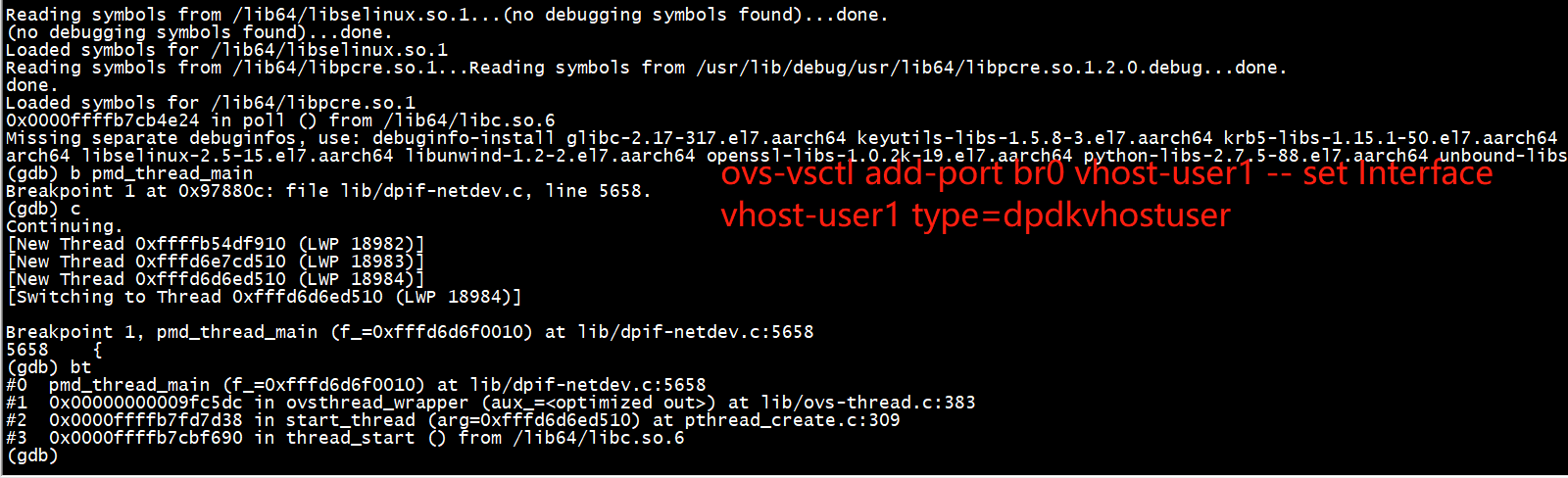

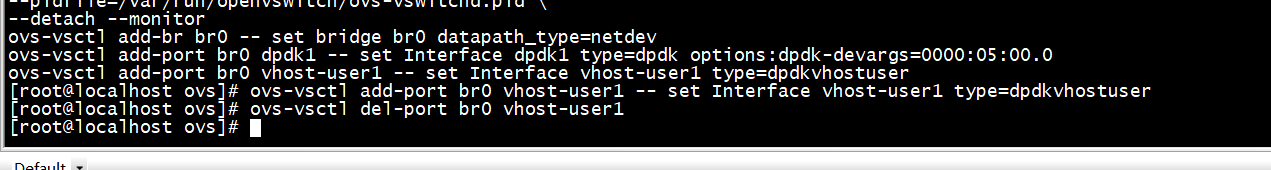

Running ovs-vsctl add-port br0 vhost-user1 -- set Interface vhost-user1 type=dpdkvhostuser triggers pmd_thread_main.

[root@localhost ovs]# ovs-vsctl show
c013fe69-c1a7-40dd-833b-bef8cd04d43e
    Bridge br0
        datapath_type: netdev
        Port br0
            Interface br0
                type: internal
        Port dpdk1
            Interface dpdk1
                type: dpdk
                options: {dpdk-devargs="0000:05:00.0"}
[root@localhost ovs]#

Deleting a port

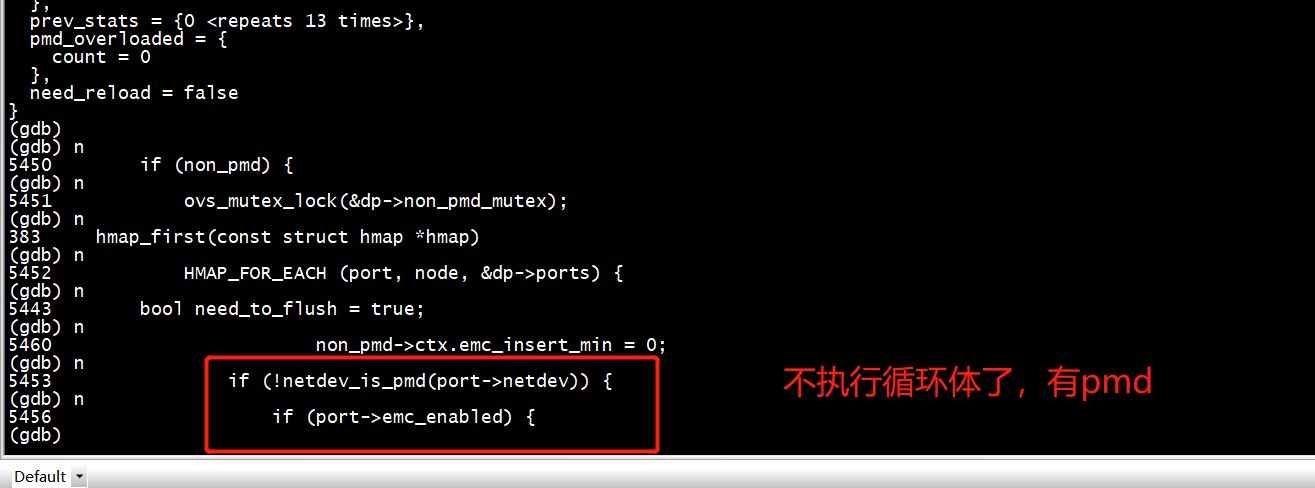

if (!netdev_is_pmd(port->netdev))
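The check above appears to be what divides polling between the main thread and the PMD threads: in the backtraces in these notes, dp_netdev_process_rxq_port is reached both from dpif_netdev_run (main thread) and from pmd_thread_main. The following is a minimal sketch of that split, not the OVS implementation; `toy_port`, `is_pmd`, and `toy_main_thread_run` are hypothetical names introduced only for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for a datapath port. */
struct toy_port {
    int port_no;
    bool is_pmd;          /* Stands in for netdev_is_pmd(port->netdev). */
    int polled_by_main;   /* How many times the main thread polled it. */
};

/* Models the main-thread pass: poll only ports that are NOT PMD ports.
 * PMD ports are left for dedicated polling threads.
 * Returns the number of ports polled in this pass. */
static int
toy_main_thread_run(struct toy_port *ports, size_t n_ports)
{
    int polled = 0;

    assert(ports != NULL || n_ports == 0);
    for (size_t i = 0; i < n_ports; i++) {
        if (!ports[i].is_pmd) {
            ports[i].polled_by_main++;
            polled++;
        }
    }
    return polled;
}
```

With three ports of which one is marked `is_pmd`, a single pass polls the other two and leaves the PMD port untouched.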

(gdb) c

Continuing.

[New Thread 0xffff7c29f910 (LWP 18234)]

Breakpoint 1, dpif_netdev_run (dpif=0x204f78a0) at lib/dpif-netdev.c:5438

5438 {

(gdb) bt

#0 dpif_netdev_run (dpif=0x204f78a0) at lib/dpif-netdev.c:5438

#1 0x000000000097d290 in dpif_run (dpif=<optimized out>) at lib/dpif.c:463

#2 0x0000000000934c68 in type_run (type=type@entry=0x202dd910 "netdev") at ofproto/ofproto-dpif.c:370

#3 0x000000000091eb18 in ofproto_type_run (datapath_type=<optimized out>, datapath_type@entry=0x202dd910 "netdev") at ofproto/ofproto.c:1772

#4 0x000000000090d94c in bridge_run__ () at vswitchd/bridge.c:3242

#5 0x0000000000913480 in bridge_run () at vswitchd/bridge.c:3307

#6 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb) b dp_netdev_process_rxq_port

Breakpoint 2 at 0x97851c: file lib/dpif-netdev.c, line 4441.

(gdb) b pmd_thread_main

Breakpoint 3 at 0x97880c: file lib/dpif-netdev.c, line 5658.

(gdb) c

Continuing.

Breakpoint 2, dp_netdev_process_rxq_port (pmd=pmd@entry=0xffff75f00010, rxq=0x204f7230, port_no=0) at lib/dpif-netdev.c:4441

4441 {

(gdb) bt

#0 dp_netdev_process_rxq_port (pmd=pmd@entry=0xffff75f00010, rxq=0x204f7230, port_no=0) at lib/dpif-netdev.c:4441

#1 0x000000000097911c in dpif_netdev_run (dpif=<optimized out>) at lib/dpif-netdev.c:5469

#2 0x000000000097d290 in dpif_run (dpif=<optimized out>) at lib/dpif.c:463

#3 0x0000000000934c68 in type_run (type=type@entry=0x202dd910 "netdev") at ofproto/ofproto-dpif.c:370

#4 0x000000000091eb18 in ofproto_type_run (datapath_type=<optimized out>, datapath_type@entry=0x202dd910 "netdev") at ofproto/ofproto.c:1772

#5 0x000000000090d94c in bridge_run__ () at vswitchd/bridge.c:3242

#6 0x0000000000913480 in bridge_run () at vswitchd/bridge.c:3307

#7 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb) c

Continuing.

Breakpoint 2, dp_netdev_process_rxq_port (pmd=pmd@entry=0xffff75f00010, rxq=0x2052ec80, port_no=2) at lib/dpif-netdev.c:4441

4441 {

(gdb) bt

#0 dp_netdev_process_rxq_port (pmd=pmd@entry=0xffff75f00010, rxq=0x2052ec80, port_no=2) at lib/dpif-netdev.c:4441

#1 0x000000000097911c in dpif_netdev_run (dpif=<optimized out>) at lib/dpif-netdev.c:5469

#2 0x000000000097d290 in dpif_run (dpif=<optimized out>) at lib/dpif.c:463

#3 0x0000000000934c68 in type_run (type=type@entry=0x202dd910 "netdev") at ofproto/ofproto-dpif.c:370

#4 0x000000000091eb18 in ofproto_type_run (datapath_type=<optimized out>, datapath_type@entry=0x202dd910 "netdev") at ofproto/ofproto.c:1772 ---- executed

#5 0x000000000090d94c in bridge_run__ () at vswitchd/bridge.c:3242

#6 0x0000000000913480 in bridge_run () at vswitchd/bridge.c:3307

#7 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb)

(gdb) n

4451 cycle_timer_start(&pmd->perf_stats, &timer);

(gdb) n

4441 {

(gdb) n

4447 int rem_qlen = 0, *qlen_p = NULL;

(gdb) n

4451 cycle_timer_start(&pmd->perf_stats, &timer);

(gdb) n

4441 {

(gdb) n

4447 int rem_qlen = 0, *qlen_p = NULL;

(gdb) n

4451 cycle_timer_start(&pmd->perf_stats, &timer);

(gdb) n

4453 pmd->ctx.last_rxq = rxq;

(gdb) n

4457 if (pmd_perf_metrics_enabled(pmd) && rxq->is_vhost) {

(gdb) n

4451 cycle_timer_start(&pmd->perf_stats, &timer);

(gdb) n

4457 if (pmd_perf_metrics_enabled(pmd) && rxq->is_vhost) {

(gdb) n

4451 cycle_timer_start(&pmd->perf_stats, &timer);

(gdb) n

4454 dp_packet_batch_init(&batch);

(gdb) set print pretty on

(gdb) p *batch

Structure has no component named operator*.

(gdb) p batch

$1 = {

count = 0,

trunc = false,

do_not_steal = false,

packets = {0xffff00000000, 0xa3505c <format_log_message+768>, 0x0, 0x0, 0x20, 0x1, 0xbfe3da, 0x980414 <ds_put_cstr+48>, 0xffffe22505a0, 0xffffe22505a0, 0xffffe2250570, 0xffffff80ffffffd8, 0xffffe22505a0, 0xffffe22505a0, 0xffffe22502a0, 0xa28468 <xclock_gettime+12>, 0xffffe22502b0, 0xa28560 <time_timespec__+212>, 0xffffe2250300, 0xa28638 <time_msec+28>, 0x202dd910, 0x204f2150, 0xffffe2250300, 0x9fb848 <ovs_mutex_lock_at+32>, 0xffff7c330f58, 0xbd4308, 0xffff00000000, 0xffff7e2a0c64 <malloc+88>, 0xffffe2250320, 0x978f74 <dpif_netdev_run+128>, 0xffffe2250320, 0x979100 <dpif_netdev_run+524>}

}

(gdb)

dp_netdev_process_rxq_port

(gdb) bt

#0 dp_netdev_process_rxq_port (pmd=pmd@entry=0xfffd546d0010, rxq=0x11c8c5d0, port_no=2) at lib/dpif-netdev.c:4480

#1 0x0000000000978a24 in pmd_thread_main (f_=0xfffd546d0010) at lib/dpif-netdev.c:5731

#2 0x00000000009fc5dc in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:383

#3 0x0000ffff95e37d38 in start_thread (arg=0xfffd4fffd510) at pthread_create.c:309

#4 0x0000ffff95b1f690 in thread_start () from /lib64/libc.so.6

(gdb) c

Continuing.

Breakpoint 1, dp_netdev_process_rxq_port (pmd=pmd@entry=0xfffd546d0010, rxq=0x11c8c5d0, port_no=2) at lib/dpif-netdev.c:4480

4480 dp_netdev_input(pmd, &batch, port_no);

(gdb)

dp_netdev_process_rxq_port(struct dp_netdev_pmd_thread *pmd,

struct netdev_rxq *rx,

odp_port_t port_no)

{

struct dp_packet_batch batch;

int error;

dp_packet_batch_init(&batch);

cycles_count_start(pmd);

/* Receive packets from rx into batch by calling netdev_class->rxq_recv. */

error = netdev_rxq_recv(rx, &batch);

cycles_count_end(pmd, PMD_CYCLES_POLLING);

if (!error) {

*recirc_depth_get() = 0;

cycles_count_start(pmd);

/* Hand the packets in batch to the datapath for processing. */

dp_netdev_input(pmd, &batch, port_no);

cycles_count_end(pmd, PMD_CYCLES_PROCESSING);

}

...

}

Instances of netdev_class include NETDEV_DPDK_CLASS, NETDEV_DUMMY_CLASS, NETDEV_BSD_CLASS, and NETDEV_LINUX_CLASS.

netdev_rxq_recv dispatches through netdev_class->rxq_recv, which for a dpdkvhostuser port resolves to netdev_dpdk_vhost_rxq_recv.

netdev_rxq_recv(struct netdev_rxq *rx, struct dp_packet_batch *batch,

int *qfill)

{

int retval;

retval = rx->netdev->netdev_class->rxq_recv(rx, batch, qfill);

if (!retval) {

COVERAGE_INC(netdev_received);

} else {

batch->count = 0;

}

return retval;

}
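The dispatch in netdev_rxq_recv is a function-pointer table lookup: the rx queue points at its class, and the class supplies the rxq_recv hook. Below is a reduced sketch of that pattern under stated assumptions; the `toy_*` types, `TOY_EAGAIN`, and the `pending` counter are hypothetical stand-ins, not OVS definitions.

```c
#include <assert.h>
#include <stddef.h>

struct toy_batch { int count; };
struct toy_rxq;

/* Hypothetical miniature of a netdev class: a type name plus a receive hook. */
struct toy_netdev_class {
    const char *type;
    int (*rxq_recv)(struct toy_rxq *rx, struct toy_batch *batch);
};

struct toy_rxq {
    const struct toy_netdev_class *cls;
    int pending;   /* Packets waiting on this queue. */
};

#define TOY_EAGAIN 11  /* Illustrative "nothing to read" error code. */

/* Class-specific receive hook: drains whatever is pending. */
static int
toy_vhost_rxq_recv(struct toy_rxq *rx, struct toy_batch *batch)
{
    if (rx->pending == 0) {
        return TOY_EAGAIN;
    }
    batch->count = rx->pending;
    rx->pending = 0;
    return 0;
}

static const struct toy_netdev_class toy_vhost_class = {
    .type = "dpdkvhostuser",
    .rxq_recv = toy_vhost_rxq_recv,
};

/* Same shape as netdev_rxq_recv() above: dispatch through the class,
 * then zero the batch if the hook reported an error. */
static int
toy_rxq_recv(struct toy_rxq *rx, struct toy_batch *batch)
{
    int retval;

    assert(rx && rx->cls && rx->cls->rxq_recv);
    retval = rx->cls->rxq_recv(rx, batch);
    if (retval) {
        batch->count = 0;
    }
    return retval;
}
```

The caller never names the vhost function directly, which is why the same dp_netdev_process_rxq_port code path serves every port type.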

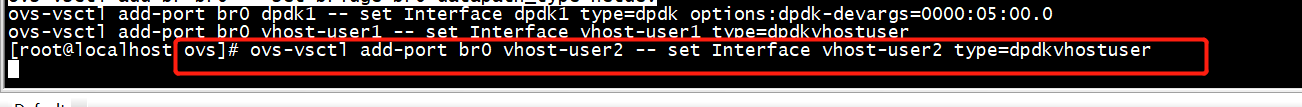

ovs-vsctl add-port br0 vhost-user1 -- set Interface vhost-user1 type=dpdkvhostuser

static const struct netdev_class dpdk_vhost_class = {

.type = "dpdkvhostuser",

NETDEV_DPDK_CLASS_COMMON,

.construct = netdev_dpdk_vhost_construct,

.destruct = netdev_dpdk_vhost_destruct,

.send = netdev_dpdk_vhost_send,

.get_carrier = netdev_dpdk_vhost_get_carrier,

.get_stats = netdev_dpdk_vhost_get_stats,

.get_custom_stats = netdev_dpdk_get_sw_custom_stats,

.get_status = netdev_dpdk_vhost_user_get_status,

.reconfigure = netdev_dpdk_vhost_reconfigure,

.rxq_recv = netdev_dpdk_vhost_rxq_recv,

.rxq_enabled = netdev_dpdk_vhost_rxq_enabled,

};

dp_netdev_input

Packet processing flow in the userspace datapath:

pmd_thread_main or dpif_netdev_run

-> dp_netdev_process_rxq_port

-> dp_netdev_input

-> fast_path_processing

-> handle_packet_upcall

From handle_packet_upcall there are two branches:

(1) dp_netdev_upcall -> upcall_cb (invokes the registered callback)

(2) dp_netdev_execute_actions -> odp_execute_actions -> dp_execute_cb -> netdev_send

netdev_dpdk_rxq_recv

#1 0x00000000007d2252 in rte_eth_rx_burst (nb_pkts=32, rx_pkts=0x7f3e4bffe7b0, queue_id=0, port_id=0 '\000') at /data1/build/dpdk-stable- rte_eth_rx_burst /x86_64-native-linuxapp-gcc/include/rte_ethdev.h:2774

#2 netdev_dpdk_rxq_recv (rxq=<optimized out>, batch=0x7f3e4bffe7a0) at lib/netdev-dpdk.c:1664

#3 0x000000000072e571 in netdev_rxq_recv (rx=rx@entry=0x7f3e5cc4a680, batch=batch@entry=0x7f3e4bffe7a0) at lib/netdev.c:701

#4 0x000000000070ab0e in dp_netdev_process_rxq_port (pmd=pmd@entry=0x29e5e20, rx=0x7f3e5cc4a680, port_no=1) at lib/dpif-netdev.c:3114

#5 0x000000000070ad76 in pmd_thread_main (f_=<optimized out>) at lib/dpif-netdev.c:3854

#6 0x000000000077e4b4 in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:348

#7 0x00007f3e5fe07dc5 in start_thread (arg=0x7f3e4bfff700) at pthread_create.c:308

#8 0x00007f3e5f3eb73d in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:113

dpdk_init

(gdb) bt

#0 0x00007f3d5f5c31d7 in raise () from /lib64/libc.so.6

#1 0x00007f3d5f5c48c8 in abort () from /lib64/libc.so.6

#2 0x00007f3d61184d95 in __rte_panic (

funcname=funcname@entry=0x7f3d614846b0 <__func__.9925> "rte_eal_init",

format=format@entry=0x7f3d61483e7c "Cannot init memory\n%.0s")

at /usr/src/debug/openvswitch-2.6.1/dpdk16.11/lib/librte_eal/linuxapp/eal/eal_debug.c:86

#3 0x00007f3d6128973a in rte_eal_init (argc=argc@entry=5, argv=<optimized out>)

at /usr/src/debug/openvswitch-2.6.1/dpdk16.11/lib/librte_eal/linuxapp/eal/eal.c:814

#4 0x00007f3d61416516 in dpdk_init__

(ovs_other_config=ovs_other_config@entry=0x7f3d62d497f8)

at lib/netdev-dpdk.c:3486

#5 0x00007f3d61416b1c in dpdk_init (ovs_other_config=0x7f3d62d497f8)

at lib/netdev-dpdk.c:3541

#6 0x00007f3d613030e2 in bridge_run () at vswitchd/bridge.c:2918

#7 0x00007f3d6118828d in main (argc=10, argv=0x7ffcc105a128) at vswitchd/ovs-vswitchd.c:112

(gdb)

#0 virtio_recv_mergeable_pkts (rx_queue=0x7fbe181bcc00, rx_pkts=0x7fbdd1ffa850,

nb_pkts=32)

at /usr/src/debug/openvswitch-2.5.0/dpdk2.2.0/drivers/net/virtio/virtio_rxtx.c:684

684 nb_used = VIRTQUEUE_NUSED(rxvq);

Missing separate debuginfos, use: debuginfo-install glibc-2.17-156.el7.x86_64 keyutils-libs-1.5.8-3.el7.x86_64 krb5-libs-1.14.1-20.el7.x86_64 libcom_err-1.42.9-9.el7.x86_64 libgcc-4.8.5-9.el7.x86_64 libselinux-2.5-4.el7.x86_64 openssl-libs-1.0.1e-58.el7.x86_64 pcre-8.32-15.el7_2.1.x86_64 zlib-1.2.7-17.el7.x86_64

(gdb) bt

#0 virtio_recv_mergeable_pkts (rx_queue=0x7fbe181bcc00, rx_pkts=0x7fbdd1ffa850,

nb_pkts=32)

at /usr/src/debug/openvswitch-2.5.0/dpdk2.2.0/drivers/net/virtio/virtio_rxtx.c:684

#1 0x00007fbe1d61cc6a in rte_eth_rx_burst (nb_pkts=32, rx_pkts=0x7fbdd1ffa850,

queue_id=0,

port_id=0 '\000')

at /usr/src/debug/openvswitch-2.5.0/dpdk-2.2.0/x86_64-native-linuxapp-gcc/include/rte_ethdev.h:2510

#2 netdev_dpdk_rxq_recv (rxq_=<optimized out>, packets=0x7fbdd1ffa850,

c=0x7fbdd1ffa84c)

at lib/netdev-dpdk.c:1092

#3 0x00007fbe1d592351 in netdev_rxq_recv (rx=<optimized out>,

buffers=buffers@entry=0x7fbdd1ffa850,

cnt=cnt@entry=0x7fbdd1ffa84c) at lib/netdev.c:654

#4 0x00007fbe1d572536 in dp_netdev_process_rxq_port (pmd=pmd@entry=0x7fbe1ea0e730,

rxq=<optimized out>, port=<optimized out>, port=<optimized out>) at lib/dpif-netdev.c:2594

#5 0x00007fbe1d5728b9 in pmd_thread_main (f_=0x7fbe1ea0e730) at lib/dpif-netdev.c:2725

#6 0x00007fbe1d5d48b6 in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:340

#7 0x00007fbe1c733dc5 in start_thread () from /lib64/libpthread.so.0

#8 0x00007fbe1bb2c73d in clone () from /lib64/libc.so.6

(gdb) bt

#0 0x0000ffff95b14e24 in poll () from /lib64/libc.so.6

#1 0x0000000000a28780 in time_poll (pollfds=pollfds@entry=0x11c8c820, n_pollfds=11, handles=handles@entry=0x0, timeout_when=453308105, elapsed=elapsed@entry=0xffffe8e999dc) at lib/timeval.c:326

#2 0x0000000000a12408 in poll_block () at lib/poll-loop.c:364

#3 0x00000000004246c8 in main (argc=11, argv=0xffffe8e99be8) at vswitchd/ovs-vswitchd.c:138

(gdb) b rte_vhost_enqueue_burst

Breakpoint 1 at 0x90581c

(gdb) b rte_vhost_dequeue_burst

Breakpoint 2 at 0x905a3c

(gdb) c

Continuing.

[New Thread 0xffff9333f910 (LWP 14604)]

[New Thread 0xfffd4d62d510 (LWP 14605)]

[Thread 0xffff9333f910 (LWP 14604) exited]

[New Thread 0xffff9333f910 (LWP 14620)]

[Thread 0xffff9333f910 (LWP 14620) exited]

[New Thread 0xffff9333f910 (LWP 14621)]

[Thread 0xffff9333f910 (LWP 14621) exited]

[New Thread 0xffff9333f910 (LWP 14636)]

[Thread 0xffff9333f910 (LWP 14636) exited]

[New Thread 0xffff9333f910 (LWP 14646)]

[Switching to Thread 0xfffd4ef1d510 (LWP 14393)]

Breakpoint 2, 0x0000000000905a3c in rte_vhost_dequeue_burst ()

(gdb) bt

#0 0x0000000000905a3c in rte_vhost_dequeue_burst ()

#1 0x0000000000a62d04 in netdev_dpdk_vhost_rxq_recv (rxq=<optimized out>, batch=0xfffd4ef1ca80, qfill=0x0) at lib/netdev-dpdk.c:2442

#2 0x00000000009a440c in netdev_rxq_recv (rx=<optimized out>, batch=batch@entry=0xfffd4ef1ca80, qfill=<optimized out>) at lib/netdev.c:726

#3 0x00000000009785bc in dp_netdev_process_rxq_port (pmd=pmd@entry=0xfffd4ef20010, rxq=0x11efe540, port_no=3) at lib/dpif-netdev.c:4461

#4 0x0000000000978a24 in pmd_thread_main (f_=0xfffd4ef20010) at lib/dpif-netdev.c:5731

#5 0x00000000009fc5dc in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:383

#6 0x0000ffff95e37d38 in start_thread (arg=0xfffd4ef1d510) at pthread_create.c:309

#7 0x0000ffff95b1f690 in thread_start () from /lib64/libc.so.6

(gdb) c

Continuing.

[Switching to Thread 0xfffd4fffd510 (LWP 14391)]

Breakpoint 1, 0x000000000090581c in rte_vhost_enqueue_burst ()

(gdb) bt

#0 0x000000000090581c in rte_vhost_enqueue_burst ()

#1 0x0000000000a60b90 in __netdev_dpdk_vhost_send (netdev=0x19fd81a40, qid=<optimized out>, pkts=pkts@entry=0xfffd3019daf0, cnt=32) at lib/netdev-dpdk.c:2662

#2 0x0000000000a6198c in netdev_dpdk_vhost_send (netdev=<optimized out>, qid=<optimized out>, batch=0xfffd3019dae0, concurrent_txq=<optimized out>) at lib/netdev-dpdk.c:2893

#3 0x00000000009a478c in netdev_send (netdev=0x19fd81a40, qid=qid@entry=0, batch=batch@entry=0xfffd3019dae0, concurrent_txq=concurrent_txq@entry=true) at lib/netdev.c:893

#4 0x000000000096fae8 in dp_netdev_pmd_flush_output_on_port (pmd=pmd@entry=0xfffd546d0010, p=p@entry=0xfffd3019dab0) at lib/dpif-netdev.c:4391

#5 0x000000000096fdfc in dp_netdev_pmd_flush_output_packets (pmd=pmd@entry=0xfffd546d0010, force=force@entry=false) at lib/dpif-netdev.c:4431

#6 0x00000000009787d8 in dp_netdev_pmd_flush_output_packets (force=false, pmd=0xfffd546d0010) at lib/dpif-netdev.c:4501

#7 dp_netdev_process_rxq_port (pmd=pmd@entry=0xfffd546d0010, rxq=0x11c8c5d0, port_no=2) at lib/dpif-netdev.c:4486

#8 0x0000000000978a24 in pmd_thread_main (f_=0xfffd546d0010) at lib/dpif-netdev.c:5731

#9 0x00000000009fc5dc in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:383

#10 0x0000ffff95e37d38 in start_thread (arg=0xfffd4fffd510) at pthread_create.c:309

#11 0x0000ffff95b1f690 in thread_start () from /lib64/libc.so.6

(gdb) c

Continuing.

Breakpoint 1, 0x000000000090581c in rte_vhost_enqueue_burst ()

(gdb) bt

#0 0x000000000090581c in rte_vhost_enqueue_burst ()

#1 0x0000000000a60b90 in __netdev_dpdk_vhost_send (netdev=0x19fd81a40, qid=<optimized out>, pkts=pkts@entry=0xfffd3019daf0, cnt=32) at lib/netdev-dpdk.c:2662

#2 0x0000000000a6198c in netdev_dpdk_vhost_send (netdev=<optimized out>, qid=<optimized out>, batch=0xfffd3019dae0, concurrent_txq=<optimized out>) at lib/netdev-dpdk.c:2893

#3 0x00000000009a478c in netdev_send (netdev=0x19fd81a40, qid=qid@entry=0, batch=batch@entry=0xfffd3019dae0, concurrent_txq=concurrent_txq@entry=true) at lib/netdev.c:893

#4 0x000000000096fae8 in dp_netdev_pmd_flush_output_on_port (pmd=pmd@entry=0xfffd546d0010, p=p@entry=0xfffd3019dab0) at lib/dpif-netdev.c:4391

#5 0x000000000096fdfc in dp_netdev_pmd_flush_output_packets (pmd=pmd@entry=0xfffd546d0010, force=force@entry=false) at lib/dpif-netdev.c:4431

#6 0x00000000009787d8 in dp_netdev_pmd_flush_output_packets (force=false, pmd=0xfffd546d0010) at lib/dpif-netdev.c:4501

#7 dp_netdev_process_rxq_port (pmd=pmd@entry=0xfffd546d0010, rxq=0x11c8c5d0, port_no=2) at lib/dpif-netdev.c:4486

#8 0x0000000000978a24 in pmd_thread_main (f_=0xfffd546d0010) at lib/dpif-netdev.c:5731

#9 0x00000000009fc5dc in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:383

#10 0x0000ffff95e37d38 in start_thread (arg=0xfffd4fffd510) at pthread_create.c:309

#11 0x0000ffff95b1f690 in thread_start () from /lib64/libc.so.6

dp_netdev_process_rxq_port(struct dp_netdev_pmd_thread *pmd,

struct dp_netdev_rxq *rxq,

odp_port_t port_no)

{

struct pmd_perf_stats *s = &pmd->perf_stats;

struct dp_packet_batch batch;

struct cycle_timer timer;

int error;

int batch_cnt = 0;

int rem_qlen = 0, *qlen_p = NULL;

uint64_t cycles;

/* Measure duration for polling and processing rx burst. */

cycle_timer_start(&pmd->perf_stats, &timer);

pmd->ctx.last_rxq = rxq;

dp_packet_batch_init(&batch);

/* Fetch the rx queue length only for vhostuser ports. */

if (pmd_perf_metrics_enabled(pmd) && rxq->is_vhost) {

qlen_p = &rem_qlen;

}

error = netdev_rxq_recv(rxq->rx, &batch, qlen_p); //dequeue

if (!error) {

/* At least one packet received. */

*recirc_depth_get() = 0;

pmd_thread_ctx_time_update(pmd);

batch_cnt = dp_packet_batch_size(&batch);

if (pmd_perf_metrics_enabled(pmd)) {

/* Update batch histogram. */

s->current.batches++;

histogram_add_sample(&s->pkts_per_batch, batch_cnt);

/* Update the maximum vhost rx queue fill level. */

if (rxq->is_vhost && rem_qlen >= 0) {

uint32_t qfill = batch_cnt + rem_qlen;

if (qfill > s->current.max_vhost_qfill) {

s->current.max_vhost_qfill = qfill;

}

}

}

/* Process packet batch. */

dp_netdev_input(pmd, &batch, port_no); // flow processing

/* Assign processing cycles to rx queue. */

cycles = cycle_timer_stop(&pmd->perf_stats, &timer);

dp_netdev_rxq_add_cycles(rxq, RXQ_CYCLES_PROC_CURR, cycles);

dp_netdev_pmd_flush_output_packets(pmd, false); // enqueue

} else {

/* Discard cycles. */

cycle_timer_stop(&pmd->perf_stats, &timer);

if (error != EAGAIN && error != EOPNOTSUPP) {

static struct vlog_rate_limit rl = VLOG_RATE_LIMIT_INIT(1, 5);

VLOG_ERR_RL(&rl, "error receiving data from %s: %s",

netdev_rxq_get_name(rxq->rx), ovs_strerror(error));

}

}

pmd->ctx.last_rxq = NULL;

return batch_cnt;

}
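The function above has three phases, marked by the inline comments: dequeue a burst, run flow processing on the batch, then flush output packets (the enqueue seen in the rte_vhost_enqueue_burst backtrace). The sketch below models only that control flow with plain integer "packets"; every `toy_*` name is hypothetical, and the burst size of 32 is taken from the cnt=32 / nb_pkts=32 values in the backtraces rather than from the OVS source.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_BURST 32    /* Burst size seen as cnt=32 / nb_pkts=32 above. */
#define TOY_QCAP  256   /* Arbitrary capacity for this sketch. */

/* Hypothetical fixed-size queue of integer "packets". */
struct toy_queue {
    int pkts[TOY_QCAP];
    int head, tail;
};

static int
toy_queue_len(const struct toy_queue *q)
{
    return q->tail - q->head;
}

static void
toy_enqueue(struct toy_queue *q, int pkt)
{
    assert(q->tail < TOY_QCAP);
    q->pkts[q->tail++] = pkt;
}

/* Dequeue up to TOY_BURST packets into 'batch'; returns the number taken. */
static int
toy_dequeue_burst(struct toy_queue *q, int *batch)
{
    int n = 0;

    while (n < TOY_BURST && q->head < q->tail) {
        batch[n++] = q->pkts[q->head++];
    }
    return n;
}

/* One poll iteration: dequeue, process, flush. Returns the batch size,
 * mirroring the batch_cnt return value of the function above. */
static int
toy_process_rxq(struct toy_queue *rxq, struct toy_queue *txq)
{
    int batch[TOY_BURST];
    int cnt = toy_dequeue_burst(rxq, batch);    /* dequeue */

    for (int i = 0; i < cnt; i++) {
        batch[i] += 1000;                       /* stand-in for flow processing */
    }
    for (int i = 0; i < cnt; i++) {
        toy_enqueue(txq, batch[i]);             /* flush / enqueue */
    }
    return cnt;
}
```

Feeding 40 packets into the receive queue takes two iterations (32, then 8); a third returns 0, which corresponds to the empty-poll case.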

Running ovs-vsctl add-port br0 vhost-user1 -- set Interface vhost-user1 type=dpdkvhostuser produces:

[New Thread 0xffff9333f910 (LWP 14604)]

[New Thread 0xfffd4d62d510 (LWP 14605)]

[Thread 0xffff9333f910 (LWP 14604) exited]

[New Thread 0xffff9333f910 (LWP 14620)]

[Thread 0xffff9333f910 (LWP 14620) exited]

[New Thread 0xffff9333f910 (LWP 14621)]

[Thread 0xffff9333f910 (LWP 14621) exited]

[New Thread 0xffff9333f910 (LWP 14636)]

[Thread 0xffff9333f910 (LWP 14636) exited]

[New Thread 0xffff9333f910 (LWP 14646)]

[Switching to Thread 0xfffd4ef1d510 (LWP 14393)]

netdev_open

could not create netdev dpdk1 of unknown type dpdk
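The message reads as a failed lookup of the type string "dpdk" among the registered netdev classes. The notes do not say why the lookup failed; one plausible cause (an assumption) is that the DPDK netdev classes were never registered in that ovs-vswitchd instance. The sketch below shows the general registry pattern only; `toy_provider`, `toy_register`, `toy_lookup`, and `toy_open` are hypothetical and do not reproduce the OVS functions.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define TOY_MAX_PROVIDERS 8

/* Hypothetical provider record keyed by its type string. */
struct toy_provider {
    const char *type;
};

static const struct toy_provider *toy_registry[TOY_MAX_PROVIDERS];
static size_t toy_n_providers;

/* Returns the provider registered under 'type', or NULL if none. */
static const struct toy_provider *
toy_lookup(const char *type)
{
    for (size_t i = 0; i < toy_n_providers; i++) {
        if (!strcmp(toy_registry[i]->type, type)) {
            return toy_registry[i];
        }
    }
    return NULL;
}

/* Registers 'p'. Returns 0 on success, -1 if the table is full or the
 * type is already registered. */
static int
toy_register(const struct toy_provider *p)
{
    assert(p && p->type);
    if (toy_n_providers == TOY_MAX_PROVIDERS || toy_lookup(p->type)) {
        return -1;
    }
    toy_registry[toy_n_providers++] = p;
    return 0;
}

/* Opens device 'name' of the given type. Returns 0 on success, -1 when
 * the type is unknown (the situation the log line describes). */
static int
toy_open(const char *name, const char *type)
{
    (void) name;   /* The name does not affect the type lookup. */
    return toy_lookup(type) ? 0 : -1;
}
```

In this model, opening "dpdk1" with type "dpdk" fails until a provider with that type string has been registered.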

ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev

Breakpoint 1, netdev_open (name=0x11f01c40 "br0", type=type@entry=0x0, netdevp=netdevp@entry=0xffffe8e996c8) at lib/netdev.c:372

372 {

(gdb) bt

#0 netdev_open (name=0x11f01c40 "br0", type=type@entry=0x0, netdevp=netdevp@entry=0xffffe8e996c8) at lib/netdev.c:372

#1 0x000000000094f8a0 in xbridge_addr_create (xbridge=0x11c95dc0, xbridge=0x11c95dc0) at ofproto/ofproto-dpif-xlate.c:900

#2 xlate_ofproto_set (ofproto=ofproto@entry=0x11eb6a80, name=0x11c88a50 "br0", dpif=0x11c8cb90, ml=0x11ecc400, stp=0x0, rstp=0x0, ms=0x0, mbridge=0x11ec9e70, sflow=sflow@entry=0x0, ipfix=ipfix@entry=0x0,

netflow=netflow@entry=0x0, forward_bpdu=forward_bpdu@entry=false, has_in_band=false, support=0x11c51350) at ofproto/ofproto-dpif-xlate.c:1266

#3 0x000000000093555c in type_run (type=type@entry=0x11c96dc0 "netdev") at ofproto/ofproto-dpif.c:481

#4 0x000000000091eb18 in ofproto_type_run (datapath_type=<optimized out>, datapath_type@entry=0x11c96dc0 "netdev") at ofproto/ofproto.c:1772

#5 0x000000000090d94c in bridge_run__ () at vswitchd/bridge.c:3242

#6 0x0000000000913480 in bridge_run () at vswitchd/bridge.c:3307

#7 0x000000000042469c in main (argc=11, argv=0xffffe8e99be8) at vswitchd/ovs-vswitchd.c:127

OVS has two datapath_type values, netdev and system: netdev means packets are handled in userspace, and system means they are handled in the kernel.

(gdb) b type_run

Breakpoint 1 at 0x934c28: file ofproto/ofproto-dpif.c, line 360.

(gdb) c

Continuing.

Breakpoint 1, type_run (type=type@entry=0x20530510 "netdev") at ofproto/ofproto-dpif.c:360

360 {

(gdb) list

355 return NULL;

356 }

357

358 static int

359 type_run(const char *type)

360 {

361 struct dpif_backer *backer;

362

363 backer = shash_find_data(&all_dpif_backers, type);

364 if (!backer) {

(gdb) n

363 backer = shash_find_data(&all_dpif_backers, type);

(gdb) n

360 {

(gdb) n

363 backer = shash_find_data(&all_dpif_backers, type);

(gdb) n

360 {

(gdb) n

363 backer = shash_find_data(&all_dpif_backers, type);

(gdb) n

364 if (!backer) {

(gdb) n

370 if (dpif_run(backer->dpif)) {

(gdb) set print pretty on

(gdb) p *backer

$1 = {

type = 0x204f2130 "netdev",

refcount = 1,

dpif = 0x204f78a0,

udpif = 0x204f7940,

odp_to_ofport_lock = {

lock = {

__data = {

__lock = 0,

__nr_readers = 0,

__readers_wakeup = 0,

__writer_wakeup = 0,

__nr_readers_queued = 0,

__nr_writers_queued = 0,

__writer = 0,

__shared = 0,

__pad1 = 0,

__pad2 = 0,

__flags = 1

},

__size = '\000' <repeats 48 times>, "\001\000\000\000\000\000\000",

__align = 0

},

where = 0xbc50c0 "<unlocked>"

},

odp_to_ofport_map = {

buckets = 0x202acc60,

one = 0x0,

mask = 3,

n = 2

},

tnl_backers = {

map = {

buckets = 0x204f21d8,

one = 0x0,

mask = 0,

n = 0

}

},

need_revalidate = 0,

recv_set_enable = true,

meter_ids = 0x20504710,

tp_ids = 0x20508e40,

ct_zones = {

impl = {

p = 0x10f2a40 <empty_cmap>

}

},

ct_tps = {

buckets = 0x204f2218,

one = 0x0,

mask = 0,

n = 0

},

ct_tp_kill_list = {

prev = 0x204f2230,

next = 0x204f2230

},

dp_version_string = 0x2050c9a0 "<built-in>",

bt_support = {

masked_set_action = true,

tnl_push_pop = true,

ufid = true,

trunc = true,

clone = true,

sample_nesting = 10,

ct_eventmask = true,

ct_clear = true,

max_hash_alg = 1,

check_pkt_len = true,

ct_timeout = true,

explicit_drop_action = true,

odp = {

max_vlan_headers = 1,

max_mpls_depth = 3,

recirc = true,

ct_state = true,

ct_zone = true,

ct_mark = true,

ct_label = true,

ct_state_nat = true,

ct_orig_tuple = true,

ct_orig_tuple6 = true,

nd_ext = true

}

},

rt_support = {

masked_set_action = true,

tnl_push_pop = true,

ufid = true,

trunc = true,

clone = true,

sample_nesting = 10,

ct_eventmask = true,

ct_clear = true,

max_hash_alg = 1,

check_pkt_len = true,

ct_timeout = true,

explicit_drop_action = true,

odp = {

max_vlan_headers = 1,

max_mpls_depth = 3,

recirc = true,

ct_state = true,

ct_zone = true,

ct_mark = true,

ct_label = true,

ct_state_nat = true,

ct_orig_tuple = true,

ct_orig_tuple6 = true,

nd_ext = true

}

},

tnl_count = {

count = 0

}

}

(gdb)

(gdb)

(gdb) p backer->dpif

$2 = (struct dpif *) 0x204f78a0

(gdb) p *(backer->dpif)

$3 = {

dpif_class = 0xbd2650 <dpif_netdev_class>,

base_name = 0x204f73e0 "ovs-netdev",

full_name = 0x204f78e0 "netdev@ovs-netdev",

netflow_engine_type = 47 '/',

netflow_engine_id = 209 '\321',

current_ms = 464123586

}

(gdb)

dpif_class

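dpif_class is the datapath interface implementation class; each kind of datapath has a corresponding implementation instance. There are two dpif implementation classes, dpif_netlink_class and dpif_netdev_class, which correspond to the system and netdev datapath types. For example, DPDK uses the netdev datapath type, whose implementation instance is dpif_netdev_class.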
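The relationship between a datapath type string, its class, and the run hook reached through dpif_run can be sketched as follows. This is a simplified model, not the OVS code: the `toy_*` names and the counter increments are hypothetical, and the fallback to "system" for an empty type follows the comment on the `type` member of struct dpif_class quoted in these notes.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct toy_dpif;

/* Hypothetical miniature of a dpif class: type name plus a run hook. */
struct toy_dpif_class {
    const char *type;
    void (*run)(struct toy_dpif *dpif);
};

struct toy_dpif {
    const struct toy_dpif_class *dpif_class;
    int runs;   /* Lets the caller observe which hook ran. */
};

static void toy_netlink_run(struct toy_dpif *d) { d->runs += 1; }
static void toy_netdev_run(struct toy_dpif *d)  { d->runs += 100; }

static const struct toy_dpif_class toy_classes[] = {
    { "system", toy_netlink_run },   /* Kernel datapath. */
    { "netdev", toy_netdev_run },    /* Userspace datapath. */
};

/* Maps a datapath type to its class; an empty or NULL type is treated
 * as "system". Returns NULL for an unknown type. */
static const struct toy_dpif_class *
toy_class_for_type(const char *type)
{
    if (!type || !type[0]) {
        type = "system";
    }
    for (size_t i = 0; i < sizeof toy_classes / sizeof toy_classes[0]; i++) {
        if (!strcmp(toy_classes[i].type, type)) {
            return &toy_classes[i];
        }
    }
    return NULL;
}

/* Same idea as dpif_run(): call the class's run hook if it has one. */
static void
toy_dpif_run(struct toy_dpif *d)
{
    assert(d && d->dpif_class);
    if (d->dpif_class->run) {
        d->dpif_class->run(d);
    }
}
```

This is why the type_run backtrace for "netdev" lands in dpif_netdev_run: the class pointer stored in the dpif selects the hook.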

struct dpif {

const struct dpif_class *dpif_class;

char *base_name;

char *full_name;

uint8_t netflow_engine_type;

uint8_t netflow_engine_id;

long long int current_ms;

};

struct dpif_class {

/* Type of dpif in this class, e.g. "system", "netdev", etc.

*

* One of the providers should supply a "system" type, since this is

* the type assumed if no type is specified when opening a dpif. */

const char *type;

/* Enumerates the names of all known created datapaths, if possible, into

* 'all_dps'. The caller has already initialized 'all_dps' and other dpif

* classes might already have added names to it.

*

* This is used by the vswitch at startup, so that it can delete any

* datapaths that are not configured.

*

* Some kinds of datapaths might not be practically enumerable, in which

* case this function may be a null pointer. */

int (*enumerate)(struct sset *all_dps);

/* Returns the type to pass to netdev_open() when a dpif of class

* 'dpif_class' has a port of type 'type', for a few special cases

* when a netdev type differs from a port type. For example, when

* using the userspace datapath, a port of type "internal" needs to

* be opened as "tap".

*

* Returns either 'type' itself or a string literal, which must not

* be freed. */

const char *(*port_open_type)(const struct dpif_class *dpif_class,

const char *type);

/* Attempts to open an existing dpif called 'name', if 'create' is false,

* or to open an existing dpif or create a new one, if 'create' is true.

*

* 'dpif_class' is the class of dpif to open.

*

* If successful, stores a pointer to the new dpif in '*dpifp', which must

* have class 'dpif_class'. On failure there are no requirements on what

* is stored in '*dpifp'. */

int (*open)(const struct dpif_class *dpif_class,

const char *name, bool create, struct dpif **dpifp);

/* Closes 'dpif' and frees associated memory. */

void (*close)(struct dpif *dpif);

/* Attempts to destroy the dpif underlying 'dpif'.

*

* If successful, 'dpif' will not be used again except as an argument for

* the 'close' member function. */

int (*destroy)(struct dpif *dpif);

/* Performs periodic work needed by 'dpif', if any is necessary. */

void (*run)(struct dpif *dpif);

/* Arranges for poll_block() to wake up if the "run" member function needs

* to be called for 'dpif'. */

void (*wait)(struct dpif *dpif);

/* Retrieves statistics for 'dpif' into 'stats'. */

int (*get_stats)(const struct dpif *dpif, struct dpif_dp_stats *stats);

/* Adds 'netdev' as a new port in 'dpif'. If '*port_no' is not

* UINT32_MAX, attempts to use that as the port's port number.

*

* If port is successfully added, sets '*port_no' to the new port's

* port number. Returns EBUSY if caller attempted to choose a port

* number, and it was in use. */

int (*port_add)(struct dpif *dpif, struct netdev *netdev,

uint32_t *port_no);

/* Removes port numbered 'port_no' from 'dpif'. */

int (*port_del)(struct dpif *dpif, uint32_t port_no);

/* Queries 'dpif' for a port with the given 'port_no' or 'devname'.

* If 'port' is not null, stores information about the port into

* '*port' if successful.

*

* If 'port' is not null, the caller takes ownership of data in

* 'port' and must free it with dpif_port_destroy() when it is no

* longer needed. */

int (*port_query_by_number)(const struct dpif *dpif, uint32_t port_no,

struct dpif_port *port);

int (*port_query_by_name)(const struct dpif *dpif, const char *devname,

struct dpif_port *port);

/* Returns one greater than the largest port number accepted in flow

* actions. */

int (*get_max_ports)(const struct dpif *dpif);

/* Returns the Netlink PID value to supply in OVS_ACTION_ATTR_USERSPACE

* actions as the OVS_USERSPACE_ATTR_PID attribute's value, for use in

* flows whose packets arrived on port 'port_no'.

*

* A 'port_no' of UINT32_MAX should be treated as a special case. The

* implementation should return a reserved PID, not allocated to any port,

* that the client may use for special purposes.

*

* The return value only needs to be meaningful when DPIF_UC_ACTION has

* been enabled in the 'dpif''s listen mask, and it is allowed to change

* when DPIF_UC_ACTION is disabled and then re-enabled.

*

* A dpif provider that doesn't have meaningful Netlink PIDs can use NULL

* for this function. This is equivalent to always returning 0. */

uint32_t (*port_get_pid)(const struct dpif *dpif, uint32_t port_no);

/* Attempts to begin dumping the ports in a dpif. On success, returns 0

* and initializes '*statep' with any data needed for iteration. On

* failure, returns a positive errno value. */

int (*port_dump_start)(const struct dpif *dpif, void **statep);

/* Attempts to retrieve another port from 'dpif' for 'state', which was

* initialized by a successful call to the 'port_dump_start' function for

* 'dpif'. On success, stores a new dpif_port into 'port' and returns 0.

* Returns EOF if the end of the port table has been reached, or a positive

* errno value on error. This function will not be called again once it

* returns nonzero once for a given iteration (but the 'port_dump_done'

* function will be called afterward).

*

* The dpif provider retains ownership of the data stored in 'port'. It

* must remain valid until at least the next call to 'port_dump_next' or

* 'port_dump_done' for 'state'. */

int (*port_dump_next)(const struct dpif *dpif, void *state,

struct dpif_port *port);

/* Releases resources from 'dpif' for 'state', which was initialized by a

* successful call to the 'port_dump_start' function for 'dpif'. */

int (*port_dump_done)(const struct dpif *dpif, void *state);

/* Polls for changes in the set of ports in 'dpif'. If the set of ports in

* 'dpif' has changed, then this function should do one of the

* following:

*

* - Preferably: store the name of the device that was added to or deleted

* from 'dpif' in '*devnamep' and return 0. The caller is responsible

* for freeing '*devnamep' (with free()) when it no longer needs it.

*

* - Alternatively: return ENOBUFS, without indicating the device that was

* added or deleted.

*

* Occasional 'false positives', in which the function returns 0 while

* indicating a device that was not actually added or deleted or returns

* ENOBUFS without any change, are acceptable.

*

* If the set of ports in 'dpif' has not changed, returns EAGAIN. May also

* return other positive errno values to indicate that something has gone

* wrong. */

int (*port_poll)(const struct dpif *dpif, char **devnamep);

/* Arranges for the poll loop to wake up when 'port_poll' will return a

* value other than EAGAIN. */

void (*port_poll_wait)(const struct dpif *dpif);

/* Queries 'dpif' for a flow entry. The flow is specified by the Netlink

* attributes with types OVS_KEY_ATTR_* in the 'key_len' bytes starting at

* 'key'.

*

* Returns 0 if successful. If no flow matches, returns ENOENT. On other

* failure, returns a positive errno value.

*

* If 'actionsp' is nonnull, then on success '*actionsp' must be set to an

* ofpbuf owned by the caller that contains the Netlink attributes for the

* flow's actions. The caller must free the ofpbuf (with ofpbuf_delete())

* when it is no longer needed.

*

* If 'stats' is nonnull, then on success it must be updated with the

* flow's statistics. */

int (*flow_get)(const struct dpif *dpif,

const struct nlattr *key, size_t key_len,

struct ofpbuf **actionsp, struct dpif_flow_stats *stats);

/* Adds or modifies a flow in 'dpif'. The flow is specified by the Netlink

* attributes with types OVS_KEY_ATTR_* in the 'put->key_len' bytes

* starting at 'put->key'. The associated actions are specified by the

* Netlink attributes with types OVS_ACTION_ATTR_* in the

* 'put->actions_len' bytes starting at 'put->actions'.

*

* - If the flow's key does not exist in 'dpif', then the flow will be

* added if 'put->flags' includes DPIF_FP_CREATE. Otherwise the

* operation will fail with ENOENT.

*

* If the operation succeeds, then 'put->stats', if nonnull, must be

* zeroed.

*

* - If the flow's key does exist in 'dpif', then the flow's actions will

* be updated if 'put->flags' includes DPIF_FP_MODIFY. Otherwise the

* operation will fail with EEXIST. If the flow's actions are updated,

* then its statistics will be zeroed if 'put->flags' includes

* DPIF_FP_ZERO_STATS, and left as-is otherwise.

*

* If the operation succeeds, then 'put->stats', if nonnull, must be set

* to the flow's statistics before the update.

*/

int (*flow_put)(struct dpif *dpif, const struct dpif_flow_put *put);

/* Deletes a flow from 'dpif' and returns 0, or returns ENOENT if 'dpif'

* does not contain such a flow. The flow is specified by the Netlink

* attributes with types OVS_KEY_ATTR_* in the 'del->key_len' bytes

* starting at 'del->key'.

*

* If the operation succeeds, then 'del->stats', if nonnull, must be set to

* the flow's statistics before its deletion. */

int (*flow_del)(struct dpif *dpif, const struct dpif_flow_del *del);

/* Deletes all flows from 'dpif' and clears all of its queues of received

* packets. */

int (*flow_flush)(struct dpif *dpif);

/* Attempts to begin dumping the flows in a dpif. On success, returns 0

* and initializes '*statep' with any data needed for iteration. On

* failure, returns a positive errno value. */

int (*flow_dump_start)(const struct dpif *dpif, void **statep);

/* Attempts to retrieve another flow from 'dpif' for 'state', which was

* initialized by a successful call to the 'flow_dump_start' function for

* 'dpif'. On success, updates the output parameters as described below

* and returns 0. Returns EOF if the end of the flow table has been

* reached, or a positive errno value on error. This function will not be

* called again once it returns nonzero within a given iteration (but the

* 'flow_dump_done' function will be called afterward).

*

* On success, if 'key' and 'key_len' are nonnull then '*key' and

* '*key_len' must be set to Netlink attributes with types OVS_KEY_ATTR_*

* representing the dumped flow's key. If 'actions' and 'actions_len' are

* nonnull then they should be set to Netlink attributes with types

* OVS_ACTION_ATTR_* representing the dumped flow's actions. If 'stats'

* is nonnull then it should be set to the dumped flow's statistics.

*

* All of the returned data is owned by 'dpif', not by the caller, and the

* caller must not modify or free it. 'dpif' must guarantee that it

* remains accessible and unchanging until at least the next call to

* 'flow_dump_next' or 'flow_dump_done' for 'state'. */

int (*flow_dump_next)(const struct dpif *dpif, void *state,

const struct nlattr **key, size_t *key_len,

const struct nlattr **actions, size_t *actions_len,

const struct dpif_flow_stats **stats);

/* Releases resources from 'dpif' for 'state', which was initialized by a

* successful call to the 'flow_dump_start' function for 'dpif'. */

int (*flow_dump_done)(const struct dpif *dpif, void *state);

/* Performs the 'execute->actions_len' bytes of actions in

* 'execute->actions' on the Ethernet frame specified in 'execute->packet'

* taken from the flow specified in the 'execute->key_len' bytes of

* 'execute->key'. ('execute->key' is mostly redundant with

* 'execute->packet', but it contains some metadata that cannot be

* recovered from 'execute->packet', such as tunnel and in_port.) */

int (*execute)(struct dpif *dpif, const struct dpif_execute *execute);

/* Executes each of the 'n_ops' operations in 'ops' on 'dpif', in the order

* in which they are specified, placing each operation's results in the

* "output" members documented in comments.

*

* This function is optional. It is only worthwhile to implement it if

* 'dpif' can perform operations in batch faster than individually. */

void (*operate)(struct dpif *dpif, struct dpif_op **ops, size_t n_ops);

/* Enables or disables receiving packets with dpif_recv() for 'dpif'.

* Turning packet receive off and then back on is allowed to change Netlink

* PID assignments (see ->port_get_pid()). The client is responsible for

* updating flows as necessary if it does this. */

int (*recv_set)(struct dpif *dpif, bool enable);

/* Translates OpenFlow queue ID 'queue_id' (in host byte order) into a

* priority value used for setting packet priority. */

int (*queue_to_priority)(const struct dpif *dpif, uint32_t queue_id,

uint32_t *priority);

/* Polls for an upcall from 'dpif'. If successful, stores the upcall into

* '*upcall', using 'buf' for storage. Should only be called if 'recv_set'

* has been used to enable receiving packets from 'dpif'.

*

* The implementation should point 'upcall->packet' and 'upcall->key' into

* data in the caller-provided 'buf'. If necessary to make room, the

* implementation may expand the data in 'buf'. (This is hardly a great

* way to do things but it works out OK for the dpif providers that exist

* so far.)

*

* This function must not block. If no upcall is pending when it is

* called, it should return EAGAIN without blocking. */

int (*recv)(struct dpif *dpif, struct dpif_upcall *upcall,

struct ofpbuf *buf);

/* Arranges for the poll loop to wake up when 'dpif' has a message queued

* to be received with the recv member function. */

void (*recv_wait)(struct dpif *dpif);

/* Throws away any queued upcalls that 'dpif' currently has ready to

* return. */

void (*recv_purge)(struct dpif *dpif);

};
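The `flow_put` comment in the struct above boils down to a small decision table over "does the key exist" and the `put->flags` bits. The sketch below only models that table. All `toy_*` / `TOY_FP_*` names and the flag values are hypothetical stand-ins, not OVS definitions.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>

/* Hypothetical stand-ins for the DPIF_FP_* flags named in the comment;
 * the numeric values here are illustrative only. */
enum toy_flow_put_flags {
    TOY_FP_CREATE = 1 << 0,
    TOY_FP_MODIFY = 1 << 1,
    TOY_FP_ZERO_STATS = 1 << 2
};

struct toy_flow_put_result {
    int error;         /* 0, ENOENT or EEXIST. */
    bool created;      /* A new flow was added. */
    bool stats_reset;  /* An existing flow's statistics were zeroed. */
};

/* Models the outcome of a put for a key that does or does not exist. */
struct toy_flow_put_result
toy_flow_put(bool key_exists, unsigned int flags)
{
    struct toy_flow_put_result r = { 0, false, false };

    if (!key_exists) {
        if (flags & TOY_FP_CREATE) {
            r.created = true;
        } else {
            r.error = ENOENT;
        }
    } else if (flags & TOY_FP_MODIFY) {
        r.stats_reset = (flags & TOY_FP_ZERO_STATS) != 0;
    } else {
        r.error = EEXIST;
    }

    /* A failed put must not report any side effect. */
    assert(!r.error || (!r.created && !r.stats_reset));
    return r;
}
```

For example, `toy_flow_put(true, TOY_FP_CREATE)` yields `EEXIST`, because the key exists but the caller did not allow modification.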

dpif_class registration

static void

dp_initialize(void)

{

static struct ovsthread_once once = OVSTHREAD_ONCE_INITIALIZER;

if (ovsthread_once_start(&once)) {

int i;

tnl_conf_seq = seq_create();

dpctl_unixctl_register();

tnl_port_map_init();

tnl_neigh_cache_init();

route_table_init();

for (i = 0; i < ARRAY_SIZE(base_dpif_classes); i++) {

dp_register_provider(base_dpif_classes[i]);

}

ovsthread_once_done(&once);

}

}

static const struct dpif_class *base_dpif_classes[] = {

#if defined(__linux__) || defined(_WIN32)

&dpif_netlink_class,

#endif

&dpif_netdev_class,

};
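The registration pattern above (a static array of class pointers, walked once, each entry handed to a register function) can be sketched in isolation. This is a simplified, single-threaded model: the `toy_*` names are hypothetical, a plain `static bool` replaces `ovsthread_once`, and a fixed array replaces whatever container the real registry uses.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

struct toy_dpif_class {
    const char *type;
};

const struct toy_dpif_class toy_dpif_system_class = { "system" };
const struct toy_dpif_class toy_dpif_netdev_class = { "netdev" };

/* Built-in classes, in the spirit of base_dpif_classes[]. */
static const struct toy_dpif_class *toy_base_classes[] = {
    &toy_dpif_system_class,
    &toy_dpif_netdev_class,
};

#define TOY_MAX_CLASSES 8
static const struct toy_dpif_class *toy_registry[TOY_MAX_CLASSES];
static size_t toy_n_classes;

/* Returns the registered class named 'type', or NULL. */
const struct toy_dpif_class *
toy_dpif_lookup(const char *type)
{
    for (size_t i = 0; i < toy_n_classes; i++) {
        if (!strcmp(toy_registry[i]->type, type)) {
            return toy_registry[i];
        }
    }
    return NULL;
}

/* Registers 'cls'; refuses a second class with the same type name. */
int
toy_dpif_register(const struct toy_dpif_class *cls)
{
    if (toy_dpif_lookup(cls->type)) {
        return EEXIST;
    }
    if (toy_n_classes == TOY_MAX_CLASSES) {
        return ENOSPC;
    }
    toy_registry[toy_n_classes++] = cls;
    return 0;
}

/* Registers the built-in classes exactly once, however often it is called. */
void
toy_dpif_initialize(void)
{
    static bool done;   /* Single-threaded stand-in for ovsthread_once. */

    if (!done) {
        size_t n = sizeof toy_base_classes / sizeof toy_base_classes[0];
        for (size_t i = 0; i < n; i++) {
            int error = toy_dpif_register(toy_base_classes[i]);
            assert(!error);
            (void) error;
        }
        done = true;
    }
}

size_t
toy_dpif_n_classes(void)
{
    return toy_n_classes;
}
```

After `toy_dpif_initialize()`, `toy_dpif_lookup("netdev")` returns the netdev class. This mirrors how a bridge with `datapath_type: netdev` ends up dispatching to `dpif_netdev_class`.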

dpif_netdev_class

const struct dpif_class dpif_netdev_class = {

"netdev",

true, /* cleanup_required */

dpif_netdev_init,

dpif_netdev_enumerate,

dpif_netdev_port_open_type,

dpif_netdev_open,

dpif_netdev_close,

dpif_netdev_destroy,

dpif_netdev_run,

dpif_netdev_wait,

dpif_netdev_get_stats,

NULL, /* set_features */

dpif_netdev_port_add,

dpif_netdev_port_del,

dpif_netdev_port_set_config,

dpif_netdev_port_query_by_number,

dpif_netdev_port_query_by_name,

NULL, /* port_get_pid */

dpif_netdev_port_dump_start,

dpif_netdev_port_dump_next,

dpif_netdev_port_dump_done,

dpif_netdev_port_poll,

dpif_netdev_port_poll_wait,

dpif_netdev_flow_flush,

dpif_netdev_flow_dump_create,

dpif_netdev_flow_dump_destroy,

dpif_netdev_flow_dump_thread_create,

dpif_netdev_flow_dump_thread_destroy,

dpif_netdev_flow_dump_next,

dpif_netdev_operate,

NULL, /* recv_set */

NULL, /* handlers_set */

dpif_netdev_set_config,

dpif_netdev_queue_to_priority,

NULL, /* recv */

NULL, /* recv_wait */

NULL, /* recv_purge */

dpif_netdev_register_dp_purge_cb,

dpif_netdev_register_upcall_cb,

dpif_netdev_enable_upcall,

dpif_netdev_disable_upcall,

dpif_netdev_get_datapath_version,

dpif_netdev_ct_dump_start,

dpif_netdev_ct_dump_next,

dpif_netdev_ct_dump_done,

dpif_netdev_ct_flush,

dpif_netdev_ct_set_maxconns,

dpif_netdev_ct_get_maxconns,

dpif_netdev_ct_get_nconns,

dpif_netdev_ct_set_tcp_seq_chk,

dpif_netdev_ct_get_tcp_seq_chk,

dpif_netdev_ct_set_limits,

dpif_netdev_ct_get_limits,

dpif_netdev_ct_del_limits,

NULL, /* ct_set_timeout_policy */

NULL, /* ct_get_timeout_policy */

/* ... */

};

dpif_netdev_run

(gdb) b dpif_netdev_run

Breakpoint 1 at 0x978ef4: file lib/dpif-netdev.c, line 5438.

(gdb) c

Continuing.

Breakpoint 1, dpif_netdev_run (dpif=0x204f78a0) at lib/dpif-netdev.c:5438

5438 {

(gdb) bt

#0 dpif_netdev_run (dpif=0x204f78a0) at lib/dpif-netdev.c:5438

#1 0x000000000097d290 in dpif_run (dpif=<optimized out>) at lib/dpif.c:463

#2 0x0000000000934c68 in type_run (type=type@entry=0x202db460 "netdev") at ofproto/ofproto-dpif.c:370

#3 0x000000000091eb18 in ofproto_type_run (datapath_type=<optimized out>, datapath_type@entry=0x202db460 "netdev") at ofproto/ofproto.c:1772

#4 0x000000000090d94c in bridge_run__ () at vswitchd/bridge.c:3242

#5 0x0000000000913480 in bridge_run () at vswitchd/bridge.c:3307

#6 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb)

#0 0x000000000053489a in mlx5_tx_mb2mr (mb=0x7fa5d8711180, txq=0x7fa5d812cdc0) at /usr/src/debug/openvswitch-2.9.0-3.el7.tis.1.x86_64/dpdk-17.11/drivers/net/mlx5/mlx5_rxtx.h:551

551 if (likely(txq->mp2mr[i]->start <= addr && txq->mp2mr[i]->end >= addr))

(gdb) bt

#0 0x000000000053489a in mlx5_tx_mb2mr (mb=0x7fa5d8711180, txq=0x7fa5d812cdc0) at /usr/src/debug/openvswitch-2.9.0-3.el7.tis.1.x86_64/dpdk-17.11/drivers/net/mlx5/mlx5_rxtx.h:551

#1 mlx5_tx_burst_mpw (dpdk_txq=<optimized out>, pkts=0x7ffffae34aa8, pkts_n=<optimized out>) at /usr/src/debug/openvswitch-2.9.0-3.el7.tis.1.x86_64/dpdk-17.11/drivers/net/mlx5/mlx5_rxtx.c:906

#2 0x0000000000410dc5 in rte_eth_tx_burst (nb_pkts=<optimized out>, tx_pkts=0x7ffffae34aa0, queue_id=0, port_id=<optimized out>) at /usr/src/debug/openvswitch-2.9.0-3.el7.tis.1.x86_64/dpdk-17.11/x86_64-native-linuxapp-gcc/include/rte_ethdev.h:3172

#3 netdev_dpdk_eth_tx_burst (cnt=1, pkts=0x7ffffae34aa0, qid=0, dev=0xffffffffffffef40) at lib/netdev-dpdk.c:1690

#4 dpdk_do_tx_copy (netdev=netdev@entry=0x7fa5fffb4700, qid=qid@entry=0, batch=batch@entry=0x160daf0) at lib/netdev-dpdk.c:2089

#5 0x00000000006ae4ab in netdev_dpdk_send__ (concurrent_txq=<optimized out>, batch=0x160daf0, qid=0, dev=<optimized out>) at lib/netdev-dpdk.c:2133

#6 netdev_dpdk_eth_send (netdev=0x7fa5fffb4700, qid=<optimized out>, batch=0x160daf0, concurrent_txq=false) at lib/netdev-dpdk.c:2164

#7 0x00000000005fb521 in netdev_send (netdev=<optimized out>, qid=<optimized out>, batch=batch@entry=0x160daf0, concurrent_txq=concurrent_txq@entry=false) at lib/netdev.c:791

#8 0x00000000005cdf15 in dp_netdev_pmd_flush_output_on_port (pmd=pmd@entry=0x7fa629a74010, p=p@entry=0x160dac0) at lib/dpif-netdev.c:3216

#9 0x00000000005ce247 in dp_netdev_pmd_flush_output_packets (pmd=pmd@entry=0x7fa629a74010, force=force@entry=false) at lib/dpif-netdev.c:3256

#10 0x00000000005d4a68 in dp_netdev_pmd_flush_output_packets (force=false, pmd=0x7fa629a74010) at lib/dpif-netdev.c:3249

#11 dp_netdev_process_rxq_port (pmd=pmd@entry=0x7fa629a74010, rxq=0x1682710, port_no=2) at lib/dpif-netdev.c:3292

#12 0x00000000005d549a in dpif_netdev_run (dpif=<optimized out>) at lib/dpif-netdev.c:3940

#13 0x0000000000592a5a in type_run (type=<optimized out>) at ofproto/ofproto-dpif.c:344

#14 0x000000000057d651 in ofproto_type_run (datapath_type=datapath_type@entry=0x167f5b0 "netdev") at ofproto/ofproto.c:1705

#15 0x000000000056cce5 in bridge_run__ () at vswitchd/bridge.c:2933

#16 0x0000000000572d08 in bridge_run () at vswitchd/bridge.c:2997

#17 0x000000000041246d in main (argc=10, argv=0x7ffffae35328) at vswitchd/ovs-vswitchd.c:119

(gdb)

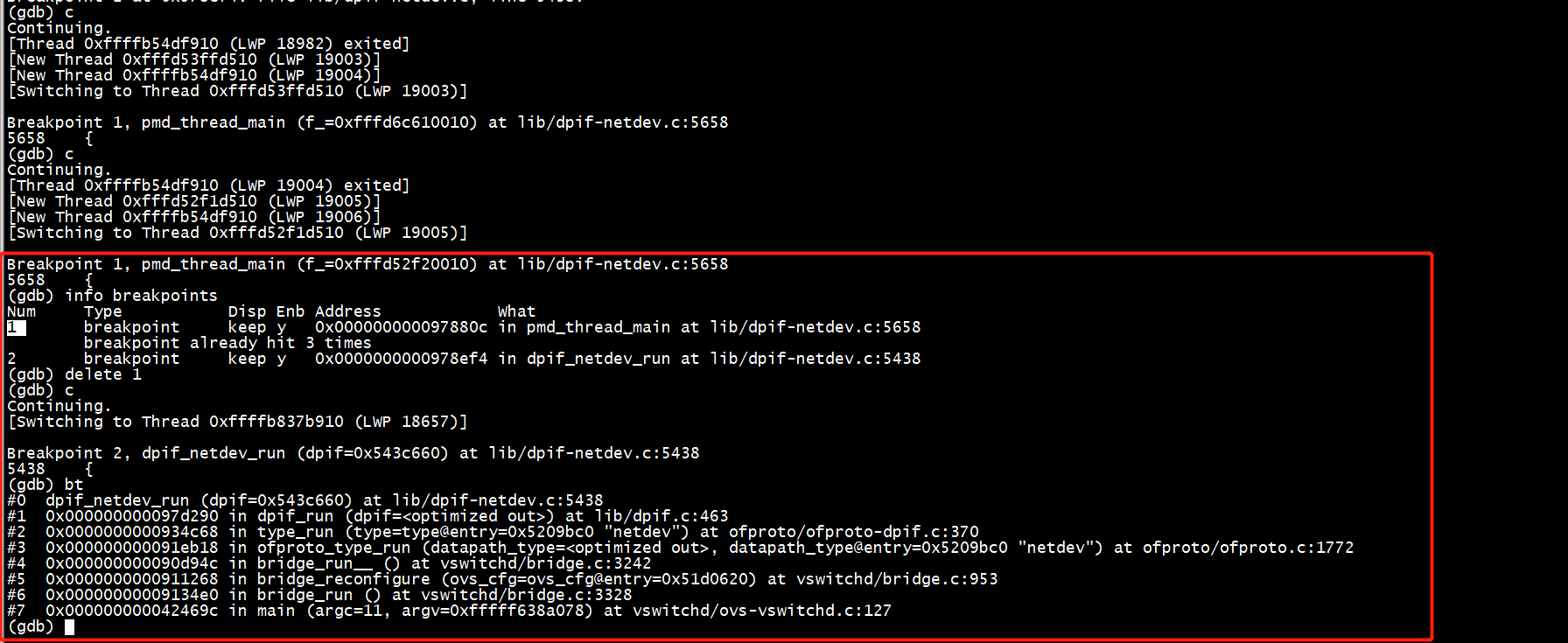

dpif_netdev_port_add

Breakpoint 2, dpif_netdev_port_add (dpif=0x204f78a0, netdev=0x19fd81a40, port_nop=0xffffe22502a4) at lib/dpif-netdev.c:1913

1913 {

(gdb) bt

#0 dpif_netdev_port_add (dpif=0x204f78a0, netdev=0x19fd81a40, port_nop=0xffffe22502a4) at lib/dpif-netdev.c:1913

#1 0x000000000097d508 in dpif_port_add (dpif=0x204f78a0, netdev=netdev@entry=0x19fd81a40, port_nop=port_nop@entry=0xffffe225030c) at lib/dpif.c:593

#2 0x000000000092a714 in port_add (ofproto_=0x202de690, netdev=0x19fd81a40) at ofproto/ofproto-dpif.c:3864

#3 0x0000000000920b40 in ofproto_port_add (ofproto=0x202de690, netdev=0x19fd81a40, ofp_portp=ofp_portp@entry=0xffffe2250400) at ofproto/ofproto.c:2070

#4 0x000000000090e530 in iface_do_create (errp=0xffffe2250410, netdevp=0xffffe2250408, ofp_portp=0xffffe2250400, iface_cfg=0x20531860, br=0x202dd9c0) at vswitchd/bridge.c:2060

#5 iface_create (port_cfg=0x2054ec30, iface_cfg=0x20531860, br=0x202dd9c0) at vswitchd/bridge.c:2103

#6 bridge_add_ports__ (br=br@entry=0x202dd9c0, wanted_ports=wanted_ports@entry=0x202ddaa0, with_requested_port=with_requested_port@entry=false) at vswitchd/bridge.c:1167

#7 0x0000000000910278 in bridge_add_ports (wanted_ports=<optimized out>, br=0x202dd9c0) at vswitchd/bridge.c:1183

#8 bridge_reconfigure (ovs_cfg=ovs_cfg@entry=0x202e3c00) at vswitchd/bridge.c:896

#9 0x00000000009134e0 in bridge_run () at vswitchd/bridge.c:3328

#10 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb)

dpdk_vhost_class && port

static const struct netdev_class dpdk_vhost_class = {

.type = "dpdkvhostuser",

NETDEV_DPDK_CLASS_COMMON,

.construct = netdev_dpdk_vhost_construct,

.destruct = netdev_dpdk_vhost_destruct,

.send = netdev_dpdk_vhost_send, /* TX path: enqueue packets into the guest's virtqueue */

.get_carrier = netdev_dpdk_vhost_get_carrier,

.get_stats = netdev_dpdk_vhost_get_stats,

.get_custom_stats = netdev_dpdk_get_sw_custom_stats,

.get_status = netdev_dpdk_vhost_user_get_status,

.reconfigure = netdev_dpdk_vhost_reconfigure,

.rxq_recv = netdev_dpdk_vhost_rxq_recv, /* RX path: dequeue packets from the guest's virtqueue */

.rxq_enabled = netdev_dpdk_vhost_rxq_enabled,

};
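The two annotated hooks in `dpdk_vhost_class` are the data path: `.send` enqueues toward the guest and `.rxq_recv` dequeues from it. A minimal sketch of that dispatch follows, with integers standing in for packets and small rings standing in for virtqueues. Every `toy_*` name is hypothetical and nothing here is the DPDK vhost API.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

#define TOY_VQ_SIZE 4

struct toy_vq {
    int slots[TOY_VQ_SIZE];
    size_t head;    /* Next slot to dequeue. */
    size_t count;   /* Number of queued entries. */
};

struct toy_vdev;

/* Per-type function table, in the spirit of struct netdev_class. */
struct toy_vdev_class {
    const char *type;
    int (*send)(struct toy_vdev *, int pkt);
    int (*rxq_recv)(struct toy_vdev *, int *pkt);
};

struct toy_vdev {
    const struct toy_vdev_class *cls;
    struct toy_vq to_guest;     /* Filled by send. */
    struct toy_vq from_guest;   /* Drained by rxq_recv. */
};

int
toy_vq_put(struct toy_vq *q, int pkt)
{
    if (q->count == TOY_VQ_SIZE) {
        return ENOSPC;          /* Ring full. */
    }
    q->slots[(q->head + q->count) % TOY_VQ_SIZE] = pkt;
    q->count++;
    return 0;
}

int
toy_vq_get(struct toy_vq *q, int *pkt)
{
    if (!q->count) {
        return EAGAIN;          /* Nothing to receive; do not block. */
    }
    *pkt = q->slots[q->head];
    q->head = (q->head + 1) % TOY_VQ_SIZE;
    q->count--;
    return 0;
}

static int
toy_vhost_send(struct toy_vdev *dev, int pkt)
{
    return toy_vq_put(&dev->to_guest, pkt);
}

static int
toy_vhost_rxq_recv(struct toy_vdev *dev, int *pkt)
{
    return toy_vq_get(&dev->from_guest, pkt);
}

const struct toy_vdev_class toy_vdev_vhost_class = {
    .type = "dpdkvhostuser",
    .send = toy_vhost_send,
    .rxq_recv = toy_vhost_rxq_recv,
};

/* Generic layer: callers never name the concrete type. */
int
toy_vdev_send(struct toy_vdev *dev, int pkt)
{
    return dev->cls->send ? dev->cls->send(dev, pkt) : EOPNOTSUPP;
}

int
toy_vdev_rxq_recv(struct toy_vdev *dev, int *pkt)
{
    return dev->cls->rxq_recv ? dev->cls->rxq_recv(dev, pkt) : EOPNOTSUPP;
}
```

The generic wrappers only look at the function table, so a different port type would plug in by supplying its own `toy_vdev_class` instance.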

static int

dpif_netdev_port_add(struct dpif *dpif, struct netdev *netdev,

odp_port_t *port_nop)

{

struct dp_netdev *dp = get_dp_netdev(dpif);

char namebuf[NETDEV_VPORT_NAME_BUFSIZE];

const char *dpif_port;

odp_port_t port_no;

int error;

ovs_mutex_lock(&dp->port_mutex);

dpif_port = netdev_vport_get_dpif_port(netdev, namebuf, sizeof namebuf);

if (*port_nop != ODPP_NONE) {

port_no = *port_nop;

error = dp_netdev_lookup_port(dp, *port_nop) ? EBUSY : 0;

} else {

port_no = choose_port(dp, dpif_port);

error = port_no == ODPP_NONE ? EFBIG : 0;

}

if (!error) {

*port_nop = port_no;

error = do_add_port(dp, dpif_port, netdev_get_type(netdev), port_no);

}

ovs_mutex_unlock(&dp->port_mutex);

return error;

}
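The port-number logic in `dpif_netdev_port_add` has two branches: honour a requested number (failing with `EBUSY` if it is taken) or pick a free one (failing with `EFBIG` if none is left). The sketch below models that with a tiny table. The `toy_*` names, the table size, the scan starting at 0, and the extra bounds check are all assumptions for illustration only.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_MAX_PORTS 4
#define TOY_PORT_NONE UINT32_MAX   /* Plays the role of ODPP_NONE. */

struct toy_port_table {
    bool in_use[TOY_MAX_PORTS];
};

/* Returns the lowest free port number, or TOY_PORT_NONE if all are taken. */
uint32_t
toy_port_choose(const struct toy_port_table *t)
{
    for (uint32_t i = 0; i < TOY_MAX_PORTS; i++) {
        if (!t->in_use[i]) {
            return i;
        }
    }
    return TOY_PORT_NONE;
}

/* On entry '*port_nop' is a requested number or TOY_PORT_NONE.
 * On success it is set to the number actually assigned. */
int
toy_port_add(struct toy_port_table *t, uint32_t *port_nop)
{
    uint32_t port_no;
    int error;

    if (*port_nop != TOY_PORT_NONE) {
        port_no = *port_nop;
        if (port_no >= TOY_MAX_PORTS) {
            error = EINVAL;     /* Bounds check needed only by this toy. */
        } else {
            error = t->in_use[port_no] ? EBUSY : 0;
        }
    } else {
        port_no = toy_port_choose(t);
        error = port_no == TOY_PORT_NONE ? EFBIG : 0;
    }

    if (!error) {
        *port_nop = port_no;
        t->in_use[port_no] = true;   /* Stands in for do_add_port(). */
    }
    return error;
}
```

In the gdb session for `vhost-user2`, the caller passes no requested number, so the second branch runs and `choose_port` is stepped through before `do_add_port`.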

(gdb) b dpif_netdev_run

Breakpoint 1 at 0x978ef4: file lib/dpif-netdev.c, line 5438.

(gdb) c

Continuing.

Breakpoint 1, dpif_netdev_run (dpif=0x204f78a0) at lib/dpif-netdev.c:5438

5438 {

(gdb) bt

#0 dpif_netdev_run (dpif=0x204f78a0) at lib/dpif-netdev.c:5438

#1 0x000000000097d290 in dpif_run (dpif=<optimized out>) at lib/dpif.c:463

#2 0x0000000000934c68 in type_run (type=type@entry=0x202db460 "netdev") at ofproto/ofproto-dpif.c:370

#3 0x000000000091eb18 in ofproto_type_run (datapath_type=<optimized out>, datapath_type@entry=0x202db460 "netdev") at ofproto/ofproto.c:1772

#4 0x000000000090d94c in bridge_run__ () at vswitchd/bridge.c:3242

#5 0x0000000000913480 in bridge_run () at vswitchd/bridge.c:3307

#6 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb) delete 1

(gdb) b dpif_netdev_port_add

Breakpoint 2 at 0x9747c8: file lib/dpif-netdev.c, line 1913.

(gdb) c

Continuing.

[New Thread 0xffff7c29f910 (LWP 17675)]

[Thread 0xffff7c29f910 (LWP 17675) exited]

[New Thread 0xffff7c29f910 (LWP 17677)]

Breakpoint 2, dpif_netdev_port_add (dpif=0x204f78a0, netdev=0x19fd81a40, port_nop=0xffffe22502a4) at lib/dpif-netdev.c:1913

1913 {

(gdb) bt

#0 dpif_netdev_port_add (dpif=0x204f78a0, netdev=0x19fd81a40, port_nop=0xffffe22502a4) at lib/dpif-netdev.c:1913

#1 0x000000000097d508 in dpif_port_add (dpif=0x204f78a0, netdev=netdev@entry=0x19fd81a40, port_nop=port_nop@entry=0xffffe225030c) at lib/dpif.c:593

#2 0x000000000092a714 in port_add (ofproto_=0x202de690, netdev=0x19fd81a40) at ofproto/ofproto-dpif.c:3864

#3 0x0000000000920b40 in ofproto_port_add (ofproto=0x202de690, netdev=0x19fd81a40, ofp_portp=ofp_portp@entry=0xffffe2250400) at ofproto/ofproto.c:2070

#4 0x000000000090e530 in iface_do_create (errp=0xffffe2250410, netdevp=0xffffe2250408, ofp_portp=0xffffe2250400, iface_cfg=0x20531860, br=0x202dd9c0) at vswitchd/bridge.c:2060

#5 iface_create (port_cfg=0x2054ec30, iface_cfg=0x20531860, br=0x202dd9c0) at vswitchd/bridge.c:2103

#6 bridge_add_ports__ (br=br@entry=0x202dd9c0, wanted_ports=wanted_ports@entry=0x202ddaa0, with_requested_port=with_requested_port@entry=false) at vswitchd/bridge.c:1167

#7 0x0000000000910278 in bridge_add_ports (wanted_ports=<optimized out>, br=0x202dd9c0) at vswitchd/bridge.c:1183

#8 bridge_reconfigure (ovs_cfg=ovs_cfg@entry=0x202e3c00) at vswitchd/bridge.c:896

#9 0x00000000009134e0 in bridge_run () at vswitchd/bridge.c:3328

#10 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb) n

1914 struct dp_netdev *dp = get_dp_netdev(dpif);

(gdb) list

1909

1910 static int

1911 dpif_netdev_port_add(struct dpif *dpif, struct netdev *netdev,

1912 odp_port_t *port_nop)

1913 {

1914 struct dp_netdev *dp = get_dp_netdev(dpif);

1915 char namebuf[NETDEV_VPORT_NAME_BUFSIZE];

1916 const char *dpif_port;

1917 odp_port_t port_no;

1918 int error;

(gdb) set print pretty on

(gdb) p *dp

value has been optimized out

(gdb) n

1913 {

(gdb) n

1914 struct dp_netdev *dp = get_dp_netdev(dpif);

(gdb) n

1913 {

(gdb) p *dp

value has been optimized out

(gdb) p *dpif

$1 = {

dpif_class = 0xbd2650 <dpif_netdev_class>,

base_name = 0x204f73e0 "ovs-netdev",

full_name = 0x204f78e0 "netdev@ovs-netdev",

netflow_engine_type = 47 '/',

netflow_engine_id = 209 '\321',

current_ms = 465573501

}

(gdb) n

1914 struct dp_netdev *dp = get_dp_netdev(dpif);

(gdb) n

1920 ovs_mutex_lock(&dp->port_mutex);

(gdb) p *dp

value has been optimized out

(gdb) n

[Thread 0xffff7c29f910 (LWP 17677) exited]

1921 dpif_port = netdev_vport_get_dpif_port(netdev, namebuf, sizeof namebuf);

(gdb) p *netdev

$2 = {

name = 0x20500a30 "vhost-user2",

netdev_class = 0xc090e0 <dpdk_vhost_class>,

auto_classified = false,

ol_flags = 0,

mtu_user_config = false,

ref_cnt = 1,

change_seq = 2,

reconfigure_seq = 0x2029d280,

last_reconfigure_seq = 16781297,

n_txq = 0,

n_rxq = 0,

node = 0x20533f50,

saved_flags_list = {

prev = 0x19fd81a90,

next = 0x19fd81a90

},

flow_api = {

p = 0x0

},

hw_info = {

oor = false,

offload_count = 0,

pending_count = 0

}

}

(gdb) n

1922 if (*port_nop != ODPP_NONE) {

(gdb) n

1921 dpif_port = netdev_vport_get_dpif_port(netdev, namebuf, sizeof namebuf);

(gdb) n

1922 if (*port_nop != ODPP_NONE) {

(gdb) n

1926 port_no = choose_port(dp, dpif_port);

(gdb) n

1930 *port_nop = port_no;

(gdb) n

1931 error = do_add_port(dp, dpif_port, netdev_get_type(netdev), port_no);

(gdb) p *netdev

$3 = {

name = 0x20500a30 "vhost-user2",

netdev_class = 0xc090e0 <dpdk_vhost_class>,

auto_classified = false,

ol_flags = 0,

mtu_user_config = false,

ref_cnt = 1,

change_seq = 2,

reconfigure_seq = 0x2029d280,

last_reconfigure_seq = 16781297,

n_txq = 0,

n_rxq = 0,

node = 0x20533f50,

saved_flags_list = {

prev = 0x19fd81a90,

next = 0x19fd81a90

},

flow_api = {

p = 0x0

},

hw_info = {

oor = false,

offload_count = 0,

pending_count = 0

}

}

(gdb) n

[New Thread 0xffff7c29f910 (LWP 17711)]

1933 ovs_mutex_unlock(&dp->port_mutex);

(gdb) n

1936 }

(gdb) n

dpif_port_add (dpif=0x204f78a0, netdev=netdev@entry=0x19fd81a40, port_nop=port_nop@entry=0xffffe225030c) at lib/dpif.c:594

594 if (!error) {

(gdb) n

595 VLOG_DBG_RL(&dpmsg_rl, "%s: added %s as port %"PRIu32,

(gdb) n

598 if (!dpif_is_tap_port(netdev_get_type(netdev))) {

(gdb) n

602 dpif_port.type = CONST_CAST(char *, netdev_get_type(netdev));

(gdb) n

604 dpif_port.port_no = port_no;

(gdb) n

605 netdev_ports_insert(netdev, dpif->dpif_class, &dpif_port);

(gdb) p *netdev

$4 = {

name = 0x20500a30 "vhost-user2",

netdev_class = 0xc090e0 <dpdk_vhost_class>,

auto_classified = false,

ol_flags = 0,

mtu_user_config = false,

ref_cnt = 3,

change_seq = 3,

reconfigure_seq = 0x2029d280,

last_reconfigure_seq = 16781303,

n_txq = 1,

n_rxq = 1,

node = 0x20533f50,

saved_flags_list = {

prev = 0x19fd81a90,

next = 0x19fd81a90

},

flow_api = {

p = 0x0

},

hw_info = {

oor = false,

offload_count = 0,

pending_count = 0

}

}

(gdb) p dpif_port

$5 = {

name = 0x20500a30 "vhost-user2",

type = 0xffff <Address 0xffff out of bounds>,

port_no = 539616016

}

(gdb) p *(dpif->dpif_class)

$6 = {

type = 0xbd4548 "netdev",

cleanup_required = true,

init = 0x96cd7c <dpif_netdev_init>,

enumerate = 0x96f3c4 <dpif_netdev_enumerate>,

port_open_type = 0x96c2b4 <dpif_netdev_port_open_type>,

open = 0x974c00 <dpif_netdev_open>,

close = 0x9744b0 <dpif_netdev_close>,

destroy = 0x96e734 <dpif_netdev_destroy>,

run = 0x978ef4 <dpif_netdev_run>,

wait = 0x971798 <dpif_netdev_wait>,

get_stats = 0x96e63c <dpif_netdev_get_stats>,

set_features = 0x0,

port_add = 0x9747c8 <dpif_netdev_port_add>,

port_del = 0x9744f4 <dpif_netdev_port_del>,

port_set_config = 0x971340 <dpif_netdev_port_set_config>,

port_query_by_number = 0x9716b4 <dpif_netdev_port_query_by_number>,

port_query_by_name = 0x970af4 <dpif_netdev_port_query_by_name>,

port_get_pid = 0x0,

port_dump_start = 0x96c1d0 <dpif_netdev_port_dump_start>,

port_dump_next = 0x96e580 <dpif_netdev_port_dump_next>,

port_dump_done = 0x96c4e8 <dpif_netdev_port_dump_done>,

port_poll = 0x96e7f0 <dpif_netdev_port_poll>,

port_poll_wait = 0x96e7ac <dpif_netdev_port_poll_wait>,

flow_flush = 0x96f7f0 <dpif_netdev_flow_flush>,

flow_dump_create = 0x96c204 <dpif_netdev_flow_dump_create>,

flow_dump_destroy = 0x96c9d0 <dpif_netdev_flow_dump_destroy>,

flow_dump_thread_create = 0x96c808 <dpif_netdev_flow_dump_thread_create>,

flow_dump_thread_destroy = 0x96c4e4 <dpif_netdev_flow_dump_thread_destroy>,

flow_dump_next = 0x96ebe4 <dpif_netdev_flow_dump_next>,

operate = 0x97711c <dpif_netdev_operate>,

recv_set = 0x0,

handlers_set = 0x0,

set_config = 0x96e1a0 <dpif_netdev_set_config>,

queue_to_priority = 0x96c130 <dpif_netdev_queue_to_priority>,

recv = 0x0,

recv_wait = 0x0,

recv_purge = 0x0,

register_dp_purge_cb = 0x96e164 <dpif_netdev_register_dp_purge_cb>,

register_upcall_cb = 0x96e128 <dpif_netdev_register_upcall_cb>,

enable_upcall = 0x96e0f0 <dpif_netdev_enable_upcall>,

disable_upcall = 0x96e0b8 <dpif_netdev_disable_upcall>,

get_datapath_version = 0x96c1f8 <dpif_netdev_get_datapath_version>,

ct_dump_start = 0x96e034 <dpif_netdev_ct_dump_start>,

ct_dump_next = 0x96cbd8 <dpif_netdev_ct_dump_next>,

ct_dump_done = 0x96cba0 <dpif_netdev_ct_dump_done>,

ct_flush = 0x96dfdc <dpif_netdev_ct_flush>,

ct_set_maxconns = 0x96dfa4 <dpif_netdev_ct_set_maxconns>,

ct_get_maxconns = 0x96df6c <dpif_netdev_ct_get_maxconns>,

ct_get_nconns = 0x96df34 <dpif_netdev_ct_get_nconns>,

ct_set_tcp_seq_chk = 0x96def8 <dpif_netdev_ct_set_tcp_seq_chk>,

ct_get_tcp_seq_chk = 0x96deac <dpif_netdev_ct_get_tcp_seq_chk>,

ct_set_limits = 0x96ddfc <dpif_netdev_ct_set_limits>,

ct_get_limits = 0x96dcf0 <dpif_netdev_ct_get_limits>,

ct_del_limits = 0x96dc60 <dpif_netdev_ct_del_limits>,

ct_set_timeout_policy = 0x0,

ct_get_timeout_policy = 0x0,

ct_del_timeout_policy = 0x0,

ct_timeout_policy_dump_start = 0x0,

ct_timeout_policy_dump_next = 0x0,

ct_timeout_policy_dump_done = 0x0,

ct_get_timeout_policy_name = 0x0,

ipf_set_enabled = 0x96dc0c <dpif_netdev_ipf_set_enabled>,

ipf_set_min_frag = 0x96dbb8 <dpif_netdev_ipf_set_min_frag>,

ipf_set_max_nfrags = 0x96db74 <dpif_netdev_ipf_set_max_nfrags>,

ipf_get_status = 0x96db28 <dpif_netdev_ipf_get_status>,

ipf_dump_start = 0x96cb98 <dpif_netdev_ipf_dump_start>,

ipf_dump_next = 0x96dadc <dpif_netdev_ipf_dump_next>,

ipf_dump_done = 0x96cb90 <dpif_netdev_ipf_dump_done>,

meter_get_features = 0x96c1a8 <dpif_netdev_meter_get_features>,

meter_set = 0x96d900 <dpif_netdev_meter_set>,

meter_get = 0x96d718 <dpif_netdev_meter_get>,

meter_del = 0x96d854 <dpif_netdev_meter_del>

}

(gdb)

(gdb) n

602 dpif_port.type = CONST_CAST(char *, netdev_get_type(netdev));

(gdb) n

605 netdev_ports_insert(netdev, dpif->dpif_class, &dpif_port);

(gdb) p dpif_port.type

$7 = 0xc0b588 "dpdkvhostuser"

(gdb) n

603 dpif_port.name = CONST_CAST(char *, netdev_name);

(gdb) n

604 dpif_port.port_no = port_no;

(gdb) p dpif_port.name

$8 = 0x20500a30 "vhost-user2"

(gdb) n

605 netdev_ports_insert(netdev, dpif->dpif_class, &dpif_port);

(gdb) p dpif_port.port_no

$9 = 3

(gdb) n

[Thread 0xffff7c29f910 (LWP 17711) exited]

612 if (port_nop) {

(gdb) n

613 *port_nop = port_no;

(gdb) n

616 }

(gdb) n

port_add (ofproto_=0x202de690, netdev=0x19fd81a40) at ofproto/ofproto-dpif.c:3865

3865 if (error) {

(gdb) n

3868 if (netdev_get_tunnel_config(netdev)) {

(gdb) n

[New Thread 0xffff7c29f910 (LWP 17739)]

3874 if (netdev_get_tunnel_config(netdev)) {

(gdb) n

3877 sset_add(&ofproto->ports, devname);

(gdb) n

3879 return 0;

(gdb) n

3880 }

(gdb) n

ofproto_port_add (ofproto=0x202de690, netdev=0x19fd81a40, ofp_portp=ofp_portp@entry=0xffffe2250400) at ofproto/ofproto.c:2071

2071 if (!error) {

(gdb) n

2072 const char *netdev_name = netdev_get_name(netdev);

(gdb) n

[Thread 0xffff7c29f910 (LWP 17739) exited]

[New Thread 0xffff7c24f910 (LWP 17740)]

2074 simap_put(&ofproto->ofp_requests, netdev_name,

(gdb) p netdev_name

$10 = 0x20500a30 "vhost-user2"

(gdb) n

2076 error = update_port(ofproto, netdev_name);

(gdb) n

2078 if (ofp_portp) {

(gdb) n

2079 *ofp_portp = OFPP_NONE;

(gdb) n

2080 if (!error) {

(gdb) n

2083 error = ofproto_port_query_by_name(ofproto,

(gdb) n

[Thread 0xffff7c24f910 (LWP 17740) exited]

[New Thread 0xffff7c29f910 (LWP 17741)]

2086 if (!error) {

(gdb) n

2087 *ofp_portp = ofproto_port.ofp_port;

(gdb) n

2088 ofproto_port_destroy(&ofproto_port);

(gdb) n

2087 *ofp_portp = ofproto_port.ofp_port;

(gdb) n

2088 ofproto_port_destroy(&ofproto_port);

(gdb) n

[Thread 0xffff7c29f910 (LWP 17741) exited]

[New Thread 0xffff7c24f910 (LWP 17742)]

2093 }

(gdb) n

iface_do_create (errp=0xffffe2250410, netdevp=0xffffe2250408, ofp_portp=0xffffe2250400, iface_cfg=0x20531860, br=0x202dd9c0) at vswitchd/bridge.c:2061

2061 if (error) {

(gdb) n

2072 VLOG_INFO("bridge %s: added interface %s on port %d",

(gdb) n

[Thread 0xffff7c24f910 (LWP 17742) exited]

[New Thread 0xffff7c29f910 (LWP 17743)]

2075 *netdevp = netdev;

(gdb) n

iface_create (port_cfg=0x2054ec30, iface_cfg=0x20531860, br=0x202dd9c0) at vswitchd/bridge.c:2111

2111 port = port_lookup(br, port_cfg->name);

(gdb) n

2103 error = iface_do_create(br, iface_cfg, &ofp_port, &netdev, &errp);

(gdb) n

2111 port = port_lookup(br, port_cfg->name);

(gdb) n

2112 if (!port) {

(gdb) n

2113 port = port_create(br, port_cfg);

(gdb) delete 1

No breakpoint number 1.

(gdb) quit

A debugging session is active.

Inferior 1 [process 17155] will be detached.

Quit anyway? (y or n) y

Detaching from program: /usr/sbin/ovs-vswitchd, process 17155

[Inferior 1 (process 17155) detached]

[root@localhost ovs]#

static int

iface_do_create(const struct bridge *br,

const struct ovsrec_interface *iface_cfg,

ofp_port_t *ofp_portp, struct netdev **netdevp,

char **errp)

{

struct netdev *netdev = NULL;

int error;

const char *type;

if (netdev_is_reserved_name(iface_cfg->name)) {

VLOG_WARN("could not create interface %s, name is reserved",

iface_cfg->name);

error = EINVAL;

goto error;

}

type = ofproto_port_open_type(br->ofproto,

iface_get_type(iface_cfg, br->cfg));

error = netdev_open(iface_cfg->name, type, &netdev);

if (error) {

VLOG_WARN_BUF(errp, "could not open network device %s (%s)",

iface_cfg->name, ovs_strerror(error));

goto error;

}

error = iface_set_netdev_config(iface_cfg, netdev, errp);

if (error) {

goto error;

}

/* ... */

netdev_dpdk_vhost_construct

Breakpoint 1, netdev_dpdk_vhost_construct (netdev=0x19fd81a40) at lib/netdev-dpdk.c:1369

1369 {

(gdb) bt

#0 netdev_dpdk_vhost_construct (netdev=0x19fd81a40) at lib/netdev-dpdk.c:1369

#1 0x00000000009a3d60 in netdev_open (name=<optimized out>, type=0x20532350 "dpdkvhostuser", netdevp=netdevp@entry=0xffffe2250418) at lib/netdev.c:436

#2 0x000000000090e488 in iface_do_create (errp=0xffffe2250410, netdevp=0xffffe2250408, ofp_portp=0xffffe2250400, iface_cfg=0x2052bcf0, br=0x202dd9c0) at vswitchd/bridge.c:2045

#3 iface_create (port_cfg=0x20550ac0, iface_cfg=0x2052bcf0, br=0x202dd9c0) at vswitchd/bridge.c:2103

#4 bridge_add_ports__ (br=br@entry=0x202dd9c0, wanted_ports=wanted_ports@entry=0x202ddaa0, with_requested_port=with_requested_port@entry=false) at vswitchd/bridge.c:1167

#5 0x0000000000910278 in bridge_add_ports (wanted_ports=<optimized out>, br=0x202dd9c0) at vswitchd/bridge.c:1183

#6 bridge_reconfigure (ovs_cfg=ovs_cfg@entry=0x202e3c00) at vswitchd/bridge.c:896

#7 0x00000000009134e0 in bridge_run () at vswitchd/bridge.c:3328

#8 0x000000000042469c in main (argc=11, argv=0xffffe2250958) at vswitchd/ovs-vswitchd.c:127

(gdb)

    int
    netdev_open(const char *name, const char *type, struct netdev **netdevp)
        OVS_EXCLUDED(netdev_mutex)
    {
        struct netdev *netdev;
        int error = 0;

        if (!name[0]) {
            /* Reject empty names. This saves the providers having to do this. At
             * least one screwed this up: the netdev-linux "tap" implementation
             * passed the name directly to the Linux TUNSETIFF call, which treats
             * an empty string as a request to generate a unique name. */
            return EINVAL;
        }

        netdev_initialize();

        ovs_mutex_lock(&netdev_mutex);
        netdev = shash_find_data(&netdev_shash, name);

        if (netdev &&
            type && type[0] && strcmp(type, netdev->netdev_class->type)) {

            if (netdev->auto_classified) {
                /* If this device was first created without a classification type,
                 * for example due to routing or tunneling code, and they keep a
                 * reference, a "classified" call to open will fail. In this case
                 * we remove the classless device, and re-add it below. We remove
                 * the netdev from the shash, and change the sequence, so owners of
                 * the old classless device can release/cleanup. */
                if (netdev->node) {
                    shash_delete(&netdev_shash, netdev->node);
                    netdev->node = NULL;
                    netdev_change_seq_changed(netdev);
                }

                netdev = NULL;
            } else {
                error = EEXIST;
            }
        }

        if (!netdev) {
            struct netdev_registered_class *rc;

            rc = netdev_lookup_class(type && type[0] ? type : "system");
            if (rc && ovs_refcount_try_ref_rcu(&rc->refcnt)) {
                netdev = rc->class->alloc();
                if (netdev) {
                    memset(netdev, 0, sizeof *netdev);
                    netdev->netdev_class = rc->class;
                    netdev->auto_classified = type && type[0] ? false : true;
                    netdev->name = xstrdup(name);
                    netdev->change_seq = 1;
                    netdev->reconfigure_seq = seq_create();
                    netdev->last_reconfigure_seq =
                        seq_read(netdev->reconfigure_seq);
                    ovsrcu_set(&netdev->flow_api, NULL);
                    netdev->hw_info.oor = false;
                    netdev->node = shash_add(&netdev_shash, name, netdev);

                    /* By default enable one tx and rx queue per netdev. */
                    netdev->n_txq = netdev->netdev_class->send ? 1 : 0;
                    netdev->n_rxq = netdev->netdev_class->rxq_alloc ? 1 : 0;

                    ovs_list_init(&netdev->saved_flags_list);

                    error = rc->class->construct(netdev);
                    /* Calls the class's construct callback
                     * (netdev_dpdk_construct for type "dpdk"). */
                    if (!error) {
                        netdev_change_seq_changed(netdev);
                    } else {
                        ovs_refcount_unref(&rc->refcnt);
                        seq_destroy(netdev->reconfigure_seq);
                        free(netdev->name);
                        ovs_assert(ovs_list_is_empty(&netdev->saved_flags_list));
                        shash_delete(&netdev_shash, netdev->node);
                        rc->class->dealloc(netdev);
                    }
                } else {
                    error = ENOMEM;
                }
            } else {
                VLOG_WARN("could not create netdev %s of unknown type %s",
                          name, type);
                error = EAFNOSUPPORT;
            }
        }

        if (!error) {
            netdev->ref_cnt++;
            *netdevp = netdev;
        } else {
            *netdevp = NULL;
        }
        ovs_mutex_unlock(&netdev_mutex);

        return error;
    }
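The open-or-create flow of netdev_open can be summarized in a small standalone model. This is only a sketch under simplifying assumptions, not OVS code: all `toy_*` names are hypothetical, a fixed array stands in for `netdev_shash`, and both the `netdev_mutex` locking and the `auto_classified` re-creation branch are left out.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <string.h>

#define TOY_NAME_LEN 32
#define TOY_MAX_DEVS 8

/* Hypothetical stand-in for struct netdev_class. */
struct toy_class {
    const char *type;
    int (*construct)(void);          /* returns 0 or an errno value */
};

/* Hypothetical stand-in for struct netdev. */
struct toy_dev {
    char name[TOY_NAME_LEN];
    const struct toy_class *class;
    int ref_cnt;
    int in_use;
};

static int toy_construct_ok(void) { return 0; }

static const struct toy_class toy_classes[] = {
    { "system", toy_construct_ok },
    { "dpdk", toy_construct_ok },
};

static struct toy_dev toy_devs[TOY_MAX_DEVS];   /* plays the role of netdev_shash */

static const struct toy_class *toy_lookup_class(const char *type)
{
    for (size_t i = 0; i < sizeof toy_classes / sizeof toy_classes[0]; i++) {
        if (!strcmp(toy_classes[i].type, type)) {
            return &toy_classes[i];
        }
    }
    return NULL;
}

static struct toy_dev *toy_find(const char *name)
{
    for (int i = 0; i < TOY_MAX_DEVS; i++) {
        if (toy_devs[i].in_use && !strcmp(toy_devs[i].name, name)) {
            return &toy_devs[i];
        }
    }
    return NULL;
}

/* Reuse an existing device by name, or create one through its class. */
int toy_open(const char *name, const char *type, struct toy_dev **devp)
{
    struct toy_dev *dev;

    *devp = NULL;
    if (!name[0]) {
        return EINVAL;                       /* empty names are rejected */
    }
    if (strlen(name) >= TOY_NAME_LEN) {
        return ENAMETOOLONG;                 /* limit of this sketch only */
    }

    dev = toy_find(name);
    if (dev && type && type[0] && strcmp(type, dev->class->type)) {
        return EEXIST;                       /* same name, different type */
    }

    if (!dev) {
        const struct toy_class *class =
            toy_lookup_class(type && type[0] ? type : "system");
        int error;

        if (!class) {
            return EAFNOSUPPORT;             /* unknown device type */
        }
        for (int i = 0; i < TOY_MAX_DEVS && !dev; i++) {
            if (!toy_devs[i].in_use) {
                dev = &toy_devs[i];
            }
        }
        if (!dev) {
            return ENOMEM;
        }
        memset(dev, 0, sizeof *dev);
        strcpy(dev->name, name);
        dev->class = class;
        error = class->construct();          /* type-specific setup */
        if (error) {
            return error;                    /* slot stays unused */
        }
        dev->in_use = 1;
    }

    dev->ref_cnt++;                          /* every successful open takes a ref */
    *devp = dev;
    return 0;
}
```

Opening the same name twice with the same type returns the same object with its reference count raised to 2, while reopening it with a different type fails with EEXIST.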

netdev_class netdev_dpdk_class

    static struct netdev_class netdev_dpdk_class = {
        "dpdk",
        dpdk_class_init,            /* init */
        NULL,                       /* netdev_dpdk_run */
        NULL,                       /* netdev_dpdk_wait */

        netdev_dpdk_alloc,
        netdev_dpdk_construct,
        netdev_dpdk_destruct,
        netdev_dpdk_dealloc,
        netdev_dpdk_get_config,
        NULL,                       /* netdev_dpdk_set_config */
        NULL,                       /* get_tunnel_config */

        netdev_dpdk_send,           /* send */
        NULL,                       /* send_wait */

        netdev_dpdk_set_etheraddr,
        netdev_dpdk_get_etheraddr,
        netdev_dpdk_get_mtu,
        netdev_dpdk_set_mtu,
        netdev_dpdk_get_ifindex,
        netdev_dpdk_get_carrier,
        netdev_dpdk_get_carrier_resets,
        netdev_dpdk_set_miimon,
        netdev_dpdk_get_stats,
        netdev_dpdk_set_stats,
        netdev_dpdk_get_features,
        NULL,                       /* set_advertisements */

        NULL,                       /* set_policing */
        NULL,                       /* get_qos_types */
        NULL,                       /* get_qos_capabilities */
        NULL,                       /* get_qos */
        NULL,                       /* set_qos */
        NULL,                       /* get_queue */
        NULL,                       /* set_queue */
        NULL,                       /* delete_queue */
        NULL,                       /* get_queue_stats */
        NULL,                       /* queue_dump_start */
        NULL,                       /* queue_dump_next */
        NULL,                       /* queue_dump_done */
        NULL,                       /* dump_queue_stats */

        NULL,                       /* get_in4 */
        NULL,                       /* set_in4 */
        NULL,                       /* get_in6 */
        NULL,                       /* add_router */
        NULL,                       /* get_next_hop */
        netdev_dpdk_get_status,
        NULL,                       /* arp_lookup */

        netdev_dpdk_update_flags,

        netdev_dpdk_rxq_alloc,
        netdev_dpdk_rxq_construct,
        netdev_dpdk_rxq_destruct,
        netdev_dpdk_rxq_dealloc,
        netdev_dpdk_rxq_recv,
        NULL,                       /* rxq_wait */
        NULL,                       /* rxq_drain */
    };
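The class table is a plain struct of function pointers in which a NULL slot means "operation not supported". The sketch below illustrates that idea with a cut-down, hypothetical `toy_netdev_class` that has only three slots. It uses designated initializers for readability, which is a choice of this example rather than something taken from the listing above, and the EOPNOTSUPP return of the wrappers is likewise an assumption of the sketch.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

struct toy_netdev;

/* Hypothetical, cut-down class table: three slots instead of dozens. */
struct toy_netdev_class {
    const char *type;
    int (*send)(struct toy_netdev *dev, int n_pkts);
    int (*rxq_alloc)(void);
    int (*get_in4)(struct toy_netdev *dev);     /* optional operation */
};

struct toy_netdev {
    const struct toy_netdev_class *class;
    int n_txq;
    int n_rxq;
    int sent;                                   /* packets "transmitted" */
};

static int toy_send(struct toy_netdev *dev, int n_pkts)
{
    dev->sent += n_pkts;
    return 0;
}

static int toy_rxq_alloc(void) { return 0; }

/* A class that implements send and rxq_alloc but not get_in4. */
const struct toy_netdev_class toy_dpdk_class = {
    .type = "dpdk",
    .send = toy_send,
    .rxq_alloc = toy_rxq_alloc,
    .get_in4 = NULL,
};

/* A receive-only class: the send slot is left NULL. */
const struct toy_netdev_class toy_rxonly_class = {
    .type = "rxonly",
    .rxq_alloc = toy_rxq_alloc,
};

/* Mirrors the default queue setup: one queue per direction if supported. */
void toy_init_queues(struct toy_netdev *dev,
                     const struct toy_netdev_class *class)
{
    dev->class = class;
    dev->n_txq = class->send ? 1 : 0;
    dev->n_rxq = class->rxq_alloc ? 1 : 0;
    dev->sent = 0;
}

/* Generic wrappers check the slot before dispatching through it. */
int toy_netdev_send(struct toy_netdev *dev, int n_pkts)
{
    return dev->class->send ? dev->class->send(dev, n_pkts) : EOPNOTSUPP;
}

int toy_netdev_get_in4(struct toy_netdev *dev)
{
    return dev->class->get_in4 ? dev->class->get_in4(dev) : EOPNOTSUPP;
}
```

With `toy_dpdk_class` a device gets one tx and one rx queue, whereas `toy_rxonly_class` yields `n_txq == 0`, matching the `send ? 1 : 0` test in netdev_open.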

ovs-vsctl add-port br0 dpdk1 -- set Interface dpdk1 type=dpdk options:dpdk-devargs=0000:05:00.0

    (gdb) b netdev_dpdk_construct
    Breakpoint 1 at 0xa5aca0: file lib/netdev-dpdk.c, line 1467.
    (gdb) b netdev_dpdk_send
    Function "netdev_dpdk_send" not defined.
    Make breakpoint pending on future shared library load? (y or [n]) n
    (gdb) b netdev_dpdk_send
    Function "netdev_dpdk_send" not defined.
    Make breakpoint pending on future shared library load? (y or [n]) y
    Breakpoint 2 (netdev_dpdk_send) pending.
    (gdb) b netdev_dpdk_rxq_recv
    Breakpoint 3 at 0xa62fd8: file lib/netdev-dpdk.c, line 2489.
    (gdb) c
    Continuing.

    Breakpoint 1, netdev_dpdk_construct (netdev=0x19fd81ec0) at lib/netdev-dpdk.c:1467
    1467 {
    (gdb) bt
    #0 netdev_dpdk_construct (netdev=0x19fd81ec0) at lib/netdev-dpdk.c:1467
    #1 0x00000000009a3d60 in netdev_open (name=<optimized out>, type=0x6ed09a0 "dpdk", netdevp=netdevp@entry=0xfffff6389b38) at lib/netdev.c:436
    #2 0x000000000090e488 in iface_do_create (errp=0xfffff6389b30, netdevp=0xfffff6389b28, ofp_portp=0xfffff6389b20, iface_cfg=0x5476680, br=0x51d29b0) at vswitchd/bridge.c:2045
    #3 iface_create (port_cfg=0x6ed09c0, iface_cfg=0x5476680, br=0x51d29b0) at vswitchd/bridge.c:2103
    #4 bridge_add_ports__ (br=br@entry=0x51d29b0, wanted_ports=wanted_ports@entry=0x51d2a90, with_requested_port=with_requested_port@entry=false) at vswitchd/bridge.c:1167
    #5 0x0000000000910278 in bridge_add_ports (wanted_ports=<optimized out>, br=0x51d29b0) at vswitchd/bridge.c:1183
    #6 bridge_reconfigure (ovs_cfg=ovs_cfg@entry=0x51d0620) at vswitchd/bridge.c:896
    #7 0x00000000009134e0 in bridge_run () at vswitchd/bridge.c:3328
    #8 0x000000000042469c in main (argc=11, argv=0xfffff638a078) at vswitchd/ovs-vswitchd.c:127
    (gdb) c
    Continuing.
    [New Thread 0xffffb43df910 (LWP 25825)]
    [Switching to Thread 0xfffd53ffd510 (LWP 19066)]

    Breakpoint 3, netdev_dpdk_rxq_recv (rxq=0x19fd81400, batch=0xfffd53ffca80, qfill=0x0) at lib/netdev-dpdk.c:2489
    2489 {
    (gdb) bt
    #0 netdev_dpdk_rxq_recv (rxq=0x19fd81400, batch=0xfffd53ffca80, qfill=0x0) at lib/netdev-dpdk.c:2489
    #1 0x00000000009a440c in netdev_rxq_recv (rx=<optimized out>, batch=batch@entry=0xfffd53ffca80, qfill=<optimized out>) at lib/netdev.c:726
    #2 0x00000000009785bc in dp_netdev_process_rxq_port (pmd=pmd@entry=0x5d3f680, rxq=0x51d14a0, port_no=3) at lib/dpif-netdev.c:4461
    #3 0x0000000000978a24 in pmd_thread_main (f_=0x5d3f680) at lib/dpif-netdev.c:5731
    #4 0x00000000009fc5dc in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:383
    #5 0x0000ffffb7fd7d38 in start_thread (arg=0xfffd53ffd510) at pthread_create.c:309
    #6 0x0000ffffb7cbf690 in thread_start () from /lib64/libc.so.6
    (gdb) c
    Continuing.
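The second backtrace shows the receive path running in a PMD thread: pmd_thread_main calls dp_netdev_process_rxq_port, which calls netdev_rxq_recv and finally netdev_dpdk_rxq_recv. A rough model of that polling structure might look like the following. Every `toy_*` name is hypothetical, the burst size of 32 is an assumption of the sketch, and a counter stands in for real packet buffers.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_MAX_BURST 32    /* assumed per-call batch limit for this sketch */

struct toy_rxq {
    int pending;            /* packets currently waiting in the queue */
};

/* Plays the role of the class's rxq_recv: take at most one burst and
 * return immediately, even when the queue is empty. */
static int toy_rxq_recv(struct toy_rxq *rxq)
{
    int n = rxq->pending < TOY_MAX_BURST ? rxq->pending : TOY_MAX_BURST;

    rxq->pending -= n;
    return n;
}

/* Plays the role of dp_netdev_process_rxq_port: one receive attempt on
 * one queue. A real datapath would classify and forward the batch here. */
static int toy_process_rxq(struct toy_rxq *rxq)
{
    return toy_rxq_recv(rxq);
}

/* Plays the role of the PMD loop body: visit every queue in the poll
 * list, round after round, without sleeping. Returns packets handled. */
int toy_pmd_poll(struct toy_rxq *poll_list, size_t n_rxqs, int rounds)
{
    int total = 0;

    for (int r = 0; r < rounds; r++) {
        for (size_t i = 0; i < n_rxqs; i++) {
            total += toy_process_rxq(&poll_list[i]);
        }
    }
    return total;
}
```

With two queues holding 40 and 5 packets, one round handles 37 packets (32 + 5) and leaves 8 in the first queue for the next round.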

netdev_dpdk|INFO|vHost Device '/var/run/openvswitch/vhost-user1' has been removed

    static void
    destroy_device(int vid)
    {
        struct netdev_dpdk *dev;
        bool exists = false;
        char ifname[IF_NAME_SZ];

        rte_vhost_get_ifname(vid, ifname, sizeof ifname);

        ovs_mutex_lock(&dpdk_mutex);
        LIST_FOR_EACH (dev, list_node, &dpdk_list) {
            if (netdev_dpdk_get_vid(dev) == vid) {

                ovs_mutex_lock(&dev->mutex);
                dev->vhost_reconfigured = false;
                ovsrcu_index_set(&dev->vid, -1);
                memset(dev->vhost_rxq_enabled, 0,
                       dev->up.n_rxq * sizeof *dev->vhost_rxq_enabled);
                netdev_dpdk_txq_map_clear(dev);

                netdev_change_seq_changed(&dev->up);
                ovs_mutex_unlock(&dev->mutex);
                exists = true;
                break;
            }
        }

        ovs_mutex_unlock(&dpdk_mutex);

        if (exists) {
            /*
             * Wait for other threads to quiesce after setting the 'virtio_dev'
             * to NULL, before returning.
             */
            ovsrcu_synchronize();
            /*
             * As call to ovsrcu_synchronize() will end the quiescent state,
             * put thread back into quiescent state before returning.
             */
            ovsrcu_quiesce_start();
            VLOG_INFO("vHost Device '%s' has been removed", ifname);
        } else {
            VLOG_INFO("vHost Device '%s' not found", ifname);
        }
    }
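The core of destroy_device is a lookup by vid under the global list lock, followed by invalidating the device under its own lock. The sketch below models only that part, as an illustration under stated assumptions: the `toy_*` names are hypothetical, pthread mutexes replace OVS mutexes, a plain `int` replaces the RCU-protected vid, and the ovsrcu_synchronize() wait for concurrent readers is not modeled at all.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define TOY_MAX_RXQ 4

/* Hypothetical stand-in for the vhost part of struct netdev_dpdk. */
struct toy_vhost_dev {
    pthread_mutex_t mutex;
    int vid;                          /* -1 means no guest is attached */
    bool rxq_enabled[TOY_MAX_RXQ];
    unsigned change_seq;              /* bumped on every state change */
};

/* Plays the role of dpdk_mutex, which guards the device list. */
static pthread_mutex_t toy_list_mutex = PTHREAD_MUTEX_INITIALIZER;

/* Helper for the sketch: mark a device as attached to guest 'vid'. */
void toy_dev_attach(struct toy_vhost_dev *dev, int vid)
{
    pthread_mutex_init(&dev->mutex, NULL);
    dev->vid = vid;
    for (int i = 0; i < TOY_MAX_RXQ; i++) {
        dev->rxq_enabled[i] = true;
    }
    dev->change_seq = 1;
}

/* Detach the device that owns 'vid'. Returns true if one was found. */
bool toy_destroy_device(struct toy_vhost_dev *devs, size_t n_devs, int vid)
{
    bool exists = false;

    pthread_mutex_lock(&toy_list_mutex);          /* list lock first */
    for (size_t i = 0; i < n_devs; i++) {
        struct toy_vhost_dev *dev = &devs[i];

        if (dev->vid == vid) {
            pthread_mutex_lock(&dev->mutex);      /* then the device lock */
            dev->vid = -1;                        /* datapath must stop using it */
            memset(dev->rxq_enabled, 0, sizeof dev->rxq_enabled);
            dev->change_seq++;                    /* notify watchers */
            pthread_mutex_unlock(&dev->mutex);
            exists = true;
            break;
        }
    }
    pthread_mutex_unlock(&toy_list_mutex);

    return exists;
}
```

A second call with the same vid returns false, which corresponds to the "not found" branch in the original function.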