1. Overview:

The guest (KVM platform) has no interrupts, no devices and no I/O.

Tasks are goroutines.

2. Syscalls:

The Sentry can run in both non-root mode (ring 0) and root mode (ring 3).

The user application's syscalls are intercepted as in a normal guest and handled by the Sentry kernel. They stay in non-root mode as long as the syscall can be handled without the Sentry itself making a syscall on the host.

The Sentry kernel's own syscalls are always executed in root (ring 3) mode. A Sentry syscall ultimately executes HLT, which causes a VM exit, and execution then continues in root mode.

Basic flow:

Entering the guest: the bluepill loop runs until bluepillHandler and setcontext return. Execution is now in non-root mode.

User application: syscall -> sysenter -> user: -> SwitchToUser returns -> the syscall is handled by the Sentry kernel (t.doSyscall()) in non-root mode.

Sentry kernel: syscall -> sysenter -> HLT -> VM exit -> t.doSyscall() [in root mode].
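The two paths above can be written down as a toy trace generator. This is a hypothetical model, not gVisor code. Mode, Step, appSyscall and sentrySyscall are invented for illustration, and the step names only mirror the flow listed above. The model shows that an application syscall stays in the guest unless the Sentry itself needs the host.

```go
package main

import "fmt"

// Mode says where a step of the path executes (hypothetical model).
type Mode string

const (
	NonRoot Mode = "non-root (guest)"
	Root    Mode = "root (host)"
)

// Step is one hop on a syscall path.
type Step struct {
	What string
	Mode Mode
}

// sentrySyscall models a host syscall issued by the Sentry while in guest
// mode: the guest-side handler executes HLT, the VM exits, and the call is
// then carried out in root mode.
func sentrySyscall() []Step {
	return []Step{
		{"sentry: syscall -> sysenter", NonRoot},
		{"HLT -> VM exit", Root},
		{"syscall executed on the host", Root},
	}
}

// appSyscall models an application syscall. The Sentry handles it in guest
// mode; the path leaves the guest only if the Sentry needs the host.
func appSyscall(needsHost bool) []Step {
	trace := []Step{
		{"app: syscall -> sysenter", NonRoot},
		{"SwitchToUser returns", NonRoot},
		{"t.doSyscall()", NonRoot},
	}
	if needsHost {
		trace = append(trace, sentrySyscall()...)
	}
	return trace
}

func main() {
	for _, s := range appSyscall(true) {
		fmt.Printf("%-30s %s\n", s.What, s.Mode)
	}
}
```

The fast path (needsHost false) never produces a root-mode step. This is why syscalls that the Sentry can serve on its own avoid a VM exit.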

3. Memory:

Physical memory:

The size of guest physical memory is almost 1 << cpu.physicalbits, but it may be smaller because of reserved regions, etc.

The vsize - psize part is not in physicalRegion. The gva <-> gpa mapping, i.e. the guest page table, covers almost all gva. The gpa <-> hva (hpa) mapping, however, is initially set up only for the Sentry kernel. After that, HandleUserFault fills each gpa page frame (from filemem or a HostFile) whenever there is an EPT fault.
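A minimal sketch of the lazy two-stage translation follows. It assumes a toy model. The vm type, handleFault and the bump allocator that stands in for filemem are hypothetical, and real EPT faults are raised by hardware rather than by a missing map entry. The guest page table is populated up front, while gpa -> hva entries appear only on first touch.

```go
package main

import (
	"errors"
	"fmt"
)

const pageSize = 4096

// vm is a toy model of the two translation stages.
type vm struct {
	guestPT map[uint64]uint64 // gva page -> gpa page (guest page table, set up front)
	ept     map[uint64]uint64 // gpa page -> hva page (filled lazily on "EPT faults")
	nextHVA uint64            // hypothetical bump allocator standing in for filemem
	faults  int               // number of simulated EPT faults taken
}

// handleFault plays the role of HandleUserFault: it backs one gpa page with
// host memory and installs the gpa -> hva entry.
func (m *vm) handleFault(gpaPage uint64) {
	m.ept[gpaPage] = m.nextHVA
	m.nextHVA += pageSize
	m.faults++
}

// translate walks gva -> gpa -> hva and takes a simulated EPT fault if the
// gpa page has no host backing yet.
func (m *vm) translate(gva uint64) (uint64, error) {
	page, off := gva&^(pageSize-1), gva&(pageSize-1)
	gpa, ok := m.guestPT[page]
	if !ok {
		return 0, errors.New("guest page fault: gva not mapped")
	}
	hva, ok := m.ept[gpa]
	if !ok {
		m.handleFault(gpa)
		hva = m.ept[gpa]
	}
	return hva + off, nil
}

func main() {
	m := &vm{
		guestPT: map[uint64]uint64{0x1000: 0x5000, 0x2000: 0x6000},
		ept:     map[uint64]uint64{0x5000: 0x7f0000005000}, // pre-mapped "Sentry" page
		nextHVA: 0x7f0000100000,
	}
	a, _ := m.translate(0x1010) // already backed: no fault
	b, _ := m.translate(0x2020) // first touch: simulated EPT fault
	fmt.Printf("%#x %#x faults=%d\n", a, b, m.faults)
}
```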

Page tables:

gVisor itself is mapped in both root and non-root mode, with gva == hva. So the Sentry runs in the userspace address range both in root ring 3 mode and in non-root ring 0 mode.

User app: the userspace address range (lower half of the 64-bit address space) <--> gpa.

The kernelspace address range (upper half of the 64-bit address space) is actually the Sentry's userspace address range with bit 63 set <--> gpa. This mapping is almost useless and is probably needed only for the page-table switch and some setup. The Sentry cannot run from this address range. Even position-independent code (PIC) does not help, since PIC addresses are resolved once, not every time they are hit.

Sentry kernel: the userspace address range, which is the same as the userspace address range on the host. So gva actually equals hva, and the chain is gva <-> gpa <-> hva.

The kernelspace address range is hva with bit 63 set <--> gpa. The gpa <--> hva (hpa) mapping is set up through EPT. Again, gpa <--> hva is initially set up only for the Sentry kernel. All later mappings are handled by EPT faults, which eventually call HandleUserFault().

From this we can see that every user application syscall involves a page-table switch. This is somewhat similar to KPTI, but the page tables are very different.
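The address arithmetic the notes describe can be sketched as follows. The constant follows the notes (bit 63 set) and is an assumption. x86-64 requires canonical addresses, so the real alias offset may set more high bits than this. The function names are hypothetical.

```go
package main

import "fmt"

// kernelBit follows the notes: the upper-half alias of a Sentry address is
// that address with bit 63 set. Assumption: real x86-64 addresses must be
// canonical, so the actual alias offset may set more high bits.
const kernelBit uint64 = 1 << 63

// isKernelHalf reports whether addr lies in the upper ("kernelspace") half.
func isKernelHalf(addr uint64) bool { return addr&kernelBit != 0 }

// kernelAlias maps a Sentry host virtual address (equal to its gva) to its
// upper-half alias; in the notes' model both translate to the same gpa.
func kernelAlias(hva uint64) uint64 { return hva | kernelBit }

// fromKernelAlias recovers the lower-half address from an alias.
func fromKernelAlias(addr uint64) uint64 { return addr &^ kernelBit }

func main() {
	hva := uint64(0x7f1234567000)
	k := kernelAlias(hva)
	fmt.Printf("%#x -> %#x (kernel half: %v)\n", hva, k, isKernelHalf(k))
}
```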

Since the user application's and the Sentry kernel's page tables probably overlap (they use the same userspace address range), they cannot be mapped at the same time. On a syscall, the Sentry switches to its own page table, which contains no mapping of the user application. This makes accessing user memory complicated, and it is why usermem is needed. In Linux, by contrast, the kernel's page table is a superset of the user process's page table, so the kernel can access user memory conveniently.

The Sentry kernel still has to access the user application's memory. For example, to serve a write syscall for the application, it must copy the data out of the application's address space. How does the Sentry find its own address for a given application address? There are two ways. It can walk the application's page table to get uaddr -> gpa. Alternatively, it can walk the application's VMAs to find uaddr -> file + file offset, and then walk the application's address_space to find file + file offset -> gpa. The Sentry knows gpa -> hva, because it maps all of the memory itself and stores the mapping, so it can obtain the hva. Inside the Sentry gva == hva, so this hva is accessible whether the Sentry is running in root or non-root mode.
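Here is a minimal sketch of the second lookup path (VMA -> file offset -> gpa -> hva). It assumes simplified data structures. vma, fileKey, physRegion, addrSpace and sentryAddr are hypothetical and are not gVisor's usermem or memory-manager types. A real implementation would fault the page in rather than fail when it is not resident, and would also handle copies that cross page boundaries.

```go
package main

import (
	"fmt"
	"sort"
)

const pageSize = 4096

// vma is a simplified user mapping: [start, end) backed by file from offset off.
type vma struct {
	start, end uint64
	file       string
	off        uint64
}

// fileKey names one page of a file, like an entry in an address_space.
type fileKey struct {
	file string
	page uint64 // page-aligned file offset
}

// physRegion records how the Sentry mapped guest physical memory:
// a gpa in [gpa, gpa+size) lives at hva + (gpa - r.gpa).
type physRegion struct{ gpa, hva, size uint64 }

// addrSpace bundles the three lookups used by sentryAddr.
type addrSpace struct {
	vmas  []vma              // sorted by start, non-overlapping
	pages map[fileKey]uint64 // file page -> gpa page
	phys  []physRegion       // gpa -> hva, known to the Sentry
}

// sentryAddr turns a user address into an address the Sentry can dereference
// directly (inside the Sentry gva == hva).
func (as *addrSpace) sentryAddr(uaddr uint64) (uint64, error) {
	// 1. uaddr -> vma (binary search on the sorted VMAs).
	i := sort.Search(len(as.vmas), func(i int) bool { return as.vmas[i].end > uaddr })
	if i == len(as.vmas) || uaddr < as.vmas[i].start {
		return 0, fmt.Errorf("%#x: no vma", uaddr)
	}
	v := as.vmas[i]
	// 2. vma -> file + file offset.
	foff := v.off + (uaddr - v.start)
	// 3. file + offset -> gpa through the file's page index.
	gpaPage, ok := as.pages[fileKey{v.file, foff &^ (pageSize - 1)}]
	if !ok {
		return 0, fmt.Errorf("%#x: file page not resident", uaddr)
	}
	gpa := gpaPage + (foff & (pageSize - 1))
	// 4. gpa -> hva using the Sentry's own record of its mappings.
	for _, r := range as.phys {
		if gpa >= r.gpa && gpa < r.gpa+r.size {
			return r.hva + (gpa - r.gpa), nil
		}
	}
	return 0, fmt.Errorf("%#x: gpa %#x has no host mapping", uaddr, gpa)
}

func main() {
	as := &addrSpace{
		vmas:  []vma{{start: 0x400000, end: 0x402000, file: "memfd", off: 0x10000}},
		pages: map[fileKey]uint64{{"memfd", 0x11000}: 0x200000},
		phys:  []physRegion{{gpa: 0, hva: 0x7f0000000000, size: 1 << 30}},
	}
	hva, err := as.sentryAddr(0x401234)
	fmt.Printf("%#x %v\n", hva, err)
}
```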

Filesystem:

A thin VFS lives in the Sentry, as in Linux, along with limited proc and sys implementations. The Gofer is used only for 9pfs.

In the code, every file operation goes through the 9P server. However, the logs show no Tread/Twrite messages at the 9P server; only Topen/Tclunk go through it. The working assumption is that reads and writes go directly to the host file, probably through an fd passed over a Unix domain socket.

Network:

Packets are received by a goroutine and transmitted via endpoint.WritePacket.
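The receive/transmit split can be sketched as follows, under loudly stated assumptions. linkEndpoint, fakeNIC, startRX and echo are hypothetical. The one-method WritePacket(pkt []byte) error signature is a simplification, not gVisor's real endpoint API. Receive is a dedicated goroutine that blocks on the host side. Transmit is a direct call from whichever goroutine produced the packet.

```go
package main

import (
	"fmt"
	"sync"
)

// linkEndpoint is a hypothetical, simplified stand-in for a link endpoint;
// gVisor's real endpoint interface has a different signature.
type linkEndpoint interface {
	WritePacket(pkt []byte) error
}

// fakeNIC records transmitted packets instead of writing them to the host.
type fakeNIC struct {
	mu   sync.Mutex
	sent [][]byte
}

func (n *fakeNIC) WritePacket(pkt []byte) error {
	n.mu.Lock()
	defer n.mu.Unlock()
	n.sent = append(n.sent, append([]byte(nil), pkt...))
	return nil
}

// startRX launches the receive goroutine. It blocks on the host side (here a
// channel) and hands each packet to deliver, the stack's inbound path.
// The returned channel is closed once wire is closed and drained.
func startRX(wire <-chan []byte, deliver func([]byte)) <-chan struct{} {
	done := make(chan struct{})
	go func() {
		defer close(done)
		for pkt := range wire {
			deliver(pkt)
		}
	}()
	return done
}

// echo is a toy "network stack" that answers every inbound packet on TX.
func echo(ep linkEndpoint) func([]byte) {
	return func(pkt []byte) {
		_ = ep.WritePacket(append([]byte("re:"), pkt...))
	}
}

func main() {
	nic := &fakeNIC{}
	wire := make(chan []byte)
	done := startRX(wire, echo(nic))
	for _, p := range []string{"a", "b", "c"} {
		wire <- []byte(p)
	}
	close(wire)
	<-done
	for _, p := range nic.sent {
		fmt.Println(string(p))
	}
}
```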

Summary:

Shortcomings: compatibility, instability and syscall overhead. For example, the mount command causes gVisor to exit suddenly, the ip command cannot run, and the SO_SNDBUF socket option is not supported.

Merits: a small memory footprint. Physical memory can be backed by a memfd or a physical file (somewhat like DAX), and memory is mapped on demand rather than fixed from the beginning.

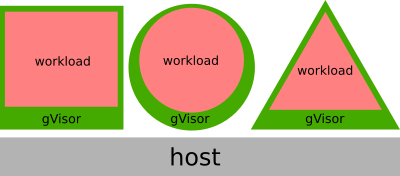

The resource model for gVisor does not assume a fixed number of threads of execution (i.e. vCPUs) or amount of physical memory. Where possible, decisions about underlying physical resources are delegated to the host system, where optimizations can be made with global information. This delegation allows the sandbox to be highly dynamic in terms of resource usage: spanning a large number of cores and a large amount of memory when busy, and yielding those resources back to the host when not.

In other words, the shape of the sandbox should closely track the shape of the sandboxed process:

Processes

Much like a Virtual Machine (VM), a gVisor sandbox appears as an opaque process on the system. Processes within the sandbox do not manifest as processes on the host system, and process-level interactions within the sandbox require entering the sandbox (e.g. via a Docker exec).

Networking

The sandbox attaches a network endpoint to the system, but runs its own network stack. All network resources, other than packets in flight on the host, exist only inside the sandbox, bound by relevant resource limits.

You can interact with network endpoints exposed by the sandbox, just as you would any other container, but network introspection similarly requires entering the sandbox.

Files

Files in the sandbox may be backed by different implementations. For host-native files (where a file descriptor is available), the Gofer may return a file descriptor to the Sentry via SCM_RIGHTS¹.

These files may be read from and written to through standard system calls, and also mapped into the associated application’s address space. This allows the same host memory to be shared across multiple sandboxes, although this mechanism does not preclude the use of side-channels (see Security Model).

Note that some file systems exist only within the context of the sandbox. For example, in many cases a tmpfs mount will be available at /tmp or /dev/shm, which allocates memory directly from the sandbox memory file (see below). Ultimately, these will be accounted against relevant limits in a similar way as the host native case.

Threads

The Sentry models individual task threads with goroutines. As a result, each task thread is a lightweight green thread, and may not correspond to an underlying host thread.

However, application execution is modelled as a blocking system call with the Sentry. This means that additional host threads may be created, depending on the number of active application threads. In practice, a busy application will converge on the number of active threads, and the host will be able to make scheduling decisions about all application threads.

Time

Time in the sandbox is provided by the Sentry, through its own vDSO and time-keeping implementation. This is distinct from the host time, and no state is shared with the host, although the time will be initialized with the host clock.

The Sentry runs timers to note the passage of time, much like a kernel running on hardware (though the timers are software timers, in this case). These timers provide updates to the vDSO, the time returned through system calls, and the time recorded for usage or limit tracking (e.g. RLIMIT_CPU).

When all application threads are idle, the Sentry disables timers until an event occurs that wakes either the Sentry or an application thread, similar to a tickless kernel. This allows the Sentry to achieve near zero CPU usage for idle applications.

Memory

The Sentry implements its own memory management, including demand-paging and a Sentry internal page cache for files that cannot be used natively. A single memfd backs all application memory.

Address spaces

The creation of address spaces is platform-specific. For some platforms, additional “stub” processes may be created on the host in order to support additional address spaces. These stubs are subject to various limits applied at the sandbox level (e.g. PID limits).

Physical memory

The host is able to manage physical memory using regular means (e.g. tracking working sets, reclaiming and swapping under pressure). The Sentry lazily populates host mappings for applications, and allows the host to demand-page those regions, which is critical for the functioning of those mechanisms.

In order to avoid excessive overhead, the Sentry does not demand-page individual pages. Instead, it selects appropriate regions based on heuristics. There is a trade-off here: the Sentry is unable to trivially determine which pages are active and which are not. Even if pages were individually faulted, the host may select pages to be reclaimed or swapped without the Sentry’s knowledge.
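One way to pick a region instead of a single page is to round the faulting address to a fixed, aligned chunk and clip it to the mapping. The text does not say which heuristic the Sentry uses. faultRange and the 2 MiB chunk size are assumptions chosen for illustration.

```go
package main

import "fmt"

// faultRange picks the range to populate after a fault at addr: the
// chunk-aligned region containing addr, clipped to the mapping [start, end).
// chunk must be a power of two. Hypothetical heuristic, not the Sentry's.
func faultRange(addr, start, end, chunk uint64) (lo, hi uint64) {
	lo = addr &^ (chunk - 1)
	hi = lo + chunk
	if lo < start {
		lo = start
	}
	if hi > end {
		hi = end
	}
	return lo, hi
}

func main() {
	const twoMiB = 2 << 20 // assumed chunk size
	lo, hi := faultRange(0x345678, 0x100000, 0x10000000, twoMiB)
	fmt.Printf("populate [%#x, %#x)\n", lo, hi)
}
```

Larger chunks mean fewer faults but more memory committed that may never be touched. This is the same trade-off the paragraph above describes.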

Therefore, memory usage statistics within the sandbox (e.g. via proc) are approximations. The Sentry maintains an internal breakdown of memory usage, and can collect accurate information but only through a relatively expensive API call. In any case, it would likely be considered unwise to share precise information about how the host is managing memory with the sandbox.

Finally, when an application marks a region of memory as no longer needed, for example via a call to madvise, the Sentry releases this memory back to the host. There can be performance penalties for this, since it may be cheaper in many cases to retain the memory and use it to satisfy some other request. However, releasing it immediately to the host allows the host to more effectively multiplex resources and apply an efficient global policy.

Limits

All Sentry threads and Sentry memory are subject to a container cgroup. However, application usage will not appear as anonymous memory usage, and will instead be accounted to the memfd. All anonymous memory will correspond to Sentry usage, and host memory charged to the container will work as standard.

The cgroups can be monitored for standard signals (pressure indicators, threshold notifiers, etc.) and can also be adjusted dynamically. Note that the Sentry itself may listen for pressure signals in its containing cgroup, in order to purge internal caches.
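In cgroup v2, pressure indicators are exposed as PSI files such as memory.pressure. The following is a minimal sketch of parsing that format, assuming cgroup v2. psi and parsePSI are hypothetical helpers, and this is not how the Sentry listens for pressure. A real consumer would read the file from the container's cgroup directory, or register a PSI trigger and poll for events, instead of parsing a fixed string.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// psi holds one line of a Linux PSI file such as cgroup v2 memory.pressure.
type psi struct {
	Avg10, Avg60, Avg300 float64 // % of time stalled over 10s/60s/300s windows
	Total                uint64  // cumulative stall time in microseconds
}

// parsePSI parses the "some ..." and "full ..." lines of a pressure file.
func parsePSI(s string) (map[string]psi, error) {
	out := make(map[string]psi)
	for _, line := range strings.Split(strings.TrimSpace(s), "\n") {
		fields := strings.Fields(line)
		if len(fields) == 0 {
			continue
		}
		var p psi
		for _, kv := range fields[1:] {
			k, v, ok := strings.Cut(kv, "=")
			if !ok {
				return nil, fmt.Errorf("malformed field %q", kv)
			}
			var err error
			switch k {
			case "avg10":
				p.Avg10, err = strconv.ParseFloat(v, 64)
			case "avg60":
				p.Avg60, err = strconv.ParseFloat(v, 64)
			case "avg300":
				p.Avg300, err = strconv.ParseFloat(v, 64)
			case "total":
				p.Total, err = strconv.ParseUint(v, 10, 64)
			}
			if err != nil {
				return nil, err
			}
		}
		out[fields[0]] = p
	}
	return out, nil
}

func main() {
	// Sample contents in the PSI format; a real reader would load them from
	// the container's cgroup directory (the path is deployment-specific).
	sample := "some avg10=1.50 avg60=0.40 avg300=0.10 total=123456\n" +
		"full avg10=0.00 avg60=0.00 avg300=0.00 total=7890\n"
	p, err := parsePSI(sample)
	fmt.Println(p["some"].Avg10, p["full"].Total, err)
}
```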

---

¹ Unless host networking is enabled, the Sentry is not able to create or open host file descriptors itself; it can only receive them in this way from the Gofer.