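One of Kubernetes' key features is connecting Pods (containers) across different nodes, regardless of physical node boundaries. In some environments, however, such as a public cloud, Pods that belong to different tenants should not be able to reach each other, and that calls for network isolation. Kubernetes provides NetworkPolicy for this purpose; this article shows how to use it to isolate traffic at the Namespace level.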

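Note that NetworkPolicy only works with a network plugin that implements it. Without such a plugin, NetworkPolicy objects can still be created but have no effect. Here we use Calico to enforce the isolation.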

Connectivity test

Before using NetworkPolicy, we first verify that Pods can reach each other without it. The test environment is:

Namespace: ns-calico1, ns-calico2

Deployment: ns-calico1/calico1-nginx

Pod: ns-calico2/calico2-busybox

Service: ns-calico1/calico1-nginx

First create the Namespaces:

apiVersion: v1
kind: Namespace
metadata:
  name: ns-calico1
  labels:
    user: calico1
---
apiVersion: v1
kind: Namespace
metadata:
  name: ns-calico2

root@ubuntu:~/tenant# kubectl apply -f namespace.yaml
namespace/ns-calico1 created
namespace/ns-calico2 created
root@ubuntu:~/tenant# kubectl get ns
NAME              STATUS   AGE
default           Active   12d
kube-node-lease   Active   12d
kube-public       Active   12d
kube-system       Active   12d
ns-calico1        Active   25s
ns-calico2        Active   25s
tmp               Active   5d6h
volcano-system    Active   9d

Next, create ns-calico1/calico1-nginx:

root@ubuntu:~/tenant# cat calico1-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: calico1-nginx
  namespace: ns-calico1
spec:
  selector:
    matchLabels:
      app: calico1-nginx
  replicas: 1
  template:
    metadata:
      labels:
        user: calico1
        app: calico1-nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: calico1-nginx
  namespace: ns-calico1
  labels:
    user: calico1
spec:
  selector:
    app: nginx
  ports:
  - port: 80

root@ubuntu:~/tenant# kubectl get svc -n ns-calico1
NAME            TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
calico1-nginx   ClusterIP   10.101.254.195   <none>        80/TCP    66s
root@ubuntu:~/tenant# kubectl get deploy -n ns-calico1
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
calico1-nginx   1/1     1            1           75s
root@ubuntu:~/tenant# kubectl get pod -n ns-calico1
NAME                             READY   STATUS    RESTARTS   AGE
calico1-nginx-688fdbb89b-vctjv   1/1     Running   0          82s
root@ubuntu:~/tenant# kubectl get pod -n ns-calico1 -o wide
NAME                             READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
calico1-nginx-688fdbb89b-vctjv   1/1     Running   0          86s   10.244.29.18   bogon   <none>           <none>
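A sanity check that may be worth doing at this point is whether the Service actually has endpoints. The Service manifest selects `app: nginx`, while the Pod template carries `app: calico1-nginx`; a Service whose selector matches no Pod has no endpoints, and connections through its name or ClusterIP will not reach nginx. The helper below is only a sketch: the function name `service_endpoint_count` is made up for illustration, and it assumes `kubectl` is already configured for the cluster.

```shell
#!/bin/sh
# service_endpoint_count NAMESPACE SERVICE
# Prints how many ready Pod IPs are registered behind the Service.
# Hypothetical helper; assumes kubectl can reach the cluster.
service_endpoint_count() {
    ns="$1"
    svc="$2"
    # jsonpath prints the ready endpoint IPs separated by spaces;
    # wc -w counts them (0 when the selector matches no Pod).
    kubectl get endpoints "$svc" -n "$ns" \
        -o jsonpath='{.subsets[*].addresses[*].ip}' | wc -w | tr -d ' '
}

# Usage:
#   service_endpoint_count ns-calico1 calico1-nginx
```

If the count is 0, changing the Service selector to `app: calico1-nginx` should let the Service pick up the nginx Pod.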

Finally, create ns-calico2/calico2-busybox:

apiVersion: v1
kind: Pod
metadata:
  name: calico2-busybox
  namespace: ns-calico2
spec:
  containers:
  - name: busybox
    image: busybox
    command:
    - sleep
    - "3600"

root@ubuntu:~/tenant# kubectl create -f calico2-busybox.yaml
pod/calico2-busybox created
root@ubuntu:~/tenant# kubectl get pod -n ns-calico2
NAME              READY   STATUS              RESTARTS   AGE
calico2-busybox   0/1     ContainerCreating   0          7s
root@ubuntu:~/tenant# kubectl get pod -n ns-calico2
NAME              READY   STATUS    RESTARTS   AGE
calico2-busybox   1/1     Running   0          46s
root@ubuntu:~/tenant# kubectl get pod -n ns-calico2 -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
calico2-busybox   1/1     Running   0          53s   10.244.29.12   bogon   <none>           <none>
root@ubuntu:~/tenant# kubectl get svc -n ns-calico2
No resources found in ns-calico2 namespace.
root@ubuntu:~/tenant# kubectl get ns
NAME              STATUS   AGE
default           Active   12d
kube-node-lease   Active   12d
kube-public       Active   12d
kube-system       Active   12d
ns-calico1        Active   14m
ns-calico2        Active   14m
tmp               Active   5d6h
volcano-system    Active   9d

The test workloads are now deployed. Next, from inside calico2-busybox, check whether calico1-nginx is reachable:

# kubectl exec -it calico2-busybox -n ns-calico2 -- wget --spider --timeout=1 calico1-nginx.ns-calico1

Connecting to calico1-nginx.ns-calico1 (192.168.3.141:80)

root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- wget --spider --timeout=1 calico1-nginx.ns-calico1
Connecting to calico1-nginx.ns-calico1 (10.101.254.195:80)
wget: download timed out
command terminated with exit code 1
root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- ping calico1-nginx.ns-calico1
PING calico1-nginx.ns-calico1 (10.101.254.195): 56 data bytes
^C
--- calico1-nginx.ns-calico1 ping statistics ---
25 packets transmitted, 0 packets received, 100% packet loss
command terminated with exit code 1
root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- ping 10.244.29.18
PING 10.244.29.18 (10.244.29.18): 56 data bytes
64 bytes from 10.244.29.18: seq=0 ttl=63 time=0.233 ms
64 bytes from 10.244.29.18: seq=1 ttl=63 time=0.131 ms
64 bytes from 10.244.29.18: seq=2 ttl=63 time=0.113 ms
^C
--- 10.244.29.18 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.113/0.159/0.233 ms

root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
saving to 'index.html'
index.html           100% |********************************|   612  0:00:00 ETA
'index.html' saved

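This shows that, without network isolation, Pods in two different Namespaces can reach each other: both ping and wget against the Pod IP succeed. The request through the Service name timed out, most likely because the Service selector (app: nginx) does not match the Pod label (app: calico1-nginx), so the Service has no endpoints; in iptables proxy mode a ClusterIP also does not normally answer ping. Next we use Calico to isolate the network.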
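The same probe is repeated several times in the rest of this article, so it can help to wrap it in a small function. The sketch below is not part of the original test procedure; the name `reach` is hypothetical. It uses busybox `wget` with `-O /dev/null` so that no `index.html` is left behind in the container between runs, and it assumes `kubectl` is configured for the cluster.

```shell
#!/bin/sh
# reach NAMESPACE POD URL
# Prints "reachable" if the Pod can fetch URL within 2 seconds,
# otherwise "blocked" (which also covers DNS or other failures).
# Hypothetical helper; assumes the Pod image ships busybox wget.
reach() {
    ns="$1"
    pod="$2"
    url="$3"
    if kubectl exec "$pod" -n "$ns" -- \
        wget -q -T 2 -O /dev/null "$url" >/dev/null 2>&1; then
        echo reachable
    else
        echo blocked
    fi
}

# Usage:
#   reach ns-calico2 calico2-busybox http://10.244.29.18:80
```

Running it before and after each policy change gives a one-word answer per source Pod, which is easier to compare than raw wget output.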

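Network isolation

Prerequisites

To use Calico for network isolation in a Kubernetes cluster, the following conditions must be met:

- kube-apiserver must enable the extensions/v1beta1/networkpolicies runtime API, i.e. start with --runtime-config=extensions/v1beta1/networkpolicies=true. This applies to old releases only: since Kubernetes 1.7, NetworkPolicy is served from networking.k8s.io/v1 and needs no runtime-config switch.

- kubelet must enable the CNI network plugin, i.e. start with --network-plugin=cni

- kube-proxy must run in iptables proxy mode; this is the default, so it normally needs no setting

- kube-proxy must not enable --masquerade-all, which conflicts with Calico

Note: after Calico is configured, Pods that were already running in the cluster must be restarted.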

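Edit /etc/kubernetes/manifests/kube-apiserver.yaml and add:

- --runtime-config=extensions/v1beta1/networkpolicies=true

Taking effect

Changes to a static Pod manifest take effect immediately.

- The kubelet watches this file. After you modify /etc/kubernetes/manifests/kube-apiserver.yaml, the kubelet automatically terminates the existing kube-apiserver-{nodename} Pod and creates a replacement Pod that uses the new parameters.

- If you have multiple Kubernetes master nodes, you must edit this file on every master node and keep the parameters consistent across nodes.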
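Because the file has to be edited by hand on every master node, a quick check that the flag is really present can save some confusion. The following is a minimal sketch; `manifest_has_flag` is a hypothetical helper name, and the path in the usage line is the one used in this article.

```shell
#!/bin/sh
# manifest_has_flag FILE FLAG
# Succeeds (exit 0) if the static Pod manifest FILE contains FLAG.
# -F treats FLAG as a literal string; -e keeps a leading "--"
# from being parsed as a grep option.
manifest_has_flag() {
    grep -q -F -e "$2" "$1"
}

# Usage (run on each master node):
#   manifest_has_flag /etc/kubernetes/manifests/kube-apiserver.yaml \
#       "--runtime-config=" && echo "flag present"
```

This only inspects the file on disk; whether the restarted kube-apiserver Pod is healthy still has to be checked separately, for example with `kubectl get pod -n kube-system`.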

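Install Calico

First install the Calico network plugin. We install it directly inside the Kubernetes cluster (self-hosted), which makes it easier to manage. The manifest below is the Calico v2.1.4 self-hosted manifest; it uses extensions/v1beta1 for DaemonSet and Deployment, which Kubernetes stopped serving in 1.16, so on a recent cluster use the manifest that matches your Calico and Kubernetes versions.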

# Calico Version v2.1.4
# http://docs.projectcalico.org/v2.1/releases#v2.1.4
# This manifest includes the following component versions:
#   calico/node:v1.1.3
#   calico/cni:v1.7.0
#   calico/kube-policy-controller:v0.5.4

# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Configure this with the location of your etcd cluster.
  etcd_endpoints: "https://10.1.2.154:2379,https://10.1.2.147:2379"
  # Configure the Calico backend to use.
  calico_backend: "bird"
  # The CNI network configuration to install on each node.
  cni_network_config: |-
    {
        "name": "k8s-pod-network",
        "type": "calico",
        "etcd_endpoints": "__ETCD_ENDPOINTS__",
        "etcd_key_file": "__ETCD_KEY_FILE__",
        "etcd_cert_file": "__ETCD_CERT_FILE__",
        "etcd_ca_cert_file": "__ETCD_CA_CERT_FILE__",
        "log_level": "info",
        "ipam": {
            "type": "calico-ipam"
        },
        "policy": {
            "type": "k8s",
            "k8s_api_root": "https://__KUBERNETES_SERVICE_HOST__:__KUBERNETES_SERVICE_PORT__",
            "k8s_auth_token": "__SERVICEACCOUNT_TOKEN__"
        },
        "kubernetes": {
            "kubeconfig": "__KUBECONFIG_FILEPATH__"
        }
    }
  # If you're using TLS enabled etcd uncomment the following.
  # You must also populate the Secret below with these files.
  etcd_ca: "/calico-secrets/etcd-ca"     # "/calico-secrets/etcd-ca"
  etcd_cert: "/calico-secrets/etcd-cert" # "/calico-secrets/etcd-cert"
  etcd_key: "/calico-secrets/etcd-key"   # "/calico-secrets/etcd-key"

---

# The following contains k8s Secrets for use with a TLS enabled etcd cluster.
# For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  # Populate the following files with etcd TLS configuration if desired, but leave blank if
  # not using TLS for etcd.
  # This self-hosted install expects three files with the following names. The values
  # should be base64 encoded strings of the entire contents of each file.
  etcd-key: base64 key.pem
  etcd-cert: base64 cert.pem
  etcd-ca: base64 ca.pem

---

# This manifest installs the calico/node container, as well
# as the Calico CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: |
          [{"key": "dedicated", "value": "master", "effect": "NoSchedule" },
           {"key":"CriticalAddonsOnly", "operator":"Exists"}]
    spec:
      hostNetwork: true
      containers:
        # Runs calico/node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: quay.io/calico/node:v1.1.3
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Configure the IP Pool from which Pod IPs will be chosen.
            - name: CALICO_IPV4POOL_CIDR
              value: "192.168.0.0/16"
            - name: CALICO_IPV4POOL_IPIP
              value: "always"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            # Set Felix logging to "info"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # Auto-detect the BGP IP address.
            - name: IP
              value: ""
          securityContext:
            privileged: true
          #resources:
            #requests:
              #cpu: 250m
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /calico-secrets
              name: etcd-certs
        # This container installs the Calico CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: quay.io/calico/cni:v1.7.0
          command: ["/install-cni.sh"]
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
            - mountPath: /calico-secrets
              name: etcd-certs
      volumes:
        # Used by calico/node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        # Mount in the etcd TLS secrets.
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets

---

# This manifest deploys the Calico policy controller on Kubernetes.
# See https://github.com/projectcalico/k8s-policy
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: calico-policy-controller
  namespace: kube-system
  labels:
    k8s-app: calico-policy
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ''
    scheduler.alpha.kubernetes.io/tolerations: |
      [{"key": "dedicated", "value": "master", "effect": "NoSchedule" },
       {"key":"CriticalAddonsOnly", "operator":"Exists"}]
spec:
  # The policy controller can only have a single active instance.
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-policy-controller
      namespace: kube-system
      labels:
        k8s-app: calico-policy
    spec:
      # The policy controller must run in the host network namespace so that
      # it isn't governed by policy that would prevent it from working.
      hostNetwork: true
      containers:
        - name: calico-policy-controller
          image: quay.io/calico/kube-policy-controller:v0.5.4
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # The location of the Kubernetes API. Use the default Kubernetes
            # service for API access.
            - name: K8S_API
              value: "https://kubernetes.default:443"
            # Since we're running in the host namespace and might not have KubeDNS
            # access, configure the container's /etc/hosts to resolve
            # kubernetes.default to the correct service clusterIP.
            - name: CONFIGURE_ETC_HOSTS
              value: "true"
          volumeMounts:
            # Mount in the etcd TLS secrets.
            - mountPath: /calico-secrets
              name: etcd-certs
      volumes:
        # Mount in the etcd TLS secrets.
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets

# kubectl create -f calico.yaml
configmap "calico-config" created
secret "calico-etcd-secrets" created
daemonset "calico-node" created
deployment "calico-policy-controller" created
# kubectl get ds -n kube-system
NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE-SELECTOR   AGE
calico-node   1         1         1       1            1           <none>          52s
# kubectl get deploy -n kube-system
NAME                       DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
calico-policy-controller   1         1         1            1           6m

This completes the Calico network setup. Next we can configure NetworkPolicy.

Configure NetworkPolicy

First, modify the configuration of ns-calico1:

apiVersion: v1
kind: Namespace
metadata:
  name: ns-calico1
  labels:
    user: calico1
  annotations:
    net.beta.kubernetes.io/network-policy: |
      {
        "ingress": {
          "isolation": "DefaultDeny"
        }
      }

# kubectl apply -f ns-calico1.yaml
namespace "ns-calico1" configured

root@ubuntu:~/tenant# kubectl apply -f ns-calico1.yaml.bak
namespace/ns-calico1 configured
root@ubuntu:~/tenant# cat ns-calico1.yaml.bak
apiVersion: v1
kind: Namespace
metadata:
  name: ns-calico1
  labels:
    user: calico1
  annotations:
    net.beta.kubernetes.io/network-policy: |
      {
        "ingress": {
          "isolation": "DefaultDeny"
        }
      }
root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
wget: can't open 'index.html': File exists
command terminated with exit code 1
root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- ls
bin etc index.html root tmp var dev home proc sys usr
root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- rm index.html
root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
saving to 'index.html'
index.html           100% |********************************|   612  0:00:00 ETA
'index.html' saved

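The policy did not take effect: the Pod in ns-calico2 can still fetch the page. The net.beta.kubernetes.io/network-policy Namespace annotation belongs to the beta NetworkPolicy API and is no longer honored now that NetworkPolicy is served from networking.k8s.io/v1 (Kubernetes 1.7 and later); isolation has to be declared with NetworkPolicy objects instead. Calico confirms that no policy exists:

root@ubuntu:~# ./calicoctl get GlobalNetworkPolicy
NAME
root@ubuntu:~#

Deny all inbound traffic to the namespace by default

root@ubuntu:~/tenant# kubectl apply -f ns-calico1-policy.yaml
networkpolicy.networking.k8s.io/default-deny created
root@ubuntu:~/tenant# cat ns-calico1-policy.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: default-deny
  namespace: ns-calico1
spec:
  podSelector:
    matchLabels: {}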
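The default-deny policy works because an empty podSelector selects every Pod in the namespace, and a policy that lists no ingress rules allows no inbound traffic to the Pods it selects. To apply the same policy to other tenant namespaces, the manifest can be generated per namespace. The sketch below is illustrative only: `default_deny_policy` is a hypothetical helper, `podSelector: {}` is used as the equivalent shorter form of `matchLabels: {}`, and `policyTypes` is spelled out explicitly.

```shell
#!/bin/sh
# default_deny_policy NAMESPACE
# Prints a NetworkPolicy manifest that denies all ingress traffic
# to every Pod in NAMESPACE (empty podSelector, no ingress rules).
default_deny_policy() {
    cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
  namespace: $1
spec:
  podSelector: {}
  policyTypes:
  - Ingress
EOF
}

# Usage:
#   default_deny_policy ns-calico2 | kubectl apply -f -
```

Generating the manifest on stdout keeps the function side-effect free, so the output can be reviewed or piped into `kubectl apply -f -`.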

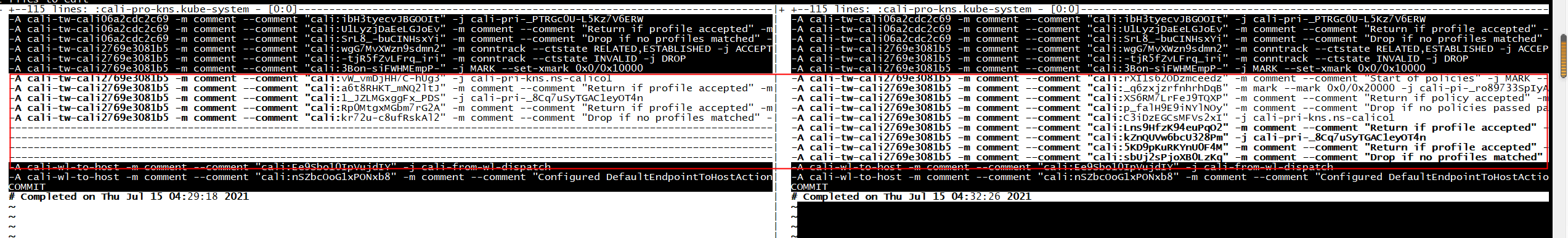

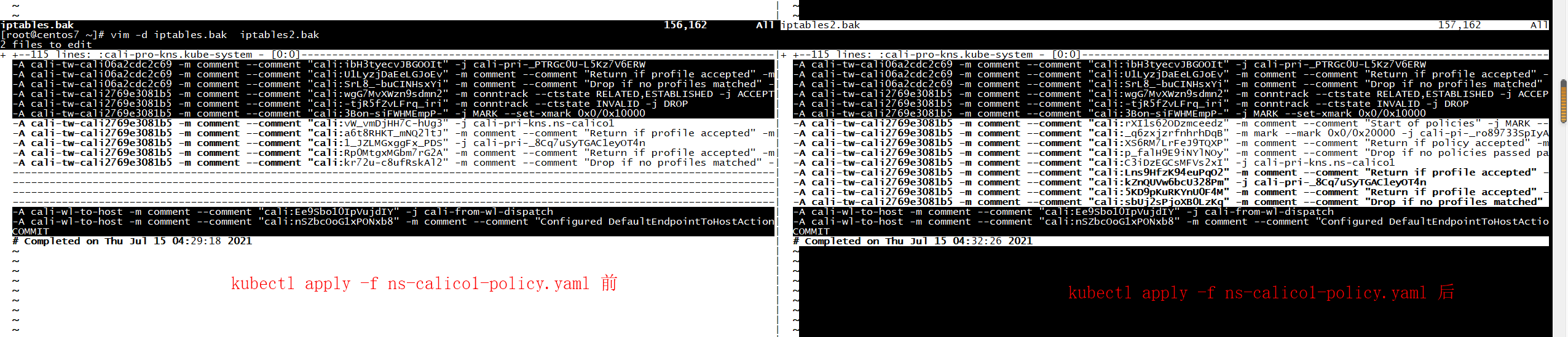

Before running kubectl apply -f ns-calico1-policy.yaml:

[root@centos7 ~]# iptables -S -t filter | grep DROP
-P FORWARD DROP
-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP
-A KUBE-FORWARD -m conntrack --ctstate INVALID -j DROP
-A cali-INPUT -p ipv4 -m comment --comment "cali:_wjq-Yrma8Ly1Svo" -m comment --comment "Drop IPIP packets from non-Calico hosts" -j DROP
-A cali-from-endpoint-mark -m comment --comment "cali:eZHmMCxyCR4n05Nl" -m comment --comment "Unknown interface" -j DROP
-A cali-from-wl-dispatch -m comment --comment "cali:o2jq1ugSY-Rhiwz8" -m comment --comment "Unknown interface" -j DROP
-A cali-fw-cali06a2cdc2c69 -m comment --comment "cali:cCy_DeqvYK6JKZiV" -m conntrack --ctstate INVALID -j DROP
-A cali-fw-cali06a2cdc2c69 -p udp -m comment --comment "cali:htfAgjSPu1jPCn-4" -m comment --comment "Drop VXLAN encapped packets originating in workloads" -m multiport --dports 4789 -j DROP
-A cali-fw-cali06a2cdc2c69 -p ipv4 -m comment --comment "cali:kqKm3g1mdVLpoidm" -m comment --comment "Drop IPinIP encapped packets originating in workloads" -j DROP
-A cali-fw-cali06a2cdc2c69 -m comment --comment "cali:QV68yjQMtt1wEOJr" -m comment --comment "Drop if no profiles matched" -j DROP
-A cali-fw-cali2769e3081b5 -m comment --comment "cali:GbLMFm3sFhvtdaYu" -m conntrack --ctstate INVALID -j DROP
-A cali-fw-cali2769e3081b5 -p udp -m comment --comment "cali:vEU9xJVviqWG5k8g" -m comment --comment "Drop VXLAN encapped packets originating in workloads" -m multiport --dports 4789 -j DROP
-A cali-fw-cali2769e3081b5 -p ipv4 -m comment --comment "cali:epMeJxcxi2dSofno" -m comment --comment "Drop IPinIP encapped packets originating in workloads" -j DROP
-A cali-fw-cali2769e3081b5 -m comment --comment "cali:_IYODrs7q4VwBzhW" -m comment --comment "Drop if no profiles matched" -j DROP
-A cali-set-endpoint-mark -i cali+ -m comment --comment "cali:eremTb5N6GaSSarT" -m comment --comment "Unknown endpoint" -j DROP
-A cali-to-wl-dispatch -m comment --comment "cali:zD9MpMl7PbSllXDF" -m comment --comment "Unknown interface" -j DROP
-A cali-tw-cali06a2cdc2c69 -m comment --comment "cali:kN1mxMlWZViDJhMS" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali06a2cdc2c69 -m comment --comment "cali:SrL8_-buCINHsxYi" -m comment --comment "Drop if no profiles matched" -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:-tjR5fZvLFrq_iri" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:kr72u-c8ufRskAl2" -m comment --comment "Drop if no profiles matched" -j DROP
[root@centos7 ~]# iptables -S -t filter | grep DROP | wc -l
20

After running kubectl apply -f ns-calico1-policy.yaml:

[root@centos7 ~]# iptables -S -t filter | grep DROP
-P FORWARD DROP
-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP
-A KUBE-FORWARD -m conntrack --ctstate INVALID -j DROP
-A cali-INPUT -p ipv4 -m comment --comment "cali:_wjq-Yrma8Ly1Svo" -m comment --comment "Drop IPIP packets from non-Calico hosts" -j DROP
-A cali-from-endpoint-mark -m comment --comment "cali:eZHmMCxyCR4n05Nl" -m comment --comment "Unknown interface" -j DROP
-A cali-from-wl-dispatch -m comment --comment "cali:o2jq1ugSY-Rhiwz8" -m comment --comment "Unknown interface" -j DROP
-A cali-fw-cali06a2cdc2c69 -m comment --comment "cali:cCy_DeqvYK6JKZiV" -m conntrack --ctstate INVALID -j DROP
-A cali-fw-cali06a2cdc2c69 -p udp -m comment --comment "cali:htfAgjSPu1jPCn-4" -m comment --comment "Drop VXLAN encapped packets originating in workloads" -m multiport --dports 4789 -j DROP
-A cali-fw-cali06a2cdc2c69 -p ipv4 -m comment --comment "cali:kqKm3g1mdVLpoidm" -m comment --comment "Drop IPinIP encapped packets originating in workloads" -j DROP
-A cali-fw-cali06a2cdc2c69 -m comment --comment "cali:QV68yjQMtt1wEOJr" -m comment --comment "Drop if no profiles matched" -j DROP
-A cali-fw-cali2769e3081b5 -m comment --comment "cali:GbLMFm3sFhvtdaYu" -m conntrack --ctstate INVALID -j DROP
-A cali-fw-cali2769e3081b5 -p udp -m comment --comment "cali:vEU9xJVviqWG5k8g" -m comment --comment "Drop VXLAN encapped packets originating in workloads" -m multiport --dports 4789 -j DROP
-A cali-fw-cali2769e3081b5 -p ipv4 -m comment --comment "cali:epMeJxcxi2dSofno" -m comment --comment "Drop IPinIP encapped packets originating in workloads" -j DROP
-A cali-fw-cali2769e3081b5 -m comment --comment "cali:_IYODrs7q4VwBzhW" -m comment --comment "Drop if no profiles matched" -j DROP
-A cali-set-endpoint-mark -i cali+ -m comment --comment "cali:eremTb5N6GaSSarT" -m comment --comment "Unknown endpoint" -j DROP
-A cali-to-wl-dispatch -m comment --comment "cali:zD9MpMl7PbSllXDF" -m comment --comment "Unknown interface" -j DROP
-A cali-tw-cali06a2cdc2c69 -m comment --comment "cali:kN1mxMlWZViDJhMS" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali06a2cdc2c69 -m comment --comment "cali:SrL8_-buCINHsxYi" -m comment --comment "Drop if no profiles matched" -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:-tjR5fZvLFrq_iri" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:p_falH9E9iNYlNOy" -m comment --comment "Drop if no policies passed packet" -m mark --mark 0x0/0x20000 -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:sbUj2sPjoXB0LzKq" -m comment --comment "Drop if no profiles matched" -j DROP
[root@centos7 ~]# iptables -S -t filter | grep DROP | wc -l
21

root@ubuntu:~/tenant# kubectl get pods -n ns-calico1 -o wide
NAME                             READY   STATUS    RESTARTS   AGE     IP               NODE      NOMINATED NODE   READINESS GATES
calico1-busybox                  1/1     Running   4          4h44m   10.244.129.130   centos7   <none>           <none>
calico1-nginx-688fdbb89b-vctjv   1/1     Running   0          23h     10.244.29.18     bogon     <none>           <none>
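Comparing the two DROP listings by eye is tedious: the count goes from 20 to 21, and the added rule is "Drop if no policies passed packet" in the cali-tw-cali2769e3081b5 chain. A small helper can print the lines that exist only in the second listing. This is a sketch rather than part of the original procedure; `new_rules` and the file names in the usage lines are hypothetical.

```shell
#!/bin/sh
# new_rules BEFORE AFTER
# Prints the lines that appear in AFTER but not in BEFORE.
# -F: fixed strings, -x: whole-line match, -v: invert,
# -f: read the patterns (the old rules) from BEFORE.
new_rules() {
    grep -F -x -v -f "$1" "$2"
}

# Usage (on the node, as root):
#   iptables -S -t filter | grep DROP > drop-before.txt
#   kubectl apply -f ns-calico1-policy.yaml   # from a machine with kubectl
#   iptables -S -t filter | grep DROP > drop-after.txt
#   new_rules drop-before.txt drop-after.txt
```

Note that Calico regenerates the `cali:` comment IDs of rules it rewrites (the final "Drop if no profiles matched" rule has a different ID in the second listing), so such rules show up in a textual comparison as well.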

A Pod in the same namespace cannot access it either:

root@ubuntu:~/tenant# kubectl exec -it calico1-busybox -n ns-calico1 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
^Ccommand terminated with exit code 130

Allow Pods in the same namespace to reach each other

root@ubuntu:~/tenant# kubectl label ns ns-calico1 nsname=ns-calico1 --overwrite=true
namespace/ns-calico1 labeled

root@ubuntu:~/tenant# cat k8s-ns-calico1-policy-allow.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: isolate-namespace
  namespace: ns-calico1
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          nsname: ns-calico1
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          nsname: ns-calico1

root@ubuntu:~/tenant# kubectl apply -f k8s-ns-calico1-policy-allow.yaml
networkpolicy.networking.k8s.io/isolate-namespace created
root@ubuntu:~/tenant# kubectl exec -it calico1-busybox -n ns-calico1 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
wget: can't open 'index.html': File exists
command terminated with exit code 1
root@ubuntu:~/tenant# kubectl exec -it calico1-busybox -n ns-calico1 -- rm index.html
root@ubuntu:~/tenant# kubectl exec -it calico1-busybox -n ns-calico1 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
saving to 'index.html'
index.html           100% |********************************|   612  0:00:00 ETA
'index.html' saved
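One side effect to keep in mind: the isolate-namespace policy also restricts Egress to the same namespace, so Pods in ns-calico1 can no longer reach the cluster DNS service, which normally runs in kube-system. The tests here use Pod IPs and are unaffected, but lookups by Service name would fail. Since NetworkPolicies are additive, a second policy can open DNS traffic. The sketch below is an assumption-laden example: the policy name `allow-dns-egress` and the helper `allow_dns_egress_policy` are made up, and it allows port 53 to any destination rather than to the DNS Pods only.

```shell
#!/bin/sh
# allow_dns_egress_policy NAMESPACE
# Prints a NetworkPolicy that lets every Pod in NAMESPACE send
# DNS queries (UDP and TCP port 53) to any destination.
# Hypothetical example; tighten the destination if required.
allow_dns_egress_policy() {
    cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress
  namespace: $1
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
EOF
}

# Usage:
#   allow_dns_egress_policy ns-calico1 | kubectl apply -f -
```

An egress rule that lists only ports and no `to` clause matches every destination on those ports, which keeps the example short at the cost of being broader than strictly necessary.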

Pods in a different namespace still cannot access it:

root@ubuntu:~/tenant# kubectl exec -it calico2-busybox -n ns-calico2 -- wget http://10.244.29.18:80
Connecting to 10.244.29.18:80 (10.244.29.18:80)
^Ccommand terminated with exit code 130
root@ubuntu:~/tenant# kubectl get pods -n ns-calico2 -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
calico2-busybox   1/1     Running   23         23h   10.244.29.12   bogon   <none>           <none>

root@ubuntu:~/tenant# kubectl delete -f k8s-ns-calico1-policy-allow.yaml
networkpolicy.networking.k8s.io "isolate-namespace" deleted
root@ubuntu:~/tenant# kubectl delete -f ns-calico1-policy.yaml
networkpolicy.networking.k8s.io "default-deny" deleted

Comparison

root@ubuntu:~/tenant# kubectl apply -f ns-calico1-policy.yaml
networkpolicy.networking.k8s.io/default-deny created

The cali-tw-cali2769e3081b5 chain with default-deny applied (iptables2.bak):

[root@centos7 ~]# cat iptables2.bak | grep cali-tw-cali2769e3081b5
:cali-tw-cali2769e3081b5 - [0:0]
-A cali-to-wl-dispatch -o cali2769e3081b5 -m comment --comment "cali:OtAlv2r8LxCvt3uI" -g cali-tw-cali2769e3081b5
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:wgG7MvXWzn9sdmn2" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:-tjR5fZvLFrq_iri" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:3Bon-siFWHMEmpP-" -j MARK --set-xmark 0x0/0x10000
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:rXI1s62ODzmceedz" -m comment --comment "Start of policies" -j MARK --set-xmark 0x0/0x20000
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:_q6zxjzrfnhrhDqB" -m mark --mark 0x0/0x20000 -j cali-pi-_ro89733SpIyAzMkuOSY
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:XS6RM7LrFeJ9TQXP" -m comment --comment "Return if policy accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:p_falH9E9iNYlNOy" -m comment --comment "Drop if no policies passed packet" -m mark --mark 0x0/0x20000 -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:C3iDzEGCsMFVs2xI" -j cali-pri-kns.ns-calico1
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:Lns9HfzK94euPqO2" -m comment --comment "Return if profile accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:kZnQUVw6bcU328Pm" -j cali-pri-_8Cq7uSyTGAC1eyOT4n
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:5KD9pKuRKYnU0F4M" -m comment --comment "Return if profile accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:sbUj2sPjoXB0LzKq" -m comment --comment "Drop if no profiles matched" -j DROP

The same chain without any policy (iptables.bak):

[root@centos7 ~]# cat iptables.bak | grep cali-tw-cali2769e3081b5
:cali-tw-cali2769e3081b5 - [0:0]
-A cali-to-wl-dispatch -o cali2769e3081b5 -m comment --comment "cali:OtAlv2r8LxCvt3uI" -g cali-tw-cali2769e3081b5
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:wgG7MvXWzn9sdmn2" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:-tjR5fZvLFrq_iri" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:3Bon-siFWHMEmpP-" -j MARK --set-xmark 0x0/0x10000
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:vW_vmDjHH7C-hUg3" -j cali-pri-kns.ns-calico1
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:a6t8RHKT_mNQ2ltJ" -m comment --comment "Return if profile accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:1_JZLMGxggFx_PDS" -j cali-pri-_8Cq7uSyTGAC1eyOT4n
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:Rp0MtgxMGbm7rG2A" -m comment --comment "Return if profile accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:kr72u-c8ufRskAl2" -m comment --comment "Drop if no profiles matched" -j DROP

Then add the same-namespace allow policy and save the rules again:

root@ubuntu:~/tenant# kubectl apply -f k8s-ns-calico1-policy-allow.yaml
networkpolicy.networking.k8s.io/isolate-namespace created

iptables-save -t filter > iptables3.bak

[root@centos7 ~]# cat iptables3.bak | grep cali-tw-cali2769e3081b5
:cali-tw-cali2769e3081b5 - [0:0]
-A cali-to-wl-dispatch -o cali2769e3081b5 -m comment --comment "cali:OtAlv2r8LxCvt3uI" -g cali-tw-cali2769e3081b5
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:wgG7MvXWzn9sdmn2" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:-tjR5fZvLFrq_iri" -m conntrack --ctstate INVALID -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:3Bon-siFWHMEmpP-" -j MARK --set-xmark 0x0/0x10000
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:rXI1s62ODzmceedz" -m comment --comment "Start of policies" -j MARK --set-xmark 0x0/0x20000
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:_q6zxjzrfnhrhDqB" -m mark --mark 0x0/0x20000 -j cali-pi-_ro89733SpIyAzMkuOSY
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:XS6RM7LrFeJ9TQXP" -m comment --comment "Return if policy accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:JHdbuhecs1h2Q06M" -m mark --mark 0x0/0x20000 -j cali-pi-_2Wxn51hylsXDhXiIl9a
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:qSQHX9dgNt6V-PQ7" -m comment --comment "Return if policy accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:g0CtOwf4HucpVF97" -m comment --comment "Drop if no policies passed packet" -m mark --mark 0x0/0x20000 -j DROP
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:BmZ4QzFT6N-drKkS" -j cali-pri-kns.ns-calico1
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:1rMno4eYVMLOPmTp" -m comment --comment "Return if profile accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:noc8BDMl14DoNhmc" -j cali-pri-_8Cq7uSyTGAC1eyOT4n
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:cIh-bAHE8Nxnt4OO" -m comment --comment "Return if profile accepted" -m mark --mark 0x10000/0x10000 -j RETURN
-A cali-tw-cali2769e3081b5 -m comment --comment "cali:gTCap-VBTff9Gh95" -m comment --comment "Drop if no profiles matched" -j DROP
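The three dumps differ mainly in how many rules of the to-workload chain jump to a Calico inbound policy chain (the `cali-pi-` prefix): none without a policy, one with default-deny, and two once isolate-namespace is added. Each jump is tried in turn, and the packet is dropped by "Drop if no policies passed packet" only if no policy accepted it. A small counter makes this comparison repeatable; `policy_jumps` is a hypothetical helper name, and the files are the iptables-save dumps shown above.

```shell
#!/bin/sh
# policy_jumps FILE CHAIN
# Counts the rules appended to CHAIN in an iptables-save dump
# that jump to a Calico inbound policy chain (cali-pi-*).
policy_jumps() {
    # First grep keeps only rules appended to CHAIN itself
    # (the trailing space avoids matching longer chain names);
    # second grep counts the jumps to policy chains.
    grep -F -e "-A $2 " "$1" | grep -c -e '-j cali-pi-'
}

# Usage:
#   policy_jumps iptables.bak  cali-tw-cali2769e3081b5
#   policy_jumps iptables2.bak cali-tw-cali2769e3081b5
#   policy_jumps iptables3.bak cali-tw-cali2769e3081b5
```

The chain name is specific to the Pod's host-side interface, so it has to be looked up per Pod and per node before running the comparison elsewhere.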
