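I. Key concepts:

1. Headless services

NOTE: We run the etcd cluster on k8s, and the members announce their identities to one another through DNS names rather than pod IPs, because a pod's IP changes whenever the pod is recreated. An ordinary k8s Service cannot be used directly here: in a clustered setup the service name has to map straight to the pod IP, not to a service IP. Only then does each name in --initial-cluster resolve to the address that the member itself advertises, so the members can verify each other's identity.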

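2. env

NOTE: How can we know a pod's IP before the pod has started, and how does etcd get the interface IP it should bind to at startup? The answer is to pass the pod IP to etcd as an environment variable, using env with a fieldRef on status.podIP (the Downward API). Once the pod is running, etcd reads the pod IP from that variable and binds to the container's IP.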
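The expansion step can be tried outside a cluster. The sketch below is only an illustration: the address is an assumed stand-in for the value Kubernetes would inject from status.podIP, and echo replaces the real /tmp/etcd binary. It shows that `${host_ip}` is expanded by the shell started with `/bin/sh -c`, which is why the manifests wrap the etcd command in a shell.

```shell
# Assumed example address; in the pod this value comes from status.podIP.
host_ip="10.244.1.113"
export host_ip

# Single quotes keep ${host_ip} literal here, just as it is literal in the
# YAML args; the inner shell expands it when the command actually runs.
cmd='echo --listen-peer-urls http://${host_ip}:2380'
expanded=$(/bin/sh -c "$cmd")

echo "$expanded"
```

Kubernetes itself only substitutes the `$(VAR)` form in command and args, so the `${VAR}` form used in these manifests depends on the shell wrapper.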

II. Architecture:

A three-member etcd cluster is used.

The members are: etcd1, etcd2, etcd3

etcd's persistent data is stored on NFS.

etcd1.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: etcd1
      labels:
        name: etcd1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: etcd1
      template:
        metadata:
          labels:
            app: etcd1
        spec:
          containers:
          - name: etcd1
            image: myetcd
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /data
              name: etcd-data
            env:
            - name: host_ip
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            command: ["/bin/sh","-c"]
            args:
            - /tmp/etcd --name etcd1
              --initial-advertise-peer-urls http://${host_ip}:2380
              --listen-peer-urls http://${host_ip}:2380
              --listen-client-urls http://${host_ip}:2379,http://127.0.0.1:2379
              --advertise-client-urls http://${host_ip}:2379
              --initial-cluster-token etcd-cluster-1
              --initial-cluster etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380
              --initial-cluster-state new
              --data-dir=/data
          volumes:
          - name: etcd-data
            nfs:
              server: 192.168.85.139
              path: /data/v1

etcd2.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: etcd2
      labels:
        name: etcd2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: etcd2
      template:
        metadata:
          labels:
            app: etcd2
        spec:
          containers:
          - name: etcd2
            image: myetcd
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /data
              name: etcd-data
            env:
            - name: host_ip
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            command: ["/bin/sh","-c"]
            args:
            - /tmp/etcd --name etcd2
              --initial-advertise-peer-urls http://${host_ip}:2380
              --listen-peer-urls http://${host_ip}:2380
              --listen-client-urls http://${host_ip}:2379,http://127.0.0.1:2379
              --advertise-client-urls http://${host_ip}:2379
              --initial-cluster-token etcd-cluster-1
              --initial-cluster etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380
              --initial-cluster-state new
              --data-dir=/data
          volumes:
          - name: etcd-data
            nfs:
              server: 192.168.85.139
              path: /data/v2

etcd3.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: etcd3
      labels:
        name: etcd3
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: etcd3
      template:
        metadata:
          labels:
            app: etcd3
        spec:
          containers:
          - name: etcd3
            image: myetcd
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /data
              name: etcd-data
            env:
            - name: host_ip
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            command: ["/bin/sh","-c"]
            args:
            - /tmp/etcd --name etcd3
              --initial-advertise-peer-urls http://${host_ip}:2380
              --listen-peer-urls http://${host_ip}:2380
              --listen-client-urls http://${host_ip}:2379,http://127.0.0.1:2379
              --advertise-client-urls http://${host_ip}:2379
              --initial-cluster-token etcd-cluster-1
              --initial-cluster etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380
              --initial-cluster-state new
              --data-dir=/data
          volumes:
          - name: etcd-data
            nfs:
              server: 192.168.85.139
              path: /data/v3
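All three Deployments pass the same --initial-cluster value, in which every member is addressed by its DNS name on peer port 2380. As a rough sketch, that string could be generated from the member names instead of being typed by hand; the helper name `initial_cluster` is made up for this illustration and is not part of etcd or kubectl.

```shell
# Hypothetical helper: join member names into an --initial-cluster value,
# using each name both as the member name and as its DNS host name.
initial_cluster() {
  out=""
  for name in "$@"; do
    # ${out:+$out,} adds a comma separator only when out is non-empty.
    out="${out:+$out,}${name}=http://${name}:2380"
  done
  printf '%s\n' "$out"
}

cluster=$(initial_cluster etcd1 etcd2 etcd3)
echo "$cluster"
```

Because the host part is a name rather than an address, the value stays valid after a pod is recreated with a new IP.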

etcd1-svc.yml

    apiVersion: v1
    kind: Service
    metadata:
      name: etcd1
    spec:
      clusterIP: None
      ports:
      - name: client
        port: 2379
        targetPort: 2379
      - name: message
        port: 2380
        targetPort: 2380
      selector:
        app: etcd1

etcd2-svc.yml

    apiVersion: v1
    kind: Service
    metadata:
      name: etcd2
    spec:
      clusterIP: None
      ports:
      - name: client
        port: 2379
        targetPort: 2379
      - name: message
        port: 2380
        targetPort: 2380
      selector:
        app: etcd2

etcd3-svc.yml

    apiVersion: v1
    kind: Service
    metadata:
      name: etcd3
    spec:
      clusterIP: None
      ports:
      - name: client
        port: 2379
        targetPort: 2379
      - name: message
        port: 2380
        targetPort: 2380
      selector:
        app: etcd3

Check the etcd status from any node:

    [root@node1 test]# kubectl exec etcd1-79bdcb47c9-fwqps -- /tmp/etcdctl cluster-health
    member 876043ef79ada1ea is healthy: got healthy result from http://10.244.1.113:2379
    member 99eab3685d8363a1 is healthy: got healthy result from http://10.244.1.111:2379
    member dcb68c82481661be is healthy: got healthy result from http://10.244.1.112:2379

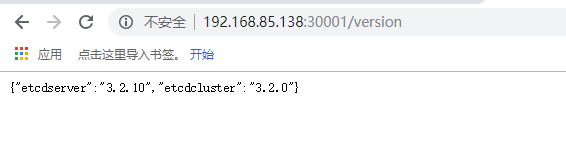
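For scripted checks, the health output can be reduced to a count of healthy members. This is a minimal sketch that assumes the line format shown above; `count_healthy` is a made-up helper name, and the sample text is copied from the output above rather than read from a live cluster.

```shell
# Hypothetical helper: count per-member lines that report a healthy member.
# The trailing colon in the pattern skips any line that lacks it, and
# "is unhealthy" does not match " is healthy".
count_healthy() {
  grep -c ' is healthy: '
}

sample='member 876043ef79ada1ea is healthy: got healthy result from http://10.244.1.113:2379
member 99eab3685d8363a1 is healthy: got healthy result from http://10.244.1.111:2379
member dcb68c82481661be is healthy: got healthy result from http://10.244.1.112:2379'

healthy=$(printf '%s\n' "$sample" | count_healthy)
echo "$healthy"
```

Against a running cluster, the output of the kubectl exec command above would be piped into `count_healthy` in place of the sample text.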

Provide external access:

    apiVersion: v1
    kind: Service
    metadata:
      name: etcd-external
    spec:
      type: NodePort
      ports:
      - name: client
        port: 2379
        targetPort: 2379
        nodePort: 30001
      - name: message
        port: 2380
        targetPort: 2380
        nodePort: 30002
      selector:
        app: etcd1
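With this Service in place, a client outside the cluster would reach etcd1's client port through nodePort 30001 on any node. The sketch below only assembles that endpoint URL; the node address is a placeholder assumption (a documentation-range IP), not a value from this setup.

```shell
# Placeholder node address (assumption); replace with the IP of a real node.
NODE_IP="192.0.2.10"
# Client nodePort taken from the etcd-external Service.
CLIENT_NODE_PORT=30001

endpoint="http://${NODE_IP}:${CLIENT_NODE_PORT}"
echo "$endpoint"

# With an etcdctl binary on the workstation, a health check could then be:
#   etcdctl --endpoints "$endpoint" cluster-health
```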