PROBLEM: OmniAnomaly

Unsupervised anomaly detection for multivariate time series.

Main idea: input multivariate time series to an RNN ------> capture the normal patterns ------> reconstruct the input data from the learned representations ------> use the reconstruction probabilities to determine anomalies.

INTRODUCTION:

1. The first challenge is how to learn robust latent representations, considering both the temporal dependence and stochasticity of multivariate time series.

Solution: a stochastic RNN with explicit temporal dependence among stochastic variables.

Stochastic variables are latent representations of input data and their quality is the key to model performance.

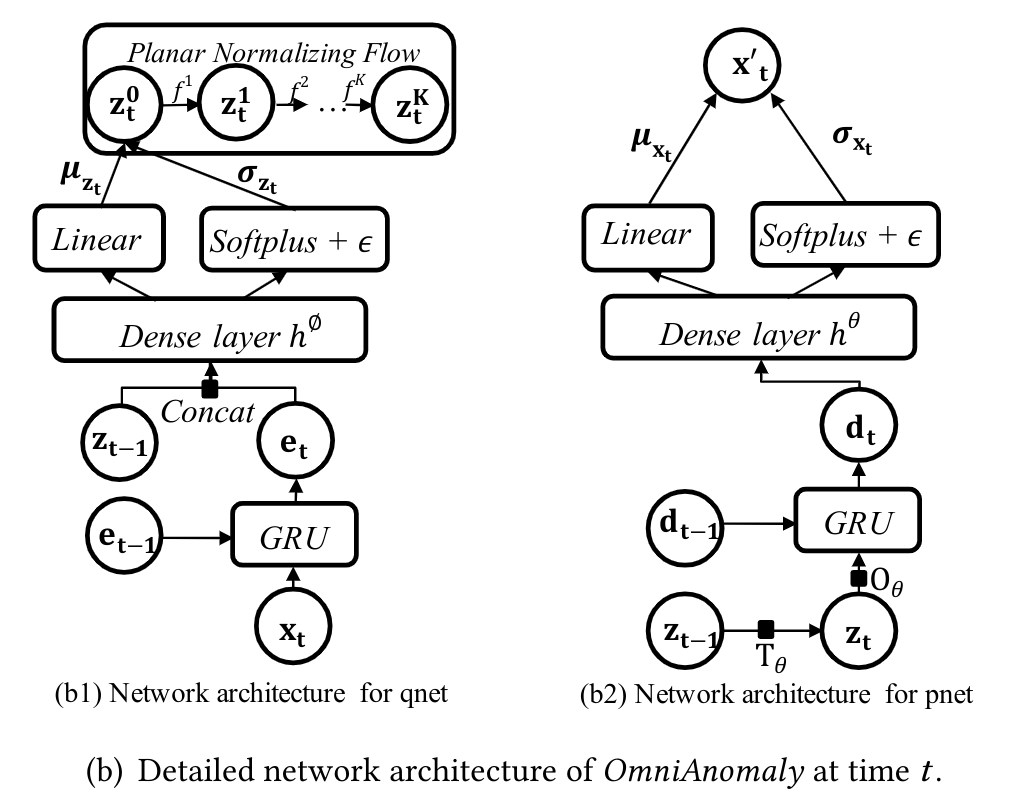

Their approach glues GRU and VAE with two key techniques:

- stochastic variable connection technique: explicitly model temporal dependence among stochastic variables in the latent space.

- planar normalizing flows (planar NF): use a series of invertible mappings to learn non-Gaussian posterior distributions in the latent stochastic space.

2. The second challenge is how to provide interpretation to the detected entity-level anomalies, given the stochastic deep learning approaches.

Challenges: 1. capturing long-term dependence; 2. capturing the probability distributions of multivariate time series; 3. interpreting the results (unsupervised learning).

EVIDENCE: reference [5] has shown that explicitly modeling the temporal dependence works better.
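To make the idea of temporal dependence among stochastic variables concrete, here is a toy scalar sketch. Each latent z_t is drawn from a Gaussian whose mean depends on both z_{t-1} and a deterministic feature e_t, which stands in for a GRU hidden state. The function name `sample_latent_chain`, the tanh mean function, and all weights are illustrative assumptions, not the paper's parameterization.

```python
import math
import random

def sample_latent_chain(e_seq, w_z, w_e, b, std, rng):
    """Sample z_1..z_T where z_t depends on z_{t-1} and e_t (toy, scalar)."""
    z_prev = 0.0                                   # assumed initial latent state
    zs = []
    for e_t in e_seq:
        # Mean of z_t is a function of the previous latent and the current feature.
        mean_t = math.tanh(w_z * z_prev + w_e * e_t + b)
        z_t = mean_t + std * rng.gauss(0.0, 1.0)   # reparameterized Gaussian sample
        zs.append(z_t)
        z_prev = z_t                               # the connection: z_t feeds z_{t+1}
    return zs

rng = random.Random(0)
# std=0.0 makes the chain deterministic so the effect of w_z is easy to see.
connected = sample_latent_chain([1.0, 1.0, 1.0], w_z=0.5, w_e=1.0, b=0.0, std=0.0, rng=rng)
independent = sample_latent_chain([1.0, 1.0, 1.0], w_z=0.0, w_e=1.0, b=0.0, std=0.0, rng=rng)
```

Setting `w_z = 0` removes the connection, so every z_t depends only on its own e_t; a nonzero `w_z` lets earlier latents influence later ones.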

RELATED WORK:

PRELIMINARIES:

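Problem statement: the number of time series is taken as the dimension. With M time series of length N, x ∈ R^{M×N}, and x_t is an M-dimensional column vector (the observation at time t).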

GRU, VAE, and stochastic gradient variational Bayes (SGVB).

DESIGN

OmniAnomaly structure: returns an anomaly score for x_t.

- online detection

- offline detection

- data preprocessing: data standardization, then sequence segmentation through sliding windows of length T+1;

- pipeline: input (multivariate time series inside a window) ------> model training ------> output (an anomaly score for each observation) ------> automatic threshold selection;
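The preprocessing step above can be sketched in pure Python. This is a minimal illustration, assuming z-score standardization per metric and windows that end at the current observation x_t; the function names `standardize` and `sliding_windows` are hypothetical.

```python
import statistics

def standardize(series):
    """Z-score each metric; `series` is a list of M lists, each of length N."""
    out = []
    for s in series:
        mu = statistics.fmean(s)
        sd = statistics.pstdev(s) or 1.0    # guard against constant series
        out.append([(v - mu) / sd for v in s])
    return out

def sliding_windows(series, T):
    """Return all windows x_{t-T}, ..., x_t of T+1 consecutive observations.

    Each window is a list of T+1 column vectors, each of dimension M.
    """
    M, N = len(series), len(series[0])
    columns = [[series[m][t] for m in range(M)] for t in range(N)]
    return [columns[t - T:t + 1] for t in range(T, N)]

x = [[1.0, 2.0, 3.0, 4.0],          # metric 1
     [10.0, 10.0, 10.0, 10.0]]      # metric 2 (constant)
x_std = standardize(x)
windows = sliding_windows(x, T=2)   # N - T = 2 windows of length 3
```

In practice the mean and standard deviation would presumably be computed on the training data and reused at detection time; the sketch skips that distinction.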

Detection: detect anomalies based on the reconstruction probability of x_t.
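As a rough sketch of a reconstruction-probability score: assume the generative net outputs a diagonal Gaussian (a mean and a standard deviation per dimension) for x_t. The score is then the log-likelihood of the observation, and a low score suggests an anomaly. The names `gaussian_log_prob` and `anomaly_score` are hypothetical, and the Gaussian output is an assumption of this sketch.

```python
import math

def gaussian_log_prob(x, mean, std):
    """Log density of a univariate Gaussian N(mean, std^2) at x."""
    return -0.5 * math.log(2.0 * math.pi) - math.log(std) - 0.5 * ((x - mean) / std) ** 2

def anomaly_score(x_t, recon_mean, recon_std):
    """Return (total log-likelihood of x_t, per-dimension contributions)."""
    per_dim = [gaussian_log_prob(x, m, s)
               for x, m, s in zip(x_t, recon_mean, recon_std)]
    return sum(per_dim), per_dim

# Hypothetical reconstruction for a 2-dimensional observation.
mean, std = [0.0, 0.0], [1.0, 1.0]
score_normal, _ = anomaly_score([0.0, 0.0], mean, std)
score_odd, contributions = anomaly_score([0.0, 4.0], mean, std)
# The dimension with the lowest contribution is the most suspicious one.
most_suspicious = min(range(len(contributions)), key=contributions.__getitem__)
```

The per-dimension terms are one plausible handle for interpreting an entity-level anomaly, since they show which metrics were reconstructed worst.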

Loss function: ELBO;

Variational inference algorithms: SGVB;
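The ELBO and the sampling trick behind SGVB can be illustrated on a toy model that is unrelated to OmniAnomaly's actual networks: prior z ~ N(0, 1) and likelihood x | z ~ N(z, 1). This model is chosen only because its evidence and posterior are known in closed form. The function `elbo_estimate` is a hypothetical helper.

```python
import math
import random

def log_normal(x, mean, std):
    return -0.5 * math.log(2.0 * math.pi) - math.log(std) - 0.5 * ((x - mean) / std) ** 2

def elbo_estimate(x, q_mean, q_std, n_samples=2000, seed=0):
    """Monte Carlo ELBO: E_q[log p(x|z) + log p(z) - log q(z|x)]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)
        z = q_mean + q_std * eps              # reparameterization: z = mu + sigma * eps
        total += (log_normal(x, z, 1.0)       # log p(x | z)
                  + log_normal(z, 0.0, 1.0)   # log p(z)
                  - log_normal(z, q_mean, q_std))  # log q(z | x)
    return total / n_samples

x = 1.2
log_evidence = log_normal(x, 0.0, math.sqrt(2.0))    # marginal: x ~ N(0, 2)
tight = elbo_estimate(x, x / 2.0, math.sqrt(0.5))    # q equals the true posterior
loose = elbo_estimate(x, 0.0, 1.0)                   # q is a poor approximation
```

When q matches the true posterior the bound is tight (`tight` equals `log_evidence` up to rounding); any other q gives a lower value, which is what training maximizes against.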

Output: a univariate time series of anomaly scores

Automatic threshold selection: extreme value theory (EVT) with the peaks-over-threshold (POT) method;
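A simplified sketch of POT-style threshold selection follows. It fits a generalized Pareto distribution (GPD) to the tail beyond an initial threshold and extrapolates to a target tail probability q. Two assumptions are made loudly here: the GPD parameters are estimated with the method of moments (a real implementation would more likely use maximum likelihood), and the low tail is used because low scores indicate anomalies. The function name `pot_threshold` and its defaults are hypothetical.

```python
import math
import random

def pot_threshold(scores, q=1e-3, init_level=0.05):
    """Lower-tail threshold via POT with a method-of-moments GPD fit."""
    n = len(scores)
    neg = sorted(-s for s in scores)           # flip so that low scores become high
    t = neg[int((1.0 - init_level) * n)]       # initial (empirical) threshold
    excess = [v - t for v in neg if v > t]     # peaks over the initial threshold
    n_t = len(excess)
    if n_t < 2:
        raise ValueError("not enough tail samples to fit a GPD")
    m = sum(excess) / n_t
    v = sum((e - m) ** 2 for e in excess) / n_t
    if v == 0.0:
        raise ValueError("degenerate tail: all excesses are identical")
    xi = 0.5 * (1.0 - m * m / v)               # GPD shape (method of moments)
    sigma = 0.5 * m * (1.0 + m * m / v)        # GPD scale (method of moments)
    r = q * n / n_t
    if abs(xi) < 1e-8:                         # exponential-tail limit
        z = t - sigma * math.log(r)
    else:
        z = t + (sigma / xi) * (r ** (-xi) - 1.0)
    return -z                                  # flip back to the score scale

random.seed(0)
scores = [random.gauss(0.0, 1.0) for _ in range(5000)]   # synthetic scores
threshold = pot_threshold(scores, q=1e-3)
initial = sorted(scores)[int(0.05 * len(scores))]
```

Observations whose score falls below `threshold` would be flagged; because q is smaller than the initial tail fraction, the final threshold sits further out in the tail than the initial one.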

1. use GRU to capture complex temporal dependence in x-space.

2. apply VAE to map observations to stochastic variables.

3. explicitly model temporal dependence among stochastic variables in the latent space, using the proposed stochastic variable connection technique.

4. adopt planar NF.
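A single planar flow layer from step 4 can be sketched as below, assuming the standard planar form f(z) = z + u * tanh(w·z + b). The function names and parameter values are hypothetical and not taken from the OmniAnomaly code.

```python
import math

def planar_flow(z, u, w, b):
    """Apply one planar layer f(z) = z + u * tanh(w.z + b).

    Returns the transformed vector and log|det(df/dz)|.
    Invertibility requires w.u >= -1; this sketch does not enforce it.
    """
    a = sum(wi * zi for wi, zi in zip(w, z)) + b     # scalar pre-activation
    h = math.tanh(a)
    z_new = [zi + ui * h for zi, ui in zip(z, u)]
    # det(I + u psi^T) = 1 + u.psi, where psi = (1 - tanh^2(a)) * w
    wu = sum(wi * ui for wi, ui in zip(w, u))
    log_det = math.log(abs(1.0 + (1.0 - h * h) * wu))
    return z_new, log_det

def apply_flows(z, layers):
    """Chain several planar layers and accumulate their log-determinants."""
    total = 0.0
    for u, w, b in layers:
        z, log_det = planar_flow(z, u, w, b)
        total += log_det
    return z, total

z0 = [0.0, 0.0]                                  # e.g. a sample from a Gaussian
layers = [([0.5, 0.0], [1.0, 0.0], 0.0),
          ([0.0, 0.0], [1.0, 1.0], 0.3)]         # u = 0 makes this layer the identity
zK, sum_log_det = apply_flows(z0, layers)
```

The accumulated log-determinant is what allows the density of the transformed variable to be computed from the density of the initial Gaussian sample, which is how the flow yields a non-Gaussian posterior.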

Evaluation:

We use Precision, Recall, F1-Score (denoted as F1) to evaluate the performance of OmniAnomaly.
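For reference, the three metrics can be computed from binary labels as follows. This is a plain point-wise sketch; the function name `precision_recall_f1` and the example labels are made up.

```python
def precision_recall_f1(y_true, y_pred):
    """Point-wise precision, recall, and F1 for binary labels (1 = anomaly)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

y_true = [0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 1, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)   # tp=2, fp=1, fn=1
```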

Baseline:

- LSTM with nonparametric dynamic thresholding

- EncDec-AD

- DAGMM

- LSTM-VAE

- Donut; adapted by other means so that it applies to multivariate time series.

Supplementary knowledge:

1. VAE:

inference net (qnet) + generative net (pnet).

2. GRU: gated recurrent unit
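A GRU step can be sketched with scalar input and scalar hidden state to keep the gates readable. The parameter names in the dictionary are hypothetical, and the update convention h_t = (1 - z) * h_{t-1} + z * h_candidate is one of two common ones (some references swap the roles of z and 1 - z).

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gru_step(x, h, p):
    """One GRU update for scalar input x and scalar hidden state h."""
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])                # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])                # reset gate
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])   # candidate state
    return (1.0 - z) * h + z * h_cand                               # blend old and new

def run_gru(xs, p, h0=0.0):
    """Run the cell over a sequence and return every hidden state."""
    h, states = h0, []
    for x in xs:
        h = gru_step(x, h, p)
        states.append(h)
    return states

# With all-zero weights both gates equal 0.5 and the candidate is 0,
# so the hidden state simply halves at every step.
zeros = {k: 0.0 for k in ("wz", "uz", "bz", "wr", "ur", "br", "wh", "uh", "bh")}
states = run_gru([1.0, 2.0, 3.0], zeros, h0=1.0)
```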

Reference