模糊查询-〉数据库全文检索-〉Lucene

一元分词(lucene内置)

Analyzer analyzer = new CJKAnalyzer(); TokenStream tokenStream = analyzer.TokenStream("", new StringReader("北京,Hi欢迎你们大家")); Lucene.Net.Analysis.Token token = null; while ((token = tokenStream.Next()) != null) { Console.WriteLine(token.TermText()); }

二元分词对汉字没有多大作用

using System.Collections; using System.IO; using Lucene.Net.Analysis; namespace NSharp.SearchEngine.Lucene.Analysis.Cjk { /**/ /** * Filters CJKTokenizer with StopFilter. * * @author Che, Dong */ public class CJKAnalyzer : Analyzer { //~ Static fields/initializers --------------------------------------------- /**/ /** * An array containing some common English words that are not usually * useful for searching and some double-byte interpunctions. */ public static string[] STOP_WORDS = { "a", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "s", "such", "t", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with", "", "www" }; //~ Instance fields -------------------------------------------------------- /**/ /** * stop word list */ private Hashtable stopTable; //~ Constructors ----------------------------------------------------------- /**/ /** * Builds an analyzer which removes words in {@link #STOP_WORDS}. */ public CJKAnalyzer() { stopTable = StopFilter.MakeStopSet(STOP_WORDS); } /**/ /** * Builds an analyzer which removes words in the provided array. * * @param stopWords stop word array */ public CJKAnalyzer(string[] stopWords) { stopTable = StopFilter.MakeStopSet(stopWords); } //~ Methods ---------------------------------------------------------------- /**/ /** * get token stream from input * * @param fieldName lucene field name * @param reader input reader * @return TokenStream */ public override TokenStream TokenStream(string fieldName, TextReader reader) { TokenStream ts = new CJKTokenizer(reader); return new StopFilter(ts, stopTable); //return new StopFilter(new CJKTokenizer(reader), stopTable); } } }

using System; using System.Collections; using System.IO; using Lucene.Net.Analysis; namespace NSharp.SearchEngine.Lucene.Analysis.Cjk { public class CJKTokenizer:Tokenizer { //~ Static fields/initializers --------------------------------------------- /**//** Max word length */ private static int MAX_WORD_LEN = 255; /**//** buffer size: */ private static int IO_BUFFER_SIZE = 256; //~ Instance fields -------------------------------------------------------- /**//** word offset, used to imply which character(in ) is parsed */ private int offset = 0; /**//** the index used only for ioBuffer */ private int bufferIndex = 0; /**//** data length */ private int dataLen = 0; /**//** * character buffer, store the characters which are used to compose <br> * the returned Token */ private char[] buffer = new char[MAX_WORD_LEN]; /**//** * I/O buffer, used to store the content of the input(one of the <br> * members of Tokenizer) */ private char[] ioBuffer = new char[IO_BUFFER_SIZE]; /**//** word type: single=>ASCII double=>non-ASCII word=>default */ private string tokenType = "word"; /**//** * tag: previous character is a cached double-byte character "C1C2C3C4" * ----(set the C1 isTokened) C1C2 "C2C3C4" ----(set the C2 isTokened) * C1C2 C2C3 "C3C4" ----(set the C3 isTokened) "C1C2 C2C3 C3C4" */ private bool preIsTokened = false; //~ Constructors ----------------------------------------------------------- /**//** * Construct a token stream processing the given input. * * @param in I/O reader */ public CJKTokenizer(TextReader reader) { input = reader; } //~ Methods ---------------------------------------------------------------- /**//** * Returns the next token in the stream, or null at EOS. * See http://java.sun.com/j2se/1.3/docs/api/java/lang/Character.UnicodeBlock.html * for detail. * * @return Token * * @throws java.io.IOException - throw IOException when read error <br> * hanppened in the InputStream * */ public override Token Next() { /**//** how many character(s) has been stored in buffer */ int length = 0; /**//** the position used to create Token */ int start = offset; while (true) { /**//** current charactor */ char c; offset++; /**//* if (bufferIndex >= dataLen) { dataLen = input.read(ioBuffer); //Java中read读到最后不会出错,但.Net会, bufferIndex = 0; } */ if (bufferIndex >= dataLen ) { if (dataLen==0 || dataLen>=ioBuffer.Length)//Java中read读到最后不会出错,但.Net会,所以此处是为了拦截异常 { dataLen = input.Read(ioBuffer,0,ioBuffer.Length); bufferIndex = 0; } else { dataLen=0; } } if (dataLen ==0) { if (length > 0) { if (preIsTokened == true) { length = 0; preIsTokened = false; } break; } else { return null; } } else { //get current character c = ioBuffer[bufferIndex++]; } //if the current character is ASCII or Extend ASCII if (IsAscii(c) || IsHALFWIDTH_AND_FULLWIDTH_FORMS(c)) { if (IsHALFWIDTH_AND_FULLWIDTH_FORMS(c)) { /**//** convert HALFWIDTH_AND_FULLWIDTH_FORMS to BASIC_LATIN */ int i = (int) c; i = i - 65248; c = (char) i; } //if the current character is a letter or "_" "+" "##region if the current character is a letter or "_" "+" "# // if the current character is a letter or "_" "+" "#" if (char.IsLetterOrDigit(c) || ((c == '_') || (c == '+') || (c == '#'))) { if (length == 0) { // "javaC1C2C3C4linux" <br> // ^--: the current character begin to token the ASCII // letter start = offset - 1; } else if (tokenType == "double") { // "javaC1C2C3C4linux" <br> // ^--: the previous non-ASCII // : the current character offset--; bufferIndex--; tokenType = "single"; if (preIsTokened == true) { // there is only one non-ASCII has been stored length = 0; preIsTokened = false; break; } else { break; } } // store the LowerCase(c) in the buffer buffer[length++] = char.ToLower(c); tokenType = "single"; // break the procedure if buffer overflowed! if (length == MAX_WORD_LEN) { break; } } else if (length > 0) { if (preIsTokened == true) { length = 0; preIsTokened = false; } else { break; } } } else { // non-ASCII letter, eg."C1C2C3C4"#region // non-ASCII letter, eg."C1C2C3C4" // non-ASCII letter, eg."C1C2C3C4" if (char.IsLetter(c)) { if (length == 0) { start = offset - 1; buffer[length++] = c; tokenType = "double"; } else { if (tokenType == "single") { offset--; bufferIndex--; //return the previous ASCII characters break; } else { buffer[length++] = c; tokenType = "double"; if (length == 2) { offset--; bufferIndex--; preIsTokened = true; break; } } } } else if (length > 0) { if (preIsTokened == true) { // empty the buffer length = 0; preIsTokened = false; } else { break; } } } } return new Token(new String(buffer, 0, length), start, start + length, tokenType ); } public bool IsAscii(char c) { return c<256 && c>=0; } public bool IsHALFWIDTH_AND_FULLWIDTH_FORMS(char c) { return c<=0xFFEF && c>=0xFF00; } } }

Analyzer analyzer = new PanGuAnalyzer(); TokenStream tokenStream = analyzer.TokenStream("", new StringReader("面向对象编程")); Lucene.Net.Analysis.Token token = null; while ((token = tokenStream.Next()) != null) { Console.WriteLine(token.TermText()); }

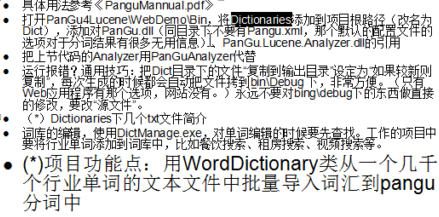

基于词库的分词算法:庖丁解牛(java用)、盘古分词(.net用)等,效率低

盘古分词要引用pangu.dll,pangu.lucene.Analyzer.dll,并拷贝词库

Analyzer analyzer = new PanGuAnalyzer(); TokenStream tokenStream = analyzer.TokenStream("", new StringReader("面向对象编程")); Lucene.Net.Analysis.Token token = null; while ((token = tokenStream.Next()) != null) { Console.WriteLine(token.TermText()); }

创建索引(读取查询文件并写入Lucene.net并生成索引文件)

string indexPath = @"C:lucenedir";//注意和磁盘上文件夹的大小写一致,否则会报错。将创建的分词内容放在该目录下。 FSDirectory directory = FSDirectory.Open(new DirectoryInfo(indexPath), new NativeFSLockFactory());//指定索引文件(打开索引目录) FS指的是就是FileSystem bool isUpdate = IndexReader.IndexExists(directory);//IndexReader:对索引进行读取的类。该语句的作用:判断索引库文件夹是否存在以及索引特征文件是否存在。 if (isUpdate) { //同时只能有一段代码对索引库进行写操作。当使用IndexWriter打开directory时会自动对索引库文件上锁。 //如果索引目录被锁定(比如索引过程中程序异常退出),则首先解锁(提示一下:如果我现在正在写着已经加锁了,但是还没有写完,这时候又来一个请求,那么不就解锁了吗?这个问题后面会解决) if (IndexWriter.IsLocked(directory)) { IndexWriter.Unlock(directory); } } IndexWriter writer = new IndexWriter(directory, new PanGuAnalyzer(), !isUpdate, Lucene.Net.Index.IndexWriter.MaxFieldLength.UNLIMITED);//向索引库中写索引。这时在这里加锁。 for (int i = 1; i <= 10; i++) { string txt = File.ReadAllText(@"D:讲课�924班OA第七天资料测试文件" + i + ".txt", System.Text.Encoding.Default);//注意这个地方的编码 Document document = new Document();//表示一篇文档。 //Field.Store.YES:表示是否存储原值。只有当Field.Store.YES在后面才能用doc.Get("number")取出值来.Field.Index. NOT_ANALYZED:不进行分词保存 document.Add(new Field("number", i.ToString(), Field.Store.YES, Field.Index.NOT_ANALYZED)); //Field.Index. ANALYZED:进行分词保存:也就是要进行全文的字段要设置分词 保存(因为要进行模糊查询) //Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS:不仅保存分词还保存分词的距离。 document.Add(new Field("body", txt, Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS)); writer.AddDocument(document); } writer.Close();//会自动解锁。 directory.Close();//不要忘了Close,否则索引结果搜不到

搜索

string indexPath = @"C:lucenedir"; string kw = "面向对象"; FSDirectory directory = FSDirectory.Open(new DirectoryInfo(indexPath), new NoLockFactory()); IndexReader reader = IndexReader.Open(directory, true); IndexSearcher searcher = new IndexSearcher(reader); //搜索条件 PhraseQuery query = new PhraseQuery(); //foreach (string word in kw.Split(' '))//先用空格,让用户去分词,空格分隔的就是词“计算机 专业” //{ // query.Add(new Term("body", word)); //} //query.Add(new Term("body","语言"));--可以添加查询条件,两者是add关系.顺序没有关系. //query.Add(new Term("body", "大学生")); query.Add(new Term("body", kw));//body中含有kw的文章 query.SetSlop(100);//多个查询条件的词之间的最大距离.在文章中相隔太远 也就无意义.(例如 “大学生”这个查询条件和"简历"这个查询条件之间如果间隔的词太多也就没有意义了。) //TopScoreDocCollector是盛放查询结果的容器 TopScoreDocCollector collector = TopScoreDocCollector.create(1000, true); searcher.Search(query, null, collector);//根据query查询条件进行查询,查询结果放入collector容器 ScoreDoc[] docs = collector.TopDocs(0, collector.GetTotalHits()).scoreDocs;//得到所有查询结果中的文档,GetTotalHits():表示总条数 TopDocs(300, 20);//表示得到300(从300开始),到320(结束)的文档内容. //可以用来实现分页功能 this.listBox1.Items.Clear(); for (int i = 0; i < docs.Length; i++) { // //搜索ScoreDoc[]只能获得文档的id,这样不会把查询结果的Document一次性加载到内存中。降低了内存压力,需要获得文档的详细内容的时候通过searcher.Doc来根据文档id来获得文档的详细内容对象Document. int docId = docs[i].doc;//得到查询结果文档的id(Lucene内部分配的id) Document doc = searcher.Doc(docId);//找到文档id对应的文档详细信息 this.listBox1.Items.Add(doc.Get("number") + " ");// 取出放进字段的值 this.listBox1.Items.Add(doc.Get("body") + " "); this.listBox1.Items.Add("----------------------- "); }