Preface

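Ceph is a mature distributed storage system that provides reliable storage for cloud computing. This post walks through deploying a Ceph storage cluster on Ubuntu 18.04.2 with Ceph Luminous (Ceph 12.x), using ceph-deploy, Ceph's official deployment tool. Ceph is demanding about its environment, so deploying it on cloud hosts is not recommended for production. For a test deployment like this one, however, cloud hosts from Ruijiang Cloud (睿江云) are a suitable choice.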

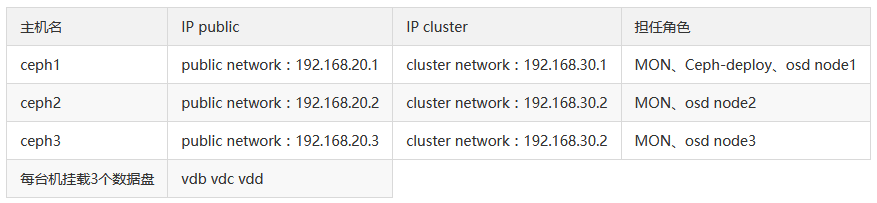

First, the build environment and the hosts used:

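Platform: Ruijiang Cloud (睿江云)

Region: Guangdong G (VPC networking for better security, SSD disks for high performance)

Instance size: 1 vCPU, 2 GB RAM

Network: VPC (virtual private cloud; more secure and efficient)

Bandwidth: 5 Mbps

OS: Ubuntu 18.04

Number of instances: 3

Data disks: /dev/vdb, /dev/vdc and /dev/vdd on each instance (used as OSDs in step 9)

Ceph version: 12.x (Luminous)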

Topology

Hands-on deployment

1. Set the hostname

vim /etc/hostname

ceph$i

(/etc/hostname holds only the node's own name: ceph1, ceph2 or ceph3. Alternatively, run hostnamectl set-hostname ceph$i.)

2. Configure the hosts file ($IP is each node's storage IP and ceph$i is that node's hostname)

vim /etc/hosts

$IP ceph$i

After the SSH keys are distributed (step 3), you can scp the hosts file to all nodes.
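You can also generate the hosts entries instead of typing them. The sketch below is minimal. It assumes the nodes are named ceph1, ceph2, ... in the order their IPs are given. gen_hosts_entries is a hypothetical helper, and the 192.168.20.x addresses are the ones used by the SSH script in step 3.

```shell
#!/bin/sh
# gen_hosts_entries (hypothetical helper): print one "IP ceph<N>" line per argument,
# numbering nodes 1, 2, 3, ... in the order the IPs are given (assumed mapping).
gen_hosts_entries() {
    i=1
    for ip in "$@"; do
        printf '%s ceph%d\n' "$ip" "$i"
        i=$((i + 1))
    done
}
```

Example: `gen_hosts_entries 192.168.20.1 192.168.20.2 192.168.20.3 >> /etc/hosts`. After step 3, copy the file with `for h in ceph2 ceph3; do scp /etc/hosts root@$h:/etc/hosts; done`.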

3. Configure SSH and distribute the keys

cat ssh-script.sh

#!/bin/sh
## Disable GSSAPIAuthentication
sed -i 's@GSSAPIAuthentication yes@GSSAPIAuthentication no@' /etc/ssh/sshd_config
## Allow root to log in over SSH
sed -i '/^#PermitRootLogin/c PermitRootLogin yes' /etc/ssh/sshd_config
## Lengthen the SSH login grace time
sed -i 's@#LoginGraceTime 2m@LoginGraceTime 30m@' /etc/ssh/sshd_config
## Disable reverse DNS lookups of clients
sed -i 's@#UseDNS yes@UseDNS no@' /etc/ssh/sshd_config
## Apply the sshd changes (the service is named ssh on Ubuntu)
systemctl restart ssh
## Create a key pair if none exists yet, then copy the public key to every node
[ -f /root/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa
apt-get install -y sshpass
for ip in 192.168.20.1 192.168.20.2 192.168.20.3
do
    sshpass -p deploy123 ssh-copy-id -i /root/.ssh/id_rsa.pub -o StrictHostKeyChecking=no root@$ip
done

sh ssh-script.sh
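A broken sshd_config can lock you out of a node, so you can try the sed edits on a copy first. The sketch below is minimal and assumes GNU sed, as shipped with Ubuntu. apply_sshd_edits is a hypothetical helper that bundles the four edits from ssh-script.sh.

```shell
#!/bin/sh
# apply_sshd_edits (hypothetical helper): apply the same four sed edits as
# ssh-script.sh to the file given as $1 (requires GNU sed for -i and the one-line c command).
apply_sshd_edits() {
    sed -i \
        -e 's@GSSAPIAuthentication yes@GSSAPIAuthentication no@' \
        -e '/^#PermitRootLogin/c PermitRootLogin yes' \
        -e 's@#LoginGraceTime 2m@LoginGraceTime 30m@' \
        -e 's@#UseDNS yes@UseDNS no@' \
        "$1"
}
```

Example: `cp /etc/ssh/sshd_config /tmp/sshd_config.test && apply_sshd_edits /tmp/sshd_config.test && sshd -t -f /tmp/sshd_config.test`. The last command has sshd check the edited copy before you run the real script.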

4. Configure system time and a common NTP server

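cat time-service-ntp-synctime.sh

#!/bin/sh
## Set the time zone
timedatectl set-timezone Asia/Shanghai
## Install NTP (plus at, which the daily sync job below relies on)
apt-get install -y ntp ntpdate ntp-doc at
## Use 120.25.115.20 as the preferred time server
sed -i 's@pool 0.ubuntu.pool.ntp.org iburst@server 120.25.115.20 iburst prefer@' /etc/ntp.conf
## ntpdate cannot bind the NTP port while ntpd is running, so stop ntp first
systemctl stop ntp
ntpdate 120.25.115.20 >/dev/null
systemctl start ntp
ntpq -p

mkdir -p /opt/DevOps/CallCenter

## CallCenter.sh queues sync_time_daily.sh as an at job for 23:59 unless one is already queued
## (Ubuntu keeps at jobs in /var/spool/cron/atjobs)
cat << 'EOF' > /opt/DevOps/CallCenter/CallCenter.sh
#!/usr/bin/env bash
## sync time daily
grep -q "sync time daily" /var/spool/cron/atjobs/a* &>/dev/null || at -f /opt/DevOps/CallCenter/sync_time_daily.sh 23:59 &>/dev/null
EOF

cat << 'EOF' > /opt/DevOps/CallCenter/sync_time_daily.sh
#!/usr/bin/env bash
## sync time daily
systemctl stop ntp
ntpdate 120.25.115.20 || echo "sync time error"
systemctl start ntp
## Write the system time to the hardware clock
hwclock -w
EOF

chmod u+x /opt/DevOps/CallCenter/CallCenter.sh

## Run CallCenter.sh every 5 minutes from root's crontab, keeping existing entries
crontab -l > crontab_sync_time 2>/dev/null
echo '*/5 * * * * /opt/DevOps/CallCenter/CallCenter.sh' >> crontab_sync_time
crontab crontab_sync_time
rm -f crontab_sync_time

Run the script on every node:

sh time-service-ntp-synctime.sh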
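Monitors are sensitive to clock skew. Ceph raises a clock-skew warning when monitor clocks differ by more than mon_clock_drift_allowed, which is 0.05 s by default. It is therefore worth confirming that ntpd actually selected the server. The sketch below assumes the standard `ntpq -p` layout: the selected peer's line starts with `*`, and column 9 is the offset in milliseconds. ntp_offset_ok is a hypothetical helper.

```shell
#!/bin/sh
# ntp_offset_ok (hypothetical helper): read `ntpq -p` output on stdin, find the
# selected peer (line starting with '*'), print it, and succeed only if
# |offset| is below the limit in ms ($1, default 50 = 0.05 s).
ntp_offset_ok() {
    awk -v limit="${1:-50}" '
        /^\*/ {
            found = 1
            off = $9 + 0            # column 9 is the offset (ms)
            if (off < 0) off = -off
            sub(/^\*/, "", $1)      # strip the selection marker from the peer name
            printf "selected peer %s, offset %s ms\n", $1, $9
        }
        END { exit ((found && off < limit + 0) ? 0 : 1) }'
}
```

Example: `ntpq -p | ntp_offset_ok 50`. A non-zero exit status means either no peer has been selected yet (ntpd may need a few minutes after a restart) or the offset is too large.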

5. Install tools and point ceph-deploy at a Ceph mirror and its signing key

cat apt-tool.sh

#!/bin/sh
apt-get update
## nslookup and dig come from dnsutils on Ubuntu (bind-utils is the CentOS package name)
apt-get install -y dnsutils tcpdump wget vim ifenslave
apt-get install -y python-minimal python2.7 python-rbd python-pip
## Point ceph-deploy at the 163.com Luminous mirror and its release key
echo 'export CEPH_DEPLOY_REPO_URL=http://mirrors.163.com/ceph/debian-luminous' >>/etc/profile
echo 'export CEPH_DEPLOY_GPG_URL=http://mirrors.163.com/ceph/keys/release.asc' >>/etc/profile
## sh (dash) has no "source" builtin; use "." instead. New login shells read /etc/profile too.
. /etc/profile

sh apt-tool.sh
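Each run of apt-tool.sh appends the two export lines to /etc/profile again. The sketch below shows an idempotent variant. add_export_once is a hypothetical helper; the variable names and URLs are the ones used above.

```shell
#!/bin/sh
# add_export_once (hypothetical helper): append "export NAME=VALUE" ($2, $3)
# to FILE ($1) only if that exact line is not already present.
add_export_once() {
    line="export $2=$3"
    grep -qxF "$line" "$1" 2>/dev/null || printf '%s\n' "$line" >> "$1"
}
```

Example: `add_export_once /etc/profile CEPH_DEPLOY_REPO_URL http://mirrors.163.com/ceph/debian-luminous`.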

6. Run the following steps as the deploy user

Install Ceph on all nodes (replace deploy123 with the deploy user's password):

$ echo 'deploy123' | sudo -S apt-get install -y ceph

$ echo 'deploy123' | sudo -S pip install ceph-deploy

$ mkdir my-cluster

$ cd my-cluster

$ sudo ceph-deploy new ceph1 ceph2 ceph3
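ceph-deploy new writes a ceph.conf into my-cluster. Before creating the monitors, you can confirm that all three nodes were recorded as initial monitors. The sketch assumes the generated file uses the mon_initial_members key. Ceph accepts spaces or underscores in option names, so the helper matches both spellings. mon_members is a hypothetical helper.

```shell
#!/bin/sh
# mon_members (hypothetical helper): print the initial monitor names listed
# in the ceph.conf given as $1, one per line.
mon_members() {
    sed -n 's/^[[:space:]]*mon[ _]initial[ _]members[[:space:]]*=[[:space:]]*//p' "$1" |
        tr ',' '\n' | tr -d ' \t'
}
```

Example: `mon_members ~/my-cluster/ceph.conf` should list ceph1, ceph2 and ceph3.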

7. Initialize the monitors

$ sudo ceph-deploy --overwrite-conf mon create-initial

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpcrNzjv
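The later steps depend on the keyrings gathered into my-cluster. The sketch below checks that every keyring named in the log above is present. check_keyrings is a hypothetical helper.

```shell
#!/bin/sh
# check_keyrings (hypothetical helper): report any keyring gathered by
# `mon create-initial` (names taken from the log) missing from directory $1.
check_keyrings() {
    missing=0
    for k in ceph.client.admin.keyring ceph.bootstrap-mds.keyring \
             ceph.bootstrap-mgr.keyring ceph.bootstrap-osd.keyring \
             ceph.bootstrap-rgw.keyring ceph.mon.keyring; do
        if [ ! -f "$1/$k" ]; then
            echo "missing: $k" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Example: `check_keyrings ~/my-cluster`.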

8. Push the configuration file and admin keyring to each node

$ sudo ceph-deploy admin ceph1 ceph2 ceph3

deploy@ceph1:~/my-cluster$ ll /etc/ceph/

total 24

drwxr-xr-x 2 root root 70 Sep 21 11:54 ./

drwxr-xr-x 96 root root 8192 Sep 21 11:41 ../

-rw------- 1 root root 63 Sep 21 11:54 ceph.client.admin.keyring

-rw-r--r-- 1 root root 298 Sep 21 11:54 ceph.conf

-rw-r--r-- 1 root root 92 Jul 3 09:33 rbdmap

9. Create the OSDs

cat create-osd.sh

#!/bin/sh
## Zap the three data disks on each node and create an OSD on each; the nodes run in parallel
cd ~/my-cluster
for Name in ceph1 ceph2 ceph3
do
{
echo 'deploy123' | sudo -S ceph-deploy disk zap $Name /dev/vdb
echo 'deploy123' | sudo -S ceph-deploy disk zap $Name /dev/vdc
echo 'deploy123' | sudo -S ceph-deploy disk zap $Name /dev/vdd
echo 'deploy123' | sudo -S ceph-deploy osd create $Name --data /dev/vdb
echo 'deploy123' | sudo -S ceph-deploy osd create $Name --data /dev/vdc
echo 'deploy123' | sudo -S ceph-deploy osd create $Name --data /dev/vdd
}&
done

wait

sh create-osd.sh
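create-osd.sh should leave 3 nodes × 3 disks = 9 OSDs. The sketch below checks that count. check_osd_count is a hypothetical helper that reads the output of `ceph osd ls`, which prints one OSD id per line.

```shell
#!/bin/sh
# check_osd_count (hypothetical helper): count the OSD ids on stdin
# and compare the number with the expected count ($1).
check_osd_count() {
    n=$(grep -c '^[0-9][0-9]*$')
    echo "found $n OSDs, expected $1"
    [ "$n" -eq "$1" ]
}
```

Example: `sudo ceph osd ls | check_osd_count 9`. To see which host each OSD landed on, use `sudo ceph osd tree`.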

10. Deploy the mgr daemons and enable the dashboard

$ sudo ceph-deploy mgr create ceph1 ceph2 ceph3

$ sudo ceph mgr module enable dashboard

11. Create an OSD pool

PG count calculator: https://ceph.com/pgcalc/ (in the command below, the two numbers are pg_num and pgp_num)

$ sudo ceph osd pool create {pool_name} 50 50
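The Ceph placement-group documentation gives a rule of thumb: total PGs ≈ (number of OSDs × 100) / pool size, rounded up to the nearest power of two. pgcalc refines this per pool by each pool's expected share of the data. The sketch below implements only the simple rule. pg_count is a hypothetical helper, and the default of 100 PGs per OSD is the target that rule assumes.

```shell
#!/bin/sh
# pg_count (hypothetical helper): suggested pg_num for a single pool.
# $1: number of OSDs, $2: replica count (pool size), $3: target PGs per OSD (default 100)
pg_count() {
    raw=$(( $1 * ${3:-100} / $2 ))
    pg=1
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))    # round up to the next power of two
    done
    echo "$pg"
}
```

For this cluster (9 OSDs, 3 replicas), `pg_count 9 3` gives 512. If the cluster will hold several pools, split this budget between them, which is the calculation pgcalc performs.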

12. Set the replica count (size) and minimum replica count (min_size)

$ sudo ceph osd pool set {pool_name} size {num}

$ sudo ceph osd pool set {pool_name} min_size {num}

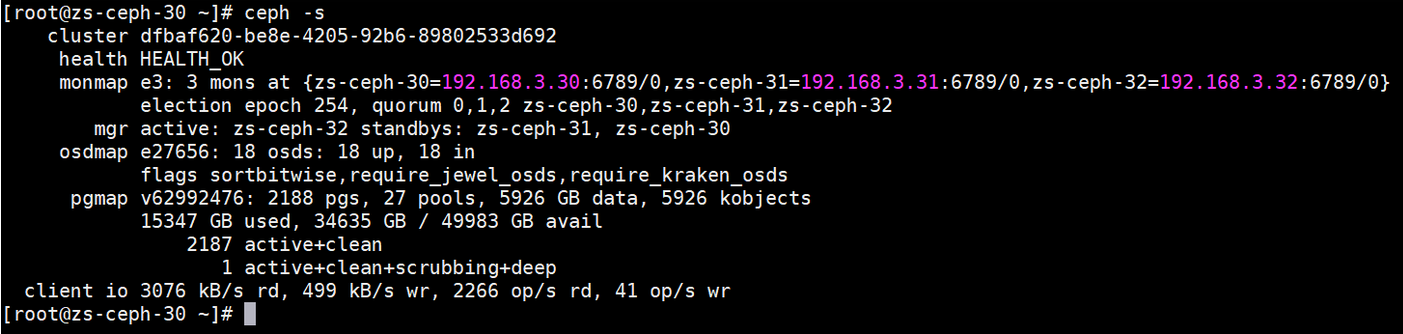

13. Once deployed, check the cluster status with ceph -s

$ sudo ceph -s

HEALTH_OK means the cluster is healthy.

Placement groups in the active+clean state mean the PGs are healthy.

Wrap-up

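That completes the deployment of a Ceph cluster on Ubuntu 18.04 with ceph-deploy. If you have questions about any of the steps, feel free to leave a comment below.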