1. 算法

多标签分类的适用场景较为常见,比如,一份歌单可能既属于标签旅行也属于标签驾车。有别于多分类分类,多标签分类中每个标签不是互斥的。多标签分类算法大概有两类流派:

- 采用One-vs-Rest(或其他方法)组合多个二分类基分类器;

- 改造经典的单分类器,比如,AdaBoost-MH与ML-KNN。

One-vs-Rest

基本思想:为每一个标签(y_i)构造一个二分类器,正样本为含有标签(y_i)的实例,负样本为不含有标签(y_i)的实例;最后组合N个二分类器结果得到N维向量,可视作为在多标签上的得分。我实现一个Spark版本MultiLabelOneVsRest,源代码见mllibX。

AdaBoost-MH

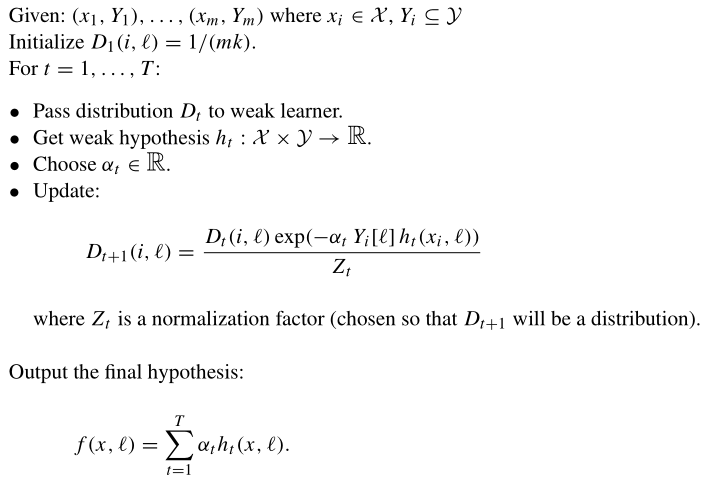

AdaBoost-MH算法是由Schapire(AdaBoost算法作者)与Singer提出,基本思想与AdaBoost算法类似:自适应地调整样本-类别的分布权重。对于训练样本(langle (x_1, Y_1), cdots, (x_m, Y_m) angle),任意一个实例 (x_i in mathcal{X}),标签类别(Y_i subseteq mathcal{Y}),算法流程如下:

其中,(D_t(i, ell))表示在t次迭代实例(x_i)对应标签(ell)的权重,(Y[ell])标识标签(ell)是否属于实例((x, Y)),若属于则为+1,反之为-1(增加样本标签的权重);即

(Z_t)为每一次迭代的归一化因子,保证权重分布矩阵(D)的所有权重之和为1,

ML-KNN

ML-KNN (multi-label K nearest neighbor)基于KNN算法,已知K近邻的标签信息,通过最大后验概率(Maximum A Posteriori)估计实例(t)是否应打上标签(ell),

其中,(H_0^{ell})表示实例(t)不应打上标签(ell),(H_1^{ell})则表示应被打上;(E_{C_t(ell)}^{ell}) 表示实例(t)的K近邻中拥有标签(ell)的实例数为(C_t(ell))。上述式子可有贝叶斯定理求解:

上面两项计算细节见论文[2].

2. 实验

AdaBoost.MH算法Spark实现见sparkboost,scikit-multilearn实现ML-KNN算法。我在siam-competition2007数据集上做了几个算法的对比实验,结果如下:

| 算法 | Hamming loss | Precision | Recall | F1 Measure |

|---|---|---|---|---|

| LR+OvR | 0.0569 | 0.6252 | 0.5586 | 0.5563 |

| AdaBoost.MH | 0.0587 | 0.6280 | 0.6082 | 0.5837 |

| ML-KNN | 0.0652 | 0.6204 | 0.6535 | 0.5977 |

此外,Mulan提供了众多数据集,Kaggle也有多标签分类的比赛WISE 2014。

实验部分代码如下:

import numpy as np

from sklearn import metrics

from sklearn.datasets import load_svmlight_file

from sklearn.linear_model import LogisticRegression

from sklearn.multiclass import OneVsRestClassifier

from sklearn.preprocessing import MultiLabelBinarizer

# load svm file

X_train, y_train = load_svmlight_file('tmc2007_train.svm', dtype=np.float64, multilabel=True)

X_test, y_test = load_svmlight_file('tmc2007_test.svm', dtype=np.float64, multilabel=True)

# convert multi labels to binary matrix

mb = MultiLabelBinarizer()

y_train = mb.fit_transform(y_train)

y_test = mb.fit_transform(y_test)

# LR + OvR

clf = OneVsRestClassifier(LogisticRegression(), n_jobs=10)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

# multilabel classification metrics

loss = metrics.hamming_loss(y_test, y_pred)

prf = metrics.precision_recall_fscore_support(y_test, y_pred, average='samples')

"""

ML-KNN for multilabel classification

"""

from skmultilearn.adapt import MLkNN

clf = MLkNN(k=15)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

// AdaBoost.MH for multilabel classification

val labels0Based = true

val binaryProblem = false

val learner = new AdaBoostMHLearner(sc)

learner.setNumIterations(params.numIterations) // 500 iter

learner.setNumDocumentsPartitions(params.numDocumentsPartitions)

learner.setNumFeaturesPartitions(params.numFeaturesPartitions)

learner.setNumLabelsPartitions(params.numLabelsPartitions)

val classifier = learner.buildModel(params.input, labels0Based, binaryProblem)

val testPath = "./tmc2007_test.svm"

val numRows = DataUtils.getNumRowsFromLibSvmFile(sc, testPath)

val testRdd = DataUtils.loadLibSvmFileFormatDataAsList(sc, testPath, labels0Based, binaryProblem, 0, numRows, -1);

val results = classifier.classifyWithResults(sc, testRdd, 20)

val predAndLabels = sc.parallelize(predLabels.zip(goldLabels)

.map(t => {

(t._1.map(e => e.toDouble), t._2.map(e => e.toDouble))

}))

val metrics = new MultilabelMetrics(predAndLabels)

3. 参考文献

[1] Schapire, Robert E., and Yoram Singer. "BoosTexter: A boosting-based system for text categorization." Machine learning 39.2-3 (2000): 135-168.

[2] Zhang, Min-Ling, and Zhi-Hua Zhou. "ML-KNN: A lazy learning approach to multi-label learning." Pattern recognition 40.7 (2007): 2038-2048.

[3] 基于PredictionIO的推荐引擎打造,及大规模多标签分类探索.