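For the detailed OpenShift 4.2 installation procedure, see the installation guide by my colleague Wang Zheng. Many thanks to Wang Zheng for his research and for answering my questions. His article already covers the big pitfalls; what follows are the smaller ones I ran into.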

https://github.com/wangzheng422/docker_env/blob/master/redhat/ocp4/4.2.disconnect.install.md

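My environment differs somewhat from his, so this post is only a supplementary record of my own. Read it side by side with the guide above for the full details.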

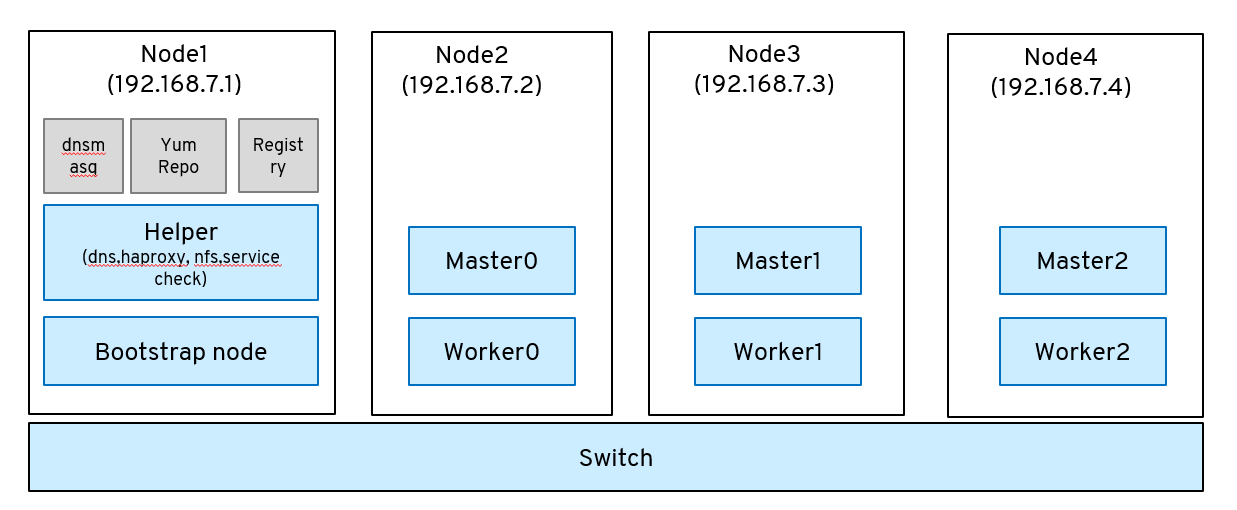

1. Architecture

The VMs are attached through a bridge to the same subnet as the host network. The IP plan is the same as in the guide:

| Node | IP address |
| --- | --- |
| Bootstrap node | 192.168.7.12 |
| master-0.ocp4.redhat.ren | 192.168.7.13 |
| master-1.ocp4.redhat.ren | 192.168.7.14 |
| master-2.ocp4.redhat.ren | 192.168.7.15 |
| worker-0.ocp4.redhat.ren | 192.168.7.16 |
| worker-1.ocp4.redhat.ren | 192.168.7.17 |
| worker-2.ocp4.redhat.ren | 192.168.7.18 |
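To keep the plan in one place, the table can be captured in a small Bash snippet. This is only a sketch. The `bootstrap.ocp4.redhat.ren` name is taken from the reverse zone in section 8. Printing the plan as `/etc/hosts`-style lines, which is handy for a quick test before DNS is in place, is my own addition and not a step from the guide. `NODES` and `hosts_lines` are hypothetical names.

```bash
#!/usr/bin/env bash
# Node plan from the table above (needs bash 4+ for associative arrays).
# Assumption: the bootstrap FQDN matches the PTR record in reverse.db.
declare -A NODES=(
  [bootstrap.ocp4.redhat.ren]=192.168.7.12
  [master-0.ocp4.redhat.ren]=192.168.7.13
  [master-1.ocp4.redhat.ren]=192.168.7.14
  [master-2.ocp4.redhat.ren]=192.168.7.15
  [worker-0.ocp4.redhat.ren]=192.168.7.16
  [worker-1.ocp4.redhat.ren]=192.168.7.17
  [worker-2.ocp4.redhat.ren]=192.168.7.18
)

# Print "IP FQDN" lines (/etc/hosts style), sorted numerically by last octet.
hosts_lines() {
  local name
  for name in "${!NODES[@]}"; do
    printf '%s %s\n' "${NODES[$name]}" "$name"
  done | sort -t . -k 4,4n
}
```

`hosts_lines` prints `192.168.7.12 bootstrap.ocp4.redhat.ren` first and `192.168.7.18 worker-2.ocp4.redhat.ren` last.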

2. Network

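My hardware is four NUCs, each with 4 CPUs and 32 GB of RAM. An OpenShift 4.2 cluster needs at least 3 masters and 1 bootstrap node. It also needs 1 machine for load balancing, DNS and similar services, plus a few workers, so 6 or more machines are required. Running the nodes as VMs makes cross-host networking between the OpenShift nodes the main problem once they are up.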

After some experimentation, I settled on KVM bridge mode, configured as follows.

On each machine:

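- Add a bridge, br0. The example below is from the host `base`; each host needs its own IPADDR.

```
[root@base ocp4]# cat /etc/sysconfig/network-scripts/ifcfg-br0
TYPE=Bridge
BOOTPROTO=static
IPADDR=192.168.7.1
NETMASK=255.255.255.0
GATEWAY=192.168.7.1
ONBOOT=yes
DEFROUTE=yes
NAME=br0
DEVICE=br0
PREFIX=25
```

Note that NETMASK=255.255.255.0 (a /24) and PREFIX=25 contradict each other. The `ip a` output further down shows that the /25 prefix is the one that took effect.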

- Attach the existing NIC to br0. Its IP settings are commented out because the address now lives on br0:

```
[root@base ocp4]# cat /etc/sysconfig/network-scripts/ifcfg-eno1
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=none
#IPADDR=192.168.7.1
#NETMASK=255.255.255.0
#GATEWAY=192.168.7.1
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=eno1
UUID=4e9504c6-a5c4-4093-88b8-89a153dd66de
DEVICE=eno1
ONBOOT=yes
BRIDGE=br0
```

- Restart the network:

```bash
systemctl restart network
```

After the restart, verify that the laptop can still connect to the host:

```
[root@base ocp4]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UP group default qlen 1000
    link/ether 00:1f:c6:9c:56:60 brd ff:ff:ff:ff:ff:ff
3: wlp3s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:c2:c6:f0:c8:78 brd ff:ff:ff:ff:ff:ff
4: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 00:1f:c6:9c:56:60 brd ff:ff:ff:ff:ff:ff
    inet 192.168.7.1/25 brd 192.168.7.127 scope global noprefixroute br0
       valid_lft forever preferred_lft forever
    inet6 fe80::e458:f6ff:fea8:b655/64 scope link
       valid_lft forever preferred_lft forever
5: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 52:54:00:6d:9d:9f brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
       valid_lft forever preferred_lft forever
6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000
    link/ether 52:54:00:6d:9d:9f brd ff:ff:ff:ff:ff:ff
12: vnet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UNKNOWN group default qlen 1000
    link/ether fe:54:00:9c:66:29 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::fc54:ff:fe9c:6629/64 scope link
       valid_lft forever preferred_lft forever
20: vnet1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UNKNOWN group default qlen 1000
    link/ether fe:54:00:88:62:de brd ff:ff:ff:ff:ff:ff
    inet6 fe80::fc54:ff:fe88:62de/64 scope link
       valid_lft forever preferred_lft forever
```

Once the network is up, VMs attached to br0 should be able to reach the host network.
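The `ip a` output shows br0 at 192.168.7.1/25 (broadcast 192.168.7.127). Every VM address in the plan therefore has to fall inside 192.168.7.0/25. The sketch below checks this with plain Bash arithmetic. The function names are hypothetical, and the check covers IPv4 only.

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Netmask for a prefix length as an integer, e.g. 25 -> 0xFFFFFF80.
prefix_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# in_subnet IP NET_IP PREFIX: succeed if IP lies in NET_IP/PREFIX.
in_subnet() {
  local mask
  mask=$(prefix_mask "$3")
  (( ($(ip2int "$1") & mask) == ($(ip2int "$2") & mask) ))
}

# broadcast NET_IP PREFIX: print the broadcast address of the subnet.
broadcast() {
  local mask
  mask=$(prefix_mask "$2")
  int2ip $(( ($(ip2int "$1") & mask) | (~mask & 0xFFFFFFFF) ))
}

# Usage: flag any planned address outside br0's subnet.
#   for ip in 192.168.7.11 192.168.7.12 192.168.7.18; do
#     in_subnet "$ip" 192.168.7.1 25 || echo "$ip is outside br0's subnet"
#   done
```

`broadcast 192.168.7.1 25` prints 192.168.7.127, which matches the `brd` value in the `ip a` output.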

- Create the libvirt network used by the VMs:

```
[root@base data]# cat virt-net.xml
<network>
  <name>br0</name>
  <forward mode='bridge'>
    <bridge name='br0'/>
  </forward>
</network>
```

```bash
virsh net-define --file virt-net.xml
virsh net-autostart br0
virsh net-start br0
```

Check the result:

```
[root@base data]# virsh net-list
 Name      State    Autostart   Persistent
----------------------------------------------------------
 br0       active   yes         yes
 default   active   yes         yes
```
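Instead of eyeballing the list, a small check can confirm that `br0` is active and set to autostart. This sketch assumes the column layout shown above (Name, State, Autostart, Persistent). `net_ok` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# net_ok NAME: read `virsh net-list --all` output on stdin and succeed only
# if NAME is listed with State "active" and Autostart "yes".
net_ok() {
  awk -v n="$1" '
    $1 == n && $2 == "active" && $3 == "yes" { found = 1 }
    END { exit !found }
  '
}

# Usage:
#   virsh net-list --all | net_ok br0 && echo "br0 is active and autostarts"
```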

3. Yum repository setup

Set up the yum repositories the same way as for 3.11, with one caveat: use as recent a 3.11 release as possible. I first used 3.11.16, and podman then had trouble building images locally. Switching to the 3.11.146 yum content fixed this.

```
[root@base ocp4]# cat /etc/yum.repos.d/base.repo
[base]
name=base
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-rpms/
enabled=1
gpgcheck=0

[ansible]
name=ansible
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-ansible-2.6-rpms/
enabled=1
gpgcheck=0

[extra]
name=extra
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-extras-rpms/
enabled=1
gpgcheck=0

[ose]
name=ose
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-ose-3.11-rpms/
enabled=1
gpgcheck=0
```
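The four sections differ only in repo ID and directory, so the file can be generated. This is a sketch that assumes the repos are served from http://192.168.7.1:8080/repo as above. `make_repo` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# make_repo BASE_URL ID=DIR...: print one yum repo section per ID=DIR pair,
# in the same format as base.repo above (local mirror, GPG checks off).
make_repo() {
  local base=${1%/} pair id dir
  shift
  for pair in "$@"; do
    id=${pair%%=*}
    dir=${pair#*=}
    printf '[%s]\nname=%s\nbaseurl=%s/%s/\nenabled=1\ngpgcheck=0\n\n' \
      "$id" "$id" "$base" "$dir"
  done
}

# Usage:
#   make_repo http://192.168.7.1:8080/repo \
#     base=rhel-7-server-rpms ansible=rhel-7-server-ansible-2.6-rpms \
#     extra=rhel-7-server-extras-rpms ose=rhel-7-server-ose-3.11-rpms \
#     > /etc/yum.repos.d/base.repo
#   yum clean all && yum repolist
```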

4. Starting the VMs and the installation

The VMs are started on the br0 bridge network, with the network option and RAM sizes adjusted:

```bash
virt-install --name=ocp4-bootstrap --vcpus=4 --ram=8192 \
  --disk path=/data/kvm/ocp4-bootstrap.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/bootstrap-static.iso

virt-install --name=ocp4-master0 --vcpus=4 --ram=16384 \
  --disk path=/data/kvm/ocp4-master0.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/master-0.iso

virt-install --name=ocp4-master1 --vcpus=4 --ram=16384 \
  --disk path=/data/kvm/ocp4-master1.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/master-1.iso

virt-install --name=ocp4-master2 --vcpus=4 --ram=16384 \
  --disk path=/data/kvm/ocp4-master2.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/master-2.iso

virt-install --name=ocp4-worker0 --vcpus=4 --ram=8192 \
  --disk path=/data/kvm/ocp4-worker0.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/worker-0.iso

virt-install --name=ocp4-worker1 --vcpus=4 --ram=8192 \
  --disk path=/data/kvm/ocp4-worker1.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/worker-1.iso

virt-install --name=ocp4-worker2 --vcpus=4 --ram=8192 \
  --disk path=/data/kvm/ocp4-worker2.qcow2,bus=virtio,size=120 \
  --os-variant rhel8.0 --network bridge=br0,model=virtio \
  --boot menu=on --cdrom /data/ocp4/worker-2.iso
```
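The seven commands differ only in VM name, RAM size and ISO, so they can be generated from a small table. This is a sketch. `VMS` and `gen_virt_install` are hypothetical names, and the function only prints the commands (a dry run) so you can review them before anything is created.

```bash
#!/usr/bin/env bash
# One entry per VM: short name, RAM in MiB, ISO file under /data/ocp4.
VMS=(
  bootstrap:8192:bootstrap-static.iso
  master0:16384:master-0.iso
  master1:16384:master-1.iso
  master2:16384:master-2.iso
  worker0:8192:worker-0.iso
  worker1:8192:worker-1.iso
  worker2:8192:worker-2.iso
)

# Print one virt-install command per entry, matching the commands above.
gen_virt_install() {
  local spec name ram iso
  for spec in "${VMS[@]}"; do
    IFS=: read -r name ram iso <<< "$spec"
    printf 'virt-install --name=ocp4-%s --vcpus=4 --ram=%s' "$name" "$ram"
    printf ' --disk path=/data/kvm/ocp4-%s.qcow2,bus=virtio,size=120' "$name"
    printf ' --os-variant rhel8.0 --network bridge=br0,model=virtio'
    printf ' --boot menu=on --cdrom /data/ocp4/%s\n' "$iso"
  done
}

# Usage: write the commands to a file, review them, then run them one at a
# time (bootstrap first):
#   gen_virt_install > create-vms.sh
```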

The bootstrap node becomes ready after about 5 minutes. Create the other VMs only after that.
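Rather than guessing at the 5 minutes, you can poll the bootstrap ports that the load balancer health-checks. The port choice is an assumption based on the standard UPI setup: haproxy on the helper checks the bootstrap's Kubernetes API (6443) and machine config server (22623). `port_open` and `wait_for` are hypothetical helpers. The port probe relies on bash's `/dev/tcp` and coreutils `timeout`.

```bash
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection opens within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# wait_for TRIES INTERVAL CMD...: run CMD up to TRIES times, sleeping
# INTERVAL seconds between attempts; succeed as soon as CMD succeeds.
wait_for() {
  local tries=$1 interval=$2 i
  shift 2
  for (( i = 1; i <= tries; i++ )); do
    "$@" && return 0
    (( i < tries )) && sleep "$interval"
  done
  return 1
}

# Usage (assumption: 6443 = API server, 22623 = machine config server):
#   wait_for 60 10 port_open 192.168.7.12 6443 &&
#   wait_for 60 10 port_open 192.168.7.12 22623 &&
#   echo "bootstrap is serving; start the other VMs"
```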

After a while, check the installation progress on the helper node:

```bash
openshift-install wait-for bootstrap-complete --log-level debug
```

Once storage has been taken care of (see section 7), run the following, again on the helper node:

```
[root@helper ocp4]# openshift-install wait-for install-complete
INFO Waiting up to 30m0s for the cluster at https://api.ocp4.redhat.ren:6443 to initialize...
INFO Waiting up to 10m0s for the openshift-console route to be created...
INFO Install complete!
INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/root/ocp4/auth/kubeconfig'
INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ocp4.redhat.ren
INFO Login to the console with user: kubeadmin, password: WRTp9-avPVu-IMWLX-KiIQ2
```

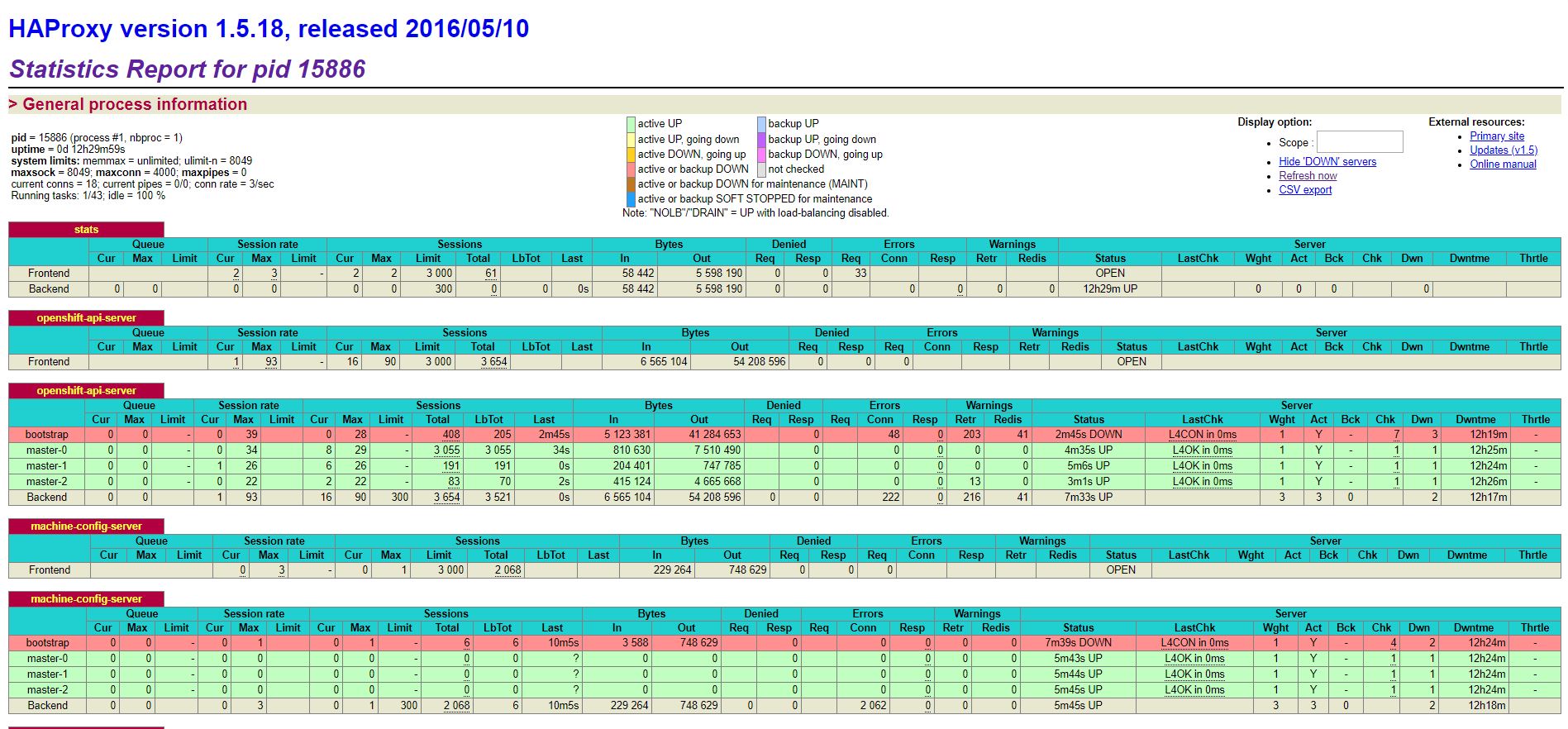

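5. Bootstrap node never becoming ready

At first, the bootstrap node never turned ready in the haproxy stats page. I logged in to the helper and ran `sudo -i` and then `podman images`, which showed an empty image list.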

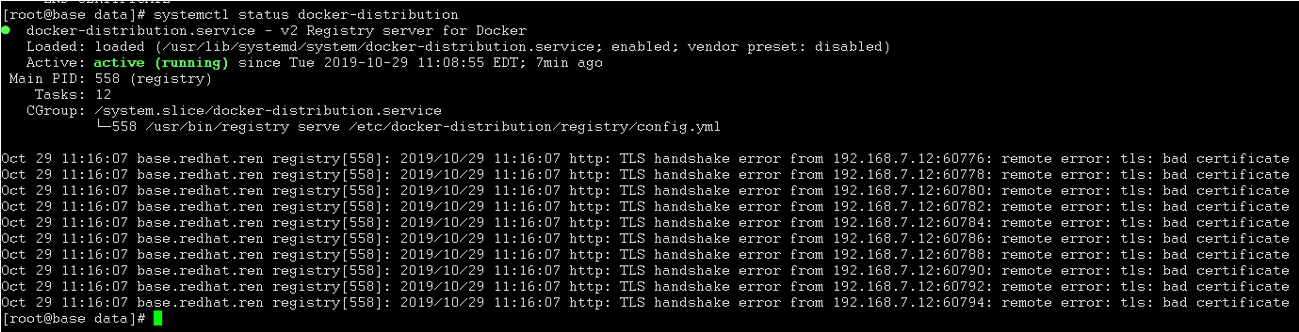

Checking the registry service on 192.168.7.1 revealed a TLS handshake error.

The fix is to update `additionalTrustBundle` in install-config.yaml so that it matches `/etc/crts/redhat.ren.crt`.

The full install-config.yaml is below. The part to change is `additionalTrustBundle`:

```yaml
apiVersion: v1
baseDomain: redhat.ren
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 3
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp4
networking:
  clusterNetworks:
  - cidr: 10.254.0.0/16
    hostPrefix: 24
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '{"auths":{"registry.redhat.ren": {"auth": "ZHVtbXk6ZHVtbXk=","email": "noemail@localhost"}}}'
sshKey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCnejC+QkKXqEOj7lSKxpHnnIxPli2iwNveE9apd0QUFgc3xTyaQWyOqbFEsUzR2MnXV36a89DiOVnecVgXZqVDFrDZDRkMLKJTm2U85AExWE0Lmtkxpmyg5OdpFmTBCutpNy2LigG8LTkMPXIgDrfNF+37/BvKzvWdrhR6/dQwqfMGqfRi+PYscD6nUJG5kAzVugalyw8+Sv9CzS+4BMRCZ4EVKu5bB2wl1bw7KCJc+D0nhnc87qGswJquleT7CGi7N2k6/Q1iK80l1KymmwWcwvh+Yf4Nhdk4cxbeSZmPGBQIQMmOUzK0Q4xs3XZd2WvZd/NYj0D83sSCQGXEUkGL root@helper'
additionalTrustBundle: |
  -----BEGIN CERTIFICATE-----
  MIIDszCCApugAwIBAgIJAPRFC4yzZOpxMA0GCSqGSIb3DQEBCwUAMHAxCzAJBgNV
  BAYTAkNOMQswCQYDVQQIDAJHRDELMAkGA1UEBwwCU1oxGDAWBgNVBAoMD0dsb2Jh
  bCBTZWN1cml0eTEWMBQGA1UECwwNSVQgRGVwYXJ0bWVudDEVMBMGA1UEAwwMKi5y
  ZWRoYXQucmVuMB4XDTE5MTAxODEwMTAzMFoXDTI5MTAxNTEwMTAzMFowcDELMAkG
  A1UEBhMCQ04xCzAJBgNVBAgMAkdEMQswCQYDVQQHDAJTWjEYMBYGA1UECgwPR2xv
  YmFsIFNlY3VyaXR5MRYwFAYDVQQLDA1JVCBEZXBhcnRtZW50MRUwEwYDVQQDDAwq
  LnJlZGhhdC5yZW4wggEiMA0GCSqGSIb3DQEBAQUAA4IBDwAwggEKAoIBAQDA1Mgq
  hGebtpCx93KtaaRw5jDRbxrTdJkZvV6Wyq1BYFRQDKZ3QOcFFOMrLbN7g8Nrw1dl
  zgvKLLc1l4god12RgOiM1fOVODoLIk2Z0x2VFbQ7ZIx0jKdKmaNex/fGd/MoLhij
  dYtAmZokjs7sw0VNkZLlHzPgR9AXYtJp07zUUL1eRWNTOhO8LxDUviOg2eVy31yW
  TrYla1ze7+meTvZs3edr5/dLncZ2PCiyaF6hOEf/t7ev4vA33p6SUY6prgaPaKlb
  PiB8+7ZKsucgXd/ikKoCP/0rMcqRSIrpYuudM8Dff8OGxhfL0ChUx3VkKd2t5T3l
  N3717qj+siuUb7OLAgMBAAGjUDBOMB0GA1UdDgQWBBTwuyzX5stt+Pyrs7VIr508
  1VMR8zAfBgNVHSMEGDAWgBTwuyzX5stt+Pyrs7VIr5081VMR8zAMBgNVHRMEBTAD
  AQH/MA0GCSqGSIb3DQEBCwUAA4IBAQBhhicfn9fY+PAxnVNn7R0PscxbYof4DVv3
  lqkkO6BCLkHUivljxjU7OYpxkva34vSuK1WVZf74Mbif7NkzVS3EG0+b0h+8EcQ+
  Fnv4qyKBfs8LG/V/A0ukAD5AYP098jsj5tmREbnFbMy7UojVEK54w6262iefvg0b
  uT5I0Y3jLljIlsxSbX4tTXjX0X/KHXK4PJ7hqdRLXnD4CgWKHjU6yNQS+sZg83VC
  jsZpKl5eSBqOdXB1CFteZm571/AXlagcyGf9hvK4fV2ybQoOxgkZt9zyUvtm3myb
  S5FAo4B5IvEhkge+jvolj31AWnB4v6GX0TgWotJd52GUpWDJDr5T
  -----END CERTIFICATE-----
imageContentSources:
- mirrors:
  - registry.redhat.ren/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.redhat.ren/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
```

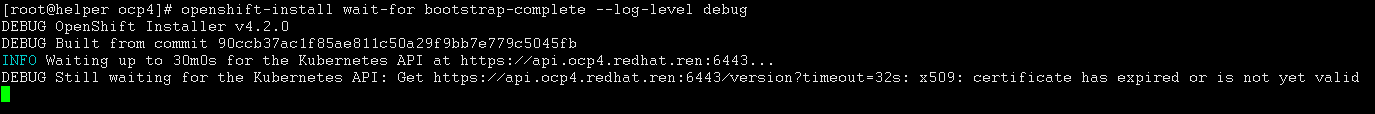
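You can rule out this mismatch before generating the ignition files by comparing the bundle in install-config.yaml with the certificate file. This is a minimal sketch. It assumes the bundle holds a single PEM certificate copied verbatim from /etc/crts/redhat.ren.crt, and it compares text, not parsed certificates. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# normalize: strip leading/trailing whitespace (including CR) and drop blank lines.
normalize() {
  sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' | grep -v '^$'
}

# bundle_from_config FILE: print the indented block under
# "additionalTrustBundle: |", stopping at the next top-level key.
bundle_from_config() {
  awk '
    /^additionalTrustBundle:/ { inblk = 1; next }
    inblk && /^[^ \t]/        { inblk = 0 }
    inblk                     { print }
  ' "$1" | normalize
}

# same_cert INSTALL_CONFIG CRT: succeed if the trust bundle matches the CRT file.
same_cert() {
  diff -q <(bundle_from_config "$1") <(normalize < "$2") > /dev/null
}

# Usage (before `create ignition-configs`, which consumes install-config.yaml):
#   same_cert /root/ocp4/install-config.yaml /etc/crts/redhat.ren.crt ||
#     echo "additionalTrustBundle does not match the registry certificate"
#   openssl s_client -connect registry.redhat.ren:443 \
#     -CAfile /etc/crts/redhat.ren.crt < /dev/null
```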

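6. Certificate expiry problem

When I checked the installation progress from the command line on the helper, the certificates had expired. The fix is to delete everything generated by `openshift-install create ignition-configs` and start over from this step:

```bash
/bin/rm -rf *.ign .openshift_install_state.json auth bootstrap master0 master1 master2 worker0 worker1 worker2
openshift-install create ignition-configs --dir=/root/ocp4
```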
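You may need to repeat the cleanup and regeneration, so the two commands can be wrapped in a function. One thing to watch: openshift-install consumes install-config.yaml when it generates the ignition configs. For that reason, this sketch first restores the file from a backup copy kept outside the install directory. `regen_ignition` and the backup path are hypothetical.

```bash
#!/usr/bin/env bash
# regen_ignition DIR BACKUP_YAML: wipe the previous ignition output in DIR,
# restore install-config.yaml from BACKUP_YAML, and regenerate.
regen_ignition() {
  local dir=$1 backup=$2
  if [ ! -f "$backup" ]; then
    echo "install-config backup not found: $backup" >&2
    return 1
  fi
  # Remove the same files and directories as the rm command above.
  ( cd "$dir" && /bin/rm -rf ./*.ign .openshift_install_state.json auth \
      bootstrap master0 master1 master2 worker0 worker1 worker2 ) || return 1
  # install-config.yaml was consumed by the previous run; put it back.
  cp "$backup" "$dir/install-config.yaml"
  openshift-install create ignition-configs --dir="$dir"
}

# Usage (hypothetical backup location):
#   regen_ignition /root/ocp4 /root/install-config.yaml.bak
```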

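In my case, the root cause was that the machines' clocks were out of sync. Once I set up time synchronization and redid the steps above, the problem went away.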
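The OpenShift documentation notes that the certificates embedded in the generated ignition configs expire after 24 hours. Hosts whose clocks disagree can therefore see them as already expired or not yet valid. A rough check is to collect `date +%s` from each machine and compute the spread. This is a sketch only: `max_skew` is a hypothetical helper, the host list in the usage comment is a placeholder, and the result includes SSH latency.

```bash
#!/usr/bin/env bash
# max_skew EPOCH...: print the spread (max - min) of Unix timestamps, in seconds.
max_skew() {
  local min=$1 max=$1 t
  for t in "$@"; do
    (( t < min )) && min=$t
    (( t > max )) && max=$t
  done
  echo $(( max - min ))
}

# Usage (HOSTS is a placeholder; list your KVM hosts and the helper):
#   HOSTS="192.168.7.1 192.168.7.11"
#   times=(); for h in $HOSTS; do times+=("$(ssh "root@$h" date +%s)"); done
#   max_skew "${times[@]}"
#   chronyc tracking    # on each host, confirm chrony is synchronized
```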

7. image-registry-storage problem

During the installation, run

```bash
bash ocp4-upi-helpernode-master/files/nfs-provisioner-setup.sh
```

This creates the nfs-provisioner project and deploys a pod that provisions PVCs.

Wait until that pod is running (check its status), then:

```bash
oc edit configs.imageregistry.operator.openshift.io
# edit the storage section so that it reads:
# storage:
#   pvc:
#     claim:
```

In other words, leave `claim` empty; before the edit it pointed to the image-registry-storage PVC. After the change, a PVC named image-registry-storage should be created.
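You can make the same change non-interactively with `oc patch`, which helps when it has to be repeated. This is a sketch. The registry operator's config object is named `cluster`, and `clear_registry_claim` is a hypothetical wrapper.

```bash
#!/usr/bin/env bash
# clear_registry_claim: set spec.storage.pvc.claim to "" on the image registry
# operator config, so that the operator creates the image-registry-storage PVC.
clear_registry_claim() {
  oc patch configs.imageregistry.operator.openshift.io cluster --type merge \
    -p '{"spec":{"storage":{"pvc":{"claim":""}}}}'
}

# Usage:
#   clear_registry_claim
#   oc get configs.imageregistry.operator.openshift.io cluster -o jsonpath='{.spec.storage}'
```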

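You can check whether this worked with

```bash
oc get pvc --all-namespaces
```

The PVC requests 100 GB. If the disk does not have that much free space, the PVC stays Pending. In that case,

```bash
oc get clusteroperator image-registry
```

also reports False, and the image-registry pod stays Pending, which blocks the rest of the cluster installation.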

If the state is wrong, delete the PVC first and then edit configs.imageregistry.operator.openshift.io again. This triggers the PVC to be created again.
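To spot a PVC stuck in Pending without scanning the whole list, filter `oc get pvc --all-namespaces --no-headers` on its STATUS column. STATUS is the third column, and the first three columns (NAMESPACE, NAME, STATUS) are always populated. `pending_pvcs` is a hypothetical helper. The delete command in the usage comment assumes the PVC lives in the `openshift-image-registry` namespace, where the registry operator runs.

```bash
#!/usr/bin/env bash
# pending_pvcs: read `oc get pvc --all-namespaces --no-headers` on stdin and
# print NAMESPACE/NAME for every PVC whose STATUS is Pending.
pending_pvcs() {
  awk '$3 == "Pending" { print $1 "/" $2 }'
}

# Usage:
#   oc get pvc --all-namespaces --no-headers | pending_pvcs
#   # if image-registry-storage is listed, delete it and edit the config again:
#   oc delete pvc image-registry-storage -n openshift-image-registry
#   oc edit configs.imageregistry.operator.openshift.io
```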

Run the following only after the image-registry cluster operator (co) reports True:

```bash
openshift-install wait-for install-complete
```

Then wait for the installation to continue.
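You can script the wait for the operator by polling its Available condition. This sketch assumes `oc` is already logged in (KUBECONFIG set). `co_available` and `wait_co` are hypothetical names. The jsonpath filter reads the `Available` entry of `.status.conditions`.

```bash
#!/usr/bin/env bash
# co_available NAME: print the status (True/False/Unknown) of the cluster
# operator's Available condition.
co_available() {
  oc get clusteroperator "$1" \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}'
}

# wait_co NAME TRIES INTERVAL: poll until NAME reports Available=True.
wait_co() {
  local name=$1 tries=$2 interval=$3 i
  for (( i = 1; i <= tries; i++ )); do
    [ "$(co_available "$name" 2>/dev/null)" = "True" ] && return 0
    (( i < tries )) && sleep "$interval"
  done
  return 1
}

# Usage (from /root/ocp4 with KUBECONFIG=auth/kubeconfig):
#   wait_co image-registry 60 10 && openshift-install wait-for install-complete
```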

8. DNS configuration

The generated zonefile.db stays unchanged. To resolve registry.redhat.ren, I added a separate registry.zonefile.db.

/etc/named.conf:

```
########### Add what's between these comments ###########
zone "ocp4.redhat.ren" IN {
    type master;
    file "zonefile.db";
};

zone "7.168.192.in-addr.arpa" IN {
    type master;
    file "reverse.db";
};
########################################################

zone "redhat.ren" IN {
    type master;
    file "registry.zonefile.db";
};
```

```
[root@helper named]# cat registry.zonefile.db
$TTL 1W
@   IN  SOA  ns1.redhat.ren.  root (
             2019120205  ; serial
             3H          ; refresh (3 hours)
             30M         ; retry (30 minutes)
             2W          ; expiry (2 weeks)
             1W )        ; minimum (1 week)
    IN  NS   ns1.redhat.ren.
    IN  MX 10  smtp.redhat.ren.
;
;
ns1       IN  A  192.168.7.11
smtp      IN  A  192.168.7.11
;
registry  IN  A  192.168.7.1
;
;EOF
```

```
[root@helper named]# cat reverse.db
$TTL 1W
@   IN  SOA  ns1.ocp4.redhat.ren.  root (
             2019120205  ; serial
             3H          ; refresh (3 hours)
             30M         ; retry (30 minutes)
             2W          ; expiry (2 weeks)
             1W )        ; minimum (1 week)
    IN  NS   ns1.ocp4.redhat.ren.
;
; syntax is "last octet" and the host must have fqdn with trailing dot
13  IN  PTR  master-0.ocp4.redhat.ren.
14  IN  PTR  master-1.ocp4.redhat.ren.
15  IN  PTR  master-2.ocp4.redhat.ren.
;
12  IN  PTR  bootstrap.ocp4.redhat.ren.
;
11  IN  PTR  api.ocp4.redhat.ren.
11  IN  PTR  api-int.ocp4.redhat.ren.
;
16  IN  PTR  worker-0.ocp4.redhat.ren.
17  IN  PTR  worker-1.ocp4.redhat.ren.
18  IN  PTR  worker-2.ocp4.redhat.ren.
;
1   IN  PTR  registry.redhat.ren.
;EOF
```
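As the comment in reverse.db says, each PTR record is just the last octet of the address plus the FQDN with a trailing dot. The sketch below generates such a line and checks a record against the helper's DNS server. It makes three assumptions: the helper (ns1) answers on 192.168.7.11, `dig` is installed, and the zone files live in named's default directory /var/named. `ptr_record` and `check_ptr` are hypothetical names.

```bash
#!/usr/bin/env bash
# ptr_record IP FQDN: print a reverse.db line, e.g. "13 IN PTR master-0.ocp4.redhat.ren."
ptr_record() {
  printf '%s IN PTR %s.\n' "${1##*.}" "${2%.}"
}

# check_ptr IP FQDN [SERVER]: succeed if SERVER returns FQDN among the PTRs for IP.
# grep -x is used because some addresses (e.g. 192.168.7.11) have several PTRs.
check_ptr() {
  dig +short -x "$1" @"${3:-192.168.7.11}" | grep -qxF "${2%.}."
}

# Usage:
#   ptr_record 192.168.7.16 worker-0.ocp4.redhat.ren
#   check_ptr 192.168.7.1 registry.redhat.ren && echo "registry PTR ok"
#   named-checkconf /etc/named.conf
#   named-checkzone redhat.ren /var/named/registry.zonefile.db
#   named-checkzone 7.168.192.in-addr.arpa /var/named/reverse.db
```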

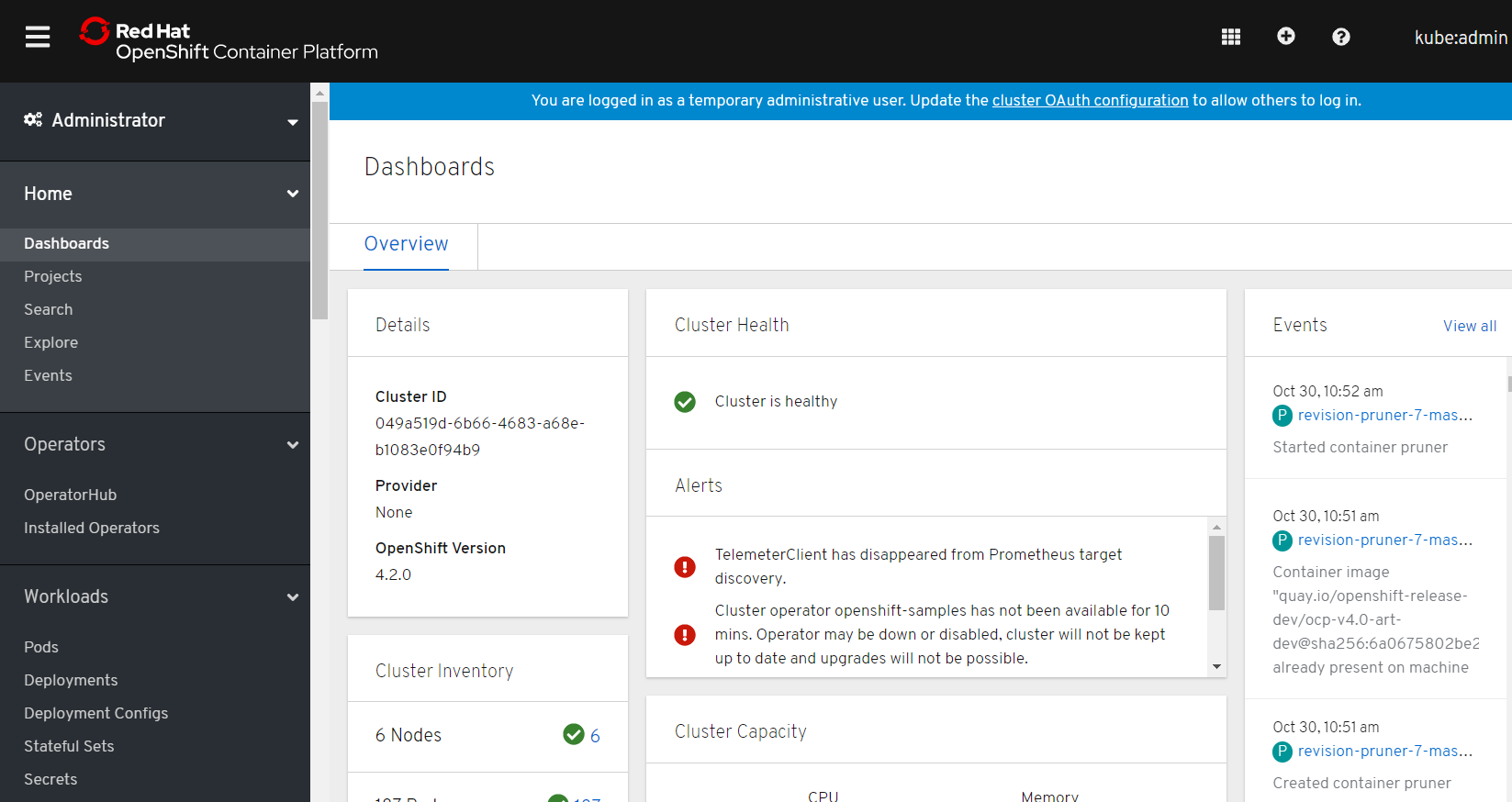

After the installation, open

https://console-openshift-console.apps.ocp4.redhat.ren

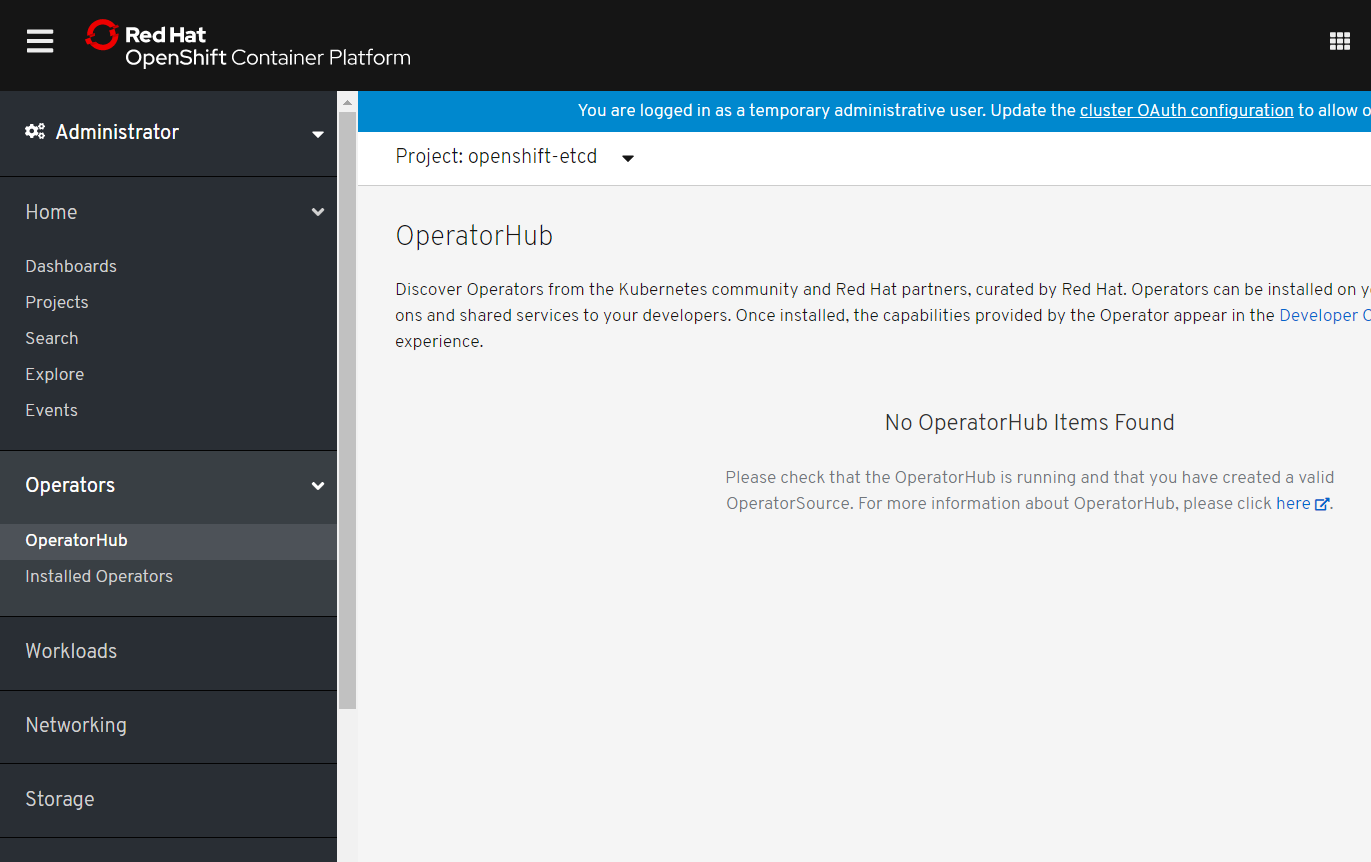

The one shortcoming is that OperatorHub is empty; its content also has to be installed offline.

On the helper machine:

```
cd ~/ocp4
export KUBECONFIG=auth/kubeconfig
[root@helper ocp4]# oc get nodes
NAME                       STATUS   ROLES    AGE   VERSION
master-0.ocp4.redhat.ren   Ready    master   71m   v1.14.6+c07e432da
master-1.ocp4.redhat.ren   Ready    master   71m   v1.14.6+c07e432da
master-2.ocp4.redhat.ren   Ready    master   71m   v1.14.6+c07e432da
worker-0.ocp4.redhat.ren   Ready    worker   71m   v1.14.6+c07e432da
worker-1.ocp4.redhat.ren   Ready    worker   71m   v1.14.6+c07e432da
worker-2.ocp4.redhat.ren   Ready    worker   71m   v1.14.6+c07e432da
```
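With six nodes, one that is not Ready is easy to miss. This sketch reads `oc get nodes --no-headers` and succeeds only when the expected number of nodes are listed and all of them are Ready. `nodes_ready` is a hypothetical helper. A node shown as `Ready,SchedulingDisabled` is deliberately counted as not ready.

```bash
#!/usr/bin/env bash
# nodes_ready EXPECTED: read `oc get nodes --no-headers` on stdin; succeed only
# if exactly EXPECTED nodes are listed and every one has STATUS "Ready".
nodes_ready() {
  awk -v want="$1" '
    { total++; if ($2 == "Ready") ready++ }
    END { exit !(total == want && ready == want) }
  '
}

# Usage:
#   oc get nodes --no-headers | nodes_ready 6 && echo "all 6 nodes are Ready"
```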

The installation setup: