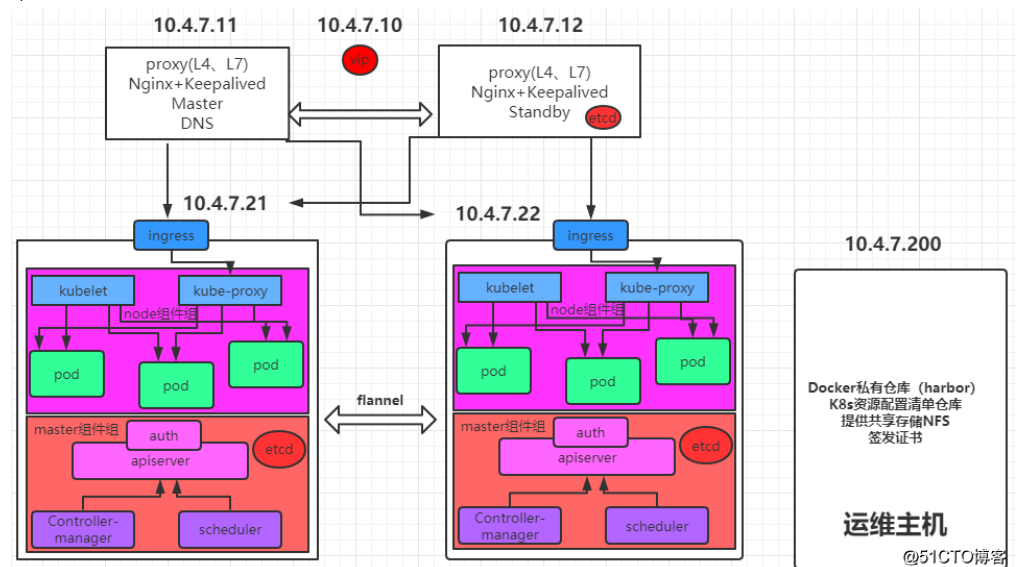

Contents

0. Architecture diagram

1. Kubernetes service discovery add-on: CoreDNS

- CoreDNS automatically associates each Service's name with its cluster IP, so Services can be discovered by name inside the cluster.
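CoreDNS answers for Services under the pattern `<service>.<namespace>.svc.<cluster domain>`, and the cluster domain in this setup is `cluster.local` (the `kubernetes cluster.local ...` line in configmap.yaml). A minimal bash sketch of that mapping; the function name `svc_fqdn` is hypothetical and only illustrates the naming convention.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the DNS name CoreDNS serves for a Service.
# Pattern: <service>.<namespace>.svc.<cluster-domain>
# The cluster domain defaults to cluster.local (assumed, matching this setup's Corefile).
svc_fqdn() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}
```

For example, `svc_fqdn nginx-dp kube-public` prints `nginx-dp.kube-public.svc.cluster.local`, the name queried with `dig` in section 1.8.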

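Steps:

- On the ops host, create the resource manifests
- Pull the coredns image and push it to the private registry
- Configure the internal DNS
- On a node, apply the resource manifests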

1.1 Architecture for this section

| Hostname | IP | Role | Host type |
|---|---|---|---|
| hdss7-200.host.com | 10.4.7.200 | Hosts the resource manifests | Ops host |
| hdss7-11.host.com | 10.4.7.11 | Internal DNS resolution | DNS host |
| hdss7-21.host.com | 10.4.7.21 | Applies the resource manifests | Node |

1.2 Deploy an internal HTTP service for Kubernetes resource manifests

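This gives the cluster a single HTTP entry point for Kubernetes resource manifests; from here on, every manifest is simply placed under /data/k8s-yaml on the ops host.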

On hdss7-200.host.com:

```shell
cat > /etc/nginx/conf.d/k8s-yaml.od.com.conf << 'eof'
server {
    listen       80;
    server_name  k8s-yaml.od.com;

    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}
eof

nginx -s reload
```
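Because `root` points at /data/k8s-yaml and `autoindex` is on, a file at /data/k8s-yaml/<path> should be reachable at http://k8s-yaml.od.com/<path> once the DNS record from section 1.5 exists. A small bash sketch of that path-to-URL mapping; the helper `yaml_url` and the `YAML_ROOT`/`YAML_HOST` variables are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate a manifest path on hdss7-200 into the URL
# served by the k8s-yaml.od.com nginx vhost (root /data/k8s-yaml).
YAML_ROOT="${YAML_ROOT:-/data/k8s-yaml}"
YAML_HOST="${YAML_HOST:-k8s-yaml.od.com}"

yaml_url() {
  local path="$1"
  case "$path" in
    "$YAML_ROOT"/*) path="${path#"$YAML_ROOT"/}" ;;   # strip the nginx root
    /*) echo "not under $YAML_ROOT: $path" >&2; return 1 ;;
  esac
  # Relative paths are taken as already relative to the root
  printf 'http://%s/%s\n' "$YAML_HOST" "$path"
}
```

For instance, `yaml_url /data/k8s-yaml/coredns/rbac.yaml` gives the URL used with `kubectl apply -f` in section 1.7. Running `nginx -t` before `nginx -s reload` is a cheap way to catch syntax errors in the new vhost first.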

1.3 Prepare the resource manifests

- rbac.yaml: role-based access control (RBAC) manifest
- configmap.yaml: configuration (ConfigMap) manifest
- deployment.yaml: controller (Deployment) manifest
- svc.yaml: Service manifest

Upstream reference manifest: https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/coredns/coredns.yaml.base

1.3.1 Prepare rbac.yaml

```shell
mkdir -p /data/k8s-yaml/coredns && cd /data/k8s-yaml/coredns

cat > /data/k8s-yaml/coredns/rbac.yaml << 'eof'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
eof
```
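The ClusterRoleBinding grants `system:coredns` to the ServiceAccount `coredns` in `kube-system`. Kubernetes identifies a ServiceAccount as the user `system:serviceaccount:<namespace>:<name>`, so once rbac.yaml is applied the grant can be checked by impersonation with `kubectl auth can-i ... --as=<that user>`. A bash sketch; the helper `sa_user` is hypothetical, and the kubectl loop is left commented out because it needs cluster access.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the username Kubernetes assigns to a ServiceAccount,
# usable with `kubectl auth can-i --as=...` for impersonation checks.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Example check (requires cluster access and impersonation rights):
# for r in endpoints services pods namespaces; do
#   kubectl auth can-i list "$r" --as="$(sa_user kube-system coredns)"
# done
```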

1.3.2 configmap.yaml: ConfigMap manifest

```shell
cat > /data/k8s-yaml/coredns/configmap.yaml << 'eof'
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        kubernetes cluster.local 192.168.0.0/16
        proxy . /etc/resolv.conf
        cache 30
    }
eof
```
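The `proxy` plugin in this Corefile works with the v1.3.1 image used here, but to my knowledge it was deprecated in later CoreDNS releases and dropped from the default build in 1.5.0, with `forward` as its replacement. If a newer image is deployed, a variant along these lines should be needed; it is a sketch under that assumption, and it writes to a hypothetical `OUT` path rather than overwriting configmap.yaml.

```shell
#!/usr/bin/env bash
# Sketch (assumption: a CoreDNS image >= 1.5.0, where `proxy` is no longer built in).
# Same ConfigMap as configmap.yaml, but upstream queries go through `forward`.
OUT="${OUT:-./configmap-forward.yaml}"   # hypothetical output path
cat > "$OUT" << 'eof'
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        kubernetes cluster.local 192.168.0.0/16
        forward . /etc/resolv.conf
        cache 30
    }
eof
```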

1.3.3 deployment.yaml: Deployment (controller) manifest

```shell
cat > /data/k8s-yaml/coredns/deployment.yaml << 'eof'
# apps/v1 replaces extensions/v1beta1, which Kubernetes 1.16+ no longer serves for Deployments
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/k8s/coredns:v1.3.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      # Inherit the node's /etc/resolv.conf, so upstream lookups go to the node's DNS
      dnsPolicy: Default
      imagePullSecrets:
      - name: harbor   # Secret created in section 1.7
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
eof
```

1.3.4 svc.yaml: Service manifest

```shell
cat > /data/k8s-yaml/coredns/svc.yaml << 'eof'
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
eof
```
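The fixed `clusterIP: 192.168.0.2` has to lie inside the Service CIDR, assumed here to be 192.168.0.0/16 (the range given to the `kubernetes` plugin in the Corefile, which also contains the 192.168.109.134 Service IP seen in section 1.8). It should also equal the cluster DNS address kubelet writes into Pods (kubelet's `--cluster-dns` setting), which is why Pods later show `nameserver 192.168.0.2`. A pure-bash sketch of the CIDR check; `ip_in_cidr` and `ip_to_int` are hypothetical helpers, IPv4 only, without input validation.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: test whether an IPv4 address lies inside a CIDR block.
ip_to_int() {
  local IFS=.
  local -a o=($1)          # split dotted quad on "."
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  # Same network prefix after masking => address is inside the block
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}
```

`ip_in_cidr 192.168.0.2 192.168.0.0/16` succeeds, while a node address such as 10.4.7.21 or a Pod address such as 172.7.21.3 does not.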

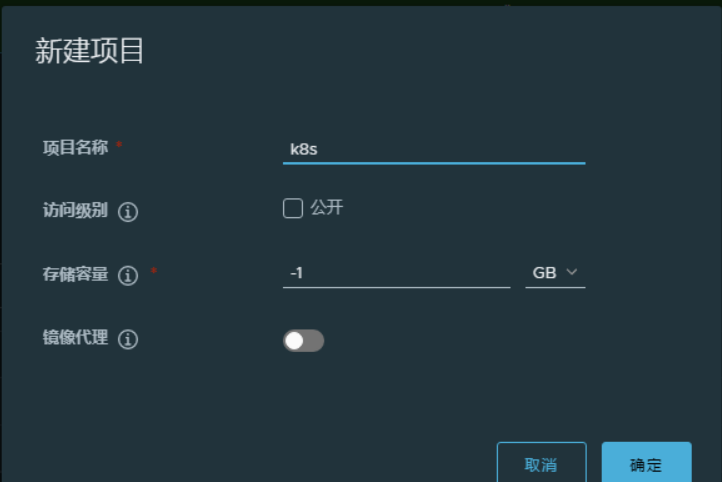

1.4 Pull kube-dns (CoreDNS) into the local registry

Create a project named k8s in the Harbor web UI.

```shell
docker pull coredns/coredns:1.3.1

docker images | grep coredns
# coredns/coredns   1.3.1   eb516548c180   20 months ago   40.3MB

docker tag eb516548c180 harbor.od.com/k8s/coredns:v1.3.1
docker push harbor.od.com/k8s/coredns:v1.3.1
```
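Tagging by image ID works, but it means reading the ID out of `docker images` first; tagging by the source name does the same job in one step. A sketch of the pull/tag/push sequence as a bash function; `mirror_image` and the `DOCKER` variable are hypothetical, and it assumes `docker login harbor.od.com` has already been done. Setting `DOCKER=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a public image into the local Harbor project.
# DOCKER defaults to docker; DOCKER=echo gives a dry run that only prints.
mirror_image() {
  local src="$1" dst="$2" docker="${DOCKER:-docker}"
  "$docker" pull "$src" &&
    "$docker" tag "$src" "$dst" &&
    "$docker" push "$dst"
}

# Usage matching this section:
# mirror_image coredns/coredns:1.3.1 harbor.od.com/k8s/coredns:v1.3.1
```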

1.5 Configure internal DNS resolution

On hdss7-11.host.com:

```shell
cat >> /var/named/od.com.zone << 'eof'
k8s-yaml           A    10.4.7.200
eof

# Edit the zone file and increment the date-based SOA serial by 1, e.g. so it reads:
#     2020092703 ; serial
vi /var/named/od.com.zone

systemctl restart named
```
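BIND uses the SOA serial to signal that a zone has changed, so it should go up on every edit. The manual `vi` step can be scripted; below is a bash sketch that assumes, as in od.com.zone, the serial sits on its own line annotated `; serial`. The helper `bump_serial` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: increment the SOA serial of a BIND zone file by 1.
# Assumes the serial is on its own line annotated "; serial".
bump_serial() {
  local zone="$1" old new tmp
  old="$(grep -m1 -E '^[[:space:]]*[0-9]+[[:space:]]*;[[:space:]]*serial' "$zone" | grep -oE '[0-9]+' | head -n1)"
  [ -n "$old" ] || { echo "no '; serial' line in $zone" >&2; return 1; }
  new=$(( 10#$old + 1 ))
  tmp="$(mktemp)" || return 1
  # Rewrite via a temp file and copy back with cat, so the zone file keeps
  # its owner/group and mode (named must still be able to read it).
  if sed "s/^\([[:space:]]*\)$old\([[:space:]]*;[[:space:]]*serial\)/\1$new\2/" "$zone" > "$tmp" &&
     cat "$tmp" > "$zone"; then
    rm -f "$tmp"
    echo "$old -> $new"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

A possible sequence is `bump_serial /var/named/od.com.zone && named-checkzone od.com /var/named/od.com.zone && systemctl restart named`, so a typo in the zone is caught before named is restarted.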

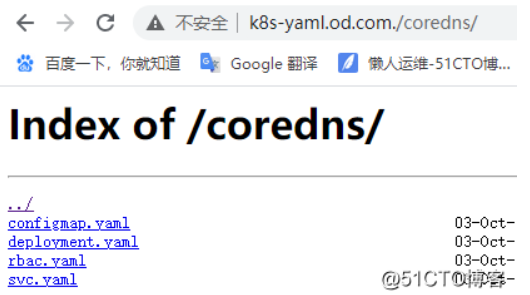

1.6 Test access

1.7 Deploy kube-dns (CoreDNS)

Run on either node, hdss7-21 or hdss7-22:

```shell
# Registry pull secret named harbor; deployment.yaml references it under imagePullSecrets
kubectl create secret docker-registry harbor \
  --docker-server=harbor.od.com \
  --docker-username=admin \
  --docker-password=Harbor12345 \
  --docker-email=1934844044@qq.com \
  -n kube-system

kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml
kubectl apply -f http://k8s-yaml.od.com/coredns/configmap.yaml
kubectl apply -f http://k8s-yaml.od.com/coredns/deployment.yaml
kubectl apply -f http://k8s-yaml.od.com/coredns/svc.yaml
```
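The manifests are applied in dependency order: the Deployment runs as the `coredns` ServiceAccount and mounts the `coredns` ConfigMap, so creating those first avoids Pods stalling while they are missing; the Service comes last. A bash loop sketch of the same four steps; `apply_coredns` and the `KUBECTL`/`BASE_URL` variables are hypothetical, and `KUBECTL=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the CoreDNS manifests served by k8s-yaml.od.com in order.
# Stops at the first failure so later manifests are not applied on a broken base.
apply_coredns() {
  local kubectl="${KUBECTL:-kubectl}" base="${BASE_URL:-http://k8s-yaml.od.com/coredns}" f
  for f in rbac configmap deployment svc; do
    "$kubectl" apply -f "$base/$f.yaml" || return 1
  done
}
```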

1.8 Verify

```shell
[root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public --replicas=1
[root@hdss7-21 ~]# kubectl expose deployment nginx-dp --port=80 -n kube-public
[root@hdss7-21 ~]# kubectl get svc -n kube-public            # the new Service's cluster IP ends in .134
NAME       TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)   AGE
nginx-dp   ClusterIP   192.168.109.134   <none>        80/TCP    58m
[root@hdss7-21 ~]# kubectl get pods -n kube-system -o wide   # CoreDNS is installed in kube-system
NAME                       READY   STATUS    RESTARTS   AGE         IP           NODE                NOMINATED NODE   READINESS GATES
coredns-57c78bdbcd-lsf5z   1/1     Running   0          <invalid>   172.7.21.3   hdss7-21.host.com   <none>           <none>
[root@hdss7-21 ~]# kubectl get svc -n kube-system            # the coredns Service is also in kube-system
NAME      TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE
coredns   ClusterIP   192.168.0.2   <none>        53/UDP,53/TCP   4m30s
[root@hdss7-21 ~]# dig -t A nginx-dp.kube-public.svc.cluster.local @192.168.0.2 +short
192.168.109.134
# The name resolves, but it is only usable inside the cluster, because cluster IPs are reachable only on the internal network
[root@hdss7-21 ~]# dig -t A www.baidu.com @192.168.0.2 +short   # resolve an external name (Baidu)
www.a.shifen.com.
14.215.177.38
14.215.177.39
[root@hdss7-21 ~]# kubectl exec -ti nginx-dp-7f74c75ff9-jtzhs -n kube-public -- /bin/bash
root@nginx-dp-7f74c75ff9-jtzhs:/# cat /etc/resolv.conf   # inside the container, the DNS config includes a search list
nameserver 192.168.0.2
search kube-public.svc.cluster.local svc.cluster.local cluster.local host.com
options ndots:5
root@nginx-dp-7f74c75ff9-jtzhs:/# ping nginx-dp          # ping the short name from inside the container
PING nginx-dp.kube-public.svc.cluster.local (192.168.109.134): 48 data bytes
56 bytes from 192.168.109.134: icmp_seq=0 ttl=64 time=0.234 ms
```
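The short name `nginx-dp` works because of the `search` list and `options ndots:5`: with glibc-style resolvers, a name containing fewer than five dots is tried with each search suffix appended first and only afterwards as typed, while a name with at least five dots is tried as typed first. A simplified bash model of that query order; the helper `search_order` is hypothetical and ignores resolver details beyond the trailing-dot rule.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the names a glibc-style stub resolver would try,
# given ndots and the search list from /etc/resolv.conf. Simplified model.
search_order() {
  local name="$1" ndots="$2"; shift 2
  local dots="${name//[^.]/}" d          # keep only the dots to count them
  if [[ "$name" == *. ]]; then           # trailing dot: absolute name, no search
    echo "$name"; return
  fi
  if (( ${#dots} >= ndots )); then echo "$name"; fi
  for d in "$@"; do echo "$name.$d"; done
  if (( ${#dots} < ndots )); then echo "$name"; fi
}
```

`search_order nginx-dp 5 kube-public.svc.cluster.local svc.cluster.local cluster.local host.com` starts with `nginx-dp.kube-public.svc.cluster.local`, matching the name `ping` resolved. The same rule means external names such as www.baidu.com are first tried under the cluster suffixes, which is why a trailing dot (`www.baidu.com.`) skips the search list entirely.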