The code snippets below are from my notes while following Mofan's videos on Bilibili:

Example 2:

    import tensorflow as tf
    import numpy as np

    # create data
    x_data = np.random.rand(100).astype(np.float32)
    y_data = x_data * 0.1 + 0.3

    # create tensorflow structure start
    # Weights starts as a random value in [-1, 1), biases starts at 0
    Weights = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
    biases = tf.Variable(tf.zeros([1]))

    y = Weights * x_data + biases

    loss = tf.reduce_mean(tf.square(y - y_data))
    optimizer = tf.train.GradientDescentOptimizer(0.5)
    train = optimizer.minimize(loss)

    init = tf.global_variables_initializer()
    # create tensorflow structure end

    sess = tf.Session()
    sess.run(init)  # Very important

    for step in range(201):
        sess.run(train)
        if step % 20 == 0:
            # Weights should approach 0.1 and biases 0.3
            print(step, sess.run(Weights), sess.run(biases))
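To see what the optimizer in Example 2 is doing, here is a minimal NumPy-only sketch of the same fit. It is my own illustration rather than anything from the video: the gradients are written out by hand for the mean squared error, the fixed seed is an assumption added for repeatability, and the names `grad_W` and `grad_b` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed: an assumption, only for repeatability

# Same synthetic data as Example 2: y = 0.1 * x + 0.3
x_data = rng.random(100)
y_data = x_data * 0.1 + 0.3

W = rng.uniform(-1.0, 1.0)  # random start in [-1, 1)
b = 0.0
learning_rate = 0.5

for step in range(201):
    error = W * x_data + b - y_data
    # Hand-derived gradients of mean(error ** 2) with respect to W and b
    grad_W = 2.0 * np.mean(error * x_data)
    grad_b = 2.0 * np.mean(error)
    W -= learning_rate * grad_W
    b -= learning_rate * grad_b
    if step % 20 == 0:
        print(step, W, b)
```

The printed values should drift toward 0.1 and 0.3, which is the same behaviour the TensorFlow version shows.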

Session control:

    import numpy as np
    import tensorflow as tf

    state = tf.Variable(0, name="counter")
    one = tf.constant(1)

    # new_value = state + 1; assign writes the result back into state
    new_value = tf.add(state, one)
    update = tf.assign(state, new_value)

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        for _ in range(3):
            sess.run(update)
            print(sess.run(state))

placeholder:

    import numpy as np
    import tensorflow as tf

    input1 = tf.placeholder(tf.float32)
    input2 = tf.placeholder(tf.float32)
    res = tf.multiply(input1, input2)

    with tf.Session() as sess:
        # placeholder values are supplied at run time through feed_dict
        print(sess.run(res, feed_dict={input1: [7., 2.], input2: [2.]}))

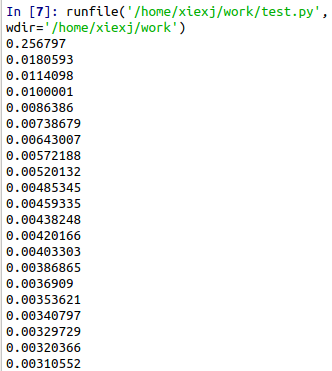
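In the placeholder example, `input1` receives two values and `input2` only one. `tf.multiply` broadcasts its arguments in the NumPy style, so the same arithmetic can be checked without a session. A small sketch (the variable names are only for illustration):

```python
import numpy as np

input1 = np.array([7., 2.], dtype=np.float32)
input2 = np.array([2.], dtype=np.float32)

# The length-1 array is broadcast against the length-2 array,
# giving an elementwise product of 14 and 4.
res = np.multiply(input1, input2)
print(res)
```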

Example 3: build a neural network (1 node in the input layer, 10 nodes in the hidden layer, 1 node in the output layer)

    import numpy as np
    import tensorflow as tf

    def add_layer(inputs, in_size, out_size, activation_function=None):
        Weights = tf.Variable(tf.random_normal([in_size, out_size]))
        biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        return outputs

    x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  # array to 300*1 matrix
    noise = np.random.normal(0, 0.05, x_data.shape)
    y_data = np.square(x_data) - 0.5 + noise

    xs = tf.placeholder(tf.float32, [None, 1])
    ys = tf.placeholder(tf.float32, [None, 1])

    # hidden layer: 1 -> 10 with ReLU; output layer: 10 -> 1, linear
    l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
    prediction = add_layer(l1, 10, 1, activation_function=None)

    loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), reduction_indices=[1]))
    train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)

    for i in range(1001):
        sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
        if i % 50 == 0:
            print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))

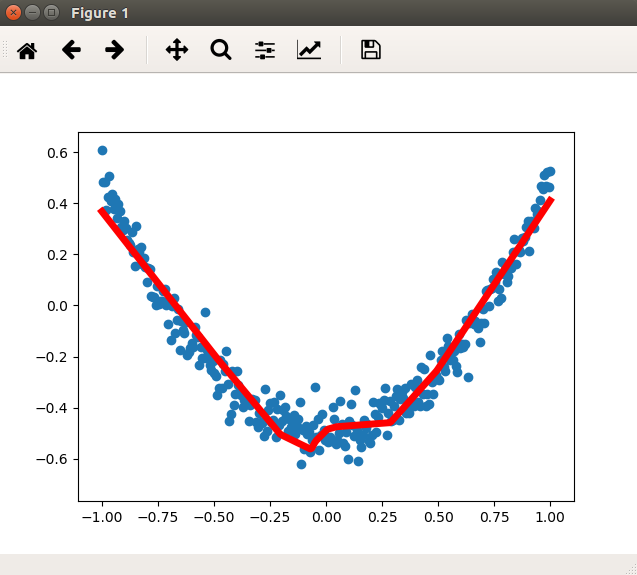
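To make the shapes in Example 3 easier to follow, here is a forward-pass-only sketch in NumPy. It does no training. It only mirrors what `add_layer` computes and how the loss is reduced. The helper names `add_layer_np` and `relu` are hypothetical, and the fixed seed is an assumption for repeatability.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed: an assumption, only for repeatability

def relu(z):
    return np.maximum(z, 0.0)

def add_layer_np(inputs, in_size, out_size, activation=None):
    # Same layout as add_layer: weights are (in_size, out_size), biases are (1, out_size)
    weights = rng.normal(size=(in_size, out_size))
    biases = np.zeros((1, out_size)) + 0.1
    wx_plus_b = inputs @ weights + biases
    return wx_plus_b if activation is None else activation(wx_plus_b)

x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  # shape (300, 1)
noise = rng.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

hidden = add_layer_np(x_data, 1, 10, activation=relu)  # shape (300, 10)
prediction = add_layer_np(hidden, 10, 1)               # shape (300, 1)

# Sum squared error per sample (axis=1), then average over the 300 samples
loss = np.mean(np.sum(np.square(y_data - prediction), axis=1))
print(hidden.shape, prediction.shape, loss)
```

With untrained random weights the loss is large. The TensorFlow version drives it down by repeatedly running `train_step`.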

Visualization:

    import matplotlib.pyplot as plt

    # ... (setup code from Example 3)

    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    ax.scatter(x_data, y_data)
    plt.ion()  # interactive mode, so plt.show() does not block the loop
    plt.show()

    for i in range(1001):
        sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
        if i % 50 == 0:
            # print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
            try:
                # remove the previous fitted curve; on the first pass
                # `lines` does not exist yet, so the error is ignored
                lines[0].remove()
            except Exception:
                pass
            prediction_value = sess.run(prediction, feed_dict={xs: x_data})
            lines = ax.plot(x_data, prediction_value, 'r-', lw=5)
            plt.pause(0.1)
    # plt.show()