课程地址:https://cloud.tencent.com/developer/labs/lab/10295/console

utf-8编码在做网络传输和文件保存的时候,将unicode编码转换成utf-8编码,才能更好的发挥其作用;

当从文件中读取数据到内存中的时候,将utf-8编码转换为unicode编码,亦为良策。

python中的字符串在内存中是用unicode进行编码的,所以在python实际编程处理时用的是unicode码

Python2.x的默认编码是ascii,所以python2需要在前面加utf-8声明, python3不需要,因为python3全部按照unicode编码

由于这篇教程给的腾讯云环境可能是python2,所以实际代码中加上了utf-8的声明

这里提供一个unicode编码转换网站,如“寒”字对应的unicode码是u5bd2,符合python中代码测试的结果

站长工具 > Unicode编码转换:http://tool.chinaz.com/tools/unicode.aspx

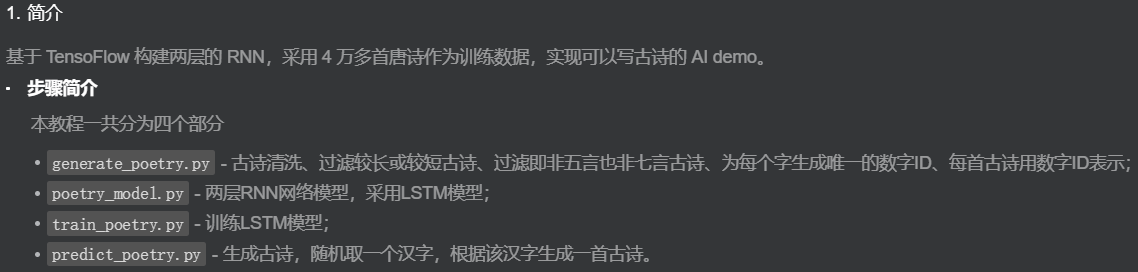

简介

数据学习

1.获取训练数据

wget 命令获取:wget http://tensorflow-1253675457.cosgz.myqcloud.com/poetry/poetry

2.数据预处理

def get_poetrys(self):

- 去换行符,去空格符,以冒号分隔题目和诗歌正文

- 得到诗歌正文后,再去一次空格

- 诗歌正文(加上标点)的字数小于5或大于79将不被使用

- 将正文中的,。替换为 |,再用 | 当分割符得到正文中的每一句unicode码

- 每一句字数不等于0,5,7的也要丢弃,如果符合要求则将上述第二步处理后的正文保存

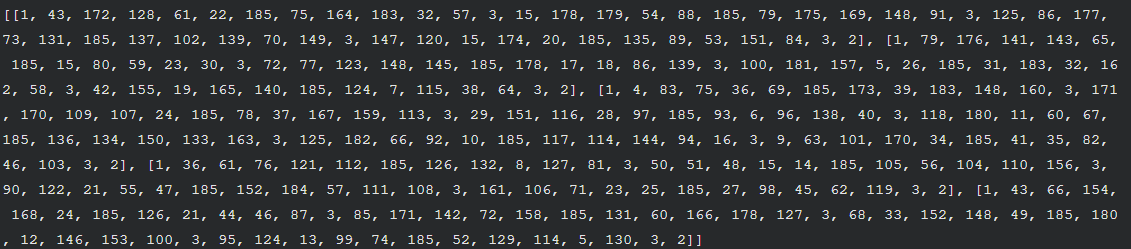

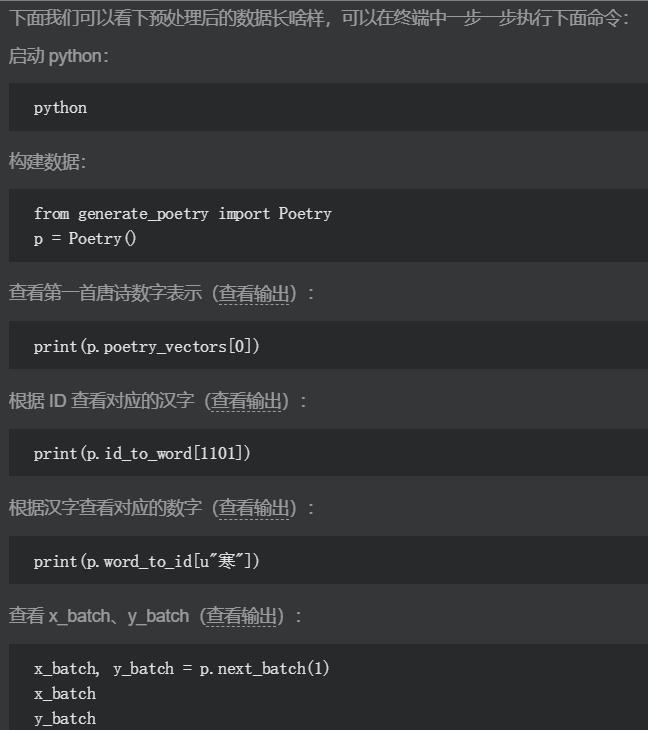

def gen_poetry_vector(self):

-

将列表中的所有汉字分隔成单个unicode码,加上空格符统一扔进set容器,之后排序可得到如下结果(前五首诗得到的排序字符集)

- 生成词典 id_to_word

- 生成字典 word_to_id

- 生成lambda表达式 to_id,为了之后能应用在函数式编程中

- 用map函数将self.poetrys中的每首诗的正文都转成对应id,没首诗一个列表,存进一个大列表

- 返回该大列表poetry_vector,下图为前五首诗

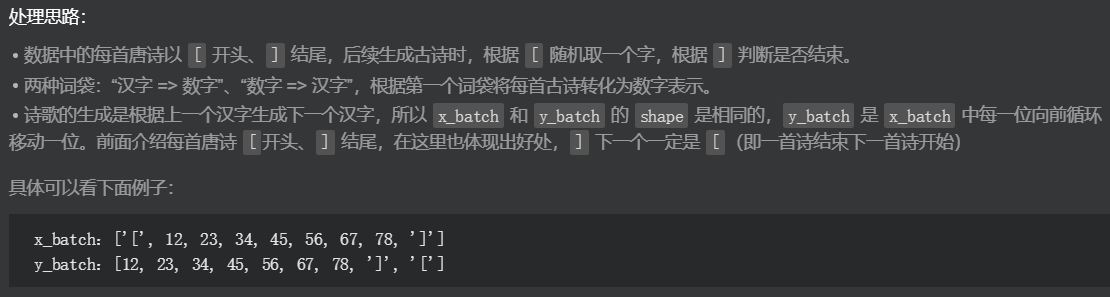

def next_batch(self,batch_size):

- 取batch_size首诗的数据至batches

- x_batch的shape为(batch_size, 诗的最大字数(包括符号)),初始化为全0的id值

- 对x_batch进行赋值

- 将x_batch直接拷贝给y_batch

- 对y_batch做少许修改,以满足其是x_batch循环左移一位得来

- 返回x_batch,y_batch

以上三个函数汇总起来就是第一个python文件

因为对有些python代码不太熟,我自己有一个功能调试的版本,取消print注释再修改最下方调用方法即可

test_generate_poetry.py (测试)

# -*- encoding: utf-8 -*- import numpy as np import sys from io import open reload(sys) sys.setdefaultencoding('utf8') class Poetry: def __init__(self): self.filename = "poetry" self.poetrys = self.get_poetrys() self.poetry_vectors, self.word_to_id, self.id_to_word = self.gen_poetry_vector() self.poetry_vectors_size = len(self.poetry_vectors) self._index_in_epoch = 0 def get_poetrys(self): poetrys = list() f = open(self.filename, "r", encoding="utf-8") for line in f.readlines()[:50]: # print(line) _, content = line.strip(' ').strip().split(':') content = content.replace(' ', '') # print(_, content) # title and content # print(len(content)) # symbols and chinese characters if(not content or '_' in content or '(' in content or '(' in content or "□" in content or '《' in content or '[' in content or ':' in content or ':'in content): continue if len(content) < 5 or len(content) > 79: continue content_list = content.replace(',', '|').replace('。', '|').split('|') # print(content_list) flag = True for sentence in content_list: slen = len(sentence) if slen == 0: continue if slen != 5 and slen != 7: flag = False break; if flag: poetrys.append('[' + content + ']') return poetrys def gen_poetry_vector(self): words = sorted(set(''.join(self.poetrys) + ' ')) # print(words) #sorted unicode sets id_to_word = {i: w for i, w in enumerate(words)} word_to_id = {w: i for i, w in id_to_word.items()} to_id = lambda word: word_to_id.get(word) poetry_vectors = [list(map(to_id, poetry)) for poetry in self.poetrys] # print(poetry_vectors) return poetry_vectors, word_to_id, id_to_word def next_batch(self, batch_size): assert batch_size < self.poetry_vectors_size start = self._index_in_epoch self._index_in_epoch += batch_size if self._index_in_epoch > self.poetry_vectors_size: np.random.shuffle(self.poetry_vectors) start = 0 self._index_in_epoch = batch_size end = self._index_in_epoch batches = self.poetry_vectors[start:end] # print(map(len, batches)) x_batch = np.full((batch_size, max(map(len, batches))), self.word_to_id[' '], np.int32) for row in range(batch_size): x_batch[row, :len(batches[row])] = batches[row] y_batch = np.copy(x_batch) y_batch[:, :-1] = x_batch[:, 1:] y_batch[:, -1] = x_batch[:, 0] return x_batch, y_batch p = Poetry() # p.next_batch(10) # x_batch, y_batch = p.next_batch(10) # print(x_batch, y_batch)

#-*- coding:utf-8 -*- import numpy as np from io import open import sys import collections reload(sys) sys.setdefaultencoding('utf8') class Poetry: def __init__(self): self.filename = "poetry" self.poetrys = self.get_poetrys() self.poetry_vectors,self.word_to_id,self.id_to_word = self.gen_poetry_vectors() self.poetry_vectors_size = len(self.poetry_vectors) self._index_in_epoch = 0 def get_poetrys(self): poetrys = list() f = open(self.filename,"r", encoding='utf-8') for line in f.readlines(): _,content = line.strip(' ').strip().split(':') content = content.replace(' ','') #过滤含有特殊符号的唐诗 if(not content or '_' in content or '(' in content or '(' in content or "□" in content or '《' in content or '[' in content or ':' in content or ':'in content): continue #过滤较长或较短的唐诗 if len(content) < 5 or len(content) > 79: continue content_list = content.replace(',', '|').replace('。', '|').split('|') flag = True #过滤即非五言也非七验的唐诗 for sentence in content_list: slen = len(sentence) if 0 == slen: continue if 5 != slen and 7 != slen: flag = False break if flag: #每首古诗以'['开头、']'结尾 poetrys.append('[' + content + ']') return poetrys def gen_poetry_vectors(self): words = sorted(set(''.join(self.poetrys) + ' ')) #数字ID到每个字的映射 id_to_word = {i: word for i, word in enumerate(words)} #每个字到数字ID的映射 word_to_id = {v: k for k, v in id_to_word.items()} to_id = lambda word: word_to_id.get(word) #唐诗向量化 poetry_vectors = [list(map(to_id, poetry)) for poetry in self.poetrys] return poetry_vectors,word_to_id,id_to_word def next_batch(self,batch_size): assert batch_size < self.poetry_vectors_size start = self._index_in_epoch self._index_in_epoch += batch_size #取完一轮数据,打乱唐诗集合,重新取数据 if self._index_in_epoch > self.poetry_vectors_size: np.random.shuffle(self.poetry_vectors) start = 0 self._index_in_epoch = batch_size end = self._index_in_epoch batches = self.poetry_vectors[start:end] x_batch = np.full((batch_size, max(map(len, batches))), self.word_to_id[' '], np.int32) for row in range(batch_size): x_batch[row,:len(batches[row])] = batches[row] y_batch = np.copy(x_batch) y_batch[:,:-1] = x_batch[:,1:] y_batch[:,-1] = x_batch[:, 0] return x_batch,y_batch

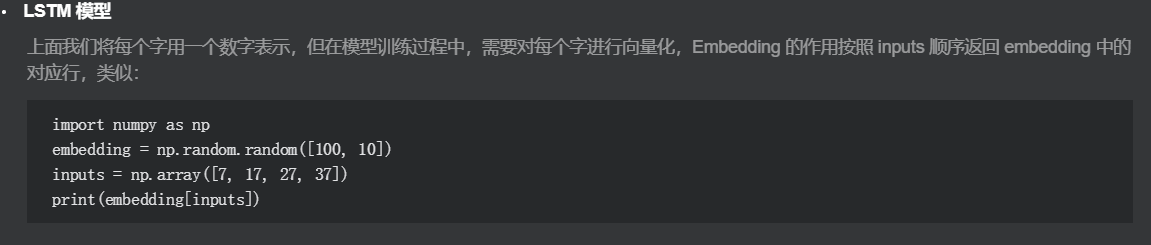

3.LSTM 模型 (建议在开始此部分之前先阅读一下参考博客21、22)

以下两种方法相同,但建议用第一种:

Class tf.contrib.rnn 新版

Class tf.nn.rnn_cell

def rnn_variable(self, rnn_size, words_size): 生成隐藏层到输出层的w,b

def rnn_variable(self, rnn_size, words_size): sequence_loss_by_example 基于 sparse_softmax_cross_entropy_with_logits

def optimizer_model(self, loss, learning_rate): 梯度规约,防止梯度爆炸

def embedding_variable(self, inputs, rnn_size, words_size): 通过inputs给出的id,返回embedding

def create_model(self, inputs, batch_size, rnn_size, words_size, num_layers, is_training, keep_prob):

outputs,last_state = tf.nn.dynamic_rnn(cell,input_data,initial_state=initial_state)

input_data: shape = (batch_size, time_steps, input_size)

此处的time_steps是batch_size首诗中最长的字符数(包括符号),input_size为128,也就是rnn_size

create_model中logits: shape = (batch_size*time_steps, word_size),这是为了计算损失函数时,第1维只需按word_size展开,反正之后会reduce_mean

以上五个函数和起来就是第二个py文件:poetry_model.py

#-*- coding:utf-8 -*- import tensorflow as tf class poetryModel: #定义权重和偏置项 def rnn_variable(self,rnn_size,words_size): with tf.variable_scope('variable'): w = tf.get_variable("w", [rnn_size, words_size]) b = tf.get_variable("b", [words_size]) return w,b #损失函数 def loss_model(self,words_size,targets,logits): targets = tf.reshape(targets,[-1]) loss = tf.contrib.legacy_seq2seq.sequence_loss_by_example([logits], [targets], [tf.ones_like(targets, dtype=tf.float32)],words_size) loss = tf.reduce_mean(loss) return loss #优化算子 def optimizer_model(self,loss,learning_rate): tvars = tf.trainable_variables() grads, _ = tf.clip_by_global_norm(tf.gradients(loss, tvars), 5) train_op = tf.train.AdamOptimizer(learning_rate) optimizer = train_op.apply_gradients(zip(grads, tvars)) return optimizer #每个字向量化 def embedding_variable(self,inputs,rnn_size,words_size): with tf.variable_scope('embedding'): with tf.device("/cpu:0"): embedding = tf.get_variable('embedding', [words_size, rnn_size]) input_data = tf.nn.embedding_lookup(embedding,inputs) return input_data #构建LSTM模型 def create_model(self,inputs,batch_size,rnn_size,words_size,num_layers,is_training,keep_prob): lstm = tf.contrib.rnn.BasicLSTMCell(num_units=rnn_size,state_is_tuple=True) input_data = self.embedding_variable(inputs,rnn_size,words_size) if is_training: lstm = tf.nn.rnn_cell.DropoutWrapper(lstm, output_keep_prob=keep_prob) input_data = tf.nn.dropout(input_data,keep_prob) cell = tf.contrib.rnn.MultiRNNCell([lstm] * num_layers,state_is_tuple=True) initial_state = cell.zero_state(batch_size, tf.float32) outputs,last_state = tf.nn.dynamic_rnn(cell,input_data,initial_state=initial_state) outputs = tf.reshape(outputs,[-1, rnn_size]) w,b = self.rnn_variable(rnn_size,words_size) logits = tf.matmul(outputs,w) + b probs = tf.nn.softmax(logits) return logits,probs,initial_state,last_state

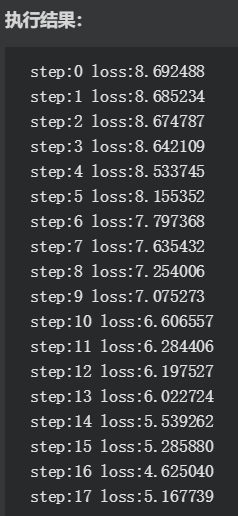

4.训练 LSTM 模型

需要关注的是下述输入字典需包含state,而且需要在使用next_state前先sess.run(initial_state)

feed = {inputs:x_batch,targets:y_batch,initial_state:next_state,keep_prob:0.5}

inputs: shape = (batch_size, time_steps), 每个值为int,即id

input_data: shape = (batch_size, time_steps, rnn_size)

outputs(1): shape = (batch_size, time_steps, rnn_size)

outputs(2): shape = (batch_size*time_steps, rnn_size)

logits: shape = (batch_size*time_steps, words_size)

targets: shape = (batch_size, time_steps),每个值为int,之后内部会调用tf.one_hot()展开,在

sequence_loss_by_example损失函数调用中,最后指明了words_size,理论上targets最后shape与logits相同

每批次采用 50 首唐诗训练,训练 40000 次后,损失函数基本保持不变,GPU 大概需要 2 个小时左右。当然你可以调整循环次数,节省训练时间,亦或者直接下载我们训练好的模型。

wget http://tensorflow-1253675457.cosgz.myqcloud.com/poetry/poetry_model.zip unzip poetry_model.zip

#-*- coding:utf-8 -*- from generate_poetry import Poetry from poetry_model import poetryModel import tensorflow as tf import numpy as np if __name__ == '__main__': batch_size = 50 epoch = 20 rnn_size = 128 num_layers = 2 poetrys = Poetry() words_size = len(poetrys.word_to_id) inputs = tf.placeholder(tf.int32, [batch_size, None]) targets = tf.placeholder(tf.int32, [batch_size, None]) keep_prob = tf.placeholder(tf.float32, name='keep_prob') model = poetryModel() logits,probs,initial_state,last_state = model.create_model(inputs,batch_size, rnn_size,words_size,num_layers,True,keep_prob) loss = model.loss_model(words_size,targets,logits) learning_rate = tf.Variable(0.0, trainable=False) optimizer = model.optimizer_model(loss,learning_rate) saver = tf.train.Saver() with tf.Session() as sess: sess.run(tf.global_variables_initializer()) sess.run(tf.assign(learning_rate, 0.002 * 0.97 )) next_state = sess.run(initial_state) step = 0 while True: x_batch,y_batch = poetrys.next_batch(batch_size) feed = {inputs:x_batch,targets:y_batch,initial_state:next_state,keep_prob:0.5} train_loss, _ ,next_state = sess.run([loss,optimizer,last_state], feed_dict=feed) print("step:%d loss:%f" % (step,train_loss)) if step > 40000: break if step%1000 == 0: n = step/1000 sess.run(tf.assign(learning_rate, 0.002 * (0.97 ** n))) step += 1 saver.save(sess,"poetry_model.ckpt")

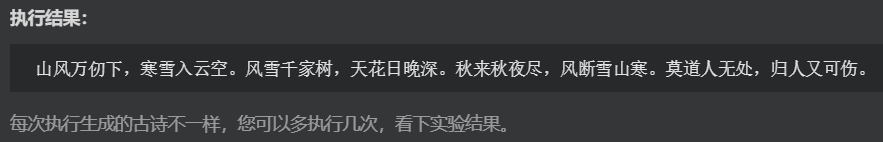

生成古诗

根据 [ 随机取一个汉字,作为生成古诗的第一个字,遇到 ] 结束生成古诗。

def to_word(prob): prob是tf.nn.softmax处理后归一化的多维数组,先取出prob[0](此处batch_size=1,time_steps=1(每次输出一个字符)),此时shape=[words_size]

将其排序后,取概率值最高的值判断是否大于0.9,是则直接取该值对应的字符,否则向后一个随机数取一个较大概率值的字符输出

#-*- coding:utf-8 -*- from generate_poetry import Poetry from poetry_model import poetryModel from operator import itemgetter import tensorflow as tf import numpy as np import random if __name__ == '__main__': batch_size = 1 rnn_size = 128 num_layers = 2 poetrys = Poetry() words_size = len(poetrys.word_to_id) def to_word(prob): prob = prob[0] indexs, _ = zip(*sorted(enumerate(prob), key=itemgetter(1))) rand_num = int(np.random.rand(1)*10); index_sum = len(indexs) max_rate = prob[indexs[(index_sum-1)]] if max_rate > 0.9 : sample = indexs[(index_sum-1)] else: sample = indexs[(index_sum-1-rand_num)] return poetrys.id_to_word[sample] inputs = tf.placeholder(tf.int32, [batch_size, None]) keep_prob = tf.placeholder(tf.float32, name='keep_prob') model = poetryModel() logits,probs,initial_state,last_state = model.create_model(inputs,batch_size, rnn_size,words_size,num_layers,False,keep_prob) saver = tf.train.Saver() with tf.Session() as sess: sess.run(tf.global_variables_initializer()) saver.restore(sess,"poetry_model.ckpt") next_state = sess.run(initial_state) x = np.zeros((1, 1)) x[0,0] = poetrys.word_to_id['['] feed = {inputs: x, initial_state: next_state, keep_prob: 1} predict, next_state = sess.run([probs, last_state], feed_dict=feed) word = to_word(predict) poem = '' while word != ']': poem += word x = np.zeros((1, 1)) x[0, 0] = poetrys.word_to_id[word] feed = {inputs: x, initial_state: next_state, keep_prob: 1} predict, next_state = sess.run([probs, last_state], feed_dict=feed) word = to_word(predict) print poem

实验总结:

本次实验是第一次接触rnn和lstm,看的比较费力,对于实验代码有两处疑点,特在此记录,供之后学习后回头解决

1.BasicLSTMCell只指定了unit_num为每个小单元的输出维度,但没有指明time_steps时序步长,我百度并没有解决这个疑惑,

所以此处我猜测时序步长是可以动态生成的,因为inputs里面对于时序步长取了一个最大值max(map(len, batches)),看起来比较随意

2.另一个是next_state是如何传递给模型的,感觉initial_state不是一个显示接口,而且create_model没有被第二次调用,我猜想是tf.nn.dynamic_rnn或是tensorflow机制理解的问题

参考博客:

1.Python reload() 函数 python2

2.为什么在sys.setdefaultencoding之前要写reload(sys)

8.Python 字典(Dictionary) items()方法

13.Python zip() 函数 打包成元组组成的列表

14.tensorflow中sequence_loss_by_example()函数的计算过程(结合TF的ptb构建语言模型例子)

15.【TensorFlow】关于tf.nn.sparse_softmax_cross_entropy_with_logits()

16.tf.nn.softmax_cross_entropy_with_logits 和 tf.contrib.legacy_seq2seq.sequence_loss_by_example 的联系与区别

17.TensorFlow学习笔记之--[tf.clip_by_global_norm,tf.clip_by_value,tf.clip_by_norm等的区别]

18.tensorflow—tf.gradients()简单实用教程

19.TensorFlow学习笔记之--[compute_gradients和apply_gradients原理浅析] apply_gradients 参数为元组对(梯度,被求导的变量)

20.tf.nn.embedding_lookup()的用法 返回的tensor的维度是lk的维度+data的除了第一维后的维度拼接。

21.完全图解RNN、RNN变体、Seq2Seq、Attention机制 入门强推

22.TensorFlow中RNN实现的正确打开方式 入门强推 内含char RNN项目

23.Understanding LSTM Networks 入门强推 英文lstm介绍