When a lot of data is stored, and especially when the table has to be resized, problems arise very easily under concurrent access.

The resize function:

    void resize(int newCapacity) {
        Entry[] oldTable = table;
        int oldCapacity = oldTable.length;
        if (oldCapacity == MAXIMUM_CAPACITY) {
            threshold = Integer.MAX_VALUE;
            return;
        }

        Entry[] newTable = new Entry[newCapacity];
        boolean oldAltHashing = useAltHashing;
        useAltHashing |= sun.misc.VM.isBooted() &&
                (newCapacity >= Holder.ALTERNATIVE_HASHING_THRESHOLD);
        boolean rehash = oldAltHashing ^ useAltHashing;
        transfer(newTable, rehash); // call to transfer
        table = newTable;
        threshold = (int)Math.min(newCapacity * loadFactor, MAXIMUM_CAPACITY + 1);
    }

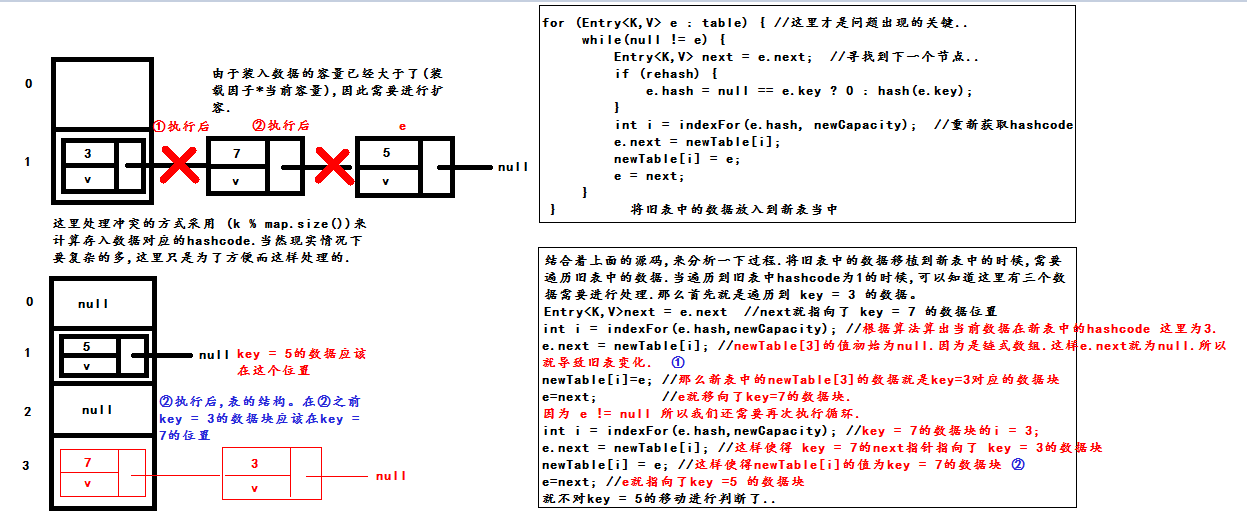

    void transfer(Entry[] newTable, boolean rehash) {
        int newCapacity = newTable.length;
        for (Entry<K,V> e : table) { // this loop is where the problem arises
            while (null != e) {
                Entry<K,V> next = e.next; // remember the next node
                if (rehash) {
                    e.hash = null == e.key ? 0 : hash(e.key);
                }
                int i = indexFor(e.hash, newCapacity); // recompute the bucket index in the new table
                e.next = newTable[i]; // head insertion: e goes to the front of the new bucket
                newTable[i] = e;
                e = next;
            }
        }
    }

How concurrency leads to an infinite loop:

https://blog.csdn.net/z69183787/article/details/64920074?locationNum=15&fps=1
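The interleaving that produces the loop can be replayed deterministically on a single thread. The sketch below is only an illustration, not the JDK code: `Node`, `TransferCycleDemo`, `simulate`, and `hasCycle` are hypothetical names, and it assumes two entries A and B that share a bucket both before and after the resize. "Thread 1" reads `e` and `next` and is then suspended, "thread 2" finishes the whole transfer, and thread 1 resumes with its stale local variables.

```java
final class TransferCycleDemo {
    // Hypothetical stand-in for the JDK 7 HashMap.Entry: a key and a next pointer.
    static final class Node {
        final String key;
        Node next;
        Node(String key, Node next) { this.key = key; this.next = next; }
    }

    // Head-insertion transfer of one bucket, using the same pointer moves as
    // the inner while loop of transfer(). Returns the new bucket head.
    static Node transfer(Node e, Node newHead) {
        while (e != null) {
            Node next = e.next;
            e.next = newHead;
            newHead = e;
            e = next;
        }
        return newHead;
    }

    // Floyd's tortoise-and-hare check, so the demo itself never loops forever.
    static boolean hasCycle(Node head) {
        Node slow = head, fast = head;
        while (fast != null && fast.next != null) {
            slow = slow.next;
            fast = fast.next.next;
            if (slow == fast) return true;
        }
        return false;
    }

    // Replays the problematic interleaving; returns true if the bucket
    // thread 1 ends up with contains a cycle.
    static boolean simulate() {
        Node b = new Node("B", null);
        Node a = new Node("A", b);          // old bucket: A -> B

        // Thread 1 enters the loop, reads e and next, then is suspended.
        Node e1 = a;
        Node next1 = e1.next;               // B

        // Thread 2 runs the complete transfer into its own new table.
        // Head insertion reverses the bucket: B -> A.
        transfer(a, null);

        // Thread 1 resumes with stale e1/next1 and its own empty new bucket.
        Node head1 = null;
        e1.next = head1;                    // A.next = null
        head1 = e1;                         // new bucket: A
        e1 = next1;                         // e = B, but B.next is now A
        head1 = transfer(e1, head1);        // rest of the loop: A.next = B, B.next = A

        return hasCycle(head1);
    }

    public static void main(String[] args) {
        System.out.println("cycle after interleaving: " + simulate());
    }
}
```

Once the bucket contains such a cycle, any later lookup that walks it for a missing key never reaches `null`, which is the infinite loop described in the linked article.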