Mac配置本地hadoop

这学期要学习大数据,于是在自己的mac上配置了hadoop环境。由于Mac是OSX系统,所以配置方法跟Linux类似

一、下载hadoop

从官网下载压缩包。

$ll

total 598424

-rwxrwxrwx@ 1 fanghao staff 292M 3 4 23:16 hadoop-3.0.0.tar.gz

解压

tar -xzvf hadoop-3.0.0.tar.gz

二、设置环境变量

vim ~/.bash_profile

export HADOOP_HOME=/Users/fanghao/someSoftware/hadoop-3.0.0

export HADOOP_HOME_WARN_SUPPRESS=1

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

使环境变量生效

source ~/.bash_profile

三、配置hadoop自己的参数

进入hadoop-3.0.0/etc/hadoop

1. 配置hadoop-env.sh

# The java implementation to use. By default, this environment

# variable is REQUIRED on ALL platforms except OS X!

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_111.jdk/Contents/Home

这里写了OSX不必须加这一行,加了也没事

2. 配置core-site.xml

指定临时数据文件夹,指定NameNode的主机名和端口

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/Users/fanghao/someSoftware/hadoop-3.0.0/data/</value>

</property>

</configuration>

3. 配置hdfs-site.xml

指定HDFS的默认参数副本,因为是单机运行,所以副本数为1

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

4. 配置mapred-site.xml

指定使用yarn集群框架

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

5. 配置yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>localhost</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

四、启动

先格式化

hadoop namenode -format

然后执行hadoop-3.0.0/sbin中的系统脚本

如

start-dfs.sh # 启动DataNode、NameNode、SecondaryNameNode

start-yarn.sh # 启动NodeManager、ResourceManager

用jps命令可以查看这些JVM上的进程

6178 NodeManager

6083 ResourceManager

6292 Jps

5685 DataNode

5582 NameNode

5822 SecondaryNameNode

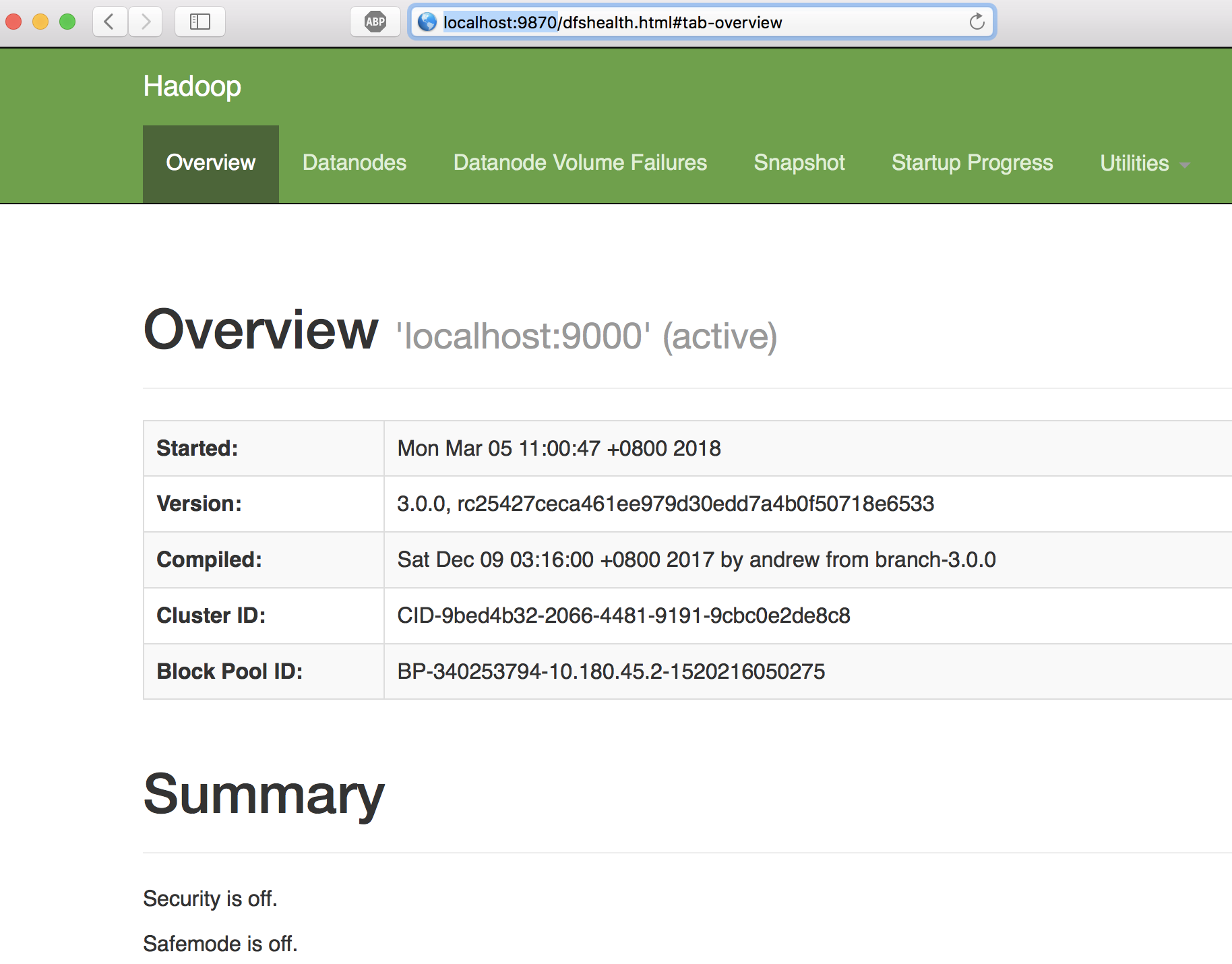

查看HDFS

用浏览器登录http://localhost:9870/

这里要注意的是,在hadoop3.0.0中,这里的端口号改成了9870,不是2.x的50070,官网上有issue

The patch updates the HDFS default HTTP/RPC ports to non-ephemeral ports. The changes are listed below:

Namenode ports: 50470 --> 9871, 50070 --> 9870, 8020 --> 9820

Secondary NN ports: 50091 --> 9869, 50090 --> 9868

Datanode ports: 50020 --> 9867, 50010 --> 9866, 50475 --> 9865, 50075 --> 9864

可能遇到的问题

hadoop的集群控制是通过ssh实现的,因此要在系统偏好设置->共享->远程登录设置成允许