特征匹配

特征匹配是计算机视觉中经常要用到的一步。通过对图像与图像或者图像与地图之间的描述子进行准确匹配,我们可以为后续的姿态估计,优化等操作减轻大量负担。然而,由于图像特征的局部特性,误匹配的情况广泛存在。在opencv的匹配算法中

实际上集成了一些对误匹配的处理。我们首先介绍一下暴力匹配算法。

暴力匹配

cv::BFMatcher

暴力匹配是指依次查找(穷举搜索)第一组中每个描述符与第二组中哪个描述符最接近。当然初始的暴力匹配得到的误匹配很多。我们可以通过交叉匹配过滤的方法对误匹配进行一定程度的剔除。

这种技术的思想是用查询集来匹配训练描述符,反之亦然。只返回在这两个匹配中同时出现的匹配。当有足够多的匹配时,这种技术在离群值数目极少的情况下通常会产生最佳效果。

在cv::BFMatcher类中可进行交叉匹配。为了能进行交叉检测实验,要创建cv::BFMatcher类的实例,需将构造函数的第二个参数设置为true:

cv::BFMatcher matcher2( NORM_HAMMING, true);

如果不设置第二个参数,则是正常的暴力匹配算法

我们可以对这两种匹配的结果,进行对比

环境:ubuntu16.04 , opencv-3.1.0

使用ORB特征

代码如下

#include <iostream> #include <opencv2/core/core.hpp> #include <opencv2/features2d/features2d.hpp> #include <opencv2/highgui/highgui.hpp> using namespace std; using namespace cv; int main( int argc, char** argv) { if ( argc != 3 ) { cout<<"usage: feature_extraction img1 img2"<<endl; return 1; } Mat img1 = imread( argv[1], CV_LOAD_IMAGE_COLOR ); Mat img2 = imread( argv[2], CV_LOAD_IMAGE_COLOR ); // std::vector<KeyPoint> keypoints_1, keypoints_2; Mat descriptors_1, descriptors_2; Ptr<ORB> orb = ORB::create( 500, 1.2f, 8, 31, 0, 2, ORB::HARRIS_SCORE,31,20 ); // orb->detect(img1, keypoints_1); orb->detect(img2, keypoints_2); // orb->compute(img1, keypoints_1, descriptors_1); orb->compute(img2, keypoints_2, descriptors_2); // BFMatcher matcher1( NORM_HAMMING ); vector<DMatch> matches1; matcher1.match(descriptors_1, descriptors_2, matches1); //cv::Ptr<cv::DescriptorMatcher> matcher(new cv::BFMatcher(cv::NORM HAMMING, true)); //use in opencv2.x BFMatcher matcher2( NORM_HAMMING, true); vector<DMatch> matches2; matcher2.match(descriptors_1, descriptors_2, matches2); Mat img_match1; Mat img_match2; drawMatches(img1, keypoints_1, img2, keypoints_2, matches1, img_match1 ); drawMatches(img1, keypoints_1, img2, keypoints_2, matches2, img_match2 ); imshow("BFMatcher", img_match1); imshow("jiao cha match", img_match2); waitKey(0); return 0; }

执行程序得到,匹配结果

暴力匹配

交叉匹配过滤

可以看出,经过交叉匹配过滤,误匹配得到一定程度的清除。

比率测试

对于误匹配的剔除还可以通过比率测试的方法来解决。可用KNN匹配,最初的K为2,即对每个匹配返回两个最近邻描述符。仅当第一个匹配与第二个匹配之间的距离比率足够大时(比率的阈值通常为2左右),才认为这是一个匹配。

为此,我们可以写一个用于比率测试的匹配函数

void match_features(Mat& query, Mat& train, vector<DMatch>& matches) { vector<vector<DMatch>> knn_matches; BFMatcher matcher(NORM_HAMMING); matcher.knnMatch(query, train, knn_matches, 2); //?????Ratio Test????ƥ??ľ?? float min_dist = FLT_MAX; for (int r = 0; r < knn_matches.size(); ++r) { //Ratio Test if (knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance) continue; float dist = knn_matches[r][0].distance; if (dist < min_dist) min_dist = dist; } matches.clear(); for (size_t r = 0; r < knn_matches.size(); ++r) { //???????Ratio Test?ĵ?ƥ???????? if ( knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance || knn_matches[r][0].distance > 5 * max(min_dist, 10.0f) ) continue; //???????? matches.push_back(knn_matches[r][0]); } }

此函数有三个输入,Mat& query, Mat& train, vector<DMatch>& matches

分别是要匹配的两幅图像的描述子,和匹配

比率测试的阈值设置为0.6

可以得到匹配结果

可以看出,比率测试可删除几乎所有的异常值。但在某些情况下,假阳性匹配可通过这个测试。我们可以进一步去掉异常值的剩余部分,只留下正确的匹配。

单应性估计

为了得到更多的匹配,可采用随机采样一致性(RANSAC)方法来进行异常过滤。若希望所使用的图像是刚性的(可看成平面对象),只需在模式图像的特征点与查询图像的特征点之间找到单应性变换即可。单应性变换会将模式中的点变换到用作查询的图像坐标系中。为了找到这样的变换,可使用cv::findHomography函数。它使用RANSAC来探测输入点的子集,由此找到最好的单应性矩阵。这种方法通过计算单应性矩阵的重投影误差来标记每个点是否为离群值或者非离群值

我们再写一个函数,然后在主函数中调用

bool refineMatchesWithHomography ( const std::vector<cv::KeyPoint>& queryKeypoints, const std::vector<cv::KeyPoint>& trainKeypoints, float reprojectionThreshold, std::vector<cv::DMatch>& matches, cv::Mat& homography ) { const int minNumberMatchesAllowed = 8; if (matches.size() < minNumberMatchesAllowed) return false; // Prepare data for cv::findHomography std::vector<cv::Point2f> srcPoints(matches.size()); std::vector<cv::Point2f> dstPoints(matches.size()); for (size_t i = 0; i < matches.size(); i++) { srcPoints[i] = trainKeypoints[matches[i].trainIdx].pt; dstPoints[i] = queryKeypoints[matches[i].queryIdx].pt; } // Find homography matrix and get inliers mask std::vector<unsigned char> inliersMask(srcPoints.size()); homography = cv::findHomography(srcPoints, dstPoints, CV_FM_RANSAC, reprojectionThreshold, inliersMask); std::vector<cv::DMatch> inliers; for (size_t i=0; i<inliersMask.size(); i++) { if (inliersMask[i]) inliers.push_back(matches[i]); } matches.swap(inliers); return matches.size() > minNumberMatchesAllowed; }

输出结果

分析来看,结果并不好,大量的正确匹配被剔除,留下来的明显可以看出误匹配。

基本矩阵F估计

用基本矩阵,我们调用cv::findFundamentalMat函数,函数的原型为

Mat cv::findFundamentalMat ( InputArray points1, InputArray points2, int method = FM_RANSAC, double ransacReprojThreshold = 3., double confidence = 0.99, OutputArray mask = noArray() )

通过两张图像的对应点来计算基本矩阵F

- Parameters

-

points1 Array of N points from the first image. The point coordinates should be floating-point (single or double precision). points2 Array of the second image points of the same size and format as points1 . method Method for computing a fundamental matrix. - CV_FM_7POINT for a 7-point algorithm. N

- CV_FM_8POINT for an 8-point algorithm. N

- CV_FM_RANSAC for the RANSAC algorithm. N

- CV_FM_LMEDS for the LMedS algorithm. N

ransacReprojThreshold Parameter used only for RANSAC. It is the maximum distance from a point to an epipolar line in pixels, beyond which the point is considered an outlier and is not used for computing the final fundamental matrix. It can be set to something like 1-3, depending on the accuracy of the point localization, image resolution, and the image noise. confidence Parameter used for the RANSAC and LMedS methods only. It specifies a desirable level of confidence (probability) that the estimated matrix is correct. mask The epipolar geometry is described by the following equation:

注意一点就是参数points1,和points2的格式都为Point2f,

vector<Point2f> points1; vector<Point2f> points2; KeyPointsToPoints(keypoints_1, keypoints_2, points1, points2, matches1); //compute F Mat mask; vector<uchar> status(keypoints_1.size()); Mat F = findFundamentalMat(points1, points2, FM_RANSAC, 3, 0.99, status); vector<DMatch> new_matches; cout << "F keeping " << countNonZero(status) << " / " << status.size() << endl; for (unsigned int i = 0; i < status.size(); i++) { if (status[i]) { if (matches1.size() <= 0) { new_matches.push_back(DMatch(matches1[i].queryIdx,matches1[i].trainIdx,matches1[i].distance)); } else { new_matches.push_back(matches1[i]); } } } cout << matches1.size() << " matches before, " << new_matches.size() << " new matches after Fundamental Matrix ";

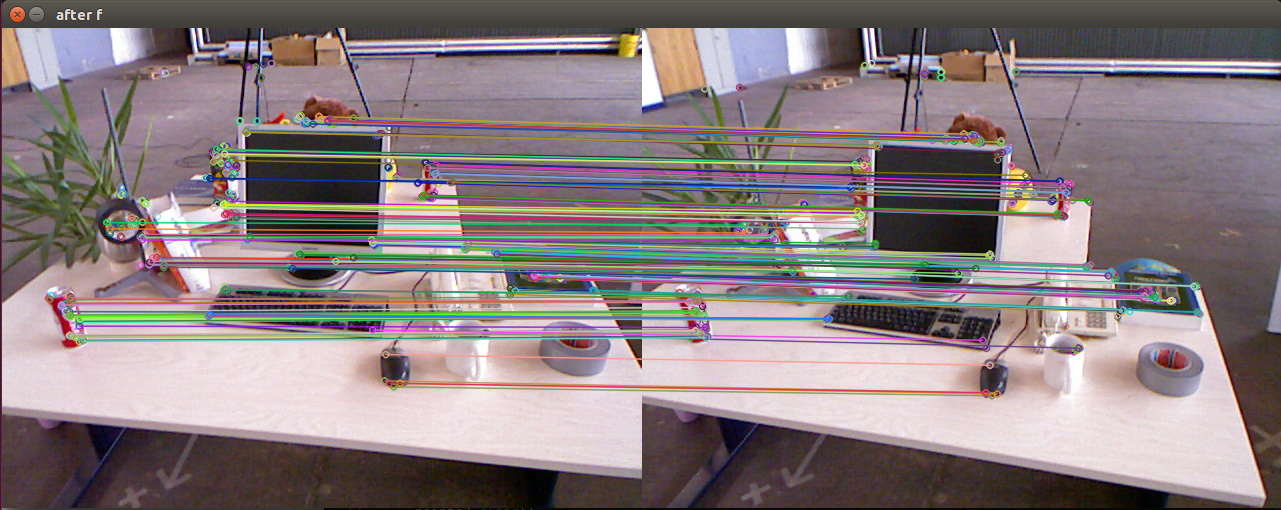

程序运行结果为:

输出

F keeping 241 / 500 500 matches before, 241 new matches after Fundamental Matrix

初始暴力匹配有500对,经过f矩阵验证之后,剩下241对,

完整代码下载地址:https://download.csdn.net/download/buaa_zn/10473427