Install the Java JDK:

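Download the JDK installer (jdk-6u30-linux-x64.bin in this guide) from the Sun website (Sun is now part of Oracle), then make it executable and run it. It unpacks into a jdk1.6.0_30 directory under the current directory, /root in this guide: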

chmod +x jdk-6u30-linux-x64.bin

./jdk-6u30-linux-x64.bin

Download Hadoop 0.20.205.0 and unpack it:

wget http://labs.renren.com/apache-mirror/hadoop/common/hadoop-0.20.205.0/hadoop-0.20.205.0.tar.gz

tar zxvf hadoop-0.20.205.0.tar.gz

Install the required software (SSH and rsync):

yum install openssh-server openssh-clients rsync

Edit the configuration files for a pseudo-distributed (single-node) setup:

conf/core-site.xml:

```xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```

conf/hdfs-site.xml:

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
```

conf/mapred-site.xml:

```xml
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:9001</value>
  </property>
</configuration>
```
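If you prefer not to edit the three files by hand, the following bash sketch writes the same settings in one go. It assumes it is run from the hadoop-0.20.205.0 directory and that overwriting the stock conf files is acceptable; the function names write_conf and write_pseudo_dist_conf are hypothetical, not part of Hadoop.

```shell
# Hypothetical helper: write a Hadoop config file containing one property.
write_conf() {
  local file="$1" name="$2" value="$3"
  cat > "$file" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>$name</name>
    <value>$value</value>
  </property>
</configuration>
EOF
}

# Hypothetical helper: write the three pseudo-distributed settings shown above
# into the given conf directory (default: ./conf). Existing files are overwritten.
write_pseudo_dist_conf() {
  local conf_dir="${1:-conf}"
  mkdir -p "$conf_dir" || return 1
  write_conf "$conf_dir/core-site.xml"   fs.default.name    hdfs://localhost:9000
  write_conf "$conf_dir/hdfs-site.xml"   dfs.replication    1
  write_conf "$conf_dir/mapred-site.xml" mapred.job.tracker localhost:9001
}
```

Usage: `write_pseudo_dist_conf conf`. Back up the conf directory first if you have already edited any of these files.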

conf/hadoop-env.sh (point JAVA_HOME at the JDK installed above):

export JAVA_HOME=/root/jdk1.6.0_30
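A sketch that makes the same hadoop-env.sh change from a script and first checks that the directory really contains a java binary, so a mistyped path fails immediately instead of when the daemons start. The helper name set_java_home is hypothetical; it assumes an active setting, if the file has one, sits on a line starting with `export JAVA_HOME=`.

```shell
# Hypothetical helper (not part of Hadoop): set JAVA_HOME in hadoop-env.sh
# after checking that the JDK directory contains an executable bin/java.
set_java_home() {
  local env_file="$1" jdk_dir="$2" tmp
  if [ ! -x "$jdk_dir/bin/java" ]; then
    echo "no executable java under $jdk_dir/bin" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # Keep every line except active "export JAVA_HOME=" lines; commented-out
  # examples such as "# export JAVA_HOME=..." do not match and are kept.
  grep -v '^export JAVA_HOME=' "$env_file" > "$tmp"
  echo "export JAVA_HOME=$jdk_dir" >> "$tmp"
  # Write back through the existing file so its permissions are preserved.
  cat "$tmp" > "$env_file"
  rm -f "$tmp"
}
```

Usage: `set_java_home conf/hadoop-env.sh /root/jdk1.6.0_30`.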

bin/hadoop:

When Hadoop is run as root, starting the DataNode fails with an error saying the -jvm option does not exist, so change

```bash
if [[ $EUID -eq 0 ]]; then
  HADOOP_OPTS="$HADOOP_OPTS -jvm server $HADOOP_DATANODE_OPTS"
else
  HADOOP_OPTS="$HADOOP_OPTS -server $HADOOP_DATANODE_OPTS"
fi
```

to the following, which drops the root check and always passes -server:

```bash
elif [ "$COMMAND" = "datanode" ] ; then
  CLASS='org.apache.hadoop.hdfs.server.datanode.DataNode'
  HADOOP_OPTS="$HADOOP_OPTS -server $HADOOP_DATANODE_OPTS"
```
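The same change can also be made with sed (this uses GNU sed's -i option). This sketch does not delete the if/else; it rewrites `-jvm server` to `-server` in the root branch so both branches become identical, which has the same effect. patch_datanode_opts is a hypothetical name, and it assumes the line appears in bin/hadoop exactly as quoted above.

```shell
# Hypothetical helper: make the root branch of bin/hadoop pass "-server"
# instead of "-jvm server", keeping a backup copy of the original script.
patch_datanode_opts() {
  local script="${1:-bin/hadoop}"
  cp "$script" "$script.bak" || return 1
  sed -i 's/-jvm server \$HADOOP_DATANODE_OPTS/-server $HADOOP_DATANODE_OPTS/' "$script"
}
```

Usage: `patch_datanode_opts bin/hadoop`; the untouched original stays in bin/hadoop.bak.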

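SSH setup (start-all.sh logs in over SSH to start the daemons, so passwordless SSH to localhost is required):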

$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
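If ssh localhost still asks for a password after these two commands, the usual cause is permissions: with sshd's default StrictModes setting, the key is ignored when ~/.ssh or authorized_keys is writable by group or others. A small sketch that tightens them (fix_ssh_perms is a hypothetical name):

```shell
# Hypothetical helper: restrict ~/.ssh to the owner and make authorized_keys
# readable and writable by the owner only, as sshd's StrictModes expects.
fix_ssh_perms() {
  local ssh_dir="${1:-$HOME/.ssh}"
  chmod 700 "$ssh_dir" && chmod 600 "$ssh_dir/authorized_keys"
}
```

After running `fix_ssh_perms`, `ssh localhost` should log in without a password prompt.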

Start Hadoop. Format HDFS only on the first run, since formatting erases any existing HDFS data:

$ bin/hadoop namenode -format

$ bin/start-all.sh
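To confirm that start-all.sh actually brought everything up, list the running JVMs with jps from the JDK; in this pseudo-distributed setup five Hadoop daemons should appear. A sketch of a checker for that output (check_hadoop_daemons is a hypothetical helper):

```shell
# Hypothetical helper: reads `jps` output ("<pid> <ClassName>" per line) on
# stdin and prints each expected Hadoop daemon that is not running.
# Returns 0 only if all five are present.
check_hadoop_daemons() {
  local jps_out daemon status=0
  jps_out=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
    # -w matches whole words, so "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$jps_out" | grep -qw "$daemon"; then
      echo "missing: $daemon"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `/root/jdk1.6.0_30/bin/jps | check_hadoop_daemons`. If a daemon is missing, its log file in the logs/ directory is the place to look.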

At this point, the NameNode and JobTracker web UIs are available on local ports 50070 and 50030 respectively:

- NameNode - http://localhost:50070/

- JobTracker - http://localhost:50030/
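A quick way to check from a shell that the two web UIs are listening, before opening a browser. This sketch relies on bash's /dev/tcp redirection, so it must run under bash; port_open is a hypothetical name.

```shell
# Hypothetical helper: succeeds if a TCP connection to host:port can be
# opened. Uses bash's /dev/tcp/HOST/PORT redirection, so it needs bash.
port_open() {
  local host="$1" port="$2"
  # The connection is opened on fd 3 inside a subshell and closed when the
  # subshell exits; errors such as "Connection refused" are discarded.
  (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null
}
```

Usage: `for p in 50070 50030; do port_open localhost "$p" && echo "$p open" || echo "$p closed"; done`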

Testing wordcount

Generate test data:

[root hadoop-0.20.205.0]# mkdir input

[root hadoop-0.20.205.0]# echo "hello world" >> input/a.txt

[root hadoop-0.20.205.0]# echo "hello hadoop" >> input/b.txt
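Since the input is only two short lines, the expected result can be worked out locally first and compared with the job's output later. This is merely a cross-check with standard Unix tools, not how Hadoop computes it: it approximates WordCount's whitespace tokenization, and local_wordcount is a hypothetical name.

```shell
# Cross-check only: counts whitespace-separated words in the given local
# files and prints "word<TAB>count", one per line, sorted by word.
local_wordcount() {
  # Split on spaces, tabs, CR and form feeds (roughly what WordCount's
  # StringTokenizer does), drop empty lines, then count duplicates.
  # LC_ALL=C sorts by bytes, like Hadoop's Text keys.
  cat "$@" | tr -s ' \t\r\f' '\n' | grep -v '^$' | LC_ALL=C sort | uniq -c |
    awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Usage: `local_wordcount input/*.txt` prints hadoop 1, hello 2 and world 1, the same counts the job produces.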

Copy the local data into HDFS (the relative path in resolves to /user/root/in, since the commands run as root):

[root hadoop-0.20.205.0]# bin/hadoop fs -put input in

Run the test job:

[root hadoop-0.20.205.0]# bin/hadoop jar hadoop-examples-0.20.205.0.jar wordcount in out

12/02/05 21:00:47 INFO input.FileInputFormat: Total input paths to process : 2

12/02/05 21:00:48 INFO mapred.JobClient: Running job: job_201202052055_0001

12/02/05 21:00:49 INFO mapred.JobClient: map 0% reduce 0%

12/02/05 21:01:07 INFO mapred.JobClient: map 100% reduce 0%

12/02/05 21:01:19 INFO mapred.JobClient: map 100% reduce 100%

12/02/05 21:01:24 INFO mapred.JobClient: Job complete: job_201202052055_0001

12/02/05 21:01:24 INFO mapred.JobClient: Counters: 29

12/02/05 21:01:24 INFO mapred.JobClient: Job Counters

12/02/05 21:01:24 INFO mapred.JobClient: Launched reduce tasks=1

12/02/05 21:01:24 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=22356

12/02/05 21:01:24 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0

12/02/05 21:01:24 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0

12/02/05 21:01:24 INFO mapred.JobClient: Launched map tasks=2

12/02/05 21:01:24 INFO mapred.JobClient: Data-local map tasks=2

12/02/05 21:01:24 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=10801

12/02/05 21:01:24 INFO mapred.JobClient: File Output Format Counters

12/02/05 21:01:24 INFO mapred.JobClient: Bytes Written=25

12/02/05 21:01:24 INFO mapred.JobClient: FileSystemCounters

12/02/05 21:01:24 INFO mapred.JobClient: FILE_BYTES_READ=55

12/02/05 21:01:24 INFO mapred.JobClient: HDFS_BYTES_READ=235

12/02/05 21:01:24 INFO mapred.JobClient: FILE_BYTES_WRITTEN=64345

12/02/05 21:01:24 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=25

12/02/05 21:01:24 INFO mapred.JobClient: File Input Format Counters

12/02/05 21:01:24 INFO mapred.JobClient: Bytes Read=25

12/02/05 21:01:24 INFO mapred.JobClient: Map-Reduce Framework

12/02/05 21:01:24 INFO mapred.JobClient: Map output materialized bytes=61

12/02/05 21:01:24 INFO mapred.JobClient: Map input records=2

12/02/05 21:01:24 INFO mapred.JobClient: Reduce shuffle bytes=61

12/02/05 21:01:24 INFO mapred.JobClient: Spilled Records=8

12/02/05 21:01:24 INFO mapred.JobClient: Map output bytes=41

12/02/05 21:01:24 INFO mapred.JobClient: CPU time spent (ms)=2900

12/02/05 21:01:24 INFO mapred.JobClient: Total committed heap usage (bytes)=398852096

12/02/05 21:01:24 INFO mapred.JobClient: Combine input records=4

12/02/05 21:01:24 INFO mapred.JobClient: SPLIT_RAW_BYTES=210

12/02/05 21:01:24 INFO mapred.JobClient: Reduce input records=4

12/02/05 21:01:24 INFO mapred.JobClient: Reduce input groups=3

12/02/05 21:01:24 INFO mapred.JobClient: Combine output records=4

12/02/05 21:01:24 INFO mapred.JobClient: Physical memory (bytes) snapshot=422445056

12/02/05 21:01:24 INFO mapred.JobClient: Reduce output records=3

12/02/05 21:01:24 INFO mapred.JobClient: Virtual memory (bytes) snapshot=1544007680

12/02/05 21:01:24 INFO mapred.JobClient: Map output records=4

View the results:

[root hadoop-0.20.205.0]# bin/hadoop fs -cat out/*

hadoop 1

hello 2

world 1

cat: File does not exist: /user/root/out/_logs

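The cat error above is harmless: the glob out/* also matches the _logs directory that the job writes into its output directory, and that is a directory rather than a file. Reading out/part-* shows only the results.

Copy the results from HDFS to the local filesystem and view them: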

[root hadoop-0.20.205.0]# bin/hadoop fs -get out output

[root hadoop-0.20.205.0]# cat output/*

cat: output/_logs: Is a directory

hadoop 1

hello 2

world 1

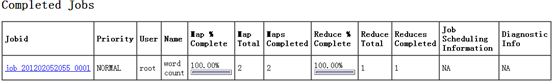

At this point, the job's progress can also be seen on the JobTracker web page: