Hive Architecture

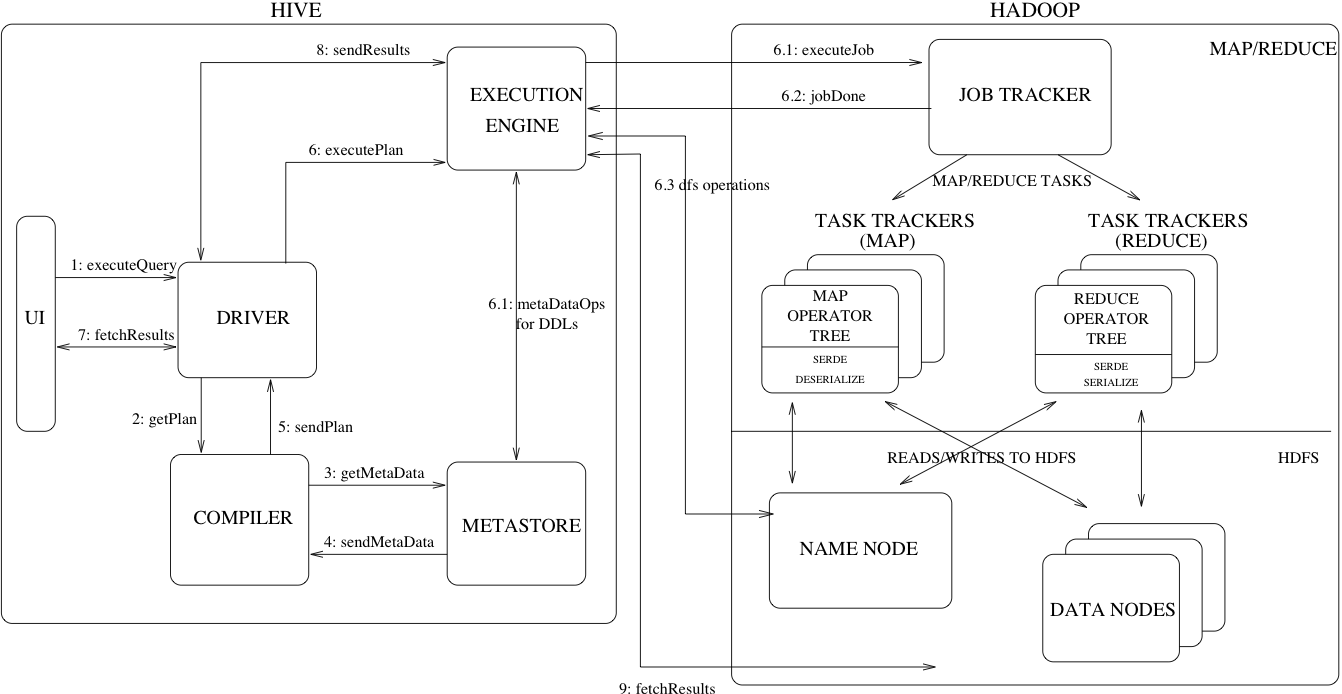

Figure 1 also shows how a typical query flows through the system.

The UI calls the Driver's execute interface (step 1 in Figure 1).

The Driver creates a session handle for the query and sends the query to the compiler to generate an execution plan (step 2).

The compiler gets the necessary metadata from the metastore (steps 3 and 4).
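The following minimal Python sketch makes the division of labour in steps 1 to 4 concrete. `Driver`, `Compiler`, `Metastore` and `TableMeta` and all of their methods are hypothetical stand-ins, not Hive's Java API. The query is assumed to arrive already parsed into a table name and a list of columns.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class TableMeta:
    """Hypothetical metadata record: column name -> type, plus partition keys."""
    columns: dict
    partition_keys: list = field(default_factory=list)


class Metastore:
    """Toy in-memory stand-in for the metastore (hypothetical API)."""

    def __init__(self, tables):
        self._tables = tables

    def get_table(self, name):
        # Steps 3 and 4: the compiler requests and receives table metadata.
        return self._tables[name]


class Compiler:
    def __init__(self, metastore):
        self.metastore = metastore

    def compile(self, table, columns):
        meta = self.metastore.get_table(table)
        unknown = [c for c in columns if c not in meta.columns]
        if unknown:
            raise ValueError(f"unknown columns: {unknown}")
        # Placeholder for the real output of step 5, a DAG of stages.
        return {"table": table, "columns": columns}


class Driver:
    def __init__(self, compiler):
        self.compiler = compiler
        self.sessions = {}  # session handle -> compiled plan

    def execute(self, table, columns):
        # Step 1: the UI calls execute. Step 2: the Driver creates a session
        # handle and sends the query to the compiler to get a plan.
        handle = uuid.uuid4().hex
        self.sessions[handle] = self.compiler.compile(table, columns)
        return handle


metastore = Metastore({"logs": TableMeta({"ds": "string", "url": "string"}, ["ds"])})
driver = Driver(Compiler(metastore))
handle = driver.execute("logs", ["url"])
print(driver.sessions[handle])  # {'table': 'logs', 'columns': ['url']}
```

Keeping the metastore behind its own object mirrors the figure: the compiler is the only component that talks to it during planning.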

This metadata is used to type-check the expressions in the query tree and to prune partitions based on the query predicates.
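The sketch below shows both uses of the metadata on a hypothetical table `logs` partitioned by `ds`. Several pieces are assumptions made for illustration: the schema, the partition list, the `(column, op, literal)` predicate format and the helper names. Hive's real type checking and partition pruning are much more general.

```python
import operator

# Hypothetical metadata for table "logs", partitioned by "ds" (steps 3-4).
SCHEMA = {"ds": "string", "url": "string", "hits": "int"}
PARTITION_KEYS = {"ds"}
PARTITIONS = ["2024-01-01", "2024-01-02", "2024-01-03"]  # values of "ds"

PY_TYPES = {"string": str, "int": int}  # simplified type mapping
OPS = {"=": operator.eq, ">=": operator.ge, "<": operator.lt}


def typecheck(predicates):
    """Reject a predicate whose literal does not match the column's type."""
    for column, _, literal in predicates:
        expected = SCHEMA[column]
        if not isinstance(literal, PY_TYPES[expected]):
            raise TypeError(f"{column} is {expected}, got {literal!r}")


def prune_partitions(predicates):
    """Keep only partitions whose 'ds' value satisfies every predicate on 'ds'."""
    typecheck(predicates)
    on_keys = [(op, lit) for col, op, lit in predicates if col in PARTITION_KEYS]
    # ISO date strings compare correctly as plain strings.
    return [ds for ds in PARTITIONS if all(OPS[op](ds, lit) for op, lit in on_keys)]


# WHERE ds >= '2024-01-02' AND hits >= 10: only the ds predicate prunes.
print(prune_partitions([("ds", ">=", "2024-01-02"), ("hits", ">=", 10)]))
# ['2024-01-02', '2024-01-03']
```

Predicates on ordinary columns such as `hits` are still type-checked but cannot remove partitions. Only predicates on partition keys let the compiler skip whole directories of data.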

The plan generated by the compiler (step 5) is a DAG of stages, where each stage is a map/reduce job, a metadata operation, or an operation on HDFS.
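A plan like this can be modelled as a mapping from each stage to the stages it depends on. Run one stage at a time, any topological order of that graph is a valid execution order. The three-stage plan below (a map/reduce job, then an HDFS move, then a metadata update) is a hypothetical `INSERT ... SELECT` example, not output captured from Hive. It uses Python's standard `graphlib` module, which requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for an INSERT ... SELECT ... GROUP BY query.
STAGE_KINDS = {
    "Stage-1": "mapred",    # map/reduce job that computes the result
    "Stage-2": "hdfs",      # move the job's output into the table's location
    "Stage-3": "metadata",  # e.g. record the new data in the metastore
}

# Each stage maps to the set of stages it depends on.
DEPENDENCIES = {
    "Stage-1": set(),
    "Stage-2": {"Stage-1"},
    "Stage-3": {"Stage-2"},
}


def execution_order(deps):
    """Return stages so that each appears after all of its dependencies."""
    # static_order() raises graphlib.CycleError if the plan is not a DAG.
    return list(TopologicalSorter(deps).static_order())


for stage in execution_order(DEPENDENCIES):
    print(stage, STAGE_KINDS[stage])
```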

For map/reduce stages, the plan contains map operator trees (operator trees that run on the mappers) and a reduce operator tree (for operations that need reducers). The execution engine submits these stages to the appropriate components (steps 6, 6.1, 6.2 and 6.3).
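As a rough illustration, this in-memory simulation runs one map/reduce stage for `SELECT url, count(*) FROM logs WHERE hits > 0 GROUP BY url`. Each operator tree is reduced to a plain function, loosely named after Hive's Filter, ReduceSink and GroupBy operators. The table, the row format and the function names are hypothetical. The shuffle is modelled with a dictionary rather than Hadoop's sort-and-merge.

```python
from collections import defaultdict

# Query: SELECT url, count(*) FROM logs WHERE hits > 0 GROUP BY url
# Each operator tree is simplified to a function (hypothetical, not Hive code).


def map_operator_tree(row):
    """Runs on each mapper: filter the row, then emit a (key, value) pair."""
    if row["hits"] > 0:        # Filter: WHERE hits > 0
        yield row["url"], 1    # ReduceSink: key = GROUP BY column, value = 1


def reduce_operator_tree(key, values):
    """Runs on each reducer: combine all values that share one key."""
    return key, sum(values)    # GroupBy: count(*) as a sum of the 1s


def run_mapred_stage(splits):
    """Simulate one stage: map every split, shuffle by key, then reduce."""
    shuffled = defaultdict(list)
    for split in splits:                 # one mapper per input split
        for row in split:
            for key, value in map_operator_tree(row):
                shuffled[key].append(value)
    return [reduce_operator_tree(k, vs) for k, vs in sorted(shuffled.items())]


splits = [
    [{"url": "a", "hits": 1}, {"url": "b", "hits": 0}],
    [{"url": "a", "hits": 3}, {"url": "c", "hits": 2}],
]
print(run_mapred_stage(splits))  # [('a', 2), ('c', 1)]
```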

In each task (mapper or reducer), the deserializer associated with the table or intermediate output reads rows from HDFS files and passes them through the associated operator tree. Once output is generated, it is written to a temporary HDFS file through the serializer (this happens in the mapper if the operation does not need a reduce phase).
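Here is a minimal sketch of a map-only task. It assumes a text table with tab-separated `url` and `hits` fields; the delimiter, the schema and the helper names are all hypothetical. `deserialize` and `serialize` play the role of the table's SerDe. A temporary file on the local filesystem stands in for the temporary HDFS file.

```python
import os
import tempfile


def deserialize(line):
    """Toy SerDe read side: 'url<TAB>hits' text line -> row dict."""
    url, hits = line.rstrip("\n").split("\t")
    return {"url": url, "hits": int(hits)}


def serialize(row):
    """Toy SerDe write side: row dict -> 'url<TAB>hits' text line."""
    return f"{row['url']}\t{row['hits']}\n"


def operator_tree(row):
    """Map-only tree for: SELECT url, hits FROM t WHERE hits > 10."""
    if row["hits"] > 10:
        yield row


def run_map_task(input_path, tmp_dir):
    """Deserialize each row, run the operator tree, serialize into a temp file."""
    fd, out_path = tempfile.mkstemp(dir=tmp_dir, prefix="_tmp.")
    with os.fdopen(fd, "w") as dst, open(input_path) as src:
        for line in src:
            for out_row in operator_tree(deserialize(line)):
                dst.write(serialize(out_row))
    return out_path  # no reduce needed, so the mapper writes the output
```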

The temporary files provide data to subsequent map/reduce stages of the plan. For DML operations, the final temporary file is moved to the table's location.

This scheme ensures that dirty (partially written) data is never read, because a file rename is an atomic operation in HDFS.
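The same write-then-rename pattern can be sketched on a local filesystem. This is only an analogy for the HDFS behaviour described above. It relies on `os.replace`, which renames atomically when both paths are on the same POSIX filesystem. The function name, the directory layout and the `_tmp.` prefix are assumptions.

```python
import os
import tempfile


def write_then_publish(lines, scratch_dir, table_dir, final_name):
    """Write lines to a temp file in scratch_dir, then rename it into table_dir."""
    fd, tmp_path = tempfile.mkstemp(dir=scratch_dir, prefix="_tmp.")
    with os.fdopen(fd, "w") as f:
        f.writelines(lines)  # readers of table_dir never look in scratch_dir
    final_path = os.path.join(table_dir, final_name)
    # os.replace is an atomic rename when both paths are on the same POSIX
    # filesystem, so a reader sees either no file or the complete file.
    os.replace(tmp_path, final_path)
    return final_path
```

Nothing appears under `table_dir` until the final rename. A concurrent reader listing that directory therefore never sees a half-written file.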

For queries, the execution engine reads the contents of the temporary file directly from HDFS as part of the fetch call from the Driver (steps 7, 8 and 9).
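The fetch step can be pictured as a generator that hands back result rows in fixed-size batches. This is a hypothetical sketch, not the Driver's actual interface: `fetch_batches` and its `batch_size` parameter are illustrative. `lines` can be any iterator of text lines, such as an open result file.

```python
from itertools import islice


def fetch_batches(lines, batch_size):
    """Yield result rows in batches of at most batch_size.

    Each yielded batch models the rows returned by one fetch call.
    """
    it = iter(lines)  # a single iterator, so each batch resumes where the last ended
    while True:
        batch = [line.rstrip("\n") for line in islice(it, batch_size)]
        if not batch:
            return
        yield batch


print(list(fetch_batches(["r1\n", "r2\n", "r3\n"], 2)))  # [['r1', 'r2'], ['r3']]
```

Calling `iter()` first matters. If `lines` were a list, `islice` would restart from the beginning on every batch and the loop would never end.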