推荐系统我们都很熟悉,淘宝推荐用户可能感兴趣的产品,搜索引擎帮助用户发现可能感兴趣的东西,这些都是推荐系统的内容。接下来讲述一个电影推荐的项目。

Netflix 电影推荐系统 这个项目是使用的Netflix的数据,数据记录了用户观看过的电影和用户对电影的评分,使用基于物品的协同过滤算法,需要根据所有用户的观看评分历史来找出不同电影之间的相似性,然后根据单个用户的历史电影评分来估算用户喜欢某部新电影的概率,以此来进行电影的推荐。 主要的工作可以分为: 1.构建评分矩阵 2.构建同现矩阵 3.归一化同现矩阵获得电影之间的关系 4.矩阵的相乘获取预估评分 具体的,数据文件每一行记录的数据为:用户,电影,评分 Map-Reducer1 按用户进行拆分 Mapper : 输入 用户,电影,评分 输出 key -> 用户 value -> 电影:评分 Reducer : key -> 用户 value -> 列表(电影:评分) Map-Reducer2 构建同现矩阵 Mapper: 输入 用户 电影:评分的这个列表,列表两两组合 输出 MovieA : MovieB 1 Reducer: MovieA: MovieB 次数 Map-Reducer3 归一化 按照行归一化 Mapper: 输入 MovieA:MovieB 次数 输出 MovieA MovieB = 次数 Reducer:使用一个map,记录MovieB 及 次数 遍历一遍求和sum总次数。然后输出 MovieB MovieA = B次数 / sum Map-Reducer4 矩阵相乘 Mapper1: 输入 MovieB MovieA = relation 就是简单的读取数据 Mapper2: 输入用户,电影,评分 输出 电影 用户:评分 Reducer: 得到movie_relation map和user_rate map,然后遍历entry 输出 用户:电影 relation * rate Map-Reducer5 求和 简单就是对用户:电影为键求和即可。

一个比较重要的trick,为什么矩阵相乘的时候是采用按行归一化,然后按照列写入。

不这样的话,如果按照行写入,那么比如键为M1的数据 记录的就是M1与电影 1 2 3 4 5的relation 那么就需要inmemory的存储用户对于每个电影的评分矩阵。

而我们的方法就不用嘛,相当于每一个小项考虑的是我这个电影对其他电影的贡献是多少。

一、电影推荐系统中的算法

- User Collaborative Filtering (User CF)

- Item Collaborative Filtering (Item CF)

- ...

1.1 User CF

User CF (协同过滤算法)是把与你有相同爱好的用户所喜欢的物品(并且你没有评过分)推荐给你。而怎么识别有相同爱好的用户呢?一个思路比如说可以根据用户对同一商品的评分来分析。

比如下图可以根据用户之前对电影1,2,3的评分推断A和C具有相同爱好,然后A看了电影4,给了高分,就把电影4推荐给用户C.

User-based算法存在两个重大问题:

数据稀疏性。一个大型的电子商务推荐系统一般有非常多的物品,用户可能买的其中不到1%的物品,不同用户之间买的物品重叠性较低,导致算法无法找到一个用户的邻居,即偏好相似的用户。

算法扩展性。最近邻居算法的计算量随着用户和物品数量的增加而增加,不适合数据量大的情况使用。

1.2Item CF

Item CF 是把与你之前喜欢的物品近似的物品推荐给你。

比如下图根据用户AB对电影1,2的评分推断电影1和电影3相似,然后用户C看了电影1,那么就再把电影3推荐给他。

1.3采用Item CF

本项目采用Item CF来实现,主要基于以下几点考虑:

用户数量比电影数量大的多得多

Item的改变不会特别频繁,降低计算复杂度

使用用户的历史数据来为用户推荐,结果更具有说服力

二、Item CF实现电影推荐

主要分为以下三个步骤:

- Build co-occurrence matrix

- Build rating matrix

- Matrix computation to get recommending result

2.1 Build co-occurrence matrix

使用Item CF,当然首先需要描述不同Item之间的关系,那么怎么定义这些Item之间的关系呢?

Based on user’s profile

- watching history

- rating history

- favorite list

Based on movie’s info

- movie category

- movie producer

我们使用基于用户的rating history来定义两个电影时间的关系。

我们认为,同一用户看过相同的两部电影,那么这两部电影就是有关系的。(无论评分高低,因为在看之前,既然两部电影都吸引了这个用户,那么就证明两部电影还是有一些关系的)

我们构建一个co-occurrence matrix来描述不同电影之间的关系。

2.2 Build rating matrix

那么怎么定义电影之间的不同呢?我们使用rating matrix.

这里如果用户没有对一部电影评分的话,默认为0,我们可以想象为用户都不想去看这部电影。然后一个改进措施可以取值为用户历史评分的均值,这样似乎更准确一些。

2.3 Matrix computation to get recommending result

2.3.1 对co-occurrence matrix进行归一化处理

2.3.2 矩阵相乘

如上图所示,归一化时候第一行就表示电影M1和电影M1,M2,M3,M4,M5的相似性依次为2/6,2/6,1/6,1/6,0,而用户B看过M1,M2,M3三部电影,评分分别为3,7,8,没看过M4,M5。第一行和UserB的这列数据相乘时候就得到电影M1在UserB这里的得分,依次类推,可以得到电影M2,M3,M4,M5在用户这里的得分。然后从用户B没看过的电影M4,M5中选出TopK来推荐给用户B。

三、Map-Reduce工作流程

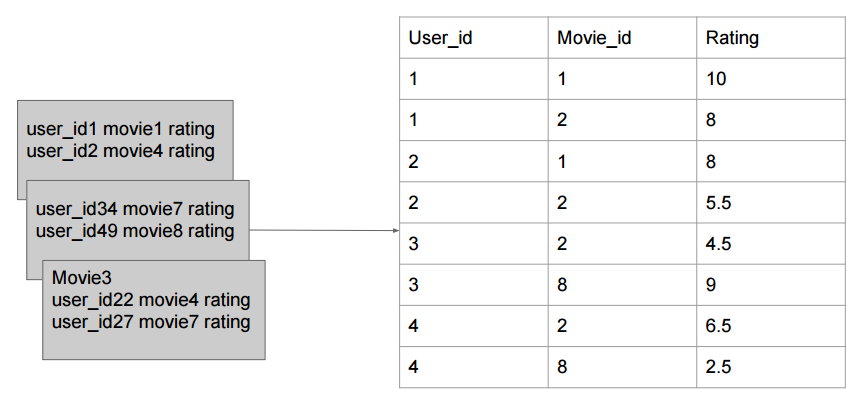

输入数据: 同PaperRank一样,我们不应该存储一颗矩阵,存储样式如下:

如上图所示,第一行就表示用户1看过10001这部电影,评分5.0.

3.1 MR1 Data divided by User

首先我们需要一个Mapper-Reducer来实现按照User-id分割数据,再按照User-id merge数据,得到每个用户看过的所有电影和评分。

Mapper:

Reducer:

3.2 MR2 构建co-occurrence matrix

使用一个mapper-reducer建立电影之间的两两相似性矩阵元。代表同一部电影或电影A,B同时被多少人看过。(A:B 代表A行B列)

Mapper:

Reducer:

3.3 MR3 对同现矩阵进行归一化操作

Mapper:

对MR2得到的结果按照行号进行拆分(按照行归一化)

Reducer:

按照行号求sum之后,分别得到当前行每一个列的位置归一化之后对应的值。然后按照列号为key写入HDFS。(之后矩阵相乘的时候分析为什么按照列号写入)

3.4 矩阵相乘

回想我们现在已经完成的工作,我们首先得到了User Rating Matrix,然后通过对同一个user id下的电影Id进行了一个两层循环得到了co-occurrence matrix。之后再对co-occurrence matrix进行行归一化,并进行了一个转置,让key为列。然后我们要做的就是矩阵的相乘了。

现在来分析我们之前说的为什么要把列存为key。(下边先不考虑多个用户,就只讨论一个用户)

我们要做的工作是什么?

假设现在有ABCD四部电影,用户user1,需要计算user1对A电影的评分。

一种方法是,使用同现矩阵的一行去乘以评分矩阵的一列,得到评分。同现矩阵: key : 行,value : A与ABCD的relation 那么就需要in-memory的存储用户对A,B,C,D的评分。不然你做不了啊,一边是key为行过来,另一边你过来的是什么东西?只能行的过来之后,对应的 列 = value 去 in-memory的查找那个列所对应的评分。

另一种方法是,同现矩阵的每一列和rating的一个数相乘,最后相加。同现矩阵: key : 列, value : 该列与A,B,C,D的relation。相当于我们计算A,B,C,D对A做了多少贡献,最后A把所有贡献加起来作为A的值,B,C,D类似。这样一来,我们以列为key,代表作贡献的电影,然后其评分同样以它为key,我们就不用再in-memoty的存储那么多东西了。

好了,现在来看这个Map-Reduce吧!

Mapper:

Mapper1仅仅是一个读取操作:

Mapper2需要变换Movie id为key

Reducer:

reducer需要对相同的key进行处理,区分来自于同现矩阵和来自于rating 矩阵,然后相乘写到HDFS,键为User: movie,值为这个用户看这个电影的来自于某一部其他电影的贡献。。。(好绕口)

3.5 Sum

最后再来一个Map-Reduce进行求和即可。

流程图如下

四、主要代码

1.Driver

public class Driver { public static void main(String[] args) throws Exception { DataDividerByUser dataDividerByUser = new DataDividerByUser(); CoOccurrenceMatrixGenerator coOccurrenceMatrixGenerator = new CoOccurrenceMatrixGenerator(); Normalize normalize = new Normalize(); Multiplication multiplication = new Multiplication(); Sum sum = new Sum(); String rawInput = args[0]; String userMovieListOutputDir = args[1]; String coOccurrenceMatrixDir = args[2]; String normalizeDir = args[3]; String multiplicationDir = args[4]; String sumDir = args[5]; String[] path1 = {rawInput, userMovieListOutputDir}; String[] path2 = {userMovieListOutputDir, coOccurrenceMatrixDir}; String[] path3 = {coOccurrenceMatrixDir, normalizeDir}; String[] path4 = {normalizeDir, rawInput, multiplicationDir}; String[] path5 = {multiplicationDir, sumDir}; dataDividerByUser.main(path1); coOccurrenceMatrixGenerator.main(path2); normalize.main(path3); multiplication.main(path4); sum.main(path5); } }

2.DataDividerByUser

1 import org.apache.hadoop.conf.Configuration; 2 import org.apache.hadoop.fs.Path; 3 import org.apache.hadoop.io.IntWritable; 4 import org.apache.hadoop.io.LongWritable; 5 import org.apache.hadoop.io.Text; 6 import org.apache.hadoop.mapreduce.Job; 7 import org.apache.hadoop.mapreduce.Mapper; 8 import org.apache.hadoop.mapreduce.Reducer; 9 import org.apache.hadoop.mapreduce.lib.input.TextInputFormat; 10 import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat; 11 12 import java.io.IOException; 13 import java.util.Iterator; 14 15 public class DataDividerByUser { 16 public static class DataDividerMapper extends Mapper<LongWritable, Text, IntWritable, Text> { 17 18 // map method 19 @Override 20 public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { 21 //input user,movie,rating 22 String line = value.toString().trim(); 23 String[] user_movie_value = line.split(","); 24 //divide data by user 25 context.write(new IntWritable(Integer.valueOf(user_movie_value[0])), new Text(user_movie_value[1] + ":" + user_movie_value[2])); 26 } 27 } 28 29 public static class DataDividerReducer extends Reducer<IntWritable, Text, IntWritable, Text> { 30 // reduce method 31 @Override 32 public void reduce(IntWritable key, Iterable<Text> values, Context context) 33 throws IOException, InterruptedException { 34 35 //merge data for one user 36 StringBuilder sb = new StringBuilder(); 37 Iterator<Text> iterator = values.iterator(); 38 while (iterator.hasNext()) { 39 sb.append(",").append(iterator.next()); 40 } 41 context.write(key, new Text(sb.toString().replaceFirst(",",""))); 42 } 43 } 44 45 public static void main(String[] args) throws Exception { 46 47 Configuration conf = new Configuration(); 48 49 Job job = Job.getInstance(conf); 50 job.setMapperClass(DataDividerMapper.class); 51 job.setReducerClass(DataDividerReducer.class); 52 53 job.setJarByClass(DataDividerByUser.class); 54 55 job.setInputFormatClass(TextInputFormat.class); 56 job.setOutputFormatClass(TextOutputFormat.class); 57 job.setOutputKeyClass(IntWritable.class); 58 job.setOutputValueClass(Text.class); 59 60 TextInputFormat.setInputPaths(job, new Path(args[0])); 61 TextOutputFormat.setOutputPath(job, new Path(args[1])); 62 63 job.waitForCompletion(true); 64 } 65 66 }

3.CoOccurrenceMatrixGenerator

1 import org.apache.hadoop.conf.Configuration; 2 import org.apache.hadoop.fs.Path; 3 import org.apache.hadoop.io.IntWritable; 4 import org.apache.hadoop.io.LongWritable; 5 import org.apache.hadoop.io.Text; 6 import org.apache.hadoop.mapreduce.Job; 7 import org.apache.hadoop.mapreduce.Mapper; 8 import org.apache.hadoop.mapreduce.Reducer; 9 import org.apache.hadoop.mapreduce.lib.input.TextInputFormat; 10 import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat; 11 12 import java.io.IOException; 13 import java.util.Iterator; 14 15 public class CoOccurrenceMatrixGenerator { 16 public static class MatrixGeneratorMapper extends Mapper<LongWritable, Text, Text, IntWritable> { 17 18 // map method 19 @Override 20 public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { 21 //value = userid movie1: rating, movie2: rating... 22 //key = movie1: movie2 value = 1 23 //calculate each user rating list: <movieA, movieB> 24 String line = value.toString().trim(); 25 String[] user_movie_rate = line.split(" "); 26 String[] movie_rate = user_movie_rate[1].split(","); 27 for (int i = 0; i < movie_rate.length; i++) { 28 String movieA = movie_rate[i].split(":")[0]; 29 for (int j = 0; j < movie_rate.length; j++) { 30 String movieB = movie_rate[j].split(":")[0]; 31 context.write(new Text(movieA + ":" + movieB), new IntWritable(1)); 32 } 33 } 34 35 } 36 } 37 38 public static class MatrixGeneratorReducer extends Reducer<Text, IntWritable, Text, IntWritable> { 39 // reduce method 40 @Override 41 public void reduce(Text key, Iterable<IntWritable> values, Context context) 42 throws IOException, InterruptedException { 43 //key movie1:movie2 value = iterable<1, 1, 1> 44 //calculate each two movies have been watched by how many people 45 int sum = 0; 46 Iterator<IntWritable> iterator = values.iterator(); 47 while (iterator.hasNext()) { 48 sum += iterator.next().get(); 49 } 50 context.write(key, new IntWritable(sum)); 51 } 52 } 53 54 public static void main(String[] args) throws Exception{ 55 56 Configuration conf = new Configuration(); 57 58 Job job = Job.getInstance(conf); 59 job.setMapperClass(MatrixGeneratorMapper.class); 60 job.setReducerClass(MatrixGeneratorReducer.class); 61 62 job.setJarByClass(CoOccurrenceMatrixGenerator.class); 63 64 job.setInputFormatClass(TextInputFormat.class); 65 job.setOutputFormatClass(TextOutputFormat.class); 66 job.setOutputKeyClass(Text.class); 67 job.setOutputValueClass(IntWritable.class); 68 69 TextInputFormat.setInputPaths(job, new Path(args[0])); 70 TextOutputFormat.setOutputPath(job, new Path(args[1])); 71 72 job.waitForCompletion(true); 73 74 } 75 }

4.Normalize

1 import org.apache.hadoop.conf.Configuration; 2 import org.apache.hadoop.fs.Path; 3 import org.apache.hadoop.io.LongWritable; 4 import org.apache.hadoop.io.Text; 5 import org.apache.hadoop.mapreduce.Job; 6 import org.apache.hadoop.mapreduce.Mapper; 7 import org.apache.hadoop.mapreduce.Reducer; 8 import org.apache.hadoop.mapreduce.lib.input.TextInputFormat; 9 import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat; 10 11 import java.io.IOException; 12 import java.util.HashMap; 13 import java.util.Iterator; 14 import java.util.Map; 15 16 public class Normalize { 17 18 public static class NormalizeMapper extends Mapper<LongWritable, Text, Text, Text> { 19 20 // map method 21 @Override 22 public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { 23 24 //movieA:movieB relation 25 //collect the relationship list for movieA 26 String line = value.toString().trim(); 27 String[] movie_relation = line.split(" "); 28 String[] movies = movie_relation[0].split(":"); 29 context.write(new Text(movies[0]), new Text(movies[1] + "=" + movie_relation[1])); 30 } 31 } 32 33 public static class NormalizeReducer extends Reducer<Text, Text, Text, Text> { 34 // reduce method 35 @Override 36 public void reduce(Text key, Iterable<Text> values, Context context) 37 throws IOException, InterruptedException { 38 39 //key = movieA, value=<movieB:relation, movieC:relation...> 40 //normalize each unit of co-occurrence matrix 41 int sum = 0; 42 HashMap<String, Integer> map = new HashMap<String, Integer>(); 43 Iterator<Text> iterator = values.iterator(); 44 while (iterator.hasNext()) { 45 String value = iterator.next().toString().trim(); 46 String[] movie_relation = value.split("="); 47 map.put(movie_relation[0], Integer.parseInt(movie_relation[1])); 48 sum += Integer.parseInt(movie_relation[1]); 49 } 50 for (Map.Entry<String, Integer> entry : map.entrySet()) { 51 context.write(new Text(entry.getKey()), new Text(key.toString() + "=" + (double)entry.getValue()/sum)); 52 } 53 } 54 } 55 56 public static void main(String[] args) throws Exception { 57 58 Configuration conf = new Configuration(); 59 60 Job job = Job.getInstance(conf); 61 job.setMapperClass(NormalizeMapper.class); 62 job.setReducerClass(NormalizeReducer.class); 63 64 job.setJarByClass(Normalize.class); 65 66 job.setInputFormatClass(TextInputFormat.class); 67 job.setOutputFormatClass(TextOutputFormat.class); 68 job.setOutputKeyClass(Text.class); 69 job.setOutputValueClass(Text.class); 70 71 TextInputFormat.setInputPaths(job, new Path(args[0])); 72 TextOutputFormat.setOutputPath(job, new Path(args[1])); 73 74 job.waitForCompletion(true); 75 } 76 }

5.Multiplication

1 import org.apache.hadoop.conf.Configuration; 2 import org.apache.hadoop.fs.Path; 3 import org.apache.hadoop.io.DoubleWritable; 4 import org.apache.hadoop.io.LongWritable; 5 import org.apache.hadoop.io.Text; 6 import org.apache.hadoop.mapreduce.Job; 7 import org.apache.hadoop.mapreduce.Mapper; 8 import org.apache.hadoop.mapreduce.Reducer; 9 import org.apache.hadoop.mapreduce.lib.chain.ChainMapper; 10 import org.apache.hadoop.mapreduce.lib.input.MultipleInputs; 11 import org.apache.hadoop.mapreduce.lib.input.TextInputFormat; 12 import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat; 13 14 import java.io.IOException; 15 import java.util.HashMap; 16 import java.util.Iterator; 17 import java.util.Map; 18 19 public class Multiplication { 20 public static class CooccurrenceMapper extends Mapper<LongWritable, Text, Text, Text> { 21 22 // map method 23 @Override 24 public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { 25 //input: movieB movieA=relation 26 String line = value.toString().trim(); 27 String[] movie_movie_ralation = line.split(" "); 28 //pass data to reducer 29 context.write(new Text(movie_movie_ralation[0]), new Text(movie_movie_ralation[1])); 30 } 31 } 32 33 public static class RatingMapper extends Mapper<LongWritable, Text, Text, Text> { 34 35 // map method 36 @Override 37 public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { 38 39 //input: user,movie,rating 40 String line = value.toString().trim(); 41 String[] user_movie_rate = line.split(","); 42 //pass data to reducer 43 context.write(new Text(user_movie_rate[1]), new Text(user_movie_rate[0] + ":" + user_movie_rate[2])); 44 } 45 } 46 47 public static class MultiplicationReducer extends Reducer<Text, Text, Text, DoubleWritable> { 48 // reduce method 49 @Override 50 public void reduce(Text key, Iterable<Text> values, Context context) 51 throws IOException, InterruptedException { 52 53 //key = movieB value = <movieA=relation, movieC=relation... userA:rating, userB:rating...> 54 //collect the data for each movie, then do the multiplication 55 HashMap<String, Double> movie_relation_map = new HashMap<String, Double>(); 56 HashMap<String, Double> movie_rate_map = new HashMap<String, Double>(); 57 Iterator<Text> iterator = values.iterator(); 58 while (iterator.hasNext()) { 59 String value =iterator.next().toString().trim(); 60 if (value.contains("=")) { 61 movie_relation_map.put(value.split("=")[0], Double.parseDouble(value.split("=")[1])); 62 } else { 63 movie_rate_map.put(value.split(":")[0], Double.parseDouble(value.split(":")[1])); 64 } 65 } 66 for (Map.Entry<String,Double> entry1 : movie_relation_map.entrySet()) { 67 String movie = entry1.getKey(); 68 double relation = entry1.getValue(); 69 for (Map.Entry<String, Double> entry2 : movie_rate_map.entrySet()) { 70 String user = entry2.getKey(); 71 double rate = entry2.getValue(); 72 context.write(new Text(user + ":" + movie), new DoubleWritable(relation * rate)); 73 } 74 } 75 } 76 } 77 78 79 public static void main(String[] args) throws Exception { 80 Configuration conf = new Configuration(); 81 82 Job job = Job.getInstance(conf); 83 job.setJarByClass(Multiplication.class); 84 85 ChainMapper.addMapper(job, CooccurrenceMapper.class, LongWritable.class, Text.class, Text.class, Text.class, conf); 86 ChainMapper.addMapper(job, RatingMapper.class, Text.class, Text.class, Text.class, Text.class, conf); 87 88 job.setMapperClass(CooccurrenceMapper.class); 89 job.setMapperClass(RatingMapper.class); 90 91 job.setReducerClass(MultiplicationReducer.class); 92 93 job.setMapOutputKeyClass(Text.class); 94 job.setMapOutputValueClass(Text.class); 95 job.setOutputKeyClass(Text.class); 96 job.setOutputValueClass(DoubleWritable.class); 97 98 MultipleInputs.addInputPath(job, new Path(args[0]), TextInputFormat.class, CooccurrenceMapper.class); 99 MultipleInputs.addInputPath(job, new Path(args[1]), TextInputFormat.class, RatingMapper.class); 100 101 TextOutputFormat.setOutputPath(job, new Path(args[2])); 102 103 job.waitForCompletion(true); 104 } 105 }

6.Sum

1 import org.apache.hadoop.conf.Configuration; 2 import org.apache.hadoop.fs.Path; 3 import org.apache.hadoop.io.DoubleWritable; 4 import org.apache.hadoop.io.LongWritable; 5 import org.apache.hadoop.io.Text; 6 import org.apache.hadoop.mapreduce.Job; 7 import org.apache.hadoop.mapreduce.Mapper; 8 import org.apache.hadoop.mapreduce.Reducer; 9 import org.apache.hadoop.mapreduce.lib.input.TextInputFormat; 10 import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat; 11 12 import java.io.IOException; 13 import java.util.Iterator; 14 15 /** 16 * Created by Michelle on 11/12/16. 17 */ 18 public class Sum { 19 20 public static class SumMapper extends Mapper<LongWritable, Text, Text, DoubleWritable> { 21 22 // map method 23 @Override 24 public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { 25 //pass data to reducer 26 String line = value.toString().trim(); 27 String[] user_movie_rate = line.split(" "); 28 context.write(new Text(user_movie_rate[0]), new DoubleWritable(Double.parseDouble(user_movie_rate[1]))); 29 } 30 } 31 32 public static class SumReducer extends Reducer<Text, DoubleWritable, Text, DoubleWritable> { 33 // reduce method 34 @Override 35 public void reduce(Text key, Iterable<DoubleWritable> values, Context context) 36 throws IOException, InterruptedException { 37 38 //user:movie relation 39 //calculate the sum 40 double sum = 0; 41 42 for (DoubleWritable value : values) { 43 sum += value.get(); 44 } 45 context.write(key, new DoubleWritable(sum)); 46 } 47 } 48 49 public static void main(String[] args) throws Exception { 50 51 Configuration conf = new Configuration(); 52 53 Job job = Job.getInstance(conf); 54 job.setMapperClass(SumMapper.class); 55 job.setReducerClass(SumReducer.class); 56 57 job.setJarByClass(Sum.class); 58 59 job.setInputFormatClass(TextInputFormat.class); 60 job.setOutputFormatClass(TextOutputFormat.class); 61 job.setOutputKeyClass(Text.class); 62 job.setOutputValueClass(DoubleWritable.class); 63 64 TextInputFormat.setInputPaths(job, new Path(args[0])); 65 TextOutputFormat.setOutputPath(job, new Path(args[1])); 66 67 job.waitForCompletion(true); 68 } 69 }