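Assignment ①:

Requirements:

Become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

Use the Selenium framework to scrape product information and images for one category of goods on JD.com (京东商城).

Code: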

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.keys import Keys
import urllib.request
import threading
import sqlite3
import os
import datetime
import time


class MySpider:
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
    imagePath = "download"

    def startUp(self, url, key):
        # Initialize a headless Chrome browser
        chrome_options = Options()
        chrome_options.add_argument('--headless')
        chrome_options.add_argument('--disable-gpu')
        self.driver = webdriver.Chrome(chrome_options=chrome_options)
        self.count = 0
        # Initialize variables
        self.threads = []
        self.No = 0
        self.imgNo = 0
        # Initialize the database
        try:
            self.con = sqlite3.connect("phones.db")
            self.cursor = self.con.cursor()
            try:
                # Drop the table if it already exists
                self.cursor.execute("drop table phones")
            except Exception:
                pass
            try:
                # Create a fresh table
                sql = "create table phones (mNo varchar(32) primary key, mMark varchar(256),mPrice varchar(32),mNote varchar(1024),mFile varchar(256))"
                self.cursor.execute(sql)
            except Exception:
                pass
        except Exception as err:
            print(err)
        # Initialize the images folder: create it if missing, then empty it
        try:
            if not os.path.exists(MySpider.imagePath):
                os.mkdir(MySpider.imagePath)
            images = os.listdir(MySpider.imagePath)
            for img in images:
                s = os.path.join(MySpider.imagePath, img)
                os.remove(s)
        except Exception as err:
            print(err)
        # Open the site, type the keyword into the search box and press Enter
        self.driver.get(url)
        keyInput = self.driver.find_element_by_id("key")
        keyInput.send_keys(key)
        keyInput.send_keys(Keys.ENTER)

    def closeUp(self):
        try:
            self.con.commit()
            self.con.close()
            self.driver.close()
        except Exception as err:
            print(err)

    def insertDB(self, mNo, mMark, mPrice, mNote, mFile):
        try:
            sql = "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (?,?,?,?,?)"
            self.cursor.execute(sql, (mNo, mMark, mPrice, mNote, mFile))
        except Exception as err:
            print(err)

    def showDB(self):
        try:
            con = sqlite3.connect("phones.db")
            cursor = con.cursor()
            # Header and rows share one format string so the columns line up
            fmt = "%-8s %-16s %-8s %-16s %s"
            print(fmt % ("No", "Mark", "Price", "Image", "Note"))
            cursor.execute("select mNo,mMark,mPrice,mFile,mNote from phones order by mNo")
            rows = cursor.fetchall()
            for row in rows:
                print(fmt % (row[0], row[1], row[2], row[3], row[4]))
            con.close()
        except Exception as err:
            print(err)

    def download(self, src1, src2, mFile):
        # Try src1 first, then fall back to src2 if nothing was downloaded
        data = None
        if src1:
            try:
                req = urllib.request.Request(src1, headers=MySpider.headers)
                resp = urllib.request.urlopen(req, timeout=10)
                data = resp.read()
            except Exception:
                pass
        if not data and src2:
            try:
                req = urllib.request.Request(src2, headers=MySpider.headers)
                resp = urllib.request.urlopen(req, timeout=10)
                data = resp.read()
            except Exception:
                pass
        if data:
            print("download begin", mFile)
            # os.path.join avoids the unescaped backslash in the hand-built path
            with open(os.path.join(MySpider.imagePath, mFile), "wb") as fobj:
                fobj.write(data)
            print("download finish", mFile)

    def processSpider(self):
        try:
            time.sleep(1)
            # Stop once the page counter reaches 30
            self.count += 1
            if self.count >= 30:
                return
            print(self.driver.current_url)
            lis = self.driver.find_elements_by_xpath("//div[@id='J_goodsList']//li[@class='gl-item']")
            for li in lis:
                # We find that the image is either in src or in data-lazy-img attribute
                try:
                    src1 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("src")
                except Exception:
                    src1 = ""
                try:
                    src2 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("data-lazy-img")
                except Exception:
                    src2 = ""
                try:
                    price = li.find_element_by_xpath(".//div[@class='p-price']//i").text
                except Exception:
                    price = "0"
                try:
                    note = li.find_element_by_xpath(".//div[@class='p-name p-name-type-2']//em").text
                    # The first space-separated token of the title is taken as the brand
                    mark = note.split(" ")[0]
                    # Strip the "爱心东东" label (followed by a newline) and commas
                    mark = mark.replace("爱心东东\n", "")
                    mark = mark.replace(",", "")
                    note = note.replace("爱心东东\n", "")
                    note = note.replace(",", "")
                except Exception:
                    note = ""
                    mark = ""
                self.No = self.No + 1
                # Zero-pad the running number to six digits
                no = str(self.No).zfill(6)
                print(no, mark, price)
                # Name the image file after the running number, keeping the URL's extension
                if src1:
                    src1 = urllib.request.urljoin(self.driver.current_url, src1)
                    p = src1.rfind(".")
                    mFile = no + src1[p:]
                elif src2:
                    src2 = urllib.request.urljoin(self.driver.current_url, src2)
                    p = src2.rfind(".")
                    mFile = no + src2[p:]
                if src1 or src2:
                    # Download each image in its own thread
                    T = threading.Thread(target=self.download, args=(src1, src2, mFile))
                    T.daemon = False
                    T.start()
                    self.threads.append(T)
                else:
                    mFile = ""
                self.insertDB(no, mark, price, note, mFile)
            try:
                # A disabled "next" link means this is the last page
                self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next disabled']")
            except Exception:
                # Otherwise click "next" and process the following page recursively
                nextPage = self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next']")
                time.sleep(10)
                nextPage.click()
                self.processSpider()
        except Exception as err:
            print(err)

    def executeSpider(self, url, key):
        starttime = datetime.datetime.now()
        print("Spider starting......")
        self.startUp(url, key)
        print("Spider processing......")
        self.processSpider()
        print("Spider closing......")
        self.closeUp()
        # Wait for every download thread so the elapsed time includes the downloads
        for t in self.threads:
            t.join()
        print("Spider completed......")
        endtime = datetime.datetime.now()
        elapsed = (endtime - starttime).seconds
        print("Total ", elapsed, " seconds elapsed")

url = "http://www.jd.com"
spider = MySpider()
while True:
    print("1. Scrape")
    print("2. Show")
    print("3. Exit")
    s = input("Choose (1,2,3): ")
    if s == "1":
        # Search keyword: "珐琅锅" (enamel pot)
        spider.executeSpider(url, "珐琅锅")
        continue
    elif s == "2":
        spider.showDB()
        continue
    elif s == "3":
        break

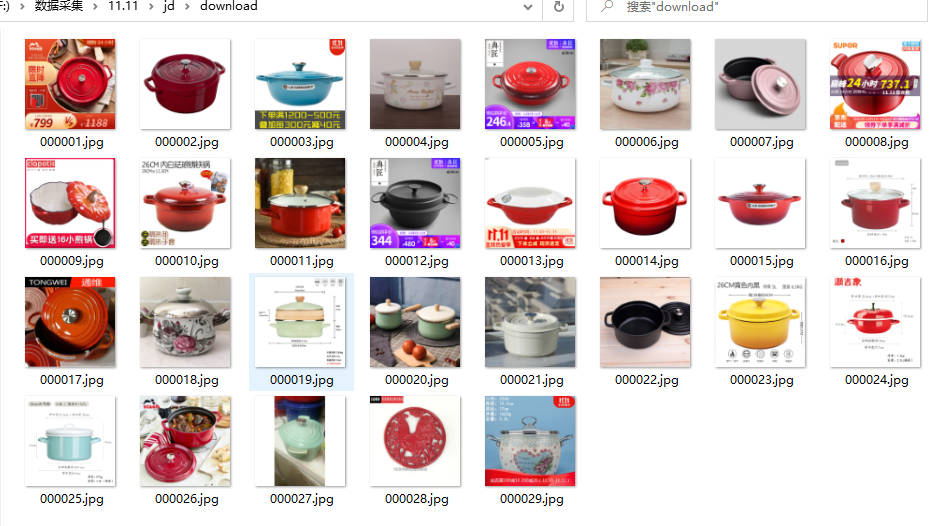

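Results:

Reflections: Reproducing the code from the course slides made me familiar with the whole pipeline: connecting to the database and creating the table (the reflection was written with MySQL in mind, but the code above uses SQLite through sqlite3), scraping with Selenium, and inserting the scraped rows. The key point to master was how Selenium moves on to the next page and keeps scraping.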
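
The database part of that pipeline can be tried in isolation. Below is a minimal sketch, assuming an in-memory SQLite database and two made-up rows (the brand names, prices and file names are hypothetical, not scraped data); it reuses the `phones` table layout and the `?` placeholders from the spider.

```python
import sqlite3

# Hypothetical sample rows; real values would come from the scraped page.
rows = [
    ("000001", "BrandA", "199.00", "BrandA enamel pot 24cm", "000001.jpg"),
    ("000002", "BrandB", "259.00", "BrandB enamel pot 22cm", "000002.jpg"),
]

con = sqlite3.connect(":memory:")  # in-memory database, nothing is written to disk
cursor = con.cursor()
cursor.execute("drop table if exists phones")
cursor.execute(
    "create table phones (mNo varchar(32) primary key, mMark varchar(256), "
    "mPrice varchar(32), mNote varchar(1024), mFile varchar(256))"
)
# Parameterized insert: the driver fills in the ? placeholders safely.
cursor.executemany(
    "insert into phones (mNo, mMark, mPrice, mNote, mFile) values (?,?,?,?,?)",
    rows,
)
con.commit()

cursor.execute("select mNo, mMark, mPrice from phones order by mNo")
stored = cursor.fetchall()
for row in stored:
    print("%-8s %-16s %-8s" % row)
con.close()
```

Using `drop table if exists` replaces the try/except around `drop table`, which is one way to keep the setup step short.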

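Assignment ②:

Requirements:

Become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

Use the Selenium framework with MySQL storage to scrape stock data for three boards: 沪深A股 (Shanghai and Shenzhen A-shares), 上证A股 (Shanghai A-shares), and 深证A股 (Shenzhen A-shares).

Code: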

import time
from selenium import webdriver
import pymysql

source_url = 'http://quote.eastmoney.com/center/gridlist.html'


class Spider():
    def start(self, broad):
        # broad is the URL fragment of the board, e.g. "#hs_a_board"
        self.driver = webdriver.Chrome()
        self.driver.get(source_url + broad)

    def createtable(self, tablename):
        # tablename[1:3] turns "#hs_a_board" into "hs", which is used as the table name
        try:
            self.cursor.execute("drop table " + tablename[1:3])
        except Exception:
            pass
        self.cursor.execute("create table " + tablename[1:3] + "(count int,id varchar(16),name varchar(16),new_price varchar(16),up_rate varchar(16),down_rate varchar(16),pass_number varchar(16),pass_money varchar(16),rate varchar(16),highest varchar(16),lowest varchar(16),today varchar(16),yesterday varchar(16));")

    def connect(self):
        self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="123456", db="mydb",
                                   charset="utf8")
        self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
        # Note: this statement fails unless a table named share already exists in mydb
        self.cursor.execute("delete from share")
        self.count = 0

    def close(self):
        self.con.close()

    def share(self, broad, num):
        lis = self.driver.find_elements_by_xpath("//*[@id='table_wrapper-table']/tbody/tr")
        for li in lis:
            self.count += 1
            if self.count > num:
                break
            print(li.text)
            # Read the row cell by cell instead of splitting li.text on spaces
            l = li.find_elements_by_xpath("./td")
            self.cursor.execute(
                "insert into " + broad[1:3] + "(count,id,name,new_price,up_rate,down_rate,"
                "pass_number,pass_money,rate,highest,lowest,today,yesterday)"
                "values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",
                (self.count, l[1].text, l[2].text, l[6].text, l[7].text, l[8].text,
                 l[9].text, l[10].text, l[11].text, l[12].text, l[13].text,
                 l[14].text, l[15].text))
        self.con.commit()
        if self.count > num:
            return
        # try:
        # Click the "next page" link and scrape the following page recursively
        g = self.driver.find_element_by_xpath("//*[@id='main-table_paginate']/a[2]")
        g.click()
        time.sleep(3)
        self.share(broad, num)
        # except :
        #     print("done")

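    def run(self, broad, num):
        self.start(broad)
        self.connect()
        self.createtable(broad)
        self.share(broad, num)
        self.close()


s = Spider()
print("Choose a board to scrape:\n"
      "1. 沪深A股 (Shanghai and Shenzhen A-shares)\n"
      "2. 上证A股 (Shanghai A-shares)\n"
      "3. 深证A股 (Shenzhen A-shares)\n")
broad = input()
# int() instead of eval(): only a whole number is expected here
n = int(input("Number of stocks to scrape: "))
if broad == '1':
    s.run("#hs_a_board", n)
if broad == '2':
    s.run("#sh_a_board", n)
if broad == '3':
    s.run("#sz_a_board", n)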

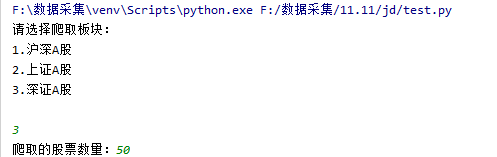

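Results:

Reflections: This was another practice run at the stock-scraping case. This time the data had to be obtained by simulating clicks on page elements with Selenium. Following the slides, I learned to have the scraping function call itself to fetch the next page repeatedly until the requested number of rows is reached. One stock name contains a space, so instead of taking the text of the whole row and splitting it on spaces, I switched to finding all td elements in the row and reading the text of each one, as in this excerpt from the code above: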

lis = self.driver.find_elements_by_xpath("//*[@id='table_wrapper-table']/tbody/tr")
for li in lis:
    self.count += 1
    if self.count > num:
        break
    print(li.text)
    l = li.find_elements_by_xpath("./td")
    self.cursor.execute(
        "insert into " + broad[1:3] + "(count,id,name,new_price,up_rate,down_rate,"
        "pass_number,pass_money,rate,highest,lowest,today,yesterday)"
        "values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",
        (self.count, l[1].text, l[2].text, l[6].text, l[7].text, l[8].text,
         l[9].text, l[10].text, l[11].text, l[12].text, l[13].text,
         l[14].text, l[15].text))
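
To see why splitting the row text on spaces breaks, here is a small sketch in plain Python. The cell values are made up (the name "Demo Co" is hypothetical); joining them with spaces only imitates what the text of a whole table row might look like.

```python
# Hypothetical cell texts for one table row; the name contains a space.
cells = ["1", "000000", "Demo Co", "10.00", "1.5%"]

# Approach 1: take the row as one string and split it on spaces.
row_text = " ".join(cells)
by_split = row_text.split(" ")

# Approach 2: keep one value per cell, as reading each td separately does.
by_cell = list(cells)

print(len(by_split), by_split)  # the name is cut into two fields
print(len(by_cell), by_cell)    # the name stays in one field
```

With the split approach every column after the name shifts by one position, so fixed indexes such as `l[6]` would point at the wrong value.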

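Assignment ③:

Requirements:

Become proficient with Selenium for locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.

Use the Selenium framework with MySQL to scrape course information from the China MOOC site (中国大学MOOC, icourse163.org): course number, course name, school name, lead instructor, team members, number of participants, course schedule, and course introduction.

Code: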

import time
from selenium import webdriver
import pymysql

source_url = "https://www.icourse163.org/channel/2001.htm"


class mooc():
    def start(self):
        self.driver = webdriver.Chrome()
        self.driver.get(source_url)
        self.driver.maximize_window()

    def createtable(self):
        try:
            self.cursor.execute("drop table mooc")
        except Exception:
            pass
        self.cursor.execute('create table mooc(id int,cCourse varchar(32),cTeacher varchar(16),cTeam varchar(64),cCollage varchar(32),cProcess varchar(64),'
                            'cCount varchar(64),cBrief text);')

    def connect(self):
        self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="123456", db="mydb",
                                   charset="utf8")
        self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
        self.count = 0

    def close(self):
        self.con.commit()
        self.con.close()

    def mo(self):
        time.sleep(1)
        block = self.driver.find_element_by_xpath("//div[@class='_1gBJC']")
        lis = block.find_elements_by_xpath("./div/div[@class='_3KiL7']")
        for li in lis:
            l = li.text.split("\n")
            # Cards without the "国家精品" (national quality course) badge have one
            # line fewer, so pad the list to keep the indexes below aligned
            if l[0] != "国家精品":
                l = ["1"] + l
            print(l[0], l[1], l[2], l[3], l[4], l[5])
            self.count += 1
            cCourse = l[1]
            cCollage = l[2]
            cTeacher = l[3]
            cCount = l[4]
            # Clicking a card opens the course page in a new window
            li.click()
            window = self.driver.window_handles
            # Switch to the new page
            self.driver.switch_to.window(window[1])
            time.sleep(1)
            cBrief = self.driver.find_element_by_xpath("//div[@id='j-rectxt2']").text
            cTeam = self.driver.find_element_by_xpath("//*[@id='course-enroll-info']/div/div[1]/div[2]/div[1]/span[2]").text
            team = self.driver.find_element_by_xpath("//*[@id='j-teacher']/div/div/div[2]/div/div[@class='um-list-slider_con']").text.split("\n")
            # Keep every other line of the teacher block (indexes 0, 2, 4, ...), joined with "/"
            cProcess = "/".join(team[::2])
            print(cProcess)
            self.cursor.execute("insert into mooc(id, cCourse, cTeacher, cTeam, cCollage, cProcess, cCount, cBrief) "
                                "values (%s, %s, %s, %s, %s, %s, %s, %s)",
                                (self.count, cCourse, cTeacher, cTeam, cCollage, cProcess, cCount, cBrief))
            # Close the course page and return to the course list
            self.driver.close()
            self.driver.switch_to.window(window[0])
            time.sleep(1)
        time.sleep(1)
        # Continue only while the last pager link reads "下一页" (next page) and at most 30 courses are stored
        pager = self.driver.find_elements_by_xpath('//a[@class="_3YiUU "]')
        if pager[-1].text == '下一页' and self.count <= 30:
            pager[-1].click()
            # Scroll back to the top so the course cards can be clicked again
            self.driver.execute_script("window.scrollTo(document.body.scrollHeight,0)")
            self.mo()

c = mooc()
c.start()
c.connect()
c.createtable()
time.sleep(3)
c.mo()
c.close()

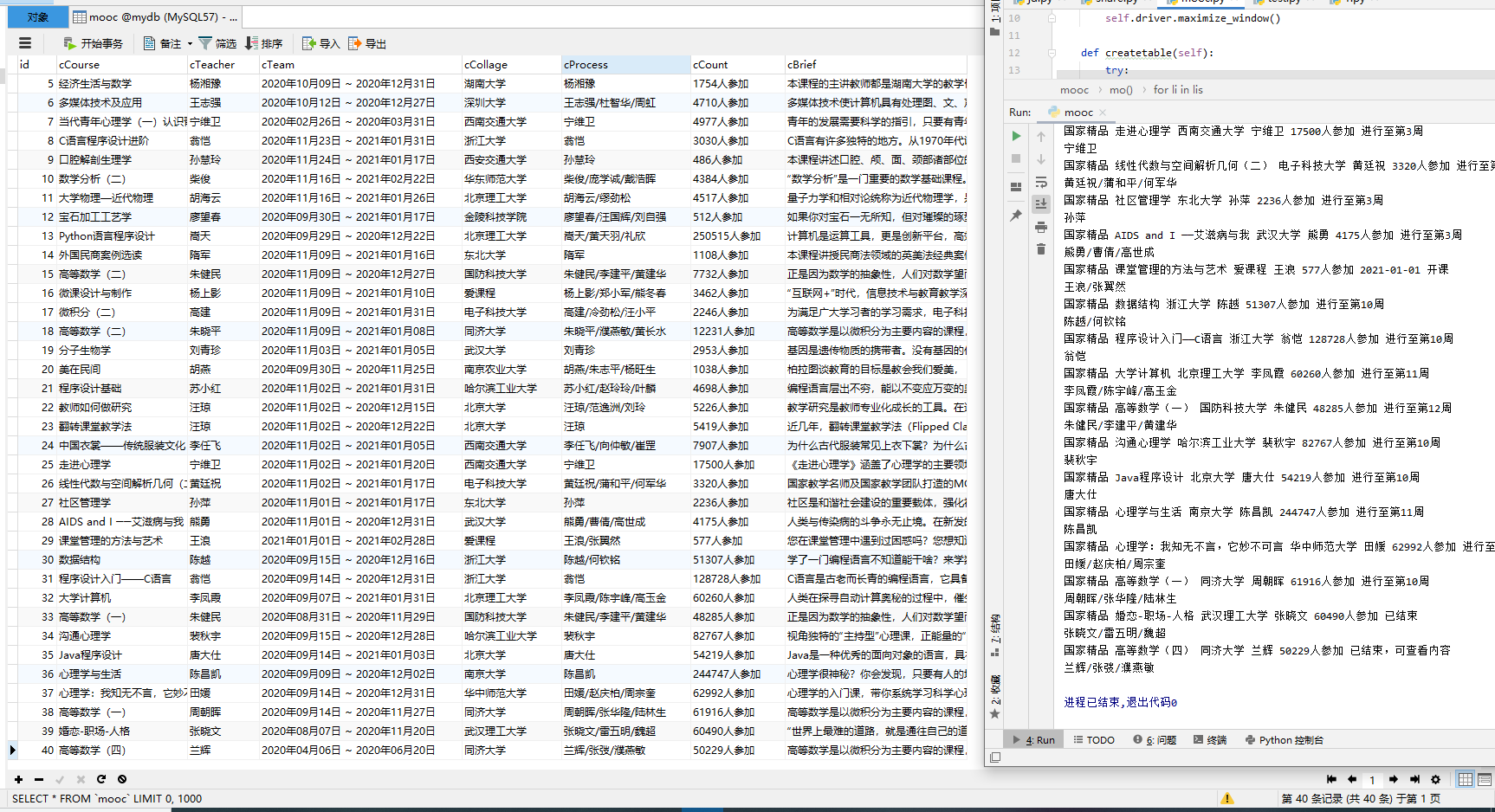

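Results:

Reflections: After turning the page, the course cards could no longer be clicked. A classmate explained that after clicking "next page" the view stays at the bottom of the page, and for some reason the page has to be scrolled back to the top before clicking works again. This is done with "window.scrollTo(document.body.scrollHeight,0)".

In addition, on this MOOC site the "previous page" and "next page" links use the same tag whether they are clickable or not, so I added some extra checks to handle this.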
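
The stop condition can be separated from the browser and checked on its own. The sketch below is only an illustration under assumptions: `has_next_page`, `crawl` and the `pages` data are hypothetical stand-ins for the pager links and course cards, and a loop replaces the recursive call.

```python
def has_next_page(link_texts, count, limit=30):
    """Return True when the last pager link reads '下一页' (next page) and count <= limit."""
    return bool(link_texts) and link_texts[-1] == "下一页" and count <= limit


def crawl(pages, limit=30):
    """Walk the hypothetical pages with a loop and return how many items were seen."""
    count = 0
    index = 0
    while True:
        page = pages[index]
        count += len(page["items"])
        # Stop when the pager offers no next page, the limit is exceeded,
        # or the made-up data simply runs out.
        if not has_next_page(page["links"], count, limit) or index + 1 >= len(pages):
            break
        index += 1
    return count


# Made-up data: the first page offers "下一页", the last page does not.
pages = [
    {"items": ["course a", "course b"], "links": ["上一页", "下一页"]},
    {"items": ["course c"], "links": ["上一页"]},
]
print(crawl(pages))
print(crawl(pages, limit=1))
```

A loop avoids growing the call stack by one frame per page, which may matter when many pages are scraped.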