Original article: Notes on Setting Up Zookeeper-Based HA for a Spark Cluster (Spark集群基于Zookeeper的HA搭建部署笔记)

1. Environment

(1) Operating system: RHEL 6.2, 64-bit

(2) Two nodes: spark1 (192.168.232.147) and spark2 (192.168.232.152)

(3) A Hadoop 2.2 cluster is already installed on both nodes

2. Install Zookeeper

(1) Download Zookeeper: http://apache.claz.org/zookeeper ... keeper-3.4.5.tar.gz

(2) Extract it into the /root/install/ directory

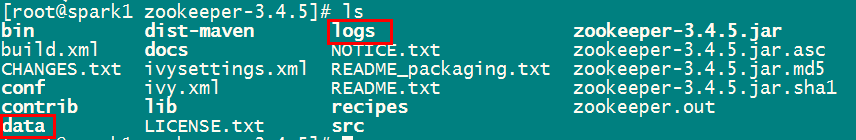

(3) Create two directories: one for data and one for logs
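The following is a minimal sketch of this step. It assumes the directories live under the extracted Zookeeper home, so they match the dataDir and dataLogDir values set in step (4). The function name `make_zk_dirs` is our own and not part of Zookeeper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Create the data and transaction-log directories under a Zookeeper home.
# make_zk_dirs is a hypothetical helper; the default path is the article's.
make_zk_dirs() {
  local zk_home="${1:-/root/install/zookeeper-3.4.5}"
  # -p creates parents as needed and does not fail if the directory exists
  mkdir -p "$zk_home/data" "$zk_home/logs"
}
```

Usage on each node: `make_zk_dirs /root/install/zookeeper-3.4.5`.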

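(4) Configure: go into the conf directory and rename zoo_sample.cfg to zoo.cfg. This step is required, because Zookeeper reads conf/zoo.cfg and ignores zoo_sample.cfg. Then set the following entries: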

- dataDir=/root/install/zookeeper-3.4.5/data

- dataLogDir=/root/install/zookeeper-3.4.5/logs

- server.1=spark1:2888:3888

- server.2=spark2:2888:3888
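The following sketch writes the complete zoo.cfg for this two-node setup. The tickTime, initLimit, syncLimit and clientPort values are the ones shipped in zoo_sample.cfg. clientPort=2181 is also the port that Spark's zookeeper.url uses later. The remaining lines are the ones listed above. The helper name `write_zoo_cfg` is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write conf/zoo.cfg for the two-node ensemble (hypothetical helper).
write_zoo_cfg() {
  local zk_home="$1"
  mkdir -p "$zk_home/conf"
  # Unquoted EOF so $zk_home is expanded into the file
  cat > "$zk_home/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=$zk_home/data
dataLogDir=$zk_home/logs
# server.<myid>=<host>:<quorum port>:<leader-election port>
server.1=spark1:2888:3888
server.2=spark2:2888:3888
EOF
}
```

With only two servers, a majority means both of them. This ensemble therefore keeps working only while both Zookeeper nodes are up. An odd-sized ensemble, such as three nodes, can tolerate one failure.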

(5) In /root/install/zookeeper-3.4.5/data, create a file named myid that contains 1

- cd /root/install/zookeeper-3.4.5/data

- echo 1 > myid
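The spaces around `>` matter. In `echo 1>myid`, the shell reads `1>` as a redirection of file descriptor 1 (stdout). echo then runs with no arguments, and myid receives only a newline. The following sketch writes the id and prints it back so that a wrong value is caught immediately. The helper name `write_myid` is our own.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write a Zookeeper server id into <dataDir>/myid and print what was stored.
write_myid() {
  local data_dir="$1" id="$2"
  # "$id" is an argument to echo here, not a file-descriptor number
  echo "$id" > "$data_dir/myid"
  cat "$data_dir/myid"
}
```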

(6) Copy the entire /root/install/zookeeper-3.4.5 directory to the other node

- scp -r /root/install/zookeeper-3.4.5 root@spark2:/root/install/

(7) Log in to spark2 and change the value in myid to 2

- cd /root/install/zookeeper-3.4.5/data

- echo 2 > myid
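Each node's myid must equal the N in its own `server.N=` line in zoo.cfg. Rather than editing the value by hand after copying, you could look it up from zoo.cfg. The following sketch does this. It assumes the host names in zoo.cfg match each machine's hostname, and the function name `myid_for_host` is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print N from the "server.N=host:port:port" line whose host matches $2.
# Returns non-zero if the host has no server.N entry.
myid_for_host() {
  local cfg="$1" host="$2"
  awk -v h="$host" '
    /^server\.[0-9]+=/ {
      split($0, kv, "=")       # kv[1] = "server.N", kv[2] = "host:2888:3888"
      split(kv[2], addr, ":")  # addr[1] = host
      if (addr[1] == h) { sub(/^server\./, "", kv[1]); print kv[1]; found = 1 }
    }
    END { exit !found }' "$cfg"
}
```

Usage on each node, run from the Zookeeper home: `myid_for_host conf/zoo.cfg "$(hostname)" > data/myid`.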

(8) Start Zookeeper on both spark1 and spark2

- cd /root/install/zookeeper-3.4.5

- bin/zkServer.sh start
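The following sketch starts Zookeeper on every node from a single machine instead of logging in to each one. It assumes passwordless ssh as root between the nodes. The scp in step (6) suggests root access, but key-based login is our assumption. If java is not on the PATH of non-interactive ssh sessions, the remote start may fail. Running the command on each node, as described above, avoids that problem. The function name and the `DRY_RUN` switch are our own.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Run "bin/zkServer.sh start" on each host given after the Zookeeper home.
# DRY_RUN=1 prints the ssh commands instead of executing them.
start_zk_all() {
  local zk_home="$1"; shift
  local host cmd
  for host in "$@"; do
    cmd="cd $zk_home && bin/zkServer.sh start"
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh root@$host '$cmd'"
    else
      ssh "root@$host" "$cmd"
    fi
  done
}
```

Usage: `start_zk_all /root/install/zookeeper-3.4.5 spark1 spark2`.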

(9) Check whether the process is running

- [root@spark2 zookeeper-3.4.5]# bin/zkServer.sh start

- JMX enabled by default

- Using config: /root/install/zookeeper-3.4.5/bin/../conf/zoo.cfg

- Starting zookeeper ... STARTED

- [root@spark2 zookeeper-3.4.5]# jps

- 2490 Jps

- 2479 QuorumPeerMain
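jps only shows that QuorumPeerMain is running. `bin/zkServer.sh status` also reports the server's role on a `Mode:` line. In a working two-node ensemble, one node should report leader and the other follower. The following sketch extracts that role. The helper name `zk_mode` is ours.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Read "zkServer.sh status" output on stdin and print the role
# (leader, follower or standalone). Returns non-zero if there is
# no "Mode:" line, e.g. when the server is not running.
zk_mode() {
  awk '/^Mode:/ { print $2; found = 1 } END { exit !found }'
}
```

Usage on each node: `bin/zkServer.sh status 2>&1 | zk_mode`.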

3. Configure Spark HA

(1) Go into Spark's conf directory and edit spark-env.sh as follows:

- export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=spark1:2181,spark2:2181 -Dspark.deploy.zookeeper.dir=/spark"

- export JAVA_HOME=/root/install/jdk1.7.0_21

- #export SPARK_MASTER_IP=spark1

- #export SPARK_MASTER_PORT=7077

- export SPARK_WORKER_CORES=1

- export SPARK_WORKER_INSTANCES=1

- export SPARK_WORKER_MEMORY=1g
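The three `-D` options are the standalone-mode HA settings:
- `spark.deploy.recoveryMode=ZOOKEEPER` enables Zookeeper-based recovery.
- `spark.deploy.zookeeper.url` lists the ensemble.
- `spark.deploy.zookeeper.dir` is the znode under which Spark stores its recovery state.

SPARK_MASTER_IP is presumably commented out because the same file is copied to both nodes, and a fixed value of spark1 would be wrong on spark2. The following sketch writes the file with the article's values and checks that sourcing it yields exactly three options. This check catches a value that was accidentally broken across lines. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write spark-env.sh with the article's settings (hypothetical helper).
write_spark_env() {
  local conf_dir="$1"
  mkdir -p "$conf_dir"
  # Quoted 'EOF' so nothing is expanded while writing the file
  cat > "$conf_dir/spark-env.sh" <<'EOF'
export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=spark1:2181,spark2:2181 -Dspark.deploy.zookeeper.dir=/spark"
export JAVA_HOME=/root/install/jdk1.7.0_21
export SPARK_WORKER_CORES=1
export SPARK_WORKER_INSTANCES=1
export SPARK_WORKER_MEMORY=1g
EOF
}

# Source the file in a subshell and print one -D option per line.
# $SPARK_DAEMON_JAVA_OPTS is left unquoted on purpose so it splits into words.
show_daemon_opts() {
  ( . "$1/spark-env.sh" && printf '%s\n' $SPARK_DAEMON_JAVA_OPTS )
}
```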

(2) Distribute this configuration file to the other nodes

- scp spark-env.sh root@spark2:/root/install/spark-1.0/conf/

(3) Start the Spark cluster

- [root@spark1 spark-1.0]# sbin/start-all.sh

- starting org.apache.spark.deploy.master.Master, logging to /root/install/spark-1.0/sbin/../logs/spark-root-org.apache.spark.deploy.master.Master-1-spark1.out

- spark1: starting org.apache.spark.deploy.worker.Worker, logging to /root/install/spark-1.0/sbin/../logs/spark-root-org.apache.spark.deploy.worker.Worker-1-spark1.out

- spark2: starting org.apache.spark.deploy.worker.Worker, logging to /root/install/spark-1.0/sbin/../logs/spark-root-org.apache.spark.deploy.worker.Worker-1-spark2.out

(4) Log in to spark2 (192.168.232.152) and run start-master.sh there as well, so that spark2 can take over as master when spark1 (192.168.232.147) goes down

- [root@spark2 spark-1.0]# sbin/start-master.sh

- starting org.apache.spark.deploy.master.Master, logging to /root/install/spark-1.0/sbin/../logs/spark-root-org.apache.spark.deploy.master.Master-1-spark2.out

(5) Check which processes are running on spark1 and spark2

- [root@spark1 spark-1.0]# jps

- 5797 Worker

- 5676 Master

- 6287 Jps

- 2602 QuorumPeerMain

- [root@spark2 spark-1.0]# jps

- 2479 QuorumPeerMain

- 5750 Jps

- 5534 Worker

- 5635 Master
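The following sketch checks a listing like the one above automatically instead of by eye. It takes jps output on stdin and reports any expected daemon that is missing. The function name `require_daemons` is ours.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Read jps output ("<pid> <MainClass>" per line) on stdin and check that
# every daemon named in the arguments is present. Prints "missing: <name>"
# for each absent daemon and returns non-zero if any are missing.
require_daemons() {
  local listing name missing=0
  listing=$(awk '{ print $2 }')
  for name in "$@"; do
    if ! printf '%s\n' "$listing" | grep -qxF "$name"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

At this point, `jps | require_daemons Master Worker QuorumPeerMain` should succeed on both nodes. After stop-master.sh in the HA test, the same check on spark1 should print `missing: Master`.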

4. Test whether HA works

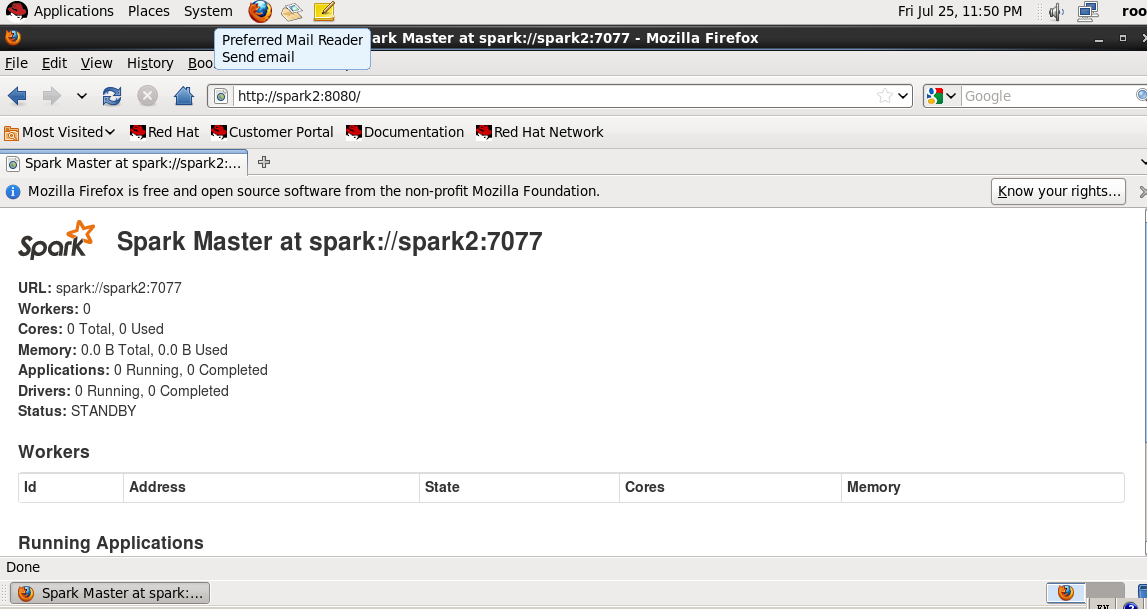

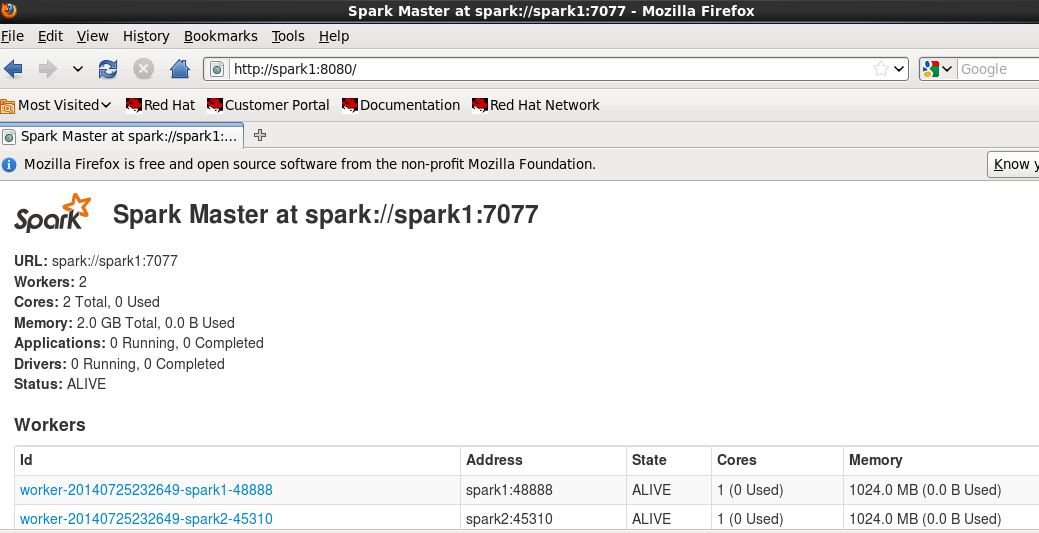

(1) First check the state of both nodes: spark1 is currently running the active master, and spark2 is on standby

(2) Stop the master service on spark1

- [root@spark1 spark-1.0]# sbin/stop-master.sh

- stopping org.apache.spark.deploy.master.Master

- [root@spark1 spark-1.0]# jps

- 5797 Worker

- 6373 Jps

- 2602 QuorumPeerMain

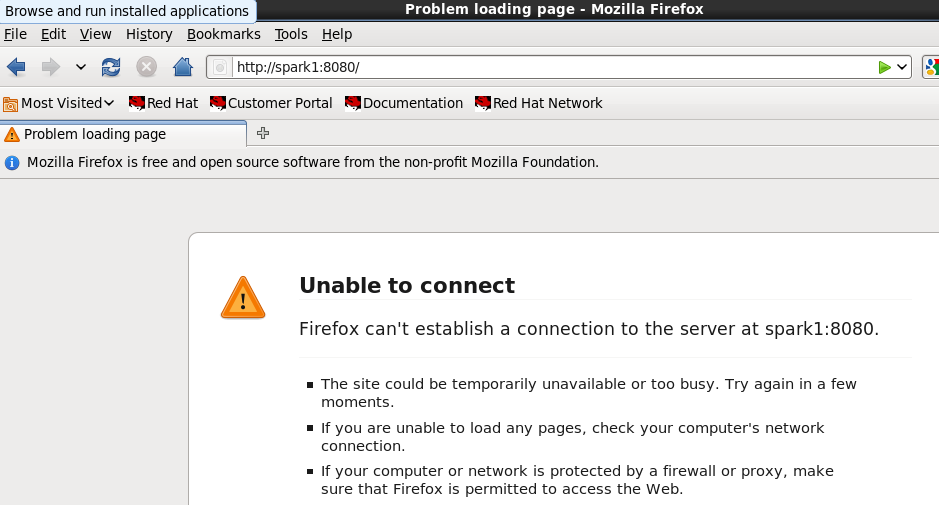

(3) Open the master's port 8080 (on spark1) in a browser to see whether it is still alive. As the screenshot below shows, the master is down

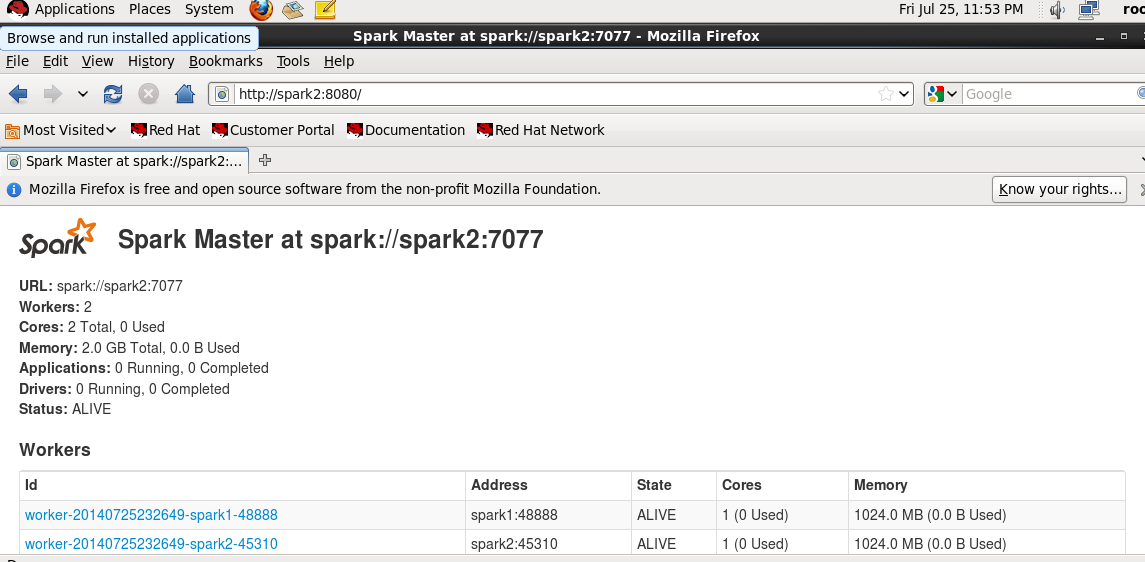

(4) Then open spark2's page in a browser. The figure below shows that spark2 has been switched over to master
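The switchover is not instant. The standby master first has to win the Zookeeper leader election and then recover the saved state. The Spark standalone documentation estimates about one to two minutes for this. Instead of refreshing the browser, you can poll until the page reports the new state. The following sketch does this with a generic polling function. The name `wait_for` and the `WAIT_INTERVAL` variable are our own. The usage line assumes the master web page contains the word ALIVE once spark2 is active, so confirm this against your own page.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Re-run a command until its output contains PATTERN, checking every
# WAIT_INTERVAL seconds (default 5) and giving up after TIMEOUT seconds.
wait_for() {
  local timeout="$1" pattern="$2"; shift 2
  local interval="${WAIT_INTERVAL:-5}" waited=0 out
  while :; do
    # Capture output first: piping straight into "grep -q" under pipefail
    # can misreport a match if the producer is killed by SIGPIPE.
    out=$("$@" 2>/dev/null || true)
    case "$out" in
      *"$pattern"*) return 0 ;;
    esac
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for '$pattern'" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```

Possible usage (an assumption about the page content, as noted above): `wait_for 180 ALIVE curl -s http://192.168.232.152:8080`.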