032: A MySQL High-Availability Architecture Based on Consul and MGR

I. Consul

1. Introduction to Consul

Consul is an open-source tool from HashiCorp, written in Go, that provides service discovery and configuration for distributed systems.

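Advantages of Consul:

- Multi-datacenter service discovery (DNS + HTTP)

- Health checks

- Microservices within a single datacenter

- Built-in Web UI for editing K/V data and viewing health-check status

- Hot configuration reload, with no service restart

- Task orchestration support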

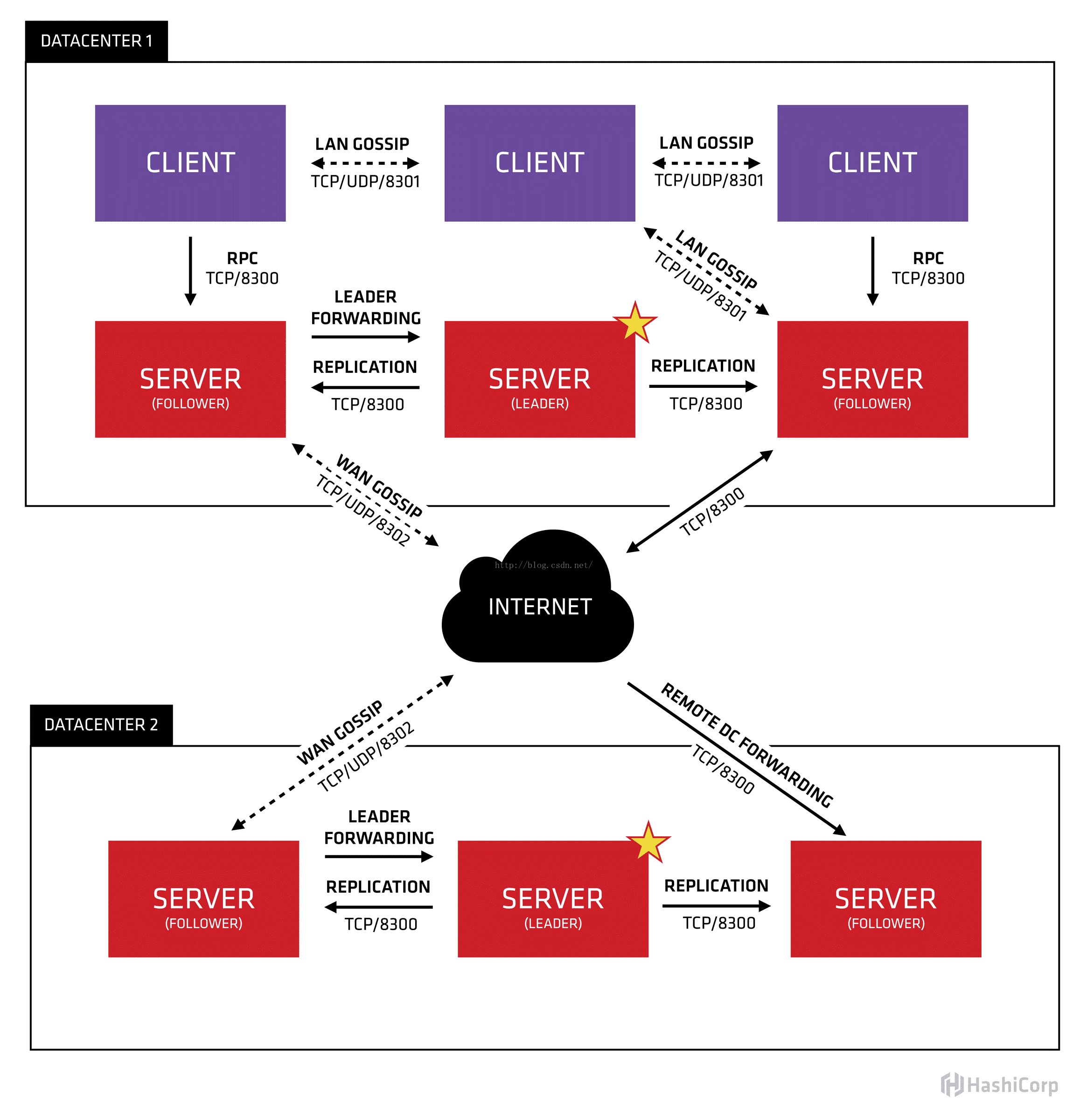

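Basic architecture:

- A Consul cluster consists of nodes that run a Consul agent. There are two roles in a cluster: server and client.

- The server and client roles have nothing to do with the application services running on the cluster; they are a distinction at the Consul level only.

- Consul server: maintains the cluster state, keeps the data consistent, and responds to RPC requests. The official recommendation is to run at least 3 Consul servers. The servers elect a leader among themselves using the Raft protocol, and the Consul data on the server nodes is kept strongly consistent. Servers talk to local clients over the LAN and to other datacenters over the WAN.

- Consul client: maintains only its own state and forwards HTTP and DNS interface requests to the servers.

- Consul supports multiple datacenters. Each datacenter must run its own Consul cluster. Datacenters communicate with each other through the gossip protocol, and consistency within each datacenter is achieved with the Raft algorithm.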
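The recommendation to run three or more servers follows from Raft's majority rule: a group of n voting servers needs n/2 + 1 (integer division) of them reachable to elect a leader and commit writes. The sketch below only illustrates that arithmetic; the function names are hypothetical helpers, not part of Consul.

```shell
#!/bin/bash
# Majority (quorum) size for a Raft group of n voting servers.
raft_quorum() {
    local n=$1
    echo $(( n / 2 + 1 ))
}

# Number of server failures the group can survive while keeping a quorum.
raft_fault_tolerance() {
    local n=$1
    echo $(( n - (n / 2 + 1) ))
}

for n in 1 3 5; do
    echo "servers=$n quorum=$(raft_quorum "$n") tolerated_failures=$(raft_fault_tolerance "$n")"
done
```

With the three servers used in this article, the quorum is 2, so the cluster keeps working when one server is lost.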

2. Environment preparation

| host | ip | software | consul.json | service_json_01 | service_json_02 | script01 | script02 |
|---|---|---|---|---|---|---|---|
| node01 | 192.168.222.171 | mysql | client.json | mysql_mgr_write_3306.json | mysql_mgr_read_3306.json | check_mysql_mgr_master.sh | check_mysql_mgr_slave.sh |
| node02 | 192.168.222.172 | mysql | client.json | mysql_mgr_write_3306.json | mysql_mgr_read_3306.json | check_mysql_mgr_master.sh | check_mysql_mgr_slave.sh |
| node03 | 192.168.222.173 | mysql | client.json | mysql_mgr_write_3306.json | mysql_mgr_read_3306.json | check_mysql_mgr_master.sh | check_mysql_mgr_slave.sh |
| node04 | 192.168.222.174 | mysql | client.json | mysql_mgr_write_3306.json | mysql_mgr_read_3306.json | check_mysql_mgr_master.sh | check_mysql_mgr_slave.sh |
| node05 | 192.168.222.175 | mysql | | | | | |
| node06 | 192.168.222.176 | mysql | | | | | |
| node09 | 192.168.222.179 | | server.json | | | | |
| node10 | 192.168.222.180 | | server.json | | | | |
| node11 | 192.168.222.181 | | server.json | | | | |
| node12 | 192.168.222.182 | proxysql | client.json | proxysql.json | | proxysql_check.sh | |
| audit01 | 192.168.222.199 | proxysql | client.json | proxysql.json | | proxysql_check.sh | |

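- node05 and node06 each run a single-host, multi-instance MGR deployment on ports 33061 to 33065.

3. Installing Consul

Ansible was used for the installation here, so the details are omitted.

- The steps are:

-- Install consul on every host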

cd /software

wget https://releases.hashicorp.com/consul/1.4.0/consul_1.4.0_linux_amd64.zip

unzip /software/consul_1.4.0_linux_amd64.zip

cp /software/consul /usr/local/bin/

consul --version

-- On every host, create /etc/consul.d/ for configuration files, /etc/consul.d/scripts/ for the MySQL health-check scripts, and /data/consul/ for data.

mkdir -p /etc/consul.d/scripts && mkdir -p /data/consul/

- Directory layout

[root@node12 consul.d]# tree /etc/consul.d

/etc/consul.d

├── client.json

├── proxysql.json

└── scripts

└── proxysql_check.sh

1 directory, 3 files

[root@node12 consul.d]# tree /data/consul/ -- /data/consul/ is an empty directory initially

/data/consul/

├── checkpoint-signature

├── consul.log

├── node-id

├── proxy

│ └── snapshot.json

├── serf

│ └── local.snapshot

└── services

3 directories, 5 files

[root@node12 consul.d]#

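4. Consul configuration files

- Consul server configuration file

On each of the three servers (node09, node10, node11), set advertise_addr (the host IP) and node_name (the hostname) to that host's own values. Because "domain" is set to "gczheng", service names resolve under the suffix service.gczheng.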

[root@node09 ~]# cat /etc/consul.d/server.json

{

"addresses":{

"http":"0.0.0.0",

"dns":"0.0.0.0"

},

"bind_addr":"0.0.0.0",

"advertise_addr":"192.168.222.179",

"bootstrap_expect":3,

"datacenter":"dc1",

"data_dir":"/data/consul",

"dns_config":{

"allow_stale":true,

"max_stale":"87600h",

"node_ttl":"0s",

"service_ttl":{

"*":"0s"

}

},

"domain":"gczheng",

"enable_syslog":false,

"leave_on_terminate":false,

"log_level":"info",

"node_name":"node09.test.com",

"node_meta":{

"location":"gczheng"

},

"performance":{

"raft_multiplier":1

},

"ports":{

"http":8500,

"dns":53

},

"reconnect_timeout":"72h",

"recursors":[

"192.168.222.179",

"192.168.222.180",

"192.168.222.181"

],

"retry_join":[

"192.168.222.179",

"192.168.222.180",

"192.168.222.181"

],

"retry_interval":"10s",

"server":true,

"skip_leave_on_interrupt":true,

"ui":true

}

[root@node09 ~]#

- Consul client configuration file

Set bind_addr to each host's own IP (node01, node02, node03, node04, node05, node06, node12, audit01).

[root@node01 consul.d]# cat /etc/consul.d/client.json

{

"data_dir": "/data/consul",

"enable_script_checks": true,

"bind_addr": "192.168.222.171",

"retry_join": ["192.168.222.179"],

"retry_interval": "30s",

"rejoin_after_leave": true,

"start_join": ["192.168.222.179"],

"datacenter": "dc1"

}

- ProxySQL service definition

[root@audit01 consul.d]# cat proxysql.json

{

"services":

[{

"id": "proxy1",

"name": "proxysql",

"address": "",

"tags": ["mysql_proxy"],

"port": 6033,

"check": {

"args": ["/etc/consul.d/scripts/proxysql_check.sh", "192.168.222.199"],

"interval": "5s"

}

},

{

"id": "proxy2",

"name": "proxysql",

"address": "",

"tags": ["mysql_proxy"],

"port": 6033,

"check":

{

"args": ["/etc/consul.d/scripts/proxysql_check.sh", "192.168.222.182"],

"interval": "5s"

}

}]

}

- mysql_mgr_write_3306.json

[root@node02 consul.d]# cat mysql_mgr_write_3306.json

{

"services": [{

"id": "mysql_write",

"name": "mysql_3306_w",

"address": "",

"port": 3306,

"enable_tag_override": false,

"checks": [{

"id": "mysql_w_c_01",

"name": "MySQL Write Check",

"args": ["/etc/consul.d/scripts/check_mysql_mgr_master.sh", "3306"],

"interval": "15s",

"timeout": "1s",

"service_id": "mysql_w"

}]

}]

}

[root@node02 consul.d]#

- mysql_mgr_read_3306.json

[root@node02 consul.d]# cat mysql_mgr_read_3306.json

{

"services": [{

"id": "mysql_read",

"name": "mysql_3306_r",

"address": "",

"port": 3306,

"enable_tag_override": false,

"checks": [{

"id": "mysql_r_c_01",

"name": "MySQL read Check",

"args": ["/etc/consul.d/scripts/check_mysql_mgr_slave.sh", "3306"],

"interval": "15s",

"timeout": "1s",

"service_id": "mysql_r"

}]

}]

}
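The definitions above are fixed to port 3306, while the environment table also mentions multi-instance hosts on ports 33061 to 33065. As a rough sketch only, a small generator could print one write-service definition per port. The function name and the `mysql_write_<port>`, `mysql_<port>_w`, and `mysql_w_c_<port>` naming patterns are assumptions made for illustration; the check script path is the one used in this article. The nested check carries no `service_id` because it sits inside the service it belongs to.

```shell
#!/bin/bash
# Hypothetical generator: print a write-service definition for one MySQL port.
# Service and check names are illustrative, not taken from the article.
mgr_write_service_json() {
    local port=$1
    cat <<EOF
{
  "services": [{
    "id": "mysql_write_${port}",
    "name": "mysql_${port}_w",
    "address": "",
    "port": ${port},
    "enable_tag_override": false,
    "checks": [{
      "id": "mysql_w_c_${port}",
      "name": "MySQL Write Check ${port}",
      "args": ["/etc/consul.d/scripts/check_mysql_mgr_master.sh", "${port}"],
      "interval": "15s",
      "timeout": "1s"
    }]
  }]
}
EOF
}

# Example: print the definition for the instance on port 33061.
mgr_write_service_json 33061
```

The output can be redirected into a file under /etc/consul.d/ and checked with `consul validate /etc/consul.d` before running `consul reload`.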

5. Consul service check scripts

- check_mysql_mgr_master.sh

#!/bin/bash

port=$1

user="gcdb"

passwod="iforgot"

comm="/usr/local/mysql/bin/mysql -u$user -h127.0.0.1 -P $port -p$passwod"

value=`$comm -Nse "select 1"`

primary_member=`$comm -Nse "select variable_value from performance_schema.global_status WHERE VARIABLE_NAME= 'group_replication_primary_member'"`

server_uuid=`$comm -Nse "select variable_value from performance_schema.global_variables where VARIABLE_NAME='server_uuid';"`

# Check whether MySQL is alive

if [ -z "$value" ]

then

echo "mysql $port is down....."

exit 2

fi

# Check whether this node is an ONLINE group member

node_state=`$comm -Nse "select MEMBER_STATE from performance_schema.replication_group_members where MEMBER_ID='$server_uuid'"`

if [ "$node_state" != "ONLINE" ]

then

echo "MySQL $port state is not online...."

exit 2

fi

# Check the MGR mode first, then whether this node is the primary

if [ -z "$primary_member" ]

then

echo "primary_member is empty,MGR is multi-primary mode"

exit 0

else

if [[ $server_uuid == $primary_member ]]

then

echo "MySQL $port Instance is master ........"

exit 0

else

echo "MySQL $port Instance is slave ........"

exit 2

fi

fi

# Check whether this node is the primary (never reached: both branches above already exit)

if [[ $server_uuid == $primary_member ]]

then

echo "MySQL $port Instance is master ........"

exit 0

else

echo "MySQL $port Instance is slave ........"

exit 2

fi

- check_mysql_mgr_slave.sh

#!/bin/bash

port=$1

user="gcdb"

passwod="iforgot"

comm="/usr/local/mysql/bin/mysql -u$user -h127.0.0.1 -P $port -p$passwod"

value=`$comm -Nse "select 1"`

primary_member=`$comm -Nse "select variable_value from performance_schema.global_status WHERE VARIABLE_NAME= 'group_replication_primary_member'"`

server_uuid=`$comm -Nse "select variable_value from performance_schema.global_variables where VARIABLE_NAME='server_uuid';"`

# Check whether MySQL is alive

if [ -z "$value" ]

then

echo "mysql $port is down....."

exit 2

fi

# Check whether this node is an ONLINE group member

node_state=`$comm -Nse "select MEMBER_STATE from performance_schema.replication_group_members where MEMBER_ID='$server_uuid'"`

if [ "$node_state" != "ONLINE" ]

then

echo "MySQL $port state is not online...."

exit 2

fi

# Check the MGR mode first, then whether this node is the primary

if [ -z "$primary_member" ]

then

echo "primary_member is empty,MGR is multi-primary mode"

exit 2

else

# Check whether this node is the primary

if [[ $server_uuid != $primary_member ]]

then

echo "MySQL $port Instance is slave ........"

exit 0

else

node_num=`$comm -Nse "select count(*) from performance_schema.replication_group_members"`

# If the group has no secondaries at all, the primary also registers for the read service.

if [ "$node_num" -eq 1 ]

then

echo "MySQL $port Instance is slave ........"

exit 0

else

echo "MySQL $port Instance is master ........"

exit 2

fi

fi

fi

Place both scripts under /etc/consul.d/scripts/ and make them executable.

[root@node01 ~]# chmod 755 /etc/consul.d/scripts/*

[root@node01 ~]# ll /etc/consul.d/scripts/ |grep check_mysql_mgr

-rwxr-xr-x 1 root root 1331 Dec 4 15:05 check_mysql_mgr_master.sh

-rwxr-xr-x 1 root root 1489 Dec 4 15:05 check_mysql_mgr_slave.sh
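Consul treats a script check's exit code 0 as passing, 1 as warning, and any other code as critical, which is why both scripts exit with 0 or 2. The decision rules of the two scripts can be summarized as pure functions over values already fetched from MySQL, which makes them easy to test without a running group. This is only a sketch: the function names and argument order are hypothetical and are not part of the original scripts.

```shell
#!/bin/bash
# Hypothetical helpers: return 0 (passing) or 2 (critical) following the
# same rules as check_mysql_mgr_master.sh and check_mysql_mgr_slave.sh.

# Args: <result of "select 1"> <MEMBER_STATE> <server_uuid> <primary_member>
mgr_write_check() {
    local alive=$1 node_state=$2 server_uuid=$3 primary_member=$4
    if [ -z "$alive" ]; then return 2; fi                    # MySQL did not answer
    if [ "$node_state" != "ONLINE" ]; then return 2; fi      # not an ONLINE member
    if [ -z "$primary_member" ]; then return 0; fi           # multi-primary mode
    if [ "$server_uuid" = "$primary_member" ]; then return 0; fi  # this node is the primary
    return 2                                                 # this node is a secondary
}

# Args: as above, plus <number of rows in replication_group_members>
mgr_read_check() {
    local alive=$1 node_state=$2 server_uuid=$3 primary_member=$4 member_count=$5
    if [ -z "$alive" ]; then return 2; fi
    if [ "$node_state" != "ONLINE" ]; then return 2; fi
    if [ -z "$primary_member" ]; then return 2; fi           # multi-primary: no read service
    if [ "$server_uuid" != "$primary_member" ]; then return 0; fi  # secondary serves reads
    if [ "$member_count" = "1" ]; then return 0; fi          # lone primary also serves reads
    return 2
}
```

In multi-primary mode every ONLINE node passes the write check and fails the read check, so only mysql_3306_w has healthy instances there.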

- proxysql_check.sh

#!/bin/bash

host=$1

user="gcdb"

passwod="iforgot"

port=6033

alive_nums=`/usr/local/mysql/bin/mysqladmin ping -h$host -P$port -u$user -p$passwod |grep "mysqld is alive" |wc -l`

# Check whether ProxySQL answered the ping

if [ $alive_nums -eq 1 ]

then

echo "$host mysqld is alive........"

exit 0

else

echo "$host mysqld is failed........"

exit 2

fi

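6. Starting Consul

- Start the Consul servers (node09, node10, node11); the clients are started with the same command

nohup consul agent -config-dir=/etc/consul.d > /data/consul/consul.log 2>&1 &

node09 starts and joins the cluster

-- After the three server nodes start, node09 is elected leader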

[root@node09 ~]# consul operator raft list-peers

Node ID Address State Voter RaftProtocol

node09.test.com fcca17ba-8ddf-ec76-4931-5f5bfa043e05 192.168.222.179:8300 leader true 3

node10.test.com c95847a4-a305-ac21-4ae5-21fd16bb39c2 192.168.222.180:8300 follower true 3

node11.test.com 8d8f6757-0ef1-79a7-d3ed-4b7e04430f88 192.168.222.181:8300 follower true 3

[root@node09 ~]#

-- After all clients start, they join the cluster automatically and are listed with type client

[root@node09 ~]# consul members

Node Address Status Type Build Protocol DC Segment

node09.test.com 192.168.222.179:8301 alive server 1.4.0 2 dc1 <all>

node10.test.com 192.168.222.180:8301 alive server 1.4.0 2 dc1 <all>

node11.test.com 192.168.222.181:8301 alive server 1.4.0 2 dc1 <all>

node01.test.com 192.168.222.171:8301 alive client 1.4.0 2 dc1 <default>

node02.test.com 192.168.222.172:8301 alive client 1.4.0 2 dc1 <default>

node03.test.com 192.168.222.173:8301 alive client 1.4.0 2 dc1 <default>

node04.test.com 192.168.222.174:8301 alive client 1.4.0 2 dc1 <default>

node05.test.com 192.168.222.175:8301 alive client 1.4.0 2 dc1 <default>

[root@node09 ~]#

[root@node11 ~]# cat /data/consul/consul.log

bootstrap_expect > 0: expecting 3 servers

==> Starting Consul agent...

==> Consul agent running!

Version: 'v1.4.0'

Node ID: 'a1b27430-1a44-5d63-3af8-ec4a616803cc'

Node name: 'node11.test.com'

Datacenter: 'dc1' (Segment: '<all>')

Server: true (Bootstrap: false)

Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, gRPC: -1, DNS: 53)

Cluster Addr: 192.168.222.181 (LAN: 8301, WAN: 8302)

Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false

==> Log data will now stream in as it occurs:

2018/12/03 16:27:39 [WARN] agent: Node name "node11.test.com" will not be discoverable via DNS due to invalid characters. Valid characters include all alpha-numerics and dashes.

2018/12/03 16:27:39 [INFO] raft: Initial configuration (index=0): []

2018/12/03 16:27:39 [INFO] raft: Node at 192.168.222.181:8300 [Follower] entering Follower state (Leader: "")

2018/12/03 16:27:39 [INFO] serf: EventMemberJoin: node11.test.com.dc1 192.168.222.181

2018/12/03 16:27:39 [INFO] serf: EventMemberJoin: node11.test.com 192.168.222.181

2018/12/03 16:27:39 [INFO] consul: Adding LAN server node11.test.com (Addr: tcp/192.168.222.181:8300) (DC: dc1)

2018/12/03 16:27:39 [INFO] agent: Started DNS server 0.0.0.0:53 (udp)

2018/12/03 16:27:39 [WARN] agent/proxy: running as root, will not start managed proxies

2018/12/03 16:27:39 [INFO] consul: Handled member-join event for server "node11.test.com.dc1" in area "wan"

2018/12/03 16:27:39 [INFO] agent: Started DNS server 0.0.0.0:53 (tcp)

2018/12/03 16:27:39 [INFO] agent: Started HTTP server on [::]:8500 (tcp)

2018/12/03 16:27:39 [INFO] agent: started state syncer

2018/12/03 16:27:39 [INFO] agent: Retry join LAN is supported for: aliyun aws azure digitalocean gce k8s os packet scaleway softlayer triton vsphere

2018/12/03 16:27:39 [INFO] agent: Joining LAN cluster...

2018/12/03 16:27:39 [INFO] agent: (LAN) joining: [192.168.222.179 192.168.222.180 192.168.222.181]

2018/12/03 16:27:39 [INFO] serf: EventMemberJoin: node10.test.com 192.168.222.180

2018/12/03 16:27:39 [WARN] memberlist: Refuting a suspect message (from: node11.test.com)

2018/12/03 16:27:39 [INFO] consul: Adding LAN server node10.test.com (Addr: tcp/192.168.222.180:8300) (DC: dc1)

- Start the Consul clients (node01, node02, node03, node04, node05, node06)

-- Run the start command on each host

shell> nohup consul agent -config-dir=/etc/consul.d > /data/consul/consul.log 2>&1 &

-- Startup log on node01

[root@node01 ~]# egrep -v 'ERR|WARN' /data/consul/consul.log

==> Starting Consul agent...

==> Joining cluster...

Join completed. Synced with 1 initial agents

==> Consul agent running!

Version: 'v1.4.0'

Node ID: 'feb4c253-f861-fbe0-10c8-50ff7bbbbf17'

Node name: 'node01.test.com'

Datacenter: 'dc1' (Segment: '')

Server: false (Bootstrap: false)

Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, gRPC: -1, DNS: 8600)

Cluster Addr: 192.168.222.171 (LAN: 8301, WAN: 8302)

Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false

==> Log data will now stream in as it occurs:

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node01.test.com 192.168.222.171

2018/12/03 15:30:04 [INFO] serf: Attempting re-join to previously known node: node04.test.com: 192.168.222.174:8301

2018/12/03 15:30:04 [INFO] agent: Started DNS server 127.0.0.1:8600 (udp)

2018/12/03 15:30:04 [INFO] agent: Started DNS server 127.0.0.1:8600 (tcp)

2018/12/03 15:30:04 [INFO] agent: Started HTTP server on 127.0.0.1:8500 (tcp)

2018/12/03 15:30:04 [INFO] agent: (LAN) joining: [192.168.222.179]

2018/12/03 15:30:04 [INFO] agent: Retry join LAN is supported for: aliyun aws azure digitalocean gce k8s os packet scaleway softlayer triton vsphere

2018/12/03 15:30:04 [INFO] agent: Joining LAN cluster...

2018/12/03 15:30:04 [INFO] agent: (LAN) joining: [192.168.222.179]

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node09.test.com 192.168.222.179

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node11.test.com 192.168.222.181

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node10.test.com 192.168.222.180

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node04.test.com 192.168.222.174

2018/12/03 15:30:04 [INFO] consul: adding server node09.test.com (Addr: tcp/192.168.222.179:8300) (DC: dc1)

2018/12/03 15:30:04 [INFO] consul: adding server node11.test.com (Addr: tcp/192.168.222.181:8300) (DC: dc1)

2018/12/03 15:30:04 [INFO] consul: adding server node10.test.com (Addr: tcp/192.168.222.180:8300) (DC: dc1)

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node05.test.com 192.168.222.175

2018/12/03 15:30:04 [INFO] serf: Re-joined to previously known node: node04.test.com: 192.168.222.174:8301

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node03.test.com 192.168.222.173

2018/12/03 15:30:04 [INFO] serf: EventMemberJoin: node02.test.com 192.168.222.172

2018/12/03 15:30:04 [INFO] agent: (LAN) joined: 1 Err: <nil>

2018/12/03 15:30:04 [INFO] agent: started state syncer

2018/12/03 15:30:04 [INFO] agent: (LAN) joined: 1 Err: <nil>

2018/12/03 15:30:04 [INFO] agent: Join LAN completed. Synced with 1 initial agents

2018/12/03 15:30:04 [INFO] agent: Synced node info

2018/12/03 15:30:05 [INFO] agent: Caught signal: hangup

2018/12/03 15:30:05 [INFO] agent: Reloading configuration...

[root@node01 ~]# consul members

Node Address Status Type Build Protocol DC Segment

node09.test.com 192.168.222.179:8301 alive server 1.4.0 2 dc1 <all>

node10.test.com 192.168.222.180:8301 alive server 1.4.0 2 dc1 <all>

node11.test.com 192.168.222.181:8301 alive server 1.4.0 2 dc1 <all>

node01.test.com 192.168.222.171:8301 alive client 1.4.0 2 dc1 <default>

node02.test.com 192.168.222.172:8301 alive client 1.4.0 2 dc1 <default>

node03.test.com 192.168.222.173:8301 alive client 1.4.0 2 dc1 <default>

node04.test.com 192.168.222.174:8301 alive client 1.4.0 2 dc1 <default>

node05.test.com 192.168.222.175:8301 alive client 1.4.0 2 dc1 <default>

[root@node01 ~]#

After changing the Consul configuration, reload it:

-- Reload consul

[root@node01 ~]# consul reload

Configuration reload triggered

[root@node01 ~]#

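II. Setting up MGR

1. MGR configuration

- On node05, use Yang Jianrong's quick MGR setup script to configure a multi-instance group (one primary, four secondaries).

- On node01, node02, node03, and node04, build MGR manually (multi-primary mode).

- The manual setup is left to the reader.

- Default MGR accounts

GRANT REPLICATION SLAVE ON *.* TO rpl_user@'%' IDENTIFIED BY 'rpl_pass';

GRANT ALL ON *.* TO 'gcdb'@'%' IDENTIFIED BY 'iforgot';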

2. Checking MGR

After startup, the multi-primary group (node01, node02, node03, node04) looks like this:

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

| group_replication_applier | 30955199-ebdc-11e8-ac1f-005056ab820e | node04.test.com | 3306 | ONLINE |

| group_replication_applier | 8770cc17-ebdb-11e8-981b-005056ab9fa5 | node01.test.com | 3306 | ONLINE |

| group_replication_applier | c38416c3-ebd4-11e8-9b2c-005056aba7b5 | node03.test.com | 3306 | ONLINE |

| group_replication_applier | e5dba457-f6d5-11e8-97ca-005056abaf95 | node02.test.com | 3306 | ONLINE |

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

4 rows in set (0.00 sec)

mysql> SELECT member_id,member_host,member_port,member_state,IF (global_status.variable_name IS NOT NULL,'primary','secondary') AS member_role FROM PERFORMANCE_SCHEMA.replication_group_members LEFT JOIN PERFORMANCE_SCHEMA.global_status ON global_status.variable_name='group_replication_primary_member' AND global_status.variable_value=replication_group_members.member_id;

+--------------------------------------+-----------------+-------------+--------------+-------------+

| member_id | member_host | member_port | member_state | member_role |

+--------------------------------------+-----------------+-------------+--------------+-------------+

| 30955199-ebdc-11e8-ac1f-005056ab820e | node04.test.com | 3306 | ONLINE | secondary |

| 8770cc17-ebdb-11e8-981b-005056ab9fa5 | node01.test.com | 3306 | ONLINE | secondary |

| c38416c3-ebd4-11e8-9b2c-005056aba7b5 | node03.test.com | 3306 | ONLINE | secondary |

| e5dba457-f6d5-11e8-97ca-005056abaf95 | node02.test.com | 3306 | ONLINE | secondary |

+--------------------------------------+-----------------+-------------+--------------+-------------+

4 rows in set (0.00 sec)

mysql>

After startup, the group on node05 (one primary, four secondaries) looks like this:

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

| group_replication_applier | 0ea53bea-ec72-11e8-913c-005056ab74f8 | node05.test.com | 33062 | ONLINE |

| group_replication_applier | 331664a5-ec72-11e8-a6f0-005056ab74f8 | node05.test.com | 33063 | ONLINE |

| group_replication_applier | 54f7be9e-ec72-11e8-ba6c-005056ab74f8 | node05.test.com | 33064 | ONLINE |

| group_replication_applier | 779b4473-ec72-11e8-8f08-005056ab74f8 | node05.test.com | 33065 | ONLINE |

| group_replication_applier | ee039eb5-ec71-11e8-bd33-005056ab74f8 | node05.test.com | 33061 | ONLINE |

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

5 rows in set (0.00 sec)

mysql> select member_id,member_host,member_port,member_state,if (global_status.variable_name is not null,'primary','secondary') as member_role from performance_schema.replication_group_members left join performance_schema.global_status on global_status.variable_name='group_replication_primary_member' and global_status.variable_value=replication_group_members.member_id;

+--------------------------------------+-----------------+-------------+--------------+-------------+

| member_id | member_host | member_port | member_state | member_role |

+--------------------------------------+-----------------+-------------+--------------+-------------+

| 331664a5-ec72-11e8-a6f0-005056ab74f8 | node05.test.com | 33063 | ONLINE | primary |

| 0ea53bea-ec72-11e8-913c-005056ab74f8 | node05.test.com | 33062 | ONLINE | secondary |

| 54f7be9e-ec72-11e8-ba6c-005056ab74f8 | node05.test.com | 33064 | ONLINE | secondary |

| 779b4473-ec72-11e8-8f08-005056ab74f8 | node05.test.com | 33065 | ONLINE | secondary |

| ee039eb5-ec71-11e8-bd33-005056ab74f8 | node05.test.com | 33061 | ONLINE | secondary |

+--------------------------------------+-----------------+-------------+--------------+-------------+

5 rows in set (0.00 sec)

mysql> create database dbtest;

Query OK, 1 row affected (0.16 sec)

mysql> create table dbtest.t2(id int(4)primary key not null auto_increment,nums int(20) not null);

Query OK, 0 rows affected (0.17 sec)

mysql> insert into dbtest.t2(nums) values(1),(2),(3),(4),(5);

Query OK, 5 rows affected (0.05 sec)

Records: 5 Duplicates: 0 Warnings: 0

mysql> update dbtest.t2 set nums=100 where id =3; -- ids advance in steps of 7, so no row has id=3 and nothing is updated

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

mysql> delete from dbtest.t2 where id =4; -- ids advance in steps of 7, so no row has id=4 and nothing is deleted

Query OK, 0 rows affected (0.00 sec)

mysql> select * from dbtest.t2;

+----+------+

| id | nums |

+----+------+

| 7 | 1 | -- ids step by 7 because group_replication_auto_increment_increment=7

| 14 | 2 |

| 21 | 3 |

| 28 | 4 |

| 35 | 5 |

+----+------+

5 rows in set (0.00 sec)

mysql> create database test;

Query OK, 1 row affected (0.09 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| dbtest |

| mysql |

| performance_schema |

| sys |

| test |

+--------------------+

6 rows in set (0.00 sec)

mysql>

III. Consul tests

1. MGR (multi-primary mode) + Consul

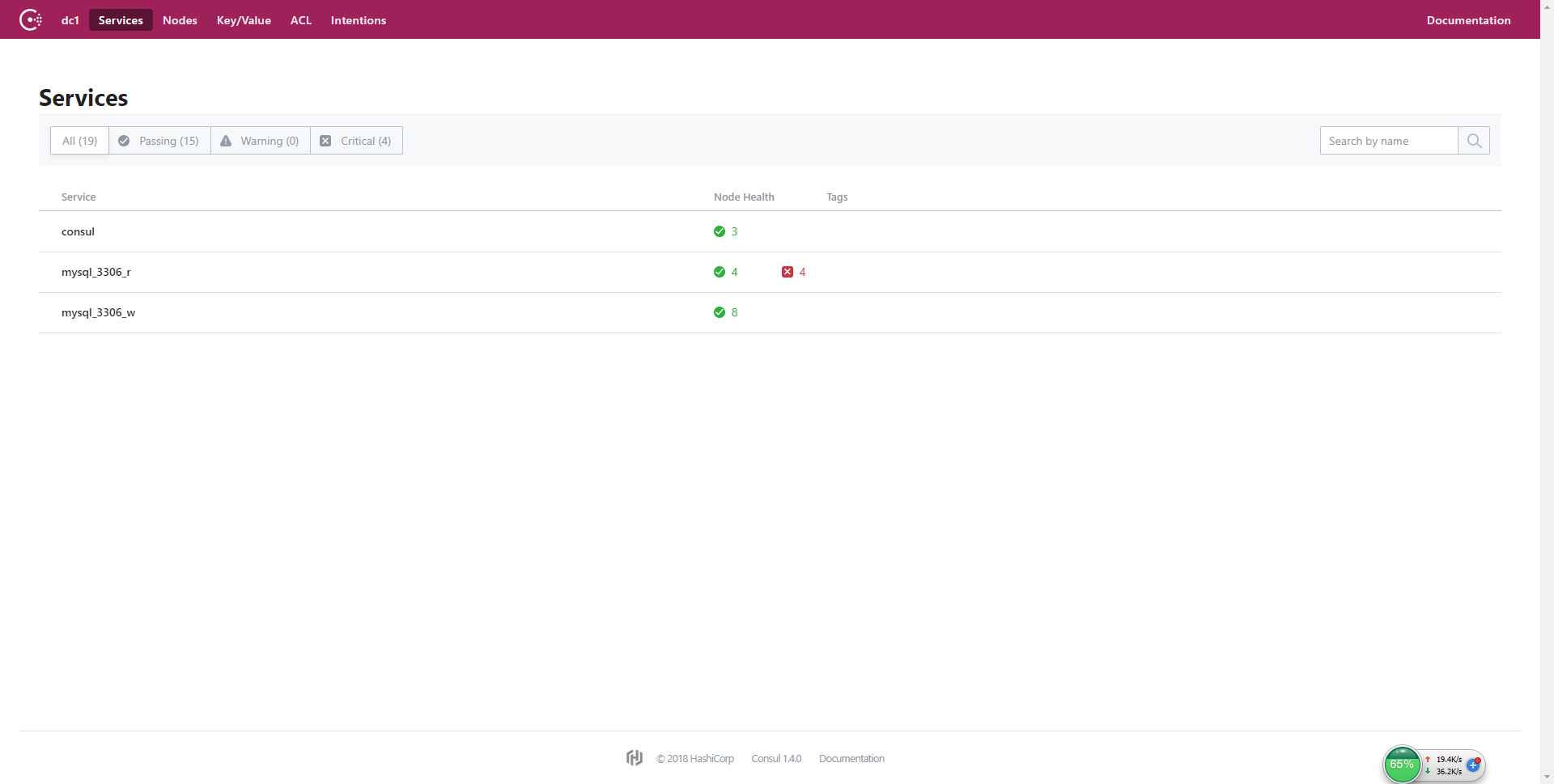

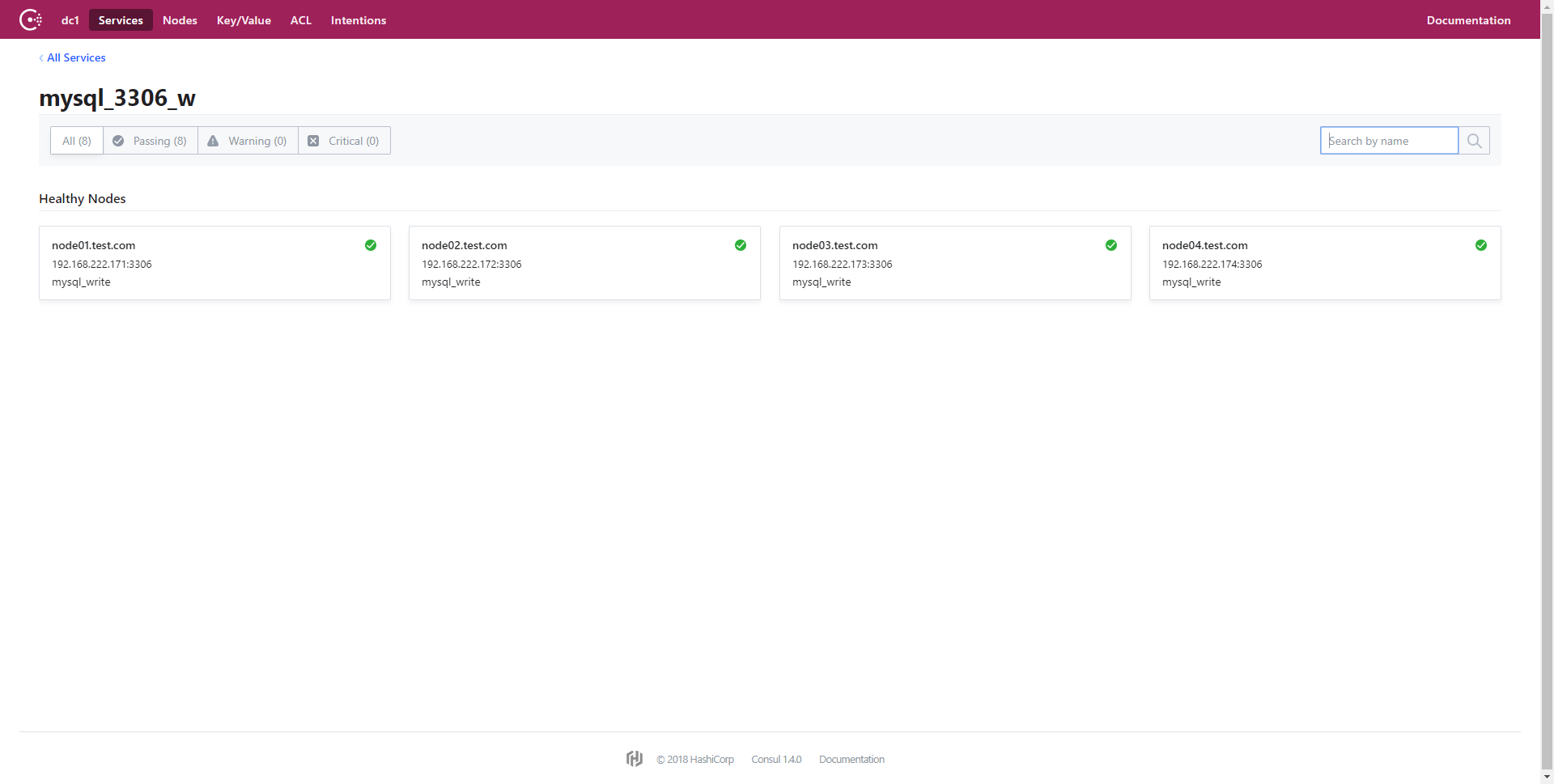

1.1 Consul UI

URL: http://192.168.49.181:8500/ui/

Three services are listed: consul, mysql_3306_w, and mysql_3306_r

- consul

- mysql_3306_r

- mysql_3306_w

1.2 Checking Consul DNS resolution

- DNS forwarding

Dnsmasq handles DNS forwarding on the clients that access MGR. The domain name is mysql_3306_w.service.gczheng.

[root@node05 consul.d]# echo "server=/gczheng/127.0.0.1#53" > /etc/dnsmasq.d/10-consul

[root@node05 consul.d]# service dnsmasq restart

Redirecting to /bin/systemctl restart dnsmasq.service

[root@node05 consul.d]# cat /etc/resolv.conf

# Generated by NetworkManager

#nameserver 192.168.10.247

nameserver 127.0.0.1

[root@node05 consul.d]# consul reload

Configuration reload triggered

[root@node05 consul.d]# service dnsmasq restart

Redirecting to /bin/systemctl restart dnsmasq.service

[root@node05 consul.d]# ping mysql_3306_w.service.gczheng

PING mysql_3306_w.service.gczheng (192.168.222.173) 56(84) bytes of data.

64 bytes from node03.test.com (192.168.222.173): icmp_seq=1 ttl=64 time=0.283 ms -- test OK

64 bytes from node03.test.com (192.168.222.173): icmp_seq=2 ttl=64 time=0.266 ms

64 bytes from node03.test.com (192.168.222.173): icmp_seq=3 ttl=64 time=0.274 ms

Resolve mysql_3306_w.service.gczheng with dig:

[root@node11 ~]# dig @127.0.0.1 -p 53 mysql_3306_w.service.gczheng ANY

; <<>> DiG 9.9.4-RedHat-9.9.4-51.el7_4.2 <<>> @127.0.0.1 -p 53 mysql_3306_w.service.gczheng ANY

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 7004

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 8, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;mysql_3306_w.service.gczheng. IN ANY

;; ANSWER SECTION:

mysql_3306_w.service.gczheng. 0 IN A 192.168.222.174

mysql_3306_w.service.gczheng. 0 IN TXT "consul-network-segment="

mysql_3306_w.service.gczheng. 0 IN A 192.168.222.172

mysql_3306_w.service.gczheng. 0 IN TXT "consul-network-segment="

mysql_3306_w.service.gczheng. 0 IN A 192.168.222.173

mysql_3306_w.service.gczheng. 0 IN TXT "consul-network-segment="

mysql_3306_w.service.gczheng. 0 IN A 192.168.222.171

mysql_3306_w.service.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 12 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Wed Dec 05 09:32:20 CST 2018

;; MSG SIZE rcvd: 265

[root@node11 ~]#

[root@node11 ~]# dig @127.0.0.1 -p 53 mysql_3306_w.service.gczheng SRV

; <<>> DiG 9.9.4-RedHat-9.9.4-51.el7_4.2 <<>> @127.0.0.1 -p 53 mysql_3306_w.service.gczheng SRV

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 450

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 9

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;mysql_3306_w.service.gczheng. IN SRV

;; ANSWER SECTION:

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node04.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node03.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node01.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node02.test.com.node.dc1.gczheng.

;; ADDITIONAL SECTION:

node04.test.com.node.dc1.gczheng. 0 IN A 192.168.222.174

node04.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node03.test.com.node.dc1.gczheng. 0 IN A 192.168.222.173

node03.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node01.test.com.node.dc1.gczheng. 0 IN A 192.168.222.171

node01.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node02.test.com.node.dc1.gczheng. 0 IN A 192.168.222.172

node02.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 228 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Wed Dec 05 09:32:11 CST 2018

;; MSG SIZE rcvd: 473

[root@node11 ~]# ping mysql_3306_w.service.gczheng

PING mysql_3306_w.service.gczheng (192.168.222.171) 56(84) bytes of data.

64 bytes from node01.test.com (192.168.222.171): icmp_seq=1 ttl=64 time=0.174 ms

64 bytes from node01.test.com (192.168.222.171): icmp_seq=2 ttl=64 time=0.373 ms

[root@node11 ~]# ping mysql_3306_w.service.gczheng

PING mysql_3306_w.service.gczheng (192.168.222.172) 56(84) bytes of data.

64 bytes from node02.test.com (192.168.222.172): icmp_seq=1 ttl=64 time=0.258 ms

64 bytes from node02.test.com (192.168.222.172): icmp_seq=2 ttl=64 time=0.251 ms

[root@node11 ~]# ping mysql_3306_w.service.gczheng

PING mysql_3306_w.service.gczheng (192.168.222.173) 56(84) bytes of data.

64 bytes from node03.test.com (192.168.222.173): icmp_seq=1 ttl=64 time=0.184 ms

64 bytes from node03.test.com (192.168.222.173): icmp_seq=2 ttl=64 time=0.189 ms

[root@node11 ~]# ping mysql_3306_w.service.gczheng

PING mysql_3306_w.service.gczheng (192.168.222.174) 56(84) bytes of data.

64 bytes from node04.test.com (192.168.222.174): icmp_seq=1 ttl=64 time=0.576 ms

64 bytes from node04.test.com (192.168.222.174): icmp_seq=2 ttl=64 time=1.03 ms

[root@node11 ~]# mysql -uxxxx -pxxxx -h'mysql_3306_w.service.gczheng' -P3306

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 12730

Server version: 5.7.18-log MySQL Community Server (GPL)

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> exit

Bye
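The names queried above follow Consul's DNS pattern for services, `[tag.]<service>.service.<domain>`, where the domain here is the configured "gczheng". A minimal sketch of building such a name in a script follows; the function name is a hypothetical helper, and the dig call in the comment assumes a Consul DNS endpoint on 127.0.0.1 port 53 as on the server nodes in this article.

```shell
#!/bin/bash
# Hypothetical helper: build the Consul DNS name of a service.
# Args: <service> <domain> [tag]
consul_service_fqdn() {
    local service=$1 domain=$2 tag=${3:-}
    if [ -n "$tag" ]; then
        echo "${tag}.${service}.service.${domain}"
    else
        echo "${service}.service.${domain}"
    fi
}

# Example: the write-service name used in this article.
consul_service_fqdn mysql_3306_w gczheng

# On a host with a reachable Consul DNS endpoint, the name could be resolved with:
#   dig @127.0.0.1 -p 53 +short "$(consul_service_fqdn mysql_3306_w gczheng)" SRV
```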

1.3 Failover test

1. Shut down node04

[root@node04 consul.d]# mysqladmin -uroot -p -P3306 shutdown

Enter password:

[root@node04 consul.d]#

- Check the MGR state

mysql> SELECT member_id,member_host,member_port,member_state,IF (global_status.variable_name IS NOT NULL,'primary','secondary') AS member_role FROM PERFORMANCE_SCHEMA.replication_group_members LEFT JOIN PERFORMANCE_SCHEMA.global_status ON global_status.variable_name='group_replication_primary_member' AND global_status.variable_value=replication_group_members.member_id;

+--------------------------------------+-----------------+-------------+--------------+-------------+

| member_id | member_host | member_port | member_state | member_role |

+--------------------------------------+-----------------+-------------+--------------+-------------+

| 8770cc17-ebdb-11e8-981b-005056ab9fa5 | node01.test.com | 3306 | ONLINE | secondary | -- three nodes remain

| c38416c3-ebd4-11e8-9b2c-005056aba7b5 | node03.test.com | 3306 | ONLINE | secondary |

| e5dba457-f6d5-11e8-97ca-005056abaf95 | node02.test.com | 3306 | ONLINE | secondary |

+--------------------------------------+-----------------+-------------+--------------+-------------+

3 rows in set (0.00 sec)

mysql>

- Check the Consul state

Resolve the domain name (mysql_3306_w):

[root@node11 ~]# dig @127.0.0.1 -p 53 mysql_3306_w.service.gczheng SRV

; <<>> DiG 9.9.4-RedHat-9.9.4-51.el7_4.2 <<>> @127.0.0.1 -p 53 mysql_3306_w.service.gczheng SRV

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 19419

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 7

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;mysql_3306_w.service.gczheng. IN SRV

;; ANSWER SECTION:

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node01.test.com.node.dc1.gczheng. -- three nodes

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node02.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node03.test.com.node.dc1.gczheng.

;; ADDITIONAL SECTION:

node01.test.com.node.dc1.gczheng. 0 IN A 192.168.222.171

node01.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node02.test.com.node.dc1.gczheng. 0 IN A 192.168.222.172

node02.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node03.test.com.node.dc1.gczheng. 0 IN A 192.168.222.173

node03.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 0 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Wed Dec 05 11:47:15 CST 2018

;; MSG SIZE rcvd: 369

[root@node11 ~]#
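When the failover check is scripted rather than read by eye, the SRV answers can be reduced to a sorted list of target hosts. The sketch below assumes input in the `dig +short ... SRV` format, one record per line as `<priority> <weight> <port> <target>`; the function name is a hypothetical helper.

```shell
#!/bin/bash
# Print the target host of each SRV record read from stdin, sorted,
# with the trailing dot removed. Lines that do not have 4 fields are skipped.
srv_targets() {
    awk 'NF == 4 { sub(/\.$/, "", $4); print $4 }' | sort
}

# Example with the three records returned after node04 was shut down.
printf '%s\n' \
    '1 1 3306 node01.test.com.node.dc1.gczheng.' \
    '1 1 3306 node02.test.com.node.dc1.gczheng.' \
    '1 1 3306 node03.test.com.node.dc1.gczheng.' | srv_targets
```

Comparing this list before and after the shutdown shows which node left the write service.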

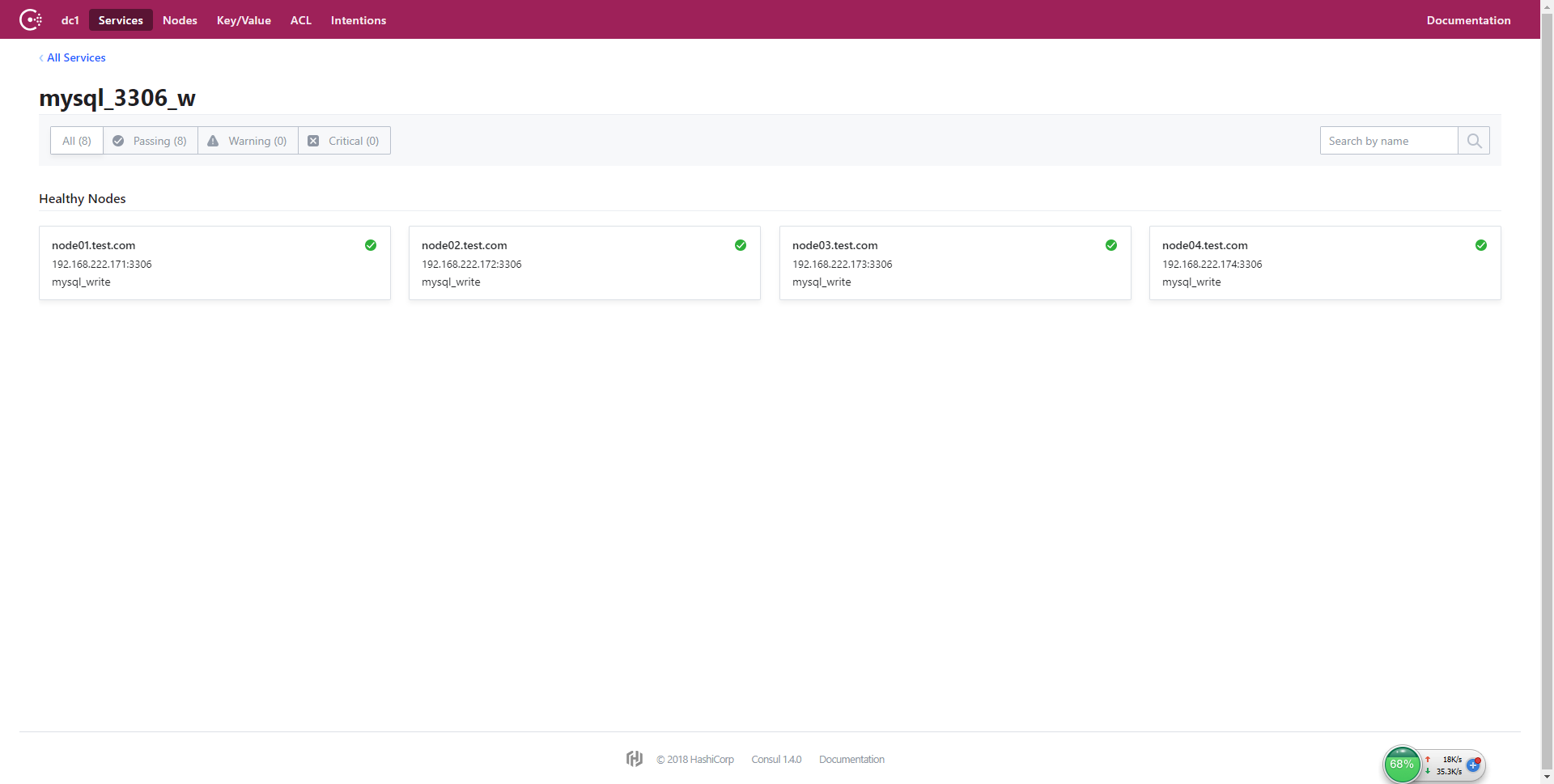

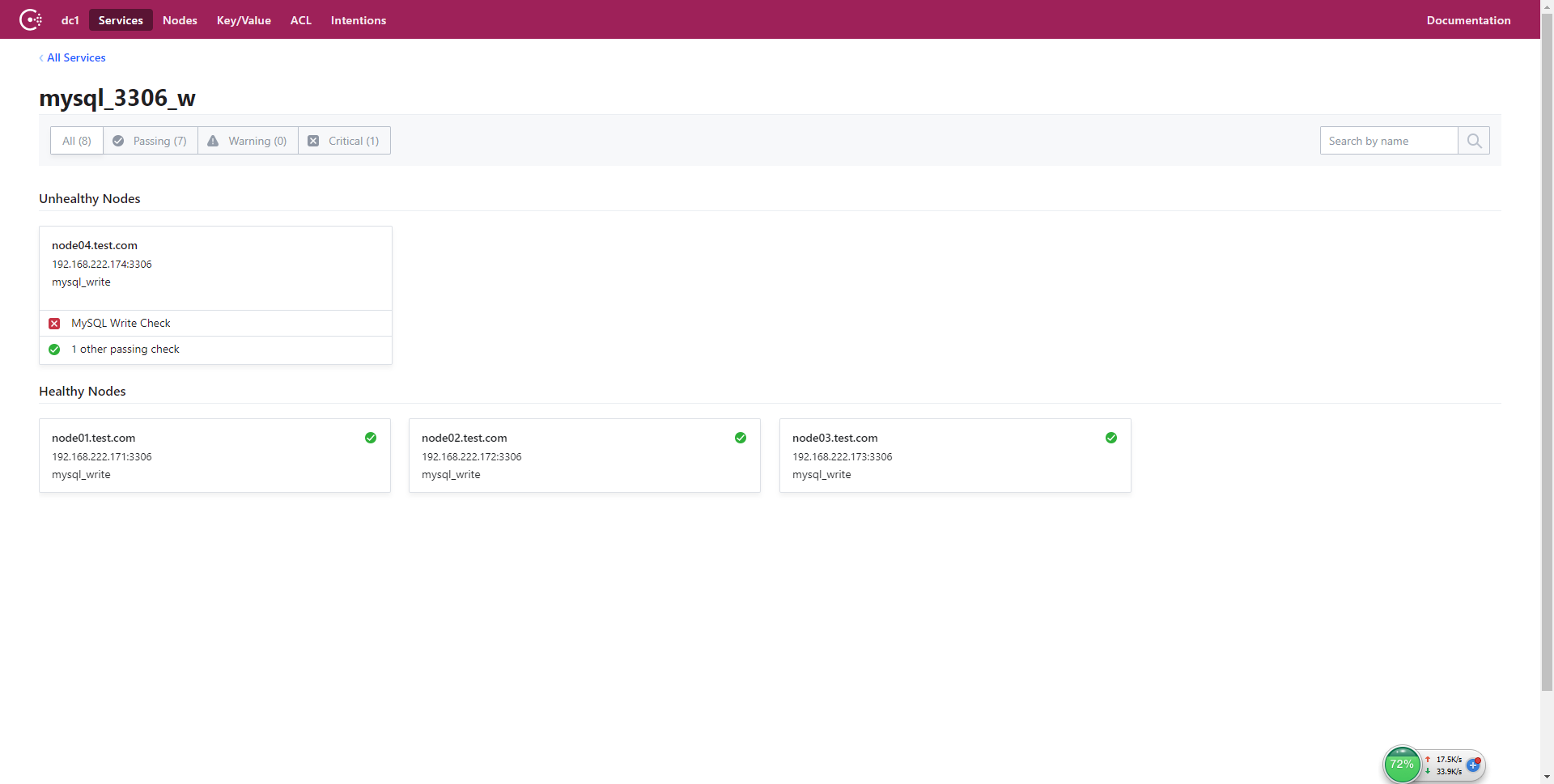

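- Check the Consul UI

For the mysql_3306_w service, three write instances remain (node01, node02, node03).

2. Recover node04

If MySQL has failed and cannot accept writes, first take the node's services out of Consul, then start MySQL and rejoin it to the MGR group, and finally bring the Consul services back.

-- Deregister the services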

[root@node04 consul.d]# consul services deregister mysql_mgr_read_3306.json

Deregistered service: mysql_read

[root@node04 consul.d]# consul services deregister mysql_mgr_write_3306.json

Deregistered service: mysql_write

[root@node04 consul.d]# systemctl start mysql

[root@node04 consul.d]# mysql -uroot -piforgot

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 4

Server version: 5.7.18-log MySQL Community Server (GPL)

Copyright (c) 2009-2017 Percona LLC and/or its affiliates

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

(root@localhost) 11:58:08 [(none)]> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+-----------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+-----------+-------------+-------------+--------------+

| group_replication_applier | | | NULL | OFFLINE |

+---------------------------+-----------+-------------+-------------+--------------+

1 row in set (0.00 sec)

(root@localhost) 11:58:23 [(none)]> start group_replication; -- rejoin the group

Query OK, 0 rows affected (2.63 sec)

(root@localhost) 11:58:35 [(none)]> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

| group_replication_applier | 30955199-ebdc-11e8-ac1f-005056ab820e | node04.test.com | 3306 | ONLINE |

| group_replication_applier | 8770cc17-ebdb-11e8-981b-005056ab9fa5 | node01.test.com | 3306 | ONLINE |

| group_replication_applier | c38416c3-ebd4-11e8-9b2c-005056aba7b5 | node03.test.com | 3306 | ONLINE |

| group_replication_applier | e5dba457-f6d5-11e8-97ca-005056abaf95 | node02.test.com | 3306 | ONLINE |

+---------------------------+--------------------------------------+-----------------+-------------+--------------+

4 rows in set (0.00 sec)

(root@localhost) 11:58:40 [(none)]> exit

Bye

[root@node04 consul.d]# consul reload   # reload the configuration

Configuration reload triggered

[root@node04 consul.d]#
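The recovery order above (deregister the Consul services, start MySQL, rejoin the group, then reload Consul) can be guarded so that Consul is reloaded only once the local member reports ONLINE. The following is a minimal sketch, not the author's script. The function name `mgr_member_online` is hypothetical, and it assumes its input is tab-separated `MEMBER_HOST<TAB>MEMBER_STATE` rows, such as those printed by `mysql -N -B` for a query on `performance_schema.replication_group_members`.

```shell
# Hypothetical helper: succeed only if the given host is listed as ONLINE.
# Reads "MEMBER_HOST<TAB>MEMBER_STATE" rows from stdin.
mgr_member_online() {
    awk -F'\t' -v h="$1" '
        $1 == h && $2 == "ONLINE" { found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Example wiring (assumption: MySQL credentials are supplied via ~/.my.cnf):
#   mysql -N -B -e "SELECT MEMBER_HOST, MEMBER_STATE
#                   FROM performance_schema.replication_group_members" \
#     | mgr_member_online node04.test.com && consul reload
```

A member that is still OFFLINE or RECOVERING makes the helper return non-zero, so the `consul reload` in the example wiring is skipped.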

- mysql_3306_w service

- Check the Consul status

Resolve the domain name (mysql_3306_w)

[root@node11 ~]# dig @127.0.0.1 -p 53 mysql_3306_w.service.gczheng SRV

; <<>> DiG 9.9.4-RedHat-9.9.4-51.el7_4.2 <<>> @127.0.0.1 -p 53 mysql_3306_w.service.gczheng SRV

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 11299

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 9

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;mysql_3306_w.service.gczheng. IN SRV

;; ANSWER SECTION:

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node02.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node01.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node03.test.com.node.dc1.gczheng.

mysql_3306_w.service.gczheng. 0 IN SRV 1 1 3306 node04.test.com.node.dc1.gczheng.

;; ADDITIONAL SECTION:

node02.test.com.node.dc1.gczheng. 0 IN A 192.168.222.172

node02.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node01.test.com.node.dc1.gczheng. 0 IN A 192.168.222.171

node01.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node03.test.com.node.dc1.gczheng. 0 IN A 192.168.222.173

node03.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node04.test.com.node.dc1.gczheng. 0 IN A 192.168.222.174

node04.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 9 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Wed Dec 05 11:48:51 CST 2018

;; MSG SIZE rcvd: 473

[root@node11 ~]#

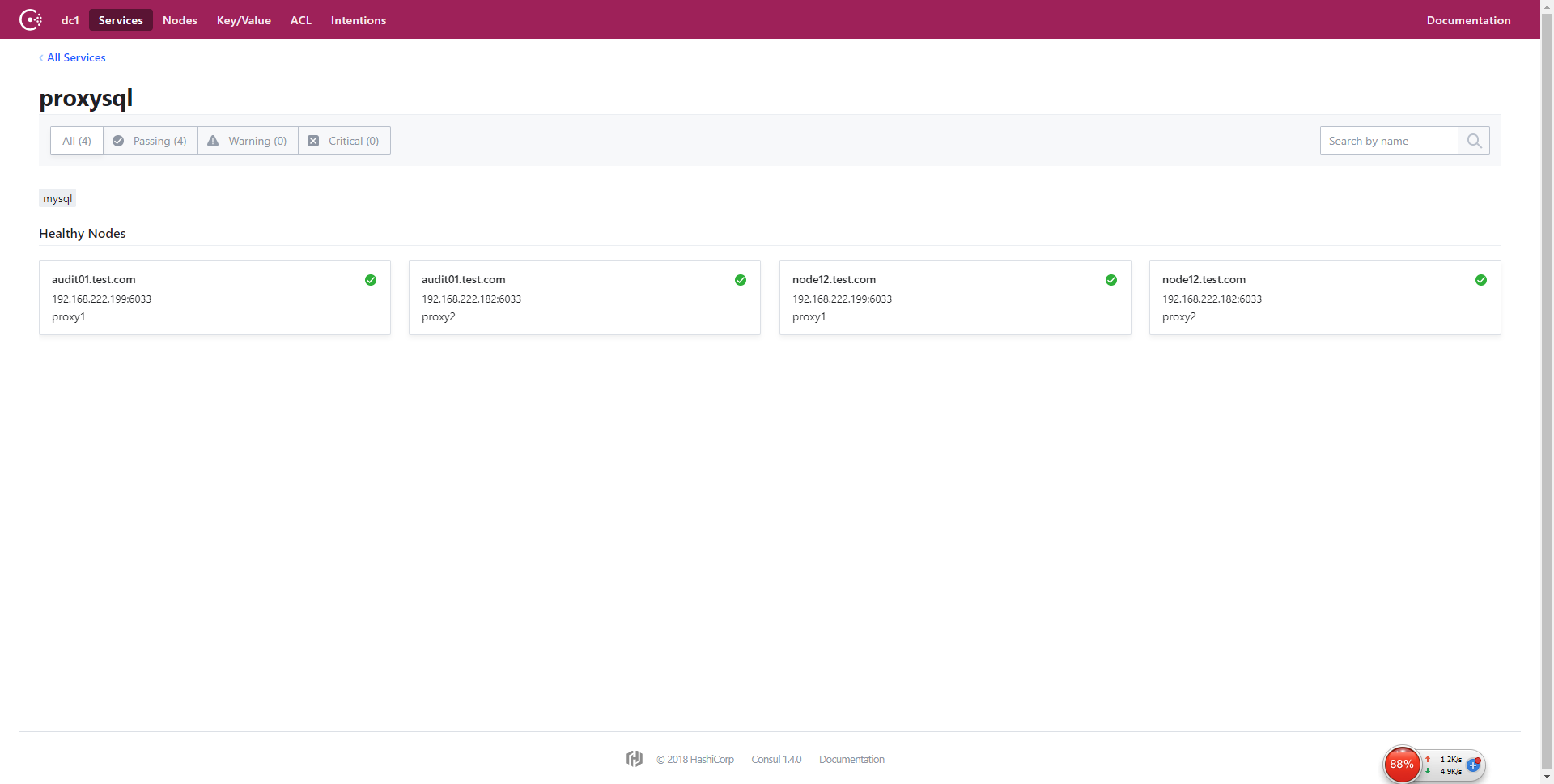

2、MGR (single-primary mode) + Consul + ProxySQL

2.1、ProxySQL configuration

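- Installation and configuration steps

- For installation, refer to the installation guide in the official documentation

- Edit the proxysql.cnf configuration file to configure the backend MySQL connections

- Edit the proxysql.json configuration file

- Start the Consul service so that it reads the proxysql.json configuration

- Configure DNS forwarding (on the clients that access ProxySQL) to point to the Consul servers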

[root@audit01 consul.d]# egrep -v "^#|^$" /etc/proxysql.cnf

datadir="/var/lib/proxysql"

admin_variables=

{

admin_credentials="admin:admin" # change these credentials

mysql_ifaces="127.0.0.1:6032;/tmp/proxysql_admin.sock" # restrict access to localhost

}

mysql_variables=

{

threads=4

max_connections=2048

default_query_delay=0

default_query_timeout=36000000

have_compress=true

poll_timeout=2000

interfaces="0.0.0.0:6033"

default_schema="information_schema"

stacksize=1048576

server_version="5.5.30"

connect_timeout_server=3000

monitor_username="rpl_user" # monitoring account on the backend MySQL

monitor_password="rpl_pass" # monitoring password on the backend MySQL

monitor_history=600000

monitor_connect_interval=60000

monitor_ping_interval=10000

monitor_read_only_interval=1500

monitor_read_only_timeout=500

ping_interval_server_msec=120000

ping_timeout_server=500

commands_stats=true

sessions_sort=true

connect_retries_on_failure=10

}

mysql_servers =

(

)

mysql_users:

(

)

mysql_query_rules:

(

)

scheduler=

(

)

mysql_replication_hostgroups=

(

)

[root@audit01 consul.d]#

[root@audit01 consul.d]# service proxysql restart

Shutting down ProxySQL: DONE!

Starting ProxySQL: 2018-12-05 17:58:53 [INFO] Using config file /etc/proxysql.cnf

DONE!

[root@audit01 consul.d]# service proxysql status

ProxySQL is running (3408).

[root@audit01 consul.d]#

- Configure the backend MySQL servers

Log in to the admin interface (mysql -P6032 -h127.0.0.1 -uadmin -padmin) and configure the backend MySQL servers (on both audit01 and node12).

INSERT INTO mysql_replication_hostgroups VALUES (20,21,'Standard Replication Groups');

INSERT INTO mysql_servers (hostname,hostgroup_id,port,weight) VALUES ('192.168.222.175',21,33061,1000),('192.168.222.175',21,33062,1000),('192.168.222.175',21,33063,1000),('192.168.222.175',21,33064,1000),('192.168.222.175',21,33065,1000);

LOAD MYSQL SERVERS TO RUNTIME; SAVE MYSQL SERVERS TO DISK;

INSERT INTO mysql_query_rules (active, match_pattern, destination_hostgroup, cache_ttl, apply) VALUES (1, '^SELECT .* FOR UPDATE', 20, NULL, 1);

INSERT INTO mysql_query_rules (active, match_pattern, destination_hostgroup, cache_ttl, apply) VALUES (1, '^SELECT .*', 21, NULL, 1);

LOAD MYSQL QUERY RULES TO RUNTIME; SAVE MYSQL QUERY RULES TO DISK;

INSERT INTO mysql_users (username,password,active,default_hostgroup,default_schema) VALUES ('gcdb','iforgot',1,20,'test');

LOAD MYSQL USERS TO RUNTIME; SAVE MYSQL USERS TO DISK;

use monitor;

UPDATE global_variables SET variable_value='rpl_user' WHERE variable_name='mysql-monitor_username';

UPDATE global_variables SET variable_value='rpl_pass' WHERE variable_name='mysql-monitor_password';

LOAD MYSQL VARIABLES TO RUNTIME; SAVE MYSQL VARIABLES TO DISK;

select * from mysql_servers;

select * from mysql_query_rules;

select * from mysql_users;

select * from global_variables where variable_name like "mysql-monitor%";
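After the `LOAD ... TO RUNTIME` statements, it can help to confirm what ProxySQL actually holds at runtime rather than only what was inserted. The sketch below is an illustration under assumptions: the function name `count_online_by_hostgroup` is hypothetical, and the input is expected to be tab-separated `hostgroup_id, hostname, port, status` rows selected from ProxySQL's `runtime_mysql_servers` table.

```shell
# Hypothetical helper: count ONLINE backends per hostgroup.
# Reads "hostgroup_id<TAB>hostname<TAB>port<TAB>status" rows from stdin
# and prints "<hostgroup_id> <online_count>" sorted by hostgroup.
count_online_by_hostgroup() {
    awk -F'\t' '
        $4 == "ONLINE" { n[$1]++ }
        END { for (hg in n) print hg, n[hg] }
    ' | sort -n
}

# Example wiring against the admin interface used above:
#   mysql -P6032 -h127.0.0.1 -uadmin -p -N -B \
#     -e "SELECT hostgroup_id, hostname, port, status FROM runtime_mysql_servers" \
#     | count_online_by_hostgroup
```

With the replication hostgroup pair (20,21) configured above, one would expect the writable instance to show up under hostgroup 20 once the monitor has checked `read_only`; if hostgroup 20 is missing from the output, the monitor account or the `read_only` settings are worth checking first.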

- After confirming that the configuration is correct, start Consul on both hosts

[root@audit01 consul.d]# pwd

/etc/consul.d

[root@audit01 consul.d]# ll

total 8

-rw-r--r-- 1 root root 237 Dec 5 14:59 client.json

-rw-r--r-- 1 root root 542 Dec 5 16:48 proxysql.json

drwxr-xr-x 2 root root 6 Dec 5 14:57 scripts

# Consul is started through Ansible, so here we only confirm that it is running

[root@audit01 consul.d]# ps -ef|grep consul |grep -v grep

root 1636 1 0 15:00 ? 00:00:49 consul agent -config-dir=/etc/consul.d

[root@audit01 consul.d]# consul catalog nodes

Node ID Address DC

audit01.test.com 8f638db7 192.168.222.199 dc1 -- node has joined

node01.test.com feb4c253 192.168.222.171 dc1

node02.test.com 2993cd81 192.168.222.172 dc1

node03.test.com 305293a0 192.168.222.173 dc1

node04.test.com 06c47aea 192.168.222.174 dc1

node05.test.com c8e917fb 192.168.222.175 dc1

node06.test.com 0bd03c71 192.168.222.176 dc1

node09.test.com fcca17ba 192.168.222.179 dc1

node10.test.com c95847a4 192.168.222.180 dc1

node11.test.com 8d8f6757 192.168.222.181 dc1

node12.test.com 3a0f5fdd 192.168.222.182 dc1 -- node has joined

[root@audit01 consul.d]# consul catalog services

consul

mysql_3306_r

mysql_3306_w

proxysql -- the service has been registered; it is also visible in the web UI

[root@audit01 consul.d]#

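2.2、Check the web UI

The page shows four heartbeat nodes.

2.3、Check DNS resolution

Pick one of the clients configured with DNS forwarding and test from it.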

[root@node05 consul.d]# dig @127.0.0.1 -p 53 proxysql.service.gczheng SRV

; <<>> DiG 9.9.4-RedHat-9.9.4-61.el7_5.1 <<>> @127.0.0.1 -p 53 proxysql.service.gczheng SRV

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 4960

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 9

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;proxysql.service.gczheng. IN SRV

;; ANSWER SECTION:

proxysql.service.gczheng. 0 IN SRV 1 1 6033 c0a8dec7.addr.dc1.gczheng.

proxysql.service.gczheng. 0 IN SRV 1 1 6033 audit01.test.com.node.dc1.gczheng.

proxysql.service.gczheng. 0 IN SRV 1 1 6033 c0a8deb6.addr.dc1.gczheng.

proxysql.service.gczheng. 0 IN SRV 1 1 6033 node12.test.com.node.dc1.gczheng.

;; ADDITIONAL SECTION:

c0a8dec7.addr.dc1.gczheng. 0 IN A 192.168.222.199

node12.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

audit01.test.com.node.dc1.gczheng. 0 IN A 192.168.222.199

audit01.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

c0a8deb6.addr.dc1.gczheng. 0 IN A 192.168.222.182

audit01.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

node12.test.com.node.dc1.gczheng. 0 IN A 192.168.222.182

node12.test.com.node.dc1.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 3 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Wed Dec 05 18:14:41 CST 2018

;; MSG SIZE rcvd: 456

[root@node05 consul.d]# ping proxysql.service.gczheng

PING proxysql.service.gczheng (192.168.222.182) 56(84) bytes of data.

64 bytes from node12.test.com (192.168.222.182): icmp_seq=1 ttl=64 time=0.221 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=2 ttl=64 time=0.282 ms
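The SRV answers above carry both the target name and the port (6033), which is what a client script needs in order to connect. As a small sketch, assuming the input is the output of `dig +short ... SRV` (one `priority weight port target` record per line), a hypothetical helper `srv_to_endpoints` can turn the records into `host:port` pairs:

```shell
# Hypothetical helper: convert "priority weight port target." lines
# (as printed by `dig +short <name> SRV`) into "target:port" pairs.
srv_to_endpoints() {
    awk 'NF == 4 {
        sub(/\.$/, "", $4)      # drop the trailing dot of the FQDN
        print $4 ":" $3
    }'
}

# Example wiring:
#   dig @127.0.0.1 -p 53 +short proxysql.service.gczheng SRV | srv_to_endpoints
```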

2.4、Failover test

1、Test ProxySQL load balancing

[root@node05 consul.d]# mysql -ugcdb -piforgot -P6033 -hproxysql.service.gczheng -e "select @@port as 端口"

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------+

| 端口 |

+--------+

| 33061 | -- a backend MySQL instance is chosen at random according to latency

+--------+

[root@node05 consul.d]# mysql -ugcdb -piforgot -P6033 -hproxysql.service.gczheng -e "select @@port as 端口"

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------+

| 端口 |

+--------+

| 33062 |

+--------+

[root@node05 consul.d]# mysql -ugcdb -piforgot -P6033 -hproxysql.service.gczheng -e "select @@port as 端口"

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------+

| 端口 |

+--------+

| 33064 |

+--------+

[root@node05 consul.d]# mysql -ugcdb -piforgot -P6033 -hproxysql.service.gczheng -e "select @@port as 端口"

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------+

| 端口 |

+--------+

| 33065 |

+--------+

[root@node05 consul.d]# mysql -ugcdb -piforgot -P6033 -hproxysql.service.gczheng -e "select @@port as 端口"

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------+

| 端口 |

+--------+

| 33063 |

+--------+
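Running the query by hand a few times, as above, shows that different backends answer, but a tally over more connections gives a clearer picture of the distribution. The following is a minimal sketch; the helper name `tally_ports` is hypothetical and it simply counts how often each port appears on stdin.

```shell
# Hypothetical helper: read one port per line and print "<port> <count>",
# sorted by port number.
tally_ports() {
    sort -n | uniq -c | awk '{ print $2, $1 }'
}

# Example wiring (assumption: the gcdb credentials are supplied via ~/.my.cnf
# so the loop does not prompt for a password):
#   for i in $(seq 1 50); do
#       mysql -P6033 -hproxysql.service.gczheng -N -B -e "select @@port"
#   done | tally_ports
```

Because all five backends were inserted with the same weight (1000), the counts would be expected to be roughly even over a large enough sample.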

2、Test a ProxySQL host going down or the ProxySQL service becoming unavailable

[root@node05 mgr_scripts]# dig @127.0.0.1 -p 53 proxysql.service.gczheng

; <<>> DiG 9.9.4-RedHat-9.9.4-61.el7_5.1 <<>> @127.0.0.1 -p 53 proxysql.service.gczheng

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 10214

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 3

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;proxysql.service.gczheng. IN A

;; ANSWER SECTION:

proxysql.service.gczheng. 0 IN A 192.168.222.199

proxysql.service.gczheng. 0 IN A 192.168.222.182

;; ADDITIONAL SECTION:

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 1 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Thu Dec 06 14:19:18 CST 2018

;; MSG SIZE rcvd: 157

[root@node05 mgr_scripts]#

- On node12, bring down the ens224 interface with ifdown

[root@node12 ~]# ifconfig ens224

ens224: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.222.182 netmask 255.255.255.0 broadcast 192.168.222.255

inet6 fe80::250:56ff:feab:bc9d prefixlen 64 scopeid 0x20<link>

ether 00:50:56:ab:bc:9d txqueuelen 1000 (Ethernet)

RX packets 1059557 bytes 178058970 (169.8 MiB)

RX errors 0 dropped 57 overruns 0 frame 0

TX packets 1022717 bytes 534301681 (509.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@node12 ~]# ifdown ens224

Device 'ens224' successfully disconnected.

[root@node12 ~]#

- The Consul log on audit01 shows the following

[root@audit01 consul.d]# tail -f /data/consul/consul.log

2018/12/06 16:40:41 [INFO] agent: Synced service "proxy"

2018/12/06 16:40:41 [INFO] agent: Synced service "proxy1"

2018/12/06 16:40:41 [INFO] agent: Synced service "proxy2"

2018/12/06 16:40:45 [INFO] agent: Synced check "mysql_proxy_02"

2018/12/06 16:40:50 [INFO] agent: Synced check "mysql_proxy_01"

2018/12/06 16:42:43 [INFO] memberlist: Marking node12.test.com as failed, suspect timeout reached (2 peer confirmations)

2018/12/06 16:42:43 [INFO] serf: EventMemberFailed: node12.test.com 192.168.222.182

2018/12/06 16:42:46 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:42:46 [INFO] agent: Synced check "mysql_proxy_02"

2018/12/06 16:43:02 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:43:18 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:43:34 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:43:50 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:44:06 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:44:22 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:44:38 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:44:54 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:45:10 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:45:26 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:45:42 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:45:58 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:46:14 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:46:30 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:46:46 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:47:02 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:47:18 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:47:34 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:47:50 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:48:06 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:48:22 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:48:38 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:48:54 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:49:10 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:49:11 [INFO] serf: attempting reconnect to node12.test.com 192.168.222.182:8301

2018/12/06 16:49:26 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:49:42 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:49:58 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:50:14 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:50:30 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:50:46 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:51:02 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:51:18 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:51:34 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:51:42 [INFO] serf: attempting reconnect to node12.test.com 192.168.222.182:8301

2018/12/06 16:51:50 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:52:06 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:52:22 [WARN] agent: Check "mysql_proxy_02": Timed out (1s) running check

2018/12/06 16:52:24 [INFO] serf: EventMemberJoin: node12.test.com 192.168.222.182

2018/12/06 16:52:30 [INFO] agent: Caught signal: hangup

2018/12/06 16:52:30 [INFO] agent: Reloading configuration...

2018/12/06 16:52:30 [INFO] agent: Deregistered service "proxy"

2018/12/06 16:52:30 [INFO] agent: Synced service "proxy1"

2018/12/06 16:52:30 [INFO] agent: Synced service "proxy2"

2018/12/06 16:52:30 [INFO] agent: Deregistered check "mysql_proxy_02"

2018/12/06 16:52:30 [INFO] agent: Deregistered check "mysql_proxy_01"

2018/12/06 16:52:33 [INFO] agent: Synced check "service:proxy2"

2018/12/06 16:54:00 [INFO] agent: Caught signal: hangup

2018/12/06 16:54:00 [INFO] agent: Reloading configuration...

2018/12/06 16:54:00 [INFO] agent: Synced service "proxy2"

2018/12/06 16:54:00 [INFO] agent: Synced service "proxy1"

2018/12/06 16:55:26 [INFO] memberlist: Suspect node12.test.com has failed, no acks received

2018/12/06 16:55:30 [INFO] memberlist: Marking node12.test.com as failed, suspect timeout reached (2 peer confirmations)

2018/12/06 16:55:30 [INFO] serf: EventMemberFailed: node12.test.com 192.168.222.182

2018/12/06 16:55:58 [WARN] agent: Check "service:proxy2": Timed out (30s) running check

2018/12/06 16:55:58 [INFO] agent: Synced check "service:proxy2"

2018/12/06 16:56:21 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:56:29 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:56:37 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:56:45 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:56:53 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:57:01 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:57:09 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:57:17 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:57:25 [WARN] agent: Check "service:proxy2" is now critical

2018/12/06 16:57:33 [WARN] agent: Check "service:proxy2" is now critical
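Most of the log above consists of repeated check timeouts, while the lines that mark the actual failover are the serf membership events. As a sketch, assuming the log line layout shown above (`date time [LEVEL] serf: EventMember...: node ip`), a hypothetical helper `member_events` can reduce the log to a short timeline:

```shell
# Hypothetical helper: keep only serf membership events from a Consul log
# and print "<date> <time> <event> <node>".
member_events() {
    awk '$4 == "serf:" && $5 ~ /^EventMember/ {
        sub(/:$/, "", $5)       # strip the colon after the event name
        print $1, $2, $5, $6
    }'
}

# Example wiring:
#   member_events < /data/consul/consul.log
```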

- DNS resolution

[root@node05 consul.d]# dig @127.0.0.1 -p 53 proxysql.service.gczheng

; <<>> DiG 9.9.4-RedHat-9.9.4-61.el7_5.1 <<>> @127.0.0.1 -p 53 proxysql.service.gczheng

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 53883

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 3

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;proxysql.service.gczheng. IN A

;; ANSWER SECTION:

proxysql.service.gczheng. 0 IN A 192.168.222.182 -- both 199 and 182 answer

proxysql.service.gczheng. 0 IN A 192.168.222.199

;; ADDITIONAL SECTION:

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 1 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Thu Dec 06 16:55:03 CST 2018

;; MSG SIZE rcvd: 157

[root@node05 consul.d]# dig @127.0.0.1 -p 53 proxysql.service.gczheng

; <<>> DiG 9.9.4-RedHat-9.9.4-61.el7_5.1 <<>> @127.0.0.1 -p 53 proxysql.service.gczheng

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 37713

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;proxysql.service.gczheng. IN A

;; ANSWER SECTION:

proxysql.service.gczheng. 0 IN A 192.168.222.199 -- only 199 answers

;; ADDITIONAL SECTION:

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 1 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Thu Dec 06 16:56:16 CST 2018

;; MSG SIZE rcvd: 105

- ping

[root@node05 ~]# ping proxysql.service.gczheng

PING proxysql.service.gczheng (192.168.222.182) 56(84) bytes of data.

64 bytes from node12.test.com (192.168.222.182): icmp_seq=1 ttl=64 time=0.197 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=2 ttl=64 time=0.291 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=3 ttl=64 time=0.288 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=4 ttl=64 time=0.235 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=5 ttl=64 time=0.261 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=6 ttl=64 time=0.313 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=7 ttl=64 time=0.283 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=8 ttl=64 time=0.337 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=9 ttl=64 time=0.141 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=10 ttl=64 time=0.337 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=11 ttl=64 time=0.273 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=12 ttl=64 time=0.258 ms

From node05.test.com (192.168.222.175) icmp_seq=60 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=61 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=62 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=63 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=64 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=65 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=66 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=67 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=68 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=69 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=70 Destination Host Unreachable

From node05.test.com (192.168.222.175) icmp_seq=71 Destination Host Unreachable

^C

--- proxysql.service.gczheng ping statistics ---

72 packets transmitted, 12 received, +12 errors, 83% packet loss, time 71004ms

rtt min/avg/max/mdev = 0.141/0.267/0.337/0.058 ms, pipe 4

[root@node05 ~]# ping proxysql.service.gczheng   # after re-running ping, the name resolves to 199

PING proxysql.service.gczheng (192.168.222.199) 56(84) bytes of data.

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=1 ttl=64 time=0.233 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=2 ttl=64 time=0.220 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=3 ttl=64 time=0.259 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=4 ttl=64 time=0.249 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=5 ttl=64 time=0.254 ms

- On node12, bring the ens224 interface back up with ifup

[root@node12 consul.d]# ifup ens224

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/7)

- DNS resolution

After waiting about 5 seconds, the domain name resolves to 182 again.

[root@node05 consul.d]# dig @127.0.0.1 -p 53 proxysql.service.gczheng

; <<>> DiG 9.9.4-RedHat-9.9.4-61.el7_5.1 <<>> @127.0.0.1 -p 53 proxysql.service.gczheng

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 29882

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;proxysql.service.gczheng. IN A

;; ANSWER SECTION:

proxysql.service.gczheng. 0 IN A 192.168.222.199

;; ADDITIONAL SECTION:

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 1 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Thu Dec 06 17:04:33 CST 2018

;; MSG SIZE rcvd: 105

[root@node05 consul.d]# dig @127.0.0.1 -p 53 proxysql.service.gczheng

; <<>> DiG 9.9.4-RedHat-9.9.4-61.el7_5.1 <<>> @127.0.0.1 -p 53 proxysql.service.gczheng

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 65219

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 3

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;proxysql.service.gczheng. IN A

;; ANSWER SECTION:

proxysql.service.gczheng. 0 IN A 192.168.222.182

proxysql.service.gczheng. 0 IN A 192.168.222.199

;; ADDITIONAL SECTION:

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

proxysql.service.gczheng. 0 IN TXT "consul-network-segment="

;; Query time: 1 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Thu Dec 06 17:04:33 CST 2018

;; MSG SIZE rcvd: 157

- ping

[root@node05 ~]# ping proxysql.service.gczheng

PING proxysql.service.gczheng (192.168.222.199) 56(84) bytes of data.

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=1 ttl=64 time=0.185 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=2 ttl=64 time=0.285 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=3 ttl=64 time=0.238 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=4 ttl=64 time=0.300 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=5 ttl=64 time=0.285 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=6 ttl=64 time=0.182 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=7 ttl=64 time=0.209 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=8 ttl=64 time=0.271 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=9 ttl=64 time=0.845 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=10 ttl=64 time=0.222 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=11 ttl=64 time=0.251 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=12 ttl=64 time=0.276 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=13 ttl=64 time=0.237 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=14 ttl=64 time=0.266 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=15 ttl=64 time=0.267 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=16 ttl=64 time=0.251 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=17 ttl=64 time=0.162 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=18 ttl=64 time=0.297 ms

64 bytes from 192.168.222.199 (192.168.222.199): icmp_seq=19 ttl=64 time=0.244 ms

^C

--- proxysql.service.gczheng ping statistics ---

19 packets transmitted, 19 received, 0% packet loss, time 18002ms

rtt min/avg/max/mdev = 0.162/0.277/0.845/0.140 ms

[root@node05 ~]# ping proxysql.service.gczheng

PING proxysql.service.gczheng (192.168.222.182) 56(84) bytes of data.

64 bytes from node12.test.com (192.168.222.182): icmp_seq=1 ttl=64 time=0.227 ms

64 bytes from node12.test.com (192.168.222.182): icmp_seq=2 ttl=64 time=0.320 ms
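The test above was timed by re-running `dig` by hand. The ping output also shows that a process which has already resolved the name keeps using the old address until it is restarted, so the useful number is how long Consul takes to drop the failed address from DNS. The following is a rough sketch for measuring that; `wait_until_dropped` and the `POLL_INTERVAL` variable are hypothetical names, and the resolver command is passed in as arguments so that any command printing one IP per line can be used.

```shell
# Hypothetical helper: poll a resolver command until the given IP no longer
# appears in its output. Prints the attempt number on success.
#   $1 = IP to watch, $2 = maximum attempts, rest = command printing one IP per line
# Note: an empty answer (e.g. the resolver itself failing) also counts as "dropped".
wait_until_dropped() {
    local ip="$1" attempts="$2" i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if ! "$@" | grep -qxF "$ip"; then
            echo "$i"
            return 0
        fi
        sleep "${POLL_INTERVAL:-1}"
        i=$((i + 1))
    done
    return 1
}

# Example wiring, polling once per second for up to two minutes:
#   wait_until_dropped 192.168.222.182 120 \
#       dig @127.0.0.1 -p 53 +short proxysql.service.gczheng
```

With the default one-second interval, the printed attempt number approximates the number of seconds between starting the poll and the address disappearing from the answer.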

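3、Notes

- Restoring a node:

Confirm whether only the MySQL instance has failed or the whole host has failed, to avoid the situation where the Consul service has recovered but the MySQL node has not yet rejoined the cluster.

- DNS resolution:

When configuring local DNS resolution on a client node, point it at all three Consul server nodes.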
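One common way to point a client at all three servers is a forwarding rule per server in a local dnsmasq instance. This is only a sketch under assumptions: the text does not say which forwarder the clients use, so dnsmasq is an assumption here, and `gen_dnsmasq_forwarders` is a hypothetical helper. The domain `gczheng`, port 53 and the addresses of node09 to node11 are taken from the environment described in this article.

```shell
# Hypothetical helper: print one dnsmasq "server=/<domain>/<ip>#<port>" line
# per Consul server.
#   $1 = Consul DNS domain, $2 = DNS port, rest = Consul server IPs
gen_dnsmasq_forwarders() {
    local domain="$1" port="$2" ip
    shift 2
    for ip in "$@"; do
        printf 'server=/%s/%s#%s\n' "$domain" "$ip" "$port"
    done
}

# Example wiring (the target file name is an assumption):
#   gen_dnsmasq_forwarders gczheng 53 \
#       192.168.222.179 192.168.222.180 192.168.222.181 \
#       > /etc/dnsmasq.d/10-consul.conf
```

Listing all three servers means that queries for the `gczheng` domain can still be answered when one Consul server is down.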