Page Hits:

The CPU reads a word of virtual memory that is already cached in DRAM.

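Page Faults:

A DRAM cache miss is known as a page fault: the CPU references a word of virtual memory that is not cached in DRAM.

Procedure:

Terminology: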

Virtual memory systems use a different terminology from SRAM caches, even though many of the ideas are similar. In virtual memory parlance, blocks are known as pages. The activity of transferring a page between disk and memory is known as swapping or paging. Pages are swapped in (paged in) from disk to DRAM, and swapped out (paged out) from DRAM to disk. The strategy of waiting until the last moment to swap in a page, when a miss occurs, is known as demand paging.
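To make the hit/fault distinction and the swap-in/swap-out vocabulary concrete, here is a minimal Python sketch of demand paging. It is a toy model under stated assumptions: the class `DemandPager` is hypothetical, eviction is FIFO (real kernels use more refined policies), and every eviction is logged as a page-out, ignoring the dirty-bit check that lets a real system skip writing back unmodified pages.

```python
from collections import deque

class DemandPager:
    """Toy model: a page moves from 'disk' into one of a fixed number of
    DRAM frames only when it is referenced (demand paging)."""

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.resident = deque()   # VPNs currently cached in DRAM, oldest first
        self.log = []             # ("in", vpn) / ("out", vpn) swap events

    def access(self, vpn):
        if vpn in self.resident:
            return "hit"                        # page hit: already cached in DRAM
        if len(self.resident) == self.num_frames:
            victim = self.resident.popleft()    # FIFO victim (an assumption)
            self.log.append(("out", victim))    # swap out (page out) to disk
        self.resident.append(vpn)
        self.log.append(("in", vpn))            # swap in (page in) from disk
        return "fault"

pager = DemandPager(num_frames=2)
results = [pager.access(v) for v in [1, 2, 1, 3, 1]]
```

With two frames, the third distinct page forces a swap-out, and nothing is ever swapped in before it is referenced.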

Allocating Pages

The operating system can allocate a new page of virtual memory, for example, as a result of calling malloc. In the example, VP 5 is allocated by creating room on disk and updating PTE 5 to point to the newly created page on disk.

As shown in the figure:
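A minimal sketch of the three PTE states and of allocating VP 5. It assumes a hypothetical `PTE` record whose `addr` field holds a physical page number when `valid` is set, a disk block number when it is not, and `None` for an unallocated page. The swap-area block numbers produced by `disk_blocks` are made up for illustration.

```python
import itertools
from dataclasses import dataclass
from typing import Optional

@dataclass
class PTE:
    valid: bool = False         # True if the page is cached in DRAM
    addr: Optional[int] = None  # PPN if valid, disk block if not; None if unallocated

def state(pte):
    if pte.addr is None:
        return "unallocated"
    return "cached" if pte.valid else "uncached"

disk_blocks = itertools.count(100)   # hypothetical free blocks in the swap area

def allocate_page(page_table, vpn):
    """Reserve room on disk for VP `vpn` and point its PTE there.
    No DRAM frame is used; the page is paged in on first reference."""
    page_table[vpn] = PTE(valid=False, addr=next(disk_blocks))

page_table = [PTE() for _ in range(8)]   # PTE 0..7, all unallocated
allocate_page(page_table, 5)
```

After the call, PTE 5 is in the "uncached" state: allocated and backed by disk, but not yet in physical memory.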

Locality to the Rescue Again

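In practice, virtual memory works well, mainly because of our old friend locality. Although the total number of distinct pages that a program references during an entire run might exceed the total size of physical memory, the principle of locality promises that at any point in time it will tend to work on a smaller set of active pages, known as the working set or resident set.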

If the working set size exceeds the size of physical memory, then the program can produce an unfortunate situation known as thrashing, where pages are swapped in and out continuously. Although virtual memory is usually efficient, if a program’s performance slows to a crawl, the wise programmer will consider the possibility that it is thrashing.
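The effect of the working set size can be seen in a small simulation. This sketch assumes LRU replacement and a program that loops over its working set repeatedly; `count_faults` and `loop_over_working_set` are hypothetical helpers. With 4 frames, a 4-page working set incurs only the initial faults, while a 5-page working set makes LRU evict exactly the page that is needed next.

```python
from collections import OrderedDict

def count_faults(refs, num_frames):
    """Count page faults for a reference string under LRU replacement."""
    frames = OrderedDict()   # resident VPNs, least recently used first
    faults = 0
    for vpn in refs:
        if vpn in frames:
            frames.move_to_end(vpn)         # hit: now most recently used
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.popitem(last=False)  # evict least recently used page
            frames[vpn] = None
    return faults

def loop_over_working_set(ws_size, passes):
    """Reference pages 0..ws_size-1 in order, `passes` times."""
    return [vpn for _ in range(passes) for vpn in range(ws_size)]

fits = count_faults(loop_over_working_set(4, 10), num_frames=4)
thrash = count_faults(loop_over_working_set(5, 10), num_frames=4)
```

With ten passes, the first case faults 4 times, while the second faults on every one of its 50 references: one extra page in the working set turns a handful of misses into continuous swapping.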

VM as a Tool for Memory Management

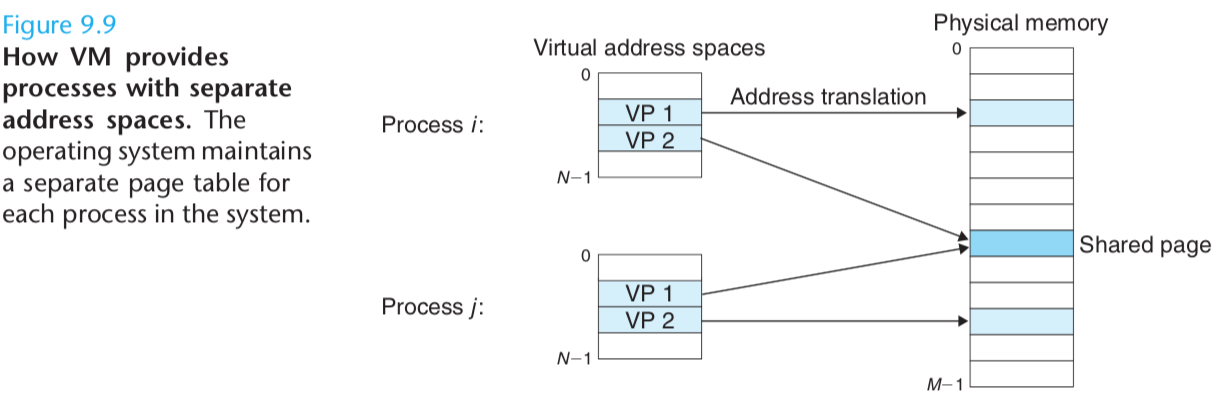

In fact, operating systems provide a separate page table, and thus a separate virtual address space, for each process.

Figure 9.9 shows the basic idea. In the example, the page table for process i maps VP 1 to PP 2 and VP 2 to PP 7.

Similarly, the page table for process j maps VP 1 to PP 7 and VP 2 to PP 10.

Notice that multiple virtual pages can be mapped to the same shared physical page: here, VP 2 of process i and VP 1 of process j both map to PP 7.
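The mappings described for Figure 9.9 can be written down as one page table per process. In this sketch, Python dicts stand in for page tables (a real page table is an array of PTEs indexed by VPN), and `translate_vpn` and `shared_physical_pages` are hypothetical helpers.

```python
# One page table per process: VPN -> PPN (values from the Figure 9.9 example)
page_tables = {
    "i": {1: 2, 2: 7},   # process i: VP 1 -> PP 2, VP 2 -> PP 7
    "j": {1: 7, 2: 10},  # process j: VP 1 -> PP 7, VP 2 -> PP 10
}

def translate_vpn(pid, vpn):
    """Look up a VPN in the page table of process `pid`."""
    return page_tables[pid][vpn]

def shared_physical_pages(tables):
    """Return the physical pages mapped by more than one process."""
    owners = {}
    for pid, table in tables.items():
        for ppn in table.values():
            owners.setdefault(ppn, set()).add(pid)
    return {ppn for ppn, pids in owners.items() if len(pids) > 1}
```

The same VPN 1 resolves to different physical pages in the two processes, which is what makes the address spaces separate, while PP 7 is reachable from both.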

In particular, VM simplifies linking and loading, the sharing of code and data, and allocating memory to applications.

(1) Simplifying linking.

A separate address space allows each process to use the same basic format for its memory image, regardless of where the code and data actually reside in physical memory.

(2) Simplifying loading.

Virtual memory also makes it easy to load executable and shared object files into memory.

Recall from Chapter 7 that the .text and .data sections in ELF executables are contiguous. To load these sections into a newly created process, the Linux loader allocates a contiguous chunk of virtual pages starting at address 0x08048000 (32-bit address spaces) or 0x400000 (64-bit address spaces), marks them as invalid (i.e., not cached), and points their page table entries to the appropriate locations in the object file.

The interesting point is that the loader never actually copies any data from disk into memory.

The data is paged in automatically and on demand by the virtual memory system the first time each page is referenced, either by the CPU when it fetches an instruction, or by an executing instruction when it references a memory location.

This notion of mapping a set of contiguous virtual pages to an arbitrary location in an arbitrary file is known as memory mapping.

Unix provides a system call called mmap that allows application programs to do their own memory mapping.
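The sketch below uses Python's standard `mmap` module, which on Unix is built on the mmap system call, to map a small temporary file read-only and read from it without any explicit `read()` call. The file contents are made up, and whether the kernel actually defers loading until the first access is up to the operating system; Python does not expose that detail.

```python
import mmap
import os
import tempfile

# Write a small file to stand in for an object file on disk.
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.write(b"hello, virtual memory\n")
    path = f.name

with open(path, "rb") as f:
    # Length 0 maps the whole file; no read() copies its bytes into a buffer.
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
        first_word = m[:5]   # touching the mapping lets the OS page the data in
        size = len(m)

os.remove(path)
```

Slicing the mapping returns `b"hello"`, and its length equals the 22-byte file size.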

(3) Simplifying sharing.

In general, each process has its own private code, data, heap, and stack areas that are not shared with any other process.

In this case, the operating system creates page tables that map the corresponding virtual pages to disjoint physical pages.

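However, it is sometimes desirable for processes to share code and data. For example, every process must call the same operating system kernel code, and every C program calls routines in the standard C library such as printf. Rather than including separate copies of the kernel and the standard C library in each process, the operating system can arrange for multiple processes to share a single copy of this code by mapping the appropriate virtual pages in different processes to the same physical pages.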

(4) Simplifying memory allocation.

Virtual memory provides a simple mechanism for allocating additional memory to user processes.

When a program running in a user process requests additional heap space (e.g., as a result of calling malloc), the operating system allocates an appropriate number, say, k, of contiguous virtual memory pages, and maps them to k arbitrary physical pages located anywhere in physical memory.

Because of the way page tables work, there is no need for the operating system to locate k contiguous pages of physical memory. The pages can be scattered randomly in physical memory.
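A sketch of that allocation step, assuming a hypothetical `allocate_heap_pages` helper, a dict page table mapping VPN to PPN, and a set of free physical frames. Frames are picked at random only to emphasize that any k free frames will do; a real kernel uses its own page allocator.

```python
import random

def allocate_heap_pages(page_table, free_frames, start_vpn, k, rng):
    """Map k contiguous virtual pages starting at start_vpn to k arbitrary
    free physical frames. The frames need not be contiguous."""
    if len(free_frames) < k:
        raise MemoryError("not enough free physical pages")
    chosen = rng.sample(sorted(free_frames), k)   # any k free frames will do
    for offset, ppn in enumerate(chosen):
        page_table[start_vpn + offset] = ppn      # contiguous VPNs
        free_frames.remove(ppn)                   # frame is no longer free
    return chosen

rng = random.Random(0)
free = {3, 9, 14, 22, 40, 41}
table = {}
frames = allocate_heap_pages(table, free, start_vpn=16, k=3, rng=rng)
```

The virtual side is always VPNs 16, 17, 18, while the physical frames backing them can be any three of the free ones.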

VM as a Tool for Memory Protection

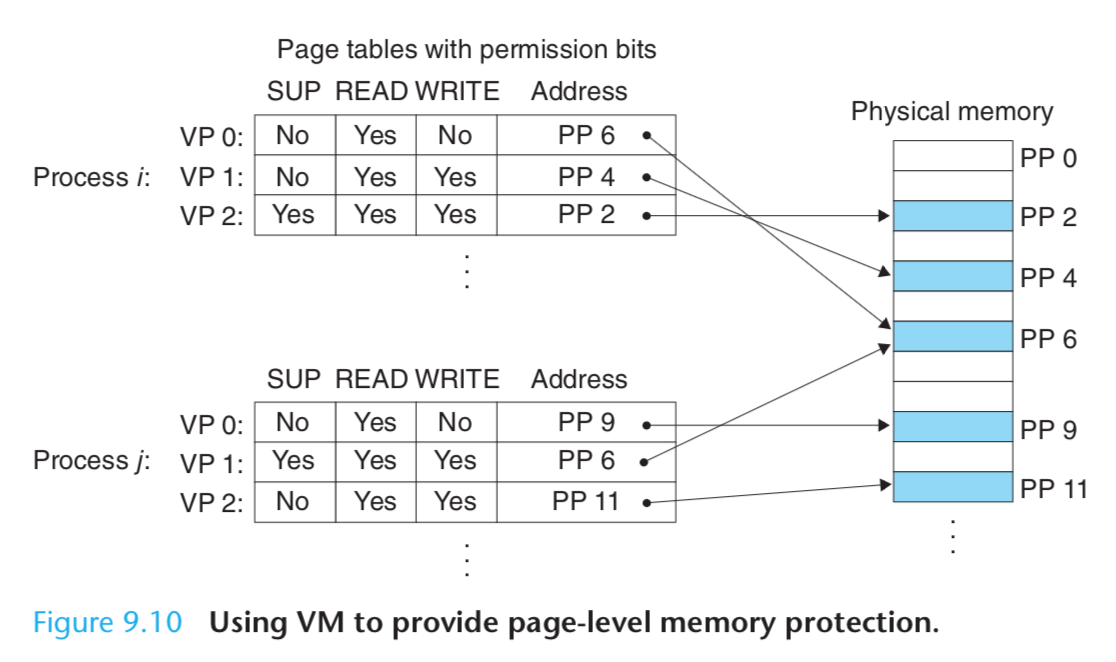

As we have seen, providing separate virtual address spaces makes it easy to isolate the private memories of different processes.

But the address translation mechanism can be extended in a natural way to provide even finer access control.

Since the address translation hardware reads a PTE each time the CPU generates an address, it is straightforward to control access to the contents of a virtual page by adding some additional permission bits to the PTE.

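Figure 9.10 shows the general idea. In this example, three permission bits have been added to each PTE. The SUP bit indicates whether processes must be running in kernel (supervisor) mode to access the page: processes running in kernel mode can access any page, but processes running in user mode may only access pages for which SUP is 0. The READ and WRITE bits control read and write access to the page.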

If an instruction violates these permissions, then the CPU triggers a general protection fault that transfers control to an exception handler in the kernel.

Unix shells typically report this exception as a “segmentation fault.”
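A sketch of the permission check, with a hypothetical `PTE` record holding the SUP, READ, and WRITE bits and a `ProtectionFault` exception standing in for the general protection fault. The two example PTEs are made up rather than copied from Figure 9.10.

```python
from dataclasses import dataclass

class ProtectionFault(Exception):
    """Stands in for the general protection fault ("segmentation fault")."""

@dataclass(frozen=True)
class PTE:
    ppn: int
    sup: bool     # page accessible only in kernel (supervisor) mode
    read: bool    # reads allowed
    write: bool   # writes allowed

def check_access(pte, kernel_mode, is_write):
    """Return the PPN if the access is permitted, else raise ProtectionFault."""
    if pte.sup and not kernel_mode:
        raise ProtectionFault("user-mode access to a supervisor page")
    if is_write and not pte.write:
        raise ProtectionFault("write to a read-only page")
    if not is_write and not pte.read:
        raise ProtectionFault("read from an unreadable page")
    return pte.ppn

# Hypothetical PTEs: a read-only user page and a kernel-only page
text_page = PTE(ppn=6, sup=False, read=True, write=False)
kernel_page = PTE(ppn=2, sup=True, read=True, write=True)
```

Because the check runs on every translation, a user-mode write to `text_page` or any user-mode access to `kernel_page` is caught before memory is touched.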

Address Translation

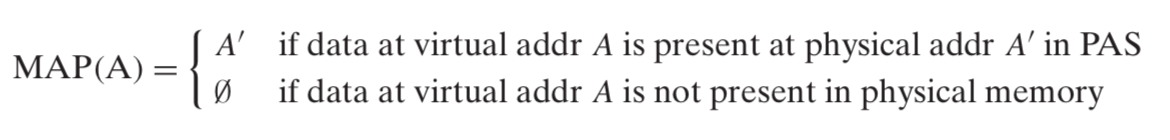

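Formally, address translation is a mapping between the elements of an N-element virtual address space (VAS) and an M-element physical address space (PAS),

$$\mathrm{MAP}: \mathrm{VAS} \rightarrow \mathrm{PAS} \cup \varnothing$$

where

$$\mathrm{MAP}(A) = \begin{cases} A' & \text{if data at virtual address } A \text{ are present at physical address } A' \text{ in PAS} \\ \varnothing & \text{if data at virtual address } A \text{ are not present in physical memory} \end{cases}$$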

Figure 9.12 shows how the MMU uses the page table to perform this mapping.

A control register in the CPU, the page table base register (PTBR), points to the current page table.

The n-bit virtual address has two components: a p-bit virtual page offset (VPO) and an (n − p)-bit virtual page number (VPN).

The MMU uses the VPN to select the appropriate PTE.

For example, VPN 0 selects PTE 0, VPN 1 selects PTE 1, and so on.

The corresponding physical address is the concatenation of the physical page number (PPN) from the page table entry and the VPO from the virtual address.

Because physical and virtual pages are both P = 2^p bytes, the physical page offset (PPO) is identical to the VPO.
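The VPN/VPO split and the concatenation of PPN and VPO are plain bit operations. This sketch assumes 4 KB pages (p = 12) and a dict standing in for the page table whose entries are `(valid, ppn)` pairs; `split_va` and `translate` are hypothetical names, and `LookupError` stands in for a page fault.

```python
def split_va(va, p):
    """Split a virtual address into (VPN, VPO) for pages of 2**p bytes."""
    vpo = va & ((1 << p) - 1)   # low p bits
    vpn = va >> p               # remaining n - p high bits
    return vpn, vpo

def translate(va, page_table, p):
    vpn, vpo = split_va(va, p)
    valid, ppn = page_table[vpn]     # the VPN selects the PTE
    if not valid:
        raise LookupError(f"page fault on VPN {vpn:#x}")
    return (ppn << p) | vpo          # PA = PPN concatenated with PPO (= VPO)

P = 12  # assumed 4 KB pages
page_table = {0x1: (True, 0x2A), 0x2: (False, None)}
pa = translate(0x1ABC, page_table, P)
```

For virtual address 0x1ABC, the VPN is 0x1 and the VPO is 0xABC, so with PPN 0x2A the physical address is 0x2AABC; the low 12 bits pass through unchanged.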

Figure 9.13(a) shows the steps that the CPU hardware performs when there is a page hit.

- Step 1: The processor generates a virtual address and sends it to the MMU.
- Step 2: The MMU generates the PTE address and requests it from the cache/main memory.
- Step 3: The cache/main memory returns the PTE to the MMU.
- Step 4: The MMU constructs the physical address and sends it to cache/main memory.
- Step 5: The cache/main memory returns the requested data word to the processor.
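The five page-hit steps can be traced in a toy machine where a dict plays the role of cache/main memory. All of the following are assumptions for illustration: 8-byte PTEs stored as `(valid, ppn)` tuples, 4 KB pages, and a hypothetical `Machine` class whose `ptbr` field plays the PTBR. Notice that in this model even a hit takes two trips to memory, one for the PTE and one for the data word.

```python
PTE_SIZE = 8   # assumed size of one PTE in bytes
P = 12         # assumed page size of 2**12 = 4 KB

class Machine:
    """Toy CPU + MMU + memory; `memory` maps addresses to PTEs or data words."""

    def __init__(self, ptbr):
        self.ptbr = ptbr   # page table base register
        self.memory = {}
        self.trace = []

    def load(self, va):
        self.trace.append("1: CPU sends VA to MMU")
        vpn, vpo = va >> P, va & ((1 << P) - 1)
        pte_addr = self.ptbr + vpn * PTE_SIZE     # PTE address from PTBR and VPN
        self.trace.append("2: MMU requests PTE from memory")
        valid, ppn = self.memory[pte_addr]
        self.trace.append("3: memory returns PTE to MMU")
        if not valid:
            raise LookupError("page fault: kernel handler needed")
        pa = (ppn << P) | vpo
        self.trace.append("4: MMU sends PA to memory")
        word = self.memory[pa]
        self.trace.append("5: memory returns word to CPU")
        return word

m = Machine(ptbr=0x10000)
m.memory[m.ptbr + 3 * PTE_SIZE] = (True, 7)   # PTE 3: VP 3 is cached in PP 7
m.memory[(7 << P) | 0x10] = 42                # data word in PP 7 at offset 0x10
word = m.load((3 << P) | 0x10)
```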

Unlike a page hit, which is handled entirely by hardware, handling a page fault requires cooperation between hardware and the operating system kernel (Figure 9.13(b)).

- Steps 1 to 3: The same as Steps 1 to 3 in Figure 9.13(a).
- Step 4: The valid bit in the PTE is zero, so the MMU triggers an exception, which transfers control in the CPU to a page fault exception handler in the operating system kernel.
- Step 5: The fault handler identifies a victim page in physical memory, and if that page has been modified, pages it out to disk.
- Step 6: The fault handler pages in the new page and updates the PTE in memory.
- Step 7: The fault handler returns to the original process, causing the faulting instruction to be restarted. The CPU resends the offending virtual address to the MMU.

Because the virtual page is now cached in physical memory, there is a hit, and after the MMU performs the steps in Figure 9.13(a), the main memory returns the requested word to the processor.
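Steps 4 through 7 can be sketched the same way. This toy `VM` class is hypothetical: a dict stands in for disk, each PTE is a `[valid, ppn, dirty]` list, and victims are chosen round-robin, which is an assumption rather than any real kernel's policy. Only dirty victims are written back, and after the handler runs, `access` retries the same reference, which now hits.

```python
class VM:
    """Toy VM: a page table, a few DRAM frames, and a dict standing in for disk."""

    def __init__(self, num_frames, disk):
        self.disk = disk                    # VPN -> page contents on disk
        self.page_table = {}                # VPN -> [valid, ppn, dirty]
        self.frames = [None] * num_frames   # PPN -> VPN currently cached there
        self.dram = [None] * num_frames     # PPN -> page contents
        self.next_victim = 0                # round-robin pointer (an assumption)
        self.faults = 0
        self.writebacks = 0

    def _handle_fault(self, vpn):
        self.faults += 1
        ppn = self.next_victim                        # Step 5: pick a victim frame
        self.next_victim = (ppn + 1) % len(self.frames)
        old = self.frames[ppn]
        if old is not None:
            if self.page_table[old][2]:               # page out only if modified
                self.disk[old] = self.dram[ppn]
                self.writebacks += 1
            self.page_table[old] = [False, None, False]
        self.dram[ppn] = self.disk[vpn]               # Step 6: page in...
        self.frames[ppn] = vpn
        self.page_table[vpn] = [True, ppn, False]     # ...and update the PTE

    def access(self, vpn, write_value=None):
        while True:                                   # Step 7: restart after a fault
            valid, ppn, _ = self.page_table.get(vpn, [False, None, False])
            if valid:
                if write_value is not None:
                    self.dram[ppn] = write_value
                    self.page_table[vpn][2] = True    # mark the page dirty
                return self.dram[ppn]
            self._handle_fault(vpn)                   # Step 4: trap to the "kernel"

vm = VM(num_frames=1, disk={0: "A", 1: "B"})
r1 = vm.access(0)
r2 = vm.access(0, write_value="A2")
r3 = vm.access(1)
r4 = vm.access(0)
```

In this run, the write makes VP 0 dirty, so evicting it writes "A2" back to disk, and the later re-read of VP 0 pages in the updated value. VP 1 is never modified, so evicting it causes no write-back.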