This is a simple C++ web crawler. It is not very efficient.

    #include <stdio.h>

    int s1[1000000], s2[1000000];

    void fun(int a, int b)
    {
        int i, ii;
        bool t1, t2, t3, t4;
        s1[0] = s2[0] = s1[1] = s2[1] = 0;
        for (i = a; i <= b; i++) {
            ii = i;
            t1 = t2 = t3 = t4 = false;
            while (ii != 0) {
                int a = ii % 10;
                if (a == 5)
                {
                    t1 = true;
                }
                else if (a == 2)
                {
                    t2 = true;
                }
                else if (a == 1)
                {
                    t3 = true;
                }
                ii = ii / 10;
            }
            if (t1 && t2 && t3) {
                s1[i-1] = s1[i-2] + 1;
                ii = i;
                while (ii != 0) {
                    int a = ii % 10;
                    int b = (ii / 10) % 10;
                    int c = (ii / 100) % 10;
                    if (c > 0 && a == 1 && b == 2 && c == 5)
                        t4 = true;
                    ii = ii / 10;
                }
                if (t4)
                    s2[i-1] = s2[i-2] + 1;
                else
                    s2[i-1] = s2[i-2];
            }
            else {
                s2[i-1] = s2[i-2];
                s1[i-1] = s1[i-2];
            }
        }
    }

    int main()
    {
        int a, b, i = 1;
        fun(2, 1000000);
        while (scanf("%d%d", &a, &b) != EOF) {
            if (a == 1)
                printf("Case %d:%d %d ", i, s1[b-1] - s1[a-1], s2[b-1] - s2[a-1]);
            else
                printf("Case %d:%d %d ", i, s1[b-1] - s1[a-2], s2[b-1] - s2[a-2]);
            i++;
        }
        return 0;
    }

    #include "urlThread.h"
    #include <QFile>
    #include <QMessageBox>
    #include <QTextStream>
    #include <QMainWindow>

    void urlThread::run()
    {
        open();
    }

    void urlThread::startThread()
    {
        start();
    }

    // Display the URLs that were found
    void urlThread::open()
    {
        QString path = "url.txt";
        QFile file(path);
        if (!file.open(QIODevice::ReadOnly | QIODevice::Text)) {
            // QMessageBox::warning(this,tr("Read File"),
            //                      tr("Cannot open file: %1").arg(path));
            send("error!cannot open url.txt!");
            return;
        }
        QTextStream in(&file);
        QString line;
        // Read each line exactly once. Calling readLine() in both the loop
        // condition and the body would skip every other URL.
        // The loop stops at the first empty line or at end of file.
        while (!(line = in.readLine()).isEmpty()) {
            //ui->textBrowser->append(line);
            send(q2s(line));
            Sleep(1);
        }
        file.close();
    }

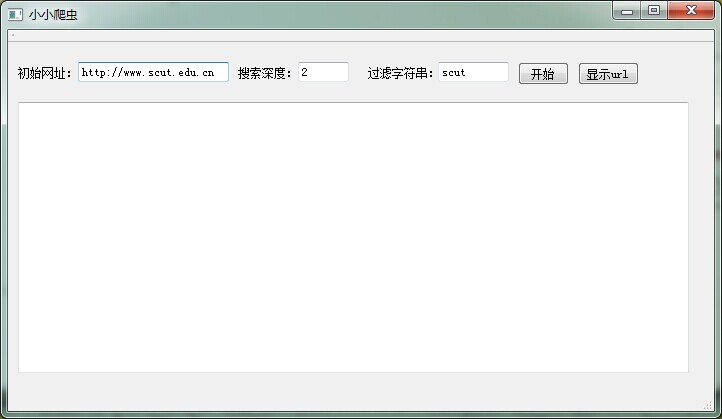

    #include "mainwindow.h"
    #include <QApplication>

    int main(int argc, char *argv[])
    {
        QApplication a(argc, argv);
        MainWindow w;
        w.setWindowTitle("小小爬虫");
        w.show();

        return a.exec();
    }

    #include "mainwindow.h"
    #include "ui_mainwindow.h"

    MainWindow::MainWindow(QWidget *parent) :
        QMainWindow(parent),
        ui(new Ui::MainWindow)
    {
        ui->setupUi(this);
        QObject::connect(ui->start,SIGNAL(released()),this,SLOT(beginGeturl()));
        //QObject::connect(ui->display,SIGNAL(released()),this,SLOT(open()));
        QObject::connect(ui->display,SIGNAL(released()),&uth,SLOT(startThread()));
        QObject::connect(&uth,&urlThread::sendMessage,this,&MainWindow::receiveMessage);
        QObject::connect(&crawler,&Crawler::sendMessage,this,&MainWindow::receiveMessage);
    }

    MainWindow::~MainWindow()
    {
        delete ui;
    }

    // Append a message from a worker thread to the text browser and scroll to the end
    void MainWindow::receiveMessage(const QString name)
    {
        ui->textBrowser->append(name);
        ui->textBrowser->moveCursor(QTextCursor::End);
    }

    void MainWindow::open()
    {
        QString path = "url.txt";
        QFile file(path);
        if (!file.open(QIODevice::ReadOnly | QIODevice::Text)) {
            QMessageBox::warning(this,tr("Read File"),
                                 tr("Cannot open file: %1").arg(path));
            return;
        }
        QTextStream in(&file);
        QString line;
        // Read each line exactly once; stop at the first empty line or at end of file.
        while (!(line = in.readLine()).isEmpty()) {
            //ui->textBrowser->append(line);
            crawler.send(q2s(line));
        }
        file.close();
    }

    void MainWindow::beginGeturl()
    {
        //crawler = new Crawler();
        string url = "", dep, filter = "www";
        if (!ui->site->text().isEmpty())
            url = q2s(ui->site->text());
        crawler.addURL(url);
        int depth = 1;
        if (!ui->depth->text().isEmpty())
        {
            // Keep the depth text in its own variable instead of overwriting url
            dep = q2s(ui->depth->text());
            depth = atoi(dep.c_str());
        }
        if (!ui->filter->text().isEmpty())
            filter = q2s(ui->filter->text());
        crawler.setJdugeDomain(filter);
        crawler.setDepth(depth);
        crawler.startThread();
    }
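`beginGeturl()` only hands the start URL, the depth, and the filter string to the crawler; the crawl loop itself is not included in this post. As a rough sketch of what a depth-limited, breadth-first crawl with a substring filter could look like: `crawlSketch`, `LinkFetcher`, and `linksOf` are hypothetical names, `linksOf` stands in for the real download-and-parse step, and treating the start URL as depth 1 is an assumption.

```cpp
#include <functional>
#include <queue>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical callback: returns the links found on the page at the given URL.
using LinkFetcher = std::function<std::vector<std::string>(const std::string&)>;

// Visits pages breadth-first, starting at depth 1, and returns them in visit order.
// Pages at maxDepth are visited but their links are not followed.
std::vector<std::string> crawlSketch(const std::string& start, int maxDepth,
                                     const std::string& filter,
                                     const LinkFetcher& linksOf)
{
    std::queue<std::pair<std::string, int>> waiting; // URL plus its depth
    std::set<std::string> seen;                      // every URL ever queued
    std::vector<std::string> processed;              // URLs in visit order

    waiting.push(std::make_pair(start, 1));
    seen.insert(start);
    while (!waiting.empty()) {
        const std::pair<std::string, int> current = waiting.front();
        waiting.pop();
        processed.push_back(current.first);
        if (current.second >= maxDepth)
            continue; // depth limit reached, do not expand this page
        for (const std::string& link : linksOf(current.first)) {
            // Queue only unseen links that contain the filter substring.
            if (link.find(filter) != std::string::npos && seen.insert(link).second)
                waiting.push(std::make_pair(link, current.second + 1));
        }
    }
    return processed;
}
```

Keeping a single `seen` set that is checked before queuing means a URL is never queued twice, so the queue cannot grow with duplicates even when pages link back to each other.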

    #ifndef CRAWLER_H
    #define CRAWLER_H

    #include <set>
    #include <string>
    #include <queue>
    #include "winsock2.h"
    #include <iostream>
    #include <fstream>
    #include <stdio.h>
    #include <time.h>
    #include <winsock.h>
    #include <QThread>

    #pragma comment(lib, "ws2_32.lib")
    using namespace std;

    bool ParseURL(const string & url, string & host, string & resource);
    bool GetHttpResponse(const string & url, char * &response, int &bytesRead);
    QString s2q(const string &s);
    string q2s(const QString &s);

    #define DEFAULT_PAGE_BUF_SIZE 1000000

    class Crawler: public QThread
    {
        Q_OBJECT
    private:
        queue<string> urlWaiting;
        set<string> urlWaitset;
        set<string> urlProcessed;
        set<string> urlError;
        set<string> disallow;
        set<string>::iterator it;
        int numFindUrl;
        time_t starttime, finish;
        string filter;
        int depth;

    public:
        // The filter literal was garbled in the source; "\0" (an empty filter) is assumed.
        Crawler(){ filter = "\0"; numFindUrl = 0; }
        ~Crawler(){}
        void begin();
        void setDepth(int depth);
        void processURL(string& strUrl);
        void addURL(string url);
        void log(string entry, int num);
        void HTMLParse(string & htmlResponse, const string & host);
        bool getRobotx(const string & url, char * &response, int &bytesRead);
        void setJdugeDomain(const string domain);
        long urlOtherWebsite(string url);
        // Forward a message to the GUI thread through the sendMessage signal
        void send(string s)
        {
            QString qs = s2q(s);
            emit sendMessage(qs);
        }
    signals:
        void sendMessage(const QString name);

    public slots:
        bool startThread();

    protected:
        void run();

    };
    #endif // CRAWLER_H
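The header declares `ParseURL(url, host, resource)`, but its definition is not shown in this post. A minimal sketch of a function with the same shape might look like the following. The name `parseUrlSketch` is hypothetical, and it assumes that only plain `http://` URLs need to be handled and that a missing path means `/`.

```cpp
#include <string>

// Hypothetical stand-in for the ParseURL declared in crawler.h.
// Splits "http://host/path" into host and resource; returns false for
// anything that does not start with "http://" or has an empty host.
bool parseUrlSketch(const std::string& url, std::string& host, std::string& resource)
{
    const std::string scheme = "http://";
    if (url.compare(0, scheme.size(), scheme) != 0)
        return false; // assumption: other schemes are simply rejected

    const std::string::size_type hostStart = scheme.size();
    const std::string::size_type slash = url.find('/', hostStart);
    if (slash == std::string::npos) {
        // No path component: request the site root.
        host = url.substr(hostStart);
        resource = "/";
    } else {
        host = url.substr(hostStart, slash - hostStart);
        resource = url.substr(slash);
    }
    return !host.empty();
}
```

With the default start address from the form, `http://www.scut.edu.cn`, this yields the host `www.scut.edu.cn` and the resource `/`.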

    #ifndef MAINWINDOW_H
    #define MAINWINDOW_H

    #include <QMainWindow>
    #include <QFile>
    #include <QMessageBox>
    #include <QTextStream>
    #include <QLineEdit>
    #include <QDebug>
    #include "crawler.h"
    #include "urlThread.h"

    namespace Ui {
    class MainWindow;
    }

    class MainWindow : public QMainWindow
    {
        Q_OBJECT

    public:
        explicit MainWindow(QWidget *parent = 0);
        ~MainWindow();
        void receiveMessage(const QString name);

    public slots:
        void beginGeturl();
        void open();

    private:
        Ui::MainWindow *ui;
        Crawler crawler;
        urlThread uth;
    };

    #endif // MAINWINDOW_H

    #ifndef URLTHREAD_H
    #define URLTHREAD_H
    #include "crawler.h"

    class urlThread: public QThread
    {
        Q_OBJECT
    public slots:
        void startThread();
        void open();
        void send(string s)
        {
            QString qs = s2q(s);
            emit sendMessage(qs);
        }
    signals:
        void sendMessage(const QString name);
    protected:
        void run();
    };

    #endif // URLTHREAD_H

    <?xml version="1.0" encoding="UTF-8"?>
    <ui version="4.0">
     <class>MainWindow</class>
     <widget class="QMainWindow" name="MainWindow">
      <property name="geometry">
       <rect>
        <x>0</x>
        <y>0</y>
        <width>706</width>
        <height>381</height>
       </rect>
      </property>
      <property name="windowTitle">
       <string>MainWindow</string>
      </property>
      <widget class="QWidget" name="centralWidget">
       <widget class="QLabel" name="label">
        <property name="geometry">
         <rect>
          <x>10</x>
          <y>20</y>
          <width>54</width>
          <height>21</height>
         </rect>
        </property>
        <property name="text">
         <string>初始网址:</string>
        </property>
       </widget>
       <widget class="QLineEdit" name="site">
        <property name="geometry">
         <rect>
          <x>70</x>
          <y>20</y>
          <width>151</width>
          <height>20</height>
         </rect>
        </property>
        <property name="text">
         <string>http://www.scut.edu.cn</string>
        </property>
       </widget>
       <widget class="QLabel" name="label_2">
        <property name="geometry">
         <rect>
          <x>230</x>
          <y>20</y>
          <width>54</width>
          <height>21</height>
         </rect>
        </property>
        <property name="text">
         <string>搜索深度:</string>
        </property>
       </widget>
       <widget class="QLineEdit" name="depth">
        <property name="geometry">
         <rect>
          <x>290</x>
          <y>20</y>
          <width>51</width>
          <height>20</height>
         </rect>
        </property>
        <property name="text">
         <string>2</string>
        </property>
       </widget>
       <widget class="QLabel" name="label_3">
        <property name="geometry">
         <rect>
          <x>360</x>
          <y>20</y>
          <width>71</width>
          <height>21</height>
         </rect>
        </property>
        <property name="text">
         <string>过滤字符串:</string>
        </property>
       </widget>
       <widget class="QLineEdit" name="filter">
        <property name="geometry">
         <rect>
          <x>430</x>
          <y>20</y>
          <width>71</width>
          <height>20</height>
         </rect>
        </property>
        <property name="text">
         <string>scut</string>
        </property>
       </widget>
       <widget class="QPushButton" name="start">
        <property name="geometry">
         <rect>
          <x>510</x>
          <y>20</y>
          <width>51</width>
          <height>23</height>
         </rect>
        </property>
        <property name="text">
         <string>开始</string>
        </property>
       </widget>
       <widget class="QPushButton" name="display">
        <property name="geometry">
         <rect>
          <x>570</x>
          <y>20</y>
          <width>61</width>
          <height>23</height>
         </rect>
        </property>
        <property name="text">
         <string>显示url</string>
        </property>
       </widget>
       <widget class="QTextBrowser" name="textBrowser">
        <property name="geometry">
         <rect>
          <x>10</x>
          <y>60</y>
          <width>671</width>
          <height>271</height>
         </rect>
        </property>
       </widget>
      </widget>
      <widget class="QMenuBar" name="menuBar">
       <property name="geometry">
        <rect>
         <x>0</x>
         <y>0</y>
         <width>706</width>
         <height>23</height>
        </rect>
       </property>
      </widget>
      <widget class="QToolBar" name="mainToolBar">
       <attribute name="toolBarArea">
        <enum>TopToolBarArea</enum>
       </attribute>
       <attribute name="toolBarBreak">
        <bool>false</bool>
       </attribute>
      </widget>
      <widget class="QStatusBar" name="statusBar"/>
     </widget>
     <layoutdefault spacing="6" margin="11"/>
     <resources/>
     <connections/>
    </ui>

You have to design the .ui file yourself.

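The crawler still has a few minor problems: the content fetched from a URL is not always complete, and some addresses are still not handled quite right.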
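The download code (`GetHttpResponse`) is not shown in this post, so the actual cause of the incomplete content is unknown. One common cause in socket-based fetchers is treating a single read as the whole response, when a read may return only part of it. A minimal sketch of reading until the peer closes the connection follows. `readWholeResponse` and `RecvFn` are hypothetical names, and `recvSome` stands in for a call such as Winsock's `recv()` on an already connected socket.

```cpp
#include <functional>
#include <string>

// Hypothetical stand-in for a socket read such as recv(): fills buf with up
// to len bytes and returns the number of bytes read, 0 once the peer has
// closed the connection, or a negative value on error.
using RecvFn = std::function<int(char* buf, int len)>;

// Appends chunks until the connection is closed. Returns false on a read
// error, in which case response holds whatever was received before it.
bool readWholeResponse(const RecvFn& recvSome, std::string& response)
{
    char chunk[4096];
    response.clear();
    for (;;) {
        const int n = recvSome(chunk, static_cast<int>(sizeof(chunk)));
        if (n < 0)
            return false; // read error
        if (n == 0)
            return true;  // peer closed the connection: response is complete
        response.append(chunk, static_cast<std::string::size_type>(n));
    }
}
```

Growing a `std::string` this way also avoids depending on a fixed page buffer size. Note that this only terminates promptly if the server closes the connection after the response, for example when the request asks for `Connection: close`.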

It looks like cnblogs (博客园) has no place to upload attachments. Or did I just not find it? Any pointers would be appreciated.