This guide covers only hadoop-common, hadoop-hdfs, hadoop-mapreduce and hadoop-yarn; HBase, Hive, Pig and similar components are not included.

http://blog.csdn.net/aquester/article/details/24621005

1. Planning

1.1. Machine list

| NameNode | SecondaryNameNode | DataNodes |
|---|---|---|
| 172.16.0.100 | 172.16.0.101 | 172.16.0.110 |
| | | 172.16.0.111 |
| | | 172.16.0.112 |

1.2. Hostnames

| Machine IP | Hostname |
|---|---|
| 172.16.0.100 | NameNode |
| 172.16.0.101 | SecondaryNameNode |
| 172.16.0.110 | DataNode110 |
| 172.16.0.111 | DataNode111 |
| 172.16.0.112 | DataNode112 |

2. Set the IP address and hostname

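If the machine was cloned from another virtual machine, delete the persistent-net udev rule so that the network interface is named eth0 again:

# rm -f /etc/udev/rules.d/70-persistent-net.rules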

# vi /etc/sysconfig/network-scripts/ifcfg-eth0 (the values below are for the NameNode; set IPADDR to each machine's own address)

DEVICE=eth0

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

NETMASK=255.255.0.0

GATEWAY=192.168.0.6

IPADDR=192.168.1.20

DNS1=192.168.0.3

DNS2=192.168.0.6

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME="System eth0"
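The gateway must lie in the same subnet as IPADDR. With NETMASK=255.255.0.0, both 192.168.1.20 and the gateway 192.168.0.6 fall in 192.168.0.0/16. With a /24 mask they would not, and the gateway would be unreachable. The bash sketch below checks this. The helper names `ip_to_int` and `same_subnet` are hypothetical and are not part of any system tool.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of the OS) for checking that a gateway
# is on the same subnet as the host address.

# ip_to_int A.B.C.D: print a dotted IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a octet
  read -r -a octet <<< "$1"
  echo "$(( (octet[0] << 24) | (octet[1] << 16) | (octet[2] << 8) | octet[3] ))"
}

# same_subnet IP GATEWAY NETMASK: succeed if IP and GATEWAY share a network.
same_subnet() {
  local ip gw mask
  ip=$(ip_to_int "$1")
  gw=$(ip_to_int "$2")
  mask=$(ip_to_int "$3")
  (( (ip & mask) == (gw & mask) ))
}
```

Usage with the values above: `same_subnet 192.168.1.20 192.168.0.6 255.255.0.0 && echo "gateway is on-link"`.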

# vi /etc/sysconfig/network (set HOSTNAME to each machine's own hostname)

NETWORKING=yes

HOSTNAME=NameNode.smartmap

# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

192.168.1.20 NameNode NameNode.smartmap

192.168.1.50 SecondaryNameNode SecondaryNameNode.smartmap

192.168.1.70 DataNode110 DataNode110.smartmap

192.168.1.90 DataNode111 DataNode111.smartmap
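Every hostname used later, in the slaves file and in the SSH steps, must resolve on every node. The hypothetical bash helper below lists the names that have no entry in an /etc/hosts-style file. If you run it against the file above with all five planned hostnames, it reports DataNode112. That host is in the machine plan and the SSH steps but has no line here.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the OS).
# missing_hosts FILE NAME...: print each NAME that does not appear as a
# hostname or alias in the /etc/hosts-format FILE. Comments are ignored;
# matching is exact and case-sensitive.
missing_hosts() {
  local file=$1 name
  shift
  for name in "$@"; do
    # Drop comments, then look for NAME in any column after the IP address.
    if ! sed 's/#.*//' "$file" | awk -v n="$name" '
          { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
          END { exit !found }'; then
      echo "$name"
    fi
  done
}
```

Usage: `missing_hosts /etc/hosts NameNode SecondaryNameNode DataNode110 DataNode111 DataNode112` prints nothing once every host has an entry.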

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

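3. Passwordless SSH login

3.1. Scope of passwordless login

Passwordless login must work with both IP addresses and hostnames:

1) The NameNode can log in to every DataNode without a password.

2) The SecondaryNameNode can log in to every DataNode without a password.

3) The NameNode can log in to itself without a password.

4) The SecondaryNameNode can log in to itself without a password.

5) The NameNode can log in to the SecondaryNameNode without a password.

6) The SecondaryNameNode can log in to the NameNode without a password.

7) Each DataNode can log in to itself without a password.

8) DataNodes do not need passwordless login to the NameNode, the SecondaryNameNode or other DataNodes.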

3.2. Install software

# yum install openssh-clients (run on the NameNode, the SecondaryNameNode and all DataNodes)

# yum install wget

3.3. SSH configuration

# vi /etc/ssh/sshd_config (run on the NameNode, the SecondaryNameNode and all DataNodes)

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

# service sshd restart

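3.4. Passwordless SSH setup

# ssh-keygen -t rsa (run on the NameNode, the SecondaryNameNode and all DataNodes; press Enter at each prompt to accept the default file and an empty passphrase)

When the key's randomart image is printed, the key pair has been generated. Two new files appear in ~/.ssh:

Private key: id_rsa

Public key: id_rsa.pub

Append the contents of the public key file id_rsa.pub to authorized_keys: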

# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys (run on all nodes, so that every node, including each DataNode, can log in to itself)
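If you run the cat command a second time, the same key is appended again. The sketch below is a minimal idempotent variant, written as a hypothetical bash function. It appends the public key only when that exact line is not already present. It also applies the conventional permissions: 700 for ~/.ssh and 600 for authorized_keys.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of OpenSSH).
# append_key_once PUBKEY AUTHFILE: append the single-line public key in
# PUBKEY to AUTHFILE unless that exact line is already there.
append_key_once() {
  local pubkey=$1 authfile=$2 key
  key=$(head -n 1 "$pubkey")
  mkdir -p "$(dirname "$authfile")"
  chmod 700 "$(dirname "$authfile")"
  touch "$authfile"
  chmod 600 "$authfile"
  # -q quiet, -x whole-line match, -F fixed string (keys contain '+' and '/')
  if ! grep -qxF -- "$key" "$authfile"; then
    printf '%s\n' "$key" >> "$authfile"
  fi
}
```

Usage: `append_key_once ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`.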

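Distribute the public key to the other nodes with ssh-copy-id, which appends the local public key to the remote ~/.ssh/authorized_keys. Copying authorized_keys with scp would instead overwrite the remote file and discard the keys already there, such as the other master's key or a DataNode's own key.

(run on both the NameNode and the SecondaryNameNode)

# ssh-copy-id root@SecondaryNameNode (on the SecondaryNameNode, use root@NameNode instead)

# ssh-copy-id root@DataNode110

# ssh-copy-id root@DataNode111

# ssh-copy-id root@DataNode112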

3.5. Verify passwordless SSH login

Each of the following commands should log in without asking for a password:

(On the NameNode)

# ssh root@localhost

# ssh root@SecondaryNameNode

# ssh root@DataNode110

# ssh root@DataNode111

# ssh root@DataNode112

(On the SecondaryNameNode)

# ssh root@localhost

# ssh root@NameNode

# ssh root@DataNode110

# ssh root@DataNode111

# ssh root@DataNode112

(On DataNode110)

# ssh root@localhost

(On DataNode111)

# ssh root@localhost

(On DataNode112)

# ssh root@localhost
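The checks above can be scripted. With `ssh -o BatchMode=yes`, ssh fails instead of prompting for a password, so a non-zero exit status means passwordless login is not set up for that target. In batch mode ssh also fails when a host key has not been accepted yet. Log in interactively once to each target first, using both the hostname and the IP. The function name `check_passwordless` and the `SSH_CMD` override are hypothetical; the override exists only to make the function testable.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of OpenSSH).
# check_passwordless TARGET...: try a non-interactive login to each TARGET
# (hostname or IP) and print the ones that fail. Returns 1 if any fail.
check_passwordless() {
  local ssh_cmd=${SSH_CMD:-ssh} target failed=0
  for target in "$@"; do
    # BatchMode=yes: never prompt; fail if a password would be needed.
    # ConnectTimeout=5: do not hang on unreachable hosts.
    if ! "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "root@$target" true </dev/null; then
      echo "FAILED: $target"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage on the NameNode: `check_passwordless localhost SecondaryNameNode DataNode110 DataNode111 DataNode112 192.168.1.50`.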

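4. Install the JDK and configure environment variables

(Perform all steps in this section on the NameNode, the SecondaryNameNode and every DataNode.)

4.1. Download the JDK

Download jdk-7u72-linux-x64.tar.gz and copy it to the other machines, for example: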

# scp jdk-7u72-linux-x64.tar.gz root@192.168.1.50:/opt/

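4.2. Remove the open-source JDK bundled with the system

# rpm -qa | grep java

# rpm -e <package-name> (repeat for each OpenJDK package listed by the previous command)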
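The rpm -e step needs exact package names. The sketch below selects the bundled OpenJDK packages from the `rpm -qa` output so that they can be removed in one go. It assumes those packages are named java-<version>-openjdk…, so check the `rpm -qa | grep java` output first. `openjdk_packages` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of rpm).
# openjdk_packages: read package names (one per line, as printed by
# `rpm -qa`) on stdin and print only the OpenJDK ones.
openjdk_packages() {
  # Matches e.g. java-1.6.0-openjdk-... and java-1.7.0-openjdk-devel-...
  grep -E '^java-[0-9.]+-openjdk' || true   # no match is not an error
}
```

Usage: `rpm -qa | openjdk_packages | xargs -r rpm -e --nodeps`. The `--nodeps` flag skips rpm's dependency check, so review the list before running it. GNU `xargs -r` does nothing when the list is empty.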

4.3. Copy the installation file to the installation directory

For example, /opt/java.

4.4. Extract the archive

# cd /opt/java

# tar -xzvf jdk-7u72-linux-x64.tar.gz

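After extraction, /opt/java contains a new directory, jdk1.7.0_72, holding the extracted files.

This completes the installation itself. The next step is to set the environment variables.

Note: if you downloaded the RPM package instead, install it with the command below. The RPM installs into /usr/java/jdk1.7.0_72, so use that path for JAVA_HOME.

# rpm -ivh jdk-7u72-linux-x64.rpm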

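4.5. Set environment variables

Edit /etc/profile. This is the recommended approach, because every user and program on the machine then picks up the JDK:

# vi /etc/profile

Find the line export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE INPUTRC and add the following lines after it: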

export JAVA_HOME=/opt/java/jdk1.7.0_72

export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
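Before moving on, you can confirm that JAVA_HOME points at a full JDK rather than a JRE. A JDK has bin/javac, and Hadoop's scripts launch $JAVA_HOME/bin/java. The hypothetical function below is a minimal check for both executables.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the JDK).
# check_java_home DIR: succeed if DIR looks like a JDK install
# (executable bin/java and bin/javac); print the reason otherwise.
check_java_home() {
  local dir=$1 tool
  for tool in java javac; do
    if [ ! -x "$dir/bin/$tool" ]; then
      echo "missing or not executable: $dir/bin/$tool" >&2
      return 1
    fi
  done
}
```

Usage, after sourcing /etc/profile: `check_java_home "$JAVA_HOME" && echo "JAVA_HOME ok"`.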

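4.6. Apply the environment variables

Source the file so that the changes take effect in the current shell:

# source /etc/profile

Then verify the installation:

# java -version

Output like the following means the installation succeeded: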

java version "1.7.0_72"

Java(TM) SE Runtime Environment (build 1.7.0_72-b14)

Java HotSpot(TM) 64-Bit Server VM (build 24.72-b04, mixed mode)
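`java -version` prints to stderr, so redirect it with 2>&1 when scripting this check. The small sketch below extracts the version string so that it can be compared with the expected 1.7.0_72. `java_version` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the JDK).
# java_version: read `java -version` output on stdin and print the quoted
# version string from the first line (e.g. 1.7.0_72).
java_version() {
  sed -n '1s/^[a-z]* version "\([^"]*\)".*/\1/p'
}
```

Usage: `[ "$(java -version 2>&1 | java_version)" = 1.7.0_72 ] && echo "JDK 1.7.0_72 is active"`.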

5. Install and configure Hadoop

5.1. Download Hadoop

# wget http://mirrors.hust.edu.cn/apache/hadoop/common/stable/hadoop-2.5.1.tar.gz

# scp hadoop-2.5.1.tar.gz root@192.168.1.50:/opt/

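5.2. Extract the archive

Extract it under /opt/hadoop so that Hadoop ends up in /opt/hadoop/hadoop-2.5.1, the path used in the rest of this guide:

# mkdir -p /opt/hadoop

# tar -zxvf hadoop-2.5.1.tar.gz -C /opt/hadoop

5.3. Configuration

# cd /opt/hadoop/hadoop-2.5.1/etc/hadoop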

Use the bundled default configuration files as starting templates for the site files:

# cp /opt/hadoop/hadoop-2.5.1/share/doc/hadoop/hadoop-project-dist/hadoop-common/core-default.xml /opt/hadoop/hadoop-2.5.1/etc/hadoop/core-site.xml

# cp /opt/hadoop/hadoop-2.5.1/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml /opt/hadoop/hadoop-2.5.1/etc/hadoop/hdfs-site.xml

# cp /opt/hadoop/hadoop-2.5.1/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml /opt/hadoop/hadoop-2.5.1/etc/hadoop/yarn-site.xml

# cp /opt/hadoop/hadoop-2.5.1/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml /opt/hadoop/hadoop-2.5.1/etc/hadoop/mapred-site.xml

# mkdir -p /opt/hadoop/tmp/dfs/name

# mkdir -p /opt/hadoop/tmp/dfs/data

# mkdir -p /opt/hadoop/tmp/dfs/namesecondary

5.3.1. core-site.xml

# vi core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.20:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

</configuration>
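The site files must be identical on every node, so it can be easier to generate them than to edit each copy by hand. The minimal bash sketch below does this; the function names `hadoop_property` and `site_xml` are hypothetical. It does no XML escaping, so values must not contain &, < or >.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Hadoop) for generating *-site.xml files.

# hadoop_property NAME VALUE: print one <property> element.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# site_xml NAME VALUE [NAME VALUE ...]: print a complete *-site.xml document.
# Values are inserted verbatim (no XML escaping); an unpaired trailing
# argument is ignored.
site_xml() {
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  while [ "$#" -ge 2 ]; do
    hadoop_property "$1" "$2"
    shift 2
  done
  echo '</configuration>'
}
```

Usage: `site_xml hadoop.tmp.dir /opt/hadoop/tmp fs.defaultFS hdfs://192.168.1.20:9000 io.file.buffer.size 4096 > core-site.xml`. Then copy the result to the other nodes with scp.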

| Property | Value | Applies to |
|---|---|---|
| fs.defaultFS | hdfs://192.168.1.20:9000 | All nodes |
| hadoop.tmp.dir | /opt/hadoop/tmp | All nodes |
| fs.default.name (deprecated alias of fs.defaultFS) | hdfs://192.168.1.20:9000 | |

5.3.2. hdfs-site.xml

# vi hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.1.20:50070</value>

</property>

<property>

<name>dfs.namenode.http-bind-host</name>

<value>192.168.1.20</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.1.50:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
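Hadoop silently ignores misspelled property names, so it is worth checking which values the daemons will actually see. `hdfs getconf -confKey KEY` prints the effective value of a key from the loaded configuration. In the minimal sketch below, `conf_matches` and the `HDFS_CMD` override are hypothetical; the override exists only so that the function can be tested without a cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop).
# conf_matches KEY EXPECTED: succeed if the effective configuration value
# of KEY (as reported by `hdfs getconf -confKey`) equals EXPECTED.
conf_matches() {
  local hdfs_cmd=${HDFS_CMD:-hdfs} actual
  actual=$("$hdfs_cmd" getconf -confKey "$1" 2>/dev/null) || return 1
  if [ "$actual" != "$2" ]; then
    echo "$1: expected '$2', got '$actual'" >&2
    return 1
  fi
}
```

Usage: `conf_matches dfs.replication 2 && conf_matches dfs.namenode.secondary.http-address 192.168.1.50:50090`.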

| Property | Value | Applies to |
|---|---|---|
| dfs.namenode.http-address | 192.168.1.20:50070 | All nodes |
| dfs.namenode.http-bind-host | 192.168.1.20 | All nodes |
| dfs.namenode.secondary.http-address | 192.168.1.50:50090 | NameNode, SecondaryNameNode |
| dfs.replication | 2 | |

5.3.3. mapred-site.xml

# vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobtracker.http.address</name>

<value>192.168.1.20:50030</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>192.168.1.20:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>192.168.1.20:19888</value>

</property>

<property>

<name>mapreduce.jobhistory.admin.address</name>

<value>192.168.1.20:10033</value>

</property>

</configuration>

| Property | Value | Applies to |
|---|---|---|
| mapreduce.framework.name | yarn | All nodes |
| mapreduce.jobtracker.http.address | 192.168.1.20:50030 | |

5.3.4. yarn-site.xml

# vi yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.20</value>

</property>

</configuration>

| Property | Value | Applies to |
|---|---|---|
| yarn.resourcemanager.hostname | 192.168.1.20 | All nodes |
| yarn.nodemanager.aux-services | mapreduce_shuffle | All nodes |

5.3.5. slaves

# vi slaves

DataNode110

DataNode111
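dfs.replication (2 in hdfs-site.xml) cannot usefully exceed the number of DataNodes. Each replica of a block must live on a different DataNode, so with fewer DataNodes than the replication factor every block stays under-replicated. Below is a hypothetical check against the slaves file.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Hadoop).

# count_slaves FILE: number of non-empty, non-comment lines in a slaves file.
count_slaves() {
  grep -cEv '^[[:space:]]*(#|$)' "$1" || true   # grep -c exits 1 on zero matches
}

# check_replication REPLICATION FILE: fail if REPLICATION exceeds the number
# of DataNodes listed in FILE.
check_replication() {
  local n
  n=$(count_slaves "$2")
  if [ "$1" -gt "$n" ]; then
    echo "dfs.replication=$1 but only $n DataNode(s) listed in $2" >&2
    return 1
  fi
}
```

Usage: `check_replication 2 /opt/hadoop/hadoop-2.5.1/etc/hadoop/slaves`.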

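5.3.6. SecondaryNameNode host (masters)

# vi masters

SecondaryNameNode

In Hadoop 2.x, start-dfs.sh takes the SecondaryNameNode host from dfs.namenode.secondary.http-address in hdfs-site.xml, not from this file.

5.3.7. Set JAVA_HOME

Add the JAVA_HOME setting, together with the native-library settings, to both hadoop-env.sh and yarn-env.sh: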

# vi hadoop-env.sh

export JAVA_HOME=/opt/java/jdk1.7.0_72

export HADOOP_HOME=/opt/hadoop/hadoop-2.5.1

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

# vi yarn-env.sh

export JAVA_HOME=/opt/java/jdk1.7.0_72

export HADOOP_HOME=/opt/hadoop/hadoop-2.5.1

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

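5.4. Set environment variables

Edit /etc/profile. This is the recommended approach, because every user and program on the machine then picks up the JDK and Hadoop:

# vi /etc/profile

Find the line export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE INPUTRC and add the following lines after it. They include the JDK settings from section 4.5, so replace those lines rather than adding them twice: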

export JAVA_HOME=/opt/java/jdk1.7.0_72

export JRE_HOME=$JAVA_HOME/jre

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/opt/hadoop/hadoop-2.5.1

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export CLASSPATH=$HADOOP_HOME/lib:$CLASSPATH

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=/opt/hadoop/hadoop-2.5.1/lib/native"

5.4.1. Apply the environment variables

Source the file so that the changes take effect in the current shell:

# source /etc/profile

5.5. Start HDFS and YARN

5.5.1. Format the NameNode (on the NameNode only, and only once)

# hdfs namenode -format
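Formatting is a one-time step. If you re-run it on a NameNode that already holds metadata, the metadata is discarded and the cluster gets a new clusterID. Existing DataNodes then refuse to register. The small guard below assumes the default name directory of this setup, hadoop.tmp.dir/dfs/name (that is, /opt/hadoop/tmp/dfs/name). It checks for the current/VERSION file that a successful format writes there. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop).
# safe_to_format NAME_DIR: succeed only if NAME_DIR has not been formatted
# yet, i.e. it has no current/VERSION file.
safe_to_format() {
  if [ -f "$1/current/VERSION" ]; then
    echo "$1 is already formatted; refusing to format again" >&2
    return 1
  fi
}
```

Usage: `safe_to_format /opt/hadoop/tmp/dfs/name && hdfs namenode -format`.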

5.5.2. Start HDFS (on the NameNode)

# /opt/hadoop/hadoop-2.5.1/sbin/start-dfs.sh

5.5.3. Start YARN (on the NameNode, where the ResourceManager runs)

# /opt/hadoop/hadoop-2.5.1/sbin/start-yarn.sh

If a daemon fails to start, set the logger level to DEBUG to see the detailed cause:

export HADOOP_ROOT_LOGGER=DEBUG,console
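After start-dfs.sh and start-yarn.sh finish, jps (shipped with the JDK) lists the running Java daemons. With the configuration above, you should see:

- NameNode and ResourceManager on the NameNode.
- SecondaryNameNode on the SecondaryNameNode.
- DataNode and NodeManager on each DataNode.

The hypothetical helper below reports which expected daemons are missing from the jps output.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop or the JDK).
# missing_daemons NAME...: read `jps` output (lines of "PID ClassName") on
# stdin and print each expected daemon NAME that is not running.
missing_daemons() {
  local running name
  running=$(awk '{ print $2 }')
  for name in "$@"; do
    # -x: whole-line match, so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
      echo "$name"
    fi
  done
}
```

Usage on the NameNode: `jps | missing_daemons NameNode ResourceManager`. Empty output means both daemons are up.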

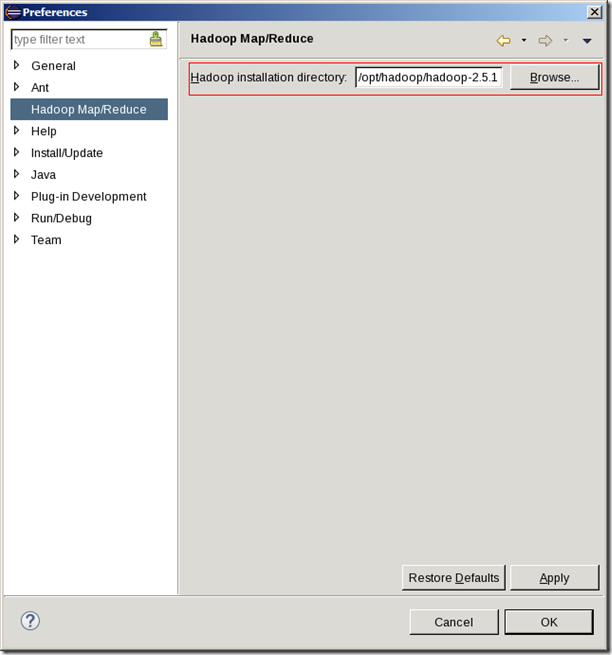

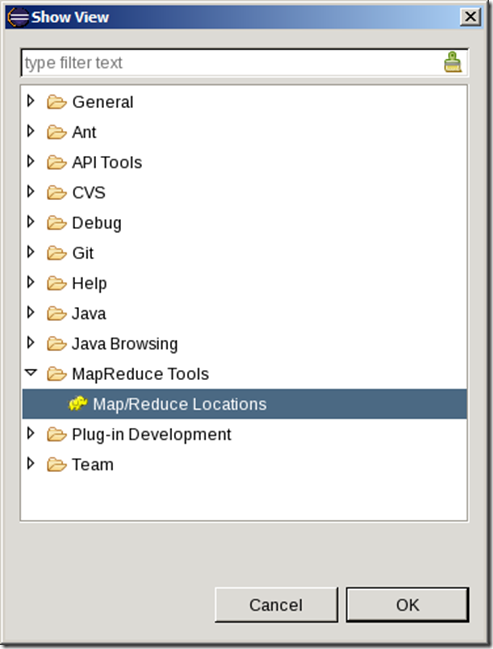

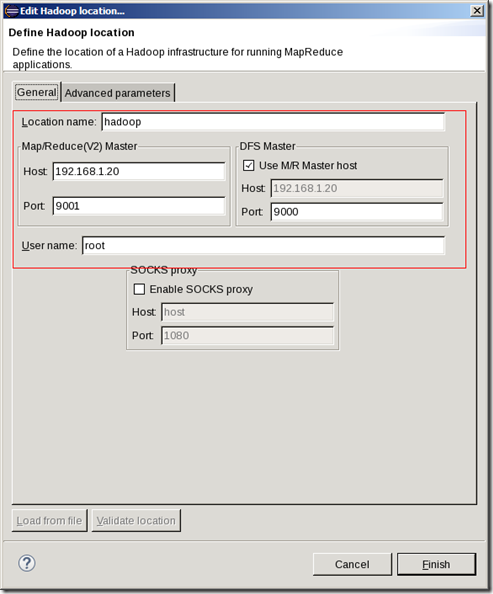

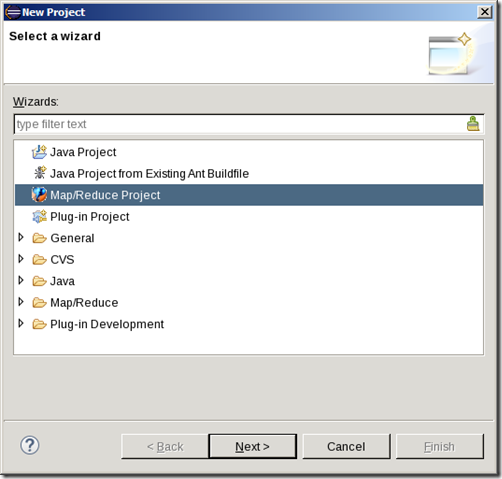

In Eclipse with the Hadoop plugin installed, open Window -> Show View -> Other... -> MapReduce Tools -> Map/Reduce Locations.

The MapReduce example sources are in hadoop-2.5.1-src.tar.gz, under hadoop-2.5.1-src/hadoop-mapreduce-project/hadoop-mapreduce-examples/src/main/java/org/apache/hadoop/examples.