| | Master node |
|---|---|
| Hostname | ubuntuhadoop.smartmap.com |
| IP | 192.168.1.60 |
| Subnet mask | 255.255.255.0 |
| Gateway | 192.168.1.1 |
| DNS | 218.30.19.50, 61.134.1.5 |
| Search domains | smartmap.com |

1. Set the network IP address

sudo nmtui

sudo /etc/init.d/networking restart

2. Set the hostname

sudo hostnamectl set-hostname ubuntuhadoop.smartmap.com

sudo gedit /etc/hosts

127.0.0.1 localhost

# 127.0.0.1 ubuntuhadoop.smartmap.com

# 127.0.0.1 ubuntuhadoop

192.168.1.60 ubuntuhadoop

192.168.1.60 ubuntuhadoop.smartmap.com

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters
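The 127.0.0.1 entries for the hostname are commented out because, if the hostname resolved to the loopback address first, the Hadoop daemons would likely bind to 127.0.0.1 and be unreachable from other machines. On the real host, `getent hosts ubuntuhadoop.smartmap.com` shows what the resolver actually returns. The helper below is a hypothetical sketch (the name `hosts_lookup` is illustrative) that mimics a first-match lookup over a hosts-format file, so the effect of line order can be checked offline:

```shell
# hosts_lookup NAME [FILE]: hypothetical helper that prints the address of the
# first non-comment line in a hosts-format FILE (default /etc/hosts) that lists NAME.
# It only approximates the system resolver; it is not a replacement for getent.
hosts_lookup() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v n="$name" '
    $1 !~ /^#/ {
      # Scan the hostnames on this line, stopping at an inline comment.
      for (i = 2; i <= NF && $i !~ /^#/; i++)
        if ($i == n) { print $1; exit }
    }' "$file"
}
```

With the file above, `hosts_lookup ubuntuhadoop` should print 192.168.1.60; uncommenting `127.0.0.1 ubuntuhadoop` would make it print 127.0.0.1 instead.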

3. Disable the firewall

sudo ufw disable

4. Install VMware Tools

https://www.linuxidc.com/Linux/2016-04/130807.htm

5. Install SSH

5.1. Install the SSH server

sudo apt-get install -y openssh-server

5.2. Configure SSH

sudo vi /etc/ssh/sshd_config

PermitRootLogin yes

5.3. Start the SSH service

sudo service ssh start

sudo service ssh restart

5.4. Passwordless SSH between nodes

5.4.1. User zyx

cd ~

ssh-keygen -t rsa

cp .ssh/id_rsa.pub .ssh/authorized_keys

5.4.2. User root

sudo su - root

cd ~

ssh-keygen -t rsa

cp .ssh/id_rsa.pub .ssh/authorized_keys

exit
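The key setup above can also be run non-interactively. A minimal sketch, assuming OpenSSH's `ssh-keygen` is installed (it ships with the SSH client): `-N ""` sets an empty passphrase and `-f` sets the key path, so no prompts appear. Unlike `cp`, it appends the public key to `authorized_keys` instead of overwriting it, and it applies 700/600 permissions, which satisfy sshd's default `StrictModes` check. The function name `setup_passwordless_ssh` is hypothetical:

```shell
# setup_passwordless_ssh [SSH_DIR]: hypothetical helper; SSH_DIR defaults to ~/.ssh.
setup_passwordless_ssh() {
  local dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir" && chmod 700 "$dir" || return 1
  # Generate an RSA key pair with no passphrase, only if none exists yet.
  if [ ! -f "$dir/id_rsa" ]; then
    ssh-keygen -t rsa -N "" -f "$dir/id_rsa" -q || return 1
  fi
  # Append the public key once instead of overwriting authorized_keys.
  touch "$dir/authorized_keys"
  grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys" ||
    cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}
```

Run it once as zyx and once as root; afterwards `ssh localhost true` should no longer ask for a password (the first connection may still ask to confirm the host key).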

6. Configure vim (Ubuntu's default vim is awkward to use)

sudo gedit /etc/vim/vimrc.tiny

" set compatible

set nocompatible

set backspace=2

" vim: set ft=vim:

7. Install the JDK

7.1. Add the Oracle JDK repository

sudo add-apt-repository ppa:webupd8team/java

7.2. Update the package index

sudo apt-get update

7.3. Install

sudo apt-get install oracle-java8-installer

Note: Java is installed under /usr/lib/jvm by default.

7.4. Configure environment variables

sudo gedit /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-8-oracle

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export HADOOP_HOME=/usr/local/hadoop/hadoop-2.7.5

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$HADOOP_HOME/sbin

export PATH=$PATH:$HADOOP_HOME/bin

export LD_LIBRARY_PATH=$JAVA_HOME/jre/lib/amd64/server:/usr/local/lib:$HADOOP_HOME/lib/native

export JAVA_LIBRARY_PATH=$LD_LIBRARY_PATH:$JAVA_LIBRARY_PATH

source /etc/profile
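Sourcing /etc/profile only affects the current shell; other terminals pick up the variables at the next login. A quick way to confirm that the key variables point at real directories is sketched below (the function name `check_hadoop_env` is hypothetical); `java -version` and `hadoop version` are further sanity checks once `PATH` is set.

```shell
# check_hadoop_env: hypothetical helper; returns non-zero if any variable
# is unset or does not name an existing directory.
check_hadoop_env() {
  local ok=0 var
  for var in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var.
    if [ -z "${!var}" ] || [ ! -d "${!var}" ]; then
      echo "missing or not a directory: $var" >&2
      ok=1
    fi
  done
  return "$ok"
}
```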

8. Install Hadoop

8.1. Extract Hadoop

sudo mkdir /usr/local/hadoop

sudo tar zxvf hadoop-2.7.5.tar.gz -C /usr/local/hadoop

8.2. Create directories

mkdir /home/zyx/tmp

mkdir /home/zyx/dfs

mkdir /home/zyx/dfs/name

mkdir /home/zyx/dfs/data

mkdir /home/zyx/dfs/checkpoint

mkdir /home/zyx/yarn

mkdir /home/zyx/yarn/local
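The same directories can be created in one command with `mkdir -p`, which also creates missing parents and does not fail if a directory already exists. A minimal sketch, assuming the /home/zyx base used throughout this guide; the function name and its optional argument are hypothetical:

```shell
# make_hadoop_dirs [BASE]: hypothetical helper; BASE defaults to /home/zyx.
make_hadoop_dirs() {
  local base="${1:-/home/zyx}"
  # tmp        -> hadoop.tmp.dir (core-site.xml)
  # dfs/*      -> NameNode, DataNode and checkpoint dirs (hdfs-site.xml)
  # yarn/local -> yarn.nodemanager.local-dirs (yarn-site.xml)
  mkdir -p "$base/tmp" \
           "$base/dfs/name" "$base/dfs/data" "$base/dfs/checkpoint" \
           "$base/yarn/local"
}
```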

8.3. Edit the Hadoop configuration files

The configuration files are located in /usr/local/hadoop/hadoop-2.7.5/etc/hadoop/.

8.3.1. hadoop-env.sh

# The java implementation to use.

# export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/usr/lib/jvm/java-8-oracle

export HADOOP_HOME=/usr/local/hadoop/hadoop-2.7.5

export PATH=$PATH:/usr/local/hadoop/hadoop-2.7.5/bin

8.3.2. yarn-env.sh

# some Java parameters

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

export JAVA_HOME=/usr/lib/jvm/java-8-oracle

8.3.3. core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.60:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/zyx/tmp</value>

</property>

</configuration>
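Each `<property>` maps a `<name>` to a `<value>`. On a working installation, `hdfs getconf -confKey hadoop.tmp.dir` prints the value Hadoop actually resolves. For checking a file before Hadoop runs, the hypothetical helper below pulls one value out of a `*-site.xml` file; it is a rough text-based sketch that assumes the one-tag-per-line layout used in this guide, not a real XML parser and not Hadoop's `Configuration` class:

```shell
# get_conf KEY FILE: hypothetical helper; prints the <value> that follows
# <name>KEY</name> in FILE (assumes simple, one-tag-per-line XML).
get_conf() {
  local key="$1" file="$2"
  awk -v k="$key" '
    index($0, "<name>" k "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-char "<value>" and 8-char "</value>" tags.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }' "$file"
}
```

For example, `get_conf hadoop.tmp.dir /usr/local/hadoop/hadoop-2.7.5/etc/hadoop/core-site.xml` should print /home/zyx/tmp.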

8.3.4. hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.1.60:50070</value>

</property>

<property>

<name>dfs.namenode.http-bind-host</name>

<value>192.168.1.60</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.1.60:50090</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/zyx/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/zyx/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/home/zyx/dfs/checkpoint</value>

</property>

</configuration>

8.3.5. mapred-site.xml

sudo cp /usr/local/hadoop/hadoop-2.7.5/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/hadoop-2.7.5/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

8.3.6. yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.60</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/zyx/yarn/local</value>

</property>

</configuration>

8.3.7. slaves

sudo gedit /usr/local/hadoop/hadoop-2.7.5/etc/hadoop/slaves

192.168.1.60

9. Start Hadoop

9.1. Format HDFS

Note: run this as user zyx.

hadoop namenode -format

9.2. Start and stop the DFS services

Note: run these as root.

cd /usr/local/hadoop/hadoop-2.7.5/sbin/

sudo ./start-dfs.sh

sudo ./stop-dfs.sh

9.3. View DataNode information

hadoop dfsadmin -report

9.4. Start and stop the YARN services

Note: run these as root.

cd /usr/local/hadoop/hadoop-2.7.5/sbin/

sudo ./start-yarn.sh

sudo ./stop-yarn.sh

9.5. List the services running on this machine

sudo jps
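On this single-node setup, after `start-dfs.sh` and `start-yarn.sh` the `jps` listing should include NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The hypothetical helper below reads `jps` output on standard input and reports any of these that are missing; it matches the class-name column exactly, so SecondaryNameNode is not mistaken for NameNode:

```shell
# check_daemons: hypothetical helper; usage: sudo jps | check_daemons
check_daemons() {
  local jps_out missing=0 d
  jps_out=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>"; compare the second column exactly.
    if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      echo "not running: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```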

9.6. HDFS web UI

http://192.168.1.60:50070/dfshealth.html#tab-overview

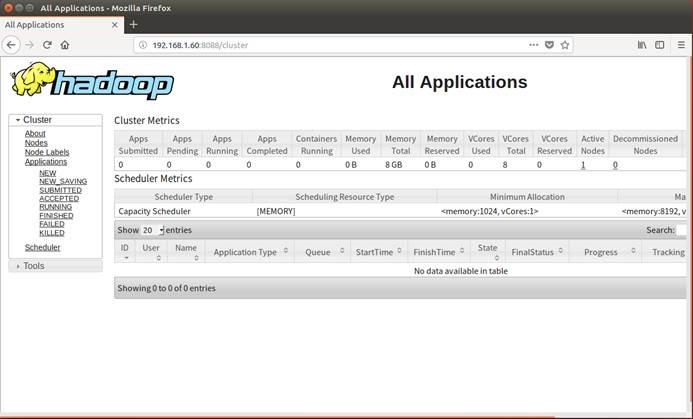

9.7. Web UI for MapReduce applications

http://192.168.1.60:8088/cluster

10. Install Eclipse

10.1. Extract Eclipse

sudo mv /home/zyx/software/eclipse-java-neon-3-linux-gtk-x86_64.tar.gz /opt/

sudo tar zxvf /opt/eclipse-java-neon-3-linux-gtk-x86_64.tar.gz -C /opt

sudo mkdir /opt/eclipse/workspace

10.2. Install the plugin

sudo mv /home/zyx/software/hadoop-eclipse-plugin-2.7.3.jar /opt/

sudo cp /opt/hadoop-eclipse-plugin-2.7.3.jar /opt/eclipse/dropins/

ls -la /opt/eclipse/dropins/

10.3. Start Eclipse

cd /opt/eclipse/

sudo ./eclipse

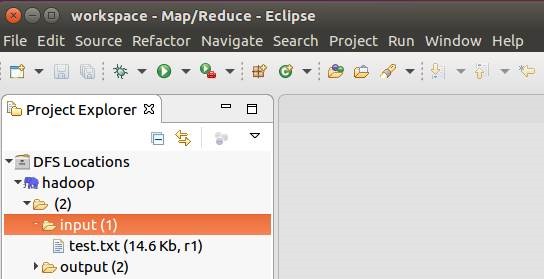

11. Prepare the data

11.1. Pick sample input data

sudo cp /usr/local/hadoop/hadoop-2.7.5/NOTICE.txt /home/zyx/test.txt

sudo chown zyx:zyx /home/zyx/test.txt

11.2. Put the data into HDFS

hadoop fs -mkdir /input

hadoop fs -put /home/zyx/test.txt /input

hadoop fs -chmod -R 777 /input/test.txt

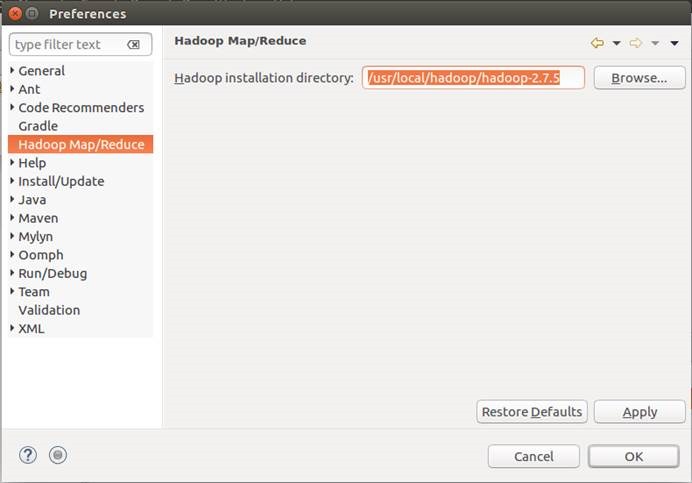

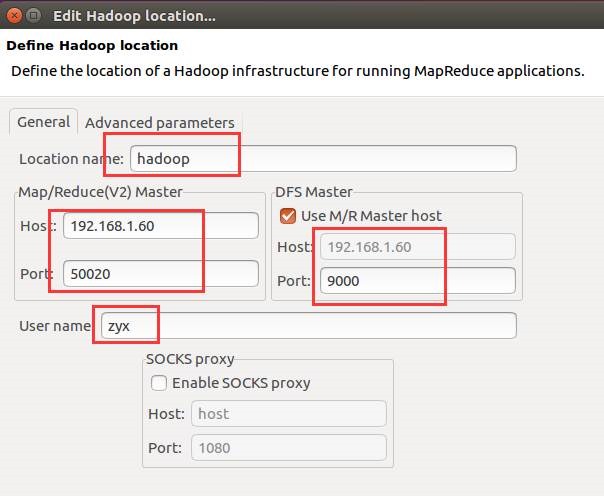

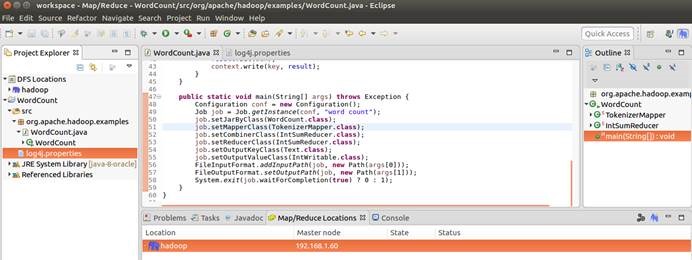

12. Configure Hadoop in Eclipse

12.1. Set the Hadoop installation path

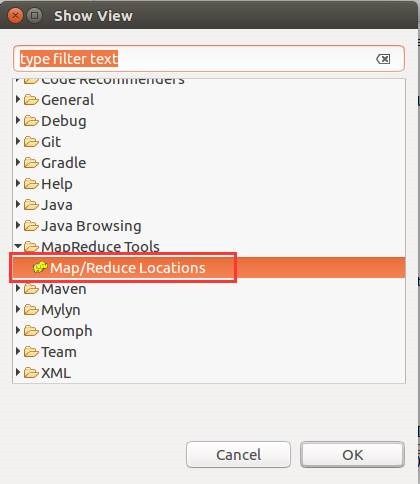

12.2. Set up the Hadoop view in Eclipse

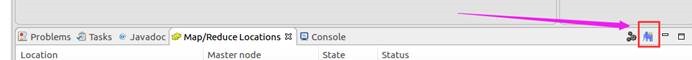

12.3. Connect to HDFS

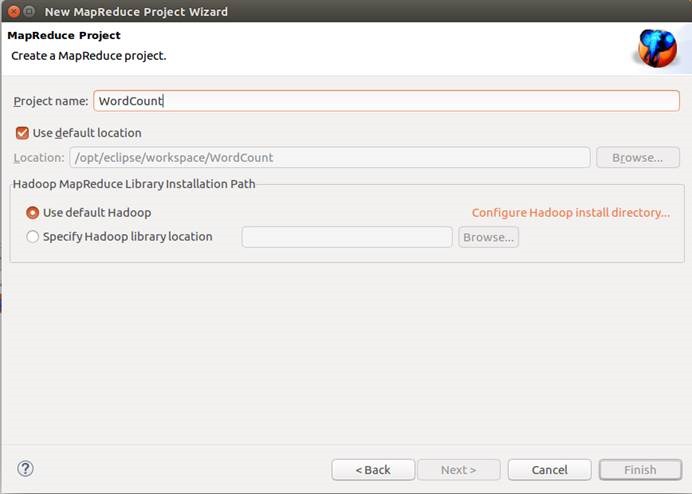

12.4. Create a MapReduce project

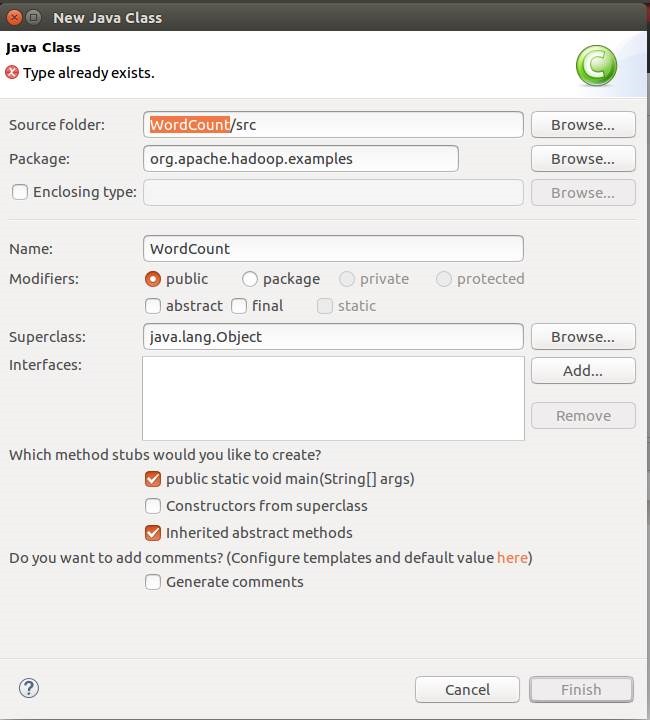

12.5. Create the class to run

Contents of the WordCount class:

package org.apache.hadoop.examples;

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key, Text value, Context context) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values, Context context)

throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

12.6. Create the logging configuration file log4j.properties

Create log4j.properties in the src/ directory with the following content:

log4j.rootLogger=debug, stdout, R

#log4j.rootLogger=stdout, R

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

#log4j.appender.stdout.layout.ConversionPattern=%5p - %m%n

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.R=org.apache.log4j.RollingFileAppender

log4j.appender.R.File=log4j.log

log4j.appender.R.MaxFileSize=100KB

log4j.appender.R.MaxBackupIndex=1

log4j.appender.R.layout=org.apache.log4j.PatternLayout

#log4j.appender.R.layout.ConversionPattern=%p %t %c - %m%n

log4j.appender.R.layout.ConversionPattern=%d %p [%c] - %m%n

#log4j.logger.com.codefutures=DEBUG

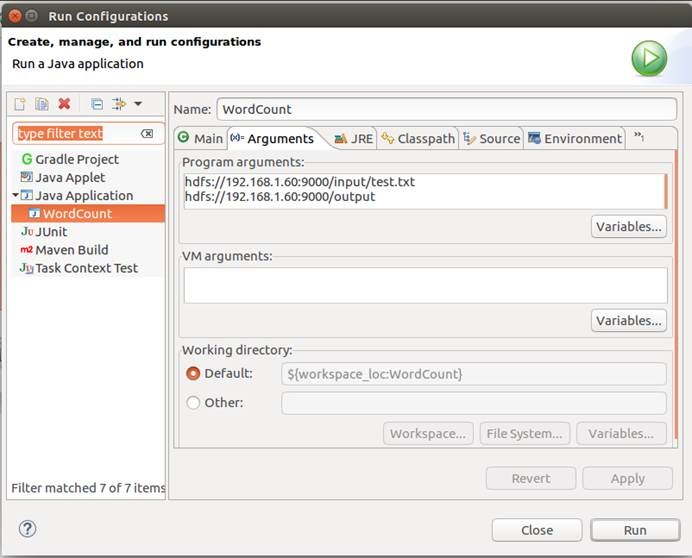

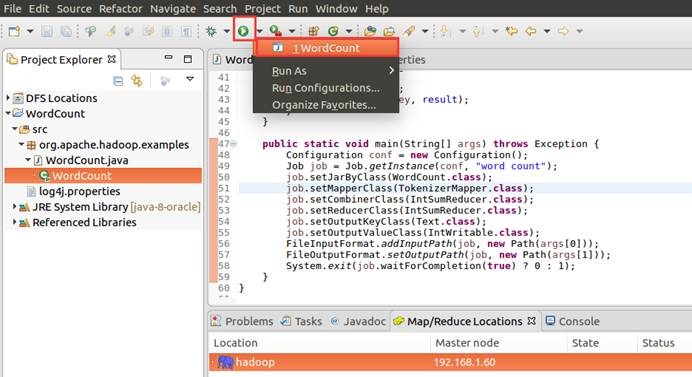

12.7. Run

12.7.1. Configure the run environment

12.7.1.1. Set the input and output paths (program arguments: input first, output second)

hdfs://192.168.1.60:9000/input/test.txt

hdfs://192.168.1.60:9000/output

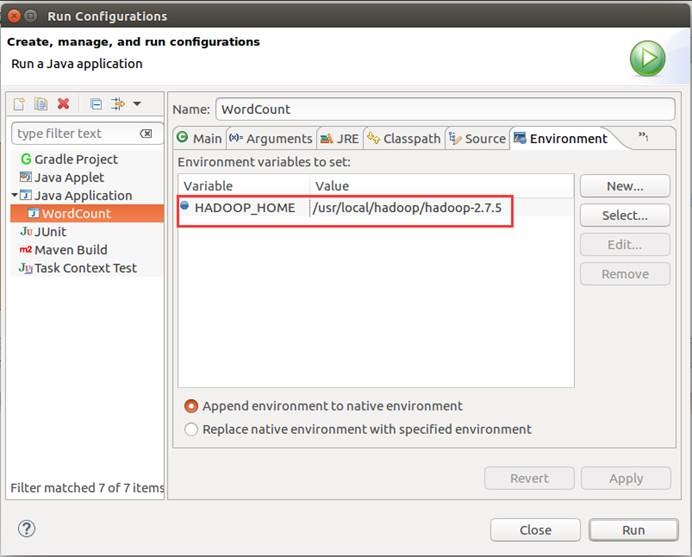
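`FileOutputFormat` refuses an output directory that already exists (job submission fails with `FileAlreadyExistsException`), so running WordCount a second time against /output fails until that directory is removed. A minimal sketch; `HADOOP_CMD` is a hypothetical override (default `hadoop`) that only exists so the function can be tried without a cluster:

```shell
# reset_output [DIR]: hypothetical helper; DIR defaults to /output on the
# default file system configured in core-site.xml.
reset_output() {
  local out="${1:-/output}"
  # -r removes recursively; -f suppresses the error if DIR does not exist.
  "${HADOOP_CMD:-hadoop}" fs -rm -r -f "$out"
}
```

Calling `reset_output` before each run (or `reset_output hdfs://192.168.1.60:9000/output`) should let the job write a fresh result.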

12.7.1.2. Set the environment variable

HADOOP_HOME=/usr/local/hadoop/hadoop-2.7.5

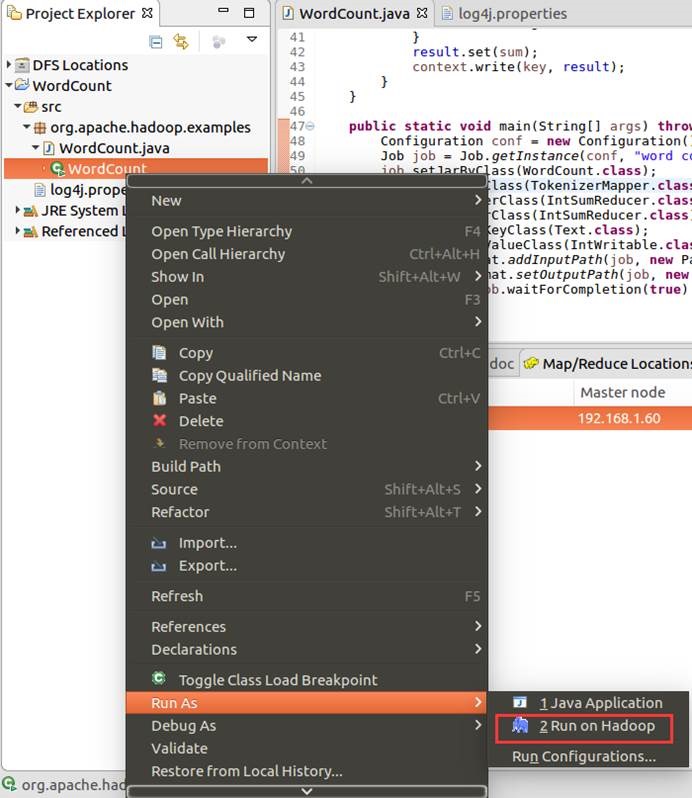

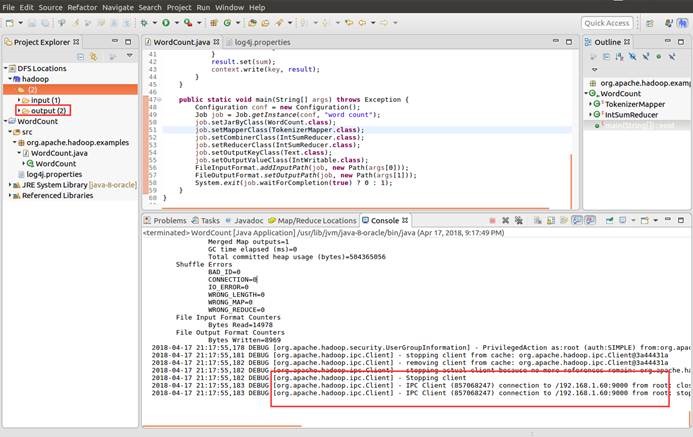

12.8. Run the job

12.9. View the results
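The job's output can be printed with `hadoop fs -cat /output/part-r-00000` (a single part file, assuming the default of one reducer). Each line is a word, a tab, and its count, sorted by word. The pipeline below is a rough local equivalent of WordCount for cross-checking small inputs: `tr` splits on the same whitespace characters `StringTokenizer` uses by default, and `LC_ALL=C sort` orders words byte by byte, like Hadoop's `Text` keys. The function name is hypothetical:

```shell
# local_wordcount [FILE]: hypothetical helper; reads FILE or standard input.
local_wordcount() {
  cat "${1:--}" |
    tr -s ' \t\r\f' '\n' |   # "map": one token per line
    grep -v '^$' |           # drop empty lines left by leading whitespace
    LC_ALL=C sort |          # group identical words (byte order)
    uniq -c |                # "reduce": count each group
    awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

For example, `diff <(hadoop fs -cat /output/part-r-00000) <(local_wordcount /home/zyx/test.txt)` should print nothing when the two agree.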