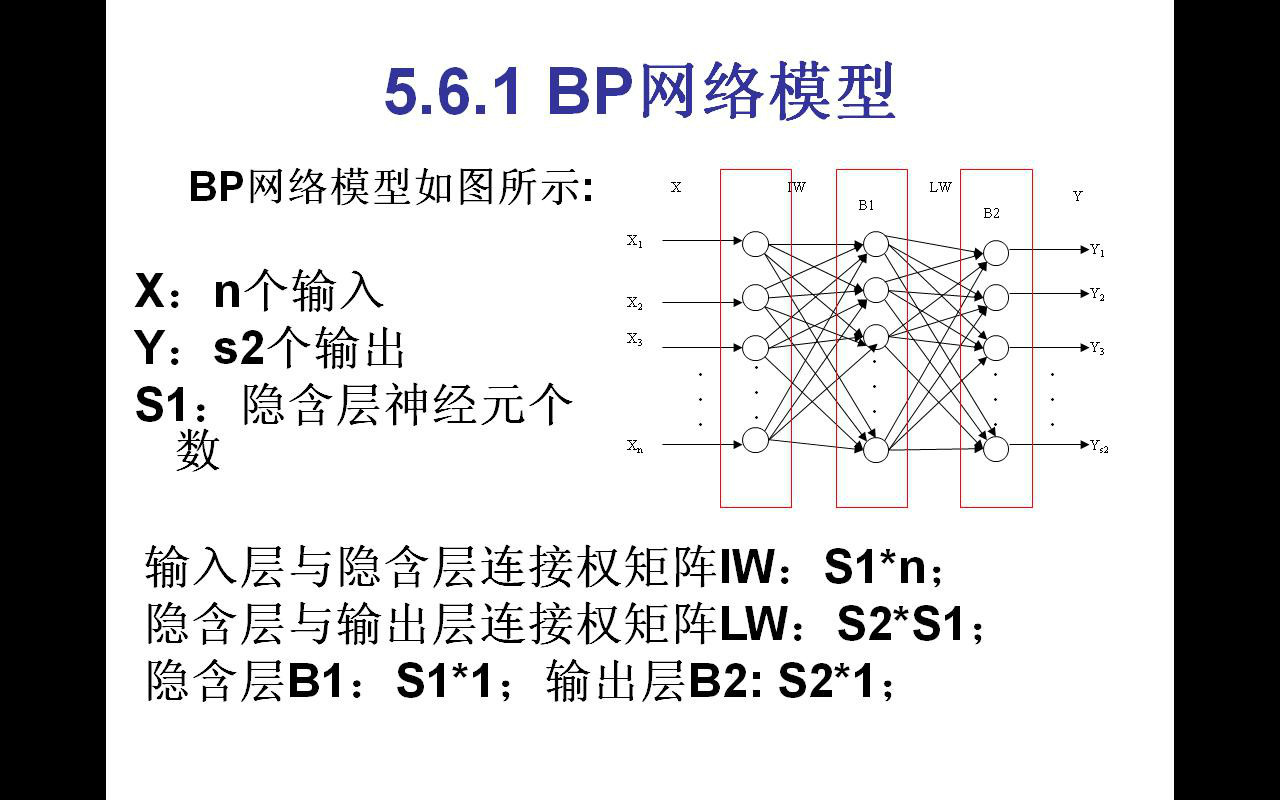

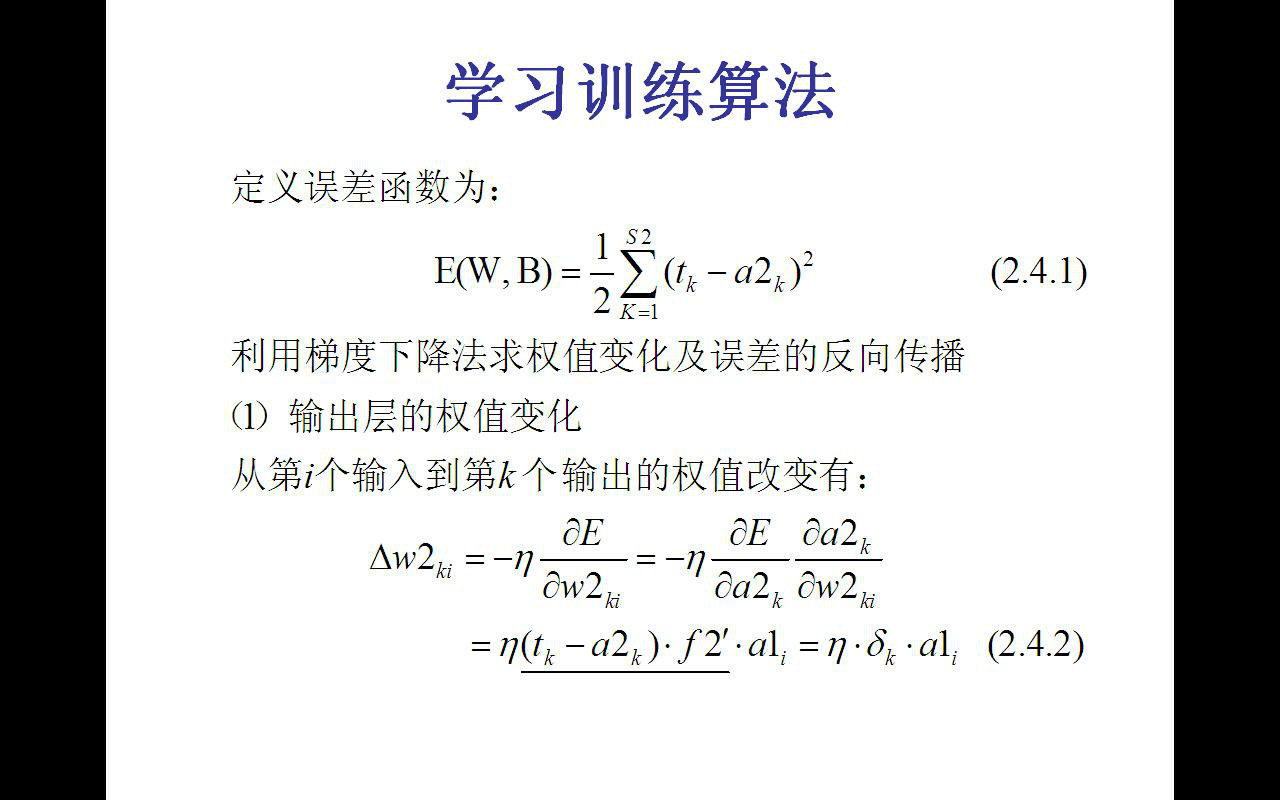

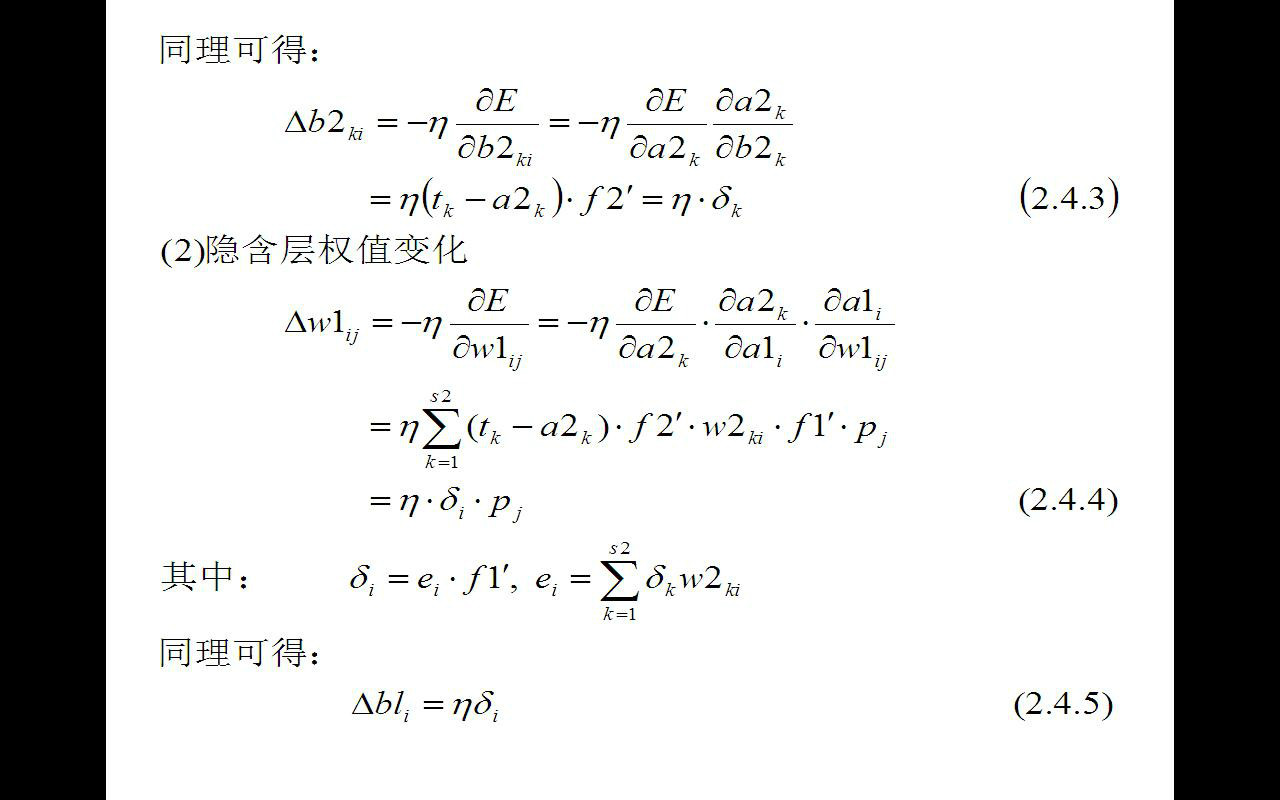


The BP neural network

Abstract: Neural networks are structured to imitate the organization of the human brain, in an attempt to use ideas from bionics to solve problems. Artificial intelligence and virtual reality are likely to be among the hottest technologies of the coming years. Virtual reality has a low barrier to entry, whereas artificial intelligence research requires a certain level of background knowledge. Even so, each new breakthrough in artificial intelligence is likely to have a deep influence on the future development of science and technology.

The BP (Back Propagation) neural network was proposed in 1986 by a group of scientists led by Rumelhart and McClelland. It is a multilayer feedforward network trained with the error back-propagation algorithm, and it is currently one of the most widely used neural network models. A BP network can learn and store a large number of input-output mappings without the mathematical equations that describe those mappings being known in advance. Its learning rule is gradient descent: the output error is propagated backwards through the network to repeatedly adjust the weights and thresholds (biases), so that the network's sum of squared errors is minimized. The topology of a BP network consists of an input layer, a hidden layer and an output layer.

Keywords: artificial intelligence, neural network, BP

1. Research background

"How does the human brain work?"

"Can humans build artificial neurons that imitate the human brain?"

2. Research direction

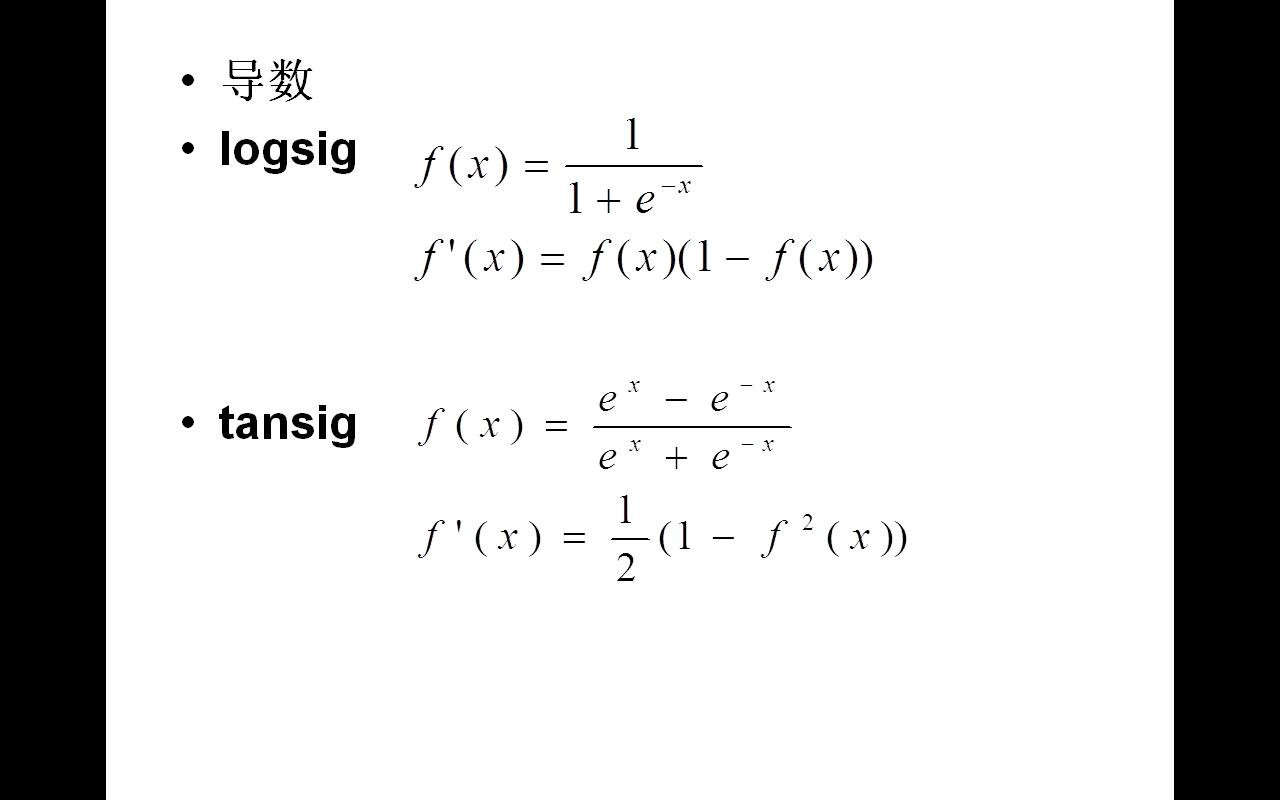

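$$a^1 = \operatorname{tansig}\left(IW^{1,1} p^1 + b^1\right)$$

$$\operatorname{tansig}(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

$$a^2 = \operatorname{purelin}\left(LW^{2,1} a^1 + b^2\right), \qquad \operatorname{purelin}(x) = x$$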

3. Program design

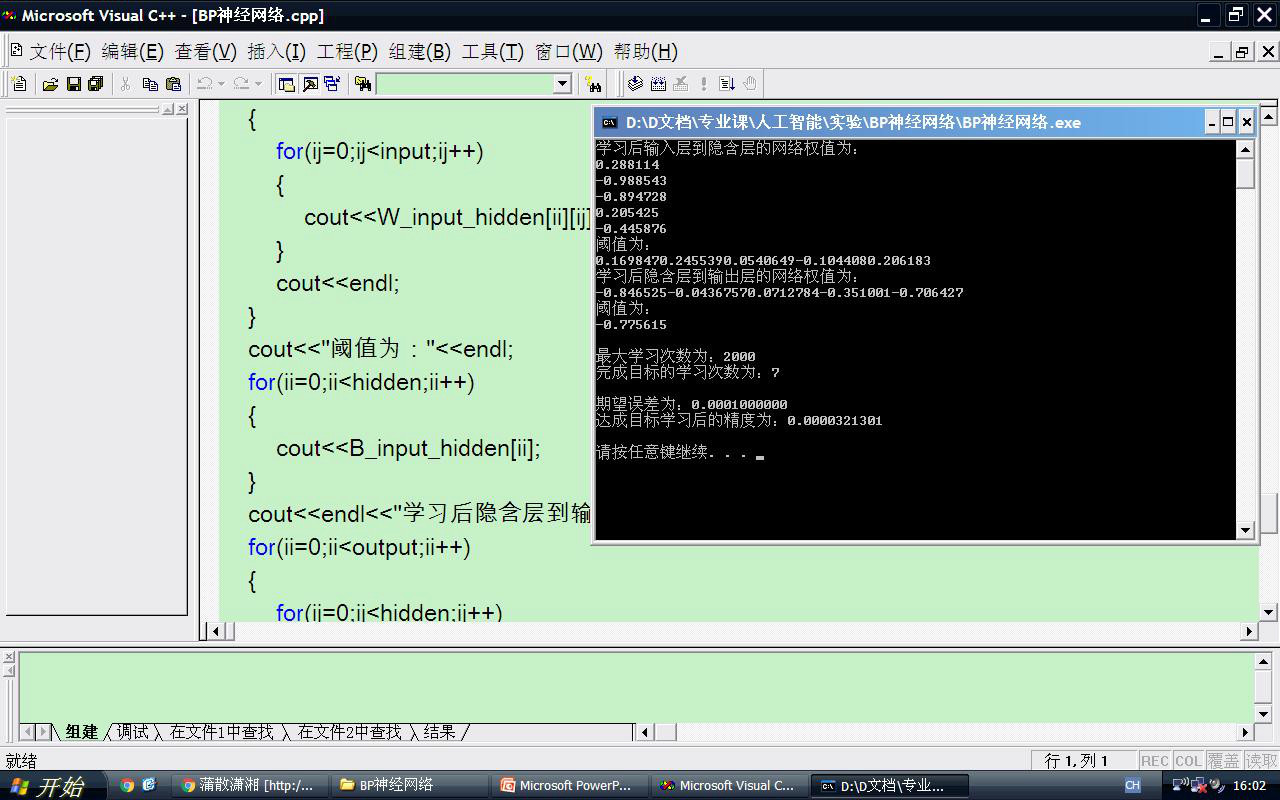

```cpp
#include <iostream>
#include <cstdlib>
#include <fstream>
#include <ctime>
#include <cmath>
#include <climits>
#include <iomanip>
using namespace std;

const int max_learn_length = 2000;         // maximum number of training epochs
const double study_rate = 0.01;            // learning rate (weight-adjustment step size)
const double anticipation_error = 0.0001;  // target (expected) error
const int input = 1;                       // number of input-layer values per sample
const int hidden = 5;                      // number of hidden-layer neurons
const int output = 1;                      // number of output-layer values per sample

int sample;   // number of samples
double *P;    // input vectors (sample * input values)
double *T;    // target vectors (sample * output values)

// Each file starts with the sample count, followed by the sample values.
void readfile()
{
    fstream p, t;
    p.open("p.txt", ios::in);
    t.open("t.txt", ios::in);
    if (!p || !t)
    {
        cout << "file load failed!" << endl;
        exit(1);
    }
    p >> sample;
    t >> sample;
    P = new double[sample * input];
    T = new double[sample * output];
    // Read exactly as many values as the arrays hold
    for (int ii = 0; ii < sample * input; ii++)
        p >> P[ii];
    for (int ii = 0; ii < sample * output; ii++)
        t >> T[ii];
    p.close();
    t.close();
}

void displaydata()
{
    cout << "sample=" << sample << endl;
    cout << setiosflags(ios::fixed) << setprecision(6);
    for (int ii = 0; ii < sample; ii++)
        cout << "P[" << ii << "]=" << P[ii] << " T[" << ii << "]=" << T[ii] << endl;
}

int main(int argc, char **argv)
{
    double precision;                         // accumulated squared error of one epoch
    double W_input_hidden[hidden][input];     // input -> hidden weights
    double W_hidden_output[output][hidden];   // hidden -> output weights
    double B_input_hidden[hidden];            // hidden-layer thresholds (biases)
    double B_hidden_output[output];           // output-layer thresholds (biases)
    double E_input_hidden[hidden];            // hidden-layer error terms
    double E_hidden_output[output];           // output-layer error terms
    double A_input_hidden[hidden];            // actual hidden-layer outputs
    double A_hidden_output[output];           // actual output-layer outputs
    int ii, ij, ik, ic;

    readfile();
    displaydata();

    srand((unsigned)time(0));   // seed the random number generator

    // Initialise weights and thresholds with random values in [-1, 1]
    for (ii = 0; ii < hidden; ii++)
    {
        B_input_hidden[ii] = 2 * (double)rand() / RAND_MAX - 1;
        for (ij = 0; ij < input; ij++)
            W_input_hidden[ii][ij] = 2 * (double)rand() / RAND_MAX - 1;
    }
    for (ii = 0; ii < output; ii++)
    {
        B_hidden_output[ii] = 2 * (double)rand() / RAND_MAX - 1;
        for (ij = 0; ij < hidden; ij++)
            W_hidden_output[ii][ij] = 2 * (double)rand() / RAND_MAX - 1;
    }

    precision = INT_MAX;   // initial error, larger than any target
    for (ic = 0; ic < max_learn_length; ic++)   // at most max_learn_length epochs
    {
        if (precision < anticipation_error)     // stop early once the target error is reached
            break;
        precision = 0;
        for (ii = 0; ii < sample; ii++)          // accumulate the squared error over all samples
        {
            const double *p_in = &P[ii * input];     // input vector of sample ii
            const double *t_out = &T[ii * output];   // target vector of sample ii

            // Forward pass, hidden layer: a1 = tansig(IW * p + b1)
            for (ij = 0; ij < hidden; ij++)
            {
                A_input_hidden[ij] = 0.0;
                for (ik = 0; ik < input; ik++)
                    A_input_hidden[ij] += p_in[ik] * W_input_hidden[ij][ik];
                A_input_hidden[ij] += B_input_hidden[ij];
                // tansig(x) = 2 / (1 + exp(-2x)) - 1, which equals tanh(x)
                A_input_hidden[ij] = 2.0 / (1 + exp(-2 * A_input_hidden[ij])) - 1;
            }
            // Forward pass, output layer: a2 = purelin(LW * a1 + b2)
            for (ij = 0; ij < output; ij++)
            {
                A_hidden_output[ij] = 0.0;
                for (ik = 0; ik < hidden; ik++)
                    A_hidden_output[ij] += A_input_hidden[ik] * W_hidden_output[ij][ik];
                A_hidden_output[ij] += B_hidden_output[ij];
            }

            // Output-layer error term: e = t - a2 (purelin has derivative 1)
            for (ij = 0; ij < output; ij++)
                E_hidden_output[ij] = t_out[ij] - A_hidden_output[ij];
            // Hidden-layer error term: back-propagate e through LW and
            // multiply by the tansig derivative 1 - a1^2
            for (ij = 0; ij < hidden; ij++)
            {
                E_input_hidden[ij] = 0.0;
                for (ik = 0; ik < output; ik++)
                    E_input_hidden[ij] += E_hidden_output[ik] * W_hidden_output[ik][ij];
                E_input_hidden[ij] *= (1 - A_input_hidden[ij] * A_input_hidden[ij]);
            }

            // Adjust hidden -> output weights and thresholds using the learning rate
            for (ij = 0; ij < output; ij++)
            {
                for (ik = 0; ik < hidden; ik++)
                    W_hidden_output[ij][ik] += study_rate * E_hidden_output[ij] * A_input_hidden[ik];
                B_hidden_output[ij] += study_rate * E_hidden_output[ij];
            }
            // Adjust input -> hidden weights and thresholds using the learning rate
            for (ij = 0; ij < hidden; ij++)
            {
                for (ik = 0; ik < input; ik++)
                    W_input_hidden[ij][ik] += study_rate * E_input_hidden[ij] * p_in[ik];
                B_input_hidden[ij] += study_rate * E_input_hidden[ij];
            }

            // Add this sample's squared error to the epoch total
            for (ij = 0; ij < output; ij++)
                precision += pow(t_out[ij] - A_hidden_output[ij], 2);
        }
    }

    cout << "Input -> hidden weights after training:" << endl;
    for (ii = 0; ii < hidden; ii++)
    {
        for (ij = 0; ij < input; ij++)
            cout << W_input_hidden[ii][ij] << " ";
        cout << endl;
    }
    cout << "Thresholds:" << endl;
    for (ii = 0; ii < hidden; ii++)
        cout << B_input_hidden[ii] << " ";
    cout << endl << "Hidden -> output weights after training:" << endl;
    for (ii = 0; ii < output; ii++)
    {
        for (ij = 0; ij < hidden; ij++)
            cout << W_hidden_output[ii][ij] << " ";
        cout << endl;
    }
    cout << "Thresholds:" << endl;
    for (ii = 0; ii < output; ii++)
        cout << B_hidden_output[ii] << " ";
    cout << endl << endl;
    cout << "Maximum number of epochs: " << max_learn_length << endl;
    cout << "Epochs run: " << ic << endl;
    cout << endl << "Target error: " << setiosflags(ios::fixed)
         << setprecision(10) << anticipation_error << endl;
    cout << "Error after training: " << precision << endl << endl;

    delete[] P;
    delete[] T;
#ifdef _WIN32
    system("pause");   // keep the console window open on Windows
#endif
    return 0;
}
```
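For reference, the per-sample updates made by the training loop can be written out in the notation of the section 2 formulas. This is a worked sketch, not taken from the original article. It follows from gradient descent on E = ½eᵀe for the tansig/purelin network. Here η is `study_rate` and t is the target. δ² corresponds to `E_hidden_output` and δ¹ corresponds to `E_input_hidden`. The hidden-layer term uses the tansig derivative tansig′(n) = 1 − tanh²(n) = 1 − (a¹)².

$$
\begin{aligned}
n^1 &= IW^{1,1} p^1 + b^1, & a^1 &= \operatorname{tansig}(n^1),\\
n^2 &= LW^{2,1} a^1 + b^2, & a^2 &= \operatorname{purelin}(n^2) = n^2,\\
e &= t - a^2, & E &= \tfrac{1}{2}\, e^{\mathsf T} e,\\
\delta^2 &= e, & \delta^1 &= \left((LW^{2,1})^{\mathsf T} \delta^2\right) \odot \left(1 - a^1 \odot a^1\right),\\
LW^{2,1} &\leftarrow LW^{2,1} + \eta\, \delta^2 (a^1)^{\mathsf T}, & b^2 &\leftarrow b^2 + \eta\, \delta^2,\\
IW^{1,1} &\leftarrow IW^{1,1} + \eta\, \delta^1 (p^1)^{\mathsf T}, & b^1 &\leftarrow b^1 + \eta\, \delta^1.
\end{aligned}
$$

δ¹ must be computed with the weights LW²˒¹ from before they are updated. The δ terms are the negative gradients of E with respect to n² and n¹, so adding η·δ moves each parameter downhill.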

p.txt

```
21
-1
-0.9
-0.8
-0.7
-0.6
-0.5
-0.4
-0.3
-0.2
-0.1
0
0.1
0.2
0.3
0.4
0.5
0.6
0.7
0.8
0.9
1
```

t.txt

```
21
-0.9602
-0.5770
-0.0729
0.3771
0.6405
0.6600
0.4609
0.1336
-0.2013
-0.4344
-0.5000
-0.3930
-0.1647
0.0988
0.3072
0.3960
0.3449
0.1816
-0.0312
-0.2189
-0.3201
```