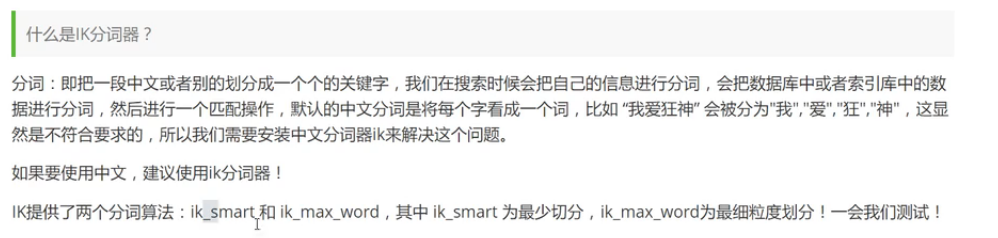

1. 什么是IK分词器

2. 下载IK分词器

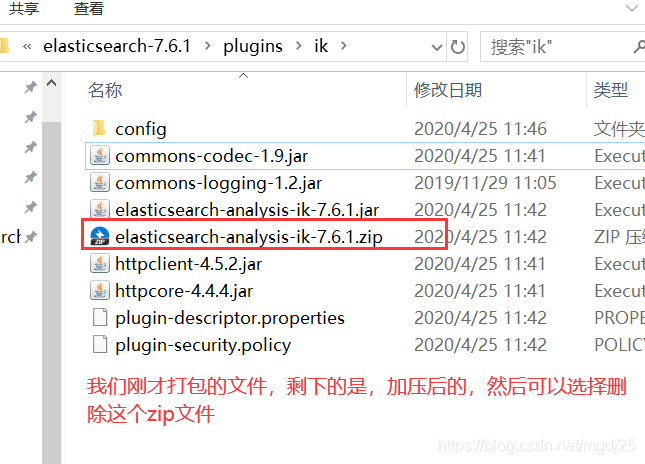

下载地址,版本要和ES的版本对应上

https://github.com/medcl/elasticsearch-analysis-ik/releases/tag/v7.3.1

注意下载zip版本

下载完毕后,放入到我们的elasticsearch/plus/ik插件目录里即可

-

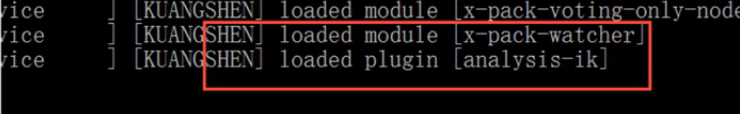

重启观察ES,可以看到IK分词器被加载了!

-

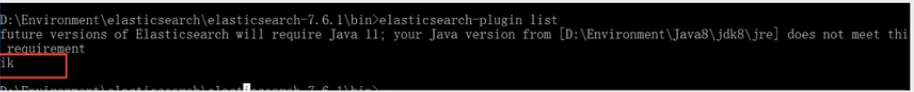

elasticsearch-plugin可以通过这个命令查看加载进来的插件

docker下载的安装的查看方法

root@haima-PC:/usr/local/docker/efk/docker_compose_efk# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c43439e99ff0 docker_compose_efk_fluentd "/bin/entrypoint.sh …" 29 seconds ago Up 27 seconds 5140/tcp, 0.0.0.0:24224->24224/tcp, 0.0.0.0:24224->24224/udp docker_compose_efk_fluentd_1

00eda4c1585d docker_compose_efk_kibana "/usr/local/bin/dumb…" 29 seconds ago Up 26 seconds 0.0.0.0:5601->5601/tcp docker_compose_efk_kibana_1

6e2e42f9e3ca docker.elastic.co/elasticsearch/elasticsearch:7.3.1 "/usr/local/bin/dock…" 31 seconds ago Up 29 seconds 0.0.0.0:9200->9200/tcp, 9300/tcp docker_compose_efk_elasticsearch_1

root@haima-PC:/usr/local/docker/efk/docker_compose_efk# docker exec -it docker_compose_efk_elasticsearch_1 bash ./bin/elasticsearch-plugin list

ik

详细查看我github里的readme.md

https://github.com/haimait/docker_compose_efk

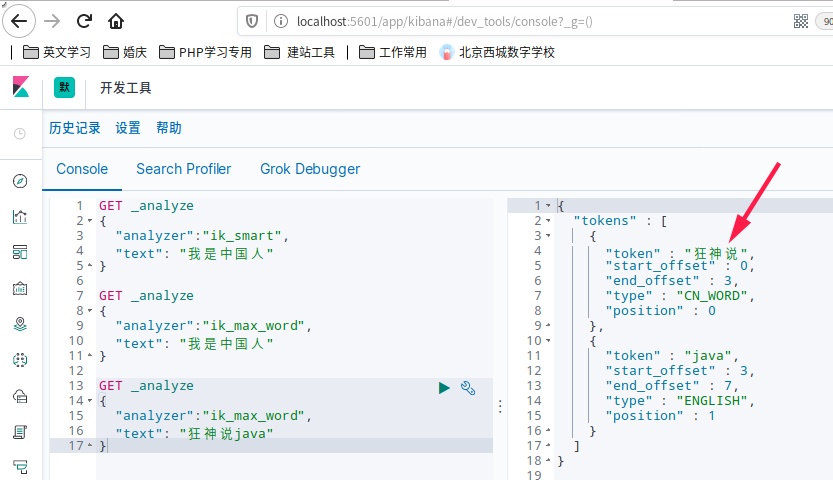

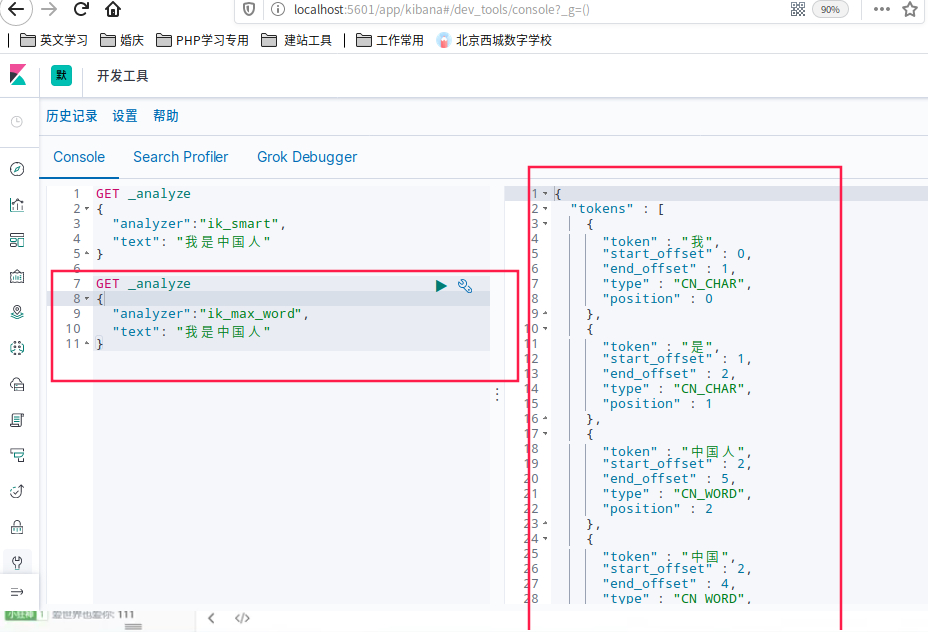

5. 使用kibana测试!

查看不同的分词效果

ik_smart和ik_max_word,其中ik_smart为最少切分

GET _analyze

{

"analyzer":"ik_smart",

"text": "我是中国人"

}

划出了3组

{

"tokens" : [

{

"token" : "我",

"start_offset" : 0,

"end_offset" : 1,

"type" : "CN_CHAR",

"position" : 0

},

{

"token" : "是",

"start_offset" : 1,

"end_offset" : 2,

"type" : "CN_CHAR",

"position" : 1

},

{

"token" : "中国人",

"start_offset" : 2,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 2

}

]

}

ik_max_word为最细粒度划分,穷尽词库的可能

GET _analyze

{

"analyzer":"ik_max_word",

"text": "我是中国人"

}

划出了5组

{

"tokens" : [

{

"token" : "我",

"start_offset" : 0,

"end_offset" : 1,

"type" : "CN_CHAR",

"position" : 0

},

{

"token" : "是",

"start_offset" : 1,

"end_offset" : 2,

"type" : "CN_CHAR",

"position" : 1

},

{

"token" : "中国人",

"start_offset" : 2,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "中国",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "国人",

"start_offset" : 3,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 4

}

]

}

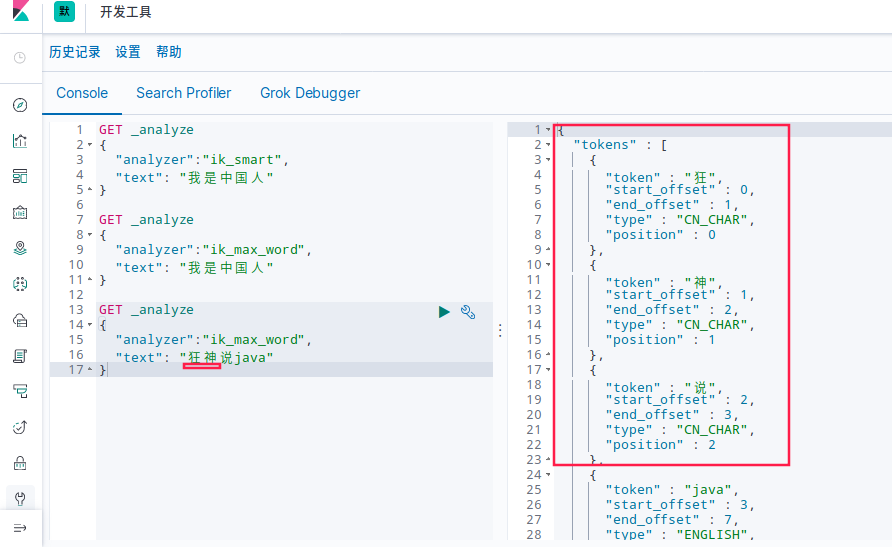

测试狂神说java

发现狂神说被分词器拆分开了,这里我们不想让它拆开,就需要在分词器中添加一个自己的词典,把自己的词放到自己的词典里

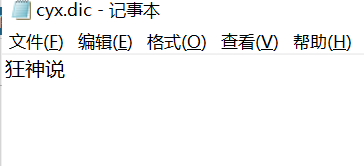

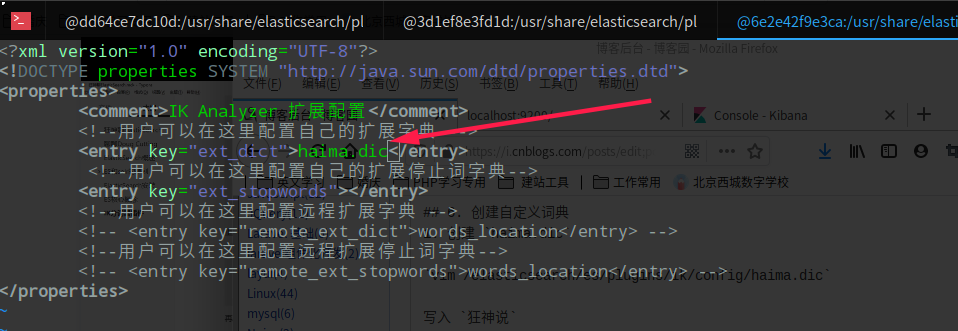

6. 创建自定义词典

- 创建

haima.dic

vim /elasticsearch/es/plugins/ik/config/haima.dic

写入 狂神说

- 把自定义的

haima.dic字典,载入到配置文件里

vim /elasticsearch/es/plugins/ik/config/IKAnalyzer.cfg.xml

- 重启es服务

root@haima-PC:/usr/local/docker/efk/docker_compose_efk/elasticsearch/es/plugins/ik/config# docker restart docker_compose_efk_elasticsearch_1

docker_compose_efk_elasticsearch_1

- 再看一下分词器,

狂神说已经不会被分开了