Hadoop: Set up a Maven project for MapReduce in 5 minutes

I am sure I am not the only one who has struggled to install the Hadoop Eclipse plugin. The plugin depends heavily on your environment (Eclipse, Ant, JDK) and on your Hadoop distribution and version. Moreover, it only supports the old MapReduce API.

Creating a Maven project for Hadoop is so simple that spending time building this plugin is pointless. This article describes how to set up a first Maven Hadoop project for Cloudera CDH4 in Eclipse.

Prerequisites

- Maven 3
- JDK 1.6
- Eclipse with the m2eclipse plugin installed

Add Cloudera repository

Cloudera jar files are not available from the default Maven Central repository. You need to add the Cloudera repository explicitly to your settings.xml (located at ${HOME}/.m2/settings.xml).

```xml
<?xml version="1.0" encoding="UTF-8"?>
<settings>
  <profiles>
    <profile>
      <id>standard-extra-repos</id>
      <activation>
        <activeByDefault>true</activeByDefault>
      </activation>
      <repositories>
        <repository>
          <!-- Central Repository -->
          <id>central</id>
          <url>https://repo1.maven.org/maven2/</url>
          <releases>
            <enabled>true</enabled>
          </releases>
          <snapshots>
            <enabled>true</enabled>
          </snapshots>
        </repository>
        <repository>
          <!-- Cloudera Repository -->
          <id>cloudera</id>
          <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
          <releases>
            <enabled>true</enabled>
          </releases>
          <snapshots>
            <enabled>true</enabled>
          </snapshots>
        </repository>
      </repositories>
    </profile>
  </profiles>
</settings>
```

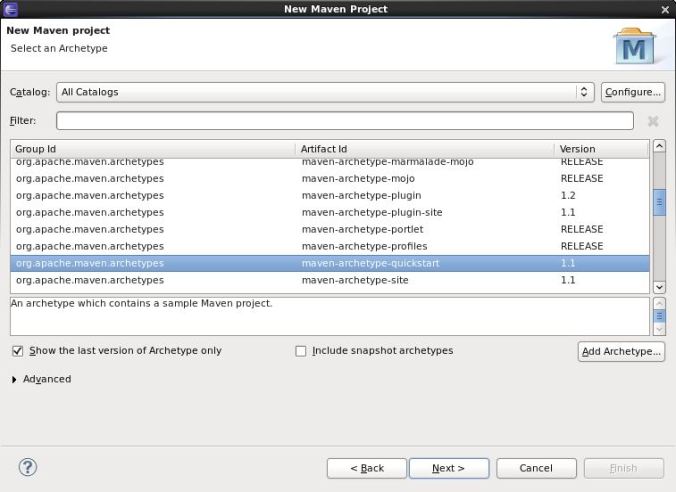

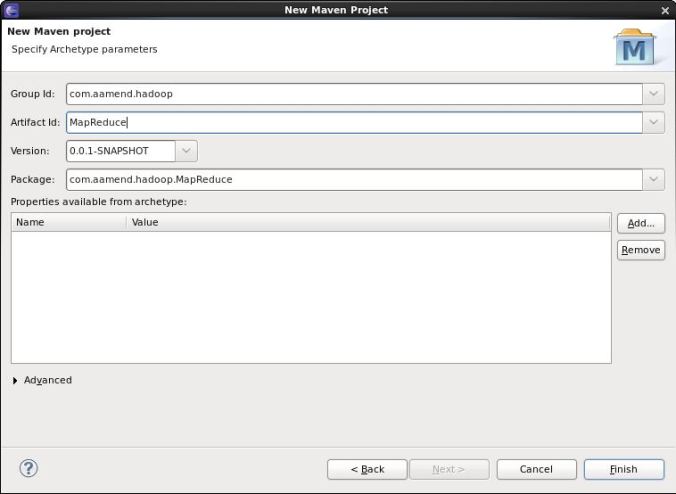

Create Maven project

In Eclipse, create a new Maven project (File > New > Other > Maven > Maven Project).

Add Hadoop Nature

For the Cloudera CDH4 distribution, open the pom.xml file and add the following dependencies:

```xml
<dependencyManagement>
  <dependencies>
    <dependency>
      <groupId>jdk.tools</groupId>
      <artifactId>jdk.tools</artifactId>
      <version>1.6</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>2.0.0-cdh4.0.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-auth</artifactId>
      <version>2.0.0-cdh4.0.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>2.0.0-cdh4.0.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-core</artifactId>
      <version>2.0.0-mr1-cdh4.0.1</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit-dep</artifactId>
      <version>4.8.2</version>
    </dependency>
  </dependencies>
</dependencyManagement>
<dependencies>
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
  </dependency>
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-auth</artifactId>
  </dependency>
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
  </dependency>
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-core</artifactId>
  </dependency>
  <dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>4.10</version>
    <scope>test</scope>
  </dependency>
</dependencies>
<build>
  <plugins>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-compiler-plugin</artifactId>
      <version>2.1</version>
      <configuration>
        <source>1.6</source>
        <target>1.6</target>
      </configuration>
    </plugin>
  </plugins>
</build>
```

Download dependencies

Now that you have added the Cloudera repository and created your project, download the dependencies. In Eclipse, this is easily done by right-clicking the project and selecting “update Maven dependencies”.

All of these dependencies should now be present in your local .m2 repository.

```
[developer@localhost ~]$ find .m2/repository/org/apache/hadoop -name "*.jar"
.m2/repository/org/apache/hadoop/hadoop-tools/1.0.4/hadoop-tools-1.0.4.jar
.m2/repository/org/apache/hadoop/hadoop-common/2.0.0-cdh4.0.0/hadoop-common-2.0.0-cdh4.0.0-sources.jar
.m2/repository/org/apache/hadoop/hadoop-common/2.0.0-cdh4.0.0/hadoop-common-2.0.0-cdh4.0.0.jar
.m2/repository/org/apache/hadoop/hadoop-core/2.0.0-mr1-cdh4.0.1/hadoop-core-2.0.0-mr1-cdh4.0.1-sources.jar
.m2/repository/org/apache/hadoop/hadoop-core/2.0.0-mr1-cdh4.0.1/hadoop-core-2.0.0-mr1-cdh4.0.1.jar
.m2/repository/org/apache/hadoop/hadoop-hdfs/2.0.0-cdh4.0.0/hadoop-hdfs-2.0.0-cdh4.0.0.jar
.m2/repository/org/apache/hadoop/hadoop-streaming/1.0.4/hadoop-streaming-1.0.4.jar
.m2/repository/org/apache/hadoop/hadoop-auth/2.0.0-cdh4.0.0/hadoop-auth-2.0.0-cdh4.0.0.jar
[developer@localhost ~]$
```
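If you are on a machine without `find`, the same check can be sketched in plain Java. This is only an illustration: the class name `JarFinder` is made up for this example, and it assumes the default local repository location under your home directory. It sticks to `java.io.File` so that it compiles with JDK 1.6.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the project: lists *.jar files
// below a directory, roughly like `find <dir> -name "*.jar"`.
class JarFinder {

    static List<File> findJars(File dir) {
        List<File> jars = new ArrayList<File>();
        File[] children = dir.listFiles();
        if (children == null) {
            // dir does not exist, is not a directory, or is unreadable
            return jars;
        }
        for (File child : children) {
            if (child.isDirectory()) {
                jars.addAll(findJars(child));
            } else if (child.getName().endsWith(".jar")) {
                jars.add(child);
            }
        }
        return jars;
    }

    public static void main(String[] args) {
        // Assumption: the local repository lives in ~/.m2/repository
        File repo = new File(System.getProperty("user.home"),
                ".m2/repository/org/apache/hadoop");
        for (File jar : findJars(repo)) {
            System.out.println(jar.getPath());
        }
    }
}
```

An empty result most likely means the dependencies were not downloaded, or that your local repository is configured somewhere else.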

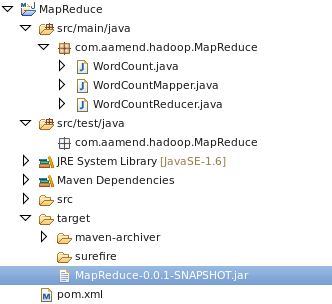

Create WordCount example

Create your driver code

```java
package com.aamend.hadoop.MapReduce;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class WordCount {

    public static void main(String[] args) throws IOException,
            InterruptedException, ClassNotFoundException {

        Path inputPath = new Path(args[0]);
        Path outputDir = new Path(args[1]);

        // Create configuration
        Configuration conf = new Configuration(true);

        // Create job
        Job job = new Job(conf, "WordCount");
        job.setJarByClass(WordCountMapper.class);

        // Setup MapReduce
        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);
        job.setNumReduceTasks(1);

        // Specify key / value
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Input
        FileInputFormat.addInputPath(job, inputPath);
        job.setInputFormatClass(TextInputFormat.class);

        // Output
        FileOutputFormat.setOutputPath(job, outputDir);
        job.setOutputFormatClass(TextOutputFormat.class);

        // Delete output if exists
        FileSystem hdfs = FileSystem.get(conf);
        if (hdfs.exists(outputDir)) {
            hdfs.delete(outputDir, true);
        }

        // Execute job
        int code = job.waitForCompletion(true) ? 0 : 1;
        System.exit(code);
    }
}
```

Create Mapper class

```java
package com.aamend.hadoop.MapReduce;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends
        Mapper<Object, Text, Text, IntWritable> {

    private final IntWritable ONE = new IntWritable(1);
    private Text word = new Text();

    @Override
    public void map(Object key, Text value, Context context)
            throws IOException, InterruptedException {

        // Each input line is treated as comma-separated values
        String[] csv = value.toString().split(",");
        for (String str : csv) {
            word.set(str);
            context.write(word, ONE);
        }
    }
}
```
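Note that this mapper splits each line on commas, so every comma-separated field counts as a "word". Here is a minimal plain-Java sketch (no Hadoop required) of what `String.split(",")` hands to the loop. The class name `CsvTokens` is made up for illustration; only the `split` call mirrors the mapper.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reproduces the tokenization done in the mapper.
class CsvTokens {

    static List<String> tokens(String line) {
        List<String> out = new ArrayList<String>();
        // Same call as in the mapper: value.toString().split(",")
        for (String s : line.split(",")) {
            out.add(s);
        }
        return out;
    }

    public static void main(String[] args) {
        // Interior empty fields are kept, trailing empty fields are dropped
        System.out.println(tokens("a,b,,a,")); // prints [a, b, , a]
    }
}
```

In other words, a line such as `a,,b` would make the mapper emit an empty key. If that is not what you want, skipping empty strings inside the loop is one possible fix.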

Create your Reducer class

```java
package com.aamend.hadoop.MapReduce;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends
        Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    public void reduce(Text text, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {

        // Sum all the occurrences emitted for this key
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }
        context.write(text, new IntWritable(sum));
    }
}
```
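To get a feel for what the mapper and reducer compute together before going anywhere near a cluster, the same logic can be sketched in a few lines of plain Java. This is not how Hadoop executes the job (there is no shuffle, no HDFS, no parallelism), and `LocalWordCount` is a hypothetical name used only here. It merely applies the same split-and-sum steps in memory.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for the WordCount job.
class LocalWordCount {

    static Map<String, Integer> count(Iterable<String> lines) {
        // TreeMap keeps keys sorted, similar to the reducer receiving sorted keys
        Map<String, Integer> sums = new TreeMap<String, Integer>();
        for (String line : lines) {
            // "Map" step: one (word, 1) pair per comma-separated field
            for (String word : line.split(",")) {
                // "Reduce" step: add up the ones per word
                Integer current = sums.get(word);
                sums.put(word, current == null ? 1 : current + 1);
            }
        }
        return sums;
    }

    public static void main(String[] args) {
        System.out.println(count(Arrays.asList("a,b,a", "b,c"))); // prints {a=2, b=2, c=1}
    }
}
```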

Build project

With Maven, building the jar file works out of the box. Execute the following command:

mvn clean install

You should see output similar to the following:

```
.../...
[INFO]
[INFO] --- maven-jar-plugin:2.3.2:jar (default-jar) @ MapReduce ---
[INFO] Building jar: /home/developer/Workspace/hadoop/MapReduce/target/MapReduce-0.0.1-SNAPSHOT.jar
[INFO]
[INFO] --- maven-install-plugin:2.3.1:install (default-install) @ MapReduce ---
[INFO] Installing /home/developer/Workspace/hadoop/MapReduce/target/MapReduce-0.0.1-SNAPSHOT.jar to /home/developer/.m2/repository/com/aamend/hadoop/MapReduce/0.0.1-SNAPSHOT/MapReduce-0.0.1-SNAPSHOT.jar
[INFO] Installing /home/developer/Workspace/hadoop/MapReduce/pom.xml to /home/developer/.m2/repository/com/aamend/hadoop/MapReduce/0.0.1-SNAPSHOT/MapReduce-0.0.1-SNAPSHOT.pom
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 9.159s
[INFO] Finished at: Sat May 25 00:35:56 GMT+02:00 2013
[INFO] Final Memory: 16M/212M
[INFO] ------------------------------------------------------------------------
```

Your jar file should now be available in the project’s target directory (and also in your ${HOME}/.m2 local repository).

This jar is ready to be executed on your Hadoop environment.

hadoop jar MapReduce-0.0.1-SNAPSHOT.jar com.aamend.hadoop.MapReduce.WordCount input output
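The two trailing tokens in the command above, `input` and `output`, reach the driver as `args[0]` and `args[1]`. The driver shown earlier reads them without checking, so a missing argument ends in an `ArrayIndexOutOfBoundsException`. A small guard along these lines could be added; this is just a sketch, and `DriverArgs` and its usage message are hypothetical names, not part of the original project.

```java
// Hypothetical helper: validates the two command-line arguments
// before the driver builds its Hadoop Path objects.
class DriverArgs {

    final String input;
    final String output;

    private DriverArgs(String input, String output) {
        this.input = input;
        this.output = output;
    }

    static DriverArgs parse(String[] args) {
        if (args.length != 2) {
            throw new IllegalArgumentException(
                    "Usage: WordCount <input path> <output dir>");
        }
        return new DriverArgs(args[0], args[1]);
    }

    public static void main(String[] args) {
        DriverArgs parsed = parse(new String[] {"input", "output"});
        System.out.println(parsed.input + " -> " + parsed.output); // prints input -> output
    }
}
```

In the driver, `new Path(parsed.input)` and `new Path(parsed.output)` would then replace the direct `args[0]` and `args[1]` lookups.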

Each time I need to create a new Hadoop project, I simply copy the pom.xml template described above, and that’s it.