一.Scrapy 框架简介

1.简介

Scrapy是用纯Python实现一个为了爬取网站数据、提取结构性数据而编写的应用框架,用途非常广泛。 框架的力量,用户只需要定制开发几个模块就可以轻松的实现一个爬虫,用来抓取网页内容以及各种图片,非常之方便。 Scrapy 使用了 Twisted'twɪstɪd异步网络框架来处理网络通讯,可以加快我们的下载速度,不用自己去实现异步框架,并且包含了各种中间件接口,可以灵活的完成各种需求

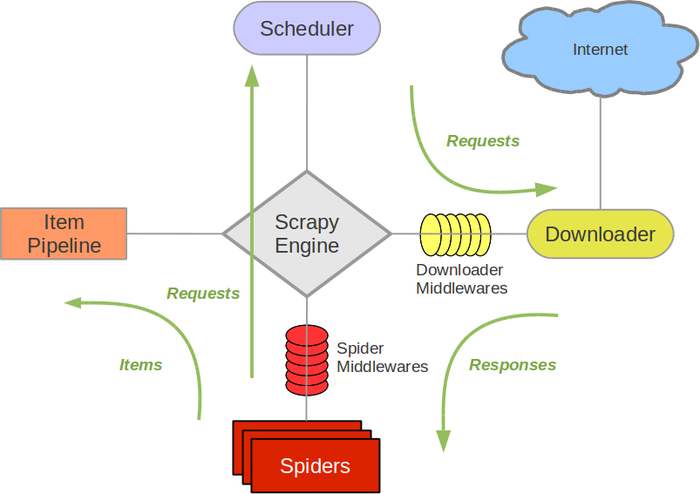

框架图如下:

流程:

Scrapy Engine(引擎): 负责Spider、ItemPipeline、Downloader、Scheduler中间的通讯,信号、数据传递等。

Scheduler(调度器): 它负责接受引擎发送过来的Request请求,并按照一定的方式进行整理排列,入队,当引擎需要时,交还给引擎。

Downloader(下载器):负责下载Scrapy Engine(引擎)发送的所有Requests请求,并将其获取到的Responses交还给Scrapy Engine(引擎),由引擎交给Spider来处理,

Spider(爬虫):它负责处理所有Responses,从中分析提取数据,获取Item字段需要的数据,并将需要跟进的URL提交给引擎,再次进入Scheduler(调度器),

Item Pipeline(管道):它负责处理Spider中获取到的Item,并进行进行后期处理(详细分析、过滤、存储等)的地方.

Downloader Middlewares(下载中间件):你可以当作是一个可以自定义扩展下载功能的组件。

Spider Middlewares(Spider中间件):你可以理解为是一个可以自定扩展和操作引擎和Spider中间通信的功能组件(比如进入Spider的Responses;和从Spider出去的Requests)

2.用法步骤

1.新建项目 (scrapy startproject xxx):新建一个新的爬虫项目 2.明确目标 (编写items.py):明确你想要抓取的目标 3.制作爬虫 (spiders/xxspider.py):制作爬虫开始爬取网页 4.存储内容 (pipelines.py):设计管道存储爬取内容

3.安装

Windows 安装方式 Python 2 / 3 升级pip版本:pip install --upgrade pip 通过pip 安装 Scrapy 框架pip install Scrapy Ubuntu 需要9.10或以上版本安装方式 Python 2 / 3 安装非Python的依赖 sudo apt-get install python-dev python-pip libxml2-dev libxslt1-dev zlib1g-dev libffi-dev libssl-dev 通过pip 安装 Scrapy 框架 sudo pip install scrapy 安装后,只要在命令终端输入 scrapy,看到正确信息即可

二.快速入门

a.创建一个新的Scrapy项目。进入自定义的项目目录中,运行下列命令:

scrapy startproject qsbk #[qsbk]为项目名字

其中, qsbk为项目名称,可以看到将会创建一个 qsbk文件夹,目录结构大致如下: 下面来简单介绍一下各个主要文件的作用: scrapy.cfg :项目的配置文件 qsbk/ :项目的Python模块,将会从这里引用代码 qsbk/items.py :项目的目标文件 qsbk/pipelines.py :项目的管道文件 qsbk/settings.py :项目的设置文件 qsbk/spiders/ :存储爬虫代码目录

b.创建一个案例

使用命令创建一个爬虫,先进去qsbk目录

scrapy genspider qsbk_spider "qiushibaike.com" #qsbk_spider 是爬虫名,不能和项目名一样,[qiushibaike.com]为要爬取的域名,

创建了一个名字叫做qsbk_spider的爬虫,并且能爬取的网页只会限制在qiushibaike.com这个域名下

settings.py

# -*- coding: utf-8 -*- # Scrapy settings for qsbk project # # For simplicity, this file contains only settings considered important or # commonly used. You can find more settings consulting the documentation: # # https://doc.scrapy.org/en/latest/topics/settings.html # https://doc.scrapy.org/en/latest/topics/downloader-middleware.html # https://doc.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'qsbk' SPIDER_MODULES = ['qsbk.spiders'] NEWSPIDER_MODULE = 'qsbk.spiders' # Crawl responsibly by identifying yourself (and your website) on the user-agent #USER_AGENT = 'qsbk (+http://www.yourdomain.com)' # Obey robots.txt rules ROBOTSTXT_OBEY = False # Configure maximum concurrent requests performed by Scrapy (default: 16) #CONCURRENT_REQUESTS = 32 # Configure a delay for requests for the same website (default: 0) # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay # See also autothrottle settings and docs #DOWNLOAD_DELAY = 3 # The download delay setting will honor only one of: #CONCURRENT_REQUESTS_PER_DOMAIN = 16 #CONCURRENT_REQUESTS_PER_IP = 16 # Disable cookies (enabled by default) #COOKIES_ENABLED = False # Disable Telnet Console (enabled by default) #TELNETCONSOLE_ENABLED = False # Override the default request headers: #DEFAULT_REQUEST_HEADERS = { # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', # 'Accept-Language': 'en', #} DEFAULT_REQUEST_HEADERS = { 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', 'Accept-Language': 'en', 'User-Agent':'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36' } # Enable or disable spider middlewares # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html #SPIDER_MIDDLEWARES = { # 'qsbk.middlewares.QsbkSpiderMiddleware': 543, #} # Enable or disable downloader middlewares # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html #DOWNLOADER_MIDDLEWARES = { # 'qsbk.middlewares.QsbkDownloaderMiddleware': 543, #} # Enable or disable extensions # See https://doc.scrapy.org/en/latest/topics/extensions.html #EXTENSIONS = { # 'scrapy.extensions.telnet.TelnetConsole': None, #} # Configure item pipelines # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html #ITEM_PIPELINES = { # 'qsbk.pipelines.QsbkPipeline': 300, #} # Enable and configure the AutoThrottle extension (disabled by default) # See https://doc.scrapy.org/en/latest/topics/autothrottle.html #AUTOTHROTTLE_ENABLED = True # The initial download delay #AUTOTHROTTLE_START_DELAY = 5 # The maximum download delay to be set in case of high latencies #AUTOTHROTTLE_MAX_DELAY = 60 # The average number of requests Scrapy should be sending in parallel to # each remote server #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0 # Enable showing throttling stats for every response received: #AUTOTHROTTLE_DEBUG = False # Enable and configure HTTP caching (disabled by default) # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings #HTTPCACHE_ENABLED = True #HTTPCACHE_EXPIRATION_SECS = 0 #HTTPCACHE_DIR = 'httpcache' #HTTPCACHE_IGNORE_HTTP_CODES = [] #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

qsbk_spider.py

import scrapy class QsbkSpiderSpider(scrapy.Spider): name = 'qsbk_spider' allowed_domains = ['qiushibaike.com'] start_urls = ['https://www.qiushibaike.com/text/page/1/'] def parse(self, response): #SelectorList duanzidivs=response.xpath('//div[@id="content-left"]/div') for duanzidiv in duanzidivs: #Selector authors=duanzidiv.xpath('.//h2/text()').get().strip() content=duanzidiv.xpath('.//div[@class="content"]/span/text()').getall() content="".join(content) print(authors,content)

在qsbk目录下创建start.py

from scrapy import cmdline cmdline.execute(["scrapy","crawl","qsbk_spider"])

执行即可看到结果

c.保存数据

qsbk_spider.py

import scrapy from qsbk.items import QsbkItem class QsbkSpiderSpider(scrapy.Spider): name = 'qsbk_spider' allowed_domains = ['qiushibaike.com'] start_urls = ['https://www.qiushibaike.com/text/page/1/'] def parse(self, response): #SelectorList duanzidivs=response.xpath('//div[@id="content-left"]/div') items=[] for duanzidiv in duanzidivs: #Selector author=duanzidiv.xpath('.//h2/text()').get().strip() content=duanzidiv.xpath('.//div[@class="content"]/span/text()').getall() content="".join(content) item=QsbkItem(author=author,content=content) yield item #或者将此行注销,将下面两行的#去掉 # items.append(item) # return items #注意:提取出来的数据是一个Selector或者是一个SelectorList # getall()获取的是所有文本,返回的是一个列表,get()获取的是第一个文本,返回的是str

settings.py

DEFAULT_REQUEST_HEADERS = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en',

'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'

}

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = { 'qsbk.pipelines.QsbkPipeline': 300, } #将此行的注释打开

pipelines.py

import json class QsbkPipeline(object): def __init__(self): self.fp=open("duanzi.json","w",encoding="utf-8") def open_spider(self,spider): print("开始了") def process_item(self, item, spider): item_json=json.dumps(dict(item),ensure_ascii=False) self.fp.write(item_json+" ") return item def close_spider(self,spider): self.fp.close() print("结束了") #open_spider:当爬虫被打开的时候执行 #process_item:当爬虫有item传过来的时候被调用 #close_spider:当爬虫关闭的时候会被调用 #要激活pipline,应该再settings.py中,设置ITEM_PIPLINES

items.py

import scrapy class QsbkItem(scrapy.Item): # define the fields for your item here like: # name = scrapy.Field() author=scrapy.Field() content=scrapy.Field()

在qsbk目录下创建start.py

from scrapy import cmdline cmdline.execute(["scrapy","crawl","qsbk_spider"])

d.优化保存数据

pipelines.py

from scrapy.exporters import JsonItemExporter class QsbkPipeline(object): def __init__(self): self.fp=open("duanzi.json","wb") self.exporter=JsonItemExporter(self.fp,ensure_ascii=False,encoding="utf-8") self.exporter.start_exporting() def open_spider(self,spider): print("开始了") def process_item(self, item, spider): # item_json=json.dumps(dict(item),ensure_ascii=False) # self.fp.write(item_json+" ") self.exporter.export_item(item) return item def close_spider(self,spider): self.exporter.finish_exporting() self.fp.close() print("结束了")

或者

from scrapy.exporters import JsonLinesItemExporter class QsbkPipeline(object): def __init__(self): self.fp=open("duanzi.json","wb") self.exporter=JsonLinesItemExporter(self.fp,ensure_ascii=False,encoding="utf-8") def open_spider(self,spider): print("开始了") def process_item(self, item, spider): # item_json=json.dumps(dict(item),ensure_ascii=False) # self.fp.write(item_json+" ") self.exporter.export_item(item) return item def close_spider(self,spider): self.exporter.finish_exporting() self.fp.close() print("结束了")

其他不变

e.多次请求下一页

qsbk_spider.py

import scrapy from qsbk.items import QsbkItem class QsbkSpiderSpider(scrapy.Spider): name = 'qsbk_spider' allowed_domains = ['qiushibaike.com'] start_urls = ['https://www.qiushibaike.com/text/page/1/'] base_domain="https://www.qiushibaike.com" def parse(self, response): #SelectorList duanzidivs=response.xpath('//div[@id="content-left"]/div') items=[] for duanzidiv in duanzidivs: #Selector author=duanzidiv.xpath('.//h2/text()').get().strip() content=duanzidiv.xpath('.//div[@class="content"]/span/text()').getall() content="".join(content) item=QsbkItem(author=author,content=content) yield item #或者将此行注销,将下面两行的#去掉 # items.append(item) # return items next_url=response.xpath('//ul[@class="pagination"]/li[last()]/a/@href').get() if not next_url: return else: yield scrapy.Request(self.base_domain+next_url,callback=self.parse)

待