I. Download the Kubernetes (K8S) binaries and the offline Docker packages

Download the offline Docker installation packages:

docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

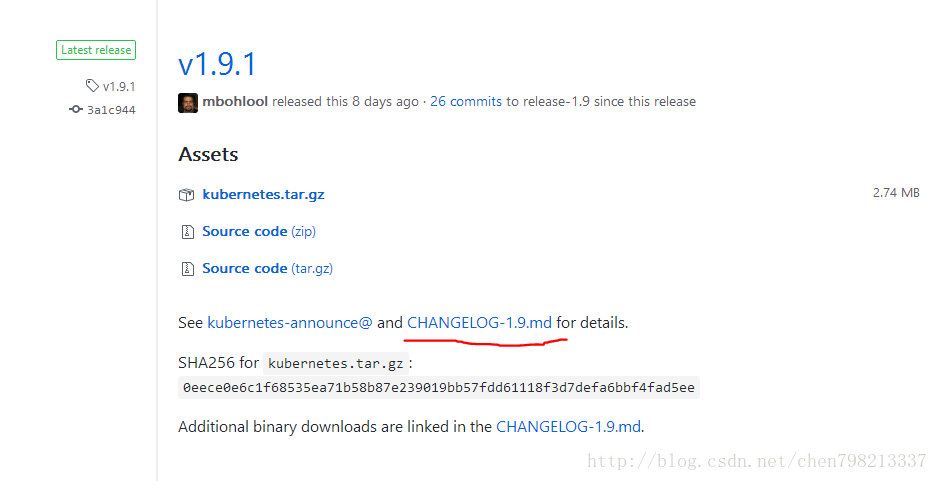

1) https://github.com/kubernetes/kubernetes/releases

Choose a version on the page above. This article uses version 1.9.1 as an example and downloads the binaries from its CHANGELOG page.

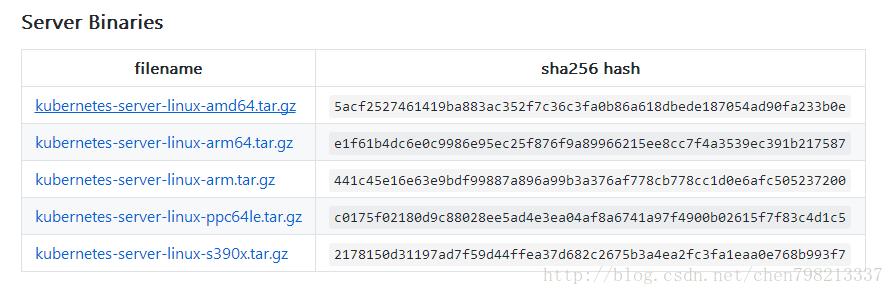

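2) Component selection: under Server Binaries, choose kubernetes-server-linux-amd64.tar.gz.

This archive already contains every component K8S needs, so there is no need to download the Client or other packages separately.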
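The unpack-and-copy step can be scripted. Below is a minimal POSIX sh sketch, assuming the tarball has already been downloaded. The function name install_k8s_binaries is hypothetical, the master/node split follows the installation plan in the next section, and putting kubectl in the Master set is an assumption (it lets kubectl commands be run on the Master).

```shell
#!/bin/sh
# Hypothetical helper: unpack kubernetes-server-linux-amd64.tar.gz and copy
# the binaries for one role into DEST (default /usr/bin).
# Assumption: kubectl is installed on the Master together with the control plane.
install_k8s_binaries() {
  local tarball="$1" role="$2" dest="${3:-/usr/bin}" bins workdir b
  case "$role" in
    master) bins="kube-apiserver kube-controller-manager kube-scheduler kubectl" ;;
    node)   bins="kubelet kube-proxy" ;;
    *)      echo "unknown role: $role (expected master or node)" >&2; return 1 ;;
  esac
  mkdir -p "$dest" || return 1
  workdir="$(mktemp -d)" || return 1
  # The server tarball unpacks to kubernetes/server/bin/
  if ! tar -xzf "$tarball" -C "$workdir"; then
    rm -rf "$workdir"
    return 1
  fi
  for b in $bins; do
    # install(1) copies the file and marks it executable in one step
    if ! install -m 0755 "$workdir/kubernetes/server/bin/$b" "$dest/$b"; then
      rm -rf "$workdir"
      return 1
    fi
  done
  rm -rf "$workdir"
}

# Usage:
#   install_k8s_binaries kubernetes-server-linux-amd64.tar.gz master   # on 192.168.137.3
#   install_k8s_binaries kubernetes-server-linux-amd64.tar.gz node     # on 192.168.137.4 / .5
```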

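II. Installation plan

1) Download and unpack K8S, copy each component into /usr/bin, create a systemd service file for it, and finally start the component.

2) This example uses three nodes. The components installed on each node are: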

| Node IP address | Role | Components installed |
|---|---|---|
| 192.168.137.3 | Master (management node) | etcd, kube-apiserver, kube-controller-manager, kube-scheduler |
| 192.168.137.4 | Node1 (compute node) | docker, kubelet, kube-proxy |
| 192.168.137.5 | Node2 (compute node) | docker, kubelet, kube-proxy |

etcd serves as the K8S database.

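III. Master node deployment

Note: when deploying from binaries on CentOS 7, every component goes through the same four steps:

1) Copy the corresponding binary to /usr/bin

2) Create the systemd service file that starts it

3) Create the parameter file that the service refers to

4) Enable the service at boot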
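Because the four steps are identical for every component, they can be wrapped in one function. This is a sketch under assumptions: deploy_component, ROOT and SYSTEMCTL are hypothetical names. ROOT lets the file steps be tried in a scratch directory, SYSTEMCTL can be set to "echo systemctl" for a dry run, and unit files go to /usr/lib/systemd/system as for the kube-* services below.

```shell
#!/bin/sh
# Hypothetical wrapper for the four deployment steps of one component.
# ROOT (default: empty = real filesystem) prefixes every installed path.
# SYSTEMCTL (default: systemctl) can be "echo systemctl" for a dry run.
ROOT="${ROOT:-}"
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# deploy_component NAME BINARY UNIT_FILE ENV_FILE ENV_TARGET
deploy_component() {
  local name="$1" binary="$2" unit="$3" env_file="$4" env_target="$5"
  # 1) copy the binary to /usr/bin
  install -D -m 0755 "$binary" "$ROOT/usr/bin/$name" || return 1
  # 2) install the systemd unit file
  install -D -m 0644 "$unit" "$ROOT/usr/lib/systemd/system/$name.service" || return 1
  # 3) install the parameter file that the unit's EnvironmentFile= points at
  install -D -m 0644 "$env_file" "$ROOT$env_target" || return 1
  # 4) reload systemd, enable at boot, then start now
  $SYSTEMCTL daemon-reload &&
    $SYSTEMCTL enable "$name.service" &&
    $SYSTEMCTL start "$name.service"
}

# Usage (prepared files in the current directory):
#   deploy_component kube-scheduler ./kube-scheduler ./kube-scheduler.service \
#       ./scheduler /etc/kubernetes/scheduler
```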

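1 Installing the etcd database

(1) Download etcd

K8S needs etcd as its database. This example uses v3.2.9, which can be downloaded from:

https://github.com/coreos/etcd/releases/

After downloading and unpacking it, copy the etcd and etcdctl binaries to /usr/bin.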
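A minimal sketch of this step, assuming the release asset follows the usual etcd-vX.Y.Z-linux-amd64.tar.gz naming (check the release page). etcd_url, install_etcd, ETCD_VER and ROOT are hypothetical names. It also creates /var/lib/etcd, which the unit file below uses as its WorkingDirectory.

```shell
#!/bin/sh
# Hypothetical helpers for installing etcd from a release tarball.
ETCD_VER="${ETCD_VER:-v3.2.9}"
ROOT="${ROOT:-}"   # empty = real filesystem; point at a scratch dir to try it out

# Assumed asset URL pattern on the GitHub releases page
etcd_url() {
  echo "https://github.com/coreos/etcd/releases/download/${ETCD_VER}/etcd-${ETCD_VER}-linux-amd64.tar.gz"
}

install_etcd() {
  local tarball="$1" workdir b
  workdir="$(mktemp -d)" || return 1
  # --strip-components=1 drops the top-level etcd-vX.Y.Z-linux-amd64/ directory
  if ! tar -xzf "$tarball" -C "$workdir" --strip-components=1; then
    rm -rf "$workdir"
    return 1
  fi
  for b in etcd etcdctl; do
    if ! install -D -m 0755 "$workdir/$b" "$ROOT/usr/bin/$b"; then
      rm -rf "$workdir"
      return 1
    fi
  done
  rm -rf "$workdir"
  # WorkingDirectory in etcd.service must exist before the service starts
  mkdir -p "$ROOT/var/lib/etcd"
}

# Usage (on the Master):
#   curl -L -o etcd.tar.gz "$(etcd_url)" && install_etcd etcd.tar.gz
```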

(2) Create the etcd.service unit file

Create etcd.service in the /etc/systemd/system/ directory with the following content:

    [root@k8s-master]# cat /etc/systemd/system/etcd.service
    [Unit]
    Description=etcd.service

    [Service]
    Type=notify
    TimeoutStartSec=0
    Restart=always
    WorkingDirectory=/var/lib/etcd
    EnvironmentFile=-/etc/etcd/etcd.conf
    ExecStart=/usr/bin/etcd

    [Install]
    WantedBy=multi-user.target

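WorkingDirectory is the etcd data directory. It must be created **before** etcd is started (mkdir -p /var/lib/etcd), otherwise systemd cannot start the service.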

(3) Create the configuration file /etc/etcd/etcd.conf

    [root@k8s-master]# cat /etc/etcd/etcd.conf
    ETCD_NAME="ETCD Server"
    ETCD_DATA_DIR="/var/lib/etcd/"
    ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.137.3:2379"

(4) Enable etcd at boot and start it

    # systemctl daemon-reload
    # systemctl enable etcd.service
    # systemctl start etcd.service

(5) Verify that etcd is installed correctly

    # etcdctl cluster-health
    member 8e9e05c52164694d is healthy: got healthy result from http://localhost:2379
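Right after systemctl start, etcd may still be coming up, so a script that continues with the next step can wait for it first. A sketch, assuming that etcdctl cluster-health (the v2-API command, which is etcdctl's default in 3.2) exits non-zero while the cluster is not healthy; wait_etcd_healthy is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: poll `etcdctl cluster-health` until it succeeds.
# Assumption: the command exits non-zero while the cluster is unhealthy.
# wait_etcd_healthy [TRIES] [DELAY_SECONDS]
wait_etcd_healthy() {
  local tries="${1:-30}" delay="${2:-2}" i=0
  while [ "$i" -lt "$tries" ]; do
    if etcdctl cluster-health >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "etcd still unhealthy after $tries attempts" >&2
  return 1
}

# Usage: systemctl start etcd.service && wait_etcd_healthy 30 2
```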

2 kube-apiserver service

(1) Copy the binaries to /usr/bin

Copy the three executables kube-apiserver, kube-controller-manager and kube-scheduler to /usr/bin.

(2) Create and edit the /usr/lib/systemd/system/kube-apiserver.service file

    [root@k8s-master]# cat /usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    After=etcd.service
    Wants=etcd.service

    [Service]
    EnvironmentFile=/etc/kubernetes/apiserver
    ExecStart=/usr/bin/kube-apiserver \
        $KUBE_ETCD_SERVERS \
        $KUBE_API_ADDRESS \
        $KUBE_API_PORT \
        $KUBE_SERVICE_ADDRESSES \
        $KUBE_ADMISSION_CONTROL \
        $KUBE_API_LOG \
        $KUBE_API_ARGS
    Restart=on-failure
    Type=notify
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

(3) Create the parameter file /etc/kubernetes/apiserver

    [root@k8s-master]# cat /etc/kubernetes/apiserver
    KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
    KUBE_API_PORT="--insecure-port=8080"
    KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.137.3:2379"
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=169.169.0.0/16"
    KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
    KUBE_API_LOG="--logtostderr=false --log-dir=/home/k8s-t/log/kubernetes --v=2"
    KUBE_API_ARGS=" "

Because --logtostderr=false writes the logs into --log-dir, create that directory first: mkdir -p /home/k8s-t/log/kubernetes
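Once the Master services are started (step 5 below), the apiserver can be probed directly on its insecure port. A sketch: apiserver_healthy and MASTER_URL are hypothetical names, MASTER_URL defaults to the Master address and insecure port from this plan, and the check relies on /healthz answering with the body ok.

```shell
#!/bin/sh
# Hypothetical check of kube-apiserver through its insecure port (8080 above).
MASTER_URL="${MASTER_URL:-http://192.168.137.3:8080}"

apiserver_healthy() {
  # -s hides the progress meter, -f turns HTTP errors into a non-zero exit
  [ "$(curl -sf "$MASTER_URL/healthz")" = "ok" ]
}

# Usage: apiserver_healthy && echo "kube-apiserver is up"
```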

3 kube-controller-manager deployment

(1) Configure the kube-controller-manager systemd service file

Its content is as follows:

    [root@k8s-master]# cat /usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    After=kube-apiserver.service
    Requires=kube-apiserver.service

    [Service]
    EnvironmentFile=-/etc/kubernetes/controller-manager
    ExecStart=/usr/bin/kube-controller-manager \
        $KUBE_MASTER \
        $KUBE_CONTROLLER_MANAGER_ARGS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

(2) The parameter file /etc/kubernetes/controller-manager contains:

    [root@k8s-master]# cat /etc/kubernetes/controller-manager
    KUBE_MASTER="--master=http://192.168.137.3:8080"
    KUBE_CONTROLLER_MANAGER_ARGS=" "

4 kube-scheduler deployment

(1) Configure the kube-scheduler systemd service file

    [root@k8s-master]# cat /usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    After=kube-apiserver.service
    Requires=kube-apiserver.service

    [Service]
    User=root
    EnvironmentFile=-/etc/kubernetes/scheduler
    ExecStart=/usr/bin/kube-scheduler \
        $KUBE_MASTER \
        $KUBE_SCHEDULER_ARGS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

(2) Configure the /etc/kubernetes/scheduler parameter file

    [root@k8s-master]# cat /etc/kubernetes/scheduler
    KUBE_MASTER="--master=http://192.168.137.3:8080"
    KUBE_SCHEDULER_ARGS="--logtostderr=true --log-dir=/home/k8s-t/log/kubernetes --v=2"

5 Enable the components at boot and start them

(1) The commands are:

    systemctl daemon-reload
    systemctl enable kube-apiserver.service
    systemctl start kube-apiserver.service
    systemctl enable kube-controller-manager.service
    systemctl start kube-controller-manager.service
    systemctl enable kube-scheduler.service
    systemctl start kube-scheduler.service

The K8S Master node installation is now complete.

To restart all Master services in one go:

    for i in etcd kube-apiserver kube-controller-manager kube-scheduler; do systemctl restart $i; done
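To confirm the Master is really up, kubectl get componentstatuses reports the health of the scheduler, the controller-manager and etcd. The sketch below assumes kubectl has been copied from kubernetes/server/bin to /usr/bin on the Master (with no kubeconfig it talks to http://localhost:8080). components_healthy is a hypothetical name, and it treats an empty listing as a failure.

```shell
#!/bin/sh
# Hypothetical check: succeed only if every component's STATUS column
# (field 2) reads "Healthy" and at least one component was listed.
components_healthy() {
  kubectl get componentstatuses --no-headers 2>/dev/null |
    awk '$2 != "Healthy" { bad = 1 } { n++ } END { exit (n == 0 || bad) }'
}

# Usage: components_healthy && echo "Master components are healthy"
```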

====================================

IV. Node deployment

On each Node, copy kube-proxy and kubelet from kubernetes/server/bin to /usr/bin/, then install the offline Docker packages:

    yum localinstall docker*

1 Install the kube-proxy service

(1) Add the /usr/lib/systemd/system/kube-proxy.service file with the following content:

    [Unit]
    Description=Kubernetes Kube-Proxy Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target

    [Service]
    EnvironmentFile=-/etc/kubernetes/config
    EnvironmentFile=-/etc/kubernetes/proxy
    ExecStart=/usr/bin/kube-proxy \
        $KUBE_LOGTOSTDERR \
        $KUBE_LOG_LEVEL \
        $KUBE_MASTER \
        $KUBE_PROXY_ARGS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

(2) Create the /etc/kubernetes directory

    mkdir -p /etc/kubernetes

(3) Add the /etc/kubernetes/proxy configuration file

Edit it with vim /etc/kubernetes/proxy; the content is:

    KUBE_PROXY_ARGS=""

(4) Add the /etc/kubernetes/config file

    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=0"
    KUBE_ALLOW_PRIV="--allow_privileged=false"
    KUBE_MASTER="--master=http://192.168.137.3:8080"
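Since both Nodes get the same two files, they can be generated. A sketch: write_proxy_config, MASTER_URL and ROOT are hypothetical names; MASTER_URL defaults to the Master's insecure API address from the plan, and ROOT allows a dry run into a scratch directory.

```shell
#!/bin/sh
# Hypothetical generator for the kube-proxy parameter files on a Node.
MASTER_URL="${MASTER_URL:-http://192.168.137.3:8080}"
ROOT="${ROOT:-}"

write_proxy_config() {
  mkdir -p "$ROOT/etc/kubernetes" || return 1
  # Unquoted EOF: ${MASTER_URL} is expanded into the file
  cat > "$ROOT/etc/kubernetes/config" <<EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow_privileged=false"
KUBE_MASTER="--master=${MASTER_URL}"
EOF
  # kube-proxy needs no extra flags in this setup
  echo 'KUBE_PROXY_ARGS=""' > "$ROOT/etc/kubernetes/proxy"
}

# Usage (on each Node): write_proxy_config
```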

(5) Start the kube-proxy service and enable it at boot

    systemctl daemon-reload
    systemctl enable kube-proxy.service
    systemctl start kube-proxy.service

(6) Check that kube-proxy is running

    [root@server2 bin]# netstat -lntp | grep kube-proxy
    tcp        0      0 127.0.0.1:10249         0.0.0.0:*               LISTEN      11754/kube-proxy
    tcp6       0      0 :::10256                :::*                    LISTEN      11754/kube-proxy
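Reading netstat output by eye works for one node; for a scripted check, the same output can be scanned for the expected ports. A sketch: listens_on is a hypothetical name, and it assumes the netstat -lntp layout shown above (local address in column 4, PID/program name in column 7).

```shell
#!/bin/sh
# Hypothetical check: read `netstat -lntp` output on stdin and confirm that
# PROGRAM listens on every PORT given.
# listens_on PROGRAM PORT...
listens_on() {
  local prog="$1" out port
  shift
  out="$(cat)"
  for port in "$@"; do
    printf '%s\n' "$out" |
      awk -v p="$port" -v prog="$prog" '
        $7 ~ ("/" prog "$") && $4 ~ (":" p "$") { found = 1 }
        END { exit !found }' ||
      { echo "$prog is not listening on $port" >&2; return 1; }
  done
}

# Usage:
#   netstat -lntp | listens_on kube-proxy 10249 10256
#   netstat -lntp | listens_on kubelet 10248 10250 10255 4194
```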

2 Install the kubelet service

(1) Create the /usr/lib/systemd/system/kubelet.service file

    [Unit]
    Description=Kubernetes Kubelet Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=docker.service
    Requires=docker.service

    [Service]
    WorkingDirectory=/var/lib/kubelet
    EnvironmentFile=-/etc/kubernetes/kubelet
    ExecStart=/usr/bin/kubelet \
        $KUBELET_HOSTNAME \
        $KUBELET_POD_INFRA_CONTAINER \
        $KUBELET_ARGS
    Restart=on-failure
    KillMode=process

    [Install]
    WantedBy=multi-user.target

(2) Create the working directory kubelet needs

    mkdir -p /var/lib/kubelet

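(3) Create the kubelet configuration file

Edit it with vim /etc/kubernetes/kubelet; the content is:

    KUBELET_HOSTNAME="--hostname-override=192.168.137.4"
    KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=reg.docker.tb/harbor/pod-infrastructure:latest"
    KUBELET_ARGS="--enable-server=true --enable-debugging-handlers=true --fail-swap-on=false --kubeconfig=/var/lib/kubelet/kubeconfig"

--hostname-override sets the name the node registers under, so use each Node's own IP (192.168.137.4 on Node1, 192.168.137.5 on Node2). The pod-infrastructure image must come from a registry the Nodes can actually pull from.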
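Only the node IP differs between Node1 and Node2, so the file can be generated per node. A sketch: write_kubelet_env, PAUSE_IMAGE and ROOT are hypothetical names; PAUSE_IMAGE defaults to the image used above and ROOT allows a dry run.

```shell
#!/bin/sh
# Hypothetical generator for /etc/kubernetes/kubelet on one Node.
ROOT="${ROOT:-}"
PAUSE_IMAGE="${PAUSE_IMAGE:-reg.docker.tb/harbor/pod-infrastructure:latest}"

# write_kubelet_env NODE_IP
write_kubelet_env() {
  local node_ip="$1"
  [ -n "$node_ip" ] || { echo "usage: write_kubelet_env NODE_IP" >&2; return 1; }
  mkdir -p "$ROOT/etc/kubernetes" || return 1
  cat > "$ROOT/etc/kubernetes/kubelet" <<EOF
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=${PAUSE_IMAGE}"
KUBELET_ARGS="--enable-server=true --enable-debugging-handlers=true --fail-swap-on=false --kubeconfig=/var/lib/kubelet/kubeconfig"
EOF
}

# Usage: write_kubelet_env 192.168.137.4   # on Node1
#        write_kubelet_env 192.168.137.5   # on Node2
```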

(4) Add the /var/lib/kubelet/kubeconfig file

One more configuration file is needed: in 1.9 the kubelet no longer uses KUBELET_API_SERVER to reach the API server, and reads the address from a YAML kubeconfig file instead.

Edit it with vim /var/lib/kubelet/kubeconfig; the content is:

    apiVersion: v1
    kind: Config
    users:
    - name: kubelet
    clusters:
    - name: kubernetes
      cluster:
        server: http://192.168.137.3:8080
    contexts:
    - context:
        cluster: kubernetes
        user: kubelet
      name: service-account-context
    current-context: service-account-context
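YAML is indentation-sensitive, so generating this file avoids copy-paste mistakes. A sketch: write_kubelet_kubeconfig, MASTER_URL and ROOT are hypothetical names; no credentials are written because this setup uses the unauthenticated insecure port 8080.

```shell
#!/bin/sh
# Hypothetical generator for /var/lib/kubelet/kubeconfig.
ROOT="${ROOT:-}"
MASTER_URL="${MASTER_URL:-http://192.168.137.3:8080}"

write_kubelet_kubeconfig() {
  mkdir -p "$ROOT/var/lib/kubelet" || return 1
  # Indentation inside the here-document is significant YAML structure
  cat > "$ROOT/var/lib/kubelet/kubeconfig" <<EOF
apiVersion: v1
kind: Config
users:
- name: kubelet
clusters:
- name: kubernetes
  cluster:
    server: ${MASTER_URL}
contexts:
- context:
    cluster: kubernetes
    user: kubelet
  name: service-account-context
current-context: service-account-context
EOF
}

# Usage (on each Node): write_kubelet_kubeconfig
```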

(5) Start kubelet

Turn off swap first with swapoff -a, otherwise kubelet reports an error on startup:

    swapoff -a
    systemctl daemon-reload
    systemctl enable kubelet.service
    systemctl start kubelet.service
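swapoff -a only lasts until the next reboot. One common way to keep swap off is to comment out the swap entries in /etc/fstab. A sketch: disable_swap_in_fstab is a hypothetical name, and the fstab path is a parameter so it can be tried on a copy first.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries so swap stays off
# after a reboot. Lines that are already comments are left untouched.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}" scratch rc
  scratch="$(mktemp)" || return 1
  # Field 3 of an fstab entry is the filesystem type
  awk '/^[ \t]*#/ { print; next } $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab" > "$scratch" &&
    cat "$scratch" > "$fstab"   # rewrite in place, keeping owner and mode
  rc=$?
  rm -f "$scratch"
  return "$rc"
}

# Usage: swapoff -a && disable_swap_in_fstab /etc/fstab
```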

(6) Check that kubelet is running

    [root@server2 ~]# netstat -lntp | grep kubelet
    tcp        0      0 127.0.0.1:10248         0.0.0.0:*               LISTEN      15410/kubelet
    tcp6       0      0 :::10250                :::*                    LISTEN      15410/kubelet
    tcp6       0      0 :::10255                :::*                    LISTEN      15410/kubelet
    tcp6       0      0 :::4194                 :::*                    LISTEN      15410/kubelet

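List the nodes on the Master (kubectl is also in kubernetes/server/bin; copy it to /usr/bin on the Master first):

    kubectl get nodes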
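A freshly started kubelet needs a little while before its node reports Ready. A sketch that polls kubectl get nodes until every named node is Ready: wait_nodes_ready, TRIES and DELAY are hypothetical names, and the node names are the --hostname-override values (the Node IPs in this plan).

```shell
#!/bin/sh
# Hypothetical helper: poll until every node named on the command line has
# STATUS (field 2) "Ready". TRIES and DELAY tune the polling.
# wait_nodes_ready NODE...
wait_nodes_ready() {
  local tries="${TRIES:-30}" delay="${DELAY:-5}" i=0 node missing
  while [ "$i" -lt "$tries" ]; do
    missing=""
    for node in "$@"; do
      kubectl get nodes --no-headers 2>/dev/null |
        awk -v n="$node" '$1 == n && $2 == "Ready" { ok = 1 } END { exit !ok }' ||
        missing="$missing $node"
    done
    [ -z "$missing" ] && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  echo "not Ready:$missing" >&2
  return 1
}

# Usage (on the Master): wait_nodes_ready 192.168.137.4 192.168.137.5
```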