1、为什么开启动态资源分配

⽤户提交Spark应⽤到Yarn上时,可以通过spark-submit的num-executors参数显示地指定executor 个数,随后,ApplicationMaster会为这些executor申请资源,每个executor作为⼀个Container在 Yarn上运⾏。Spark调度器会把Task按照合适的策略分配到executor上执⾏。所有任务执⾏完后, executor被杀死,应⽤结束。在job运⾏的过程中,⽆论executor是否领取到任务,都会⼀直占有着 资源不释放。很显然,这在任务量⼩且显示指定⼤量executor的情况下会很容易造成资源浪费

2.yarn-site.xml加入配置,并重启yarn服务

spark版本:2.2.1,hadoop版本:cdh5.14.2-2.6.0,不是clouder集成的cdh是手动单独搭建的

vim etc/hadoop/yarn-site.xml <property> <name>yarn.nodemanager.aux-services</name> <value>spark_shuffle,mapreduce_shuffle</value> </property> <property> <name>yarn.nodemanager.aux-services.spark_shuffle.class</name> <value>org.apache.spark.network.yarn.YarnShuffleService</value> </property>

重启yarn时的需要注意的异常:nodemanager没有正常启动,yarn的8080页面的core与memory都为空

Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.spark.network.yarn.YarnShuffleService not found at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2349) at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2373) ... 10 more Caused by: java.lang.ClassNotFoundException: Class org.apache.spark.network.yarn.YarnShuffleService not found at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2255) at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2347) ... 11 more 2020-02-17 19:54:59,185 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping NodeManager metrics system... 2020-02-17 19:54:59,185 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NodeManager metrics system stopped. 2020-02-17 19:54:59,185 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NodeManager metrics system shutdown complete. 2020-02-17 19:54:59,185 FATAL org.apache.hadoop.yarn.server.nodemanager.NodeManager: Error starting NodeManager java.lang.RuntimeException: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.spark.network.yarn.YarnShuffleService not found at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2381) at org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices.serviceInit(AuxServices.java:121) at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163) at org.apache.hadoop.service.CompositeService.serviceInit(CompositeService.java:107) at org.apache.hadoop.yarn.server.nodemanager.containermanager.ContainerManagerImpl.serviceInit(ContainerManagerImpl.java:236) at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163) at org.apache.hadoop.service.CompositeService.serviceInit(CompositeService.java:107) at org.apache.hadoop.yarn.server.nodemanager.NodeManager.serviceInit(NodeManager.java:318) at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163) at org.apache.hadoop.yarn.server.nodemanager.NodeManager.initAndStartNodeManager(NodeManager.java:562) at org.apache.hadoop.yarn.server.nodemanager.NodeManager.main(NodeManager.java:609) Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.spark.network.yarn.YarnShuffleService not found at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2349) at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2373) ... 10 more Caused by: java.lang.ClassNotFoundException: Class org.apache.spark.network.yarn.YarnShuffleService not found at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2255) at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2347) ... 11 more 2020-02-17 19:54:59,189 INFO org.apache.hadoop.yarn.server.nodemanager.NodeManager: SHUTDOWN_MSG: /************************************************************ SHUTDOWN_MSG: Shutting down NodeManager at bigdata.server1/192.168.121.12 ************************************************************/

原因是缺少了:sparkShuffle的jar包

mv spark/yarn/spark-2.11-2.2.1-shuffle_.jar /opt/modules/hadoop-2.6.0-cdh5.14.2/share/hadoop/yarn/

nodemanager依然启动不了,查询nodemanger.log日志、继续报错:

java.lang.NoSuchMethodError: org.spark_project.com.fasterxml.jackson.core.JsonFactory.requiresPropertyOrdering()Z

添加了jackson的包没啥用,网上有一样的报错方式:https://www.oschina.net/question/3721355_2269200

结果:未解决

3.spark的动态资源分配开启

spark.shuffle.service.enabled true //启⽤External shuffle Service服务

spark.shuffle.service.port 7337 //Shuffle Service服务端⼝,必须和yarn-site中的⼀致

spark.dynamicAllocation.enabled true //开启动态资源分配

spark.dynamicAllocation.minExecutors 1 //每个Application最⼩分配的executor数

spark.dynamicAllocation.maxExecutors 30 //每个Application最⼤并发分配的executor数

spark.dynamicAllocation.schedulerBacklogTimeout 1s

spark.dynamicAllocation.sustainedSchedulerBacklogTimeout 5s

也可以在代码或者脚本中添加sparkconf

4.hadoop版本cdh5.14.2-2.6.0与spark 2.2.1单独搭建的会报错

5.使用clouderCDH 5.14.0 的版本测试

1.在yarn-site.xml添加上面的配置

1

2.普通提交,spark2版本进行shell提交,观察yarn

spark2-shell --master yarn-client --executor-memory 2G --num-executors 10

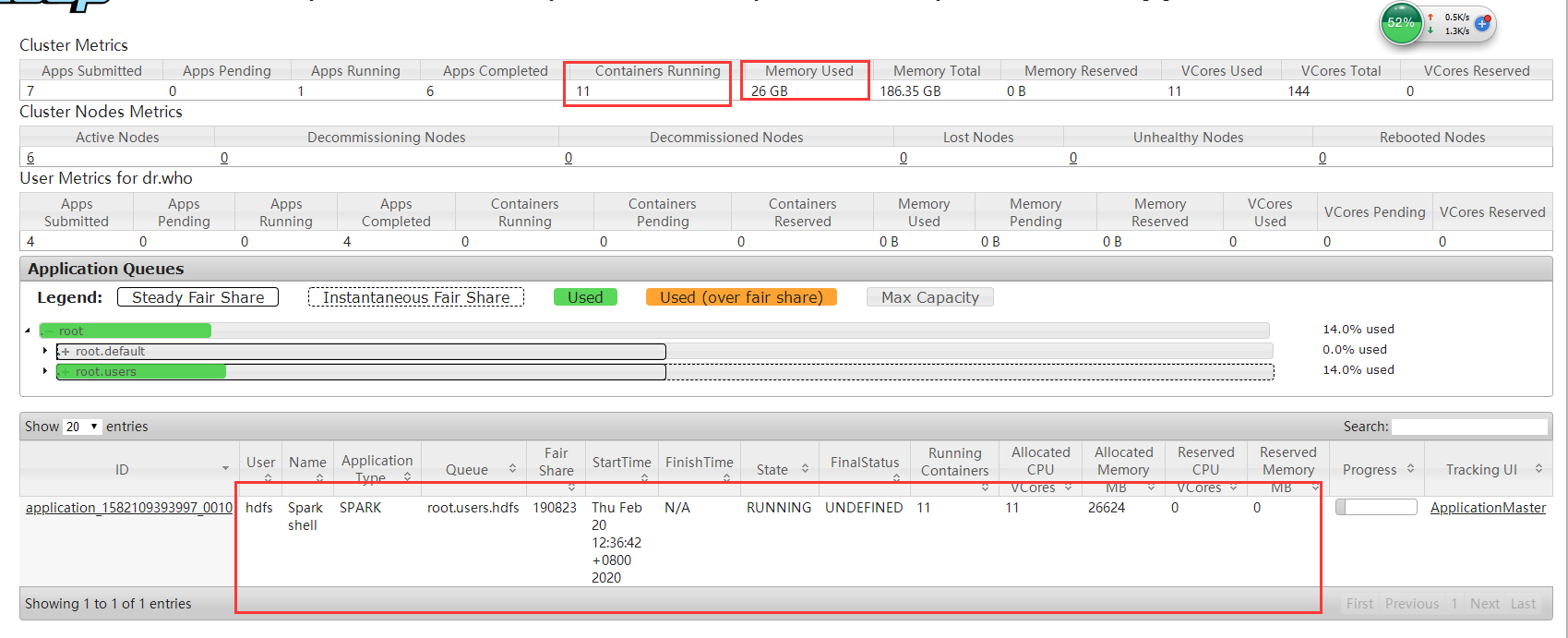

可以看到10个executor(driver占一核)没有任务也是申请到资源,占着不用,造成了资源浪费

3.使用spark的动态资源分配提交

spark2-shell —master yarn —eploy-mode client //指定队列 —queue "test" //日志配置 —conf spark.driver.extraJava0ptions=-Dlog4j.configuration=log4j-yarn.properties —conf spark.executor.extraJava0ptions=-Dlog4j.configuration=log4j-yarn.properties —conf spark.serializer=org.apache.spark.serializer.KryoSerializer //推测执行等待时间 —conf spark.locality.wait=10 //最大失败重试次数 —conf spark.task.maxFailures=8 —conf spark.ui.killEnabled=false —conf spark.logConf=true //非堆内存配置 —conf spa rk.yarn.d river.memoryOverhead=512 —conf spark.yarn.executor.memoryOverhead=1024 —conf spark.yarn.maxAppAttempts=4 —conf spark.yarn.am.attemptFailuresValidityInterval=lh —conf spark.yarn.executor.failuresValidityInterval=lh //动态资源开启 —conf spark.dynamicAllocation.enabled=true //最大最小申请的Executors数 —conf spark.dynamicAllocation.minExecutors=l —conf spark.dynamicAllocation.maxExecutors=30 —conf spark.dynamicAllocation.executorldleTimeout=3s —conf spark.shuffle.service.enabled=true

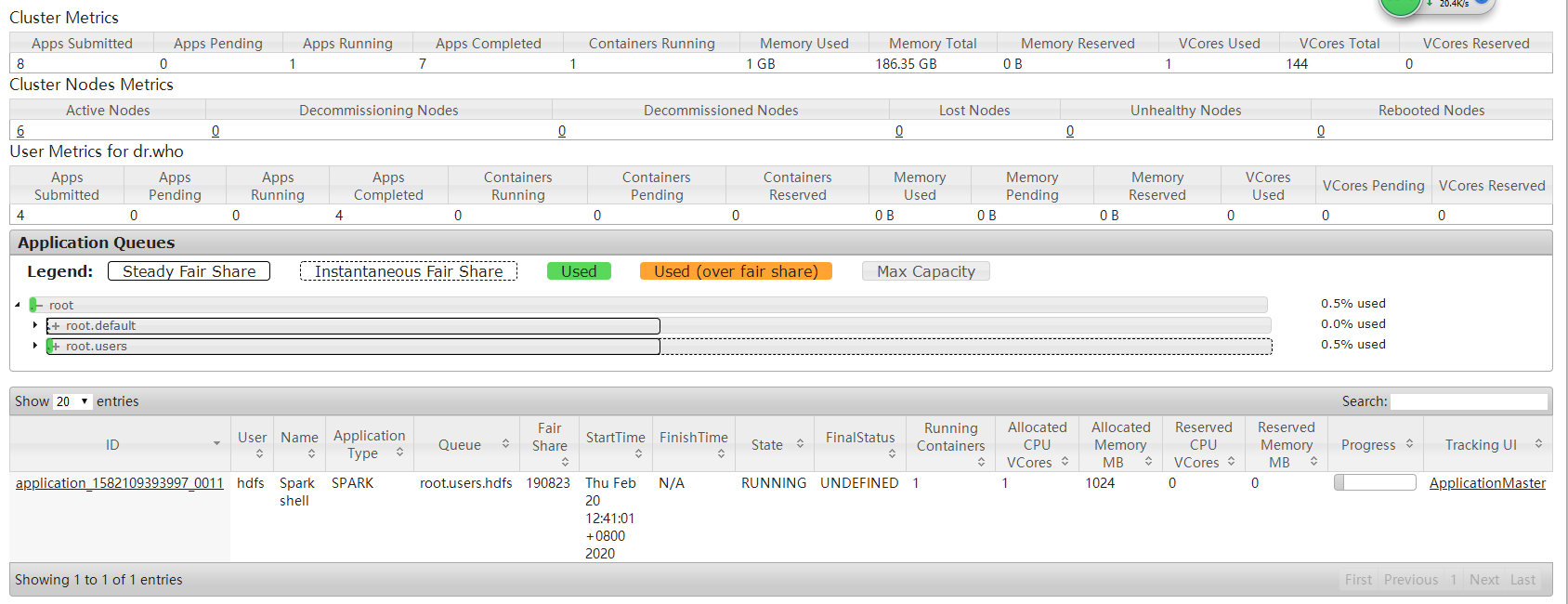

可以看到申请的只有1个executor(driver端的),暂时没有提交任务,最小申请为1个,

sc.textFile("file:///etc/hosts").flatMap(line => line.split(" ")).map(word => (word,1)).reduceByKey(_ + _).count()

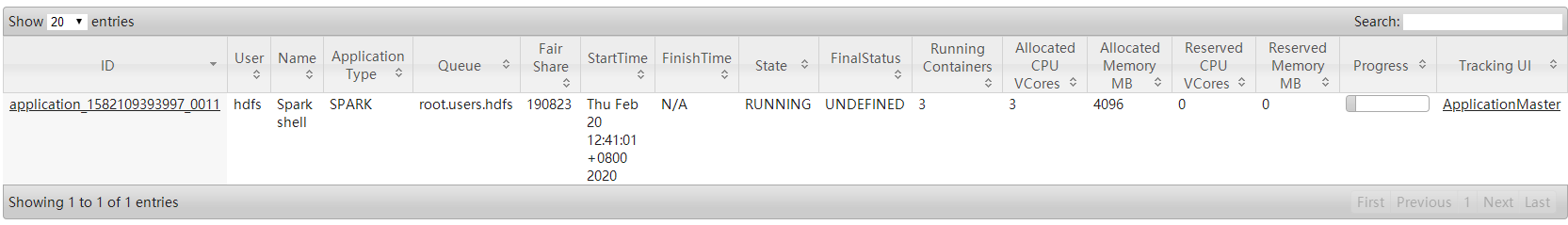

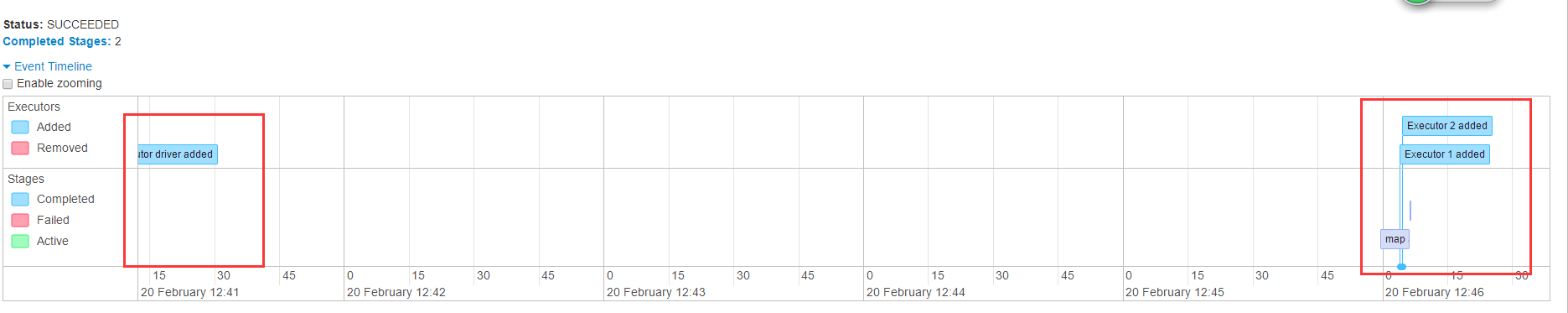

提交一个wrodcount程序跑一下,发现使用2个executor(1个driver),说明这种数量级的数据就2个就可以满足了,不需要开启更多的资源去空转,占用

4.动态资源的好处

1.多个部门去使用集群资源,有运行的任务时候申请资源,没有时将资源回收给yarn,供其他人使用

2.防止小数据申请大资源,造成资源浪费,executor空转

3.在进行流式处理时不建议开启,流式处理的数据量在不同时段是不同的,需要最大利用资源,从而提高消费速度,以免造成数据堆积,流式处理时如果一直去判断数据量的大小进行动态申请时,创建与销毁资源也需要时间,从而让流式处理造成了延迟