1. Preface

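Note: Hive requires an existing Hadoop cluster. It only needs to be installed on the cluster's NameNode machines (on every NameNode) and does not have to be installed on the DataNode machines. Also, although editing the configuration files does not require Hadoop to be running, this article uses hadoop commands, and Hadoop must be running normally before you execute them. Starting Hive requires a running Hadoop as well, so it is best to bring Hadoop up first and then follow the steps here. Installing and starting the Hadoop cluster itself is covered separately.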

Hive download: hive-2.3.4/

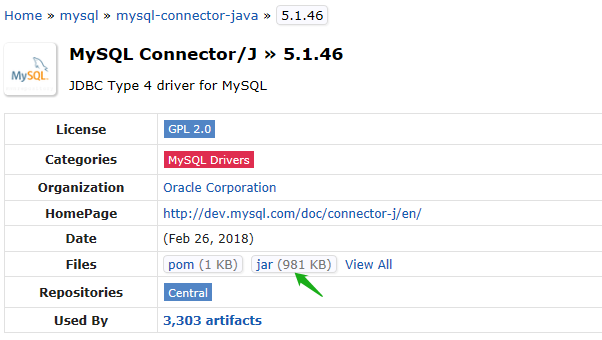

Download mysql-connector-java-5.1.46.jar

https://mvnrepository.com/artifact/mysql/mysql-connector-java/5.1.46

Upload both packages to /home/hadoop/hive

-rw-r--r--. 1 hadoop hadoop 232234292 Jan 6 06:49 apache-hive-2.3.4-bin.tar.gz

-rw-r--r--. 1 hadoop hadoop 1004838 Jan 6 06:49 mysql-connector-java-5.1.46.jar

Extract:

tar -xvf apache-hive-2.3.4-bin.tar.gz

mv apache-hive-2.3.4-bin hive

Then move mysql-connector-java-5.1.46.jar into the lib directory of the extracted Hive 2.3.4 (hive/lib)

Move the hive directory to /home/hadoop

Edit /etc/profile (vi /etc/profile) and add the following:

export HIVE_HOME=/home/hadoop/hive

export PATH=$PATH:$HIVE_HOME/bin

Apply the changes:

source /etc/profile
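To confirm that the profile change took effect, a small check like the following can help. It is only a sketch: `check_hive_env` is a hypothetical helper name, not part of Hive, and it assumes the two exports shown above.

```shell
# Hypothetical helper: verify that HIVE_HOME is set and that $HIVE_HOME/bin is on PATH.
check_hive_env() {
    if [ -z "${HIVE_HOME:-}" ]; then
        echo "HIVE_HOME is not set"
        return 1
    fi
    # Wrap PATH in colons so that the first and last entries match as well.
    case ":$PATH:" in
        *":$HIVE_HOME/bin:"*) echo "ok" ;;
        *) echo "$HIVE_HOME/bin is not on PATH"; return 1 ;;
    esac
}
```

Running `check_hive_env` in a new shell after `source /etc/profile` should print `ok` if both exports are in place.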

Modify the Hive configuration files (run these in /home/hadoop/hive/conf):

cp hive-env.sh.template hive-env.sh

cp hive-default.xml.template hive-site.xml

cp hive-log4j2.properties.template hive-log4j2.properties

cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties
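The four copies above can also be wrapped in a function that skips files which already exist, so re-running it does not overwrite an edited configuration. This is a minimal sketch; `copy_hive_templates` is a hypothetical name, and the default directory is the conf path used in this article.

```shell
# Hypothetical helper: copy each Hive template to its working name,
# leaving any file that already exists untouched.
copy_hive_templates() {
    conf_dir="${1:-/home/hadoop/hive/conf}"
    for name in hive-env.sh hive-log4j2.properties hive-exec-log4j2.properties; do
        if [ -f "$conf_dir/$name.template" ] && [ ! -e "$conf_dir/$name" ]; then
            cp "$conf_dir/$name.template" "$conf_dir/$name"
        fi
    done
    # hive-site.xml comes from hive-default.xml.template, so the names differ.
    if [ -f "$conf_dir/hive-default.xml.template" ] && [ ! -e "$conf_dir/hive-site.xml" ]; then
        cp "$conf_dir/hive-default.xml.template" "$conf_dir/hive-site.xml"
    fi
}
```

Calling `copy_hive_templates` with no argument works on /home/hadoop/hive/conf; passing another directory as the first argument overrides that.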

Edit hive-env.sh and add:

export JAVA_HOME=/usr/local/jdk1.8

export HADOOP_HOME=/home/hadoop/hadoop-2.7.3

export HIVE_HOME=/home/hadoop/hive

export HIVE_CONF_DIR=/home/hadoop/hive/conf

Create directories in HDFS to store Hive's files, and grant permissions on them

Start Hadoop first, then run:

hdfs dfs -mkdir -p /user/hive/warehouse

hdfs dfs -mkdir -p /user/hive/tmp

hdfs dfs -mkdir -p /user/hive/log

hdfs dfs -chmod -R 777 /user/hive/warehouse

hdfs dfs -chmod -R 777 /user/hive/tmp

hdfs dfs -chmod -R 777 /user/hive/log
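The six commands above follow one pattern, so they can be written as a loop. The sketch below assumes the same three paths; `create_hive_dirs` and the `HDFS_CMD` variable are hypothetical names introduced here (the variable only exists so the loop can be dry-run with `echo`), not Hadoop settings.

```shell
# Hypothetical helper: create the three Hive directories in HDFS and open their permissions.
# HDFS_CMD is an illustrative override hook; it defaults to the real hdfs CLI.
create_hive_dirs() {
    hdfs_cmd="${HDFS_CMD:-hdfs}"
    for dir in /user/hive/warehouse /user/hive/tmp /user/hive/log; do
        $hdfs_cmd dfs -mkdir -p "$dir" || return 1
        $hdfs_cmd dfs -chmod -R 777 "$dir" || return 1
    done
}
```

Setting `HDFS_CMD=echo` before calling `create_hive_dirs` prints the commands instead of running them, which is a cheap way to review them first.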

Verify:

[hadoop@master conf]$ hdfs dfs -ls /user/hive

Found 3 items

drwxrwxrwx - hadoop supergroup 0 2019-01-06 07:09 /user/hive/log

drwxrwxrwx - hadoop supergroup 0 2019-01-06 07:09 /user/hive/tmp

drwxrwxrwx - hadoop supergroup 0 2019-01-06 07:09 /user/hive/warehouse

Edit hive-site.xml

<property>

<name>hive.exec.scratchdir</name>

<value>/user/hive/tmp</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/user/hive/log</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>mysql</value> <!-- the MySQL password -->

</property>
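Because hive-site.xml is XML, a literal & inside a value (for example the parameter separators in the JDBC URL) has to be written as &amp;amp;, otherwise the file will not parse. The following is a rough sketch of a check for that; `find_bare_ampersands` is a hypothetical helper name, and it only recognizes the five predefined XML entities plus numeric character references.

```shell
# Hypothetical helper: report lines that still contain a bare '&'.
# Valid entities are stripped first, so the printed lines show the text
# with those entities removed. Returns 0 if the file is clean, 1 otherwise.
find_bare_ampersands() {
    if sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9A-Fa-f]+);//g' "$1" | grep -n '&'; then
        return 1   # grep matched: at least one bare '&' remains
    fi
    return 0
}
```

For example, `find_bare_ampersands /home/hadoop/hive/conf/hive-site.xml` prints nothing and returns 0 when every ampersand is escaped.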

Create a local tmp directory and set its permissions:

mkdir -p /home/hadoop/apps/hive/tmp

chmod -R 777 /home/hadoop/apps/hive/tmp

In hive-site.xml, replace every ${system:java.io.tmpdir} with Hive's temporary directory /home/hadoop/apps/hive/tmp

Replace every ${system:user.name} with ${user.name}
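hive-site.xml contains many occurrences of these two placeholders, so editing them by hand is tedious. The two substitutions can be scripted with sed, roughly as below. This is a sketch: `fix_hive_site_vars` is a hypothetical name, and it assumes the target directory contains no `|` or `&` characters, since those are special in the sed expression.

```shell
# Hypothetical helper: rewrite the two placeholders in a hive-site.xml file.
#   $1 = path to hive-site.xml
#   $2 = local temporary directory (defaults to the one used in this article)
fix_hive_site_vars() {
    file="$1"
    tmp_dir="${2:-/home/hadoop/apps/hive/tmp}"
    # '|' is the sed delimiter because the replacement path contains '/'.
    # [$] matches a literal dollar sign without involving the shell.
    sed -e 's|[$]{system:java\.io\.tmpdir}|'"$tmp_dir"'|g' \
        -e 's|[$]{system:user\.name}|${user.name}|g' \
        "$file" > "$file.new" && mv "$file.new" "$file"
}
```

It is worth keeping a backup copy of hive-site.xml before running `fix_hive_site_vars /home/hadoop/hive/conf/hive-site.xml`.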

Initialize the metastore schema:

[hadoop@master conf]$ schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

The SLF4J messages are caused by duplicate binding jars on the classpath; delete one of the two jars:

[hadoop@master tmp]$ cd /home/hadoop/hive/lib/

[hadoop@master lib]$ ll log4j-slf4j-impl-2.6.2.jar

-rw-r--r--. 1 hadoop hadoop 22927 Jan 6 06:57 log4j-slf4j-impl-2.6.2.jar

[hadoop@master lib]$ rm -rf log4j-slf4j-impl-2.6.2.jar

[hadoop@master conf]$ schematool -dbType mysql -initSchema

Metastore connection URL: jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.mysql.sql

Initialization script completed

schemaTool completed
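In the output above, a successful run ends with the line "schemaTool completed". When the initialization is scripted, that line can be checked automatically. A minimal sketch, with `schema_init_ok` as a hypothetical helper name:

```shell
# Hypothetical helper: read schematool output on stdin and succeed only if
# it contains a line starting with "schemaTool completed".
schema_init_ok() {
    grep -q '^schemaTool completed'
}
```

One possible use is `schematool -dbType mysql -initSchema 2>&1 | schema_init_ok && echo "schema ready"`, which prints the message only when that final line appears.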

Starting Hive, method 1: the hive CLI

[hadoop@master conf]$ hive

which: no hbase in (/usr/local/jdk1.8/bin:/usr/local/jdk1.8/jre/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/home/hadoop/hive/bin:/home/hadoop/hadoop-2.7.3/sbin:/home/hadoop/hadoop-2.7.3/bin)

Logging initialized using configuration in file:/home/hadoop/hive/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

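Starting Hive, method 2:

Use beeline

Before using beeline, configure Hadoop's core-site.xml: add the following properties and save the file. The second "hadoop" in each property name is the user that HiveServer2 runs as.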

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

Start hiveserver2 first:

[hadoop@master conf]$ hiveserver2

which: no hbase in (/usr/local/jdk1.8/bin:/usr/local/jdk1.8/jre/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/home/hadoop/hive/bin:/home/hadoop/hadoop-2.7.3/sbin:/home/hadoop/hadoop-2.7.3/bin)

2019-01-06 09:59:09: Starting HiveServer2

Then start beeline and connect:

[hadoop@master hadoop]$ beeline

Beeline version 2.3.4 by Apache Hive

beeline> !connect jdbc:hive2://master:10000

Connecting to jdbc:hive2://master:10000

Enter username for jdbc:hive2://master:10000: hadoop

Enter password for jdbc:hive2://master:10000: ******

Connected to: Apache Hive (version 2.3.4)

Driver: Hive JDBC (version 2.3.4)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://master:10000> show databases;

+----------------+
| database_name  |
+----------------+
| default        |
+----------------+

1 row selected (1.196 seconds)

0: jdbc:hive2://master:10000>

Starting Hive, method 3: Web UI

(1) Configure the web UI

[hadoop@master conf]$ vi hive-site.xml

<property>

<name>hive.server2.webui.host</name>

<value>192.168.1.30</value> <!-- host IP -->

<description>The host address the HiveServer2 WebUI will listen on</description>

</property>

<property>

<name>hive.server2.webui.port</name>

<value>10002</value>

<description>The port the HiveServer2 WebUI will listen on. This can be set to 0 or a negative integer to disable the web UI</description>

</property>

(2) Remember: after changing Hive's hive-site.xml, you must restart the HiveServer2 service

[hadoop@master conf]$ hive --service hiveserver2 &

[1] 3731

[hadoop@master conf]$ which: no hbase in (/usr/local/jdk1.8/bin:/usr/local/jdk1.8/jre/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/home/hadoop/hive/bin:/home/hadoop/hadoop-2.7.3/sbin:/home/hadoop/hadoop-2.7.3/bin)

2019-01-08 10:38:40: Starting HiveServer2

......

(3) Open this URL in a browser: http://192.168.1.30:10002/

[hadoop@master bin]$ beeline

Beeline version 2.3.4 by Apache Hive

beeline> !connect jdbc:hive2://master:10000 hadoop hadoop org.apache.hive.jdbc.HiveDriver

Connecting to jdbc:hive2://master:10000

Connected to: Apache Hive (version 2.3.4)

Driver: Hive JDBC (version 2.3.4)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://master:10000> show databases;

OK

+----------------+
| database_name  |
+----------------+
| db_hive        |
| default        |
+----------------+

2 rows selected (1.555 seconds)

0: jdbc:hive2://master:10000> use db_hive;

OK

No rows affected (0.153 seconds)

0: jdbc:hive2://master:10000> show tables;

OK

+-----------+
| tab_name  |
+-----------+
| u2        |
| u4        |
+-----------+

2 rows selected (0.159 seconds)

0: jdbc:hive2://master:10000> select * from u2;

OK

+--------+----------+---------+-----------+---------+
| u2.id  | u2.name  | u2.age  | u2.month  | u2.day  |
+--------+----------+---------+-----------+---------+
| 1      | xm1      | 16      | 9         | 14      |
| 2      | xm2      | 18      | 9         | 14      |
| 3      | xm3      | 22      | 9         | 14      |
| 4      | xh4      | 20      | 9         | 14      |
| 5      | xh5      | 22      | 9         | 14      |
| 6      | xh6      | 23      | 9         | 14      |
| 7      | xh7      | 25      | 9         | 14      |
| 8      | xh8      | 28      | 9         | 14      |
| 9      | xh9      | 32      | 9         | 14      |
+--------+----------+---------+-----------+---------+

9 rows selected (2.672 seconds)

0: jdbc:hive2://master:10000>

[hadoop@master bin]$ beeline -u jdbc:hive2://master:10000/default

Connecting to jdbc:hive2://master:10000/default

Connected to: Apache Hive (version 2.3.4)

Driver: Hive JDBC (version 2.3.4)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.3.4 by Apache Hive

0: jdbc:hive2://master:10000/default> show databases;

OK

+----------------+
| database_name  |
+----------------+
| db_hive        |
| default        |
+----------------+

2 rows selected (0.208 seconds)
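The sessions above are interactive. For scripts, beeline can also run a single statement and exit, using its -u (JDBC URL), -n (user name) and -e (query) options. The wrapper below is a sketch under stated assumptions: `run_hive_query` and the `BEELINE_CMD` variable are hypothetical names introduced here for illustration, and the default URL and user are the ones used in this article.

```shell
# Hypothetical helper: run one HiveQL statement through beeline and exit.
#   $1 = statement, $2 = JDBC URL (optional), $3 = user name (optional)
# BEELINE_CMD is an illustrative override hook; it defaults to the real beeline CLI.
run_hive_query() {
    query="$1"
    url="${2:-jdbc:hive2://master:10000/default}"
    user="${3:-hadoop}"
    beeline_cmd="${BEELINE_CMD:-beeline}"
    $beeline_cmd -u "$url" -n "$user" -e "$query"
}
```

With HiveServer2 running, `run_hive_query "show databases;"` should list the same databases as the interactive session; setting `BEELINE_CMD=echo` first prints the command line without connecting.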

End.