1. Environment plan: three hosts

10.213.14.51/24, 10.213.14.52/24, 10.213.14.53/24: public network (client-facing)

172.140.140.11/22, 172.140.140.12/22, 172.140.140.13/22: cluster network (replication)

CentOS 7.3; Ceph version Luminous 12.2.4; ceph-deploy 2.0.0

2. Ceph architecture

3. Core Ceph concepts

- Monitors: A Ceph Monitor (ceph-mon) maintains maps of the cluster state, including the monitor map, manager map, the OSD map, and the CRUSH map. These maps are critical cluster state required for Ceph daemons to coordinate with each other. Monitors are also responsible for managing authentication between daemons and clients. At least three monitors are normally required for redundancy and high availability.

- Managers: A Ceph Manager daemon (ceph-mgr) is responsible for keeping track of runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load. The Ceph Manager daemons also host python-based plugins to manage and expose Ceph cluster information, including a web-based dashboard and REST API. At least two managers are normally required for high availability.

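This component mainly takes over and extends part of the monitor's functionality, reducing the load on the monitors so that the Ceph storage system can be managed more effectively.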

- Ceph OSDs: A Ceph OSD (object storage daemon, ceph-osd) stores data, handles data replication, recovery, rebalancing, and provides some monitoring information to Ceph Monitors and Managers by checking other Ceph OSD Daemons for a heartbeat. At least 3 Ceph OSDs are normally required for redundancy and high availability.

- MDSs: A Ceph Metadata Server (MDS, ceph-mds) stores metadata on behalf of the Ceph Filesystem (i.e., Ceph Block Devices and Ceph Object Storage do not use MDS). Ceph Metadata Servers allow POSIX file system users to execute basic commands (like ls, find, etc.) without placing an enormous burden on the Ceph Storage Cluster.

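4. Preparation

Set the hostname (ceph-1 is shown; use the matching name on each node) and make sure /etc/hosts resolves all three nodes:

hostnamectl set-hostname ceph-1

cat /etc/hosts

10.213.14.51 ceph-1

10.213.14.52 ceph-2

10.213.14.53 ceph-3

Install the EPEL repository (required on every host):

yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

Install ceph-deploy and NTP (the ceph-deploy tool only needs to be installed on one host):

yum install -y ceph-deploy ntp ntpdate ntp-doc

systemctl start ntpd

systemctl enable ntpd

Set up passwordless (key-based) SSH login between the hosts.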
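The preparation steps above mention the /etc/hosts entries and passwordless SSH without showing the commands. A minimal sketch follows, assuming the root account is used on every node (as the prompts in this walkthrough suggest). The function names `add_host_entry` and `setup_passwordless_ssh` are hypothetical helpers; `ssh-keygen` and `ssh-copy-id` are the standard OpenSSH tools.

```shell
#!/bin/sh
# Hypothetical helpers for the preparation step; adjust users and paths
# to your environment before running anything against real hosts.

# Append "IP hostname" to a hosts file unless the hostname is already listed.
# $1 = hosts file, $2 = IP address, $3 = hostname
add_host_entry() {
    if ! grep -qE "[[:space:]]$3([[:space:]]|\$)" "$1" 2>/dev/null; then
        printf '%s %s\n' "$2" "$3" >> "$1"
    fi
}

# Create an RSA key pair without a passphrase (if none exists yet) and
# copy the public key to root on every host given as an argument.
setup_passwordless_ssh() {
    [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
    for host in "$@"; do
        ssh-copy-id "root@$host"
    done
}

# Example usage (commented out because it changes system state):
#   add_host_entry /etc/hosts 10.213.14.51 ceph-1
#   add_host_entry /etc/hosts 10.213.14.52 ceph-2
#   add_host_entry /etc/hosts 10.213.14.53 ceph-3
#   setup_passwordless_ssh ceph-1 ceph-2 ceph-3
```

`ssh-copy-id` prompts once for each host's password; after that, ceph-deploy can connect without interaction.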

[root@ceph-1 ceph]# cat /etc/yum.repos.d/ceph.repo

[ceph]

name=Ceph packages for $basearch

baseurl=https://download.ceph.com/rpm-luminous/el7/$basearch

enabled=1

gpgcheck=1

priority=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://download.ceph.com/rpm-luminous/el7/noarch

enabled=1

gpgcheck=1

priority=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=https://download.ceph.com/rpm-luminous/el7/SRPMS

enabled=0

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

export CEPH_DEPLOY_REPO_URL=https://download.ceph.com/rpm-luminous/el7/

export CEPH_DEPLOY_GPG_URL=https://download.ceph.com/keys/release.asc

5. Ceph cluster deployment

Create a working directory:

mkdir -p /root/ceph && cd /root/ceph

Point ceph-deploy at a mirror and create the new cluster definition:

[root@ceph-1 ceph]# export CEPH_DEPLOY_REPO_URL=http://mirrors.163.com/ceph/rpm-luminous/el7

[root@ceph-1 ceph]# export CEPH_DEPLOY_GPG_URL=http://mirrors.163.com/ceph/keys/release.asc

[root@ceph-1 ceph]# ceph-deploy new ceph-{1,2,3}

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /bin/ceph-deploy new ceph-1 ceph-2 ceph-3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x23eaaa0>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x244f320>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: /usr/sbin/ip link show

[ceph-1][INFO ] Running command: /usr/sbin/ip addr show

[ceph-1][DEBUG ] IP addresses found: [u'10.213.14.51', u'172.17.0.1', u'172.140.140.11', u'10.50.50.13']

[ceph_deploy.new][DEBUG ] Resolving host ceph-1

[ceph_deploy.new][DEBUG ] Monitor ceph-1 at 10.213.14.51

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-2][DEBUG ] connected to host: ceph-1

[ceph-2][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-2

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] find the location of an executable

[ceph-2][INFO ] Running command: /usr/sbin/ip link show

[ceph-2][INFO ] Running command: /usr/sbin/ip addr show

[ceph-2][DEBUG ] IP addresses found: [u'10.213.14.52', u'172.17.0.1', u'172.140.140.13', u'10.50.50.12']

[ceph_deploy.new][DEBUG ] Resolving host ceph-2

[ceph_deploy.new][DEBUG ] Monitor ceph-2 at 10.213.14.52

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-3][DEBUG ] connected to host: ceph-1

[ceph-3][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-3

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] find the location of an executable

[ceph-3][INFO ] Running command: /usr/sbin/ip link show

[ceph-3][INFO ] Running command: /usr/sbin/ip addr show

[ceph-3][DEBUG ] IP addresses found: [u'172.140.140.15', u'172.17.0.1', u'10.213.14.53']

[ceph_deploy.new][DEBUG ] Resolving host ceph-3

[ceph_deploy.new][DEBUG ] Monitor ceph-3 at 10.213.14.53

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['10.213.14.51', '10.213.14.52', '10.213.14.53']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

[root@ceph-1 ceph]# ll

total 20

-rw-r--r-- 1 root root 238 Apr 17 11:07 ceph.conf

-rw-r--r-- 1 root root 10605 Apr 17 11:07 ceph-deploy-ceph.log

-rw------- 1 root root 73 Apr 17 11:07 ceph.mon.keyring

Edit ceph.conf and add the two network settings:

vim ceph.conf

public network = 10.213.14.0/24

cluster network = 172.140.140.0/22

The public network is the one that serves clients; the cluster network carries replication and other traffic internal to the cluster.
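The ceph.conf edit above can also be scripted instead of done in vim. The sketch below is one way to do it, assuming the generated ceph.conf contains only a `[global]` section so that appended lines land there; the function name `set_ceph_network` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: add the network settings to ceph.conf once.
# Assumes the file ends inside the [global] section.
# $1 = path to ceph.conf, $2 = public network CIDR, $3 = cluster network CIDR
set_ceph_network() {
    # Skip a setting that is already present (space or underscore form).
    grep -q '^public[ _]network' "$1" || echo "public network = $2" >> "$1"
    grep -q '^cluster[ _]network' "$1" || echo "cluster network = $3" >> "$1"
}

# Example usage from the working directory (commented out on purpose):
#   set_ceph_network ./ceph.conf 10.213.14.0/24 172.140.140.0/22
```

The option dump in the log lists `public_network` and `cluster_network`, so the same values can probably be supplied when creating the cluster, for example `ceph-deploy new --public-network 10.213.14.0/24 --cluster-network 172.140.140.0/22 ceph-{1,2,3}`. Treat that invocation as an untested alternative.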

Install the Ceph packages (partial output shown below):

[root@ceph-1 ceph]# ceph-deploy install ceph-1 ceph-2 ceph-3

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /usr/bin/ceph-deploy install ceph-1 ceph-2 ceph-3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] testing : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x2822d40>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] dev_commit : None

[ceph_deploy.cli][INFO ] install_mds : False

[ceph_deploy.cli][INFO ] stable : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] adjust_repos : True

[ceph_deploy.cli][INFO ] func : <function install at 0x278e2a8>

[ceph_deploy.cli][INFO ] install_mgr : False

[ceph_deploy.cli][INFO ] install_all : False

[ceph_deploy.cli][INFO ] repo : False

[ceph_deploy.cli][INFO ] host : ['ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.cli][INFO ] install_rgw : False

[ceph_deploy.cli][INFO ] install_tests : False

[ceph_deploy.cli][INFO ] repo_url : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] install_osd : False

[ceph_deploy.cli][INFO ] version_kind : stable

[ceph_deploy.cli][INFO ] install_common : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] dev : master

[ceph_deploy.cli][INFO ] nogpgcheck : False

[ceph_deploy.cli][INFO ] local_mirror : None

[ceph_deploy.cli][INFO ] release : None

[ceph_deploy.cli][INFO ] install_mon : False

[ceph_deploy.cli][INFO ] gpg_url : None

[ceph_deploy.install][DEBUG ] Installing stable version jewel on cluster ceph hosts ceph-1 ceph-2 ceph-3

[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-1 ...

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph_deploy.install][INFO ] Distro info: CentOS Linux 7.3.1611 Core

[ceph-1][INFO ] installing Ceph on ceph-1

[ceph-1][INFO ] using custom repository location: https://download.ceph.com/rpm-luminous/el7/

[ceph-1][INFO ] Running command: yum clean all

[ceph-1][DEBUG ] Loaded plugins: fastestmirror, priorities

[ceph-1][DEBUG ] Cleaning repos: base ceph ceph-noarch epel extras updates

[ceph-1][DEBUG ] Cleaning up everything

[ceph-1][DEBUG ] Cleaning up list of fastest mirrors

[ceph-1][INFO ] Running command: rpm --import https://download.ceph.com/keys/release.asc

[ceph-1][DEBUG ] add yum repo file in /etc/yum.repos.d/

[ceph-1][INFO ] Running command: yum -y install yum-plugin-priorities

[ceph-1][DEBUG ] Loaded plugins: fastestmirror, priorities

[ceph-2][DEBUG ] Replaced:

[ceph-2][DEBUG ] libcephfs1.x86_64 1:10.2.10-0.el7 rdma.noarch 0:7.3_4.7_rc2-5.el7

[ceph-2][DEBUG ]

[ceph-2][DEBUG ] Complete!

[ceph-2][INFO ] Running command: ceph --version

[ceph-2][DEBUG ] ceph version 12.2.4 (52085d5249a80c5f5121a76d6288429f35e4e77b) luminous (stable)

[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-3 ...

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

[ceph-3][DEBUG ] detect machine type

[ceph_deploy.install][INFO ] Distro info: CentOS Linux 7.3.1611 Core

[ceph-3][INFO ] installing Ceph on ceph-3

[ceph-3][INFO ] using custom repository location: https://download.ceph.com/rpm-luminous/el7/

[ceph-3][INFO ] Running command: yum clean all

[ceph-3][DEBUG ] Loaded plugins: fastestmirror, priorities

[ceph-3][DEBUG ] Cleaning repos: base epel extras updates

[ceph-3][DEBUG ] Cleaning up everything

[ceph-3][INFO ] Running command: rpm --import https://download.ceph.com/keys/release.asc

[ceph-3][DEBUG ] add yum repo file in /etc/yum.repos.d/

[ceph-3][INFO ] Running command: yum -y install yum-plugin-priorities

[ceph-3][DEBUG ] Loaded plugins: fastestmirror, priorities

[ceph-3][DEBUG ] Dependencies Resolved

[ceph-3][DEBUG ]

[ceph-3][DEBUG ] ================================================================================

[ceph-3][DEBUG ] Package Arch Version Repository Size

[ceph-3][DEBUG ] ================================================================================

[ceph-3][DEBUG ] Installing:

[ceph-3][DEBUG ] ceph x86_64 2:12.2.4-0.el7 ceph 3.0 k

[ceph-3][DEBUG ] ceph-radosgw x86_64 2:12.2.4-0.el7 ceph 3.7 M

[ceph-3][DEBUG ] Installing for dependencies:

[ceph-3][DEBUG ] ceph-base x86_64 2:12.2.4-0.el7 ceph 3.9 M

[ceph-3][DEBUG ] ceph-common x86_64 2:12.2.4-0.el7 ceph 15 M

[ceph-3][DEBUG ] ceph-mds x86_64 2:12.2.4-0.el7 ceph 3.5 M

[ceph-3][DEBUG ] ceph-mgr x86_64 2:12.2.4-0.el7 ceph 3.6 M

[ceph-3][DEBUG ] ceph-mon x86_64 2:12.2.4-0.el7 ceph 5.0 M

[ceph-3][DEBUG ] ceph-osd x86_64 2:12.2.4-0.el7 ceph 13 M

[ceph-3][DEBUG ] ceph-selinux x86_64 2:12.2.4-0.el7 ceph 20 k

[ceph-3][DEBUG ]

[ceph-3][DEBUG ] Transaction Summary

[ceph-3][DEBUG ] ================================================================================

[ceph-3][DEBUG ] Install 2 Packages (+7 Dependent packages)

[ceph-3][DEBUG ]

[ceph-3][DEBUG ] Total download size: 47 M

[ceph-3][DEBUG ] Installed size: 161 M

[ceph-3][DEBUG ] Downloading packages:

[ceph-3][DEBUG ] Installed:

[ceph-3][DEBUG ] ceph.x86_64 2:12.2.4-0.el7 ceph-radosgw.x86_64 2:12.2.4-0.el7

[ceph-3][DEBUG ]

[ceph-3][DEBUG ] Dependency Installed:

[ceph-3][DEBUG ] ceph-base.x86_64 2:12.2.4-0.el7 ceph-common.x86_64 2:12.2.4-0.el7

[ceph-3][DEBUG ] ceph-mds.x86_64 2:12.2.4-0.el7 ceph-mgr.x86_64 2:12.2.4-0.el7

[ceph-3][DEBUG ] ceph-mon.x86_64 2:12.2.4-0.el7 ceph-osd.x86_64 2:12.2.4-0.el7

[ceph-3][DEBUG ] ceph-selinux.x86_64 2:12.2.4-0.el7

[ceph-3][DEBUG ]

[ceph-3][DEBUG ] Complete!

[ceph-3][INFO ] Running command: ceph --version

[ceph-3][DEBUG ] ceph version 12.2.4 (52085d5249a80c5f5121a76d6288429f35e4e77b) luminous (stable)
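The install log ends with `ceph --version` on each host. A small sketch for repeating that check across all nodes is shown below. It assumes passwordless SSH is already working and that the version string has the same shape as in the log above; `ceph_version_of` and `check_cluster_versions` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers: compare the installed Ceph version on each host
# against an expected value.

# Extract the version number (third field) from `ceph --version` output.
ceph_version_of() {
    printf '%s\n' "$1" | awk '{print $3}'
}

# $1 = expected version, remaining arguments = hostnames
check_cluster_versions() {
    expected=$1
    shift
    for host in "$@"; do
        found=$(ceph_version_of "$(ssh "$host" ceph --version)")
        if [ "$found" = "$expected" ]; then
            echo "$host: OK ($found)"
        else
            echo "$host: MISMATCH ($found)"
        fi
    done
}

# Example usage (needs SSH access to the nodes, so it is commented out):
#   check_cluster_versions 12.2.4 ceph-1 ceph-2 ceph-3
```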

Initialize the monitors and generate the keys

[root@ceph-1 ceph]# ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /usr/bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x1605830>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mon at 0x15f50c8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph-1 ceph-2 ceph-3

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-1 ...

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.3.1611 Core

[ceph-1][DEBUG ] determining if provided host has same hostname in remote

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] deploying mon to ceph-1

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] remote hostname: ceph-1

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-1][DEBUG ] create the mon path if it does not exist

[ceph-1][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-1/done

[ceph-1][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph-1/done

[ceph-1][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph-1.mon.keyring

[ceph-1][DEBUG ] create the monitor keyring file

[ceph-1][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i ceph-1 --keyring /var/lib/ceph/tmp/ceph-ceph-1.mon.keyring --setuser 167 --setgroup 167

[ceph-1][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph-1.mon.keyring

[ceph-1][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-1][DEBUG ] create the init path if it does not exist

[ceph-1][INFO ] Running command: systemctl enable ceph.target

[ceph-1][INFO ] Running command: systemctl enable ceph-mon@ceph-1

[ceph-1][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph-1.service to /usr/lib/systemd/system/ceph-mon@.service.

[ceph-1][INFO ] Running command: systemctl start ceph-mon@ceph-1

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph-1][DEBUG ] ********************************************************************************

[ceph-1][DEBUG ] status for monitor: mon.ceph-1

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "election_epoch": 0,

[ceph-1][DEBUG ] "extra_probe_peers": [

[ceph-1][DEBUG ] "10.213.14.52:6789/0",

[ceph-1][DEBUG ] "10.213.14.53:6789/0"

[ceph-1][DEBUG ] ],

[ceph-1][DEBUG ] "feature_map": {

[ceph-1][DEBUG ] "mon": {

[ceph-1][DEBUG ] "group": {

[ceph-1][DEBUG ] "features": "0x1ffddff8eea4fffb",

[ceph-1][DEBUG ] "num": 1,

[ceph-1][DEBUG ] "release": "luminous"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "features": {

[ceph-1][DEBUG ] "quorum_con": "0",

[ceph-1][DEBUG ] "quorum_mon": [],

[ceph-1][DEBUG ] "required_con": "0",

[ceph-1][DEBUG ] "required_mon": []

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "monmap": {

[ceph-1][DEBUG ] "created": "2018-04-17 21:42:59.721694",

[ceph-1][DEBUG ] "epoch": 0,

[ceph-1][DEBUG ] "features": {

[ceph-1][DEBUG ] "optional": [],

[ceph-1][DEBUG ] "persistent": []

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "fsid": "2acc281e-dcb1-49d2-abb3-efcec654ae8c",

[ceph-1][DEBUG ] "modified": "2018-04-17 21:42:59.721694",

[ceph-1][DEBUG ] "mons": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "10.213.14.51:6789/0",

[ceph-1][DEBUG ] "name": "ceph-1",

[ceph-1][DEBUG ] "public_addr": "10.213.14.51:6789/0",

[ceph-1][DEBUG ] "rank": 0

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "0.0.0.0:0/1",

[ceph-1][DEBUG ] "name": "ceph-2",

[ceph-1][DEBUG ] "public_addr": "0.0.0.0:0/1",

[ceph-1][DEBUG ] "rank": 1

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "0.0.0.0:0/2",

[ceph-1][DEBUG ] "name": "ceph-3",

[ceph-1][DEBUG ] "public_addr": "0.0.0.0:0/2",

[ceph-1][DEBUG ] "rank": 2

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "name": "ceph-1",

[ceph-1][DEBUG ] "outside_quorum": [

[ceph-1][DEBUG ] "ceph-1"

[ceph-1][DEBUG ] ],

[ceph-1][DEBUG ] "quorum": [],

[ceph-1][DEBUG ] "rank": 0,

[ceph-1][DEBUG ] "state": "probing",

[ceph-1][DEBUG ] "sync_provider": []

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ********************************************************************************

[ceph-1][INFO ] monitor: mon.ceph-1 is running

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-2 ...

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.3.1611 Core

[ceph-2][DEBUG ] determining if provided host has same hostname in remote

[ceph-2][DEBUG ] get remote short hostname

[ceph-2][DEBUG ] deploying mon to ceph-2

[ceph-2][DEBUG ] get remote short hostname

[ceph-2][DEBUG ] remote hostname: ceph-2

[ceph-2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-2][DEBUG ] create the mon path if it does not exist

[ceph-2][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-2/done

[ceph-2][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph-2/done

[ceph-2][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph-2.mon.keyring

[ceph-2][DEBUG ] create the monitor keyring file

[ceph-2][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i ceph-2 --keyring /var/lib/ceph/tmp/ceph-ceph-2.mon.keyring --setuser 167 --setgroup 167

[ceph-2][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph-2.mon.keyring

[ceph-2][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-2][DEBUG ] create the init path if it does not exist

[ceph-2][INFO ] Running command: systemctl enable ceph.target

[ceph-2][INFO ] Running command: systemctl enable ceph-mon@ceph-2

[ceph-2][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph-2.service to /usr/lib/systemd/system/ceph-mon@.service.

[ceph-2][INFO ] Running command: systemctl start ceph-mon@ceph-2

[ceph-2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-2.asok mon_status

[ceph-2][DEBUG ] ********************************************************************************

[ceph-2][DEBUG ] status for monitor: mon.ceph-2

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "election_epoch": 0,

[ceph-2][DEBUG ] "extra_probe_peers": [

[ceph-2][DEBUG ] "10.213.14.51:6789/0",

[ceph-2][DEBUG ] "10.213.14.53:6789/0"

[ceph-2][DEBUG ] ],

[ceph-2][DEBUG ] "feature_map": {

[ceph-2][DEBUG ] "mon": {

[ceph-2][DEBUG ] "group": {

[ceph-2][DEBUG ] "features": "0x1ffddff8eea4fffb",

[ceph-2][DEBUG ] "num": 1,

[ceph-2][DEBUG ] "release": "luminous"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "features": {

[ceph-2][DEBUG ] "quorum_con": "0",

[ceph-2][DEBUG ] "quorum_mon": [],

[ceph-2][DEBUG ] "required_con": "0",

[ceph-2][DEBUG ] "required_mon": []

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "monmap": {

[ceph-2][DEBUG ] "created": "2018-04-17 21:43:02.790329",

[ceph-2][DEBUG ] "epoch": 0,

[ceph-2][DEBUG ] "features": {

[ceph-2][DEBUG ] "optional": [],

[ceph-2][DEBUG ] "persistent": []

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "fsid": "2acc281e-dcb1-49d2-abb3-efcec654ae8c",

[ceph-2][DEBUG ] "modified": "2018-04-17 21:43:02.790329",

[ceph-2][DEBUG ] "mons": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "10.213.14.52:6789/0",

[ceph-2][DEBUG ] "name": "ceph-2",

[ceph-2][DEBUG ] "public_addr": "10.213.14.52:6789/0",

[ceph-2][DEBUG ] "rank": 0

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "0.0.0.0:0/1",

[ceph-2][DEBUG ] "name": "ceph-1",

[ceph-2][DEBUG ] "public_addr": "0.0.0.0:0/1",

[ceph-2][DEBUG ] "rank": 1

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "0.0.0.0:0/2",

[ceph-2][DEBUG ] "name": "ceph-3",

[ceph-2][DEBUG ] "public_addr": "0.0.0.0:0/2",

[ceph-2][DEBUG ] "rank": 2

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "name": "ceph-2",

[ceph-2][DEBUG ] "outside_quorum": [

[ceph-2][DEBUG ] "ceph-2"

[ceph-2][DEBUG ] ],

[ceph-2][DEBUG ] "quorum": [],

[ceph-2][DEBUG ] "rank": 0,

[ceph-2][DEBUG ] "state": "probing",

[ceph-2][DEBUG ] "sync_provider": []

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ********************************************************************************

[ceph-2][INFO ] monitor: mon.ceph-2 is running

[ceph-2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-2.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-3 ...

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.3.1611 Core

[ceph-3][DEBUG ] determining if provided host has same hostname in remote

[ceph-3][DEBUG ] get remote short hostname

[ceph-3][DEBUG ] deploying mon to ceph-3

[ceph-3][DEBUG ] get remote short hostname

[ceph-3][DEBUG ] remote hostname: ceph-3

[ceph-3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-3][DEBUG ] create the mon path if it does not exist

[ceph-3][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-3/done

[ceph-3][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph-3/done

[ceph-3][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph-3.mon.keyring

[ceph-3][DEBUG ] create the monitor keyring file

[ceph-3][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i ceph-3 --keyring /var/lib/ceph/tmp/ceph-ceph-3.mon.keyring --setuser 167 --setgroup 167

[ceph-3][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph-3.mon.keyring

[ceph-3][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-3][DEBUG ] create the init path if it does not exist

[ceph-3][INFO ] Running command: systemctl enable ceph.target

[ceph-3][INFO ] Running command: systemctl enable ceph-mon@ceph-3

[ceph-3][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph-3.service to /usr/lib/systemd/system/ceph-mon@.service.

[ceph-3][INFO ] Running command: systemctl start ceph-mon@ceph-3

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[ceph-3][DEBUG ] ********************************************************************************

[ceph-3][DEBUG ] status for monitor: mon.ceph-3

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "election_epoch": 1,

[ceph-3][DEBUG ] "extra_probe_peers": [

[ceph-3][DEBUG ] "10.213.14.51:6789/0",

[ceph-3][DEBUG ] "10.213.14.52:6789/0"

[ceph-3][DEBUG ] ],

[ceph-3][DEBUG ] "feature_map": {

[ceph-3][DEBUG ] "mon": {

[ceph-3][DEBUG ] "group": {

[ceph-3][DEBUG ] "features": "0x1ffddff8eea4fffb",

[ceph-3][DEBUG ] "num": 1,

[ceph-3][DEBUG ] "release": "luminous"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "features": {

[ceph-3][DEBUG ] "quorum_con": "0",

[ceph-3][DEBUG ] "quorum_mon": [],

[ceph-3][DEBUG ] "required_con": "0",

[ceph-3][DEBUG ] "required_mon": []

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "monmap": {

[ceph-3][DEBUG ] "created": "2018-04-17 21:43:05.838595",

[ceph-3][DEBUG ] "epoch": 0,

[ceph-3][DEBUG ] "features": {

[ceph-3][DEBUG ] "optional": [],

[ceph-3][DEBUG ] "persistent": []

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "fsid": "2acc281e-dcb1-49d2-abb3-efcec654ae8c",

[ceph-3][DEBUG ] "modified": "2018-04-17 21:43:05.838595",

[ceph-3][DEBUG ] "mons": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "10.213.14.51:6789/0",

[ceph-3][DEBUG ] "name": "ceph-1",

[ceph-3][DEBUG ] "public_addr": "10.213.14.51:6789/0",

[ceph-3][DEBUG ] "rank": 0

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "10.213.14.52:6789/0",

[ceph-3][DEBUG ] "name": "ceph-2",

[ceph-3][DEBUG ] "public_addr": "10.213.14.52:6789/0",

[ceph-3][DEBUG ] "rank": 1

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "10.213.14.53:6789/0",

[ceph-3][DEBUG ] "name": "ceph-3",

[ceph-3][DEBUG ] "public_addr": "10.213.14.53:6789/0",

[ceph-3][DEBUG ] "rank": 2

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "name": "ceph-3",

[ceph-3][DEBUG ] "outside_quorum": [],

[ceph-3][DEBUG ] "quorum": [],

[ceph-3][DEBUG ] "rank": 2,

[ceph-3][DEBUG ] "state": "electing",

[ceph-3][DEBUG ] "sync_provider": []

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ********************************************************************************

[ceph-3][INFO ] monitor: mon.ceph-3 is running

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[ceph_deploy.mon][INFO ] processing monitor mon.ceph-1

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph_deploy.mon][WARNIN] mon.ceph-1 monitor is not yet in quorum, tries left: 5

[ceph_deploy.mon][WARNIN] waiting 5 seconds before retrying

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph_deploy.mon][INFO ] mon.ceph-1 monitor has reached quorum!

[ceph_deploy.mon][INFO ] processing monitor mon.ceph-2

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] find the location of an executable

[ceph-2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-2.asok mon_status

[ceph_deploy.mon][INFO ] mon.ceph-2 monitor has reached quorum!

[ceph_deploy.mon][INFO ] processing monitor mon.ceph-3

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] find the location of an executable

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[ceph_deploy.mon][INFO ] mon.ceph-3 monitor has reached quorum!

[ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum

[ceph_deploy.mon][INFO ] Running gatherkeys...

[ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmpNUhCCs

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] fetch remote file

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get client.admin

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get-or-create client.admin osd allow * mds allow * mon allow * mgr allow *

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get client.bootstrap-mds

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get-or-create client.bootstrap-mds mon allow profile bootstrap-mds

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get client.bootstrap-mgr

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get-or-create client.bootstrap-mgr mon allow profile bootstrap-mgr

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get client.bootstrap-osd

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get-or-create client.bootstrap-osd mon allow profile bootstrap-osd

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get client.bootstrap-rgw

[ceph-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-1/keyring auth get-or-create client.bootstrap-rgw mon allow profile bootstrap-rgw

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpNUhCCs
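The log above shows ceph-deploy polling each monitor's admin socket with `mon_status` until it reports quorum. The same check can be sketched by hand as follows. It assumes the JSON output contains a `"state"` field as in the log, and that a monitor counts as in quorum when its state is `leader` or `peon`. The function names are hypothetical, and the commands must run on the host where the monitor lives.

```shell
#!/bin/sh
# Hypothetical helpers that mirror the quorum polling seen in the log.

# Print the "state" value reported by a monitor's admin socket.
# $1 = monitor name, e.g. ceph-1
mon_state() {
    ceph --cluster=ceph --admin-daemon "/var/run/ceph/ceph-mon.$1.asok" mon_status |
        sed -n 's/.*"state": *"\([a-z]*\)".*/\1/p' | head -n 1
}

# Succeed if the monitor is a quorum member (leader or peon).
mon_in_quorum() {
    case "$(mon_state "$1")" in
        leader|peon) return 0 ;;
        *) return 1 ;;
    esac
}

# Poll until the monitor joins quorum or the tries run out.
# $1 = monitor name, $2 = number of tries, $3 = seconds between tries
wait_for_quorum() {
    tries=$2
    while [ "$tries" -gt 0 ]; do
        mon_in_quorum "$1" && return 0
        tries=$((tries - 1))
        sleep "$3"
    done
    return 1
}

# Example usage on ceph-1 (commented out; requires a running monitor):
#   wait_for_quorum ceph-1 5 5 && echo "mon.ceph-1 is in quorum"
```

States such as `probing` and `electing`, which appear in the log right after the monitors start, mean the monitor has not joined quorum yet.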

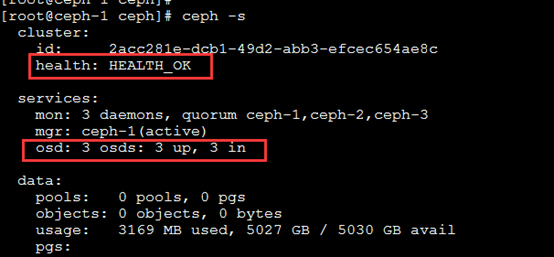

[root@ceph-1 ceph]# ceph -s

2018-04-17 21:47:38.302260 7f868a4e5700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

2018-04-17 21:47:38.302272 7f868a4e5700 -1 monclient: ERROR: missing keyring, cannot use cephx for authentication

2018-04-17 21:47:38.302274 7f868a4e5700 0 librados: client.admin initialization error (2) No such file or directory

[errno 2] error connecting to the cluster

Fix: push the admin keyring and configuration to every node:

[root@ceph-1 ceph]# ceph-deploy admin ceph-1 ceph-2 ceph-3

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /usr/bin/ceph-deploy admin ceph-1 ceph-2 ceph-3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x141ec20>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.cli][INFO ] func : <function admin at 0x1377e60>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-1

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-2

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-3

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[root@ceph-1 ceph]# ceph -s

cluster:

  id: 2acc281e-dcb1-49d2-abb3-efcec654ae8c

  health: HEALTH_OK

services:

  mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3

  mgr: no daemons active

  osd: 0 osds: 0 up, 0 in

data:

  pools: 0 pools, 0 pgs

  objects: 0 objects, 0 bytes

  usage: 0 kB used, 0 kB / 0 kB avail

  pgs:
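The error above comes down to a missing admin keyring in /etc/ceph. As a quick pre-check before running `ceph -s`, something like the following sketch could be used. The helper name `check_admin_keyring` is hypothetical; the path is the first one listed in the error message.

```shell
#!/bin/sh
# Report whether the admin keyring that `ceph -s` looks for is present
# and readable. The directory defaults to /etc/ceph.
# check_admin_keyring is a hypothetical helper, not part of Ceph.
check_admin_keyring() {
    conf_dir="${1:-/etc/ceph}"
    keyring="$conf_dir/ceph.client.admin.keyring"
    if [ -r "$keyring" ]; then
        echo "ok: $keyring"
    else
        echo "missing: $keyring (push it with: ceph-deploy admin <host>...)"
        return 1
    fi
}
```

Calling `check_admin_keyring` with no argument on each node shows whether `ceph-deploy admin` still needs to be run for that node.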

6. Create the OSDs

Create the OSDs. Note: partition the disk first and pass the partition, not the raw disk, to --data. (For now each node uses one disk, backing one OSD.)

[root@ceph-1 ceph]# ceph-deploy osd create --data /dev/sde1 ceph-1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /usr/bin/ceph-deploy osd create --data /dev/sde1 ceph-1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2022216c68>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-1

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f20221f9578>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/sde1

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sde1

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.3.1611 Core

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-1

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sde1

[ceph-1][DEBUG ] Running command: ceph-authtool --gen-print-key

[ceph-1][DEBUG ] Running command: ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new e2778b0e-0965-4d3c-9ff9-3f126db4b0d6

[ceph-1][DEBUG ] Running command: vgcreate --force --yes ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c /dev/sde1

[ceph-1][DEBUG ] stdout: Wiping xfs signature on /dev/sde1.

[ceph-1][DEBUG ] stdout: Physical volume "/dev/sde1" successfully created.

[ceph-1][DEBUG ] stdout: Volume group "ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c" successfully created

[ceph-1][DEBUG ] Running command: lvcreate --yes -l 100%FREE -n osd-block-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6 ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c

[ceph-1][DEBUG ] stdout: Logical volume "osd-block-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6" created.

[ceph-1][DEBUG ] Running command: ceph-authtool --gen-print-key

[ceph-1][DEBUG ] Running command: mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0

[ceph-1][DEBUG ] Running command: chown -R ceph:ceph /dev/dm-0

[ceph-1][DEBUG ] Running command: ln -s /dev/ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c/osd-block-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6 /var/lib/ceph/osd/ceph-0/block

[ceph-1][DEBUG ] Running command: ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap

[ceph-1][DEBUG ] stderr: got monmap epoch 1

[ceph-1][DEBUG ] Running command: ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQB7stZarid9ExAAr4aIn6HZssfI6VWb/fs88Q==

[ceph-1][DEBUG ] stdout: creating /var/lib/ceph/osd/ceph-0/keyring

[ceph-1][DEBUG ] stdout: added entity osd.0 auth auth(auid = 18446744073709551615 key=AQB7stZarid9ExAAr4aIn6HZssfI6VWb/fs88Q== with 0 caps)

[ceph-1][DEBUG ] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring

[ceph-1][DEBUG ] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/

[ceph-1][DEBUG ] Running command: ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid e2778b0e-0965-4d3c-9ff9-3f126db4b0d6 --setuser ceph --setgroup ceph

[ceph-1][DEBUG ] --> ceph-volume lvm prepare successful for: /dev/sde1

[ceph-1][DEBUG ] Running command: ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c/osd-block-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6 --path /var/lib/ceph/osd/ceph-0

[ceph-1][DEBUG ] Running command: ln -snf /dev/ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c/osd-block-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6 /var/lib/ceph/osd/ceph-0/block

[ceph-1][DEBUG ] Running command: chown -R ceph:ceph /dev/dm-0

[ceph-1][DEBUG ] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[ceph-1][DEBUG ] Running command: systemctl enable ceph-volume@lvm-0-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6

[ceph-1][DEBUG ] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6.service to /usr/lib/systemd/system/ceph-volume@.service.

[ceph-1][DEBUG ] Running command: systemctl start ceph-osd@0

[ceph-1][DEBUG ] --> ceph-volume lvm activate successful for osd ID: 0

[ceph-1][DEBUG ] --> ceph-volume lvm activate successful for osd ID: None

[ceph-1][DEBUG ] --> ceph-volume lvm create successful for: /dev/sde1

[ceph-1][INFO ] checking OSD status...

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-1 is now ready for osd use.

[root@ceph-1 ceph]# ll /var/lib/ceph/osd/

total 8

drwxrwxrwt 2 ceph ceph 300 Apr 17 22:50 ceph-0

-rw-r--r-- 1 root root 5084 Apr 17 22:35 ceph-deploy-ceph.log

[root@ceph-1 ceph]# ll /var/lib/ceph/osd/ceph-0/

total 48

-rw-r--r-- 1 ceph ceph 402 Apr 17 22:50 activate.monmap

lrwxrwxrwx 1 ceph ceph 93 Apr 17 22:50 block -> /dev/ceph-2acc281e-dcb1-49d2-abb3-efcec654ae8c/osd-block-e2778b0e-0965-4d3c-9ff9-3f126db4b0d6

-rw-r--r-- 1 ceph ceph 2 Apr 17 22:50 bluefs

-rw-r--r-- 1 ceph ceph 37 Apr 17 22:50 ceph_fsid

-rw-r--r-- 1 ceph ceph 37 Apr 17 22:50 fsid

-rw------- 1 ceph ceph 55 Apr 17 22:50 keyring

-rw-r--r-- 1 ceph ceph 8 Apr 17 22:50 kv_backend

-rw-r--r-- 1 ceph ceph 21 Apr 17 22:50 magic

-rw-r--r-- 1 ceph ceph 4 Apr 17 22:50 mkfs_done

-rw-r--r-- 1 ceph ceph 41 Apr 17 22:50 osd_key

-rw-r--r-- 1 ceph ceph 6 Apr 17 22:50 ready

-rw-r--r-- 1 ceph ceph 10 Apr 17 22:50 type

-rw-r--r-- 1 ceph ceph 2 Apr 17 22:50 whoami

Then create the OSDs on the other two nodes:

[root@ceph-1 ceph]# ceph-deploy osd create --data /dev/sde1 ceph-2

[root@ceph-1 ceph]# ceph-deploy osd create --data /dev/sdf1 ceph-3
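The three `ceph-deploy osd create` calls differ only in host and device, so they can be generated from a list. The following is a minimal sketch; the helper name `osd_create_cmds` and the `host:device` argument format are hypothetical conveniences, and it only prints the commands so they can be reviewed before running.

```shell
#!/bin/sh
# Print one `ceph-deploy osd create` command per "host:device" pair.
# osd_create_cmds is a hypothetical helper; it runs nothing itself.
osd_create_cmds() {
    for pair in "$@"; do
        host="${pair%%:*}"   # text before the first colon
        dev="${pair#*:}"     # text after the first colon
        echo "ceph-deploy osd create --data $dev $host"
    done
}
```

For the layout in these notes the call would be `osd_create_cmds ceph-1:/dev/sde1 ceph-2:/dev/sde1 ceph-3:/dev/sdf1`. Piping the output to `sh` from the ceph-deploy working directory would execute it, and `ceph osd tree` afterwards should list the new OSDs.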

Create the mgr (on one node or on all three; either works). Required only for luminous+ builds, i.e. >= 12.x builds.

[root@ceph-1 ceph]# ceph-deploy mgr create ceph-1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /usr/bin/ceph-deploy mgr create ceph-1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('ceph-1', 'ceph-1')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7faadd01f0e0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7faadd8a1d70>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-1:ceph-1

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.3.1611 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-1

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-1][WARNIN] mgr keyring does not exist yet, creating one

[ceph-1][DEBUG ] create a keyring file

[ceph-1][DEBUG ] create path recursively if it doesn't exist

[ceph-1][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-1 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-1/keyring

[ceph-1][INFO ] Running command: systemctl enable ceph-mgr@ceph-1

[ceph-1][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-1.service to /usr/lib/systemd/system/ceph-mgr@.service.

[ceph-1][INFO ] Running command: systemctl start ceph-mgr@ceph-1

[ceph-1][INFO ] Running command: systemctl enable ceph.target

7. Create the mons

Create the mon:

[root@ceph-1 ceph]# ceph-deploy mon create ceph-1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.0): /usr/bin/ceph-deploy mon create ceph-1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ffb65203830>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['ceph-1']

[ceph_deploy.cli][INFO ] func : <function mon at 0x7ffb651f30c8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph-1

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-1 ...

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.3.1611 Core

[ceph-1][DEBUG ] determining if provided host has same hostname in remote

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] deploying mon to ceph-1

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] remote hostname: ceph-1

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-1][DEBUG ] create the mon path if it does not exist

[ceph-1][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-1/done

[ceph-1][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-1][DEBUG ] create the init path if it does not exist

[ceph-1][INFO ] Running command: systemctl enable ceph.target

[ceph-1][INFO ] Running command: systemctl enable ceph-mon@ceph-1

[ceph-1][INFO ] Running command: systemctl start ceph-mon@ceph-1

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph-1][DEBUG ] ********************************************************************************

[ceph-1][DEBUG ] status for monitor: mon.ceph-1

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ]   "election_epoch": 6,

[ceph-1][DEBUG ]   "extra_probe_peers": [

[ceph-1][DEBUG ]     "10.213.14.52:6789/0",

[ceph-1][DEBUG ]     "10.213.14.53:6789/0"

[ceph-1][DEBUG ]   ],

[ceph-1][DEBUG ]   "feature_map": {

[ceph-1][DEBUG ]     "client": {

[ceph-1][DEBUG ]       "group": {

[ceph-1][DEBUG ]         "features": "0x1ffddff8eea4fffb",

[ceph-1][DEBUG ]         "num": 1,

[ceph-1][DEBUG ]         "release": "luminous"

[ceph-1][DEBUG ]       }

[ceph-1][DEBUG ]     },

[ceph-1][DEBUG ]     "mon": {

[ceph-1][DEBUG ]       "group": {

[ceph-1][DEBUG ]         "features": "0x1ffddff8eea4fffb",

[ceph-1][DEBUG ]         "num": 1,

[ceph-1][DEBUG ]         "release": "luminous"

[ceph-1][DEBUG ]       }

[ceph-1][DEBUG ]     },

[ceph-1][DEBUG ]     "osd": {

[ceph-1][DEBUG ]       "group": {

[ceph-1][DEBUG ]         "features": "0x1ffddff8eea4fffb",

[ceph-1][DEBUG ]         "num": 1,

[ceph-1][DEBUG ]         "release": "luminous"

[ceph-1][DEBUG ]       }

[ceph-1][DEBUG ]     }

[ceph-1][DEBUG ]   },

[ceph-1][DEBUG ]   "features": {

[ceph-1][DEBUG ]     "quorum_con": "2305244844532236283",

[ceph-1][DEBUG ]     "quorum_mon": [

[ceph-1][DEBUG ]       "kraken",

[ceph-1][DEBUG ]       "luminous"

[ceph-1][DEBUG ]     ],

[ceph-1][DEBUG ]     "required_con": "153140804152475648",

[ceph-1][DEBUG ]     "required_mon": [

[ceph-1][DEBUG ]       "kraken",

[ceph-1][DEBUG ]       "luminous"

[ceph-1][DEBUG ]     ]

[ceph-1][DEBUG ]   },

[ceph-1][DEBUG ]   "monmap": {

[ceph-1][DEBUG ]     "created": "2018-04-17 21:42:59.721694",

[ceph-1][DEBUG ]     "epoch": 1,

[ceph-1][DEBUG ]     "features": {

[ceph-1][DEBUG ]       "optional": [],

[ceph-1][DEBUG ]       "persistent": [

[ceph-1][DEBUG ]         "kraken",

[ceph-1][DEBUG ]         "luminous"

[ceph-1][DEBUG ]       ]

[ceph-1][DEBUG ]     },

[ceph-1][DEBUG ]     "fsid": "2acc281e-dcb1-49d2-abb3-efcec654ae8c",

[ceph-1][DEBUG ]     "modified": "2018-04-17 21:42:59.721694",

[ceph-1][DEBUG ]     "mons": [

[ceph-1][DEBUG ]       {

[ceph-1][DEBUG ]         "addr": "10.213.14.51:6789/0",

[ceph-1][DEBUG ]         "name": "ceph-1",

[ceph-1][DEBUG ]         "public_addr": "10.213.14.51:6789/0",

[ceph-1][DEBUG ]         "rank": 0

[ceph-1][DEBUG ]       },

[ceph-1][DEBUG ]       {

[ceph-1][DEBUG ]         "addr": "10.213.14.52:6789/0",

[ceph-1][DEBUG ]         "name": "ceph-2",

[ceph-1][DEBUG ]         "public_addr": "10.213.14.52:6789/0",

[ceph-1][DEBUG ]         "rank": 1

[ceph-1][DEBUG ]       },

[ceph-1][DEBUG ]       {

[ceph-1][DEBUG ]         "addr": "10.213.14.53:6789/0",

[ceph-1][DEBUG ]         "name": "ceph-3",

[ceph-1][DEBUG ]         "public_addr": "10.213.14.53:6789/0",

[ceph-1][DEBUG ]         "rank": 2

[ceph-1][DEBUG ]       }

[ceph-1][DEBUG ]     ]

[ceph-1][DEBUG ]   },

[ceph-1][DEBUG ]   "name": "ceph-1",

[ceph-1][DEBUG ]   "outside_quorum": [],

[ceph-1][DEBUG ]   "quorum": [

[ceph-1][DEBUG ]     0,

[ceph-1][DEBUG ]     1,

[ceph-1][DEBUG ]     2

[ceph-1][DEBUG ]   ],

[ceph-1][DEBUG ]   "rank": 0,

[ceph-1][DEBUG ]   "state": "leader",

[ceph-1][DEBUG ]   "sync_provider": []

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ********************************************************************************

[ceph-1][INFO ] monitor: mon.ceph-1 is running

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

Then create the mons on the other two nodes:

ceph-deploy mon create ceph-2

ceph-deploy mon create ceph-3

Check the mon status:

[root@ceph-1 ceph]# ceph quorum_status --format json-pretty
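For a quick scripted check of how many monitors are in quorum, one option is to parse the services line of `ceph -s` shown earlier in these notes ("mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3") rather than the JSON from `ceph quorum_status`. This is a minimal sketch under that assumption; `quorum_size` is a hypothetical helper name and it relies on the plain-text layout seen in this luminous output.

```shell
#!/bin/sh
# Read `ceph -s` text on stdin and print the number of monitors in quorum.
# quorum_size is a hypothetical helper; it assumes the luminous text layout
# "mon: N daemons, quorum a,b,c" and is not a stable interface.
quorum_size() {
    awk '{
        for (i = 1; i < NF; i++) {
            if ($i == "quorum") {
                list = $(i + 1)
                sub(/,$/, "", list)   # drop a trailing comma, if any
                print split(list, members, ",")
                exit
            }
        }
    }'
}
```

Usage would be `ceph -s | quorum_size`. With three monitors, a majority of two is needed for the cluster to keep quorum.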

8. Enable the dashboard

Enable the dashboard module:

[root@ceph-1 ceph]# ceph mgr module enable dashboard

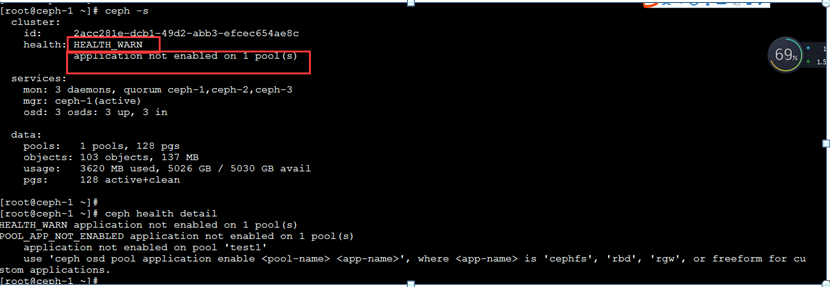

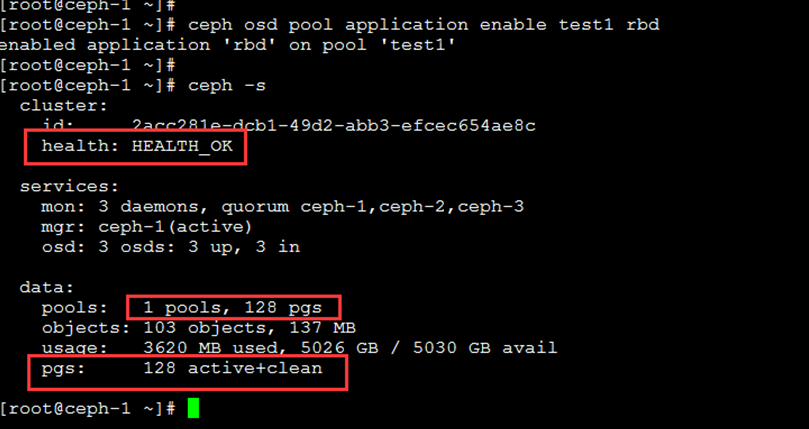

The Ceph cluster is now fully built. Next, test RBD block storage.

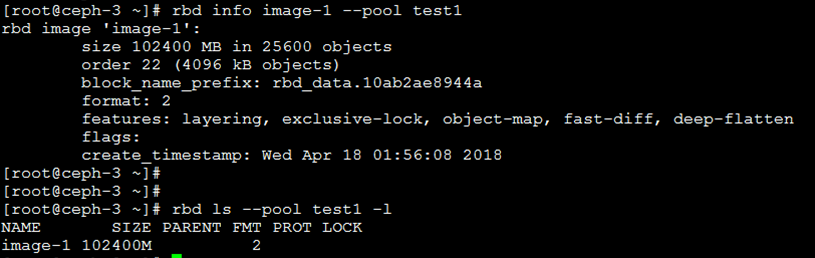

[root@ceph-3 ~]# ceph osd pool create test1 128 # create a new pool instead of using the default rbd pool

[root@ceph-3 ~]# rbd create --size 100G image-1 --pool test1 # create a block image

[root@ceph-3 ~]# rbd info image-1 --pool test1 # show the image's details

[root@ceph-3 ~]# rbd --pool test1 feature disable image-1 exclusive-lock, object-map, fast-diff, deep-flatten # disable image features the kernel RBD client does not support

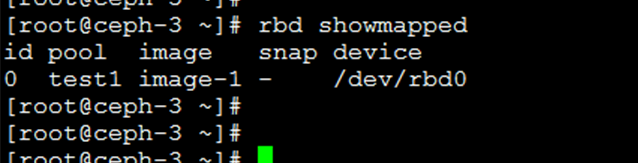

[root@ceph-3 ~]# rbd map --pool test1 image-1 # map image-1 to a local block device

/dev/rbd0

[root@ceph-3 ~]# mkfs.ext4 /dev/rbd0 # format the block device

[root@ceph-1 ~]# ceph osd pool application enable test1 rbd

enabled application 'rbd' on pool 'test1'
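The `128` passed to `ceph osd pool create` is consistent with a common rule of thumb from the Ceph placement-group documentation: aim for roughly (OSDs x 100) / replicas placement groups, rounded up to a power of two. A minimal sketch of that arithmetic follows. The function name `pg_count` is hypothetical, and the replica count of 3 is an assumption, since the notes do not state the pool size.

```shell
#!/bin/sh
# Rule-of-thumb PG count: (OSDs * 100) / replicas, rounded up to the
# next power of two. pg_count is a hypothetical helper, not a Ceph tool.
pg_count() {
    osds="$1"
    replicas="$2"
    target=$(( osds * 100 / replicas ))   # integer division
    pgs=1
    while [ "$pgs" -lt "$target" ]; do
        pgs=$(( pgs * 2 ))
    done
    echo "$pgs"
}
```

With the three OSDs created here and an assumed replica count of 3, `pg_count 3 3` gives a target of 100, which rounds up to 128.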

Test writing data
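The write test targets /mnt/rbd, but the notes do not show the mount step. A minimal sketch of what it presumably looked like, assuming the image is mapped as /dev/rbd0 and the mount point is /mnt/rbd (the path used by the dd command); `mount_rbd` is a hypothetical helper name.

```shell
#!/bin/sh
# Mount a mapped RBD device, creating the mount point if needed.
# mount_rbd is a hypothetical helper; the defaults are assumptions taken
# from the device and path that appear in these notes.
mount_rbd() {
    dev="${1:-/dev/rbd0}"
    mnt="${2:-/mnt/rbd}"
    if [ ! -b "$dev" ]; then
        echo "not a block device: $dev" >&2
        return 1
    fi
    mkdir -p "$mnt" && mount "$dev" "$mnt"
}
```

Running `mount_rbd` with no arguments on ceph-3 after the mkfs.ext4 step would mount /dev/rbd0 at /mnt/rbd; `df -h /mnt/rbd` can confirm it.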

[root@ceph-3 rbd]# dd if=/dev/zero of=/mnt/rbd/a count=30000 bs=1M

30000+0 records in

30000+0 records out

31457280000 bytes (31 GB) copied, 66.36 s, 474 MB/s
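The throughput dd reports is the byte count divided by the elapsed time, with 1 MB taken as 10^6 bytes: 31457280000 / 66.36 / 10^6 is about 474. A small sketch that recomputes it from dd's summary line; `dd_mbps` is a hypothetical helper name.

```shell
#!/bin/sh
# Read dd's summary line on stdin and print throughput in MB/s,
# where 1 MB = 10^6 bytes. dd_mbps is a hypothetical helper.
dd_mbps() {
    awk '/bytes/ && /copied/ {
        secs = 0
        # The elapsed seconds are the number just before the "s," token.
        for (i = 2; i <= NF; i++) {
            if ($i == "s,") secs = $(i - 1)
        }
        if (secs > 0) printf "%.0f\n", $1 / secs / 1000000
    }'
}
```

Because the test reads from /dev/zero without a sync option, part of the figure may reflect the page cache rather than the cluster. Adding `conv=fdatasync` to the dd command makes it flush the data before reporting, which should give a more conservative number.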

Remove Ceph

ceph-deploy purge ceph-1 ceph-2 ceph-3

ceph-deploy purgedata ceph-1 ceph-2 ceph-3

ceph-deploy forgetkeys

References

http://docs.ceph.org.cn/

http://docs.ceph.com/