Download the code for this example: https://files.cnblogs.com/files/xiandedanteng/InsertMillionComparison20191012.rar

My database environment is mysql Ver 14.14 Distrib 5.6.45, for Linux (x86_64) using EditLine wrapper.

The database is installed in a virtual machine on a T440p, and the operating system is CentOS 6.5.

Inserting ten million rows took 4m57s on one run and 5m on another.
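To put those two timings in perspective, here is a minimal sketch that converts them into rows per second. The class `Throughput` and its method are hypothetical names used only for this illustration; they are not part of the downloadable project.

```java
public class Throughput {
    // Rows inserted per second, given a row count and the elapsed seconds.
    static double rowsPerSecond(long rows, long seconds) {
        return (double) rows / seconds;
    }

    public static void main(String[] args) {
        long rows = 10_000_000L;
        long run1 = 4 * 60 + 57; // 4m57s = 297 seconds
        long run2 = 5 * 60;      // 5m    = 300 seconds
        System.out.printf("run 1: %.0f rows/s%n", rowsPerSecond(rows, run1));
        System.out.printf("run 2: %.0f rows/s%n", rowsPerSecond(rows, run2));
    }
}
```

Both runs work out to a little over 33,000 rows per second on this setup.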

The table is defined like this:

```sql
CREATE TABLE `emp` (
  `Id` int(11) NOT NULL AUTO_INCREMENT,
  `name` varchar(255) DEFAULT NULL,
  `age` smallint(3) DEFAULT NULL,
  `cdate` timestamp NULL DEFAULT NULL COMMENT 'createtime',
  PRIMARY KEY (`Id`)
) ENGINE=InnoDB AUTO_INCREMENT=13548008 DEFAULT CHARSET=utf8;
```

It is a simple table with an auto-increment primary key, `Id`, and three other columns of different types.

I use MyBatis batch insert to load data into the table. The SQL is defined in the mapper like this:

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
    "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >
<mapper namespace="com.hy.mapper.EmpMapper">
    <select id="selectById" resultType="com.hy.entity.Employee">
        select id,name,age,cdate as ctime from emp where id=#{id}
    </select>

    <insert id="batchInsert">
        insert into emp(name,age,cdate)
        values
        <foreach collection="list" item="emp" separator=",">
            (#{emp.name},#{emp.age},#{emp.ctime,jdbcType=TIMESTAMP})
        </foreach>
    </insert>
</mapper>
```
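To make the `<foreach>` expansion concrete, the sketch below builds roughly the statement text that results for a list of a given size: one `(?,?,?)` group per element, joined by commas. The class `ForeachExpansion` is a hypothetical helper that only imitates the shape of the generated SQL (whitespace aside); it does not call into MyBatis.

```java
import java.util.Collections;

public class ForeachExpansion {
    // Builds a multi-row INSERT with one "(?,?,?)" group per row, mirroring
    // what <foreach separator=","> produces for a list of `rows` items.
    static String multiRowInsert(int rows) {
        if (rows < 1) {
            throw new IllegalArgumentException("rows must be >= 1");
        }
        String groups = String.join(",", Collections.nCopies(rows, "(?,?,?)"));
        return "insert into emp(name,age,cdate) values " + groups;
    }

    public static void main(String[] args) {
        // Prints the statement shape for a three-element list.
        System.out.println(multiRowInsert(3));
    }
}
```

A list of 1,000 employees therefore becomes a single statement with 3,000 bind parameters. The statement grows with the list, and MySQL caps the size of one statement through `max_allowed_packet`, so the list cannot grow without limit.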

The corresponding mapper interface looks like this:

```java
package com.hy.mapper;

import java.util.List;

import com.hy.entity.Employee;

public interface EmpMapper {
    Employee selectById(long id);

    int batchInsert(List<Employee> emps);
}
```

The entity class Employee is as follows:

```java
package com.hy.entity;

import java.text.MessageFormat;

public class Employee {
    private long id;
    private String name;
    private int age;
    private String ctime;

    public Employee() {
    }

    public Employee(String name, int age, String ctime) {
        this.name = name;
        this.age = age;
        this.ctime = ctime;
    }

    public String toString() {
        Object[] arr = {id, name, age, ctime};
        String retval = MessageFormat.format("Employee id={0},name={1},age={2},created_datetime={3}", arr);
        return retval;
    }

    public long getId() {
        return id;
    }

    public void setId(long id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public int getAge() {
        return age;
    }

    public void setAge(int age) {
        this.age = age;
    }

    public String getCtime() {
        return ctime;
    }

    public void setCtime(String ctime) {
        this.ctime = ctime;
    }
}
```

If there are only a few rows to insert, the code can be written like this:

```java
package com.hy.action;

import java.io.Reader;
import java.util.ArrayList;
import java.util.List;

import org.apache.ibatis.io.Resources;
import org.apache.ibatis.session.SqlSession;
import org.apache.ibatis.session.SqlSessionFactory;
import org.apache.ibatis.session.SqlSessionFactoryBuilder;
import org.apache.log4j.Logger;

import com.hy.entity.Employee;
import com.hy.mapper.EmpMapper;

public class BatchInsert01 {
    // Log under this class (the original used SelectById.class, a copy-paste slip)
    private static Logger logger = Logger.getLogger(BatchInsert01.class);

    public static void main(String[] args) throws Exception {
        Reader reader = Resources.getResourceAsReader("mybatis-config.xml");
        SqlSessionFactory ssf = new SqlSessionFactoryBuilder().build(reader);
        reader.close();

        SqlSession session = ssf.openSession();

        try {
            EmpMapper mapper = session.getMapper(EmpMapper.class);

            List<Employee> emps = new ArrayList<Employee>();
            emps.add(new Employee("Bill", 22, "2018-12-25"));
            emps.add(new Employee("Cindy", 22, "2018-12-25"));
            emps.add(new Employee("Douglas", 22, "2018-12-25"));

            // All three rows go to the database in one multi-row INSERT
            int changed = mapper.batchInsert(emps);
            System.out.println("changed=" + changed);
            session.commit();
        } catch (Exception ex) {
            logger.error(ex);
            session.rollback();
        } finally {
            session.close();
        }
    }
}
```

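With a large number of rows, the inserts have to be committed in batches. I insert 1,000 rows, commit, and repeat that 10,000 times, for ten million rows in total: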

```java
package com.hy.action;

import java.io.Reader;
import java.util.ArrayList;
import java.util.List;

import org.apache.ibatis.io.Resources;
import org.apache.ibatis.session.SqlSession;
import org.apache.ibatis.session.SqlSessionFactory;
import org.apache.ibatis.session.SqlSessionFactoryBuilder;
import org.apache.log4j.Logger;

import com.hy.entity.Employee;
import com.hy.mapper.EmpMapper;

public class BatchInsert1000 {
    // Log under this class (the original used SelectById.class, a copy-paste slip)
    private static Logger logger = Logger.getLogger(BatchInsert1000.class);

    public static void main(String[] args) throws Exception {
        long startTime = System.currentTimeMillis();
        Reader reader = Resources.getResourceAsReader("mybatis-config.xml");
        SqlSessionFactory ssf = new SqlSessionFactoryBuilder().build(reader);
        reader.close();

        SqlSession session = ssf.openSession();

        try {
            EmpMapper mapper = session.getMapper(EmpMapper.class);
            String ctime = "2017-11-01 00:00:01";

            // 10,000 batches of 1,000 rows each, one commit per batch
            for (int i = 0; i < 10000; i++) {
                List<Employee> emps = new ArrayList<Employee>();

                for (int j = 0; j < 1000; j++) {
                    Employee emp = new Employee("E" + i, 20, ctime);
                    emps.add(emp);
                }

                int changed = mapper.batchInsert(emps);
                session.commit();
                System.out.println("#" + i + " changed=" + changed);
            }
        } catch (Exception ex) {
            session.rollback();
            logger.error(ex);
        } finally {
            session.close();

            long endTime = System.currentTimeMillis();
            logger.info("Time elapsed:" + toDhmsStyle((endTime - startTime) / 1000) + ".");
        }
    }

    // format seconds to day hour minute seconds style
    // Example 5000s will be formatted to 1h23m20s
    public static String toDhmsStyle(long allSeconds) {
        String DateTimes = null;

        long days = allSeconds / (60 * 60 * 24);
        long hours = (allSeconds % (60 * 60 * 24)) / (60 * 60);
        long minutes = (allSeconds % (60 * 60)) / 60;
        long seconds = allSeconds % 60;

        if (days > 0) {
            DateTimes = days + "d" + hours + "h" + minutes + "m" + seconds + "s";
        } else if (hours > 0) {
            DateTimes = hours + "h" + minutes + "m" + seconds + "s";
        } else if (minutes > 0) {
            DateTimes = minutes + "m" + seconds + "s";
        } else {
            DateTimes = seconds + "s";
        }

        return DateTimes;
    }
}
```
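The program above generates each batch on the fly. If the rows already sit in one large list, the same insert-then-commit pattern could be driven by a helper that splits the list into fixed-size chunks. The following is a minimal sketch under that assumption; `Chunker` and `chunk` are hypothetical names and are not part of the downloadable project.

```java
import java.util.ArrayList;
import java.util.List;

public class Chunker {
    // Splits `items` into consecutive sublists of at most `size` elements.
    static <T> List<List<T>> chunk(List<T> items, int size) {
        if (size < 1) {
            throw new IllegalArgumentException("size must be >= 1");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int from = 0; from < items.size(); from += size) {
            int to = Math.min(from + size, items.size());
            // Copy the view so each chunk is independent of the source list
            chunks.add(new ArrayList<>(items.subList(from, to)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        List<Integer> ids = new ArrayList<>();
        for (int i = 0; i < 2500; i++) {
            ids.add(i);
        }
        for (List<Integer> batch : chunk(ids, 1000)) {
            // In the real program, mapper.batchInsert(batch) and
            // session.commit() would go here.
            System.out.println("batch of " + batch.size());
        }
    }
}
```

The last chunk may be smaller than the batch size, so the caller should not assume every batch holds exactly 1,000 rows.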

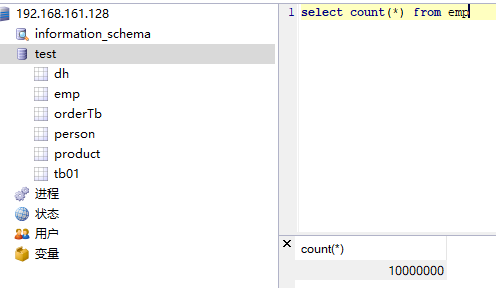

Finally, querying the database gives the following result:

There were, of course, a few hiccups along the way during the insert; I will cover them in later posts.

--END-- 2019-10-12 16:52:14