本文作者:hhh5460

本文地址:https://www.cnblogs.com/hhh5460/p/10147265.html

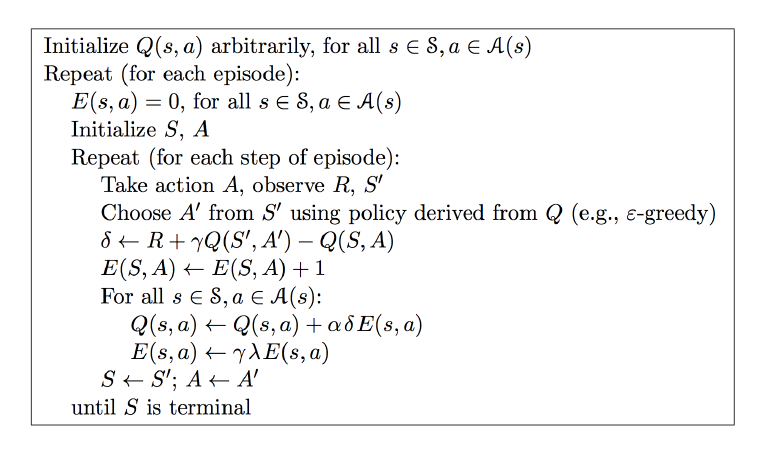

将例一用saras lambda算法重新撸了一遍,没有参照任何其他人的代码。仅仅根据伪代码,就撸出来了。感觉已真正理解了saras lambda算法。记录如下

0. saras lambda算法伪代码

图片来源:https://morvanzhou.github.io/static/results/reinforcement-learning/3-3-1.png(莫凡)

1. saras lambda算法真实代码

# e_table是q_table的拷贝 e_table = q_table.copy() # ... # saras(lambda)算法 # 参见:https://morvanzhou.github.io/static/results/reinforcement-learning/3-3-1.png for i in range(13): # 0. e_table清零 e_table *= 0 # 1.从状态0开始 current_state = 0 # 2.选择一个合法的动作 current_action = choose_action(current_state, epsilon) # 3.进入循环,探索学习 while current_state != states[-1]: # 4.取下一状态 next_state = get_next_state(current_state, current_action) # 5.取下一奖励 next_reward = rewards[next_state] # 6.取下一动作 next_action = choose_action(next_state, epsilon) # 7.计算德塔 delta = next_reward + gamma * q_table.ix[next_state, next_action] - q_table.ix[current_state, current_action] # 8.当前状态、动作对应的e_table的值加1 #e_table.ix[current_state, current_action] += 1 # 这是标准的操作,但是莫凡指出改成下面两句效果更好! e_table.ix[current_state] *= 0 e_table.ix[current_state, current_action] = 1 # 9.遍历每一个状态的所有动作(不能仅合法动作) for state in states: for action in actions: # 10.逐个更新q_talbe, e_table中对应的值 q_table.ix[state, action] += alpha * delta * e_table.ix[state, action] e_table.ix[state, action] *= gamma * lambda_ # 11.进入下一状态、动作 current_state, current_action = next_state, next_action

第9步,刚开始我这么写:for action in get_valid_actions(state):,运行后发现没有这样写好:for action in actions:

2. 完整代码

''' -o---T # T 就是宝藏的位置, o 是探索者的位置 ''' # 作者: hhh5460 # 时间:20181220 '''saras(lambda)算法实现''' import pandas as pd import random import time epsilon = 0.9 # 贪婪度 greedy alpha = 0.1 # 学习率 gamma = 0.8 # 奖励递减值 lambda_ = 0.9 # 衰减值 states = range(6) # 状态集。从0到5 actions = ['left', 'right'] # 动作集。也可添加动作'none',表示停留 rewards = [0,0,0,0,0,1] # 奖励集。只有最后的宝藏所在位置才有奖励1,其他皆为0 q_table = pd.DataFrame(data=[[0 for _ in actions] for _ in states], index=states, columns=actions) e_table = q_table.copy() def update_env(state): '''更新环境,并打印''' env = list('-----T') # 环境 env[state] = 'o' # 更新环境 print(' {}'.format(''.join(env)), end='') time.sleep(0.1) def get_next_state(state, action): '''对状态执行动作后,得到下一状态''' global states # l,r,n = -1,+1,0 if action == 'right' and state != states[-1]: # 除末状态(位置),向右+1 next_state = state + 1 elif action == 'left' and state != states[0]: # 除首状态(位置),向左-1 next_state = state -1 else: next_state = state return next_state def get_valid_actions(state): '''取当前状态下的合法动作集合,与reward无关!''' global actions # ['left', 'right'] valid_actions = set(actions) if state == states[0]: # 首状态(位置),则 不能向左 valid_actions -= set(['left']) if state == states[-1]: # 末状态(位置),则 不能向右 valid_actions -= set(['right']) return list(valid_actions) def choose_action(state, epsilon_=0.9): '''选择动作,根据状态''' if random.uniform(0,1) > epsilon_: # 探索 action = random.choice(get_valid_actions(state)) else: # 利用(贪婪) #current_action = q_table.ix[current_state].idxmax() # 这种写法是有问题的! s = q_table.ix[state].filter(items=get_valid_actions(state)) action = random.choice(s[s==s.max()].index) # 可能多个最大值,当然,一个更好 return action # saras(lambda)算法 # 参见:https://morvanzhou.github.io/static/results/reinforcement-learning/3-3-1.png for i in range(13): e_table *= 0 # 清零 current_state = 0 current_action = choose_action(current_state, epsilon) update_env(current_state) # 环境相关 total_steps = 0 # 环境相关 while current_state != states[-1]: next_state = get_next_state(current_state, current_action) next_reward = rewards[next_state] next_action = choose_action(next_state, epsilon) delta = next_reward + gamma * q_table.ix[next_state, next_action] - q_table.ix[current_state, current_action] #e_table.ix[current_state, current_action] += 1 # 这是标准的操作,但是莫凡指出改成下面两句效果更好! e_table.ix[current_state] *= 0 e_table.ix[current_state, current_action] = 1 for state in states: for action in actions: #get_valid_actions(state): q_table.ix[state, action] += alpha * delta * e_table.ix[state, action] e_table.ix[state, action] *= gamma * lambda_ current_state, current_action = next_state, next_action update_env(current_state) # 环境相关 total_steps += 1 # 环境相关 print(' Episode {}: total_steps = {}'.format(i, total_steps), end='') # 环境相关 time.sleep(2) # 环境相关 print(' ', end='') # 环境相关 print(' q_table:') print(q_table)