Source code: https://github.com/hiszm/hadoop-train

HDFS API Programming

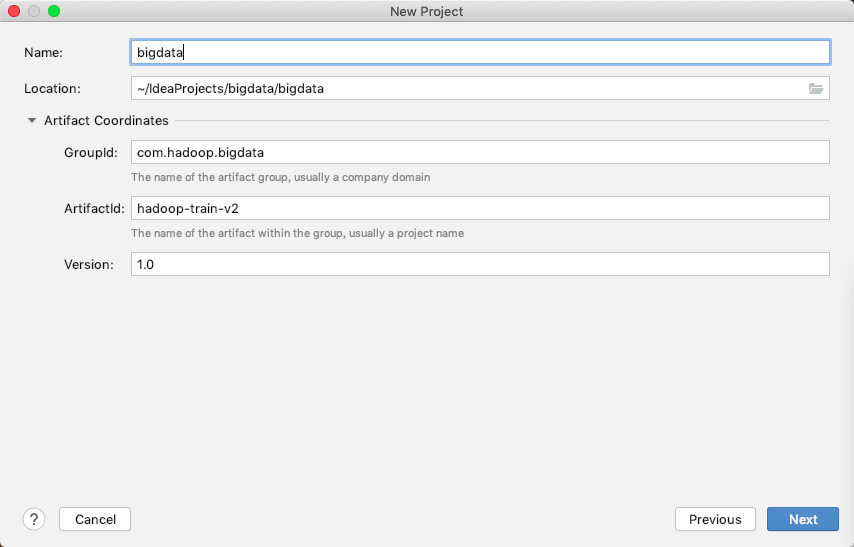

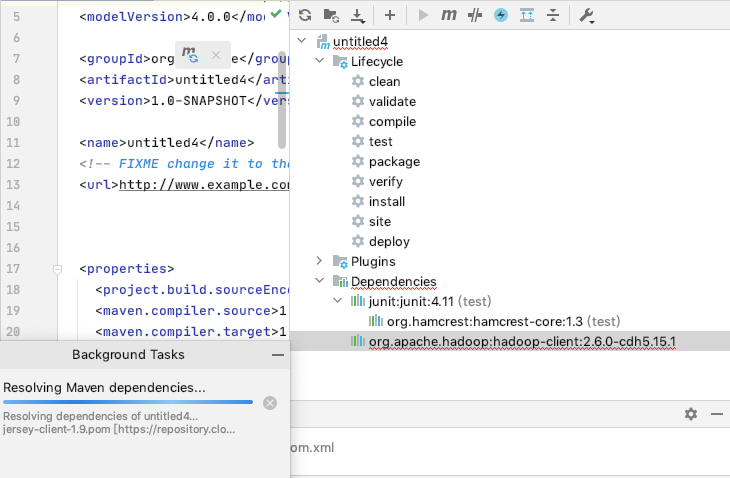

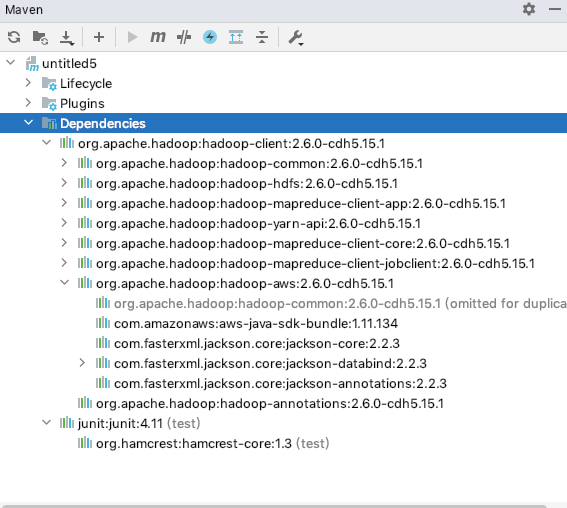

Setting Up the Development Environment

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>untitled4</artifactId>

<version>1.0-SNAPSHOT</version>

<name>untitled4</name>

<!-- FIXME change it to the project's website -->

<url>http://www.example.com</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.7</maven.compiler.source>

<maven.compiler.target>1.7</maven.compiler.target>

<hadoop.version>2.6.0-cdh5.15.1</hadoop.version>

</properties>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->

<plugins>

<!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->

<plugin>

<artifactId>maven-clean-plugin</artifactId>

<version>3.1.0</version>

</plugin>

<!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->

<plugin>

<artifactId>maven-resources-plugin</artifactId>

<version>3.0.2</version>

</plugin>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.0</version>

</plugin>

<plugin>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.22.1</version>

</plugin>

<plugin>

<artifactId>maven-jar-plugin</artifactId>

<version>3.0.2</version>

</plugin>

<plugin>

<artifactId>maven-install-plugin</artifactId>

<version>2.5.2</version>

</plugin>

<plugin>

<artifactId>maven-deploy-plugin</artifactId>

<version>2.8.2</version>

</plugin>

<!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->

<plugin>

<artifactId>maven-site-plugin</artifactId>

<version>3.7.1</version>

</plugin>

<plugin>

<artifactId>maven-project-info-reports-plugin</artifactId>

<version>3.0.0</version>

</plugin>

</plugins>

</pluginManagement>

</build>

</project>

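Wait for Maven to finish downloading the dependencies.

The download was too slow for me, so I ended up using a proxy.

This is what the project looks like once everything is installed: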

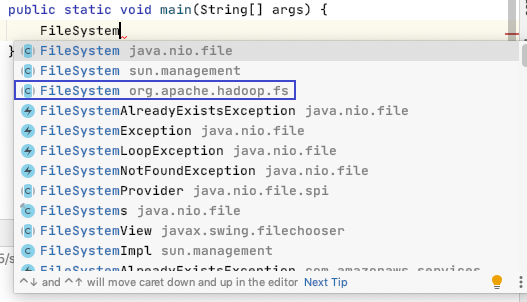

HelloWorld

package org.example;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.net.URI;

// Use the Java API to operate on the HDFS file system

public class HDFSapp {

public static void main(String[] args) throws Exception {

Configuration configuration =new Configuration();

FileSystem fileSystem= FileSystem.get(new URI("hdfs://hadoop000:8020"), configuration);

Path path= new Path("/hdfsapi/test");

boolean result= fileSystem.mkdirs(path);

System.out.println(result);

}

}

The first run failed with a Permission denied error:

Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=jacksun, access=WRITE, inode="/":hadoop:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.chec

-rw-r--r-- 1 hadoop supergroup 1366 2020-08-17 21:35 /README.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-17 21:48 /hdfs-test

-rw-r--r-- 1 hadoop supergroup 181367942 2020-08-17 21:59 /jdk-8u91-linux-x64.tar.gz

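The cause: the root directory / is owned by hadoop:supergroup with mode drwxr-xr-x, so only the owner hadoop may write to it. The local user jacksun is neither the owner nor a member of supergroup, so the mkdirs call is rejected.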
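
To make the denial concrete, here is a minimal sketch of a POSIX-style permission check applied to the inode from the stack trace (owner hadoop, group supergroup, mode rwxr-xr-x). It is a simplified model and not Hadoop's implementation: the class PermissionCheckSketch and the method isAllowed are hypothetical names, and superuser bypass and ACLs are deliberately left out.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Simplified model of a POSIX-style permission check (hypothetical helper, not Hadoop code).
class PermissionCheckSketch {

    // mode is a nine-character string such as "rwxr-xr-x"; access is 'r', 'w' or 'x'.
    static boolean isAllowed(String user, Set<String> userGroups,
                             String owner, String group, String mode, char access) {
        int index = "rwx".indexOf(access);
        if (index < 0 || mode.length() != 9) {
            throw new IllegalArgumentException("unsupported access or mode");
        }
        int offset;
        if (user.equals(owner)) {
            offset = 0; // owner bits apply
        } else if (userGroups.contains(group)) {
            offset = 3; // group bits apply
        } else {
            offset = 6; // "other" bits apply
        }
        return mode.charAt(offset + index) == access;
    }

    public static void main(String[] args) {
        Set<String> noGroups = new HashSet<String>();
        Set<String> superGroup = new HashSet<String>(Arrays.asList("supergroup"));
        // Values taken from the error: inode="/":hadoop:supergroup:drwxr-xr-x
        System.out.println(isAllowed("jacksun", noGroups, "hadoop", "supergroup", "rwxr-xr-x", 'w')); // false
        System.out.println(isAllowed("hadoop", superGroup, "hadoop", "supergroup", "rwxr-xr-x", 'w')); // true
    }
}
```

Under this model even a member of supergroup who is not the owner would be denied WRITE, because the group bits are r-x.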

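The fix: pass the user name hadoop as the third argument to FileSystem.get, so that the client acts as the hadoop user: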

package org.example;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.net.URI;

// Use the Java API to operate on the HDFS file system

public class HDFSapp {

public static void main(String[] args) throws Exception {

Configuration configuration =new Configuration();

FileSystem fileSystem= FileSystem.get(new URI("hdfs://hadoop000:8020"), configuration, "hadoop");

Path path= new Path("/hdfsapi/test");

boolean result= fileSystem.mkdirs(path);

System.out.println(result);

}

}

true

Process finished with exit code 0

[hadoop@hadoop000 sbin]$ hadoop fs -ls /

Found 4 items

-rw-r--r-- 1 hadoop supergroup 1366 2020-08-17 21:35 /README.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-17 21:48 /hdfs-test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi

-rw-r--r-- 1 hadoop supergroup 181367942 2020-08-17 21:59 /jdk-8u91-linux-x64.tar.gz

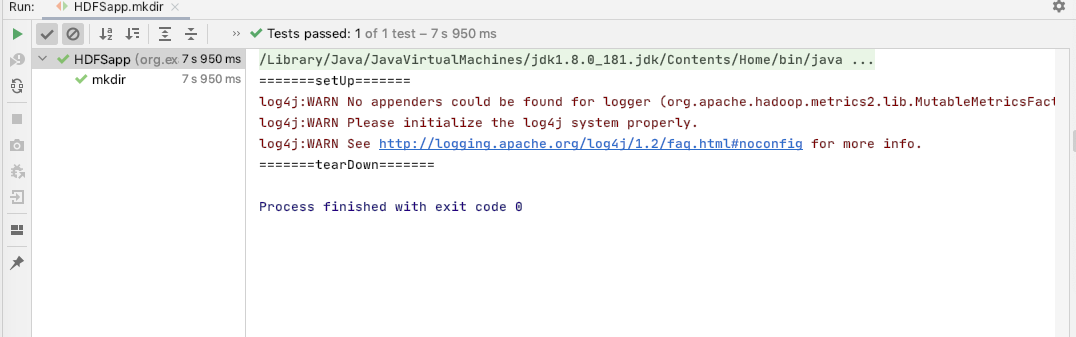

Wrapping the Code in JUnit Tests

package org.example;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

import java.net.URI;

// Use the Java API to operate on the HDFS file system

public class HDFSapp {

public static final String HDFS_PATH="hdfs://hadoop000:8020";

FileSystem fileSystem=null;

Configuration configuration=null;

@Before

public void setUp() throws Exception{

System.out.println("=======setUp=======");

// FileSystem.get arguments: the HDFS URI, the client-side configuration, and the client identity (user name)

configuration=new Configuration();

fileSystem=FileSystem.get(new URI(HDFS_PATH),configuration,"hadoop");

}

@Test

public void mkdir() throws Exception{

fileSystem.mkdirs(new Path("/hdfsapi/test2"));

}

@After

public void tearDown(){

configuration=null;

fileSystem=null;

System.out.println("=======tearDown=======");

}

// public static void main(String[] args) throws Exception {

// Configuration configuration =new Configuration();

// FileSystem fileSystem= FileSystem.get(new URI("hdfs://hadoop000:8020"), configuration, "hadoop");

// Path path= new Path("/hdfsapi/test");

// boolean result= fileSystem.mkdirs(path);

// System.out.println(result);

// }

}

[hadoop@hadoop000 sbin]$ hadoop fs -ls /hdfsapi

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

Viewing HDFS File Contents

// View the contents of an HDFS file

@Test

public void text() throws Exception{

FSDataInputStream in=fileSystem.open(new Path("/README.txt"));

IOUtils.copyBytes(in,System.out,1024);

}

Creating a File

// Create a file

@Test

public void create() throws Exception{

FSDataOutputStream out=fileSystem.create(new Path("/hdfsapi/b.txt"));

out.writeUTF("hello world replication");

out.flush();

out.close();

}

[hadoop@hadoop000 sbin]$ hadoop dfs -ls /hdfsapi

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 4 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-21 09:48 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

[hadoop@hadoop000 sbin]$
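
The 25-byte size of b.txt in the listing above is most likely explained by writeUTF: FSDataOutputStream extends java.io.DataOutputStream, whose writeUTF prefixes the encoded string with a two-byte length. The sketch below reproduces that arithmetic with a plain DataOutputStream over an in-memory buffer, so no HDFS is involved; the class and method names are made up for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

// Compares the on-disk size of writeUTF output with the raw UTF-8 bytes of the same text.
class WriteUtfSizeSketch {

    // Number of bytes produced by DataOutputStream.writeUTF (2-byte length prefix + payload).
    static int sizeWithWriteUTF(String text) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            out.writeUTF(text);
            out.flush();
            return bytes.size();
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    // Number of bytes in the plain UTF-8 encoding, without any prefix.
    static int sizeWithRawBytes(String text) {
        return text.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        String text = "hello world replication";
        System.out.println(sizeWithWriteUTF(text)); // 25
        System.out.println(sizeWithRawBytes(text)); // 23
    }
}
```

If the file should hold only the text itself, writing the raw bytes with the stream's write(byte[]) method instead of writeUTF would avoid the length prefix.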

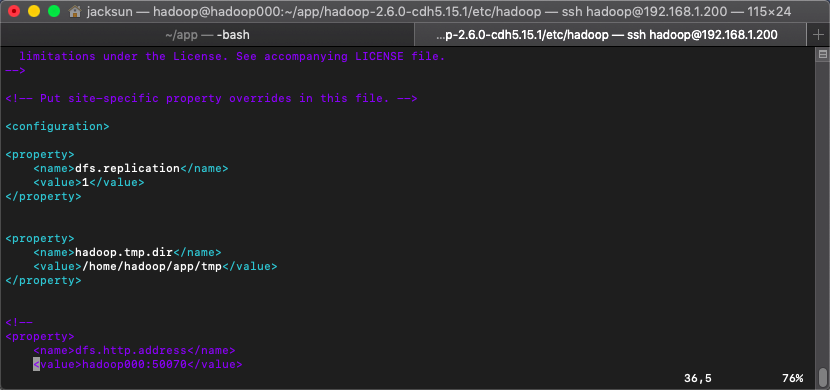

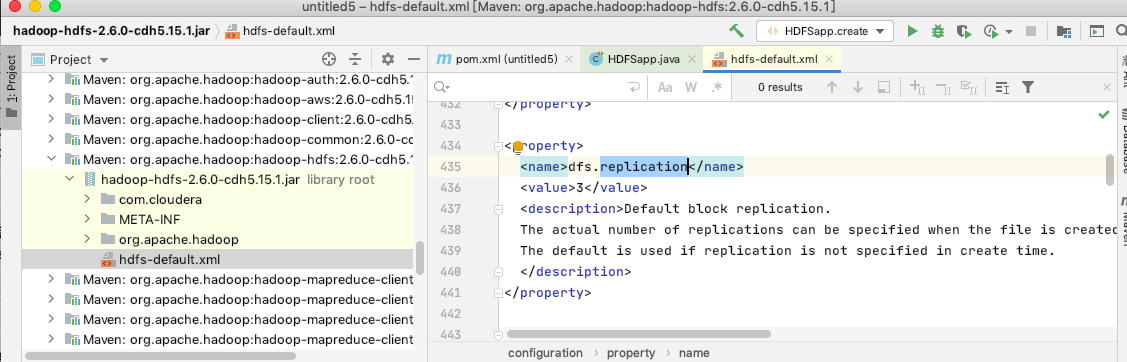

Understanding the Replication Factor

[hadoop@hadoop000 sbin]$ hadoop fs -ls /

Found 4 items

-rw-r--r-- 1 hadoop supergroup 1366 2020-08-17 21:35 /README.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-17 21:48 /hdfs-test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 10:07 /hdfsapi

-rw-r--r-- 1 hadoop supergroup 181367942 2020-08-17 21:59 /jdk-8u91-linux-x64.tar.gz

[hadoop@hadoop000 sbin]$ hadoop fs -ls /hdfsapi

Found 3 items

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/a.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

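Notice one detail:

files created on the server over SSH with the hadoop shell have a replication factor of 1,

but files created through the Java API have a replication factor of 3.

That is because the Java client does not read the server's hdfs-site.xml; with no client-side setting it falls back to Hadoop's default dfs.replication of 3.

Adding configuration.set("dfs.replication","1"); to the code

lets the client control the replication factor.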

@Before

public void setUp() throws Exception{

System.out.println("=======setUp=======");

// FileSystem.get arguments: the HDFS URI, the client-side configuration, and the client identity (user name)

configuration=new Configuration();

configuration.set("dfs.replication","1");

fileSystem=FileSystem.get(new URI(HDFS_PATH),configuration,"hadoop");

}

[hadoop@hadoop000 hadoop]$ hadoop fs -ls /hdfsapi

Found 4 items

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/a.txt

-rw-r--r-- 1 hadoop supergroup 0 2020-08-19 10:46 /hdfsapi/b.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2
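
The override behaviour seen above can be modelled as layered configuration: a built-in default applies until the client sets its own value. The sketch below uses java.util.Properties as a stand-in for Hadoop's Configuration class; it is only an analogy under that assumption, and the class name ReplicationConfigSketch is hypothetical.

```java
import java.util.Properties;

// Models "default value unless the client overrides it" with java.util.Properties.
class ReplicationConfigSketch {

    // Returns a client configuration whose fallback for dfs.replication is 3,
    // standing in for the default shipped with the Hadoop client libraries.
    static Properties clientConfig() {
        Properties defaults = new Properties();
        defaults.setProperty("dfs.replication", "3");
        return new Properties(defaults);
    }

    public static void main(String[] args) {
        Properties conf = clientConfig();
        System.out.println(conf.getProperty("dfs.replication")); // 3

        // An explicit client-side value takes precedence over the default.
        conf.setProperty("dfs.replication", "1");
        System.out.println(conf.getProperty("dfs.replication")); // 1
    }
}
```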

Renaming a File

// Rename a file

@Test

public void rename() throws Exception{

Path oldPath=new Path("/hdfsapi/a.txt");

Path newPath=new Path("/hdfsapi/c.txt");

boolean result =fileSystem.rename(oldPath,newPath);

System.out.println(result);

}

[hadoop@hadoop000 sbin]$ hadoop fs -ls /hdfsapi/

Found 4 items

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/a.txt

-rw-r--r-- 1 hadoop supergroup 25 2020-08-19 10:46 /hdfsapi/b.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

[hadoop@hadoop000 sbin]$ hadoop fs -ls /hdfsapi/

Found 4 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-19 10:46 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

Uploading a Local File to HDFS

// Upload a local file to HDFS

@Test

public void copyFromLocalFile() throws Exception{

Path src =new Path("/Users/jacksun/data/local.txt");

Path dst =new Path("/hdfsapi/");

fileSystem.copyFromLocalFile(src,dst);

}

[hadoop@hadoop000 sbin]$ hadoop dfs -ls /hdfsapi

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 4 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-21 09:48 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

[hadoop@hadoop000 sbin]$ hadoop dfs -ls /hdfsapi

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 5 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-21 09:48 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

-rw-r--r-- 1 hadoop supergroup 18 2020-08-21 09:52 /hdfsapi/local.txt

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

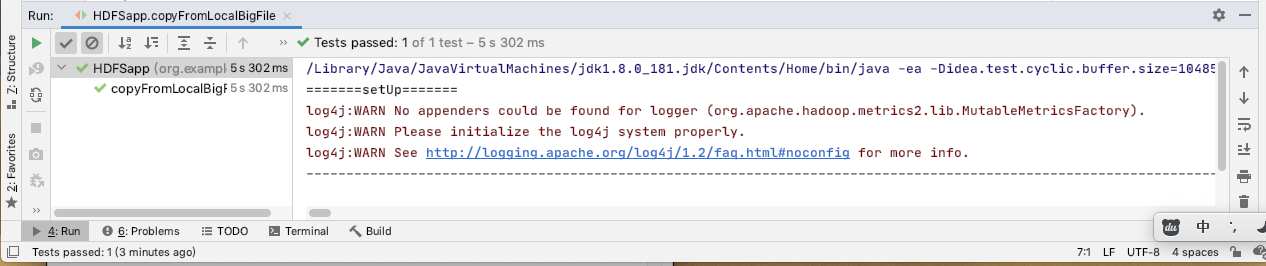

Uploading a Large File with Progress

// Upload a large local file to HDFS with a progress indicator

@Test

public void copyFromLocalBigFile() throws Exception{

InputStream in =new BufferedInputStream(new FileInputStream(new File("/Users/jacksun/data/music.ape")));

FSDataOutputStream out =fileSystem.create(new Path("/hdfsapi/music.ape"),

new Progressable() {

@Override

public void progress() {

System.out.print("-");

}

}

);

// The final argument closes both streams once the copy finishes

IOUtils.copyBytes(in,out,1024,true);

}

[hadoop@hadoop000 sbin]$ hadoop dfs -ls /hdfsapi

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 6 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-21 09:48 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

-rw-r--r-- 1 hadoop supergroup 18 2020-08-21 09:52 /hdfsapi/local.txt

-rw-r--r-- 1 hadoop supergroup 48097077 2020-08-21 09:52 /hdfsapi/music.ape

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2
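
The Progressable callback used above receives no byte counts, which is why the example can only print a dash per call. If a percentage is wanted, one option is to track it in the copy loop itself. The following is a minimal sketch of that idea using in-memory streams in place of the local file and the HDFS output stream; ProgressListener, copyWithProgress and percent are hypothetical names, not part of the Hadoop API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Copy loop that reports how many bytes have been written after every chunk.
class ProgressCopySketch {

    interface ProgressListener {
        void onProgress(long copied, long total);
    }

    // Integer percentage of copied relative to total; an empty source counts as complete.
    static int percent(long copied, long total) {
        return total <= 0 ? 100 : (int) (copied * 100 / total);
    }

    static long copyWithProgress(InputStream in, OutputStream out, long totalBytes,
                                 int bufferSize, ProgressListener listener) throws IOException {
        byte[] buffer = new byte[bufferSize];
        long copied = 0;
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
            copied += read;
            listener.onProgress(copied, totalBytes);
        }
        out.flush();
        return copied;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[4096]; // stand-in for the contents of a local file
        copyWithProgress(new ByteArrayInputStream(data), new ByteArrayOutputStream(),
                data.length, 1024, new ProgressListener() {
                    @Override
                    public void onProgress(long copied, long total) {
                        System.out.println(percent(copied, total) + "%");
                    }
                });
        // prints 25%, 50%, 75% and 100% on separate lines
    }
}
```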

Downloading a File

// Download a file from HDFS to the local file system

@Test

public void copyToLocalFile() throws Exception{

Path src =new Path("/hdfsapi/hello.txt");

Path dst =new Path("/Users/jacksun/data");

fileSystem.copyToLocalFile(src,dst);

}

Listing a Directory's Contents

// List the entries in a directory

@Test

public void listFiles() throws Exception {

FileStatus[] statuses = fileSystem.listStatus(new Path("/hdfsapi"));

for (FileStatus file : statuses) {

printFileStatus(file);

}

}

private void printFileStatus(FileStatus file) {

// "文件夹" means directory, "文件" means file

String isDir = file.isDirectory() ? "文件夹" : "文件";

String permission = file.getPermission().toString();

short replication = file.getReplication();

long length = file.getLen();

String path = file.getPath().toString();

System.out.println(isDir + " " + permission + " " +

replication + " " + length + " " + path);

}

=======setUp=======

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

文件 rw-r--r-- 1 25 hdfs://hadoop000:8020/hdfsapi/b.txt

文件 rw-r--r-- 3 13 hdfs://hadoop000:8020/hdfsapi/c.txt

文件 rw-r--r-- 1 18 hdfs://hadoop000:8020/hdfsapi/local.txt

文件 rw-r--r-- 1 48097077 hdfs://hadoop000:8020/hdfsapi/music.ape

文件夹 rwxr-xr-x 0 0 hdfs://hadoop000:8020/hdfsapi/test

文件夹 rwxr-xr-x 0 0 hdfs://hadoop000:8020/hdfsapi/test2

=======tearDown=======
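
The permission column in this output uses the usual symbolic form. As a reading aid, the sketch below converts an octal mode into that form, showing for example that rw-r--r-- corresponds to 644 and rwxr-xr-x to 755. It is a standalone illustration with a hypothetical class name and does not use Hadoop's FsPermission class.

```java
// Converts a numeric permission mode such as 0644 into its symbolic form.
class PermissionStringSketch {

    static String toSymbolic(int mode) {
        String flags = "rwxrwxrwx";
        StringBuilder symbolic = new StringBuilder(9);
        for (int i = 0; i < 9; i++) {
            int bit = 1 << (8 - i); // highest bit is the owner's read flag
            symbolic.append((mode & bit) != 0 ? flags.charAt(i) : '-');
        }
        return symbolic.toString();
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic(0644)); // rw-r--r--
        System.out.println(toSymbolic(0755)); // rwxr-xr-x
    }
}
```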

Recursively Listing All Files in a Directory

// Recursively list all files

@Test

public void listFileRecursive() throws Exception {

RemoteIterator<LocatedFileStatus> files = fileSystem.listFiles(

new Path("/hdfsapi"), true);

while (files.hasNext()) {

LocatedFileStatus file = files.next();

printFileStatus(file);

}

}

=======setUp=======

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

文件 rw-r--r-- 1 25 hdfs://hadoop000:8020/hdfsapi/b.txt

文件 rw-r--r-- 3 13 hdfs://hadoop000:8020/hdfsapi/c.txt

文件 rw-r--r-- 1 18 hdfs://hadoop000:8020/hdfsapi/local.txt

文件 rw-r--r-- 1 48097077 hdfs://hadoop000:8020/hdfsapi/music.ape
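
Unlike the listStatus output earlier, this output contains no directory entries: with the recursive flag set, listFiles walks the subtree and returns only files. The sketch below shows the same idea on the local file system with java.io.File, as an analogy rather than HDFS code; the class name, the helper methods and the sample tree are all made up for illustration.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Local-file-system analogy for a recursive listing that returns files only.
class RecursiveListingSketch {

    // Descends into directories and records file names; directories themselves are not recorded.
    static void collectFiles(File dir, List<String> result) {
        File[] children = dir.listFiles();
        if (children == null) {
            return; // not a directory, or not readable
        }
        for (File child : children) {
            if (child.isDirectory()) {
                collectFiles(child, result);
            } else {
                result.add(child.getName());
            }
        }
    }

    static List<String> listFilesRecursive(File dir) {
        List<String> result = new ArrayList<String>();
        collectFiles(dir, result);
        Collections.sort(result);
        return result;
    }

    // Builds a temporary tree: b.txt, an empty directory test, and test2/nested.txt.
    static File buildSampleTree() {
        try {
            File root = Files.createTempDirectory("hdfsapi-sketch").toFile();
            File test2 = new File(root, "test2");
            if (!new File(root, "test").mkdir() || !test2.mkdir()
                    || !new File(root, "b.txt").createNewFile()
                    || !new File(test2, "nested.txt").createNewFile()) {
                throw new IllegalStateException("could not create sample tree");
            }
            return root;
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(listFilesRecursive(buildSampleTree())); // [b.txt, nested.txt]
    }
}
```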

Viewing File Block Information

// View file block information

@Test

public void getFileBlockLocations() throws Exception {

FileStatus fileStatus = fileSystem.getFileStatus(

new Path("/hdfsapi/test/a.txt"));

BlockLocation[] blocks = fileSystem.getFileBlockLocations(fileStatus,

0, fileStatus.getLen());

for (BlockLocation block : blocks) {

for (String name : block.getNames()) {

System.out.println(name + ":" + block.getOffset() + ":" +

block.getLength() + ":" + java.util.Arrays.toString(block.getHosts()));

}

}

}
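
To get a feel for the offsets and lengths this test prints, the sketch below computes how a file of a given size would be cut into blocks. It assumes a block size of 128 MB, the Hadoop 2.x default for dfs.blocksize; the cluster used in this post may be configured differently. The class BlockSplitSketch and its method are hypothetical, and the file sizes are taken from the listings earlier in this post.

```java
import java.util.ArrayList;
import java.util.List;

// Computes the {offset, length} pairs of the blocks a file would occupy.
class BlockSplitSketch {

    // Assumption: 128 MB, the default dfs.blocksize in Hadoop 2.x.
    static final long DEFAULT_BLOCK_SIZE = 128L * 1024 * 1024;

    static List<long[]> splitIntoBlocks(long fileLength, long blockSize) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be positive");
        }
        List<long[]> blocks = new ArrayList<long[]>();
        for (long offset = 0; offset < fileLength; offset += blockSize) {
            long length = Math.min(blockSize, fileLength - offset);
            blocks.add(new long[] {offset, length});
        }
        return blocks;
    }

    public static void main(String[] args) {
        // /jdk-8u91-linux-x64.tar.gz is 181367942 bytes in the listing above.
        for (long[] block : splitIntoBlocks(181367942L, DEFAULT_BLOCK_SIZE)) {
            System.out.println(block[0] + ":" + block[1]);
        }
        // prints 0:134217728 and then 134217728:47150214
    }
}
```

Under the same assumption, the 48097077-byte music.ape fits in a single block.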

Deleting a File

// Delete a file

@Test

public void delete() throws Exception {

fileSystem.delete(new Path("/hdfsapi/local.txt"), true);

}

Found 6 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-21 09:48 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

-rw-r--r-- 1 hadoop supergroup 18 2020-08-21 09:52 /hdfsapi/local.txt

-rw-r--r-- 1 hadoop supergroup 48097077 2020-08-21 09:52 /hdfsapi/music.ape

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

[hadoop@hadoop000 sbin]$ hadoop dfs -ls /hdfsapi

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 5 items

-rw-r--r-- 1 hadoop supergroup 25 2020-08-21 09:57 /hdfsapi/b.txt

-rw-r--r-- 3 hadoop supergroup 13 2020-08-19 10:07 /hdfsapi/c.txt

-rw-r--r-- 1 hadoop supergroup 48097077 2020-08-21 09:57 /hdfsapi/music.ape

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:08 /hdfsapi/test

drwxr-xr-x - hadoop supergroup 0 2020-08-19 09:44 /hdfsapi/test2

Complete Source

package org.example;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.util.Progressable;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

import java.io.BufferedInputStream;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStream;

import java.net.URI;

// Use the Java API to operate on the HDFS file system

public class HDFSapp {

public static final String HDFS_PATH="hdfs://hadoop000:8020";

FileSystem fileSystem=null;

Configuration configuration=null;

@Before

public void setUp() throws Exception{

System.out.println("=======setUp=======");

// FileSystem.get arguments: the HDFS URI, the client-side configuration, and the client identity (user name)

configuration=new Configuration();

configuration.set("dfs.replication","1");

fileSystem=FileSystem.get(new URI(HDFS_PATH),configuration,"hadoop");

}

// Create a directory

@Test

public void mkdir() throws Exception{

fileSystem.mkdirs(new Path("/hdfsapi/test2"));

}

// View the contents of an HDFS file

@Test

public void text() throws Exception{

FSDataInputStream in=fileSystem.open(new Path("/README.txt"));

IOUtils.copyBytes(in,System.out,1024);

}

//========================================

// Create a file

@Test

public void create() throws Exception{

FSDataOutputStream out=fileSystem.create(new Path("/hdfsapi/b.txt"));

out.writeUTF("hello world replication");

out.flush();

out.close();

}

// Rename a file

@Test

public void rename() throws Exception{

Path oldPath=new Path("/hdfsapi/a.txt");

Path newPath=new Path("/hdfsapi/c.txt");

boolean result =fileSystem.rename(oldPath,newPath);

System.out.println(result);

}

// Upload a local file to HDFS

@Test

public void copyFromLocalFile() throws Exception{

Path src =new Path("/Users/jacksun/data/local.txt");

Path dst =new Path("/hdfsapi/");

fileSystem.copyFromLocalFile(src,dst);

}

// Upload a large local file to HDFS with a progress indicator

@Test

public void copyFromLocalBigFile() throws Exception{

InputStream in =new BufferedInputStream(new FileInputStream(new File("/Users/jacksun/data/music.ape")));

FSDataOutputStream out =fileSystem.create(new Path("/hdfsapi/music.ape"),

new Progressable() {

@Override

public void progress() {

System.out.print("-");

}

}

);

// The final argument closes both streams once the copy finishes

IOUtils.copyBytes(in,out,1024,true);

}

// Download a file from HDFS to the local file system

@Test

public void copyToLocalFile() throws Exception{

Path src =new Path("/hdfsapi/hello.txt");

Path dst =new Path("/Users/jacksun/data");

fileSystem.copyToLocalFile(src,dst);

}

// List the entries in a directory

@Test

public void listFiles() throws Exception {

FileStatus[] statuses = fileSystem.listStatus(new Path("/hdfsapi"));

for (FileStatus file : statuses) {

printFileStatus(file);

}

}

private void printFileStatus(FileStatus file) {

// "文件夹" means directory, "文件" means file

String isDir = file.isDirectory() ? "文件夹" : "文件";

String permission = file.getPermission().toString();

short replication = file.getReplication();

long length = file.getLen();

String path = file.getPath().toString();

System.out.println(isDir + " " + permission + " " +

replication + " " + length + " " + path);

}

// Recursively list all files

@Test

public void listFileRecursive() throws Exception {

RemoteIterator<LocatedFileStatus> files = fileSystem.listFiles(

new Path("/hdfsapi"), true);

while (files.hasNext()) {

LocatedFileStatus file = files.next();

printFileStatus(file);

}

}

// View file block information

@Test

public void getFileBlockLocations() throws Exception {

FileStatus fileStatus = fileSystem.getFileStatus(

new Path("/hdfsapi/test/a.txt"));

BlockLocation[] blocks = fileSystem.getFileBlockLocations(fileStatus,

0, fileStatus.getLen());

for (BlockLocation block : blocks) {

for (String name : block.getNames()) {

System.out.println(name + ":" + block.getOffset() + ":" +

block.getLength() + ":" + java.util.Arrays.toString(block.getHosts()));

}

}

}

// Delete a file

@Test

public void delete() throws Exception {

fileSystem.delete(new Path("/hdfsapi/local.txt"), true);

}

@After

public void tearDown(){

configuration=null;

fileSystem=null;

System.out.println("=======tearDown=======");

}

// public static void main(String[] args) throws Exception {

// Configuration configuration =new Configuration();

// FileSystem fileSystem= FileSystem.get(new URI("hdfs://hadoop000:8020"), configuration, "hadoop");

// Path path= new Path("/hdfsapi/test");

// boolean result= fileSystem.mkdirs(path);

// System.out.println(result);

// }

}