1. Official documentation

https://docs.ceph.com/en/latest/

http://docs.ceph.org.cn/rbd/rbd/

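2. Block storage

Ceph block storage is called RBD (RADOS Block Device). A block device is an ordered sequence of bytes, and RBD is the most commonly used of Ceph's three storage types (block, file, and object). Because RBD is built on RADOS, it relies on RADOS features such as snapshots, replication, and consistency to provide snapshots, clones, and backups. RBD images are thin-provisioned and can be resized, and their data is striped across multiple OSDs in the Ceph cluster.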

2.1 Creating a pool

Official documentation: http://docs.ceph.org.cn/rados/operations/pools/

# 1. List the pools

[root@node1 ceph-deploy]# ceph osd lspools

# 2. Create a pool first (name ceph-demo, pg_num 64, pgp_num 64; the pool is replicated and the replica count defaults to 3)

[root@node1 ceph-deploy]# ceph osd pool create ceph-demo 64 64

pool 'ceph-demo' created

# 3. List the pools again

[root@node1 ceph-deploy]# ceph osd lspools

1 ceph-demo

# 4. Check the PG count, PGP count, and replica count

[root@node1 ceph-deploy]# ceph osd pool get ceph-demo pg_num

pg_num: 64

[root@node1 ceph-deploy]# ceph osd pool get ceph-demo pgp_num

pgp_num: 64

[root@node1 ceph-deploy]# ceph osd pool get ceph-demo size

size: 3

# 5. Check the CRUSH rule (the placement algorithm the pool uses)

[root@node1 ceph-deploy]# ceph osd pool get ceph-demo crush_rule

crush_rule: replicated_rule

# 6. Use set to change a value

[root@node1 ceph-deploy]# ceph osd pool set ceph-demo pg_num 128

set pool 1 pg_num to 128

[root@node1 ceph-deploy]# ceph osd pool set ceph-demo pgp_num 128

set pool 1 pgp_num to 128

# 7. Check pg_num and pgp_num after the change

[root@node1 ceph-deploy]# ceph osd pool get ceph-demo pg_num

pg_num: 128

[root@node1 ceph-deploy]# ceph osd pool get ceph-demo pgp_num

pgp_num: 128
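
A common rule of thumb for picking pg_num is to multiply the number of OSDs by a target PG count per OSD, divide by the replica count, and round up to the next power of two. The sketch below assumes a target of 100 PGs per OSD, which is a widely quoted guideline and not something this walkthrough states; `suggest_pg_num` is a hypothetical helper name. For a cluster with 3 OSDs and 3 replicas it yields 128, which happens to be the value set in step 6.

```shell
# Rule-of-thumb PG count: (OSDs * target PGs per OSD) / replica count,
# rounded up to the next power of two.
# Assumption: the default target of 100 PGs per OSD is a general guideline,
# not a value taken from this walkthrough.
suggest_pg_num() (
    osds=$1
    size=$2
    target=${3:-100}
    raw=$(( osds * target / size ))
    pg=1
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
)

suggest_pg_num 3 3    # 3 OSDs, 3 replicas: prints 128
suggest_pg_num 10 3   # 10 OSDs, 3 replicas: prints 512
```

Treat the result as a starting point only. Recent Ceph releases can also adjust pg_num automatically, so a manual value may not be needed at all.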

2.2 Creating block storage images

Official documentation: http://docs.ceph.org.cn/rbd/rados-rbd-cmds/

# 1. Create an image (either of the two forms works): -p gives the pool name, --image gives the image name

[root@node1 ~]# rbd create -p ceph-demo --image rbd-demo.img --size 1G

[root@node1 ~]# rbd create ceph-demo/rbd-demo1.img --size 1G

# 2. List the images

[root@node1 ~]# rbd -p ceph-demo ls

rbd-demo.img

rbd-demo1.img

# 3. Show the details of one image (the 1 GiB image is split into 256 objects of 4 MiB each)

[root@node1 ~]# rbd info ceph-demo/rbd-demo.img

rbd image 'rbd-demo.img':

size 1 GiB in 256 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 14a48cb7303ce

block_name_prefix: rbd_data.14a48cb7303ce

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten # every feature except layering is disabled later

op_features:

flags:

create_timestamp: Sun Jan 24 14:13:37 2021

access_timestamp: Sun Jan 24 14:13:37 2021

modify_timestamp: Sun Jan 24 14:13:37 2021
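
The numbers in this output can be checked with plain shell arithmetic. The sketch below derives the object size from `order 22`, the object count for a 1 GiB image, and the name of the RADOS object that would hold a given byte offset. It assumes the default striping (each 4 MiB extent maps to one object) and the format 2 naming scheme of `<block_name_prefix>.<16-digit hex object number>`; `object_for_offset` is a hypothetical helper name.

```shell
# order 22 means each object is 2^22 bytes (4 MiB).
order=22
object_size=$(( 1 << order ))
image_size=$(( 1024 * 1024 * 1024 ))   # 1 GiB

echo "object size:  $object_size bytes"                # 4194304
echo "object count: $(( image_size / object_size ))"   # 256

# Name of the object holding a byte offset.
# Assumptions: default striping and format 2 object naming.
object_for_offset() {
    printf '%s.%016x\n' "$1" $(( $2 / object_size ))
}

# Offset 10 MiB falls into object number 2.
object_for_offset rbd_data.14a48cb7303ce $(( 10 * 1024 * 1024 ))
```

Because the image is thin-provisioned, an object only exists in the pool once data has been written to its extent, so listing the pool with `rados -p ceph-demo ls` would normally show far fewer than 256 data objects for a fresh image.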

# 4. Delete an image

[root@node1 ~]# rbd rm ceph-demo/rbd-demo1.img

Removing image: 100% complete...done.

2.3 Using a block storage image

# 1. List the images in the pool

[root@node1 ~]# rbd list ceph-demo

rbd-demo.img

# 2. Mapping the image directly fails because the kernel RBD client does not support some of the image's features

[root@node1 ~]# rbd map ceph-demo/rbd-demo.img

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable ceph-demo/rbd-demo.img object-map fast-diff deep-flatten".

In some cases useful info is found in syslog - try "dmesg | tail".

rbd: map failed: (6) No such device or address

# 3. Disable the unsupported features

[root@node1 ~]# rbd feature disable ceph-demo/rbd-demo.img deep-flatten

[root@node1 ~]# rbd feature disable ceph-demo/rbd-demo.img fast-diff

[root@node1 ~]# rbd feature disable ceph-demo/rbd-demo.img object-map

[root@node1 ~]# rbd feature disable ceph-demo/rbd-demo.img exclusive-lock
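
The list of features to disable can be derived from the `features:` line of `rbd info` and not typed by hand. The sketch below is plain text processing under the assumption that only `layering` should be kept, as in this walkthrough; `features_to_disable` is a hypothetical helper name, and the feature string is copied from the output shown earlier.

```shell
# Print every feature from the comma-separated list $1 that is not in the
# space-separated keep list $2.
features_to_disable() (
    keep=" $2 "
    out=""
    for f in $(printf '%s' "$1" | tr ',' ' '); do
        case "$keep" in
            *" $f "*) ;;               # feature is on the keep list
            *) out="$out $f" ;;        # feature must be disabled
        esac
    done
    echo "${out# }"
)

features="layering, exclusive-lock, object-map, fast-diff, deep-flatten"
features_to_disable "$features" "layering"
# prints: exclusive-lock object-map fast-diff deep-flatten
```

The error message from `rbd map` already shows that `rbd feature disable` accepts several feature names in one call, so the printed list could be appended to a single `rbd feature disable ceph-demo/rbd-demo.img` command.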

# 4. Check that the features were disabled

[root@node1 ~]# rbd info ceph-demo/rbd-demo.img

rbd image 'rbd-demo.img':

size 1 GiB in 256 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 14a48cb7303ce

block_name_prefix: rbd_data.14a48cb7303ce

format: 2

features: layering # once only layering is left, the image can be mapped

op_features:

flags:

create_timestamp: Sun Jan 24 14:13:37 2021

access_timestamp: Sun Jan 24 14:13:37 2021

modify_timestamp: Sun Jan 24 14:13:37 2021

# 5. Map the image again

[root@node1 ~]# rbd map ceph-demo/rbd-demo.img

/dev/rbd0

# 6. List the mapped devices

[root@node1 ~]# rbd device list

id pool namespace image snap device

0 ceph-demo rbd-demo.img - /dev/rbd0 (this behaves like a local disk, so it can be partitioned and formatted)

# 7. fdisk shows the details of the device

[root@node1 ~]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 1073 MB, 1073741824 bytes, 2097152 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

# 8. Format it, for example as ext4

[root@node1 ~]# mkfs.ext4 /dev/rbd0

# 9. Then mount it

[root@node1 ~]# mkdir /mnt/rbd-demo

[root@node1 ~]# mount /dev/rbd0 /mnt/rbd-demo

# 10. Check with df

[root@node1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/rbd0 976M 2.6M 907M 1% /mnt/rbd-demo
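
Steps 5 through 9 can be collected into one small script. The sketch below is a dry run by design: every command is printed, not executed, because `mkfs.ext4` destroys any data already on the device. The `run` and `attach_image` helpers are hypothetical names, and the device path is passed in as an argument on the assumption that `rbd map` reported `/dev/rbd0` as it did above.

```shell
# DRY_RUN=1 prints each command; set it to 0 only on a machine where
# formatting the device is really intended.
DRY_RUN=1

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Map an image, create an ext4 filesystem on it, and mount it.
# $1 = pool/image, $2 = device reported by rbd map, $3 = mount point
attach_image() {
    spec=$1
    dev=$2
    mnt=$3
    run rbd map "$spec"
    run mkfs.ext4 "$dev"
    run mkdir -p "$mnt"
    run mount "$dev" "$mnt"
}

attach_image ceph-demo/rbd-demo.img /dev/rbd0 /mnt/rbd-demo
```

In a real run the device name should be taken from the output of `rbd map`, since it is not guaranteed to be `/dev/rbd0` when other images are already mapped.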

2.4 Expanding block storage

# 1. Use the image created earlier

[root@node1 ~]# rbd -p ceph-demo ls

rbd-demo.img

# 2. Show its details

[root@node1 ~]# rbd -p ceph-demo info --image rbd-demo.img

rbd image 'rbd-demo.img':

size 1 GiB in 256 objects (currently 1 GiB)

order 22 (4 MiB objects)

snapshot_count: 0

id: 14a48cb7303ce

block_name_prefix: rbd_data.14a48cb7303ce

format: 2

features: layering

op_features:

flags:

create_timestamp: Sun Jan 24 14:13:37 2021

access_timestamp: Sun Jan 24 14:13:37 2021

modify_timestamp: Sun Jan 24 14:13:37 2021

# 3. Grow the image (shrinking is also possible but not recommended, because it can discard data)

[root@node1 ~]# rbd resize ceph-demo/rbd-demo.img --size 2G

Resizing image: 100% complete...done.

# 4. Check after the resize

[root@node1 ~]# rbd -p ceph-demo info --image rbd-demo.img

rbd image 'rbd-demo.img':

size 2 GiB in 512 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 14a48cb7303ce

block_name_prefix: rbd_data.14a48cb7303ce

format: 2

features: layering

op_features:

flags:

create_timestamp: Sun Jan 24 14:13:37 2021

access_timestamp: Sun Jan 24 14:13:37 2021

modify_timestamp: Sun Jan 24 14:13:37 2021

# 5. The block device is now larger, but the mounted filesystem does not grow automatically

[root@node1 ~]# fdisk -l | grep rbd0

Disk /dev/rbd0: 2147 MB, 2147483648 bytes, 4194304 sectors

[root@node1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

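/dev/rbd0 976M 2.6M 907M 1% /mnt/rbd-demo # still about 1 GiB here

# 6. Grow the filesystem (note: partitioning this kind of disk is not recommended, and the same goes for cloud disks; add more disks when more volumes are needed)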

[root@node1 ~]# blkid

/dev/sr0: UUID="2018-11-25-23-54-16-00" LABEL="CentOS 7 x86_64" TYPE="iso9660" PTTYPE="dos"

/dev/sdb: UUID="k4g1pw-rOvV-NG7w-ajnZ-qipH-kXwq-h0jY0o" TYPE="LVM2_member"

/dev/sda1: UUID="ccb430ea-66c9-4c91-a4b4-ba870ca15943" TYPE="xfs"

/dev/sda2: UUID="NegVJw-3XZn-BJeZ-NfKW-VROQ-roSa-LcKgoy" TYPE="LVM2_member"

/dev/mapper/centos-root: UUID="59c5d6b6-e34d-4149-b28a-8a3b9c32536d" TYPE="xfs"

/dev/sdc: UUID="h65OWm-ELDd-R5pO-HaQ0-Ejjs-cUWn-8Ejcpq" TYPE="LVM2_member"

/dev/mapper/centos-swap: UUID="25f54a98-e472-4438-9641-eac952a46e3e" TYPE="swap"

/dev/rbd0: UUID="911aadb8-bbf4-48d2-a62d-d86886af79dc" TYPE="ext4"

# Grow it

[root@node1 ~]# resize2fs /dev/rbd0
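
`resize2fs` only works for ext2/ext3/ext4 filesystems. The sketch below picks the grow command from the filesystem type, such as the `TYPE` value that `blkid` reports. It is an illustration under two assumptions: `grow_command` is a hypothetical helper name, and the XFS branch goes beyond this walkthrough, which only formats the image as ext4. Note that `xfs_growfs` takes the mount point, while `resize2fs` takes the device.

```shell
# Print the command that grows a filesystem to fill its device.
# $1 = filesystem type, $2 = device, $3 = mount point
grow_command() {
    case "$1" in
        ext2|ext3|ext4) echo "resize2fs $2" ;;
        xfs)            echo "xfs_growfs $3" ;;
        *)              echo "unsupported filesystem: $1" >&2; return 1 ;;
    esac
}

grow_command ext4 /dev/rbd0 /mnt/rbd-demo   # prints: resize2fs /dev/rbd0
grow_command xfs  /dev/rbd0 /mnt/rbd-demo   # prints: xfs_growfs /mnt/rbd-demo
```

On a live system the type could be read with `blkid -o value -s TYPE /dev/rbd0` and passed in as the first argument.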

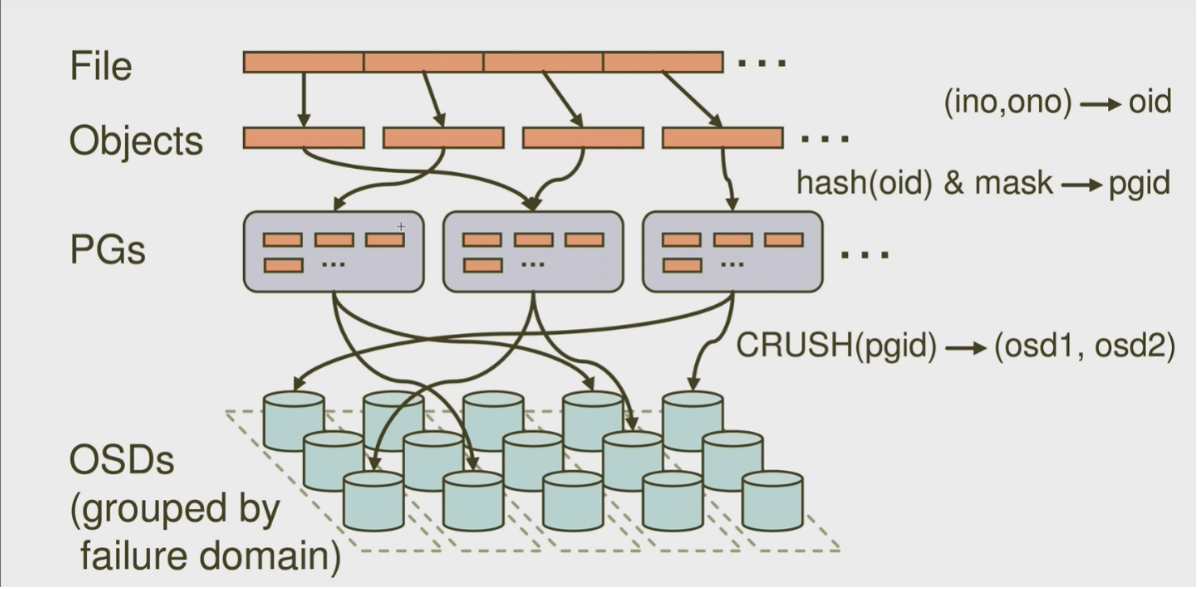

2.5 RBD data write flow

2.6 Troubleshooting health warnings

# 1. Spot the problem

[root@node1 ~]# ceph -s

cluster:

id: 081dc49f-2525-4aaa-a56d-89d641cef302

health: HEALTH_WARN

application not enabled on 1 pool(s)

services:

mon: 3 daemons, quorum node1,node2,node3 (age 3h)

mgr: node2(active, since 3h), standbys: node3, node1

osd: 3 osds: 3 up (since 3h), 3 in (since 13h)

data:

pools: 1 pools, 128 pgs

objects: 22 objects, 38 MiB

usage: 3.1 GiB used, 57 GiB / 60 GiB avail

pgs: 128 active+clean

# 2. Look at the details with ceph health detail

[root@node1 ~]# ceph health detail

HEALTH_WARN application not enabled on 1 pool(s)

POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)

application not enabled on pool 'ceph-demo' # this line names the affected pool

use 'ceph osd pool application enable <pool-name> <app-name>', where <app-name> is 'cephfs', 'rbd', 'rgw', or freeform for custom applications. # this line tells you how to fix it (tag the pool with the application type that uses it)

# 3. Run the fix

[root@node1 ~]# ceph osd pool application enable ceph-demo rbd

enabled application 'rbd' on pool 'ceph-demo'

# 4. Check again

[root@node1 ~]# ceph -s

cluster:

id: 081dc49f-2525-4aaa-a56d-89d641cef302

health: HEALTH_OK
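
When several pools trigger this warning, the pool names can be pulled out of the `ceph health detail` text without reading it by hand. The sketch below is a plain `sed` filter run against a sample copied from the output above; `pools_without_application` is a hypothetical helper name, and the filter assumes the message wording stays the same as shown in this walkthrough.

```shell
# Read `ceph health detail` text on stdin and print the name of every pool
# that has no application enabled, one per line.
pools_without_application() {
    sed -n "s/.*application not enabled on pool '\([^']*\)'.*/\1/p"
}

# Sample text copied from the health detail output shown earlier.
sample="HEALTH_WARN application not enabled on 1 pool(s)
POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)
    application not enabled on pool 'ceph-demo'"

printf '%s\n' "$sample" | pools_without_application   # prints: ceph-demo
```

On a live cluster the same filter could read from `ceph health detail` directly, and each printed name could then be passed to `ceph osd pool application enable <pool-name> rbd`, provided every listed pool really is used for RBD.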