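Master components

kube-apiserver

The single entry point to the Kubernetes API and the coordinator of all other components. It exposes a RESTful API; every create, delete, update, query, and watch operation on resource objects goes through the API server, which then persists the result to etcd.

kube-controller-manager

Runs the cluster's routine background tasks. Each resource type has its own controller, and the controller manager is responsible for managing those controllers.

kube-scheduler

Selects a Node for each newly created Pod according to the scheduling algorithm. It can be deployed anywhere: on the same node as the other master components or on a different one.

etcd

A distributed key-value store that holds the cluster state, such as Pod and Service objects.

Node components

kubelet

The kubelet is the master's agent on each Node. It manages the lifecycle of the containers running on that machine: creating containers, mounting Pod volumes, downloading Secrets, reporting container and node status, and so on. The kubelet turns each Pod into a set of containers.

kube-proxy

Implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.

docker or rocket (rkt)

The container engine that runs the containers.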

Recommended server hardware

| Environment | Role | CPU | Memory | Disk |
| --- | --- | --- | --- | --- |
| Lab | K8s master/node | 2 cores+ | 2 GB+ | |
| Test | K8s-master | 2 cores | 4 GB | 20 GB |
| Test | K8s-node | 4 cores | 8 GB | 20 GB |
| Production | K8s-master | 8 cores | 16 GB | 100 GB |
| Production | K8s-node | 16 cores | 64 GB | 500 GB |

More resources are always better, but size the machines according to your actual workload.

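Single-master server plan

| Role | IP | Components |
| --- | --- | --- |
| k8s-master-1 | 192.168.10.160 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| k8s-node-1 | 192.168.10.161 | kubelet, kube-proxy, docker, etcd |
| k8s-node-2 | 192.168.10.162 | kubelet, kube-proxy, docker, etcd |

Etcd is a distributed key-value store, and Kubernetes uses it for all of its data, so an etcd database has to be prepared first. To avoid a single point of failure, deploy etcd as a cluster. This guide uses three machines, which tolerates the failure of one; a five-machine cluster tolerates the failure of two.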
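The fault-tolerance figures above follow from etcd's majority (quorum) rule: a cluster of n members needs floor(n/2)+1 of them alive, so it tolerates floor((n-1)/2) failures. A minimal sketch of that arithmetic; the function names are illustrative and not part of etcd:

```shell
# Quorum arithmetic for an etcd cluster of N members.
# etcd_quorum and etcd_fault_tolerance are hypothetical helper names.

etcd_quorum() {
  # Majority of $1 members.
  echo $(( $1 / 2 + 1 ))
}

etcd_fault_tolerance() {
  # Members that may fail while a majority survives.
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

A four-member cluster tolerates the same single failure as a three-member one, which is why odd cluster sizes are the usual choice.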

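| Node name | IP |
| --- | --- |
| etcd-1 | 192.168.10.160 |
| etcd-2 | 192.168.10.161 |
| etcd-3 | 192.168.10.162 |

Note: to save machines, etcd shares hosts with the K8s nodes here. It can also be deployed outside the K8s cluster, as long as the apiserver can reach it.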

System environment

[root@localhost ~]# cat /etc/redhat-release
CentOS Linux release 7.8.2003 (Core)
[root@localhost ~]# uname -a
Linux localhost 3.10.0-1127.18.2.el7.x86_64 #1 SMP Sun Jul 26 15:27:06 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

Part 1: Basic system preparation

1. Time synchronization

echo "#time sync by fage at 2019-7-22" >>/var/spool/cron/root

echo "*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com >/dev/null 2>&1" >>/var/spool/cron/root

systemctl restart crond.service

2. Disable the firewall and SELinux

systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

3. Set the hostnames

On the master:

hostname k8s-master-1
echo "k8s-master-1" >/etc/hostname

On node 1:

hostname k8s-node-1
echo "k8s-node-1" >/etc/hostname

On node 2:

hostname k8s-node-2
echo "k8s-node-2" >/etc/hostname

4. Update the hosts file

cat >/etc/hosts <<EOF
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.10.160 k8s-master-1
192.168.10.161 k8s-node-1
192.168.10.162 k8s-node-2
EOF

5. Disable swap on the nodes (if swap stays enabled, the kubelet has to be configured explicitly to tolerate it)

swapoff -a
sed -i "s@/dev/mapper/centos-swap swap@#/dev/mapper/centos-swap swap@g" /etc/fstab

6. Pass bridged IPv4 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system # apply the settings

Part 2: Deploy the etcd cluster

2.1 Prepare the cfssl certificate tools

Run this on any one server; the master node is used here.

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

2.2 Generate the etcd certificates

2.2.1 Self-signed certificate authority (CA)

Create the working directory

mkdir -p ~/TLS/{etcd,k8s} && cd ~/TLS/etcd

Create the self-signed CA

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

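Generate the CA certificate and list the result

cfssl gencert -initca ca-csr.json | cfssljson -bare ca - && ls *pem

2.2.2 Issue the etcd HTTPS certificate with the self-signed CA

Create the certificate signing request file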

cat > server-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"192.168.10.160",

"192.168.10.161",

"192.168.10.162",

"192.168.10.163"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

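Note: the IPs in the hosts field above are the cluster-internal communication IPs of all etcd nodes; none of them may be missing. To make later expansion easier, you can add a few reserved IPs.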
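Because a missing IP only shows up later as a TLS error, it can help to check the request file before signing it. The following is a small sketch, not part of cfssl: `check_csr_hosts` is a hypothetical helper that does a plain-text match on each quoted IP, so it assumes the simple JSON layout written above.

```shell
# check_csr_hosts FILE IP... : report any IP missing from the CSR file.
# Hypothetical helper; matches the quoted IP as plain text, so
# "192.168.10.16" does not match "192.168.10.160".
check_csr_hosts() {
  csr_file=$1
  shift
  missing=0
  for ip in "$@"; do
    if ! grep -qF "\"$ip\"" "$csr_file"; then
      echo "missing from $csr_file: $ip"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (run in ~/TLS/etcd):
#   check_csr_hosts server-csr.json 192.168.10.160 192.168.10.161 192.168.10.162
```

The function returns a non-zero status when any address is absent, so it can gate the `cfssl gencert` step in a script.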

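Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
ls server*pem

2.2.3 Deploy the etcd cluster

The following steps are performed on the master (node 1). To keep things simple, everything generated there is copied to node 2 and node 3 afterwards.

1. Create the working directory and unpack the binary package

Package download: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz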

mkdir -p /opt/etcd/{bin,cfg,ssl}

tar xf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

2. Create the etcd configuration file

cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.160:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.160:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.160:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.160:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.160:2380,etcd-2=https://192.168.10.161:2380,etcd-3=https://192.168.10.162:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

ETCD_NAME: node name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new creates a new cluster, existing joins an existing one
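Only the member name and the four URLs that carry the local IP differ between nodes; the cluster list, token, and state stay the same. As a sketch of that split, the hypothetical function below prints an etcd.conf for any member of the three-node layout used in this guide (the function name is an assumption, not an etcd tool):

```shell
# render_etcd_conf NAME IP : print an etcd.conf for one member.
# Hypothetical helper; the member list is the three-node layout of this guide.
render_etcd_conf() {
  name=$1
  ip=$2
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.160:2380,etcd-2=https://192.168.10.161:2380,etcd-3=https://192.168.10.162:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: print the file for the second member.
render_etcd_conf etcd-2 192.168.10.161
```

On a node, the output could be redirected to /opt/etcd/cfg/etcd.conf instead of editing the copied file by hand.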

3. Manage etcd with systemd

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \\
--cert-file=/opt/etcd/ssl/server.pem \\
--key-file=/opt/etcd/ssl/server-key.pem \\
--peer-cert-file=/opt/etcd/ssl/server.pem \\
--peer-key-file=/opt/etcd/ssl/server-key.pem \\
--trusted-ca-file=/opt/etcd/ssl/ca.pem \\
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \\
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

4. Copy the certificates generated earlier

On the master, copy the certificates to the path referenced in the unit file:

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

5. Start etcd and enable it at boot

systemctl daemon-reload && systemctl start etcd && systemctl enable etcd

Because a single member cannot form a quorum, this start command may block or report a timeout on the first node until the other members are started in step 6; that is expected.

6. Copy everything generated on node 1 to node 2 and node 3

scp -r /opt/etcd/ root@192.168.10.161:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.10.161:/usr/lib/systemd/system/
scp -r /opt/etcd/ root@192.168.10.162:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.10.162:/usr/lib/systemd/system/

Then, on node 2 and node 3, edit etcd.conf and change the node name and the server IP. Every member must use its own name and address, so double-check each file (the trailing comments below only mark what to change).

vi /opt/etcd/cfg/etcd.conf

#[Member]
ETCD_NAME="etcd-1" # change this: etcd-2 on node 2, etcd-3 on node 3
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.160:2380" # change to the current server's IP
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.160:2379" # change to the current server's IP
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.160:2380" # change to the current server's IP
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.160:2379" # change to the current server's IP
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.160:2380,etcd-2=https://192.168.10.161:2380,etcd-3=https://192.168.10.162:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

Start etcd and enable it at boot on all the other nodes

systemctl daemon-reload && systemctl start etcd && systemctl enable etcd

7. Check the etcd cluster status

ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
--cacert=/opt/etcd/ssl/ca.pem \
--cert=/opt/etcd/ssl/server.pem \
--key=/opt/etcd/ssl/server-key.pem \
--endpoints="https://192.168.10.160:2379,https://192.168.10.161:2379,https://192.168.10.162:2379" \
endpoint health

Output:

https://192.168.10.162:2379 is healthy: successfully committed proposal: took = 13.108591ms
https://192.168.10.160:2379 is healthy: successfully committed proposal: took = 13.436323ms
https://192.168.10.161:2379 is healthy: successfully committed proposal: took = 15.027817ms

If you see output like the above, the cluster has been deployed successfully. If something is wrong, check the logs first: /var/log/messages or journalctl -u etcd

Part 3: Install Docker

Download: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

Docker is needed on every node. A binary install is used here; installing with yum works just as well.

1. Unpack the binary package

tar xf docker-19.03.9.tgz
mv docker/* /usr/bin

2. Manage docker with systemd

cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

3. Create the configuration file

registry-mirrors points at the Alibaba Cloud registry mirror.

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF
{
"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF

Copy the files to the other nodes

cd /usr/bin/
scp -r /usr/lib/systemd/system/docker.service root@192.168.10.161:/usr/lib/systemd/system/
scp -r containerd containerd-shim docker dockerd docker-init docker-proxy runc root@192.168.10.161:/usr/bin/
scp -r /etc/docker root@192.168.10.161:/etc/
scp -r /usr/lib/systemd/system/docker.service root@192.168.10.162:/usr/lib/systemd/system/
scp -r containerd containerd-shim docker dockerd docker-init docker-proxy runc root@192.168.10.162:/usr/bin/
scp -r /etc/docker root@192.168.10.162:/etc/

4. Start Docker and enable it at boot (on every node)

systemctl daemon-reload && systemctl start docker && systemctl enable docker

Part 4: Deploy the Master Node (all steps on the master)

4.1 Generate the kube-apiserver certificates

1. Self-signed certificate authority (CA)

cd /root/TLS/k8s

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
ls *pem
ca-key.pem ca.pem

2. Issue the kube-apiserver HTTPS certificate with the self-signed CA

Create the certificate signing request file

cd /root/TLS/k8s

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.10.160",

"192.168.10.161",

"192.168.10.162",

"192.168.10.163",

"192.168.10.164",

"192.168.10.165",

"192.168.10.166",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

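Note: the IPs in the hosts field above are the IPs of all Master, load balancer (LB), and VIP addresses; none of them may be missing. To make later expansion easier, you can add a few reserved IPs.

Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
ls server*pem
server-key.pem server.pem

4.2 Download the binaries from GitHub

Release notes and download links:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#downloads-for-v1186

Note: the page lists many packages. Downloading the server package is enough, because it contains the binaries for both the Master and the Worker Node. This guide uses version 1.18.6:

https://storage.googleapis.com/kubernetes-release/release/v1.18.6/kubernetes-server-linux-amd64.tar.gz

4.3 Unpack the binary package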

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

tar xf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

4.4 Deploy kube-apiserver

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=https://192.168.10.160:2379,https://192.168.10.161:2379,https://192.168.10.162:2379 \\
--bind-address=192.168.10.160 \\
--secure-port=6443 \\
--advertise-address=192.168.10.160 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
EOF

Note: in each \\ above, the first backslash is an escape character and the second is the line-continuation character. The escape is needed so that the heredoc (EOF) writes a literal backslash into the file and the line breaks are preserved.
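The escaping rule in the note can be checked on a throwaway file. This sketch (the variable DEMO_OPTS and the temp file are illustrative only) shows that inside an unquoted heredoc `\\` is written as a single backslash, `\$NAME` is written as a literal `$NAME`, and an unescaped `$NAME` is expanded by the shell at write time:

```shell
# Write a small file through an unquoted heredoc and show what lands on disk.
# DEMO_OPTS and demo_file are illustrative names only.
demo_file=$(mktemp)
DEMO_OPTS="from-shell"

cat > "$demo_file" << EOF
OPTS="--v=2 \\
--secure-port=6443"
ExecStart=/bin/demo \$DEMO_OPTS
Expanded=[$DEMO_OPTS]
EOF

cat "$demo_file"
# Line 1 ends with a single backslash, line 3 keeps the literal $DEMO_OPTS,
# and line 4 contains the value the shell substituted: from-shell.
```

The same reasoning applies to the systemd unit files written with heredocs in this guide: variables such as the options variable on an ExecStart line have to be escaped, or the shell replaces them (usually with nothing) before the file is written.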

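--logtostderr: whether to log to stderr; set to false here so that logs are written to files under --log-dir

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster addresses

--bind-address: listen address

--secure-port: HTTPS port

--advertise-address: address advertised to the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC and Node authorization

--enable-bootstrap-token-auth: enable the TLS bootstrap mechanism

--token-auth-file: bootstrap token file

--service-node-port-range: default port range allocated to NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to access the kubelet

--tls-xxx-file: apiserver HTTPS certificate

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log settings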

2. Copy the certificates generated earlier

Copy the certificates to the path referenced in the configuration file:

cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

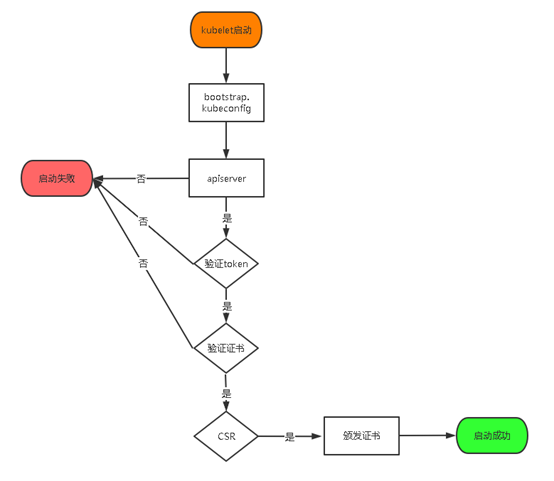

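3. Enable the TLS bootstrapping mechanism

TLS bootstrapping: once TLS authentication is enabled on the master's apiserver, the kubelet and kube-proxy on each Node must present a valid certificate signed by the CA in order to talk to kube-apiserver. With many Nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complicated. To simplify the process, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is signed dynamically on the apiserver side. This approach is strongly recommended on Nodes. At present it is used mainly for the kubelet; kube-proxy still gets a single certificate that we issue ourselves.

TLS bootstrapping workflow

Create the token file referenced in the configuration above

cat > /opt/kubernetes/cfg/token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF

Format: token,user name,UID,group

You can also generate your own token and substitute it: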

head -c 16 /dev/urandom | od -An -t x | tr -d ' '
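To tie the token command to the file format, here is a minimal sketch that generates a token and prints a complete token.csv record. Both function names are hypothetical, and the pipeline is a small variation of the one above (`-t x1` and stripping newlines as well as spaces) so that the result is always a single 32-character hex string:

```shell
# new_bootstrap_token : print 32 hex characters (16 random bytes).
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# token_csv_line TOKEN : print one record in the format
# token,user,UID,"group" used by this guide.
token_csv_line() {
  echo "$1,kubelet-bootstrap,10001,\"system:node-bootstrapper\""
}

token=$(new_bootstrap_token)
token_csv_line "$token"
```

If you replace the token, use the same value later in the TOKEN variable when generating bootstrap.kubeconfig.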

4. Manage the apiserver with systemd

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

5. Start the service and enable it at boot

systemctl daemon-reload && systemctl start kube-apiserver
systemctl enable kube-apiserver && systemctl status kube-apiserver

6. Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

4.5 Deploy kube-controller-manager

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF

--master: connect to the apiserver through the local insecure port 8080.

--leader-elect: when several instances of this component are running, elect a leader automatically (HA)

--cluster-signing-cert-file/--cluster-signing-key-file: the CA that automatically issues kubelet certificates; must be the same CA the apiserver uses

2. Manage controller-manager with systemd

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

3. Start the service and enable it at boot

systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager
systemctl status kube-controller-manager

4.6 Deploy kube-scheduler

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1"
EOF

--master: connect to the apiserver through the local insecure port 8080.

--leader-elect: when several instances of this component are running, elect a leader automatically (HA)

2. Manage the scheduler with systemd

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

3. Start the service and enable it at boot

systemctl daemon-reload
systemctl start kube-scheduler && systemctl enable kube-scheduler
systemctl status kube-scheduler

4. Check the cluster status

All components are now running. Use kubectl to check the status of the cluster components:

kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

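Output like the above means the Master components are running normally.

Part 5: Deploy the Worker Nodes

The following steps are still performed on the master, which therefore also acts as a Worker Node.

5.1 Create the working directory and copy the binaries

Create the working directory on every worker node

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the binaries from the master

cd kubernetes/server/bin
cp kubelet kube-proxy /opt/kubernetes/bin # local copy
scp -r kubelet kube-proxy root@192.168.10.161:/opt/kubernetes/bin/
scp -r kubelet kube-proxy root@192.168.10.162:/opt/kubernetes/bin/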

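5.2 Deploy the kubelet

1. Create the configuration file

cat > /opt/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=k8s-master \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=lizhenliang/pause-amd64:3.0"
EOF

--hostname-override: display name, unique within the cluster

--network-plugin: enable CNI

--kubeconfig: a path that does not exist yet; the file is generated automatically and later used to connect to the apiserver

--bootstrap-kubeconfig: used on first start to request a certificate from the apiserver

--config: configuration parameter file

--cert-dir: directory where the kubelet certificates are generated

--pod-infra-container-image: image of the container that manages the Pod network

2. Create the configuration parameter file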

cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

3. Generate the bootstrap.kubeconfig file

KUBE_APISERVER="https://192.168.10.160:6443" # apiserver IP:PORT

TOKEN="c47ffb939f5ca36231d9e3121a252940" # must match token.csv

Note: run the following commands one at a time, directly on the command line.

# Generate the kubelet bootstrap kubeconfig file

kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

kubectl config set-credentials "kubelet-bootstrap" \
--token=${TOKEN} \
--kubeconfig=bootstrap.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user="kubelet-bootstrap" \
--kubeconfig=bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Copy it to the configuration path

cp bootstrap.kubeconfig /opt/kubernetes/cfg

4. Manage the kubelet with systemd

cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

5. Start the service and enable it at boot

systemctl daemon-reload && systemctl start kubelet && systemctl enable kubelet

6. Approve the kubelet certificate request and join the cluster

List the kubelet certificate requests

kubectl get csr
NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-uCEGPOIiDdlLODKts8J658HrFq9CZ--K6M4G7bjhk8A 6m3s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

Approve the request

kubectl certificate approve node-csr-uCEGPOIiDdlLODKts8J658HrFq9CZ--K6M4G7bjhk8A
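With several nodes joining at once, copying each request name by hand gets tedious. As a sketch, the hypothetical filter below reads `kubectl get csr` output and prints only the names whose condition is Pending; it assumes the column layout shown above, with the condition in the last column.

```shell
# pending_csrs : read `kubectl get csr` output on stdin and print the names
# of requests whose last column is exactly Pending. Hypothetical helper.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master (needs a working kubectl, so it is left commented out):
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

Review the printed list before piping it into the approve command, since approving a request grants that node a client certificate.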

List the nodes

kubectl get node

Note: because the network plugin has not been deployed yet, the node shows as NotReady.

5.2 Deploy kube-proxy

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF

2. Create the configuration parameter file

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-master
clusterCIDR: 10.0.0.0/24
EOF

3. Generate the kube-proxy.kubeconfig file

Generate the kube-proxy certificate

# Switch to the working directory

cd ~/TLS/k8s

# Create the certificate signing request file

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ls kube-proxy*pem
kube-proxy-key.pem kube-proxy.pem

Generate the kubeconfig file

KUBE_APISERVER="https://192.168.10.160:6443"

kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
--client-certificate=./kube-proxy.pem \
--client-key=./kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Copy it to the path given in the configuration file

cp kube-proxy.kubeconfig /opt/kubernetes/cfg/

4. Manage kube-proxy with systemd

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

5. Start the service and enable it at boot

systemctl daemon-reload
systemctl start kube-proxy
systemctl enable kube-proxy
systemctl status kube-proxy

5.3 Deploy the CNI network

First prepare the CNI binaries:

Download: https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

Unpack the package into the default working directory

mkdir -p /opt/cni/bin
tar xf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

Deploy the CNI network

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml

The default image registry is hosted overseas and may be unreachable, so the sed command above switches the image to one on Docker Hub.

kubectl apply -f kube-flannel.yml

kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
kube-flannel-ds-amd64-2pc95 1/1 Running 0 72s

kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master Ready <none> 41m v1.18.6

Once the network plugin is deployed, the Node becomes Ready.

5.4 Authorize the apiserver to access the kubelet

cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml

5.5 Add new Worker Nodes (performed on the other nodes)

1. Copy the deployed Node files to the new node

On the master, copy the Worker Node files to the new node (192.168.10.161 is shown; do the same for 192.168.10.162)

scp -r /opt/kubernetes root@192.168.10.161:/opt/

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.10.161:/usr/lib/systemd/system

scp -r /opt/cni/ root@192.168.10.161:/opt/

scp /opt/kubernetes/ssl/ca.pem root@192.168.10.161:/opt/kubernetes/ssl

2. Delete the kubelet certificate and kubeconfig file

rm -f /opt/kubernetes/cfg/kubelet.kubeconfig
rm -f /opt/kubernetes/ssl/kubelet*

Note: these files are generated automatically after the certificate request is approved and are different for every Node, so they must be deleted and regenerated.

3. Change the hostname

On node 1:

sed -i "s#k8s-master#k8s-node-1#g" /opt/kubernetes/cfg/kubelet.conf
sed -i "s#k8s-master#k8s-node-1#g" /opt/kubernetes/cfg/kube-proxy-config.yml

On node 2:

sed -i "s#k8s-master#k8s-node-2#g" /opt/kubernetes/cfg/kubelet.conf
sed -i "s#k8s-master#k8s-node-2#g" /opt/kubernetes/cfg/kube-proxy-config.yml

4. Start the services and enable them at boot

systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
systemctl start kube-proxy
systemctl enable kube-proxy

5. On the master, approve the kubelet certificate requests of all new Nodes

kubectl get csr
NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro 89s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

kubectl certificate approve node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro

6. Check the Node status

kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master Ready <none> 65m v1.18.6
k8s-node1 Ready <none> 12m v1.18.6
k8s-node2 Ready <none> 81s v1.18.6

Repeat the same steps for Node2 (192.168.10.162). Remember to change the hostname!

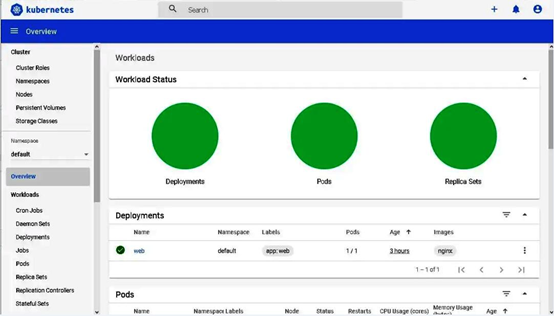

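Part 6: Deploy the Dashboard and CoreDNS

6.1 Deploy the Dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

By default the Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally:

vi recommended.yaml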

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

kubectl apply -f recommended.yaml

kubectl get pods,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-694557449d-z8gfb 1/1 Running 0 2m18s

pod/kubernetes-dashboard-9774cc786-q2gsx 1/1 Running 0 2m19s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.0.0.141 <none> 8000/TCP 2m19s

service/kubernetes-dashboard NodePort 10.0.0.239 <none> 443:30001/TCP 2m19s

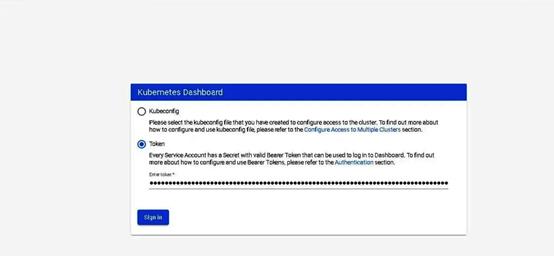

Access URL: https://NodeIP:30001

Create a service account and bind it to the default cluster-admin cluster role

kubectl create serviceaccount dashboard-admin -n kube-system # create the account

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin # grant permissions

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') # show the token

Log in to the Dashboard with the token from the output.

6.2 Deploy CoreDNS

CoreDNS resolves Service names inside the cluster. Download the manifest from the raw file URL (the blob page URL returns HTML rather than YAML), and make sure the clusterIP of the kube-dns Service in coredns.yaml matches the clusterDNS address (10.0.0.2) set in kubelet-config.yml.

wget https://raw.githubusercontent.com/dsalamancaMS/CoreDNSforKube/master/coredns.yaml

kubectl apply -f coredns.yaml

kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-5ffbfd976d-j6shb 1/1 Running 0 32s
kube-flannel-ds-amd64-2pc95 1/1 Running 0 38m
kube-flannel-ds-amd64-7qhdx 1/1 Running 0 15m
kube-flannel-ds-amd64-99cr8 1/1 Running 0 26m

DNS resolution test

kubectl run -it --rm dns-test --image=busybox:1.28.4 sh
If you don't see a command prompt, try pressing enter.
/ # nslookup kubernetes
Server: 10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes
Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

The single-master cluster deployment is now complete.