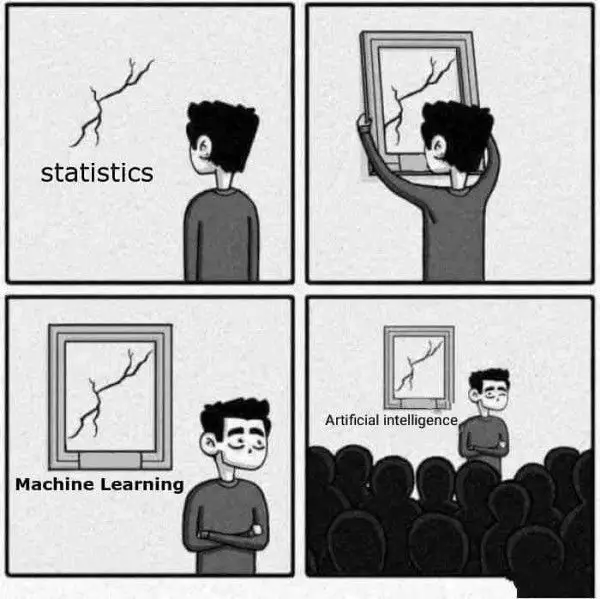

This meme has been all over social media lately, producing appreciative chuckles across the internet as the hype around deep learning begins to subside. The sentiment that machine learning is really nothing to get excited about, or that it’s just a repackaging of age-old statistical techniques, is becoming ubiquitous; the trouble is that it isn’t true.

I get it: it’s not fashionable to be part of the overly enthusiastic, hype-drunk crowd of deep learning evangelists. ML experts who in 2013 preached deep learning from the rooftops now use the term only with a hint of chagrin, preferring instead to downplay the power of modern neural networks lest they be associated with the scores of people who still seem to think that `import keras` is the leap for every hurdle, and that they, in knowing it, have some tremendous advantage over their competition.

Machine Learning = Representation + Evaluation + Optimization

Machine learning is a class of computational algorithms which iteratively “learn” an approximation to some function. Pedro Domingos, a professor of computer science at the University of Washington, laid out three components that make up a machine learning algorithm: representation, evaluation, and optimization.

Representation involves transforming inputs from one space into another, more useful space that can be more easily interpreted. Think of this in the context of a Convolutional Neural Network. Raw pixels are not useful for distinguishing a dog from a cat, so we transform them into a more useful representation (e.g., the logits that a softmax layer turns into class probabilities) which can be interpreted and evaluated.
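
To make the idea of a learned representation a little more concrete, here is a minimal sketch of the core operation in a convolutional layer, written in plain Python. The 4x4 image, the kernel values, and the helper name `conv2d_valid` are all made up for illustration; in a real CNN the kernel weights are learned rather than hand-picked.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1).

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A toy 4x4 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-picked vertical-edge detector (hypothetical weights).
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The raw pixels say nothing directly about where the edge is, while the feature map is nonzero only at the dark-to-bright boundary. Stacking many such learned transformations is what turns pixels into something a classifier can use.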

Evaluation is essentially the loss function. How effectively did your algorithm transform your data to a more useful space? How closely did your softmax output resemble your one-hot encoded labels (classification)? Did you correctly predict the next word in the unrolled text sequence (text RNN)? How far did your latent distribution diverge from a unit Gaussian (VAE)? These questions tell you how well your representation function is working; more importantly, they define what it will learn to do.
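
Two of the questions above can be written down directly as loss functions. The sketch below, in plain Python, shows softmax cross-entropy against a one-hot label and the KL divergence of a diagonal Gaussian from a unit Gaussian (the regularization term commonly used in a VAE). The function names and example numbers are illustrative assumptions, not taken from any particular library.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, one_hot):
    """Classification loss: negative log-probability of the true class."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs) if t > 0)


def kl_to_unit_gaussian(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over dimensions."""
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var)
    )


label = [1, 0, 0]  # one-hot: the true class is index 0

# Logits that favor the correct class give a lower loss...
loss_good = cross_entropy(softmax([2.0, 0.5, -1.0]), label)
# ...than logits that favor a wrong class.
loss_bad = cross_entropy(softmax([-1.0, 0.5, 2.0]), label)

# A latent distribution that already is a unit Gaussian has zero divergence.
kl_zero = kl_to_unit_gaussian([0.0, 0.0], [0.0, 0.0])
kl_shifted = kl_to_unit_gaussian([1.0, -1.0], [0.0, 0.0])
```

Swapping one of these functions for another changes what the same network is being asked to learn, which is the sense in which the evaluation component defines the task.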

Optimization is the last piece of the puzzle. Once you have the evaluation component, you can optimize the representation function in order to improve your evaluation metric. In neural networks, this usually means using some variant of stochastic gradient descent to update the weights and biases of your network according to some defined loss function. And voilà! You have the world’s best image classifier (at least, if you’re Geoffrey Hinton in 2012, you do).
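
As a rough illustration of how the three components fit together, the following sketch trains a one-variable linear model with plain stochastic gradient descent. Everything here is a simplifying assumption: the synthetic data drawn from `y = 2x + 1`, the squared-error loss, the learning rate, and the helper name `sgd_fit`. A real network has far more parameters, but the update loop has the same shape.

```python
import random


def sgd_fit(data, learning_rate=0.1, epochs=200, seed=0):
    """Fit y ~ w * x + b by per-sample stochastic gradient descent."""
    rng = random.Random(seed)
    samples = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(samples)  # visit the samples in a new random order
        for x, y in samples:
            # Representation: the model's prediction for this input.
            prediction = w * x + b
            # Evaluation: squared error, loss = (prediction - y) ** 2.
            error = prediction - y
            # Optimization: step against the gradient of the loss.
            w -= learning_rate * 2.0 * error * x
            b -= learning_rate * 2.0 * error
    return w, b


# Synthetic, noise-free data generated from y = 2x + 1 (made up for this demo).
data = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]

w, b = sgd_fit(data)
print(round(w, 3), round(b, 3))
```

Because the data are noise-free and the step size is small relative to the input range, the learned `w` and `b` should settle very close to 2 and 1.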

Regression Over 100 Million Variables — No Problem?

Let me also point out how deep nets differ from traditional statistical models in sheer scale. Deep neural networks are huge. The VGG-16 ConvNet architecture, for example, has approximately 138 million parameters. How do you think your average academic advisor would respond to a student wanting to perform a multiple regression over more than 100 million variables? The idea is ludicrous. That’s because training VGG-16 is not multiple regression; it’s machine learning.
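
The 138 million figure can be checked with a few lines of arithmetic. The sketch below assumes the standard VGG-16 layout (configuration D in the original VGG paper): thirteen 3x3 convolutional layers with biases, a 224x224 RGB input reduced to a 7x7x512 feature map by five pooling stages, and three fully connected layers ending in 1000 classes. The constant and function names are chosen here for illustration.

```python
# Output channels of the 13 convolutional layers, in order (3x3 kernels).
CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
# Output sizes of the 3 fully connected layers.
FC_SIZES = [4096, 4096, 1000]


def count_vgg16_parameters():
    """Count weights and biases in the assumed VGG-16 layout."""
    total = 0

    in_channels = 3  # RGB input
    for out_channels in CONV_CHANNELS:
        weights = 3 * 3 * in_channels * out_channels
        biases = out_channels
        total += weights + biases
        in_channels = out_channels

    in_features = 7 * 7 * 512  # flattened feature map after the last pooling
    for out_features in FC_SIZES:
        total += in_features * out_features + out_features
        in_features = out_features

    return total


total_parameters = count_vgg16_parameters()
print(f"{total_parameters:,}")  # 138,357,544
```

Most of those parameters sit in the first fully connected layer, which alone accounts for over 100 million of them.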

Unknown Words

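| Word | Meaning |
|---|---|
| glorified | made to seem more impressive than it really is |
| statistics | the science of collecting and analyzing numerical data |
| appreciative | showing approval or gratitude |
| ubiquitous | present everywhere; very common (/juːˈbɪkwɪtəs/) |
| zoomed out | adjusted to a wider view; seen from a broader perspective |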