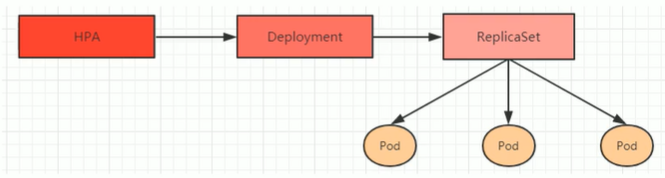

Horizontal Pod Autoscaler (HPA)

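We can scale Pods manually with the `kubectl scale` command, but that clearly does not fit Kubernetes' goals of automation and intelligence. Kubernetes aims to adjust the number of Pods automatically by monitoring how they are used, and that is why the HPA controller exists.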

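The HPA reads the utilization of each Pod and compares it with the target metric defined in the HPA. From that comparison it calculates how many replicas are needed and then adjusts the Pod count. Like the Deployment covered earlier, the HPA is itself a Kubernetes resource object. It tracks and analyzes the load of its target Pods to decide whether their replica count needs to change.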
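The replica calculation can be sketched as follows. This is a simplified model that assumes the core formula from the Kubernetes documentation, desired = ceil(current × currentUtilization / targetUtilization), plus the controller's default tolerance of 0.1. The function name `desiredReplicas` is hypothetical. The real controller also handles missing metrics, unready Pods and scaling rate limits.

```go
package main

import (
	"fmt"
	"math"
)

// tolerance mirrors the kube-controller-manager default
// (--horizontal-pod-autoscaler-tolerance=0.1): if current/target is within
// 10% of 1.0, the replica count is left unchanged.
const tolerance = 0.1

// desiredReplicas is a hypothetical helper applying the HPA core formula
//
//	desired = ceil(current * currentUtil / targetUtil)
//
// and clamping the result to [minReplicas, maxReplicas]. It ignores missing
// metrics, unready Pods and scale-up/scale-down rate limits.
func desiredReplicas(current int, currentUtil, targetUtil float64, minReplicas, maxReplicas int) int {
	ratio := currentUtil / targetUtil
	desired := current
	if math.Abs(ratio-1.0) > tolerance {
		desired = int(math.Ceil(float64(current) * ratio))
	}
	if desired < minReplicas {
		desired = minReplicas
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	return desired
}

func main() {
	// 1 replica at 188% CPU against a 3% target: ceil(62.67) = 63, capped at maxReplicas 10.
	fmt.Println(desiredReplicas(1, 188, 3, 1, 10))
	// 10 replicas at 2% against 3%: raw recommendation ceil(6.67) = 7.
	fmt.Println(desiredReplicas(10, 2, 3, 1, 10))
}
```

Take the numbers from the load test later in this article: 1 replica at 188% against a 3% target. The formula asks for 63 replicas, so it is `maxReplicas: 10` that actually bounds the result.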

Installing metrics-server (v0.3.6)

metrics-server collects resource usage (CPU and memory) across the cluster. The HPA relies on it for the CPU metrics it scales on.

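You can get metrics-server directly from its GitHub repository (kubernetes-sigs/metrics-server). If you download it yourself, make sure to pick the right version (v0.3.6 in this article).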


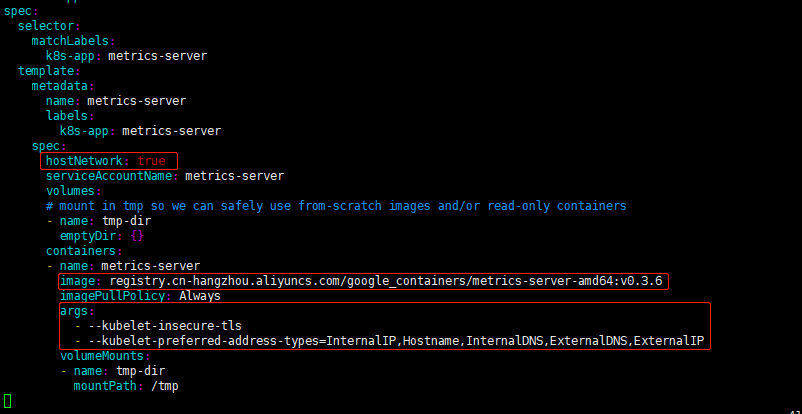

Before deploying, edit the metrics-server-deployment.yaml file:

```shell
cd metrics-server-0.3.6/deploy/1.8+/
vim metrics-server-deployment.yaml
```

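At the positions marked in the screenshot, add or change the following settings. `hostNetwork` belongs to the Pod spec (`spec.template.spec`), while `image` and `args` belong to the metrics-server container:

```yaml
hostNetwork: true
image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6
args:
- --kubelet-insecure-tls
- --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
```

Each of the two flags has a specific effect:

- `--kubelet-insecure-tls` skips verification of the kubelets' serving certificates, which is acceptable only in a test cluster.
- `--kubelet-preferred-address-types` sets the order of node address types that metrics-server tries when connecting to the kubelets, starting with InternalIP.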

After editing, install metrics-server:

```
[root@master 1.8+]# kubectl apply -f ./
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
```

Check the Pod created for metrics-server:

```
# kubectl get pod -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-59877c7fb4-t5qvj   1/1     Running   0          25h
calico-node-67xqv                          1/1     Running   0          150m
calico-node-8t2n5                          1/1     Running   0          25h
calico-node-jnst5                          1/1     Running   0          25h
coredns-7ff77c879f-9zmp4                   1/1     Running   0          27h
coredns-7ff77c879f-kbmqc                   1/1     Running   0          27h
etcd-master                                1/1     Running   0          27h
kube-apiserver-master                      1/1     Running   0          27h
kube-controller-manager-master             1/1     Running   0          27h
kube-proxy-255pm                           1/1     Running   0          27h
kube-proxy-7b2qg                           1/1     Running   0          150m
kube-proxy-xcd9f                           1/1     Running   0          27h
kube-scheduler-master                      1/1     Running   0          27h
metrics-server-5f55b696bd-rfxkv            1/1     Running   0          53s
```

The metrics-server Pod has been created and is running. Next, use `kubectl top` to check resource usage:

```
# kubectl top node && kubectl top pod -n kube-system
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   590m         7%     1524Mi          19%
node1    305m         3%     771Mi           21%
node2    308m         3%     506Mi           13%
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-59877c7fb4-t5qvj   2m           16Mi
calico-node-67xqv                          102m         47Mi
calico-node-8t2n5                          91m          85Mi
calico-node-jnst5                          90m          79Mi
coredns-7ff77c879f-9zmp4                   7m           14Mi
coredns-7ff77c879f-kbmqc                   10m          14Mi
etcd-master                                43m          33Mi
kube-apiserver-master                      125m         356Mi
kube-controller-manager-master             57m          48Mi
kube-proxy-255pm                           2m           31Mi
kube-proxy-7b2qg                           2m           16Mi
kube-proxy-xcd9f                           2m           20Mi
kube-scheduler-master                      8m           17Mi
metrics-server-5f55b696bd-rfxkv            2m           14Mi
```
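`kubectl top` reads these numbers from the Metrics API served by metrics-server (the `v1beta1.metrics.k8s.io` APIService in the apply output above). You can fetch the raw node metrics with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. The sketch below decodes only the fields it needs from such a response. The sample JSON and its values are hypothetical, and the struct types are illustrative rather than the official `k8s.io/metrics` types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// nodeMetrics models only the fields used here from a
// metrics.k8s.io/v1beta1 NodeMetrics object (illustrative type).
type nodeMetrics struct {
	Metadata struct {
		Name string `json:"name"`
	} `json:"metadata"`
	Usage map[string]string `json:"usage"` // quantities keyed by "cpu" and "memory"
}

// nodeMetricsList models the list returned by .../v1beta1/nodes.
type nodeMetricsList struct {
	Kind  string        `json:"kind"`
	Items []nodeMetrics `json:"items"`
}

// sample is a hypothetical response; real values will differ.
const sample = `{
  "kind": "NodeMetricsList",
  "apiVersion": "metrics.k8s.io/v1beta1",
  "items": [
    {"metadata": {"name": "master"}, "usage": {"cpu": "590123456n", "memory": "1560576Ki"}},
    {"metadata": {"name": "node1"}, "usage": {"cpu": "305000000n", "memory": "789504Ki"}}
  ]
}`

// parseNodeMetrics decodes a NodeMetricsList JSON document.
func parseNodeMetrics(data []byte) (nodeMetricsList, error) {
	var list nodeMetricsList
	err := json.Unmarshal(data, &list)
	return list, err
}

func main() {
	list, err := parseNodeMetrics([]byte(sample))
	if err != nil {
		panic(err)
	}
	for _, n := range list.Items {
		fmt.Printf("%s cpu=%s memory=%s\n", n.Metadata.Name, n.Usage["cpu"], n.Usage["memory"])
	}
}
```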

HPA

Now we use a Deployment to create an Nginx Pod and let the HPA scale it automatically. The manifest (hpa-demo.yaml) is as follows:

```yaml
apiVersion: apps/v1       # API version
kind: Deployment          # resource type
metadata:                 # metadata
  name: nginx             # name of the Deployment
spec:                     # detailed specification
  selector:               # selector: which Pods this controller manages
    matchLabels:          # label-matching rule
      app: nginx-pod
  template:               # template: new Pod replicas are created from it when there are too few
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx           # container name
        image: nginx:1.17.1   # image the container uses
        ports:
        - containerPort: 80   # port the container listens on
          protocol: TCP
        resources:            # resource requests; HPA CPU utilization is measured against them
          requests:
            cpu: "100m"       # 100m means 100 millicpu, i.e. 0.1 CPU
```
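A CPU request of `100m` is 100 millicores, i.e. 0.1 CPU, and the HPA measures CPU utilization against this value. The hypothetical helper below converts a few common CPU quantity forms to millicores: `100m`, plain core counts such as `0.1`, and nanocore values such as those metrics-server reports. It is a simplified sketch, not the full `resource.Quantity` parser from `k8s.io/apimachinery`.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// cpuToMillicores is a hypothetical, simplified converter for CPU quantities:
//
//	"100m"       -> 100       (millicores)
//	"0.1", "2"   -> 100, 2000 (whole cores)
//	"590123456n" -> 590.123456 (nanocores)
//
// Other Kubernetes quantity forms (exponents, "u", "k", ...) are not handled.
func cpuToMillicores(q string) (float64, error) {
	switch {
	case strings.HasSuffix(q, "m"):
		return strconv.ParseFloat(strings.TrimSuffix(q, "m"), 64)
	case strings.HasSuffix(q, "n"):
		v, err := strconv.ParseFloat(strings.TrimSuffix(q, "n"), 64)
		return v / 1e6, err
	default:
		v, err := strconv.ParseFloat(q, 64)
		return v * 1000, err
	}
}

func main() {
	for _, q := range []string{"100m", "0.1", "1", "590123456n"} {
		m, err := cpuToMillicores(q)
		if err != nil {
			fmt.Println(q, "error:", err)
			continue
		}
		fmt.Printf("%-12s = %.3f millicores (%.4f cores)\n", q, m, m/1000)
	}
}
```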

Create it (for example with `kubectl create -f hpa-demo.yaml`) and check the status:

```
# kubectl get pod
NAME                     READY   STATUS    RESTARTS   AGE
nginx-587f44948f-669b5   1/1     Running   0          26s

# kubectl get deployment
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   1/1     1            1           34s
```

Next, create a Service of type NodePort so that the Deployment is reachable from outside the cluster, which we need for the load test later:

```
# kubectl expose deployment nginx --name=nginx --type=NodePort --port=80 --target-port=80
service/nginx exposed

# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        27h
nginx        NodePort    10.103.32.192   <none>        80:30372/TCP   51s
```

Now create the HPA resource file (hpa.yaml):

```yaml
apiVersion: autoscaling/v1            # API version
kind: HorizontalPodAutoscaler         # resource type
metadata:                             # metadata
  name: pc-hpa                        # name of the HPA
spec:
  minReplicas: 1                      # minimum number of Pods
  maxReplicas: 10                     # maximum number of Pods
  targetCPUUtilizationPercentage: 3   # target CPU utilization, as % of the Pods' CPU request
  scaleTargetRef:                     # the Deployment this HPA controls
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
```

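After creating it (for example with `kubectl create -f hpa.yaml`), check the HPA status. The TARGETS column shows the current CPU utilization against the target: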

```
# kubectl get hpa
NAME     REFERENCE          TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
pc-hpa   Deployment/nginx   0%/3%     1         10        1          57s
```
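The sketch below is simplified. The function name is hypothetical, and it ignores Pods that the controller skips because they lack metrics or are not ready. It computes utilization as the total CPU usage of the target Pods divided by the total of their CPU requests, expressed as an integer percentage. With the 100m request above, the 3% target corresponds to roughly 3m of CPU per Pod. The 188% seen during the load test means about 188m.

```go
package main

import "fmt"

// utilizationPercent is a hypothetical helper: total CPU usage of the Pods
// divided by the total of their CPU requests, as an integer percentage.
// Both slices hold millicores, one entry per Pod, in the same order.
func utilizationPercent(usageMilli, requestMilli []int64) int64 {
	var usage, request int64
	for i := range usageMilli {
		usage += usageMilli[i]
		request += requestMilli[i]
	}
	if request == 0 {
		return 0 // no requests, no utilization to report
	}
	return usage * 100 / request
}

func main() {
	// One Pod with a 100m request using about 188m -> 188 (%).
	fmt.Println(utilizationPercent([]int64{188}, []int64{100}))
	// Hypothetical: four Pods with 100m requests using 14m in total -> 3 (%).
	fmt.Println(utilizationPercent([]int64{2, 4, 3, 5}, []int64{100, 100, 100, 100}))
}
```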

Next, prepare a small Go test that sends concurrent requests to the Service:

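```go
// Save as a file ending in _test.go (e.g. k8s_test.go) and run: go test -run Test_k8s -v
package loadtest

import (
	"fmt"
	"io"
	"net/http"
	"sync"
	"testing"
	"time"
)

func Test_k8s(t *testing.T) {
	// ip: the master node's IP; port: the NodePort exposed by the nginx Service
	const url = "http://192.168.209.148:30372"

	client := &http.Client{Timeout: 10 * time.Second}
	var wg sync.WaitGroup
	for i := 0; i < 10000; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			resp, err := client.Get(url)
			if err != nil {
				fmt.Println(err)
				return
			}
			// Drain and close the body so the connection is released.
			io.Copy(io.Discard, resp.Body)
			resp.Body.Close()
			fmt.Println(resp.Status)
		}()
	}
	wg.Wait() // wait until every request has completed
}
```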

Finally, open three terminal windows and watch how the HPA, the Deployment, and the Pods change while the load test runs.

HPA:

```
# kubectl get hpa -w
NAME     REFERENCE          TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
pc-hpa   Deployment/nginx   0%/3%     1         10        1          14m
pc-hpa   Deployment/nginx   188%/3%   1         10        1          14m
pc-hpa   Deployment/nginx   188%/3%   1         10        4          14m
pc-hpa   Deployment/nginx   188%/3%   1         10        8          15m
pc-hpa   Deployment/nginx   188%/3%   1         10        10         15m
pc-hpa   Deployment/nginx   2%/3%     1         10        10         15m
pc-hpa   Deployment/nginx   0%/3%     1         10        10         16m
```

Deployment:

```
# kubectl get deploy -w
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   1/1     1            1           21m
nginx   1/4     1            1           22m
nginx   1/4     1            1           22m
nginx   1/4     1            1           22m
nginx   1/4     4            1           22m
nginx   2/4     4            2           22m
nginx   3/4     4            3           22m
nginx   4/4     4            4           22m
nginx   4/8     4            4           23m
nginx   4/8     4            4           23m
nginx   4/8     4            4           23m
nginx   4/8     8            4           23m
nginx   5/8     8            5           23m
nginx   6/8     8            6           23m
nginx   7/8     8            7           23m
nginx   8/8     8            8           23m
nginx   8/10    8            8           23m
nginx   8/10    8            8           23m
nginx   8/10    8            8           23m
nginx   8/10    10           8           23m
nginx   9/10    10           9           23m
nginx   10/10   10           10          23m
```

Pods:

```
# kubectl get pod -w
NAME                     READY   STATUS              RESTARTS   AGE
nginx-587f44948f-669b5   1/1     Running             0          21m
nginx-587f44948f-dwcw8   0/1     Pending             0          0s
nginx-587f44948f-ktjrm   0/1     Pending             0          0s
nginx-587f44948f-mvz2d   0/1     Pending             0          0s
nginx-587f44948f-dwcw8   0/1     Pending             0          0s
nginx-587f44948f-ktjrm   0/1     Pending             0          0s
nginx-587f44948f-mvz2d   0/1     Pending             0          0s
nginx-587f44948f-dwcw8   0/1     ContainerCreating   0          0s
nginx-587f44948f-ktjrm   0/1     ContainerCreating   0          0s
nginx-587f44948f-mvz2d   0/1     ContainerCreating   0          0s
nginx-587f44948f-dwcw8   0/1     ContainerCreating   0          1s
nginx-587f44948f-mvz2d   0/1     ContainerCreating   0          1s
nginx-587f44948f-mvz2d   1/1     Running             0          2s
nginx-587f44948f-dwcw8   1/1     Running             0          2s
nginx-587f44948f-ktjrm   0/1     ContainerCreating   0          3s
nginx-587f44948f-ktjrm   1/1     Running             0          3s
nginx-587f44948f-kr49r   0/1     Pending             0          0s
nginx-587f44948f-vk24j   0/1     Pending             0          0s
nginx-587f44948f-bmkwv   0/1     Pending             0          0s
nginx-587f44948f-vfvwb   0/1     Pending             0          0s
nginx-587f44948f-kr49r   0/1     Pending             0          0s
nginx-587f44948f-vk24j   0/1     Pending             0          0s
nginx-587f44948f-bmkwv   0/1     Pending             0          0s
nginx-587f44948f-vfvwb   0/1     Pending             0          0s
nginx-587f44948f-vk24j   0/1     ContainerCreating   0          0s
nginx-587f44948f-kr49r   0/1     ContainerCreating   0          0s
nginx-587f44948f-vfvwb   0/1     ContainerCreating   0          0s
nginx-587f44948f-bmkwv   0/1     ContainerCreating   0          0s
nginx-587f44948f-vk24j   0/1     ContainerCreating   0          6s
nginx-587f44948f-vfvwb   0/1     ContainerCreating   0          6s
nginx-587f44948f-vk24j   1/1     Running             0          7s
nginx-587f44948f-vfvwb   1/1     Running             0          7s
nginx-587f44948f-bmkwv   0/1     ContainerCreating   0          11s
nginx-587f44948f-kr49r   0/1     ContainerCreating   0          12s
nginx-587f44948f-bmkwv   1/1     Running             0          12s
nginx-587f44948f-kr49r   1/1     Running             0          12s
nginx-587f44948f-4wxvp   0/1     Pending             0          0s
nginx-587f44948f-wq52m   0/1     Pending             0          0s
nginx-587f44948f-4wxvp   0/1     Pending             0          0s
nginx-587f44948f-wq52m   0/1     Pending             0          0s
nginx-587f44948f-4wxvp   0/1     ContainerCreating   0          0s
nginx-587f44948f-wq52m   0/1     ContainerCreating   0          0s
nginx-587f44948f-4wxvp   0/1     ContainerCreating   0          2s
nginx-587f44948f-wq52m   0/1     ContainerCreating   0          2s
nginx-587f44948f-4wxvp   1/1     Running             0          2s
nginx-587f44948f-wq52m   1/1     Running             0          3s
```