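1. ConfigMap

1.1 Introduction to ConfigMap

The ConfigMap feature was introduced in Kubernetes 1.2. Many applications read their configuration from configuration files, command-line arguments, or environment variables. The ConfigMap API provides a mechanism for injecting such configuration data into containers. A ConfigMap can hold individual properties as well as entire configuration files or JSON blobs.

1.2 Creating a ConfigMap

1.2.1 Creating from a directory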

[root@k8s-master01 dir]# ls
game.propertie ui.propertie
[root@k8s-master01 dir]# cat game.propertie
enemies=aliens
lives=3
enemies.cheat=true
enemies.cheat.level=noGoodRotten
secret.code.passphrase=UUDDLRLRBABAS
secret.code.allowed=true
secret.code.lives=30
[root@k8s-master01 dir]# cat ui.propertie
color.good=purple
color.bad=yellow
allow.textmode=true
how.nice.to.look=fairlyNice
# Create a ConfigMap from a directory
# When --from-file points at a directory, every file in it becomes one key-value pair in the ConfigMap: the key is the file name and the value is the file's content
[root@k8s-master01 dir]# kubectl create configmap game-config --from-file=./
configmap/game-config created
[root@k8s-master01 dir]# kubectl get configmap
NAME DATA AGE
game-config 2 14s
# cm is the short name for configmap
[root@k8s-master01 dir]# kubectl get cm
NAME DATA AGE
game-config 2 18s
[root@k8s-master01 dir]# kubectl get cm game-config -o yaml
apiVersion: v1
data:
  game.propertie: |
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30
  ui.propertie: |
    color.good=purple
    color.bad=yellow
    allow.textmode=true
    how.nice.to.look=fairlyNice
kind: ConfigMap
metadata:
  creationTimestamp: "2020-02-04T05:13:30Z"
  name: game-config
  namespace: default
  resourceVersion: "144337"
  selfLink: /api/v1/namespaces/default/configmaps/game-config
  uid: 0fc2df14-d5ee-4092-ba9e-cc733e8d5893
[root@k8s-master01 dir]# kubectl describe cm game-config
Name: game-config
Namespace: default
Labels: <none>
Annotations: <none>
Data
====
game.propertie:
----
enemies=aliens
lives=3
enemies.cheat=true
enemies.cheat.level=noGoodRotten
secret.code.passphrase=UUDDLRLRBABAS
secret.code.allowed=true
secret.code.lives=30
ui.propertie:
----
color.good=purple
color.bad=yellow
allow.textmode=true
how.nice.to.look=fairlyNice
Events: <none>

1.2.2 Creating from a file

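To create a ConfigMap from a single file, point --from-file at that file instead of a directory.

The --from-file flag can be given multiple times. Passing it twice, once for each of the two configuration files from the previous example, has the same effect as specifying the whole directory.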
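The key/value mapping that --from-file applies can be approximated locally. The following is a minimal sketch, not kubectl's implementation. The helper name `dir_to_configmap_data` is hypothetical, and it renders only the `data:` section: each regular file becomes a key named after the file, and the file's content becomes a YAML block-scalar value. It ignores details such as key-name validation and binary files.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of kubectl): approximates how
# `kubectl create configmap <name> --from-file=<dir>` maps files to keys.
# Each regular file in <dir> becomes one key named after the file;
# the file's content becomes the value, printed as a YAML block scalar.
dir_to_configmap_data() {
  local dir="$1" f
  echo "data:"
  for f in "$dir"/*; do
    [[ -f $f ]] || continue            # skip subdirectories (and an unmatched glob)
    echo "  $(basename "$f"): |"
    sed 's/^/    /' "$f"               # indent every line of the file content
  done
}

# Example (run inside the directory used above): dir_to_configmap_data ./
```

Run against the directory from 1.2.1, the output should resemble the `data:` section printed by `kubectl get cm game-config -o yaml`.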

[root@k8s-master01 dir]# kubectl create configmap game-config-2 --from-file=./game.propertie
configmap/game-config-2 created
[root@k8s-master01 dir]# kubectl get cm game-config-2
NAME DATA AGE
game-config-2 1 16s
[root@k8s-master01 dir]# kubectl get cm game-config-2 -o yaml
apiVersion: v1
data:
  game.propertie: |
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30
kind: ConfigMap
metadata:
  creationTimestamp: "2020-02-04T05:25:13Z"
  name: game-config-2
  namespace: default
  resourceVersion: "145449"
  selfLink: /api/v1/namespaces/default/configmaps/game-config-2
  uid: df209574-202d-4564-95ca-19532abd6b7b

1.2.3 Creating from literal values

Use the --from-literal flag to pass configuration data as key=value pairs directly on the command line. The flag can be repeated.

[root@k8s-master01 dir]# kubectl create configmap special-config --from-literal=special.how=very --from-literal=special.type=charm
configmap/special-config created
[root@k8s-master01 dir]# kubectl get cm special-config -o yaml
apiVersion: v1
data:
  special.how: very
  special.type: charm
kind: ConfigMap
metadata:
  creationTimestamp: "2020-02-04T05:27:43Z"
  name: special-config
  namespace: default
  resourceVersion: "145688"
  selfLink: /api/v1/namespaces/default/configmaps/special-config
  uid: 72b58e0e-f055-43dd-aa04-a7b2e9007227
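Each --from-literal argument is split into a key and a value. The hypothetical helper below assumes that kubectl splits at the first `=` only, so the value itself may contain `=`, and that it rejects an argument with an empty key. It is a sketch for predicting the resulting `data:` entries, not kubectl's code.

```bash
#!/usr/bin/env bash
# Hypothetical helper: mimics how a --from-literal=KEY=VALUE argument is split.
# Assumption: the split happens at the first '=' only (the value may contain '='),
# and an argument without '=' or with an empty key is rejected.
parse_literal() {
  local arg="$1"
  if [[ $arg != *=* || $arg == =* ]]; then
    echo "invalid literal source: $arg (expected key=value)" >&2
    return 1
  fi
  printf '%s: %s\n' "${arg%%=*}" "${arg#*=}"   # "key: value", as in the data section
}

# Example: parse_literal special.how=very   ->   special.how: very
```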

1.3 Using a ConfigMap in a Pod

1.3.1 Using a ConfigMap to define environment variables

# Create the ConfigMaps
[root@k8s-master01 usage]# cat config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: env-config
  namespace: default
data:
  log_level: INFO
[root@k8s-master01 usage]# kubectl apply -f config.yaml
configmap/special-config created
configmap/env-config created
# Inject the ConfigMap data into the Pod's environment
[root@k8s-master01 usage]# cat dapi-test.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod
spec:
  containers:
    - name: test-container
      image: hub.dianchou.com/library/myapp:v1
      command: ["/bin/sh","-c","env"]
      env:                           # option 1: import individual keys
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config   # which ConfigMap to read from
              key: special.how       # the value of key special.how is assigned to SPECIAL_LEVEL_KEY
        - name: SPECIAL_TYPE_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.type
      envFrom:                       # option 2: import every key of the ConfigMap
        - configMapRef:
            name: env-config
  restartPolicy: Never
[root@k8s-master01 usage]# kubectl create -f dapi-test.pod.yaml
pod/dapi-test-pod created
[root@k8s-master01 usage]# kubectl get pod
NAME READY STATUS RESTARTS AGE
dapi-test-pod 0/1 Completed 0 4s
# Check the environment variables
[root@k8s-master01 usage]# kubectl logs dapi-test-pod
MYAPP_SVC_PORT_80_TCP_ADDR=10.98.57.156
KUBERNETES_PORT=tcp://10.96.0.1:443
KUBERNETES_SERVICE_PORT=443
MYAPP_SVC_PORT_80_TCP_PORT=80
HOSTNAME=dapi-test-pod
SHLVL=1
MYAPP_SVC_PORT_80_TCP_PROTO=tcp
HOME=/root
SPECIAL_TYPE_KEY=charm
MYAPP_SVC_PORT_80_TCP=tcp://10.98.57.156:80
NGINX_VERSION=1.12.2
KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
KUBERNETES_PORT_443_TCP_PORT=443
KUBERNETES_PORT_443_TCP_PROTO=tcp
MYAPP_SVC_SERVICE_HOST=10.98.57.156
SPECIAL_LEVEL_KEY=very
log_level=INFO
KUBERNETES_SERVICE_PORT_HTTPS=443
KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443
PWD=/
KUBERNETES_SERVICE_HOST=10.96.0.1
MYAPP_SVC_SERVICE_PORT=80
MYAPP_SVC_PORT=tcp://10.98.57.156:80
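The two injection styles differ in how variables are named. `env` with `configMapKeyRef` copies one key into a variable that you name, which is how special.how became SPECIAL_LEVEL_KEY. `envFrom` with `configMapRef` imports every key under its own name, which is why the output contains a lowercase log_level. The hypothetical sketch below approximates the envFrom behaviour outside a cluster. It assumes the ConfigMap data has been written out as key=value lines, and it is only an illustration, not what the kubelet does internally.

```bash
#!/usr/bin/env bash
# Hypothetical helper: approximates envFrom/configMapRef by turning every
# key=value line of a file into an environment variable for one command.
# Blank lines and lines starting with '#' are ignored.
run_with_env_from() {
  local file="$1" line
  shift
  local -a pairs=()
  while IFS= read -r line || [[ -n $line ]]; do
    [[ -z $line || $line == \#* ]] && continue
    pairs+=("$line")                 # e.g. log_level=INFO
  done < "$file"
  env "${pairs[@]}" "$@"             # env(1) treats NAME=VALUE arguments as assignments
}

# Example: run_with_env_from env-config.env sh -c 'echo "$log_level"'
```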

1.3.2 Setting command-line arguments with a ConfigMap
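In the manifest below, `$(SPECIAL_LEVEL_KEY)` is not shell command substitution. Before the process starts, Kubernetes replaces `$(VAR_NAME)` references in `command` and `args` with the container's environment variables, and it leaves a reference to an undefined variable unchanged. The hypothetical bash function below sketches that substitution rule. It does not handle the `$$(VAR)` escape, and it only accepts C-identifier variable names.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the $(VAR_NAME) substitution Kubernetes applies to
# command/args. Defined variables are replaced; undefined references are kept
# verbatim. The $$(VAR) escape sequence is NOT handled here.
expand_refs() {
  local input="$1" output="" name ref
  local re='\$\(([A-Za-z_][A-Za-z0-9_]*)\)'
  while [[ $input =~ $re ]]; do
    ref="${BASH_REMATCH[0]}"
    name="${BASH_REMATCH[1]}"
    output+="${input%%"$ref"*}"      # text before the reference
    if [[ -v $name ]]; then
      output+="${!name}"             # substitute the variable's value
    else
      output+="$ref"                 # leave an unresolved reference as-is
    fi
    input="${input#*"$ref"}"         # continue after the reference
  done
  printf '%s\n' "$output$input"
}
```

If SPECIAL_LEVEL_KEY=very and SPECIAL_TYPE_KEY=charm, applying it to the command string of dapi-test-pod2 gives `echo very charm`. This matches the Pod's log output.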

[root@k8s-master01 usage]# cat dapi-test-pod-command.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm
---
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod2
spec:
  containers:
    - name: test-container-command
      image: hub.dianchou.com/library/myapp:v1
      command: ["/bin/sh","-c","echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)"]
      env:                           # option 1: import individual keys
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config   # which ConfigMap to read from
              key: special.how       # the value of key special.how is assigned to SPECIAL_LEVEL_KEY
        - name: SPECIAL_TYPE_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.type
  restartPolicy: Never
[root@k8s-master01 usage]# kubectl create -f dapi-test-pod-command.yaml
configmap/special-config created
pod/dapi-test-pod2 created
[root@k8s-master01 usage]# kubectl get cm
NAME DATA AGE
special-config 2 4s
[root@k8s-master01 usage]# kubectl get pod
NAME READY STATUS RESTARTS AGE
dapi-test-pod2 0/1 Completed 0 10s
[root@k8s-master01 usage]# kubectl logs dapi-test-pod2
very charm

1.3.3 Using a ConfigMap through a volume plugin

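There are several options for using a ConfigMap in a volume. The most basic one is to populate the volume with files: each key becomes a file name, and the key's value becomes that file's content.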
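The file layout produced by a configMap volume can be imitated locally. This is a minimal sketch, assuming the key=value pairs are passed on the command line; the helper `materialize_configmap` is hypothetical. It writes one file per key, with the value as content and no trailing newline. That missing newline is why `cat` in the session below prints each value directly in front of the next shell prompt. The real kubelet also writes the files into a hidden timestamped directory and exposes them through a `..data` symlink so that updates can be swapped in atomically. The sketch does not model that detail.

```bash
#!/usr/bin/env bash
# Hypothetical helper: imitates the files a configMap volume exposes.
# Each KEY=VALUE argument becomes the file <dir>/KEY containing VALUE
# (no trailing newline). The kubelet's ..data symlink layout is not modelled.
materialize_configmap() {
  local dir="$1" kv
  shift
  mkdir -p "$dir"
  for kv in "$@"; do
    printf '%s' "${kv#*=}" > "$dir/${kv%%=*}"
  done
}

# Example: materialize_configmap /tmp/config special.how=very special.type=charm
```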

[root@k8s-master01 usage]# cat dapi-test-pod-volume.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm
---
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod3
spec:
  containers:
    - name: test-container
      image: hub.dianchou.com/library/myapp:v1
      command: ["/bin/sh","-c","sleep 600s"]
      volumeMounts:
        - name: config-volume
          mountPath: /etc/config
  volumes:
    - name: config-volume
      configMap:
        name: special-config
  restartPolicy: Never
[root@k8s-master01 usage]# kubectl create -f dapi-test-pod-volume.yaml
configmap/special-config created
pod/dapi-test-pod3 created
[root@k8s-master01 usage]# kubectl get pod
NAME READY STATUS RESTARTS AGE
dapi-test-pod3 1/1 Running 0 6s
[root@k8s-master01 usage]# kubectl exec dapi-test-pod3 -it -- /bin/sh
/ # cd /etc/config
/etc/config # ls
special.how special.type
/etc/config # cat special.how
very/etc/config # cat special.type
charm/etc/config #

1.4 ConfigMap hot update

1) Create the ConfigMap and the Deployment

[root@k8s-master01 usage]# cat config-hot-update.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: log-config
  namespace: default
data:
  log_level: INFO
---
# extensions/v1beta1 Deployments were removed in Kubernetes 1.16; on newer
# clusters use apps/v1 and add spec.selector.matchLabels (run: my-nginx)
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 1
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
        - name: my-nginx
          image: hub.dianchou.com/library/myapp:v1
          ports:
            - containerPort: 80
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
      volumes:
        - name: config-volume
          configMap:
            name: log-config
[root@k8s-master01 usage]# kubectl create -f config-hot-update.yaml
configmap/log-config created
deployment.extensions/my-nginx created
# Check the log level
[root@k8s-master01 usage]# kubectl exec `kubectl get pods -l run=my-nginx -o=name|cut -d "/" -f2` -- cat /etc/config/log_level
INFO

2) Modify the ConfigMap

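Change the value of log_level to DEBUG, wait about 10 seconds, and then read the mounted file again. Only files in a ConfigMap volume are refreshed this way. Environment variables populated from a ConfigMap are read once, when the container starts, and a ConfigMap mounted with subPath does not receive updates.

[root@k8s-master01 usage]# kubectl edit configmap log-config
....
data:
  log_level: DEBUG
....
# Check again
[root@k8s-master01 usage]# kubectl exec `kubectl get pods -l run=my-nginx -o=name|cut -d "/" -f2` -- cat /etc/config/log_level
DEBUG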
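The refresh is asynchronous. Its delay depends on the kubelet's sync period and cache settings, so it can take longer than the roughly 10 seconds seen here. Scripts that depend on the new value should therefore poll rather than sleep for a fixed time. The following is a minimal sketch using a hypothetical helper, `wait_for_content`. It polls a local file once per second, so in the cluster you would run it inside the Pod or adapt the read command (for example to use `kubectl exec ... -- cat`).

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a file once per second until its content equals
# the expected value. Returns 0 on success, 1 if the timeout (seconds) expires.
wait_for_content() {
  local file="$1" expected="$2" timeout="${3:-60}" waited=0
  until [[ -f $file && $(< "$file") == "$expected" ]]; do
    if (( waited >= timeout )); then
      return 1
    fi
    sleep 1
    (( waited += 1 ))
  done
}

# Example inside the Pod: wait_for_content /etc/config/log_level DEBUG 120
```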

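3) Note: rolling-updating Pods after a ConfigMap update

Updating a ConfigMap does not currently trigger a rolling update of the Pods that use it. You can force a rolling update by modifying an annotation in the Pod template: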
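The annotation value only needs to change, so a timestamp works well. The sketch below builds the same patch body as the kubectl patch command that follows. It uses a hypothetical helper, `config_version_patch`, whose value defaults to the current date and time. On kubectl 1.15 and later, `kubectl rollout restart deployment my-nginx` has a similar effect: it updates a `kubectl.kubernetes.io/restartedAt` annotation in the Pod template.

```bash
#!/usr/bin/env bash
# Hypothetical helper: builds the strategic-merge patch that sets
# .spec.template.metadata.annotations["version/config"] to a new value.
# Defaults to a timestamp so every call produces a different value.
config_version_patch() {
  local version="${1:-$(date +%Y%m%d%H%M%S)}"
  printf '{"spec":{"template":{"metadata":{"annotations":{"version/config":"%s"}}}}}' "$version"
}

# Usage against a live cluster:
#   kubectl patch deployment my-nginx --patch "$(config_version_patch)"
```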

$ kubectl patch deployment my-nginx --patch '{"spec": {"template": {"metadata": {"annotations": {"version/config": "20190411"}}}}}'

# This adds version/config under .spec.template.metadata.annotations; change the value of version/config each time you want to trigger a rolling update.

2. Secret

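2.1 What a Secret is for

A Secret lets you configure sensitive data such as passwords, tokens, and keys without exposing that data in the image or in the Pod spec. A Secret can be consumed as a volume or as environment variables.

2.2 Secret types

2.2.1 Service Account

A Service Account secret is used to access the Kubernetes API. Kubernetes creates it automatically and mounts it into the Pod's /run/secrets/kubernetes.io/serviceaccount directory. Using kube-proxy as an example: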

[root@k8s-master01 secret]# kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-5c98db65d4-6vgp6 1/1 Running 2 2d1h
coredns-5c98db65d4-8zbqt 1/1 Running 2 2d1h
etcd-k8s-master01 1/1 Running 2 2d1h
kube-apiserver-k8s-master01 1/1 Running 2 2d1h
kube-controller-manager-k8s-master01 1/1 Running 2 2d1h
kube-flannel-ds-amd64-m769r 1/1 Running 1 2d
kube-flannel-ds-amd64-sjwph 1/1 Running 2 2d
kube-flannel-ds-amd64-z76v7 1/1 Running 1 2d
kube-proxy-4g57j 1/1 Running 1 2d
kube-proxy-qd4xm 1/1 Running 2 2d1h
kube-proxy-x66cd 1/1 Running 3 2d
kube-scheduler-k8s-master01 1/1 Running 2 2d1h
# The kube-proxy image has no /bin/bash, so the first attempt fails; /bin/sh works
[root@k8s-master01 secret]# kubectl exec kube-proxy-4g57j -n kube-system -it -- /bin/bash
OCI runtime exec failed: exec failed: container_linux.go:346: starting container process caused "exec: "/bin/bash": stat /bin/bash: no such file or directory": unknown
command terminated with exit code 126
[root@k8s-master01 secret]# kubectl exec kube-proxy-4g57j -n kube-system -it -- /bin/sh
# cd /run/secrets/kubernetes.io/serviceaccount
# ls
ca.crt namespace token
# cat ca.crt
-----BEGIN CERTIFICATE-----
MIICyDCCAbCgAwIBAgIBADANBgkqhkiG9w0BAQsFADAVMRMwEQYDVQQDEwprdWJl
cm5ldGVzMB4XDTIwMDIwMjA2MTc1N1oXDTMwMDEzMDA2MTc1N1owFTETMBEGA1UE
AxMKa3ViZXJuZXRlczCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBANes
AbseNkXcg1nS5EDXwoT7E/hMOA/rEQ6MH2HBC6VHgtnfJeI2Dj8VvqUGNr5dkF7T
vjql1QT45lCJXIduhj+QZuRcBMwFGJwOJ843FGtAgRzeAOTOHq8UtDsHMSsn8tSt
ZTQDlC1+YpQB5kLC3aQZrig2i2TTEv0N95Ee5OaT9I3F7NXdxwnRa3RB6rRtpvXK
HDvuT+bABgIUGyFMO6GqEN8RszJxX0NakCxj9s0cZS/UO1xP+NroFpwUK24uMRxo
HxBDmJhvu+h3Z1LG4I+X3O/oQzLHfEdKfRDJ/O715LEkk7601XKa3AArfC9/BYZm
5CzK/onTTSJ6DI2eqIUCAwEAAaMjMCEwDgYDVR0PAQH/BAQDAgKkMA8GA1UdEwEB
/wQFMAMBAf8wDQYJKoZIhvcNAQELBQADggEBAEVdisGp9GFpxYOkLwkBOk9KfahK
ZMJugoJIEvuSKEj97bRJBHNiVi4hrc7bWQeqVTqbW40hEBGcQzJyErJiYW2lI5hk
xmkWI+9GrlhpmSSFl/wILSmdBhocr7Y0E9LshLwORgsbm4SPqJeW4sc6iuWO0LCd
9aK46ThwE613rVnUoof3AKrPCah0EsVIdYDzaEjaSppG9esaUlB5x61V14y2Ao9/
YCm+xxsoPjgA0yGKGLXyupMB7nMXHh+JPYZfQACyNrUeh3DVkhk1RmoL+Ro5TGZD
hQWyhC77L6ONdNomjGDqTgNKhouq0GM5WFHHatCPJceqo0xB3maud7ttOmQ=
-----END CERTIFICATE-----
# cat namespace
kube-system#
# cat token
eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlLXByb3h5LXRva2VuLWpiNjJjIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6Imt1YmUtcHJveHkiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJhNDQ2Y2I2OC02YTk3LTQ2MTUtYjRkZS1hOTU1YmVkMzc2Y2YiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06a3ViZS1wcm94eSJ9.hPCw3tgAOQwEHsq_Fl1vWYpWnParBVjCKelXPz65kZP6WlYBm-Ggw3VUqffDp0vE90ezf4Vwn7sLAzXpzR9fiRAjHauSvON6CWU6gnIOq5YsDfu3azapq3wZDTYf_r2gO8y88lhA9jbQF11M1l_0VGYXu73ALs4fLb_-1-RAVJ8uveUmUEDqRrqTkukN5whgI7ozFQ901puNYQcp-60wDvdGCJaTkUIFtXLcVFY9qBY8l7MpLm-tSP-BL9Jn3bJQsfYCQAc23GAz5liA4olE8ga-4KpO0uVzX7lXBaJzV7qcVA2NQWsr9Iqf3F51iG6yOjGTMqXfhWsqw4dsXc6nSQ#
#
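The token file is a JSON Web Token (JWT). It consists of three base64url-encoded segments separated by dots, and the middle segment holds claims such as the service account's namespace and name. The hypothetical helper below decodes that payload locally for inspection. It does not verify the signature, so the result says nothing about whether the token is valid.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (2nd segment) of a JWT.
# JWT segments use base64url without padding, so map '-' -> '+' and
# '_' -> '/' and restore the '=' padding before calling base64 -d.
# The signature is NOT verified.
jwt_payload() {
  local seg
  seg=$(cut -d. -f2 <<< "$1" | tr '_-' '/+')
  case $(( ${#seg} % 4 )) in
    2) seg+="==" ;;
    3) seg+="=" ;;
  esac
  base64 -d <<< "$seg"
}

# Example from the master node:
#   jwt_payload "$(kubectl exec kube-proxy-4g57j -n kube-system -- cat /run/secrets/kubernetes.io/serviceaccount/token)"
```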

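2.2.2 Opaque Secret

A Secret whose values are base64-encoded, used to store passwords, keys, and similar data.

The data of an Opaque Secret is a map whose values must be base64-encoded.

1) Base64-encode the username and password (base64 is only an encoding, not encryption: anyone who can read the Secret can decode the values, as the base64 -d command below shows)

[root@k8s-master01 secret]# echo -n "admin" | base64
YWRtaW4=
[root@k8s-master01 secret]# echo -n "1f2d1e2e67df" | base64
MWYyZDFlMmU2N2Rm
# Decode with base64 -d
[root@k8s-master01 secret]# echo YWRtaW4= | base64 -d
admin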
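Encoding several values by hand gets repetitive. The hypothetical helper below produces the base64-encoded `data:` section for any number of key=value pairs. Alternatively, `kubectl create secret generic mysecret --from-literal=username=admin --from-literal=password=1f2d1e2e67df` performs the encoding itself, and a Secret manifest may use the `stringData` field to supply plain-text values.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turns plain KEY=VALUE pairs into the base64-encoded
# `data:` section of an Opaque Secret manifest.
secret_data() {
  local kv
  echo "data:"
  for kv in "$@"; do
    # printf '%s' avoids a trailing newline (same effect as `echo -n` above);
    # tr strips the line wrapping that some base64 implementations add.
    printf '  %s: %s\n' "${kv%%=*}" "$(printf '%s' "${kv#*=}" | base64 | tr -d '\n')"
  done
}

# Example: secret_data username=admin password=1f2d1e2e67df
```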

2) Create the Secret YAML file

[root@k8s-master01 secret]# cat secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  password: MWYyZDFlMmU2N2Rm
  username: YWRtaW4=
[root@k8s-master01 secret]# kubectl create -f secret.yaml
secret/mysecret created
[root@k8s-master01 secret]# kubectl get secret
NAME TYPE DATA AGE
default-token-q9x9d kubernetes.io/service-account-token 3 2d1h
mysecret Opaque 2 9s

Ways to use a Secret:

1) Mounting a Secret into a volume

[root@k8s-master01 secret]# cat secret-volume.yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    name: secret-test
  name: secret-test
spec:
  volumes:
    - name: secrets
      secret:
        secretName: mysecret
  containers:
    - image: hub.dianchou.com/library/myapp:v1
      name: db
      volumeMounts:
        - name: secrets
          mountPath: "/etc/secrets"
          readOnly: true
[root@k8s-master01 secret]# kubectl apply -f secret-volume.yaml
pod/secret-test created
[root@k8s-master01 secret]# kubectl get pod
NAME READY STATUS RESTARTS AGE
my-nginx-5cd876c757-j2fst 1/1 Running 0 70m
secret-test 1/1 Running 0 11s
# Enter the container and check; the files contain the decoded values
[root@k8s-master01 secret]# kubectl exec secret-test -it -- /bin/sh
/ # cd /etc/secrets/
/etc/secrets # ls
password username
/etc/secrets # cat password
1f2d1e2e67df/etc/secrets #
/etc/secrets # cat username
admin/etc/secrets #

2) Exporting a Secret into environment variables

[root@k8s-master01 secret]# cat secret-env.yaml
# extensions/v1beta1 Deployments were removed in Kubernetes 1.16; on newer
# clusters use apps/v1 and add spec.selector.matchLabels (app: pod-deployment)
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: pod-deployment
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: pod-deployment
    spec:
      containers:
        - name: pod-1
          image: hub.dianchou.com/library/myapp:v1
          ports:
            - containerPort: 80
          env:
            - name: TEST_USER
              valueFrom:
                secretKeyRef:
                  name: mysecret
                  key: username
            - name: TEST_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysecret
                  key: password
[root@k8s-master01 secret]# kubectl create -f secret-env.yaml
deployment.extensions/pod-deployment created
[root@k8s-master01 secret]# kubectl get pod
NAME READY STATUS RESTARTS AGE
pod-deployment-6d6d744fd6-jks2k 1/1 Running 0 6s
pod-deployment-6d6d744fd6-v6nk2 1/1 Running 0 6s
secret-test 1/1 Running 0 3h32m
[root@k8s-master01 secret]# kubectl exec pod-deployment-6d6d744fd6-jks2k -it -- /bin/sh
/ # echo $TEST_USER
admin
/ # echo $TEST_PASSWORD
1f2d1e2e67df

2.2.3 kubernetes.io/dockerconfigjson

Used to store authentication information for a private Docker registry.

Prerequisites for the experiment:

# Create a private image on Harbor, and make sure both nodes are logged out of Harbor. If a node has logged in before:
# 1) rm -f /etc/docker/key.json
# 2) docker logout hub.dianchou.com
# 3) delete the same image from the node
[root@k8s-node01 docker]# docker pull hub.dianchou.com/apptest/myapp-nginx:v1
Error response from daemon: pull access denied for hub.dianchou.com/apptest/myapp-nginx, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

1) Create a Secret for Docker registry authentication with kubectl

$ kubectl create secret docker-registry myregistrykey --docker-server=DOCKER_REGISTRY_SERVER --docker-username=DOCKER_USER --docker-password=DOCKER_PASSWORD --docker-email=DOCKER_EMAIL
[root@k8s-master01 secret]# kubectl create secret docker-registry myregistrykey --docker-server=hub.dianchou.com --docker-username=admin --docker-password=Harbor12345 --docker-email=352972405@qq.com
secret/myregistrykey created
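A Secret created this way has type kubernetes.io/dockerconfigjson. It stores a Docker config JSON document under the key .dockerconfigjson, and that document's auth field is the base64 encoding of username:password. The hypothetical helper below prints a document of roughly that shape so you can see what is stored. The exact set of fields kubectl writes may differ between versions, and the helper assumes that no value needs JSON escaping. To inspect the real Secret, you can run `kubectl get secret myregistrykey -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints a .dockerconfigjson document similar to the one
# `kubectl create secret docker-registry` stores (before it is base64-encoded
# into the Secret). Assumption: values contain no characters needing JSON escaping.
docker_config_json() {
  local server="$1" user="$2" pass="$3" email="$4" auth
  auth=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')   # base64("user:pass")
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$email" "$auth"
}

# Example: docker_config_json hub.dianchou.com admin Harbor12345 user@example.com
```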

2) When creating the Pod, reference the newly created myregistrykey through imagePullSecrets

[root@k8s-master01 secret]# cat docker-config.yaml
apiVersion: v1
kind: Pod
metadata:
  name: foo
spec:
  containers:
    - name: foo
      image: hub.dianchou.com/apptest/myapp-nginx:v1
  imagePullSecrets:
    - name: myregistrykey
[root@k8s-master01 secret]# kubectl create -f docker-config.yaml
pod/foo created
[root@k8s-master01 secret]# kubectl get pod
NAME READY STATUS RESTARTS AGE
foo 1/1 Running 0 4s   # the image is pulled successfully
[root@k8s-master01 secret]# kubectl describe pod foo
...
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal Scheduled 22s default-scheduler Successfully assigned default/foo to k8s-node01
Normal Pulling 21s kubelet, k8s-node01 Pulling image "hub.dianchou.com/apptest/myapp-nginx:v1"
Normal Pulled 21s kubelet, k8s-node01 Successfully pulled image "hub.dianchou.com/apptest/myapp-nginx:v1"
Normal Created 21s kubelet, k8s-node01 Created container foo
Normal Started 21s kubelet, k8s-node01 Started container foo

3. Volume

3.1、相关说明

容器磁盘上的文件的生命周期是短暂的,这就使得在容器中运行重要应用时会出现一些问题。首先,当容器崩溃时,kubelet 会重启它,但是容器中的文件将丢失——容器以干净的状态(镜像最初的状态)重新启动。其次,在Pod中同时运行多个容器时,这些容器之间通常需要共享文件。Kubernetes 中的Volume就很好的解决了这些问题

Kubernetes 中的卷有明确的寿命 —— 与封装它的 Pod 相同。所f以,卷的生命比 Pod 中的所有容器都长,当这个容器重启时数据仍然得以保存。当然,当 Pod 不再存在时,卷也将不复存在。也许更重要的是,Kubernetes支持多种类型的卷,Pod 可以同时使用任意数量的卷

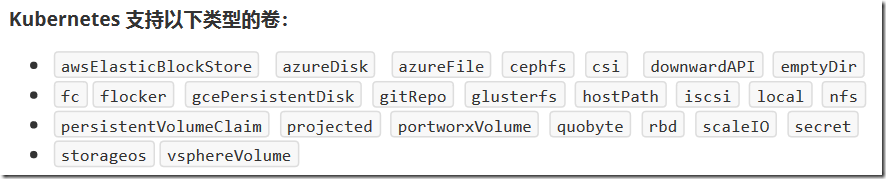

卷的类型:

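3.2 emptyDir

An emptyDir volume is created when a Pod is assigned to a node, and it exists as long as that Pod runs on that node. As the name says, it is initially empty. All containers in the Pod can read and write the same files in the emptyDir volume, although each container can mount it at the same path or at a different one. When a Pod is removed from a node for any reason, the data in its emptyDir is deleted permanently.

Uses of emptyDir include:

- Scratch space, for example for a disk-based merge sort
- Checkpointing a long computation for recovery from crashes
- Holding files that a content-manager container fetches while a web-server container serves the data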
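A container crash does not remove the Pod from its node, so data in an emptyDir survives container restarts. That is what makes the checkpointing use case work. The sketch below is a hypothetical illustration of the pattern, not Kubernetes code. A loop records its progress in a checkpoint file inside a directory (in a Pod, the emptyDir mount such as /cache) and resumes from that file after a restart. The `stop_after` argument exists only to simulate a crash.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: a long-running job that checkpoints its progress into
# a directory (in a Pod this would be the emptyDir mount, e.g. /cache).
# After a restart it resumes from the last checkpoint instead of step 0.
# stop_after > 0 simulates a crash after that many steps in this run.
run_with_checkpoint() {
  local dir="$1" total="$2" stop_after="${3:-0}"
  local ckpt="$dir/checkpoint" step=0 done_now=0
  [[ -f $ckpt ]] && step=$(< "$ckpt")    # resume if a checkpoint exists
  while (( step < total )); do
    (( step += 1 ))                      # ... one unit of real work here ...
    printf '%s' "$step" > "$ckpt"        # record progress after each step
    (( done_now += 1 ))
    if (( stop_after > 0 && done_now >= stop_after )); then
      return 1                           # simulated crash
    fi
  done
  echo "$step"
}
```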

# One Pod with two containers that mount the same volume at different paths: one container writes to it, and the other can see what was written
[root@k8s-master01 volume]# cat emptyDir-test.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
    - image: hub.dianchou.com/library/myapp:v1
      name: test-container-1
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
    - image: busybox
      name: test-container-2
      imagePullPolicy: IfNotPresent
      command: ["/bin/sh","-c","sleep 6000s"]
      volumeMounts:
        - mountPath: /test
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir: {}
[root@k8s-master01 volume]# kubectl create -f emptyDir-test.yaml
pod/test-pd created
[root@k8s-master01 volume]# kubectl get pod
NAME READY STATUS RESTARTS AGE
test-pd 2/2 Running 0 7s
[root@k8s-master01 volume]# kubectl describe pod test-pd   # check the status
# Enter the first container and write data
[root@k8s-master01 volume]# kubectl exec test-pd -c test-container-1 -it -- /bin/sh
/ # cd /cache/
/cache # ls
/cache # echo "test-container-1" >> index.html
/cache #
# Enter the second container and read the data that was written
[root@k8s-master01 ~]# kubectl exec test-pd -c test-container-2 -it -- /bin/sh
/ # cd /test
/test # ls
index.html
/test # cat index.html
test-container-1
/test # echo "test-container-2" >> index.html
/test #

3.3 hostPath

hostPath卷将主机节点的文件系统中的文件或目录挂载到集群中

hostPath的用途如下:

- 运行需要访问 Docker 内部的容器;使用/var/lib/docker的hostPath

- 在容器中运行 cAdvisor;使用/dev/cgroups的hostPath

- 允许 pod 指定给定的 hostPath 是否应该在 pod 运行之前存在,是否应该创建,以及它应该以什么形式存在

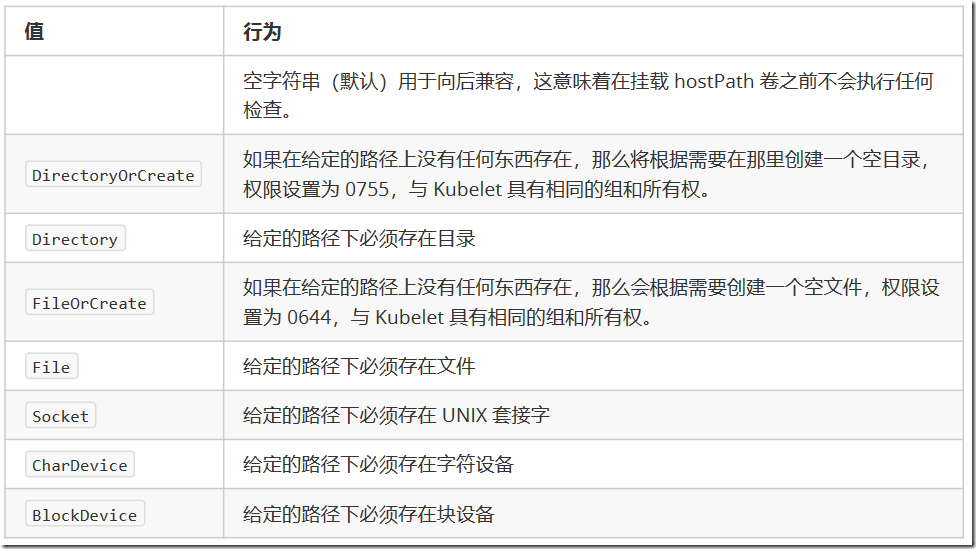

除了所需的path属性之外,用户还可以为hostPath卷指定type:
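The kubelet checks the type values shown in the table before it mounts the volume. The hypothetical function below approximates those checks with ordinary shell tests to make the semantics easier to see. It is a simplification: for example, it does not reproduce the ownership that the kubelet applies to the paths it creates.

```bash
#!/usr/bin/env bash
# Hypothetical approximation of the hostPath `type` checks.
# Returns 0 if the path satisfies the type (creating it for *OrCreate),
# non-zero otherwise.
check_hostpath() {
  local path="$1" type="${2:-}"
  case "$type" in
    "")                return 0 ;;                     # default: no checks
    DirectoryOrCreate) if [[ -e $path ]]; then [[ -d $path ]]; else mkdir -p -m 0755 "$path"; fi ;;
    Directory)         [[ -d $path ]] ;;
    FileOrCreate)      if [[ -e $path ]]; then [[ -f $path ]]; else touch "$path" && chmod 0644 "$path"; fi ;;
    File)              [[ -f $path ]] ;;
    Socket)            [[ -S $path ]] ;;
    CharDevice)        [[ -c $path ]] ;;
    BlockDevice)       [[ -b $path ]] ;;
    *)                 echo "unknown hostPath type: $type" >&2; return 2 ;;
  esac
}

# Example matching the manifest below: check_hostpath /data Directory
```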

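Be careful when using this volume type, because:

- Pods with identical configuration (such as those created from a podTemplate) may behave differently on different nodes, because the files on each node differ
- When Kubernetes adds resource-aware scheduling, as planned, it will not be able to account for the resources used by a hostPath
- Files or directories created on the underlying host are writable only by root. You either need to run your process as root in a privileged container or modify the file permissions on the host so that you can write to the hostPath volume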

# Create the directory on both nodes
[root@k8s-node01 ~]# mkdir /data
[root@k8s-node02 ~]# mkdir /data
# Write the YAML file
[root@k8s-master01 volume]# cat hostPath-test.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
    - image: hub.dianchou.com/library/myapp:v2
      name: test-container
      volumeMounts:
        - mountPath: /test-pd
          name: test-volume
  volumes:
    - name: test-volume
      hostPath:
        # directory location on host
        path: /data
        # this field is optional
        type: Directory
[root@k8s-master01 volume]# kubectl create -f hostPath-test.yaml
pod/test-pd created
# Check which node the Pod was scheduled to
[root@k8s-master01 volume]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
test-pd 1/1 Running 0 99s 10.244.1.160 k8s-node01 <none> <none>
# Write data in the Pod and check it in the node's directory
[root@k8s-master01 volume]# kubectl exec test-pd -it -- /bin/sh
/ # cd /test-pd/
/test-pd # echo "test" >> index.html
/test-pd #
# The file appears on node01; it does not exist on node02
[root@k8s-node01 ~]# cd /data/
[root@k8s-node01 data]# ls
index.html
[root@k8s-node01 data]# cat index.html
test

4. PV && PVC

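4.1 Concepts

1) PersistentVolume (PV)

A PV is a piece of storage in the cluster that an administrator has provisioned. It is a cluster resource, just as a node is a cluster resource. PVs are volume plugins like Volumes, but they have a lifecycle independent of any individual Pod that uses the PV. This API object captures the details of the storage implementation, whether that is NFS, iSCSI, or a cloud-provider-specific storage system.

2) PersistentVolumeClaim (PVC)

A PVC is a user's request for storage. It is similar to a Pod: Pods consume node resources, and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory); claims can request a specific size and access mode (for example, they can be mounted read/write once or read-only many times).

3) Static PVs

The cluster administrator creates a number of PVs. They carry the details of the real storage that is available to cluster users. They exist in the Kubernetes API and are available for consumption.

4) Dynamic PVs

When none of the static PVs created by the administrator matches a user's PersistentVolumeClaim, the cluster may try to provision a volume dynamically for that PVC. This provisioning is based on StorageClasses: the PVC must request a storage class, and the administrator must have created and configured that class for dynamic provisioning to happen. A claim that requests the class "" effectively disables dynamic provisioning for itself.

To enable dynamic storage provisioning based on storage class, the cluster administrator needs to enable the DefaultStorageClass admission controller on the API server. This can be done, for example, by making sure DefaultStorageClass is in the comma-separated, ordered list of values of the API server's --admission-control flag (replaced by --enable-admission-plugins in newer Kubernetes versions).

5) Binding

A control loop on the master watches for new PVCs, finds a matching PV (if possible), and binds them together. If a PV was provisioned dynamically for a new PVC, the loop always binds that PV to that PVC. Otherwise, users always get at least what they asked for, but the volume may be larger than requested. Once bound, a PersistentVolumeClaim binding is exclusive, regardless of how it was made: PVC-to-PV binding is a one-to-one mapping.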
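The transcript in 4.2 shows this "at least what was asked for" rule in action: every claim requested 1Gi, yet the claims were bound to 5Gi, 10Gi, and 50Gi volumes. The hypothetical helper `pick_pv` below is a simplified model of the selection. It assumes the binder picks the smallest Available PV whose storage class and access modes match and whose capacity covers the request. The real controller also considers label selectors, volume mode, node affinity, and pre-bound claims. To keep the sketch short, capacities are plain integers in Gi.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified model of PV selection for one PVC.
# stdin: one PV per line: "<name> <capacityGi> <modes,comma,separated> <storageClass>"
# args:  <requestGi> <accessMode> <storageClass>
# Prints the smallest matching PV; returns non-zero if none matches.
pick_pv() {
  local want_gi="$1" want_mode="$2" want_class="$3"
  local name cap modes class best="" best_cap=""
  while read -r name cap modes class; do
    [[ -z $name ]] && continue
    [[ $class == "$want_class" ]] || continue
    [[ ",$modes," == *",$want_mode,"* ]] || continue
    (( cap >= want_gi )) || continue
    if [[ -z $best ]] || (( cap < best_cap )); then
      best=$name best_cap=$cap
    fi
  done
  [[ -n $best ]] && echo "$best"
}
```

Given the four PVs from 4.2 and the claims created in order (www-web-0, then www-web-1, then www-web-2), the model picks nfspv3, then nfspv1, then nfspv4. This matches the bindings in the transcript.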

6)持久化卷声明的保护

PVC 保护的目的是确保由 pod 正在使用的 PVC 不会从系统中移除,因为如果被移除的话可能会导致数据丢失

当启用PVC 保护 alpha 功能时,如果用户删除了一个 pod 正在使用的 PVC,则该 PVC 不会被立即删除。PVC 的删除将被推迟,直到 PVC 不再被任何 pod 使用

7)持久化卷类型

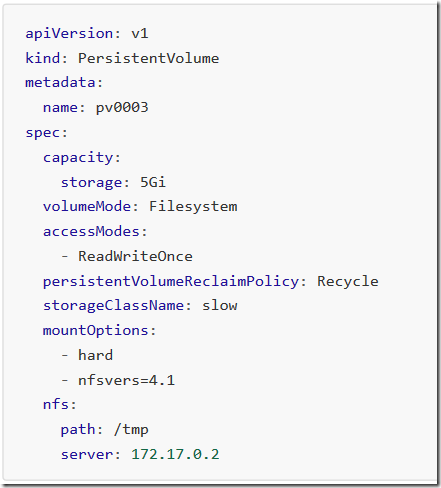

8)持久卷演示代码

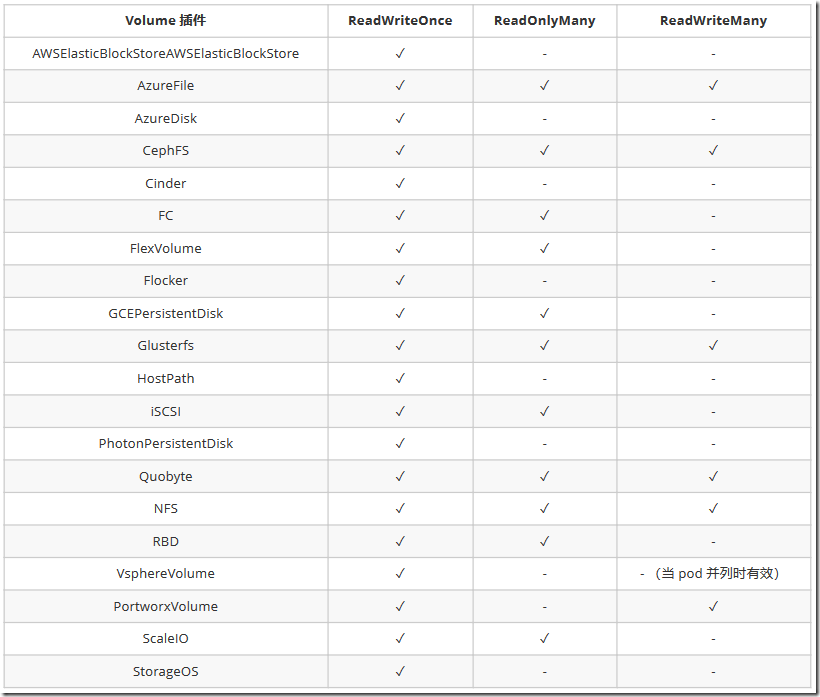

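9) PV access modes

A PersistentVolume can be mounted on a host in any way the resource provider supports. As shown in the table below, providers have different capabilities, and each PV's access modes are set to the specific modes that particular volume supports. For example, NFS can support multiple read/write clients, but a specific NFS PV might be exported on the server as read-only. Each PV has its own set of access modes describing its specific capabilities.

- ReadWriteOnce: the volume can be mounted read-write by a single node
- ReadOnlyMany: the volume can be mounted read-only by many nodes
- ReadWriteMany: the volume can be mounted read-write by many nodes

On the command line, the access modes are abbreviated as:

- RWO - ReadWriteOnce
- ROX - ReadOnlyMany
- RWX - ReadWriteMany

10) Reclaim policies

- Retain: manual reclamation
- Recycle: basic scrub (rm -rf /thevolume/*)
- Delete: the associated storage asset (such as an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume) is deleted

Currently, only NFS and HostPath support the Recycle policy; AWS EBS, GCE PD, Azure Disk, and Cinder volumes support the Delete policy. (Recycle has since been deprecated in favor of dynamic provisioning.)

11) Volume phases

A volume can be in one of the following phases:

- Available: a free resource that is not yet bound to any claim
- Bound: the volume is bound to a claim
- Released: the claim has been deleted, but the cluster has not yet reclaimed the resource
- Failed: automatic reclamation of the volume failed

The command line shows the name of the PVC bound to each PV.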

4.2 Persistence demo: NFS

1) Install the NFS server (tested here on the Harbor server)

# Configuration on the NFS server
[root@harbor ~]# yum install -y nfs-utils rpcbind
[root@harbor ~]# mkdir /nfsdata0{1..4}
[root@harbor ~]# chmod 777 /nfsdata0{1..4}   # with the wrong permissions, nginx returns 403
[root@harbor ~]# chown nfsnobody /nfsdata0{1..4}
[root@harbor ~]# ll / |grep nfs*
drwxrwxrwx 2 nfsnobody root 6 Feb 5 11:28 nfsdata01
drwxrwxrwx 2 nfsnobody root 6 Feb 5 11:28 nfsdata02
drwxrwxrwx 2 nfsnobody root 6 Feb 5 11:28 nfsdata03
drwxrwxrwx 2 nfsnobody root 6 Feb 5 11:28 nfsdata04
[root@harbor ~]# vim /etc/exports
/nfsdata01 *(rw,no_root_squash,no_all_squash,sync)
/nfsdata02 *(rw,no_root_squash,no_all_squash,sync)
/nfsdata03 *(rw,no_root_squash,no_all_squash,sync)
/nfsdata04 *(rw,no_root_squash,no_all_squash,sync)
[root@harbor ~]# systemctl restart rpcbind
[root@harbor ~]# systemctl restart nfs
# Client configuration, shown for node01; configure every node the same way
[root@k8s-node01 ~]# yum install rpcbind nfs-utils -y
# Test mounting from the client
[root@k8s-node01 ~]# showmount -e 10.0.0.12
Export list for 10.0.0.12:
/nfsdata04 *
/nfsdata03 *
/nfsdata02 *
/nfsdata01 *
[root@k8s-node01 ~]# mkdir /test
[root@k8s-node01 ~]# mount -t nfs 10.0.0.12:/nfsdata01 /test
[root@k8s-node01 test]# df -h
...
10.0.0.12:/nfsdata01 48G 5.6G 43G 12% /test
# Unmount
[root@k8s-node01 ~]# umount /test

2) Deploy the PVs

[root@k8s-master01 pv]# cat pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv1
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata01
    server: 10.0.0.12
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv2
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata02
    server: 10.0.0.12
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv3
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata03
    server: 10.0.0.12
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv4
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata04
    server: 10.0.0.12
[root@k8s-master01 pv]# kubectl create -f pv.yaml
persistentvolume/nfspv1 created
persistentvolume/nfspv2 created
persistentvolume/nfspv3 created
persistentvolume/nfspv4 created
[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Available nfs 5s
nfspv2 5Gi RWX Retain Available nfs 5s
nfspv3 5Gi RWO Retain Available nfs 5s
nfspv4 50Gi RWO Retain Available nfs 5s

3) Create a Service and use PVCs

[root@k8s-master01 pv]# cat statefulset-pod.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None   # headless service
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: hub.dianchou.com/library/myapp:v1
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: "nfs"
        resources:
          requests:
            storage: 1Gi
[root@k8s-master01 pv]# kubectl create -f statefulset-pod.yaml
service/nginx created
statefulset.apps/web created
[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Bound default/www-web-1 nfs 92s
nfspv2 5Gi RWX Retain Available nfs 92s
nfspv3 5Gi RWO Retain Bound default/www-web-0 nfs 92s
nfspv4 50Gi RWO Retain Bound default/www-web-2 nfs 92s
[root@k8s-master01 pv]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
www-web-0 Bound nfspv3 5Gi RWO nfs 13s
www-web-1 Bound nfspv1 10Gi RWO nfs 9s
www-web-2 Bound nfspv4 50Gi RWO nfs 6s
[root@k8s-master01 pv]# kubectl get pod
NAME READY STATUS RESTARTS AGE
web-0 1/1 Running 0 19s
web-1 1/1 Running 0 15s
web-2 1/1 Running 0 12s

4) Test: write data into the NFS directory and access it through a Pod

# On the NFS server
[root@harbor nfsdata01]# echo "nfsdata01" >> index.html
[root@harbor nfsdata01]# chmod 777 index.html
# On the master node, the page is served correctly
[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Bound default/www-web-1 nfs 22m
nfspv2 5Gi RWX Retain Available nfs 22m
nfspv3 5Gi RWO Retain Bound default/www-web-0 nfs 22m
nfspv4 50Gi RWO Retain Bound default/www-web-2 nfs 22m
[root@k8s-master01 pv]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
www-web-0 Bound nfspv3 5Gi RWO nfs 20m
www-web-1 Bound nfspv1 10Gi RWO nfs 20m
www-web-2 Bound nfspv4 50Gi RWO nfs 20m
[root@k8s-master01 pv]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
web-0 1/1 Running 0 21m 10.244.1.164 k8s-node01 <none> <none>
web-1 1/1 Running 0 20m 10.244.2.155 k8s-node02 <none> <none>
web-2 1/1 Running 0 20m 10.244.1.165 k8s-node01 <none> <none>
[root@k8s-master01 pv]# curl 10.244.2.155
nfsdata01
# Delete the Pod: the data is not lost
[root@k8s-master01 pv]# kubectl delete pod web-1
pod "web-1" deleted
[root@k8s-master01 pv]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
web-0 1/1 Running 0 22m 10.244.1.164 k8s-node01 <none> <none>
web-1 1/1 Running 0 3s 10.244.2.156 k8s-node02 <none> <none>
web-2 1/1 Running 0 22m 10.244.1.165 k8s-node01 <none> <none>
[root@k8s-master01 pv]# curl 10.244.2.156
nfsdata01

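4.3 Notes on StatefulSet

1) Pod names (network identities) follow the pattern $(statefulset name)-$(ordinal), for example web-0, web-1, and web-2 in the example above.

2) A StatefulSet creates a DNS name for each Pod replica in the format $(podname).$(headless service name). Services therefore communicate through Pod DNS names rather than Pod IPs: when the node hosting a Pod fails, the Pod is rescheduled onto another node and its IP changes, but its DNS name stays the same.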

[root@k8s-master01 pv]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
web-0 1/1 Running 0 33m 10.244.1.164 k8s-node01 <none> <none>
web-1 1/1 Running 0 11m 10.244.2.156 k8s-node02 <none> <none>
web-2 1/1 Running 0 33m 10.244.1.165 k8s-node01 <none> <none>
[root@k8s-master01 pv]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d21h
nginx ClusterIP None <none> 80/TCP 34m
# Enter web-0
[root@k8s-master01 pv]# kubectl exec web-0 -it -- /bin/sh
/ # ping web-1.nginx
PING web-1.nginx (10.244.2.156): 56 data bytes
64 bytes from 10.244.2.156: seq=0 ttl=62 time=1.727 ms
64 bytes from 10.244.2.156: seq=1 ttl=62 time=1.250 ms
# After web-1 is deleted, the same name resolves to the new Pod IP
[root@k8s-master01 pv]# kubectl delete pod web-1
pod "web-1" deleted
[root@k8s-master01 pv]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
web-0 1/1 Running 0 36m 10.244.1.164 k8s-node01 <none> <none>
web-1 1/1 Running 0 13s 10.244.2.157 k8s-node02 <none> <none>
web-2 1/1 Running 0 35m 10.244.1.165 k8s-node01 <none> <none>
/ # ping web-1.nginx
PING web-1.nginx (10.244.2.157): 56 data bytes
64 bytes from 10.244.2.157: seq=0 ttl=62 time=0.580 ms
64 bytes from 10.244.2.157: seq=1 ttl=62 time=1.039 ms

3) A StatefulSet uses a headless Service to control its Pods' domain. The FQDN of that domain is $(servicename).$(namespace).svc.cluster.local, where cluster.local is the cluster domain.

[root@k8s-master01 pv]# kubectl get pod -n kube-system -o wide|grep coredns
coredns-5c98db65d4-6vgp6 1/1 Running 3 2d21h 10.244.0.8 k8s-master01 <none> <none>
coredns-5c98db65d4-8zbqt 1/1 Running 3 2d21h 10.244.0.9 k8s-master01 <none> <none>
[root@k8s-master01 pv]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d21h
nginx ClusterIP None <none> 80/TCP 41m
[root@k8s-master01 pv]# dig -t A nginx.default.svc.cluster.local @10.244.0.8
; <<>> DiG 9.11.4-P2-RedHat-9.11.4-9.P2.el7 <<>> -t A nginx.default.svc.cluster.local @10.244.0.8
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 46992
;; flags: qr aa rd; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx.default.svc.cluster.local. IN A
;; ANSWER SECTION:
nginx.default.svc.cluster.local. 30 IN A 10.244.2.157
nginx.default.svc.cluster.local. 30 IN A 10.244.1.164
nginx.default.svc.cluster.local. 30 IN A 10.244.1.165
;; Query time: 1 msec
;; SERVER: 10.244.0.8#53(10.244.0.8)
;; WHEN: Wed Feb 05 12:16:33 CST 2020
;; MSG SIZE rcvd: 201

4) Based on volumeClaimTemplates, a PVC is created for each Pod and named with the pattern $(volumeClaimTemplates.name)-$(pod name). In the example above, the claim template is named www and the Pods are named web-[0-2], so the PVCs created are www-web-0, www-web-1, and www-web-2.
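The naming rules in 1) through 4) can be combined into one small hypothetical helper. For each ordinal, it lists the Pod name, the Pod's fully qualified DNS name, and the PVC name. It assumes the default cluster domain, cluster.local.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the stable identities of a StatefulSet's Pods.
# Output per line: <pod name> <pod FQDN> <PVC name>
# Assumes the cluster domain is cluster.local.
statefulset_identities() {
  local sts="$1" svc="$2" ns="$3" replicas="$4" claim="$5" i pod
  for (( i = 0; i < replicas; i++ )); do
    pod="$sts-$i"
    printf '%s %s.%s.%s.svc.cluster.local %s-%s\n' "$pod" "$pod" "$svc" "$ns" "$claim" "$pod"
  done
}

# For the example above: statefulset_identities web nginx default 3 www
```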

5) Deleting a Pod does not delete its PVC. Deleting the PVC manually releases the PV; with the Retain policy, the PV then shows Released rather than Available (see 7) below).

6) StatefulSet startup and shutdown order

- Ordered deployment: when a StatefulSet with multiple Pod replicas is deployed, the Pods are created one by one in order (from 0 to N-1), and every earlier Pod must be Running and Ready before the next one starts.
- Ordered deletion: when Pods are removed (scaled down), they are terminated in order from N-1 to 0.
- Ordered scaling: when Pods are scaled up, as with deployment, every Pod before the new one must be Running and Ready.

# Ordered creation
[root@k8s-master01 pv]# kubectl get pod
No resources found.
[root@k8s-master01 pv]# kubectl create -f statefulset-pod.yaml
service/nginx created
statefulset.apps/web created
[root@k8s-master01 pv]# kubectl get pod -w
web-0 0/1 Pending 0 0s
web-0 0/1 Pending 0 0s
web-0 0/1 ContainerCreating 0 0s
web-0 1/1 Running 0 2s
web-1 0/1 Pending 0 0s
web-1 0/1 Pending 0 0s
web-1 0/1 ContainerCreating 0 0s
web-1 1/1 Running 0 1s
web-2 0/1 Pending 0 0s
web-2 0/1 Pending 0 0s
web-2 0/1 ContainerCreating 0 0s
web-2 1/1 Running 0 2s
# Ordered deletion
[root@k8s-master01 pv]# kubectl delete -f statefulset-pod.yaml
service "nginx" deleted
statefulset.apps "web" deleted
# The order is not visible here: deleting the StatefulSet object itself gives no ordering guarantee.
# For ordered termination, scale it down first (kubectl scale statefulset web --replicas=0).
[root@k8s-master01 pv]# kubectl get pod -w
NAME READY STATUS RESTARTS AGE
web-0 1/1 Running 0 56s
web-1 1/1 Running 0 54s
web-2 1/1 Running 0 53s
web-2 1/1 Terminating 0 64s
web-1 1/1 Terminating 0 65s
web-0 1/1 Terminating 0 67s
web-0 0/1 Terminating 0 67s
web-2 0/1 Terminating 0 64s
web-1 0/1 Terminating 0 65s
web-2 0/1 Terminating 0 65s
web-2 0/1 Terminating 0 65s
web-0 0/1 Terminating 0 68s
web-0 0/1 Terminating 0 68s
web-1 0/1 Terminating 0 66s
web-1 0/1 Terminating 0 66s

7) After the Pods and PVCs have been deleted, the PVs still show Released instead of Available. How can they be reclaimed manually?

[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Bound default/www-web-1 nfs 66m
nfspv2 5Gi RWX Retain Available nfs 66m
nfspv3 5Gi RWO Retain Bound default/www-web-0 nfs 66m
nfspv4 50Gi RWO Retain Bound default/www-web-2 nfs 66m
[root@k8s-master01 pv]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
www-web-0 Bound nfspv3 5Gi RWO nfs 2m49s
www-web-1 Bound nfspv1 10Gi RWO nfs 2m47s
www-web-2 Bound nfspv4 50Gi RWO nfs 2m43s
[root@k8s-master01 pv]# kubectl get pod
NAME READY STATUS RESTARTS AGE
web-0 1/1 Running 0 2m57s
web-1 1/1 Running 0 2m55s
web-2 1/1 Running 0 2m51s
[root@k8s-master01 pv]# kubectl delete -f statefulset-pod.yaml
service "nginx" deleted
statefulset.apps "web" deleted
[root@k8s-master01 pv]# kubectl get pod
No resources found.
# The PVCs are not deleted automatically at this point
[root@k8s-master01 pv]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
www-web-0 Bound nfspv3 5Gi RWO nfs 3m29s
www-web-1 Bound nfspv1 10Gi RWO nfs 3m27s
www-web-2 Bound nfspv4 50Gi RWO nfs 3m23s
[root@k8s-master01 pv]# kubectl delete pvc --all
persistentvolumeclaim "www-web-0" deleted
persistentvolumeclaim "www-web-1" deleted
persistentvolumeclaim "www-web-2" deleted
[root@k8s-master01 pv]# kubectl get pvc
No resources found.
[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Released default/www-web-1 nfs 67m
nfspv2 5Gi RWX Retain Available nfs 67m
nfspv3 5Gi RWO Retain Released default/www-web-0 nfs 67m
nfspv4 50Gi RWO Retain Released default/www-web-2 nfs 67m
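Instead of opening each PV in an editor, as done next, you can remove the claimRef non-interactively with a JSON patch. The sketch below assumes a hypothetical helper, `release_pvs`, plus a KUBECTL variable that can be set to `echo` for a dry run. With the Retain policy the old data is still on the NFS export, so a new claim bound to the PV will see that data unless you clean the directory first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make Released PVs Available again by removing
# spec.claimRef with a JSON patch. Set KUBECTL=echo to only print the commands.
# Caution: with the Retain policy the old data stays on the backing storage.
release_pvs() {
  local kubectl="${KUBECTL:-kubectl}" pv
  for pv in "$@"; do
    "$kubectl" patch pv "$pv" --type=json -p='[{"op":"remove","path":"/spec/claimRef"}]'
  done
}

# Release every PV currently in the Released phase:
#   release_pvs $(kubectl get pv -o jsonpath='{.items[?(@.status.phase=="Released")].metadata.name}')
```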

# Edit the PV and delete the whole spec.claimRef field
[root@k8s-master01 pv]# kubectl edit pv nfspv1
...
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: www-web-1
    namespace: default
    resourceVersion: "209911"
    uid: 818551ce-23ca-4534-8e7d-82e9f75f818a
....
[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Available nfs 71m
nfspv2 5Gi RWX Retain Available nfs 71m
nfspv3 5Gi RWO Retain Released default/www-web-0 nfs 71m
nfspv4 50Gi RWO Retain Released default/www-web-2 nfs 71m
# Reclaim the other PVs the same way
[root@k8s-master01 pv]# kubectl edit pv nfspv3
persistentvolume/nfspv3 edited
[root@k8s-master01 pv]# kubectl edit pv nfspv4
persistentvolume/nfspv4 edited
[root@k8s-master01 pv]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfspv1 10Gi RWO Retain Available nfs 72m
nfspv2 5Gi RWX Retain Available nfs 72m
nfspv3 5Gi RWO Retain Available nfs 72m
nfspv4 50Gi RWO Retain Available nfs 72m

8) StatefulSet use cases

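- Stable persistent storage: after a Pod is rescheduled, it can still access the same persistent data. This is implemented with PVCs.
- Stable network identifiers: after a Pod is rescheduled, its PodName and HostName stay the same.
- Ordered deployment and ordered scaling. The StatefulSet controller enforces this itself, through the default OrderedReady Pod management policy.
- Ordered scale-down.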