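Contents

Openstack-Mitaka High Availability: Overview

Openstack-Mitaka High Availability: Environment Initialization

Openstack-Mitaka High Availability: Mariadb-Galera Cluster Deployment

Openstack-Mitaka High Availability: Rabbitmq-server Cluster Deployment

Openstack-Mitaka High Availability: memcache

Openstack-Mitaka High Availability: Pacemaker+corosync+pcs High Availability Cluster

Openstack-Mitaka High Availability: Identity Service (keystone)

OpenStack-Mitaka High Availability: Image Service (glance)

Openstack-Mitaka High Availability: Compute Service (Nova)

Openstack-Mitaka High Availability: Networking Service (Neutron)

Openstack-Mitaka High Availability: Dashboard

Openstack-Mitaka High Availability: Launching an Instance

Openstack-Mitaka High Availability: Testing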

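Introduction

The Image service lets users discover, register, and retrieve virtual machine images. It provides an API for querying virtual machine image metadata and retrieving an existing image. Virtual machine images can be stored in a variety of locations, from a simple file system to an object storage system.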

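Components:

(1) glance-api

Accepts Image API calls for image discovery, retrieval, and storage.

(2) glance-registry

Stores, processes, and retrieves image metadata, such as size and type.

(3) Database

Stores the image metadata; MySQL and SQLite are among the supported databases.

(4) Storage repository for image files

Several repository types are supported, including ordinary file systems, object storage, RADOS block devices, and HTTP.

(5) Metadata definition service

A common API that lets vendors, administrators, services, and users define their own custom metadata.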
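As a quick reference, the sketch below maps the two long-running Glance components to their systemd units (the names used later in this article) and their default listening ports. The ports are the upstream defaults (9292 for glance-api, 9191 for glance-registry) and are an assumption if your configuration overrides `bind_port`; the function name `glance_component_info` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<systemd unit> <default port>" for a Glance component.
# Ports are the upstream defaults; a bind_port override in the config changes them.
glance_component_info() {
  case "$1" in
    glance-api)      echo "openstack-glance-api 9292" ;;
    glance-registry) echo "openstack-glance-registry 9191" ;;
    *)               echo "unknown component: $1" >&2; return 1 ;;
  esac
}
```

For example, `glance_component_info glance-api` prints `openstack-glance-api 9292`. Only port 9292 is placed behind the HAProxy VIP in this setup; glance-api reaches glance-registry through `registry_host`.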

Installation and configuration

Before installing the service, you must create a database, service credentials, and API endpoints.

    [root@controller1 ~]# . admin-openrc
    [root@controller1 ~]# mysql -ugalera -pgalera -h 192.168.0.10
    MariaDB [(none)]> CREATE DATABASE glance;
    Query OK, 1 row affected (0.07 sec)
    MariaDB [(none)]> grant all privileges on glance.* to 'glance'@'localhost' identified by 'glance';
    Query OK, 0 rows affected (0.01 sec)
    MariaDB [(none)]> grant all privileges on glance.* to 'glance'@'%' identified by 'glance';
    Query OK, 0 rows affected (0.01 sec)
    MariaDB [(none)]> flush privileges;
    Query OK, 0 rows affected (0.00 sec)

Create the service credentials (the password used here is glance), then add the admin role to the glance user in the service project:

    [root@controller1 ~]# openstack user create --domain default --password-prompt glance   # password: glance
    [root@controller1 ~]# openstack role add --project service --user glance admin

Create the service entity:

    [root@controller1 ~]# openstack service create --name glance --description "OpenStack Image" image

Create the Image service API endpoints:

    [root@controller1 ~]# openstack endpoint create --region RegionOne image public http://controller:9292
    [root@controller1 ~]# openstack endpoint create --region RegionOne image internal http://controller:9292
    [root@controller1 ~]# openstack endpoint create --region RegionOne image admin http://controller:9292
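The three endpoint commands differ only in the interface name, so they can also be scripted as a loop. This is a minimal sketch: the function name `create_image_endpoints` and the `DRY_RUN` switch are hypothetical conveniences, not part of the OpenStack client. With `DRY_RUN=0` it runs the same `openstack endpoint create` commands as above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the public, internal and admin Image endpoints in one loop.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them, which needs
# admin credentials loaded first (". admin-openrc").
create_image_endpoints() {
  local url="${1:-http://controller:9292}" iface
  local -a cmd
  for iface in public internal admin; do
    cmd=(openstack endpoint create --region RegionOne image "$iface" "$url")
    if [[ "${DRY_RUN:-1}" == "1" ]]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}" || return 1
    fi
  done
}
```

Run `create_image_endpoints` once to review the commands, then `DRY_RUN=0 create_image_endpoints` to execute them.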

Install and configure the components

Install the package on all three controller nodes:

    # yum install openstack-glance -y

Edit the configuration files as follows. In /etc/glance/glance-api.conf:

    [root@controller1 ~]# vim /etc/glance/glance-api.conf
    [DEFAULT]
    …
    # this node's own IP rather than the VIP, so glance-api does not conflict with HAProxy on port 9292
    bind_host = 192.168.0.xx
    # this node's own IP, where the local glance-registry listens
    registry_host = 192.168.0.xx
    [database]
    ...
    connection = mysql+pymysql://glance:glance@controller/glance
    [keystone_authtoken]
    ...
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = glance
    [paste_deploy]
    ...
    flavor = keystone
    [glance_store]
    ...
    stores = file,http
    default_store = file
    filesystem_store_datadir = /var/lib/glance/images/

In /etc/glance/glance-registry.conf:

    [root@controller1 ~]# vim /etc/glance/glance-registry.conf
    [DEFAULT]
    …
    # this node's own IP rather than the VIP
    bind_host = 192.168.0.xx
    [database]
    ...
    connection = mysql+pymysql://glance:glance@controller/glance
    [keystone_authtoken]
    ...
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = glance
    [paste_deploy]
    ...
    flavor = keystone
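If you prefer to script these edits instead of making them in vim, the sketch below sets one key inside one section of an INI-style file with awk. It is a minimal illustration that assumes the simple `key = value` layout of the Glance configuration files; the function name `ini_set` is hypothetical, and a dedicated tool such as crudini (`crudini --set FILE SECTION KEY VALUE`) does the same job more robustly if it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "KEY = VALUE" inside [SECTION] of an INI-style file.
# - active (uncommented) KEY lines in that section are replaced; duplicates are dropped
# - if KEY is absent it is added at the end of the section; a missing section is appended
# KEY is used inside a regex, so pass plain option names such as bind_host; awk -v also
# interprets backslash escapes in VALUE, so avoid backslashes there.
ini_set() {
  local file="$1" section="$2" key="$3" value="$4" tmp
  tmp="$(mktemp)" || return 1
  awk -v section="[$section]" -v key="$key" -v value="$value" '
    # print the key if we are leaving the target section without having written it
    function flush() { if (in_sec && !done) { print key " = " value; done = 1 } }
    /^\[.*\][ \t]*$/ {
      flush()
      hdr = $0; sub(/[ \t]+$/, "", hdr)
      in_sec = (hdr == section)
      if (in_sec) seen = 1
      print; next
    }
    in_sec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
      if (!done) { print key " = " value; done = 1 }
      next
    }
    { print }
    END {
      flush()
      if (!seen) { print section; print key " = " value }
    }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # copy back into the original file so its ownership and permissions are kept
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, `ini_set /etc/glance/glance-api.conf glance_store default_store file` sets the default store without opening an editor. Commented-out defaults such as `#bind_host = 0.0.0.0` are left untouched as documentation.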

Copy the modified configuration files to the other controller nodes:

    [root@controller1 ~]# cd /etc/glance/
    [root@controller1 glance]# scp glance-api.conf glance-registry.conf controller2:/etc/glance/
    glance-api.conf 100% 137KB 137.5KB/s 00:00
    glance-registry.conf 100% 65KB 65.5KB/s 00:00
    [root@controller1 glance]# scp glance-api.conf glance-registry.conf controller3:/etc/glance/
    glance-api.conf 100% 137KB 137.5KB/s 00:00
    glance-registry.conf

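Remember to change the listening addresses on controller2 and controller3: bind_host (and registry_host in glance-api.conf) must be set to each node's own IP.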
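Because the files are copied verbatim, bind_host and registry_host on controller2 and controller3 still carry controller1's address. The sketch below rewrites them for a given node. It assumes the node-to-IP mapping from the HAProxy `server` lines in this article (controller1..3 = 192.168.0.11..13); both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: node name -> management IP, taken from the HAProxy
# "server" lines in this article (controller1..3 = 192.168.0.11..13).
node_ip() {
  case "$1" in
    controller1) echo 192.168.0.11 ;;
    controller2) echo 192.168.0.12 ;;
    controller3) echo 192.168.0.13 ;;
    *) echo "unknown node: $1" >&2; return 1 ;;
  esac
}

# Rewrite the active bind_host / registry_host lines in the given Glance config
# files so they point at NODE's own IP instead of another node's IP or the VIP.
set_glance_listen_ip() {
  local node="$1" ip f
  shift
  ip="$(node_ip "$node")" || return 1
  for f in "$@"; do
    # GNU sed -i edits in place; commented-out lines such as "#bind_host" are not touched
    sed -i -E "s/^[[:space:]]*(bind_host|registry_host)[[:space:]]*=.*/\1 = ${ip}/" "$f" || return 1
  done
}
```

On controller2, for example: `set_glance_listen_ip controller2 /etc/glance/glance-api.conf /etc/glance/glance-registry.conf`.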

Populate the Image service database (once, from controller1 only):

    [root@controller1 ~]# su -s /bin/sh -c "glance-manage db_sync" glance

The following warnings can be ignored:

    Option "verbose" from group "DEFAULT" is deprecated for removal. Its value may be silently ignored in the future.
    /usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:1171: OsloDBDeprecationWarning: EngineFacade is deprecated; please use oslo_db.sqlalchemy.enginefacade
      expire_on_commit=expire_on_commit, _conf=conf)
    /usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `ix_image_properties_image_id_name`. This is deprecated and will be disallowed in a future release.')
      result = self._query(query)
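To tell such harmless warnings apart from a real failure, the saved output of db_sync can be scanned for error markers. This is a rough sketch under a stated assumption: genuine failures appear as a Python traceback or as ERROR/CRITICAL log lines. The function name is hypothetical, and the exit status of glance-manage remains the primary signal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read saved "glance-manage db_sync" output on stdin and fail
# if it contains signs of a real error. Deprecation warnings like those above pass.
# Assumption: genuine failures show up as a Python traceback or ERROR/CRITICAL log lines.
check_db_sync_output() {
  local bad
  bad="$(grep -E 'Traceback \(most recent call last\)|(^|[[:space:]])(ERROR|CRITICAL)([[:space:]]|$)' || true)"
  if [[ -n "$bad" ]]; then
    printf 'possible db_sync failure:\n%s\n' "$bad" >&2
    return 1
  fi
  return 0
}
```

For example, redirect the db_sync output to a file such as /tmp/db_sync.log (a hypothetical path), check the command's exit status, and then run `check_db_sync_output < /tmp/db_sync.log`.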

Finalize the installation and start the Image services

Run on every controller node:

    # systemctl enable openstack-glance-api.service openstack-glance-registry.service
    # systemctl start openstack-glance-api.service openstack-glance-registry.service
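Since the same two commands run on every controller, they can be driven from controller1 over SSH. A minimal sketch: it assumes root SSH access between the controllers (which the scp steps in this article also rely on), and the function name and `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: enable and start both Glance services on each controller over SSH.
# Assumes root SSH access between controllers (the scp steps above rely on it too).
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
start_glance_everywhere() {
  local -a units=(openstack-glance-api.service openstack-glance-registry.service)
  local -a nodes=("$@")
  local node action
  (( ${#nodes[@]} )) || nodes=(controller1 controller2 controller3)
  for node in "${nodes[@]}"; do
    for action in enable start; do
      if [[ "${DRY_RUN:-1}" == "1" ]]; then
        echo "ssh ${node} systemctl ${action} ${units[*]}"
      else
        ssh "$node" systemctl "$action" "${units[@]}" || return 1
      fi
    done
  done
}
```

`DRY_RUN=0 start_glance_everywhere` acts on all three controllers; passing node names, e.g. `DRY_RUN=0 start_glance_everywhere controller2`, limits it to those nodes.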

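HAProxy configuration

For a detailed explanation of HAProxy, see http://blog.itpub.net/28624388/viewspace-1288651/

The listen sections of /etc/haproxy/haproxy.cfg on controller1: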

    listen galera_cluster
      mode tcp
      bind 192.168.0.10:3306
      balance source
      option mysql-check user haproxy
      server controller1 192.168.0.11:3306 check inter 2000 rise 3 fall 3 backup
      server controller2 192.168.0.12:3306 check inter 2000 rise 3 fall 3
      server controller3 192.168.0.13:3306 check inter 2000 rise 3 fall 3 backup

    listen memcache_cluster
      mode tcp
      bind 192.168.0.10:11211
      balance source
      server controller1 192.168.0.11:11211 check inter 2000 rise 3 fall 3
      server controller2 192.168.0.12:11211 check inter 2000 rise 3 fall 3
      server controller3 192.168.0.13:11211 check inter 2000 rise 3 fall 3

    listen dashboard_cluster
      mode tcp
      bind 192.168.0.10:80
      balance source
      option tcplog
      option httplog
      server controller1 192.168.0.11:80 check inter 2000 rise 3 fall 3
      server controller2 192.168.0.12:80 check inter 2000 rise 3 fall 3
      server controller3 192.168.0.13:80 check inter 2000 rise 3 fall 3

    listen keystone_admin_cluster
      mode tcp
      bind 192.168.0.10:35357
      balance source
      option tcplog
      option httplog
      server controller1 192.168.0.11:35357 check inter 2000 rise 3 fall 3
      server controller2 192.168.0.12:35357 check inter 2000 rise 3 fall 3
      server controller3 192.168.0.13:35357 check inter 2000 rise 3 fall 3

    listen keystone_public_internal_cluster
      mode tcp
      bind 192.168.0.10:5000
      balance source
      option tcplog
      option httplog
      server controller1 192.168.0.11:5000 check inter 2000 rise 3 fall 3
      server controller2 192.168.0.12:5000 check inter 2000 rise 3 fall 3
      server controller3 192.168.0.13:5000 check inter 2000 rise 3 fall 3

    listen glance_api_cluster
      mode tcp
      bind 192.168.0.10:9292
      balance source
      option tcplog
      option httplog
      server controller1 192.168.0.11:9292 check inter 2000 rise 3 fall 3
      server controller2 192.168.0.12:9292 check inter 2000 rise 3 fall 3
      server controller3 192.168.0.13:9292 check inter 2000 rise 3 fall 3
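Every listen section above follows the same pattern: a name, a VIP port, `balance source`, and one `server` line per controller. The sketch below generates such a section. It assumes the VIP 192.168.0.10 and the controllerN = 192.168.0.1N mapping used in this article; the function name is hypothetical. Extra `option` lines (for example `mysql-check user haproxy`) can be passed as arguments, while per-server flags such as the `backup` markers of galera_cluster still have to be added by hand.

```shell
#!/usr/bin/env bash
# Hypothetical generator for the repetitive HAProxy "listen" sections above.
# Assumes VIP 192.168.0.10 and controllerN -> 192.168.0.1N, as in this article.
VIP="${VIP:-192.168.0.10}"

# Usage: haproxy_listen NAME PORT [OPTION...]
haproxy_listen() {
  (( $# >= 2 )) || { echo "usage: haproxy_listen NAME PORT [OPTION...]" >&2; return 1; }
  local name="$1" port="$2" opt i
  shift 2
  printf 'listen %s\n' "$name"
  printf '  mode tcp\n'
  printf '  bind %s:%s\n' "$VIP" "$port"
  printf '  balance source\n'
  for opt in "$@"; do
    printf '  option %s\n' "$opt"
  done
  for i in 1 2 3; do
    printf '  server controller%s 192.168.0.1%s:%s check inter 2000 rise 3 fall 3\n' "$i" "$i" "$port"
  done
}
```

For example, `haproxy_listen glance_api_cluster 9292 tcplog` prints a glance section that can be reviewed and then appended to haproxy.cfg.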

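Once the configuration is confirmed correct, restart HAProxy, check its listening ports, and copy the file to the other controller nodes: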

    [root@controller1 glance]# systemctl restart haproxy
    [root@controller1 glance]# netstat -ntplu | grep haproxy
    tcp 0 0 192.168.0.10:5000 0.0.0.0:* LISTEN 10560/haproxy
    tcp 0 0 192.168.0.10:5672 0.0.0.0:* LISTEN 10560/haproxy
    tcp 0 0 192.168.0.10:3306 0.0.0.0:* LISTEN 10560/haproxy
    tcp 0 0 192.168.0.10:9292 0.0.0.0:* LISTEN 10560/haproxy
    tcp 0 0 192.168.0.10:80 0.0.0.0:* LISTEN 10560/haproxy
    tcp 0 0 192.168.0.10:35357 0.0.0.0:* LISTEN 10560/haproxy
    udp 0 0 0.0.0.0:43543 0.0.0.0:* 10559/haproxy
    [root@controller1 ~]# scp /etc/haproxy/haproxy.cfg controller2:/etc/haproxy/
    haproxy.cfg 100% 4747 4.6KB/s 00:00
    [root@controller1 ~]# scp /etc/haproxy/haproxy.cfg controller3:/etc/haproxy/
    haproxy.cfg 100% 4747 4.6KB/s 00:00
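Rather than reading the netstat output by eye, a small check can confirm that HAProxy holds every expected VIP port. This sketch assumes the `netstat -ntplu` column layout (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State, PID/Program name) and, by default, the ports of the listen sections in this article; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `netstat -ntplu` output on stdin and report every VIP port
# that HAProxy is not listening on. Default ports = the listen sections of this article.
check_haproxy_ports() {
  local vip="${VIP:-192.168.0.10}" input port missing=0
  local -a ports=("$@")
  (( ${#ports[@]} )) || ports=(3306 11211 80 35357 5000 9292)
  input="$(cat)"
  for port in "${ports[@]}"; do
    # netstat columns: Proto Recv-Q Send-Q Local-Address Foreign-Address State PID/Program
    if ! awk -v addr="${vip}:${port}" '
          $1 == "tcp" && $4 == addr && $6 == "LISTEN" && $7 ~ /\/haproxy$/ { found = 1 }
          END { exit !found }' <<<"$input"; then
      echo "missing: ${vip}:${port}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage on the node holding the VIP: `netstat -ntplu | check_haproxy_ports`, or pass an explicit list such as `netstat -ntplu | check_haproxy_ports 9292 5000`.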

The HAProxy configuration must be kept identical on all controller nodes.

Verify operation:

Download the source image on any node:
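One way to confirm that the copies really are identical is to compare checksums of haproxy.cfg across the controllers. A minimal sketch, assuming root SSH access between nodes; both function names are hypothetical, and md5sum is used only as a quick equality check.

```shell
#!/usr/bin/env bash
# Hypothetical check: read "<checksum> <host>" lines on stdin (one per controller)
# and succeed only if every checksum is identical.
configs_identical() {
  local n
  n="$(awk 'NF { print $1 }' | sort -u | wc -l)"
  if (( n != 1 )); then
    echo "haproxy.cfg differs between nodes (or no input)" >&2
    return 1
  fi
}

# Collect "<md5> <host>" lines over SSH (assumes root SSH access between controllers).
collect_cfg_checksums() {
  local node
  for node in "$@"; do
    printf '%s %s\n' "$(ssh "$node" md5sum /etc/haproxy/haproxy.cfg | awk '{print $1}')" "$node"
  done
}
```

Usage: `collect_cfg_checksums controller1 controller2 controller3 | configs_identical`. If SSH to a node fails, its line carries no checksum and the comparison fails as well.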

    # wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img   # can also be downloaded in advance with a download manager such as Xunlei (Thunder)

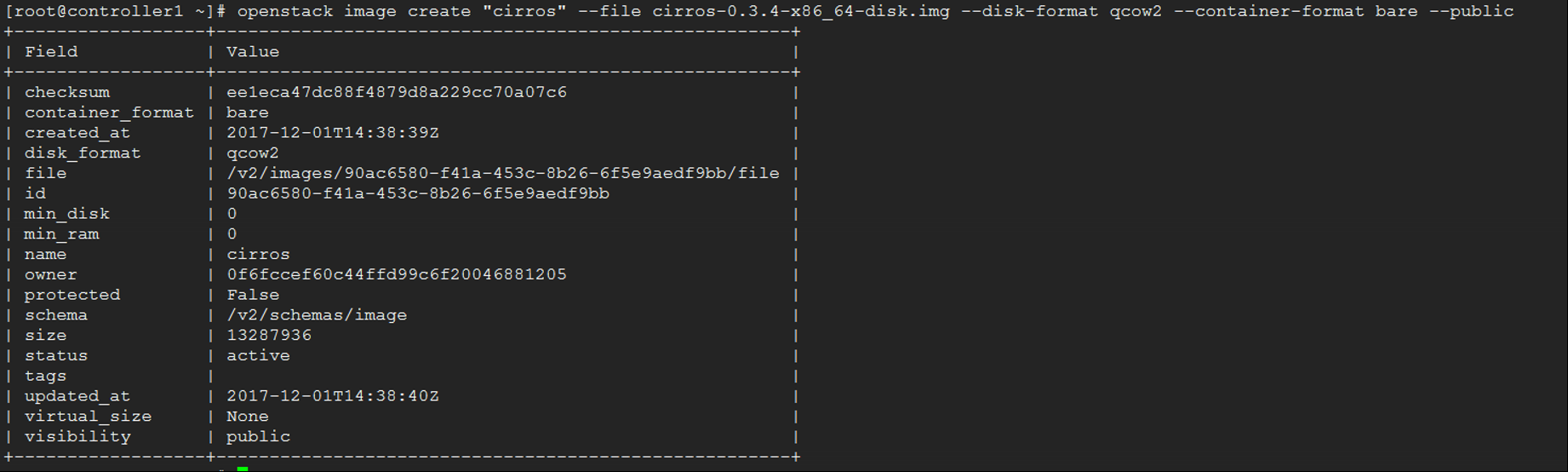
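Before uploading with `--disk-format qcow2`, it can be worth checking that the downloaded file really is a QCOW2 image and not, for instance, an error page saved by wget. A minimal sketch: QCOW images start with the 4-byte magic `QFI\xfb` followed by a big-endian version number, which is 2 or 3 for qcow2. The function name is hypothetical; `qemu-img info` gives a fuller answer if it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical pre-upload check: does FILE start with the QCOW magic "QFI\xfb"
# followed by version 2 or 3 (i.e. qcow2)? Only the 8-byte header is inspected.
is_qcow2() {
  local hdr
  hdr="$(head -c 8 -- "$1" 2>/dev/null | od -An -tx1 | tr -d ' \n')"
  [[ "$hdr" == 514649fb0000000[23] ]]
}
```

Usage: `is_qcow2 cirros-0.3.4-x86_64-disk.img && echo "looks like qcow2"`.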

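Upload the image to the Image service using the QCOW2 disk format and the bare container format, and make it public so that all projects can access it: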

    # openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

Once the image has been created, confirm that it was uploaded and verify its attributes:

    # openstack image list
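For scripted verification, the image list can be printed in machine-readable form and checked for an active image. This sketch assumes the output of `openstack image list -f value -c Name -c Status`, i.e. one "name status" pair per line; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `openstack image list -f value -c Name -c Status` on stdin
# ("<name> <status>" per line) and succeed if image NAME is listed as active.
image_is_active() {
  awk -v name="$1" '
    $NF == "active" {
      $NF = ""; sub(/ +$/, "")   # strip the status column, keep the name
      if ($0 == name) found = 1
    }
    END { exit !found }'
}
```

Usage: `openstack image list -f value -c Name -c Status | image_is_active cirros && echo "cirros is active"`.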

Glance is now deployed successfully.